If you’re evaluating cloud databases, the most practical question is not “Which service is best?” but “Which service fits this workload right now?” This tutorial gives you a reliable, repeatable way to compare Amazon RDS, Amazon DynamoDB, and Google Cloud SQL, then implement your choice confidently. In short: pick a service by access patterns, consistency needs, and operational model, then configure HA/DR, capacity, security, and rollout without surprises. For quick orientation, the 10 steps are: (1) access patterns, (2) consistency & transactions, (3) throughput shape, (4) service choice, (5) HA/DR, (6) schema & keys, (7) capacity & storage, (8) security & connectivity, (9) migration plan, (10) operations & cost. Expect crisp criteria, numeric guardrails, and mini-cases along the way. The outcome is a decision you can defend and a deployment you can run.

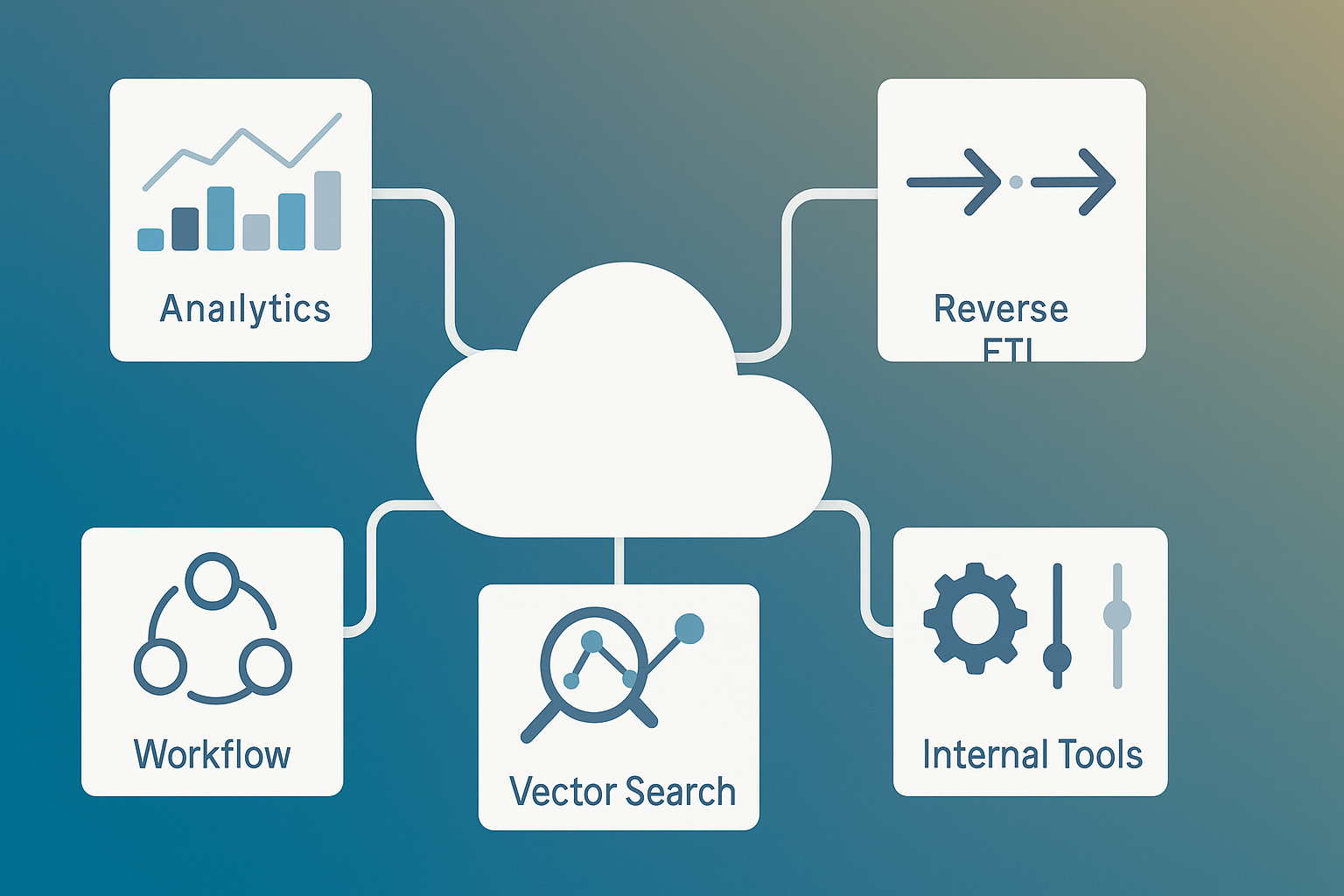

Definition: Amazon RDS and Google Cloud SQL are fully managed relational database services; Amazon DynamoDB is a fully managed NoSQL key-value and document store. You choose among them by the shape of reads/writes, query flexibility, and transaction semantics.

Fast path: If you need SQL joins, complex queries, and multi-row ACID, choose RDS or Cloud SQL. If you need massive scale, predictable single-digit millisecond key lookups, and automatic partitioning, choose DynamoDB.

1. Map Access Patterns Before You Pick a Service

Most “database regrets” start with choosing a technology before clarifying how the application will read and write data. Start by listing access patterns: the exact questions your app asks the database and how often. Capture primary keys, filters, sort orders, and the frequency/latency target for each pattern. Then, classify them into point lookups, range queries, ad hoc joins, aggregations, and scans. This tells you whether you need flexible relational queries or optimized key-based access. If most calls are joins, filters across multiple columns, and multi-row transactions, a relational engine (RDS or Cloud SQL) is a better default. If most calls are O(1) key-value gets/puts with predictable shapes, DynamoDB will shine. Keep one critical idea in mind: your access patterns are the schema—even in relational systems—because they drive indexes, partitions, and replicas. Documenting them explicitly saves you from painful refactors.

Mini-checklist (record these for each pattern; aim to capture 8–12 patterns):

- Request path and method (e.g., GET /orders/{orderId}).

- Expected QPS and p95 latency target.

- Read/write ratio and burstiness (steady vs spiky).

- Exact filters and sorts; do you need secondary indexes?

- Contention risk (hot keys? sequential IDs?).

- Transaction boundaries (single item vs multi-row).

- Consistency requirement (strong vs eventual).

- Data lifecycle (TTL/archival/soft delete).

In practice: You’ll often find 3–5 dominant patterns responsible for ~80% of traffic. Optimize for those and handle analytics separately (e.g., export to BigQuery/Athena) rather than forcing the OLTP database to do everything. Finish this step with a short table of patterns and targets; it gives you an objective yardstick for the remaining steps.
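To make that table concrete, here is a minimal Python sketch, assuming you record each pattern with a kind, a peak QPS, and a p95 target (the `AccessPattern` fields and the sample endpoints are hypothetical). `dominant_patterns` returns the smallest set of highest-traffic patterns that together reach ~80% of peak QPS, i.e., the ones to optimize first.

```python
from dataclasses import dataclass


@dataclass
class AccessPattern:
    """One row of the Step 1 access-pattern table (fields are illustrative)."""
    name: str                   # request path and method, e.g. "GET /orders/{orderId}"
    kind: str                   # "point", "range", "join", "aggregate", or "scan"
    peak_qps: float             # expected queries per second at peak
    p95_ms: float               # p95 latency target in milliseconds
    strong_reads: bool = False  # does this path need read-your-writes consistency?


def dominant_patterns(patterns, coverage=0.8):
    """Smallest set of highest-QPS patterns that together reach `coverage` of total QPS."""
    total = sum(p.peak_qps for p in patterns)
    chosen, running = [], 0.0
    for p in sorted(patterns, key=lambda p: p.peak_qps, reverse=True):
        if running >= coverage * total:
            break
        chosen.append(p)
        running += p.peak_qps
    return chosen


patterns = [
    AccessPattern("GET /orders/{orderId}", "point", 1200, 20, strong_reads=True),
    AccessPattern("GET /customers/{id}/orders", "range", 600, 50),
    AccessPattern("POST /orders", "point", 300, 50),
    AccessPattern("GET /reports/daily-revenue", "aggregate", 5, 2000),
]
top = dominant_patterns(patterns)
print([p.name for p in top])  # ['GET /orders/{orderId}', 'GET /customers/{id}/orders']
```

In this made-up inventory, the low-QPS revenue report falls outside the dominant set, which is exactly the kind of query to route to a separate analytics path.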

2. Decide Your Consistency Model and Transaction Semantics

Decide upfront how strict your consistency and transactions must be. RDS and Cloud SQL are relational systems that deliver familiar ACID semantics and strong consistency on the primary instance. DynamoDB reads are eventually consistent by default; you can request strongly consistent reads within a single Region (on the base table and local secondary indexes, but not on global secondary indexes), and transactions (TransactWriteItems and TransactGetItems) give you all-or-nothing multi-item operations when needed. For multi-Region activity, DynamoDB global tables replicate asynchronously by default; design for conflict avoidance (e.g., write-sharding or per-Region ownership of items). When your domain requires referential integrity, cross-table joins, or complex multi-row invariants, the operational simplicity of RDS/Cloud SQL is hard to beat.

Why it matters

- Strong guarantees: Relational systems ease correctness for money movement, inventory decrements, and workflow state machines.

- Read consistency: DynamoDB’s default eventually consistent reads favor availability and cost; opt into strongly consistent reads, which consume twice the read capacity, only when strictly required.

- Cross-Region behavior: With global tables you gain locality and resilience but accept asynchronous replication semantics; plan around them.

Common mistakes

- Assuming DynamoDB can “query anything” like SQL; it can’t without explicit access-path design.

- Assuming read replicas provide automatic failover; in RDS and Cloud SQL they don’t (they’re for read scale, not HA).

Numbers & guardrails

- DynamoDB strong reads return the latest data in a single Region; global tables replicate asynchronously across Regions—treat cross-Region reads as eventually consistent by design.

- Use DynamoDB transactions sparingly on hot paths; transactional reads and writes consume twice the capacity units of standard operations for every item involved and add coordination overhead.
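The capacity cost of each consistency choice can be worked out per item. This sketch assumes single-item reads and writes (GetItem/PutItem style) and uses the documented unit sizes: one read unit covers up to 4 KB, one write unit up to 1 KB, an eventually consistent read costs half a strongly consistent one, and transactional operations cost double. Query and Scan sum item sizes before rounding, which this helper does not model.

```python
import math

READ_UNIT_KB = 4   # one read capacity unit covers an item of up to 4 KB
WRITE_UNIT_KB = 1  # one write capacity unit covers an item of up to 1 KB

# Units consumed per 4 KB chunk (reads) or per 1 KB chunk (writes), by mode.
READ_MULTIPLIER = {"eventual": 0.5, "strong": 1.0, "transactional": 2.0}
WRITE_MULTIPLIER = {"standard": 1.0, "transactional": 2.0}


def read_units(item_kb: float, mode: str = "eventual") -> float:
    """Read units consumed to read one item of `item_kb` kilobytes."""
    chunks = max(1, math.ceil(item_kb / READ_UNIT_KB))  # sizes round up to 4 KB
    return chunks * READ_MULTIPLIER[mode]


def write_units(item_kb: float, mode: str = "standard") -> float:
    """Write units consumed to write one item of `item_kb` kilobytes."""
    chunks = max(1, math.ceil(item_kb / WRITE_UNIT_KB))  # sizes round up to 1 KB
    return chunks * WRITE_MULTIPLIER[mode]


# A 6 KB item spans two 4 KB read chunks.
for mode in READ_MULTIPLIER:
    print(f"{mode} read of 6 KB: {read_units(6, mode)} RCU")
print(f"transactional write of 2.5 KB: {write_units(2.5, 'transactional')} WCU")
```

The same 6 KB item costs 1, 2, or 4 read units depending on the consistency mode, which is why transactions on hot paths deserve scrutiny.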

Synthesis: Choose relational if you need complex queries and multi-row atomicity most of the time; choose DynamoDB when single-item atomicity and key-based access dominate and you can design to avoid cross-item contention.

3. Estimate Throughput Shape and Latency Targets

Throughput shape determines capacity and cost. Steady workloads (e.g., a constant 2,000 reads/s) behave differently from spiky ones (e.g., bursts to 15,000 reads/s for a few minutes). In relational services (RDS/Cloud SQL), capacity largely depends on instance class, storage, and indexing. In DynamoDB, you choose on-demand or provisioned mode: on-demand adapts to traffic without capacity planning, while provisioned gives tight cost control with auto scaling. On-demand tables can instantly accommodate up to double the previous peak traffic; beyond that, DynamoDB adds capacity as traffic grows, but throttling can occur if you exceed double the previous peak within 30 minutes.

How to do it

- Derive read/write QPS and p95 latency per access pattern.

- For RDS/Cloud SQL, map to instance families and storage (see Step 7).

- For DynamoDB, decide on-demand for unpredictable traffic or provisioned with auto scaling for stable patterns.

- Validate against partition/key distribution (Step 6).

Mini case (DynamoDB burst):

Yesterday’s peak on your “orders” table was 20,000 strongly consistent reads/s. Today you see a sudden demand spike to 35,000. With on-demand capacity, the table can immediately absorb up to ~40,000 reads/s (2× the prior peak) without throttling, provided keys are well distributed (a single hot partition can still throttle). If this becomes the new normal, re-baseline after traffic stabilizes to avoid repeated step-ups.
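The arithmetic in this mini case can be checked with a few lines of Python. This is a sketch of the table-level 2× rule only; it does not model per-partition limits or the 30-minute window, so a hot key can still throttle even when this check passes.

```python
def ondemand_headroom(previous_peak: float, current_rate: float) -> dict:
    """Compare a new request rate with the ~2x-previous-peak level that an
    on-demand table can absorb immediately. Table-level only: per-partition
    limits and hot keys are not modeled."""
    ceiling = 2 * previous_peak
    return {
        "instant_ceiling": ceiling,
        "within_envelope": current_rate <= ceiling,
        "headroom": ceiling - current_rate,
    }


print(ondemand_headroom(20_000, 35_000))
# {'instant_ceiling': 40000, 'within_envelope': True, 'headroom': 5000}
```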

Numbers & guardrails

- Prefer on-demand when daily peaks vary by >5× and are hard to predict; prefer provisioned when peaks are within ~2× and happen on a schedule.

- For relational systems, keep CPU below ~70%, keep the buffer cache hit ratio high, and keep storage latency within vendor guidance; scale vertically or add read replicas as needed (details in Step 7).
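One way to encode the on-demand vs provisioned rule of thumb above, assuming “peaks vary by >5×” means the ratio between the highest and lowest daily peak over your observation window. The thresholds come from this step; the fallback branch for the 2×–5× gray zone (stay on-demand and keep measuring) is an assumption.

```python
def suggest_capacity_mode(daily_peaks: list[float], scheduled: bool) -> str:
    """Step 3 rule of thumb; `scheduled` means peaks arrive at predictable times."""
    if not daily_peaks or min(daily_peaks) <= 0:
        raise ValueError("need at least one positive daily peak")
    ratio = max(daily_peaks) / min(daily_peaks)
    if ratio > 5:
        return "on-demand"
    if ratio <= 2 and scheduled:
        return "provisioned + auto scaling"
    # Gray zone (2x to 5x, or unscheduled peaks): keep measuring before committing.
    return "on-demand for now; re-evaluate"


print(suggest_capacity_mode([1000, 6000, 1200], scheduled=False))  # on-demand
print(suggest_capacity_mode([1000, 1800, 1500], scheduled=True))   # provisioned + auto scaling
```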

Synthesis: Model your load realistically; match DynamoDB capacity mode to volatility and match relational sizing to your heaviest consistent period, not your average.

4. Make the First-Pass Service Choice (with a Quick Table)

With patterns and consistency clarified, you can make an initial choice. Use the table to check your bias.

| Service | Best For | Scalability Model | Notes |
|---|---|---|---|
| Amazon RDS | OLTP with joins, multi-row ACID, familiar engines (MySQL, MariaDB, PostgreSQL, SQL Server, Oracle, Db2) | Vertical scale + read replicas; Multi-AZ for HA | Broad engine support and ecosystem; choose storage type for latency/IOPS. |
| Google Cloud SQL | Managed MySQL/PostgreSQL/SQL Server with tight GCP integration | Vertical scale + read replicas; HA configuration | Simple HA and backups; read replicas improve read scale, not HA. |
| Amazon DynamoDB | Massive-scale key-value/document access with predictable low-latency gets/puts | Horizontal partitioning; on-demand/provisioned capacity; global tables | Requires access-pattern-first design; transactions available when needed. |

How to use this table

- If your dominant patterns are key lookups and range reads within a single partition key, lean DynamoDB.

- If you rely on SQL joins, aggregates, window functions, or foreign-key constraints, lean RDS/Cloud SQL.

- If you need multi-Region active-active writes, DynamoDB global tables are first-class; relational equivalents are DIY and complex.
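The bullets above can be turned into a rough first-pass signal. This sketch assumes the pattern kinds from Step 1, treats “point” and “range” as key-based, and uses a hypothetical 80% key-based traffic threshold; it produces a lean to challenge in later steps, not a decision.

```python
KEY_BASED = {"point", "range"}  # served directly by partition/sort keys


def first_pass_lean(patterns: list[tuple[str, float]],
                    needs_multi_region_writes: bool = False,
                    threshold: float = 0.8) -> tuple[str, float]:
    """patterns: (kind, peak_qps) pairs from the Step 1 table.
    Returns a lean plus the share of peak traffic that is key-based."""
    total = sum(qps for _, qps in patterns)
    if total <= 0:
        raise ValueError("patterns must carry some traffic")
    key_share = sum(qps for kind, qps in patterns if kind in KEY_BASED) / total
    if needs_multi_region_writes or key_share >= threshold:
        return "lean DynamoDB", round(key_share, 2)
    return "lean RDS / Cloud SQL", round(key_share, 2)


print(first_pass_lean([("point", 1200), ("range", 600), ("aggregate", 5)]))  # ('lean DynamoDB', 1.0)
print(first_pass_lean([("join", 800), ("point", 400)]))  # ('lean RDS / Cloud SQL', 0.33)
```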

Synthesis: Treat this as a first pass. The next steps (HA/DR, schema, capacity, and operations) will confirm or refine the decision.

5. Plan High Availability (HA) and Disaster Recovery (DR)

HA and DR are about RTO/RPO expectations and failure domains. In RDS, Multi-AZ deployments synchronously replicate to a standby in another Availability Zone; failover is automatic, and in the single-standby mode (Multi-AZ DB instance) the standby doesn’t serve reads. Multi-AZ DB cluster deployments (MySQL and PostgreSQL) have two readable standbys for extra read capacity and faster failover. In Cloud SQL, enable HA (at creation or later on an existing instance) to get a standby instance in a different zone; you can also use read replicas (including cross-Region) to offload reads and assist with DR, but replicas are not automatic failover targets. For DynamoDB, HA within a Region is built in; for cross-Region DR and locality, use global tables and plan write routing and conflict avoidance.

How to do it

- Define RTO/RPO per service; choose RDS Multi-AZ or Cloud SQL HA when you need automatic failover in-Region.

- For cross-Region DR: Cloud SQL cross-Region replicas (promote on disaster); RDS cross-Region read replicas (engine-dependent); DynamoDB global tables.

- Test failovers with realistic load.

Numbers & guardrails

- RDS Multi-AZ (instance) uses synchronous replication to a standby in another AZ to minimize data loss; plan for brief failover while connections retry.

- Cloud SQL HA places a standby in a different zone; use read replicas for scale, not for automatic HA failover.

- DynamoDB global tables replicate across Regions; treat cross-Region state as eventually consistent and avoid “last writer wins” ambiguity by assigning write ownership per Region or per key range.
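Failovers in RDS Multi-AZ and Cloud SQL HA drop existing connections, so clients must reconnect (in RDS, the endpoint’s DNS record moves to the new primary, so avoid caching DNS beyond its TTL). A minimal sketch of reconnecting with capped exponential backoff and full jitter, assuming `connect` is a hypothetical zero-argument callable wrapping your driver’s connect call and that you pass the exception types your driver actually raises in `retry_on` (they differ per driver).

```python
import random
import time


def connect_with_retry(connect, retry_on=(ConnectionError,), attempts=6,
                       base_delay=0.2, max_delay=5.0, sleep=time.sleep):
    """Call `connect()` until it succeeds, sleeping a random time in
    [0, min(max_delay, base_delay * 2**attempt)] between tries (full jitter)."""
    for attempt in range(attempts):
        try:
            return connect()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))


# Demo with a fake endpoint that fails twice while "failing over".
calls = {"n": 0}


def flaky_connect():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("endpoint failing over")
    return "connected"


print(connect_with_retry(flaky_connect, sleep=lambda s: None))  # connected
```

Jitter keeps a fleet of clients from reconnecting in lockstep the moment the new primary comes up.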

Synthesis: Pick a built-in HA story for your chosen service, then add DR with cross-Region replicas or global tables as needed; practice failover procedures until boring.

6. Design Schemas and Keys That Match Your Workload

Relational (RDS/Cloud SQL): Normalize where it protects integrity and reduces duplication; denormalize surgically with indexes and materialized views (where your engine supports them) to hit latency targets. Pay attention to surrogate vs natural keys and to index selectivity; insert hot spots often come from monotonically increasing IDs in insert-heavy tables, which concentrate writes on the last index page. Shard them, randomize them, or use sequences with a cache. Consider read replicas for expensive analytical queries instead of over-indexing the primary.

DynamoDB: Model from access patterns backward. Choose a partition key (and optional sort key) that spreads the workload uniformly to avoid hot partitions; if key cardinality is low, add a random suffix or bucket by time. Use global secondary indexes (GSIs) for alternate query paths and sparse indexes for targeted reads. Design single-table schemas around entity types, giving related items a shared partition key (e.g., ORDER#123) and distinct sort keys (e.g., ORDER#123 for the order header, ORDER_ITEM#123#1 for a line item), so one Query returns the whole collection and related writes can be grouped in one transaction.
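A minimal sketch of the suffix technique, using a calculated (hash-based) suffix rather than a purely random one so that writers and readers can recompute the key for a known item ID. The shard count of 10 and the date-based base key are illustrative assumptions. A stable hash (SHA-256) is used because Python’s built-in `hash()` of strings changes between processes.

```python
import hashlib


def sharded_partition_key(base_key: str, item_id: str, shards: int = 10) -> str:
    """Spread writes for one hot logical key (here a date bucket) across `shards`
    partition-key values by appending a suffix derived from the item ID."""
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    suffix = int.from_bytes(digest[:4], "big") % shards
    return f"{base_key}#{suffix}"


def all_shard_keys(base_key: str, shards: int = 10) -> list[str]:
    """Every partition-key value a reader must query (and merge) for `base_key`."""
    return [f"{base_key}#{i}" for i in range(shards)]


pk = sharded_partition_key("2024-06-01", "order-123")
print(pk)                                  # one of "2024-06-01#0" ... "2024-06-01#9"
print(pk in all_shard_keys("2024-06-01"))  # True
```

The trade-off is on the read side: fetching everything for a date now means one Query per suffix, so keep the shard count as small as your write rate allows.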

Common mistakes (NoSQL)

- Picking human-readable, low-cardinality partition keys (e.g., country codes) that create hot partitions and throttling.

- Overusing scans; a DynamoDB scan reads, and bills for, every item it examines (filters are applied after the read), so scans become cost and latency hazards.

Tools/Examples

- DynamoDB best practices emphasize partition-key distribution and “hot partition” avoidance; read these before schema commits.

Mini-checklist

- Enumerate primary and secondary indexes (why they exist and for which pattern).

- Confirm no single partition or index receives >10% of peak traffic for ≥1 minute.

- For DynamoDB, design item collections under a partition to support transactional writes and strong read paths.
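The >10% guardrail can be checked against a sample of partition-key values captured during a load test. This sketch assumes you already have such a sample for one window (for example, one minute of request logs); how you collect it is up to you. The sample below is hypothetical and mirrors the country-code anti-pattern above.

```python
from collections import Counter


def hot_keys(sampled_keys, max_share=0.10):
    """(key, share) pairs for partition keys above `max_share` of sampled requests,
    most frequent first."""
    counts = Counter(sampled_keys)
    total = sum(counts.values())
    return [(k, c / total) for k, c in counts.most_common() if c / total > max_share]


sample = ["US"] * 70 + ["DE"] * 20 + [f"user-{i}" for i in range(10)]
print(hot_keys(sample))  # [('US', 0.7), ('DE', 0.2)]
```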

Synthesis: Schemas and keys are performance contracts. Validate them against your top access patterns and the service’s physical model before load tests.

7. Configure Capacity, Storage, and Scaling

This is where your earlier estimates translate into concrete knobs.

Relational (RDS/Cloud SQL)

- Compute: Choose instance classes sized for p95 workload with ~30% headroom. Monitor CPU, memory, and I/O queues.

- Storage: On RDS, pick gp3 (recommended general-purpose SSD) for balanced price/perf; choose io1/io2 when you need consistently low latency and high IOPS (e.g., write-heavy OLTP, large indexes). gp2 is the previous generation.

- Replicas: Add read replicas to scale reads; they do not provide automatic failover by themselves. On Cloud SQL, create replicas in-Region or cross-Region; use for analytics/offload.

DynamoDB

- Capacity mode: Pick on-demand for unpredictable spikes; provisioned with auto scaling for steady loads and tighter cost control.

- Throughput math: Start with your top three patterns; compute required RCU/WCU with safety margin, then revisit after observing real traffic. On-demand instantly scales up to 2× your prior peak—useful during launches.

Numbers & guardrails (example)

- A write-heavy orders table needs 6,000 WCUs at peak and 12,000 RCUs. In provisioned mode with auto scaling, set target utilization around 70% to avoid oscillations; in on-demand mode, expect cost to follow actual requests, with headroom to absorb sudden 2× surges without throttling.

- On RDS, if your gp3 volume shows p95 storage latency above vendor guidance during backups, first try provisioning additional gp3 IOPS/throughput, then consider Provisioned IOPS (io1/io2) to keep write latency stable.
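With target tracking, auto scaling aims to keep consumed/provisioned capacity near the target, so at the peak from the example above it will want roughly peak ÷ 0.70 units provisioned. A small helper makes that explicit; one way to use the result is as a floor for the scaling policy’s maximum capacity, so the policy cannot cap out below your peak.

```python
import math


def provisioned_for_target(peak_consumed_units: float, target_utilization: float = 0.70) -> int:
    """Units auto scaling would need provisioned so that consumed/provisioned
    stays at `target_utilization` when consumption is at its peak."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    # round() first so tiny floating-point error doesn't add a whole unit
    return math.ceil(round(peak_consumed_units / target_utilization, 6))


wcu_max = provisioned_for_target(6_000)   # 8572
rcu_max = provisioned_for_target(12_000)  # 17143
print(wcu_max, rcu_max)
```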

Synthesis: Right-size once, then watch real metrics for a week’s worth of peak/valley cycles. Be intentional about when to pay for headroom versus elasticity.

8. Lock In Security, Connectivity, and Secrets

Security choices are similar across services but differ in details. Use private networking (VPC/Subnets) and avoid public endpoints wherever possible. For RDS/Cloud SQL, place instances in private subnets, restrict ingress via security groups/firewalls, and use a proxy if client IPs are dynamic. Use TLS in transit and encryption at rest—with customer-managed keys (CMEK/KMS) when you have regulatory requirements. For DynamoDB, prefer IAM-based access with granular policies mapped to tables, indexes, and item conditions; avoid embedding credentials in code.
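As an illustration of a granular DynamoDB policy, the sketch below builds an IAM policy document (as a Python dict) that allows item reads and writes on one table only when the partition key equals a given value, using the `dynamodb:LeadingKeys` condition key. The Region, account ID, table name, and tenant value are hypothetical placeholders; attach the resulting JSON to a role rather than to long-lived user credentials.

```python
import json


def dynamodb_tenant_policy(region: str, account_id: str, table: str, tenant_pk: str) -> dict:
    """Least-privilege policy sketch: item access limited to one partition-key value."""
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/{table}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "dynamodb:GetItem",
                    "dynamodb:Query",
                    "dynamodb:PutItem",
                    "dynamodb:UpdateItem",
                ],
                "Resource": table_arn,
                # Every partition key the request touches must equal tenant_pk.
                "Condition": {
                    "ForAllValues:StringEquals": {"dynamodb:LeadingKeys": [tenant_pk]}
                },
            }
        ],
    }


policy = dynamodb_tenant_policy("us-east-1", "123456789012", "orders", "TENANT#42")
print(json.dumps(policy, indent=2))
```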

Mini-checklist

- Network: VPC-only endpoints; no public IPs unless absolutely necessary.

- Auth: IAM roles for DynamoDB; IAM roles (AWS) or service accounts (Google Cloud) for the workloads that connect to RDS/Cloud SQL.

- Key management: Use KMS/CMEK for at-rest encryption.

- Secrets: Rotate DB user passwords or use IAM DB authentication (where available) to avoid long-lived secrets.

- Observability: Enable audit logs/CloudTrail/Cloud Logging; restrict who can restore snapshots/replicas.

Numbers & guardrails

- Limit DB users with DDL permissions to a small admin set; rotate credentials on a fixed cadence driven by policy.

- Enforce p95 CPU/network thresholds that trigger autoscaling or alerts; tie alerts to change windows to reduce pager noise.

Synthesis: Treat security and connectivity as first-class requirements. Decide once, template everywhere (IaC), and keep secrets out of application code paths.

9. Plan Migration and Rollout Without Surprises

Moving from one database to another (or from on-prem to cloud) fails when teams rush cutover. Choose a strategy: big bang (brief downtime), blue/green (dual write/dual read during a window), or CDC-based backfill (ongoing replication until cutover). For relational to relational (e.g., self-managed PostgreSQL to RDS/Cloud SQL), migration tools (AWS Database Migration Service, Google Cloud’s Database Migration Service) and native logical replication make this straightforward. For relational to DynamoDB, design a translation layer that writes both the relational schema and the new item shape for a while; after lag converges and read paths are stable, flip reads.

How to do it

- Stand up target environment and enable backups/PITR from day one.

- For Cloud SQL, configure HA and replicas as needed before importing data; remember read replicas are not HA.

- For RDS, pre-create read replicas for offloading and enable Multi-AZ for HA during and after cutover.

- For DynamoDB, design idempotent upsert jobs and ensure partition-key distribution matches live traffic.

Mini case (throughput math for backfill):

You need to migrate 120 million order rows in 48 hours with minimal impact. That’s 2.5 million rows/hour, or about 700 rows/second sustained. For RDS→RDS with logical replication, sustain >1,000 rows/s to leave a buffer for retries. For relational→DynamoDB, assuming one item of 1 KB or less per row, budget >1,500 WCUs for the backfill (roughly 2× the sustained rate) and throttle to keep p95 API latency within SLO; then switch to on-demand to absorb unpredictable launch-day spikes.
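The backfill arithmetic can be scripted so it is easy to rerun with your own numbers. This sketch assumes one DynamoDB item per source row, standard (non-transactional) writes, item sizes rounded up to whole 1 KB write units, and a 2× headroom factor; with 1 KB items it lands near 1,400 WCUs, just under the >1,500 budget in the mini case.

```python
import math


def backfill_plan(rows: int, hours: float, item_kb: float = 1.0, headroom: float = 2.0) -> dict:
    """Sustained rate needed to move `rows` in `hours`, plus a DynamoDB write budget."""
    rows_per_sec = rows / (hours * 3600)
    wcu_per_item = max(1, math.ceil(item_kb))  # standard writes: 1 WCU per started 1 KB
    return {
        "rows_per_sec": round(rows_per_sec),
        "wcu_needed": math.ceil(rows_per_sec * wcu_per_item),
        "wcu_with_headroom": math.ceil(rows_per_sec * wcu_per_item * headroom),
    }


plan = backfill_plan(120_000_000, 48)
print(plan)  # {'rows_per_sec': 694, 'wcu_needed': 695, 'wcu_with_headroom': 1389}
```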

Synthesis: Choose a migration method that matches downtime tolerance; pre-provision HA and backups; throttle backfill by latency, not just by throughput.
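“Throttle by latency” can be implemented as a feedback loop. The sketch below uses additive-increase/multiplicative-decrease (AIMD), a common congestion-control pattern swapped in here for illustration: raise the backfill write rate slowly while the API’s observed p95 meets the SLO and halve it when it doesn’t. The step size, floor, ceiling, SLO, and latency samples are assumptions.

```python
def next_rate(current_rate: float, observed_p95_ms: float, slo_p95_ms: float,
              step: float = 50, backoff: float = 0.5,
              floor: float = 10, ceiling: float = 2_000) -> float:
    """AIMD: add `step` rows/s while p95 meets the SLO; multiply by `backoff` when it doesn't."""
    if observed_p95_ms > slo_p95_ms:
        return max(floor, current_rate * backoff)
    return min(ceiling, current_rate + step)


rate = 700  # start near the sustained rate from the mini case
for p95 in [80, 85, 140, 90]:  # hypothetical API p95 samples (ms) vs a 120 ms SLO
    rate = next_rate(rate, p95, slo_p95_ms=120)
    print(rate)  # 750, 800, 400.0, 450.0 on successive iterations
```

The sharp cut on an SLO breach protects live traffic first; the slow climb back lets the backfill recover throughput without oscillating.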

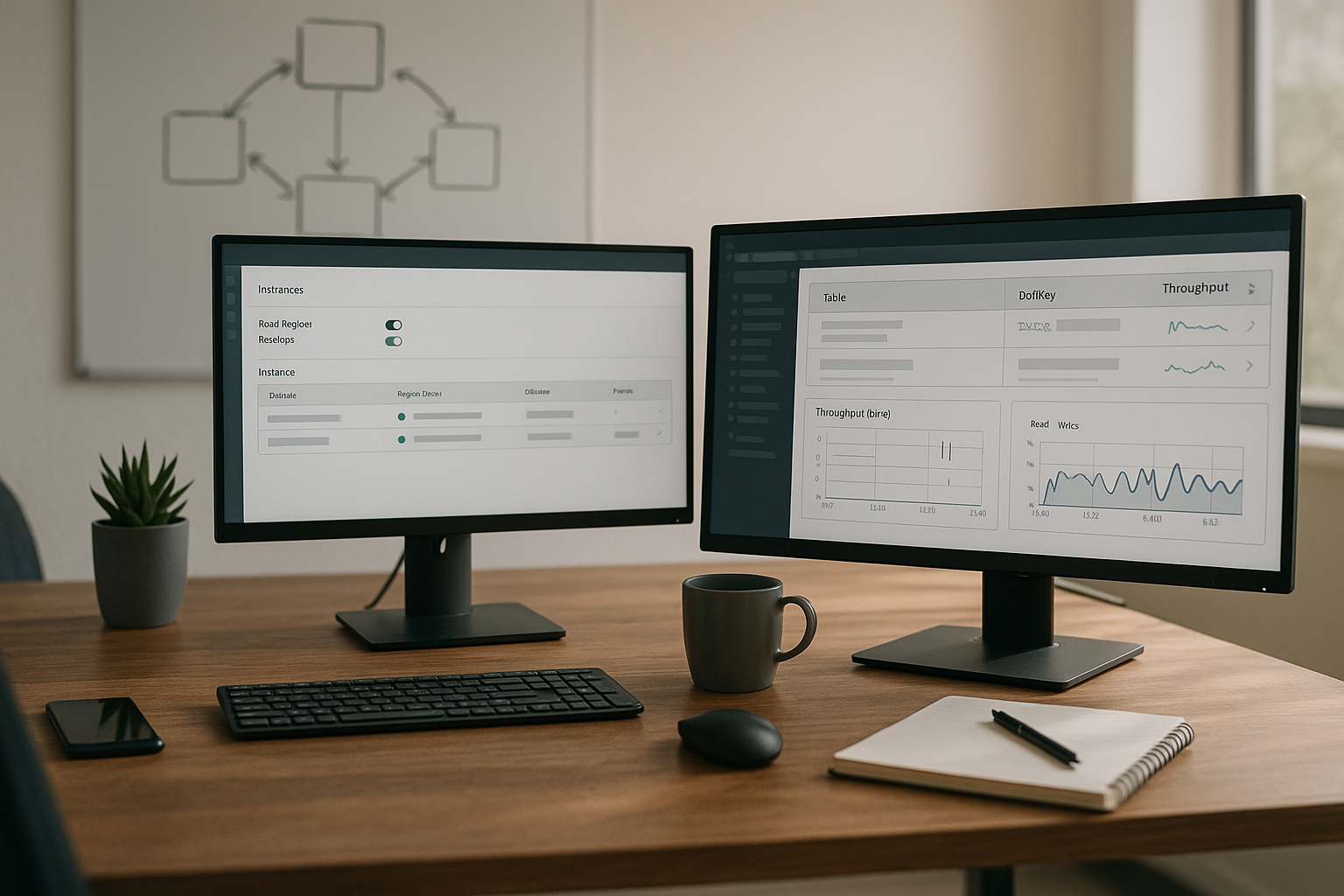

10. Runbooks: Backups, PITR, Maintenance, and Cost Watch

Operations cement reliability. In RDS, enable automated backups and set a reasonable retention; you can perform point-in-time recovery (PITR) to create a new instance at an exact moment. Automated backups run during a preferred window that can’t overlap the maintenance window. In Cloud SQL, configure automatic backups and PITR for MySQL/PostgreSQL and set maintenance windows to control when updates occur. Use read replicas for scale, not automatic failover. In DynamoDB, enable point-in-time recovery (restores to any second within the recovery window, up to 35 days, into a new table) and on-demand backups; add global tables when you need multi-Region resilience, and use TTL and streams for lifecycle and repair workflows. Capacity mode influences DynamoDB cost directly.

Mini-checklist

- Backups/PITR: Enabled, tested quarterly; documented RTO/RPO and restoration steps for each service (AWS Documentation).

- Maintenance: Align windows with off-peak; communicate change freezes; monitor failovers (Google Cloud).

- Cost/Capacity: Track DynamoDB RCU/WCU and top keys; for RDS/Cloud SQL, watch instance right-sizing, storage latency, and replica lag.

- Runbooks: One-page steps to restore, fail over, and roll back; tie to alarms and dashboards.

Numbers & guardrails

- Keep replica lag consistently under 1–2 seconds for read-heavy apps that demand fresh reads; if higher, move analytics off the primary and consider schema/index adjustments.

- For backups, test that restores meet your RTO (e.g., restore a 1 TB snapshot within your SLA); if they don’t, shorten backup chains or keep a warm standby.
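Restore drills are easiest to act on when their results are compared mechanically against targets. A minimal sketch, assuming you log each drill’s wall-clock restore time and the data-loss window it implied; the service names, numbers, and 60-minute RTO / 5-minute RPO targets are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RestoreDrill:
    service: str
    restore_minutes: float    # time until the restored instance/table served traffic
    data_loss_minutes: float  # gap between the incident time and the last recoverable point


def failed_drills(drills, rto_minutes, rpo_minutes):
    """Drills that missed the RTO or the RPO target."""
    return [d for d in drills
            if d.restore_minutes > rto_minutes or d.data_loss_minutes > rpo_minutes]


drills = [
    RestoreDrill("rds-orders", restore_minutes=42, data_loss_minutes=3),
    RestoreDrill("cloudsql-billing", restore_minutes=75, data_loss_minutes=1),
    RestoreDrill("ddb-sessions", restore_minutes=20, data_loss_minutes=0),
]
print([d.service for d in failed_drills(drills, rto_minutes=60, rpo_minutes=5)])
# ['cloudsql-billing']
```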

Synthesis: Reliability emerges from practiced recovery, predictable maintenance, and cost-aware scaling. Treat these as product features, not chores.

Conclusion

Choosing between RDS, DynamoDB, and Cloud SQL is straightforward when you ground the decision in access patterns, consistency, and throughput shape. Use relational services when you need multi-row ACID, flexible joins, and SQL tooling; use DynamoDB when your workload is key-based, massive, and latency-sensitive. Then, make the choice resilient: configure HA (Multi-AZ/HA), plan DR (replicas or global tables), design keys and indexes for your dominant patterns, size capacity with headroom, and put backups, PITR, and maintenance on rails. If you follow the 10 steps, you’ll ship a database platform that’s easy to reason about, easy to scale, and boring to operate, in the best possible way. Pick your top three access patterns, map them to the table in Step 4, and make your initial service choice today.

FAQs

1) Is DynamoDB “only for simple lookups,” or can it handle complex workflows?

DynamoDB excels at simple, high-scale key-based access but can support complex workflows using single-table design, GSIs for alternate query paths, and transactions for all-or-nothing multi-item updates. The trade-off is that you design query paths up front; ad hoc joins aren’t a goal of the system. When you routinely need joins and aggregations across many attributes, a relational system will stay simpler (AWS Documentation).

2) Do read replicas in Cloud SQL or RDS provide automatic failover?

No. Read replicas exist to scale reads and offload analytics. In Cloud SQL, replicas mirror the primary in near real time but aren’t automatic failover targets; you must promote them during incidents. In RDS, read replicas also serve read scaling; Multi-AZ is the HA feature that handles automatic failover in-Region.

3) When should I choose DynamoDB on-demand vs provisioned capacity?

Choose on-demand when traffic is unpredictable or launch-driven; it automatically adapts to spikes and can immediately handle up to 2× your previous peak. Choose provisioned with auto scaling when your peaks are predictable and you want tighter cost control. Start on-demand, measure, then consider provisioned once traffic stabilizes.

4) What storage type should I pick for RDS, and why does it matter?

Pick gp3 for balanced price/performance in most cases. If you need consistently low latency and high IOPS (write-heavy OLTP, large indexes, or tight backup windows), consider Provisioned IOPS (io1/io2). Storage choice directly impacts latency during backups, checkpoints, and bursts.

5) How do Multi-AZ deployments in RDS differ from read replicas?

Multi-AZ synchronously replicates to a standby in another AZ for HA and automatic failover. In the single-standby mode, the standby does not serve reads. Read replicas are separate, asynchronous copies for scaling reads and don’t automatically fail over. The two features solve different problems and are often used together (AWS Documentation).

6) Can Cloud SQL do cross-Region DR?

Yes. Use cross-Region read replicas and plan a promotion procedure if the primary Region fails. This improves read locality and gives you a controlled path to recover during regional incidents, but it’s not automatic HA. Practice the promotion steps as part of your DR runbooks.

7) How do I avoid “hot partitions” in DynamoDB?

Choose high-cardinality partition keys; consider adding random/bucketed suffixes or time buckets when natural keys are too concentrated. Validate that no partition receives a disproportionate share of requests during load testing. AWS’s partition-key design guidance is the canonical reference here.

8) What’s the role of point-in-time recovery (PITR) across services?

In RDS, enable automated backups to perform PITR and create a new instance at an exact moment. In Cloud SQL, enable backups and the PITR features appropriate to your engine, and test restores. In DynamoDB, enable point-in-time recovery per table to restore to any second within the recovery window (up to 35 days) into a new table. PITR converts catastrophic mistakes into routine restores, provided it’s enabled and regularly tested (AWS Documentation).

9) Do I need global tables for every DynamoDB use case?

No. Use global tables when you need multi-Region writes, low-latency local reads across continents, or regional resilience beyond a single Region. They add architectural complexity: conflict avoidance, routing, and capacity planning per Region. Many workloads do perfectly well in a single Region with good HA.

10) Are there cases where Cloud SQL is simpler than RDS?

If your platform is already GCP-centric, Cloud SQL integrates natively with IAM, networking, and logging and supports MySQL, PostgreSQL, and SQL Server. The decision often hinges on your broader stack and where your compute runs, not on database features alone. Conversely, if you’re AWS-centric, RDS fits better and supports more engines, including Oracle and Db2.

References

- Configuring and managing a Multi-AZ deployment — Amazon RDS User Guide, AWS. https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/Concepts.MultiAZ.html

- Multi-AZ DB cluster deployments for Amazon RDS — Amazon RDS User Guide, AWS. https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/multi-az-db-clusters-concepts.html

- DynamoDB capacity modes (on-demand and provisioned) — Amazon DynamoDB Developer Guide, AWS. https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/capacity-mode.html

- DynamoDB on-demand capacity mode — Amazon DynamoDB Developer Guide, AWS. https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/on-demand-capacity-mode.html

- Best practices for designing and using partition keys — Amazon DynamoDB Developer Guide, AWS. https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/bp-partition-key-design.html

- About high availability (Cloud SQL) — Cloud SQL Docs, Google Cloud. https://cloud.google.com/sql/docs/mysql/high-availability

- About replication & read replicas (Cloud SQL) — Cloud SQL Docs, Google Cloud. https://cloud.google.com/sql/docs/mysql/replication

- Cloud SQL backups overview & PITR — Cloud SQL Docs, Google Cloud. https://cloud.google.com/sql/docs/mysql/backup-recovery/backups

- Use point-in-time recovery (PostgreSQL) — Cloud SQL Docs, Google Cloud. https://cloud.google.com/sql/docs/postgres/backup-recovery/pitr

- Amazon RDS DB instance storage types (gp3, gp2, io1, io2) — Amazon RDS User Guide, AWS. https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/CHAP_Storage.html

- Managing automated backups (RDS) — Amazon RDS User Guide, AWS. https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/USER_ManagingAutomatedBackups.html

- Amazon RDS Read Replicas — AWS RDS Features, AWS. https://aws.amazon.com/rds/features/read-replicas/

- Global tables — multi-Region replication — Amazon DynamoDB Developer Guide, AWS. https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/GlobalTables.html

- Cloud SQL overview (engines supported) — Cloud SQL Docs, Google Cloud. https://cloud.google.com/sql/docs/introduction

- Amazon RDS DB instances (engines supported) — Amazon RDS User Guide, AWS. https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/Overview.DBInstance.html