The era of artificial intelligence (AI) interacting with the world solely through text is rapidly ending. For decades, AI systems were largely “unimodal,” meaning they were specialists trained to do one thing exceptionally well—analyze text, recognize images, or transcribe audio—but never all at once. Today, we are witnessing a paradigm shift toward multimodal AI models, systems designed to process, understand, and generate multiple types of media simultaneously. By combining text, images, and speech, these models mimic human perception more closely than ever before, unlocking capabilities that were previously the stuff of science fiction.

Imagine an AI that doesn’t just read a repair manual but watches a video of a mechanic working, listens to the sound of the engine, and then verbally guides you through the repair process in real time. This is the promise of multimodal AI. It is not merely an incremental improvement; it is a fundamental architectural evolution that allows machines to understand context, nuance, and intent across the full spectrum of human communication.

In this comprehensive guide, we will explore the inner workings of multimodal AI models, dissect the technologies that enable text, image, and speech convergence, and examine the transformative impact this technology is having on industries ranging from healthcare to creative arts.

Key Takeaways

- Definition: Multimodal AI models process and synthesize information from multiple data types (modalities) such as text, images, audio, and video within a single system.

- Human-Like Perception: Unlike unimodal systems, these models simulate human cognitive processes by associating visual cues with linguistic concepts and auditory signals.

- Core Technology: They rely on advanced transformer architectures and shared embedding spaces to “translate” different media types into a common mathematical language.

- Applications: Use cases range from autonomous driving and medical diagnostics to accessibility tools and creative content generation.

- Challenges: Significant hurdles remain around computational cost and data alignment, along with ethical concerns about deepfakes and privacy.

- The Future: The trajectory points toward “embodied AI,” where multimodal brains are integrated into robots that navigate and interact with the physical world.

Scope of This Guide

This article focuses on the technical mechanisms, practical applications, and ethical implications of multimodal AI models that integrate text, images, and speech. While we will touch upon video and sensor data (like LiDAR) as extensions of these modalities, the primary focus is on the convergence of the three core human communication channels. This guide is designed for a general to intermediate audience—business leaders, developers, and tech enthusiasts—seeking a deep understanding of the landscape as of January 2026.

What Are Multimodal AI Models?

To understand multimodal AI, we must first understand the concept of “modality.” In data science, a modality refers to a specific type of information or the channel through which information is communicated. Text is one modality; images are another; audio (speech) is a third.

The Limitation of Unimodal Systems

For most of the history of artificial intelligence, models were unimodal.

- Natural Language Processing (NLP) models like early GPT versions could write poetry but were blind to the visual world.

- Computer Vision (CV) models could classify a photo of a cat but couldn’t explain why the cat looked angry or write a story about it.

- Automatic Speech Recognition (ASR) systems could transcribe words but often missed the emotional sentiment carried in the tone of voice.

These systems operated in silos. Connecting them required complex “pipelines”—stitching together different models that didn’t truly understand each other. Errors propagated through the chain: if the speech-to-text model heard a word wrong, the NLP model processing that text would fail to understand the query, and the downstream action would be incorrect.

The Multimodal Advantage

Multimodal AI models break down these silos. They are trained on vast datasets containing mixed modalities—labeled images, videos with subtitles, audio clips with transcripts, and documents containing charts and graphs.

By learning from these paired datasets, the model builds an internal representation of the world where the word “apple,” the image of a red fruit, and the sound of the word “apple” being spoken are all mapped to the same conceptual understanding. This allows the model to:

- Cross-Reference: Use visual context to resolve ambiguity in speech (e.g., distinguishing “bank” the river edge from “bank” the financial institution based on a photo).

- Generate Across Modalities: Take a text description and generate an image, or look at a chart and speak a summary of the data.

- Reason Holistically: Analyze a video meeting to produce a summary that captures not just what was said (text), but who seemed anxious (audio/visual) and what was written on the whiteboard (image).

How Multimodal AI Works: The Architecture of Convergence

The “magic” of combining text, images, and speech happens deep within the neural network architectures. While the mathematics are complex, the conceptual framework can be understood through a few key components.

1. Tokenization and Encoders

The first step in any AI model is converting raw data into a format the computer understands: numbers.

- Text: Broken down into tokens (parts of words).

- Images: Sliced into small patches (like puzzle pieces) and flattened into vectors.

- Audio: Converted into spectrograms (visual representations of how a sound’s frequency content changes over time) and then sliced into time-based segments.

Each modality has its own “encoder”: a specialized neural network designed to process that specific input type. The encoder transforms the raw tokens, patches, or segments into vectors (lists of numbers).
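To make the first step concrete, here is a minimal sketch of how each modality might be cut into pieces before an encoder sees it. The function names, the whitespace tokenizer, and the sizes (16-pixel patches, 400-sample frames) are illustrative assumptions; production systems use subword tokenizers and typically frame a spectrogram rather than the raw waveform.

```python
import numpy as np

def tokenize_text(text):
    """Toy tokenizer: lowercase whitespace split (real models use subword units)."""
    return text.lower().split()

def patchify_image(image, patch_size):
    """Split an (H, W, C) image into flattened, non-overlapping square patches."""
    h, w, c = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image sides must be divisible by patch_size")
    rows, cols = h // patch_size, w // patch_size
    patches = image.reshape(rows, patch_size, cols, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)  # (rows, cols, p, p, c)
    return patches.reshape(rows * cols, patch_size * patch_size * c)

def frame_audio(samples, frame_len, hop):
    """Slice a 1-D waveform into overlapping, fixed-length time segments."""
    n_frames = 1 + (len(samples) - frame_len) // hop
    return np.stack([samples[i * hop : i * hop + frame_len] for i in range(n_frames)])

tokens = tokenize_text("A dog catches a frisbee")
patches = patchify_image(np.zeros((224, 224, 3)), patch_size=16)
frames = frame_audio(np.zeros(16000), frame_len=400, hop=160)
print(len(tokens), patches.shape, frames.shape)
```

Each row of `patches` and `frames` is one unit that a modality-specific encoder would turn into a vector.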

2. The Shared Embedding Space

This is the most critical innovation. In a multimodal model, the vectors from different encoders are projected into a shared embedding space.

Think of this space as a giant, multi-dimensional map. In a well-trained multimodal model, the vector for the text “dog” is located very close to the vector for an image of a dog, and close to the vector for the sound of a bark. The model doesn’t just see a JPEG file or a text string; it sees the concept of “dogness” represented mathematically.

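Because these concepts share the same space, the model can in principle perform arithmetic on meaning. The classic illustration comes from word embeddings, where the vector for “king” minus the vector for “man” plus the vector for “woman” lands near the vector for “queen.” A shared multimodal space extends the same idea across media, so that, conceptually speaking, (Image of King) – (Image of Man) + (Image of Woman) ≈ (Image of Queen).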
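A small sketch can show what “close together” means in practice. The three-dimensional vectors below are hand-made stand-ins for encoder outputs, not values from any real model, and cosine similarity is one common (assumed) choice of distance measure.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Illustrative embeddings only; real models use hundreds or thousands of dimensions.
embeddings = {
    "text:dog": np.array([0.9, 0.1, 0.0]),
    "image:dog_photo": np.array([0.8, 0.2, 0.1]),
    "audio:bark": np.array([0.7, 0.3, 0.0]),
    "text:car": np.array([0.0, 0.1, 0.9]),
}

query = embeddings["text:dog"]
ranked = sorted(
    (name for name in embeddings if name != "text:dog"),
    key=lambda name: cosine_similarity(query, embeddings[name]),
    reverse=True,
)
print(ranked)  # nearest neighbours of the text "dog", most similar first
```

With these toy values, the dog photo and the bark rank above the unrelated text “car,” which is the behavior a well-trained shared space aims for.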

3. The Transformer Backbone

Most modern multimodal models rely on the Transformer architecture. Originally designed for text, Transformers utilize a mechanism called “attention.” Attention allows the model to weigh the importance of different parts of the input data relative to each other.

In a multimodal context, cross-attention is key. This mechanism allows the model to attend to specific parts of an image while generating a sentence description. For example, when the model generates the word “frisbee,” its attention weights are ideally concentrated on the image patches where the frisbee is located.
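The core computation behind cross-attention can be sketched in a few lines. This is a simplified single-head version with random inputs; it assumes the queries come from text tokens and the keys and values from image patches, and it omits the learned projection matrices a real Transformer layer would apply.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(text_queries, image_keys, image_values):
    """Scaled dot-product attention from text tokens over image patches."""
    d = text_queries.shape[-1]
    scores = text_queries @ image_keys.T / np.sqrt(d)  # (n_tokens, n_patches)
    weights = softmax(scores, axis=-1)                 # each row sums to 1
    return weights @ image_values, weights

rng = np.random.default_rng(0)
text_queries = rng.normal(size=(4, 8))   # 4 text tokens, 8-dim vectors
image_keys = rng.normal(size=(6, 8))     # 6 image patches
image_values = rng.normal(size=(6, 8))

context, weights = cross_attention(text_queries, image_keys, image_values)
print(weights.shape, context.shape)
```

Each row of `weights` says how much one text token draws on each image patch; in a trained model, the row for “frisbee” would put most of its mass on the patches showing the frisbee.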

4. Alignment and Fine-Tuning

Training these models involves massive amounts of data and compute.

- Pre-training: The model learns general associations (e.g., training on billions of image-text pairs). One common technique is Contrastive Language-Image Pre-training (CLIP), where the model learns to match images to their correct captions and distinguish them from incorrect ones.

- Instruction Tuning: The model is further refined to follow instructions (e.g., “Look at this image and tell me what is wrong with the wiring”). This stage often involves Reinforcement Learning from Human Feedback (RLHF) to ensure the outputs are safe, helpful, and accurate.
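The contrastive pre-training objective mentioned above can be sketched as a symmetric cross-entropy over an image-by-caption similarity matrix. This is a simplified NumPy illustration, not the original training code; the fixed temperature of 0.07 is an assumption made here (CLIP treats the temperature as a learned parameter).

```python
import numpy as np

def l2_normalize(x):
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def log_softmax(logits, axis):
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric contrastive loss: row i of each input is assumed to be a matching pair."""
    image_emb = l2_normalize(image_emb)
    text_emb = l2_normalize(text_emb)
    logits = image_emb @ text_emb.T / temperature  # (N, N) similarity matrix
    idx = np.arange(len(logits))
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()  # image -> correct caption
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()  # caption -> correct image
    return (loss_i2t + loss_t2i) / 2

aligned = contrastive_loss(np.eye(4), np.eye(4))
mismatched = contrastive_loss(np.eye(4), np.roll(np.eye(4), 1, axis=0))
print(aligned < mismatched)
```

The loss is low when each image is most similar to its own caption and high when pairs are shuffled, which is the signal that pulls matching pairs together in the embedding space.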

The Three Pillars: Text, Image, and Speech

To fully appreciate the capability of these systems, we must look at how each modality interacts with the others. It is the interplay between these three pillars that defines the user experience.

1. Text-to-Image and Image-to-Text

This interaction is currently the most mature aspect of multimodal AI.

- Generation (Text-to-Image): Users input a descriptive prompt, and the model creates a novel image. This requires the model to have a deep semantic understanding of visual concepts, styles, lighting, and composition.

- Vision-Language (Image-to-Text): This is often called “Visual Question Answering” (VQA). Users upload an image and ask questions. The model can perform Optical Character Recognition (OCR) to read text in the image, identify objects, and reason about the scene (e.g., “Based on the clouds in this photo, is it likely to rain?”).

2. Speech-to-Text and Text-to-Speech (Audio Processing)

While transcription tools have existed for years, multimodal models bring context to audio.

- Nuanced Transcription: Instead of simple phonetic matching, a multimodal model uses context to disambiguate homophones.

- Speech Generation: Modern Text-to-Speech (TTS) within multimodal models is “expressive.” It can modify tone, cadence, and emotion based on the semantic content of the text. If the text is sad, the voice sounds somber.

- Voice Cloning: With just a few seconds of reference audio, these models can synthesize new speech that closely resembles the reference speaker.

3. Speech-to-Speech (The Holy Grail of Conversational AI)

This represents the cutting edge. In older systems, speech-to-speech translation involved three steps: Speech → Text → Translated Text → Speech. This introduced latency and lost non-verbal cues (like laughter or hesitation).

Native multimodal models (like GPT-4o or advanced Gemini iterations) can process audio directly. They take audio input and generate audio output without necessarily converting it to text in the middle. This approach preserves cues that a text bottleneck discards and improves responsiveness:

- Paralinguistics: Sighs, gasps, and tone survive because they are never flattened into a transcript.

- Turn-taking: The model can handle interruptions naturally.

- Latency: Response times drop sharply because intermediate conversion steps are removed, allowing for fluid, real-time conversation.

Real-World Applications and Use Cases

The integration of text, images, and speech is transforming industries by enabling machines to handle tasks that previously required human perception.

Healthcare: Diagnostic Support and Patient Care

In healthcare, data is inherently multimodal. A patient’s record consists of written notes (text), X-rays or MRI scans (images), and conversations with doctors (speech).

- Radiology Assistants: AI can analyze medical images while reading the patient’s history. It can highlight potential anomalies in an X-ray and draft a preliminary report for the radiologist to review, pointing to the image regions that triggered the observation.

- Surgical Support: During procedures, systems can listen to the surgeon’s voice commands, display relevant medical imaging on screens, and record the procedure notes simultaneously.

- Accessibility: For patients with speech or visual impairments, multimodal apps can act as interpreters, describing the visual world or translating unclear speech into clear text.

Automotive: The Eyes and Ears of Autonomous Vehicles

Self-driving cars are essentially giant multimodal robots.

- Sensor Fusion: They combine visual data from cameras (to see lane markings and pedestrians) with auditory sensors (to hear sirens or horns) and textual data (reading road signs or GPS instructions).

- In-Cabin Interaction: Modern infotainment systems allow drivers to point at a landmark outside the window and ask, “What is that building?” The car’s cameras identify the building, and the voice assistant provides the answer via speech.

Education: Personalized Tutors

Multimodal AI is creating the “super-tutor.”

- Interactive Learning: A student can solve a math problem on paper, take a photo of it, and upload it to the AI. The AI reads the handwriting, identifies the mistake, and speaks an explanation of how to correct it—rather than just giving the answer.

- Language Learning: Students can converse with an AI that listens to their pronunciation (speech), corrects their grammar (text), and shows them diagrams of mouth positioning (images) to improve their accent.

Customer Service: Next-Generation Support Agents

The era of the “Press 1 for Billing” menu is fading.

- Visual Troubleshooting: A customer facing a broken router can initiate a video call with an AI agent. The AI watches the video feed, recognizes the router model, sees the blinking red light, and instructs the customer verbally: “I see the power light is blinking. Please unplug the yellow cable on the left.”

- Sentiment Analysis: The agent monitors the customer’s voice pitch and speed. If the customer sounds increasingly agitated, the AI can adjust its own tone to be more calming or escalate the issue to a human manager immediately.

Creative Industries: Co-Pilots for Art and Media

- Film Pre-visualization: Directors can speak a scene description (“A dark alley, raining, 1940s noir style”) and generate storyboards instantly.

- Game Design: Developers can generate 3D assets from sketches or create voice lines for non-player characters (NPCs) on the fly, making game worlds more immersive and responsive.

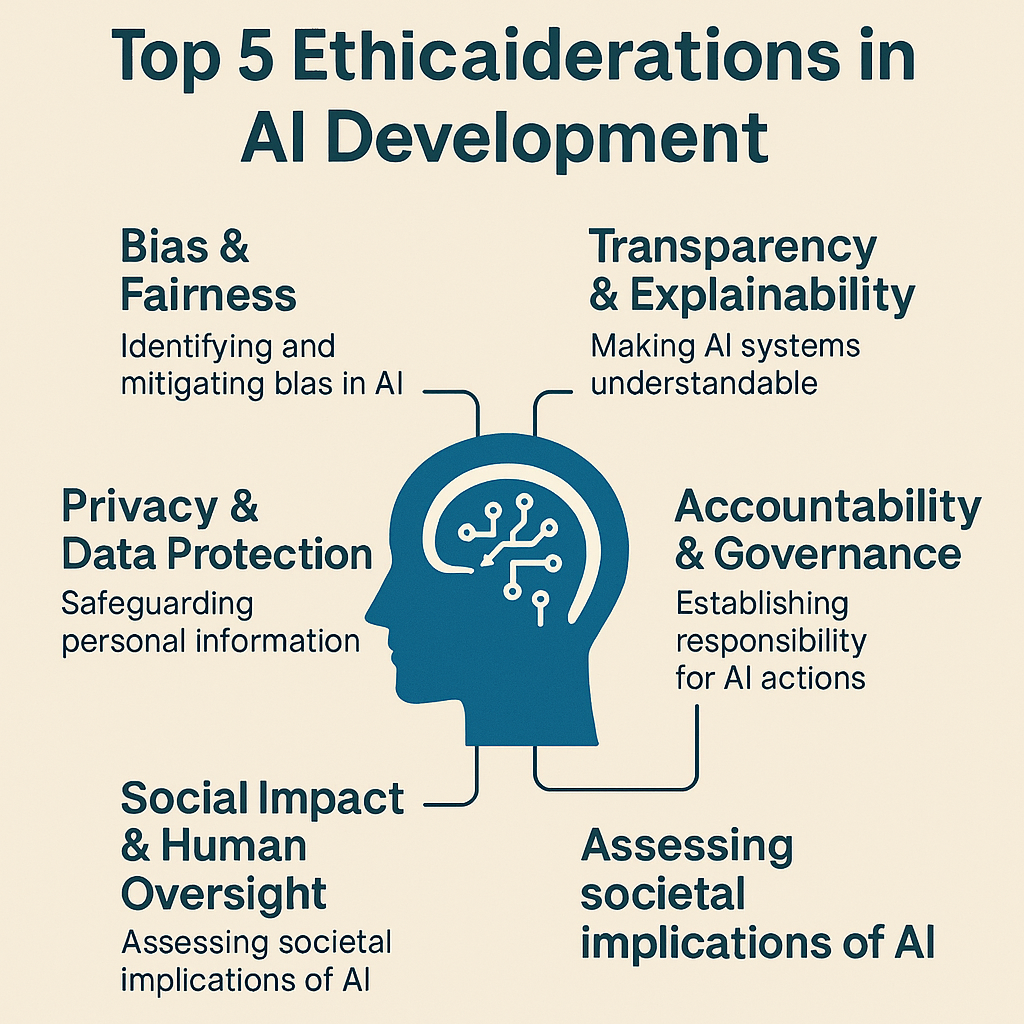

Ethical Considerations and Challenges

The power of multimodal AI comes with significant risks. As these models become more capable, the line between reality and fabrication blurs, raising urgent ethical questions.

1. Deepfakes and Misinformation

The most immediate threat is the democratization of high-quality fake media.

- Fabricated Evidence: It is becoming easy to create realistic audio of a CEO admitting to fraud or a video of a politician making offensive remarks.

- Identity Theft: Voice cloning and facial animation can be used to bypass biometric security or trick family members in “grandparent scams.”

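- Mitigation: Researchers and industry groups are working on provenance and watermarking standards (like C2PA, which attaches cryptographically signed, tamper-evident provenance metadata to media files), but such metadata can still be stripped and adoption is not yet universal.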

2. Bias Amplification Across Modalities

Bias in AI is not new, but multimodality introduces “cross-modal bias.”

- Stereotyping: If a model is trained on data where doctors are mostly men and nurses are mostly women, asking it to “Draw a doctor” is likely to yield mostly male images.

- Visual-Linguistic Bias: A model might correctly identify a “cooking utensil” in a Western kitchen but mislabel traditional cookware from other cultures as “tools” or “debris” due to a lack of diverse training data.

- Impact: This exclusion can alienate users and perpetuate harmful societal stereotypes at a global scale.

3. Privacy and Data Harvesting

Training multimodal models requires enormous amounts of data.

- Data Scraping: Tech companies often scrape public internet data, including YouTube videos, podcasts, and social media photos, to train models. This raises questions about copyright and consent.

- Surveillance: The ability to analyze video and audio in real time makes these models powerful tools for surveillance. In the wrong hands, they could be used to automate the tracking of individuals based on their face, gait, or voice print.

4. Hallucinations

Multimodal models can “hallucinate” just like text models, but the consequences can be more confusing.

- Visual Hallucinations: A model might describe an object in an image that simply isn’t there, or misread text in a blurry photo with high confidence.

- Grounding Issues: There is often a disconnect between the visual encoder and the language decoder, leading to descriptions that sound plausible but are factually wrong regarding the image content.

Evaluating Multimodal Models: What to Look For

If you are a business or developer looking to adopt multimodal AI, how do you choose? Here are the criteria that matter in practice:

1. Modality Alignment

How well do the modalities actually “talk” to each other?

- Test: Upload a complex image (e.g., a meme with sarcasm) and ask the model to explain the humor. A model with poor alignment will describe the image elements literally but miss the joke. A well-aligned model connects the text overlay with the visual context to explain the irony.

2. Latency and Inference Cost

Multimodal models are computationally heavy.

- Speech-to-Speech Latency: For conversational agents, a common rule of thumb is that response latency should stay under roughly 500ms to feel natural. Longer delays tend to produce awkward pauses.

- Token Costs: Processing images usually consumes significantly more “tokens” (the units in which models measure, and providers typically bill, input and output) than text. Organizations must calculate the ROI of enabling vision capabilities for their specific use case.
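A back-of-the-envelope comparison shows why images dominate token budgets. The sketch below assumes one token per 14×14-pixel patch and roughly four characters per text token; both are illustrative assumptions, since each provider counts and bills image tokens in its own way.

```python
import math

def image_token_estimate(width, height, patch_size=14):
    """Hypothetical scheme: one token per patch_size x patch_size patch."""
    return math.ceil(width / patch_size) * math.ceil(height / patch_size)

def text_token_estimate(text, chars_per_token=4):
    """Rough heuristic for English text; not a real tokenizer."""
    return math.ceil(len(text) / chars_per_token)

question = "What is wrong with the wiring in this photo?"
image_tokens = image_token_estimate(224, 224)
text_tokens = text_token_estimate(question)
print(image_tokens, text_tokens, round(image_tokens / text_tokens, 1))
```

Under these assumptions, even a small 224×224 image costs many times more tokens than the question asked about it, and the gap widens quickly with resolution.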

3. Context Window Size

How much information can the model hold in its “short-term memory”?

- Long-Context Video: Can the model watch a 1-hour video and answer a question about a detail at the 5-minute mark? “Needle in a haystack” retrieval in video and audio is a major differentiator between top-tier models and average ones.
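A “needle in a haystack” check can be prototyped before committing to a vendor. The harness below is a minimal sketch: it plants one distinctive fact at different positions in a long sequence of segments (think one segment per minute of video) and measures how often the answer is recovered. `truncated_model` is a deliberately weak stand-in for a real model call, and every name here is hypothetical.

```python
def build_haystack(n_segments, needle, needle_index):
    """Create filler segments with one distinctive fact planted at needle_index."""
    segments = [f"Segment {i}: routine discussion." for i in range(n_segments)]
    segments[needle_index] = f"Segment {needle_index}: {needle}"
    return segments

def truncated_model(segments, question, window=20):
    """Stand-in for a real model: it only 'remembers' the last `window` segments."""
    for segment in segments[-window:]:
        if "access code" in segment:
            return segment
    return "not found"

def needle_recall(model_fn, n_segments, positions, needle, expected):
    """Fraction of needle positions for which the model's answer contains `expected`."""
    hits = 0
    for position in positions:
        haystack = build_haystack(n_segments, needle, position)
        answer = model_fn(haystack, "What is the access code?")
        hits += expected in answer
    return hits / len(positions)

recall = needle_recall(
    truncated_model, 60, [5, 30, 55], "The access code is 7421.", "7421"
)
print(f"recall: {recall:.2f}")
```

Swapping `truncated_model` for a call to the system under evaluation gives a simple recall-by-position profile; a drop for early positions signals weak long-context retrieval.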

4. Safety Guardrails

- Jailbreaking: Visual inputs can sometimes bypass text-based safety filters. For example, a model might refuse to write instructions for making a weapon, but might comply if shown an image of the components and asked to “label the assembly steps.” Robust models have safety filters applied across all input modalities.

Implementation Guide: Best Practices

Adopting multimodal AI requires a strategic approach to data and infrastructure.

Data Preparation

Your model is only as good as your data.

- Cleanliness: Ensure audio recordings are free of background noise unless you specifically want the model to handle noisy environments.

- Metadata: When fine-tuning, verify that your image-text pairs are high quality. Automated captions (alt-text) scraped from the web are often noisy; human-verified captions yield better results.

Deployment Strategy

- Edge vs. Cloud: For privacy-sensitive applications (like healthcare), consider “Edge AI”: running smaller multimodal models directly on the device rather than sending data to the cloud. While less powerful, they keep data on the device and avoid network round-trip latency, though on-device inference still takes time.

- Hybrid Approaches: Use unimodal models for simple tasks (e.g., standard OCR) and reserve the heavy multimodal models for complex reasoning tasks to save costs.
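The hybrid approach amounts to a routing decision in front of your models. Here is a minimal sketch, assuming a small set of task labels and placeholder model names (`unimodal-ocr`, `unimodal-asr`, `multimodal-large`) that are purely illustrative.

```python
from dataclasses import dataclass

@dataclass
class Request:
    task: str
    has_image: bool = False
    has_audio: bool = False

# Hypothetical mapping from simple tasks to cheap, specialized models.
SIMPLE_TASKS = {"ocr": "unimodal-ocr", "transcribe": "unimodal-asr"}

def route(request):
    """Send simple tasks to a cheap unimodal model; everything else to the large one."""
    if request.task in SIMPLE_TASKS:
        return SIMPLE_TASKS[request.task]
    return "multimodal-large"

print(route(Request("ocr", has_image=True)))
print(route(Request("explain_chart", has_image=True)))
```

In practice the routing rule would likely also consider input size and required accuracy, but even a lookup table like this keeps routine traffic off the most expensive model.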

User Experience (UX) Design

Designing for multimodal interactions is different from designing for screens.

- Fallbacks: Always provide a fallback. If the voice recognition fails, allow the user to type.

- Feedback Loops: The AI should confirm what it saw or heard. “I see you are holding a red connector. Is that correct?” This allows the user to correct the model’s perception before it proceeds.
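Fallbacks and feedback loops can be combined into one decision rule driven by the model’s confidence in what it perceived. The thresholds below (0.85 and 0.5) and the function name are assumptions for illustration; suitable values depend on the application and on how well calibrated the confidence scores are.

```python
def next_action(perception, confidence, confirm_below=0.85, fallback_below=0.5):
    """Decide whether to proceed, ask the user to confirm, or fall back to typing."""
    if confidence < fallback_below:
        return ("fallback", "I couldn't make that out. Could you type it instead?")
    if confidence < confirm_below:
        return ("confirm", f"I think I see {perception}. Is that correct?")
    return ("proceed", f"Working with {perception}.")

for score in (0.95, 0.7, 0.3):
    print(next_action("a red connector", score))
```

The middle band is where the confirmation prompt earns its keep: the user corrects a shaky perception before the system acts on it.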

The Future: Embodied AI and Beyond

As of 2026, we are transitioning from “internet AI” (models that live on servers) to “embodied AI” (models that possess a physical form).

Robotics Integration

Multimodal models are becoming the “brains” of robots. A robot equipped with a multimodal model can understand a command like “Pick up the blue cup and put it near the sink.”

- Vision: It sees the cup and the sink.

- Language: It understands the spatial relationship “near.”

- Proprioception: It senses the position of its own arm and plans the movement.

This convergence suggests a future where general-purpose household robots become viable consumer products.

Sensory Expansion

The definition of “multimodal” is expanding beyond human senses. Models are beginning to incorporate:

- Thermal Imaging: Seeing heat.

- LiDAR/Depth: Sensing distance with lasers.

- Hyperspectral Imaging: Seeing chemical compositions.

By combining these super-human inputs with natural language reasoning, AI will assist in fields like agriculture (detecting crop disease from satellite imagery) and manufacturing (predicting machine failure from vibration sounds).

Conclusion

Multimodal AI models represent the reunification of sensory perception in the digital realm. By weaving together text, images, and speech, we are creating systems that do not just process data, but experience the world in a way that is strikingly similar to our own.

For businesses, the opportunity lies in creating smoother, more intuitive customer experiences and automating complex, perceptual tasks. For individuals, these tools offer unprecedented creative freedom and accessibility support. However, navigating this landscape requires a vigilant eye on the ethical implications—ensuring that as machines learn to see and speak, they do so with accuracy, fairness, and safety.

As we move forward, the most successful implementations will be those that treat multimodality not as a novelty, but as a bridge—connecting the digital intelligence of AI with the messy, beautiful, audio-visual reality of the human experience.

Next Steps

- Audit Your Workflows: Identify processes in your organization that currently require a human to look at an image or listen to audio and type a report. These are prime candidates for multimodal automation.

- Experiment: Use available APIs (like OpenAI or Google Cloud Vertex AI) to build a simple prototype. For example, build a tool that takes a photo of a meeting whiteboard and emails a summary to the team.

- Establish Governance: Before deploying, create a clear policy on how your organization will handle biometric data (voice/face) and how you will label AI-generated content.

FAQs

What is the difference between unimodal and multimodal AI?

Unimodal AI specializes in a single type of data, such as text (for writing) or images (for recognition). Multimodal AI integrates multiple data types—text, images, audio—into a single system, allowing it to understand and generate content across these different mediums simultaneously.

Can multimodal AI models understand video?

Yes. Video is essentially a sequence of images (frames) combined with audio. Multimodal models process video by analyzing the visual frames and the audio track in parallel, allowing them to summarize events, answer questions about the video content, and identify specific moments.
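Because video contains far more frames than a model needs, a common first step is to sample frames at a lower rate before analysis. The sketch below assumes a fixed sampling rate of one frame per second; the function names and the rate are illustrative, not taken from any specific model.

```python
def sample_frame_indices(duration_s, fps, sampled_per_second=1.0):
    """Return indices of frames to keep when sampling a video at a lower rate."""
    total_frames = int(duration_s * fps)
    step = max(1, round(fps / sampled_per_second))
    return list(range(0, total_frames, step))

def timestamp_of(frame_index, fps):
    """Convert a frame index back to seconds so answers can cite a moment."""
    return frame_index / fps

indices = sample_frame_indices(duration_s=60, fps=30)
print(len(indices), indices[:3], timestamp_of(indices[-1], fps=30))
```

Keeping the timestamp for each sampled frame is what lets a system answer with a specific moment rather than a vague summary.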

Are multimodal models more expensive to run?

Generally, yes. Processing images and audio requires significantly more computational power and memory than processing text alone. The “token count” for an image is much higher than for a text sentence, leading to higher inference costs and energy consumption.

How does “grounding” work in multimodal AI?

Grounding refers to linking abstract concepts (text) to concrete representations (pixels or sound). For example, if the text says “red balloon,” a grounded model knows exactly which pixels in the image correspond to the red balloon. Poor grounding leads to hallucinations where the description does not match the image.

Is my voice data safe when used with these models?

Privacy policies vary by provider. Some enterprise models do not train on customer data, while consumer-grade free tools might use inputs for training. It is crucial to review the data retention policy of any AI service, especially when using biometric data like voice recordings.

Can multimodal AI translate sign language?

Yes, this is a promising application. By using video input (computer vision) to track hand movements and facial expressions, multimodal models can translate sign language into text or spoken speech, bridging communication gaps for the Deaf community.

What is CLIP?

CLIP (Contrastive Language-Image Pre-training) is a foundational technique introduced by OpenAI. It trains a model to predict which caption goes with which image. This teaching method helped bridge the gap between computer vision and natural language processing, enabling many current multimodal capabilities.

Do these models actually “see” and “hear”?

No. They process mathematical representations of light and sound. When a model “sees” an image, it is analyzing a grid of numbers representing pixel values. When it “hears,” it is analyzing a spectrogram. However, the sophistication of these analyses allows them to mimic the results of seeing and hearing.
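To illustrate what “analyzing a spectrogram” means, the sketch below builds a magnitude spectrogram from a synthetic 1,000 Hz tone using a short-time Fourier transform. The sample rate, frame length, and hop size are arbitrary choices for the example.

```python
import numpy as np

def spectrogram(samples, frame_len=256, hop=128):
    """Magnitude spectrogram: rows are time frames, columns are frequency bins."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(samples) - frame_len) // hop
    frames = np.stack(
        [samples[i * hop : i * hop + frame_len] * window for i in range(n_frames)]
    )
    return np.abs(np.fft.rfft(frames, axis=1))

sample_rate = 8000
t = np.arange(sample_rate) / sample_rate   # one second of audio
tone = np.sin(2 * np.pi * 1000 * t)        # pure 1,000 Hz tone

spec = spectrogram(tone)
peak_bin = int(spec.mean(axis=0).argmax())
peak_hz = peak_bin * sample_rate / 256     # bin index -> frequency in Hz
print(spec.shape, peak_hz)
```

The model never receives “a sound”; it receives an array like `spec`, in which the tone shows up as a bright column at the bin corresponding to its frequency.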

References

- OpenAI. (2023). GPT-4V(ision) System Card. OpenAI Research. https://openai.com/research

- Google DeepMind. (2023). Gemini: A Family of Highly Capable Multimodal Models. Google DeepMind Technical Report. https://deepmind.google/technologies/gemini/

- Radford, A., et al. (2021). Learning Transferable Visual Models From Natural Language Supervision (CLIP). OpenAI. arXiv preprint. https://arxiv.org/abs/2103.00020

- Meta AI. (2023). ImageBind: One Embedding Space to Bind Them All. Meta AI Research. https://ai.meta.com/research/

- National Institute of Standards and Technology (NIST). (2023). Artificial Intelligence Risk Management Framework (AI RMF 1.0). U.S. Department of Commerce. https://www.nist.gov/itl/ai-risk-management-framework

- Anthropic. (2024). The Claude 3 Model Family: Opus, Sonnet, Haiku. Anthropic Research. https://www.anthropic.com/news/claude-3-family

- Vaswani, A., et al. (2017). Attention Is All You Need. (The foundational paper on Transformer architecture). arXiv preprint. https://arxiv.org/abs/1706.03762

- McKinsey & Company. (2023). The Economic Potential of Generative AI: The Next Productivity Frontier. McKinsey Global Institute. https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-generative-ai-the-next-productivity-frontier

- Stanford University. (2024). Artificial Intelligence Index Report 2024. Stanford Institute for Human-Centered AI (HAI). https://ai.stanford.edu/report/