Machine learning (ML) has moved rapidly from experimental sandboxes to the critical path of enterprise operations. Whether it is a credit scoring algorithm in banking, a diagnostic tool in healthcare, or a recommendation engine in e-commerce, ML models are now decision-makers. However, with great power comes great liability. The ability to build a model is no longer the primary differentiator; the competitive edge now lies in the ability to manage, govern, and maintain that model safely and effectively over time.

This guide explores the intricate world of ML model governance and lifecycle management. It is not enough to train a model and deploy it; organizations must ensure that models remain accurate, fair, compliant, and transparent throughout their existence. We will move beyond the buzzwords of MLOps to look at the structural, ethical, and practical frameworks necessary to run AI at scale.

Scope of this guide

In this guide, ML model governance refers to the policies, controls, and verification processes that ensure a model fulfills its intended purpose without introducing unacceptable risk. Lifecycle management refers to the technical and operational execution of that model’s life, from conception to retirement.

What is IN scope:

- End-to-end phases of the ML lifecycle (inception, data engineering, development, validation, deployment, monitoring, retirement).

- Risk management, compliance (including mentions of the EU AI Act), and auditability.

- The intersection of MLOps technology and governance policy.

- Practical frameworks for team roles and documentation.

What is OUT of scope:

- Deep code tutorials on writing Python/PyTorch (we focus on process and architecture).

- Vendor-specific comparisons (we focus on capabilities over brand names).

Key Takeaways

- Governance is not a blocker: When implemented correctly, governance accelerates deployment by reducing rework and providing legal safety nets.

- The lifecycle is circular, not linear: Deployment is not the end; it is the beginning of the monitoring and retraining loop.

- Documentation is code: In regulated industries, an undocumented model is a liability, regardless of its accuracy.

- Drift is inevitable: Virtually every deployed model degrades over time as the world it describes changes. A robust lifecycle strategy is defined by how quickly you detect and remediate this decay.

- Humans remain central: Automation handles the heavy lifting, but human oversight is required for ethical alignment and edge-case adjudication.

1. Defining ML Model Governance and Lifecycle Management

Before diving into the mechanics, we must distinguish between the “rules of the road” (governance) and “driving the car” (management).

What is ML Model Governance?

Governance is the control layer. It answers the questions: Who authorized this model? Is it fair? Is it legal? Can we explain why it made that decision? It involves establishing:

- Access Controls: Who can touch the data and the code.

- Audit Trails: A permanent record of who changed what, and when.

- Validation Standards: The threshold of performance required before a model can go live.

- Ethical Guardrails: Checks for bias, toxicity, or discriminatory outputs.

In practice, governance often manifests as a “Model Registry”—a central repository where models are versioned, tagged with metadata, and gated by approval workflows.

What is Lifecycle Management?

Lifecycle management is the operational layer, often synonymous with MLOps (Machine Learning Operations). It answers the questions: Is the model up? Is it fast enough? Has the data changed? It involves:

- CI/CD Pipelines: Continuous Integration and Continuous Deployment automation to move code to production.

- Infrastructure Management: Scaling GPUs and servers based on demand.

- Monitoring: Tracking latency, throughput, and error rates.

- Retraining: The automated pipelines that refresh the model with new data.

While governance sets the policy, lifecycle management executes it. You cannot have a healthy AI strategy without both.

2. The Seven Phases of the ML Model Lifecycle

To manage models effectively, we must break their existence down into distinct phases. Each phase has its own governance requirements and operational tasks.

Phase 1: Inception and Problem Definition

Before a single line of code is written, the governance process begins. This phase is about justifying the existence of the model.

- Business Case: What problem are we solving? Is ML actually the right tool, or would a simple rules-based engine suffice?

- Feasibility Study: Do we have the data? Is the data clean?

- Risk Assessment: If this model fails, what happens? In a movie recommendation system, a failure is an annoyance. In a self-driving car or a loan approval system, failure can lead to injury or litigation.

- KPI Definition: We must define success metrics (e.g., accuracy, precision, recall) and business metrics (e.g., conversion rate, cost savings) upfront.

Governance Check: Sign-off from business stakeholders confirming the ROI justifies the risk.

Phase 2: Data Engineering and Management

Data is the fuel for the model. Governance here focuses on Data Lineage—knowing exactly where data came from and how it was modified.

- Data Sourcing: Ensuring licensing allows for commercial use (critical for Generative AI).

- Privacy Checks: Removing or masking PII (Personally Identifiable Information) to comply with regulations such as GDPR or HIPAA.

- Versioning: Creating immutable snapshots of the datasets used for training. If you retrain a model in six months, you must be able to recreate the exact dataset used today.

- Bias Detection: Analyzing the dataset for historical prejudices (e.g., a hiring dataset that heavily favors one demographic).

Governance Check: A Data Card or datasheet documenting the dataset’s composition, source, and limitations.
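One way to make the versioning requirement checkable is to store a content fingerprint alongside each training snapshot and record it in the Data Card. The sketch below assumes the dataset fits in memory as a list of JSON-serializable records; `fingerprint_dataset` is a hypothetical helper, not part of any particular data-versioning tool, which would do the same thing at scale over files or table versions.

```python
import hashlib
import json
from typing import Any, Iterable, Mapping


def fingerprint_dataset(rows: Iterable[Mapping[str, Any]]) -> str:
    """Return a SHA-256 digest that identifies an exact dataset snapshot.

    Each row is serialized as canonical JSON (sorted keys, compact
    separators), so the digest depends only on the content and row order,
    not on the order in which dict keys were inserted.
    """
    digest = hashlib.sha256()
    for row in rows:
        line = json.dumps(row, sort_keys=True, separators=(",", ":"))
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")  # unambiguous row delimiter
    return digest.hexdigest()


# Toy records for illustration only.
snapshot = [
    {"customer_id": 1, "income": 52000, "defaulted": False},
    {"customer_id": 2, "income": 61000, "defaulted": True},
]
print(fingerprint_dataset(snapshot))
```

If the digest recomputed in six months differs from the one recorded today, the snapshot has changed and the original training run cannot be reproduced from it.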

Phase 3: Model Development and Training

This is the “science” part of data science, where algorithms are selected and hyperparameters are tuned.

- Experiment Tracking: Data scientists often run hundreds of experiments. Tools like MLflow or Weights & Biases are used to log every parameter and resulting metric.

- Reproducibility: A major governance headache is the “it worked on my laptop” syndrome. Training environments should be containerized (e.g., using Docker), with dependencies pinned and random seeds fixed, so that someone else can repeat the training process and obtain matching results.

- Code Quality: Applying standard software engineering practices (version control, unit testing) to ML code.

Governance Check: Automated logs linking a specific model version to the code and data version used to create it.
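A lineage log entry can be as simple as an immutable record tying a model version to its inputs. This is a minimal sketch with hypothetical field names (`code_commit`, `data_fingerprint`, `container_image`); experiment trackers such as MLflow capture similar information, but this is not their API.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from typing import Dict


@dataclass(frozen=True)
class LineageRecord:
    """Links one trained model version to the exact inputs that produced it."""
    model_name: str
    model_version: str
    code_commit: str        # e.g. the Git commit SHA of the training code
    data_fingerprint: str   # e.g. a SHA-256 digest of the training snapshot
    container_image: str    # the image the training run executed in
    hyperparameters: Dict[str, float] = field(default_factory=dict)

    def record_id(self) -> str:
        """Deterministic ID: any change to code, data, or parameters changes it."""
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()
```

Because the record is frozen and its ID is derived from its contents, an auditor can detect whether a stored record was altered after the fact.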

Phase 4: Model Validation and Evaluation

The model is trained, but is it good enough? This phase is often where governance exerts the most control.

- Offline Evaluation: Testing the model against a “holdout” dataset that it has never seen before.

- Fairness Testing: Specifically testing the model’s performance across different protected classes (age, gender, race) to ensure disparate impact is minimized.

- Stress Testing: Feeding adversarial, malformed, or out-of-range inputs to the model to see whether it degrades gracefully or produces confident but wrong outputs.

- Explainability (XAI): Generating SHAP values or LIME explanations to understand why the model makes specific predictions.

Governance Check: A formal Model Validation Report signed off by a separate team (often Risk Management) that is not the development team.
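Fairness testing often starts with a simple group metric. The sketch below computes the disparate impact ratio: the lowest group selection rate divided by the highest. The 0.8 cutoff frequently quoted alongside it comes from the US “four-fifths rule” for employment selection and is a heuristic, not a universal legal threshold. The function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Sequence


def selection_rates(predictions: Sequence[int], groups: Sequence[str]) -> Dict[str, float]:
    """Share of positive predictions (e.g. 'approve' = 1) within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must be the same length")
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(predictions: Sequence[int], groups: Sequence[str]) -> float:
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody is selected, so selection rates are equal
    return min(rates.values()) / highest
```

A validation team might flag any model whose ratio falls below its chosen threshold and require a written justification or mitigation before sign-off.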

Phase 5: Deployment and Integration

Moving the model from a research environment to a production environment.

- Serving Strategy: Real-time API vs. Batch processing.

- Shadow Mode: Running the model in production alongside the existing system (or human process) without acting on its decisions, simply to log how it would have performed.

- Canary Deployment: Rolling the model out to a small percentage of users (e.g., 5%) to check for technical issues before a full rollout.

- Approvals: Manual gates where a human must click “Approve” to push to production.
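The canary rollout described above needs a stable way to decide which users see the new model. A common approach, sketched here under the assumption that each request carries a user ID, is to hash the ID into a fixed number of buckets; `in_canary` is a hypothetical helper and the version labels are illustrative.

```python
import hashlib


def in_canary(user_id: str, model_version: str, percent: float) -> bool:
    """Deterministically assign a user to the canary cohort.

    Hashing (model_version, user_id) gives each user a stable bucket in
    [0, 10000), so the same user always hits the same model during a
    rollout, while a new rollout reshuffles who lands in the cohort.
    """
    key = f"{model_version}:{user_id}".encode("utf-8")
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % 10_000
    return bucket < percent * 100  # percent=5.0 -> buckets 0..499


def route(user_id: str) -> str:
    """Send roughly 5% of users to the candidate, the rest to the incumbent."""
    return "v1.3" if in_canary(user_id, "v1.3", percent=5.0) else "v1.2"
```

Deterministic assignment matters for governance too: if a canary user reports a problem, you can reconstruct which model version served them.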

Governance Check: Verification that the deployed artifact matches the validated artifact (hashing/signing).
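The hashing/signing check can be sketched with the standard library. This version assumes a shared secret key held by the validation team and uses HMAC-SHA256 for simplicity; many real setups use asymmetric signatures instead, so the deployment pipeline can verify a signature without being able to create one.

```python
import hashlib
import hmac


def artifact_digest(artifact: bytes) -> str:
    """SHA-256 digest of the serialized model file."""
    return hashlib.sha256(artifact).hexdigest()


def sign_digest(digest: str, key: bytes) -> str:
    """The validation team signs the digest of the artifact it approved."""
    return hmac.new(key, digest.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_before_deploy(artifact: bytes, signature: str, key: bytes) -> bool:
    """Deployment gate: recompute the digest and compare signatures.

    hmac.compare_digest performs a constant-time comparison, which avoids
    leaking information through timing differences.
    """
    expected = sign_digest(artifact_digest(artifact), key)
    return hmac.compare_digest(expected, signature)
```

If anyone modifies the model file between validation and deployment, the recomputed digest changes and the gate refuses to deploy.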

Phase 6: Monitoring and Observability

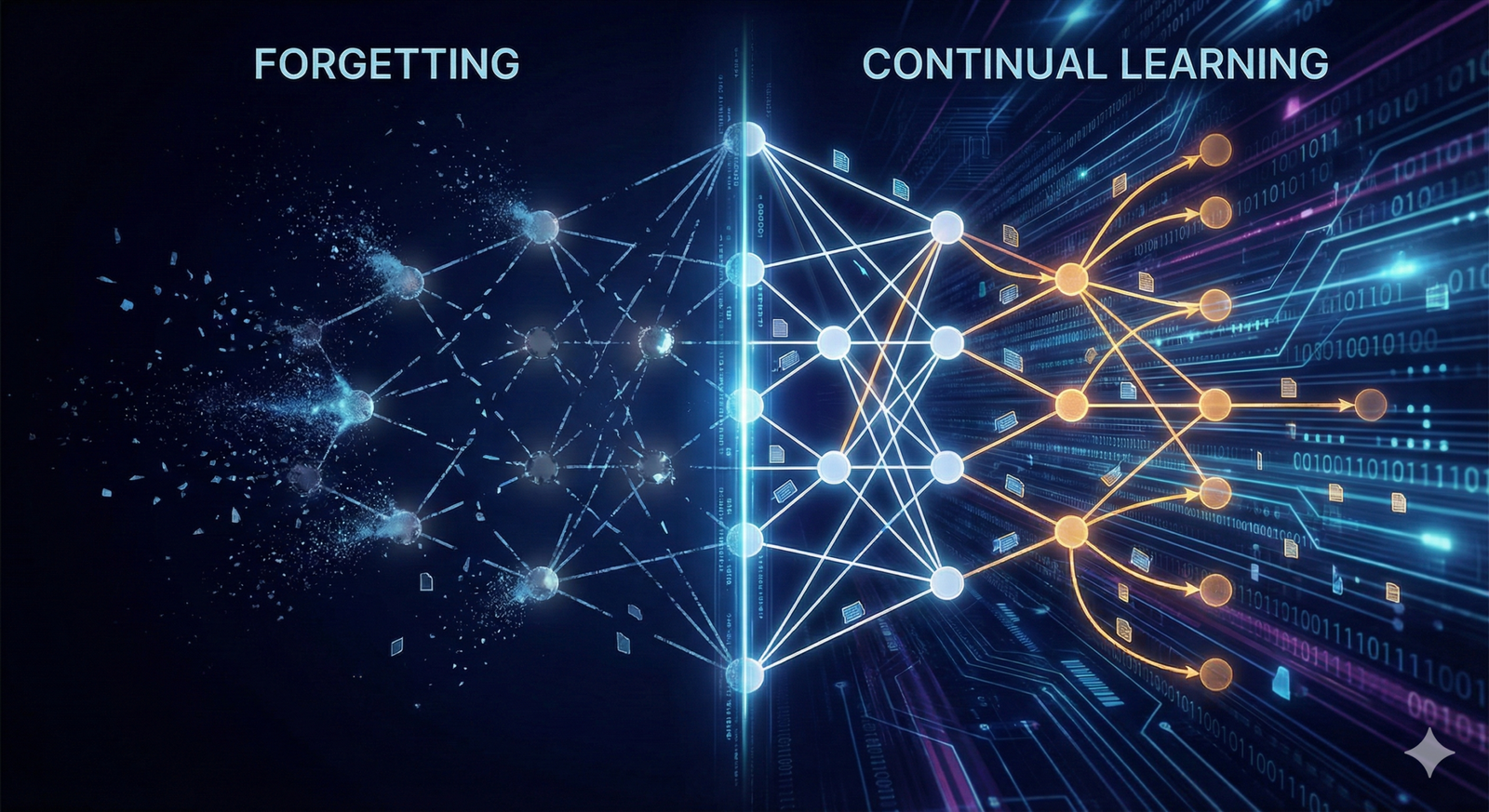

Once the model is live, the world it was trained on starts to change and its performance begins to degrade. This shift is known as “drift.”

- Data Drift: The input data changes. For example, consumer spending habits changed drastically during the COVID-19 pandemic, causing many fraud models trained on 2019 data to misfire.

- Concept Drift: The relationship between variables changes. For example, the definition of “spam email” evolves as scammers change tactics.

- System Health: Latency (how long it takes to return a prediction) and throughput.

Governance Check: Automated alerts that trigger an incident response if performance drops below a defined threshold.
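A naive “alert on any drop below the threshold” rule tends to fire on noise in small evaluation windows. One mitigation, sketched below with a hypothetical `PerformanceAlert` class, is to open an incident only after the metric has stayed below the floor for several consecutive windows.

```python
from collections import deque
from typing import Deque


class PerformanceAlert:
    """Open an incident when a metric stays below a floor for N consecutive windows."""

    def __init__(self, floor: float, consecutive: int = 3) -> None:
        self.floor = floor
        # Remembers only the last `consecutive` breach/no-breach outcomes.
        self.recent: Deque[bool] = deque(maxlen=consecutive)

    def observe(self, metric_value: float) -> bool:
        """Record one window's metric; return True if an incident should be opened."""
        self.recent.append(metric_value < self.floor)
        return len(self.recent) == self.recent.maxlen and all(self.recent)


alert = PerformanceAlert(floor=0.90, consecutive=3)
for window_accuracy in [0.93, 0.88, 0.89, 0.87]:
    if alert.observe(window_accuracy):
        print("open incident: accuracy below 0.90 for 3 consecutive windows")
```

The floor and window count are illustrative; they should come from the documented Minimum Viable Performance standard for the model.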

Phase 7: Retirement and Archiving

Models do not live forever. They eventually become obsolete or too costly to maintain.

- Decommissioning: Shutting down the API endpoints.

- Archiving: Storing the model artifacts, training data, and documentation for a required regulatory period (often 7 years in finance).

- Notification: Informing downstream systems and users that the model is no longer available.

Governance Check: Confirmation that no active systems rely on the retired model.
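The “no active systems” confirmation can be partly automated. This sketch assumes you can extract the most recent call time per consumer from API gateway logs; `blocking_consumers` is a hypothetical helper. A generous quiet period helps catch batch jobs that only run monthly or quarterly, but traffic data alone cannot prove a dependency is gone, so owners should still confirm.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def blocking_consumers(
    last_call_by_consumer: Dict[str, datetime],
    now: datetime,
    quiet_period: timedelta = timedelta(days=30),
) -> List[str]:
    """Consumers that called the model's endpoint within the quiet period.

    Retirement should be blocked, and these teams contacted, while the
    returned list is non-empty.
    """
    cutoff = now - quiet_period
    return sorted(name for name, last in last_call_by_consumer.items() if last >= cutoff)
```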

3. Why Governance Matters: Risk, Compliance, and Trust

Organizations often view governance as bureaucracy, but in the context of AI, it is a survival mechanism.

The Regulatory Landscape

As of 2024 and moving into 2025, the regulatory environment for AI has tightened significantly.

- EU AI Act: Categorizes AI systems by risk. High-risk systems (e.g., biometric identification, critical infrastructure, employment) face strict conformity assessments, data governance requirements, and human oversight mandates.

- GDPR (General Data Protection Regulation): While primarily about data privacy, Article 22 gives individuals the right not to be subject to a decision based solely on automated processing when that decision produces legal or similarly significant effects on them. This necessitates governance around human-in-the-loop interventions.

- Algorithmic Accountability Act (proposed U.S. legislation): Would require companies to conduct impact assessments of automated decision systems.

Reputational and Financial Risk

Beyond the law, bad models lose money and destroy trust.

- Financial Loss: An algorithmic trading model with a bug can lose millions in seconds. A dynamic pricing model that generates erroneous discounts can erode margins.

- Brand Damage: If a chat agent creates offensive content or a hiring algorithm is proven sexist, the PR fallout can be devastating. Governance provides the “kill switches” and testing protocols to prevent this.

The “Black Box” Problem

Deep neural networks are notoriously opaque. Governance therefore demands Explainable AI (XAI) wherever decisions must be justified. If a bank denies a loan, it must be able to say why (e.g., “debt-to-income ratio too high”), rather than “the computer said no.” Governance frameworks ensure that black-box models are not used in scenarios where explainability is non-negotiable.

4. Key Components of a Governance Framework

To build a governance structure, you need three pillars: People, Process, and Technology.

People: Roles and Responsibilities (RACI)

Clear ownership is the first step.

- Data Scientists: Responsible for building the model and ensuring it meets technical metrics.

- ML Engineers / MLOps: Responsible for the pipeline, deployment, and infrastructure.

- Model Owners / Product Managers: Responsible for the business outcome and the ROI. They “own” the risk.

- Risk/Compliance Officers: The “second line of defense.” They validate the model independently and ensure it adheres to regulations.

- AI Ethics Committee: A diverse group that reviews models for societal impact, potential harm, and fairness.

Process: Policies and Standards

You need written standards that everyone follows.

- Minimum Viable Performance: What is the lowest accuracy score acceptable for release?

- Retraining Frequency: Does the model need to be retrained daily, weekly, or on-demand?

- Incident Response Plan: If the model goes rogue (e.g., a chatbot starts swearing), who gets the call at 2 AM? How do you roll back to the previous version?

Technology: The Governance Stack

Modern governance is automated via software.

- Model Registry: The single source of truth (e.g., MLflow Model Registry, Amazon SageMaker Model Registry). It tracks each model version and its lifecycle stage, such as Staging, Production, or Archived.

- Feature Store: A centralized vault for data features to ensure training and serving use the exact same data definitions.

- Metadata Store: A database that logs every run, every hyperparameter, and every metric.
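To make the registry's role concrete, here is a toy in-memory version that tracks stages, keeps an audit log, and supports the rollback an incident response plan depends on. It is a sketch only: `InMemoryRegistry` is hypothetical, not the MLflow or SageMaker API, and a real registry persists this state and enforces access control.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class InMemoryRegistry:
    """Toy model registry: version stages, production history, and an audit log."""
    stages: Dict[str, str] = field(default_factory=dict)   # version -> stage
    history: List[str] = field(default_factory=list)      # production versions, oldest first
    audit_log: List[str] = field(default_factory=list)    # who did what

    def register(self, version: str, user: str) -> None:
        self.stages[version] = "Staging"
        self.audit_log.append(f"{user} registered {version}")

    def production(self) -> Optional[str]:
        return self.history[-1] if self.history else None

    def promote(self, version: str, approver: str) -> None:
        current = self.production()
        if current is not None:
            self.stages[current] = "Archived"
        self.stages[version] = "Production"
        self.history.append(version)
        self.audit_log.append(f"{approver} promoted {version}")

    def rollback(self, user: str) -> Optional[str]:
        """Re-activate the previous production version, if there is one."""
        if len(self.history) < 2:
            return None
        bad = self.history.pop()
        self.stages[bad] = "Archived"
        previous = self.history[-1]
        self.stages[previous] = "Production"
        self.audit_log.append(f"{user} rolled back {bad} to {previous}")
        return previous
```

Because every promotion is recorded, the 2 AM rollback becomes a single call rather than a search for the last known-good artifact.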

5. Implementing Governance in Practice: Tools and Examples

How does this look in the real world? Let’s look at practical implementation steps.

The Model Card

One of the most effective governance tools is the Model Card, a concept introduced by Mitchell et al. (2019) at Google. It is essentially a “nutrition label” for an AI model. A Model Card should include:

- Intended Use: What is this model for? (e.g., “Classifying customer emails”).

- Limitations: What is it NOT for? (e.g., “Not for use on medical records”).

- Training Data: Summary of demographics and sources.

- Performance Metrics: Accuracy across different groups.

- Ethical Considerations: Potential biases identified.
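Storing the Model Card as structured data, rather than free-form prose, lets a pipeline refuse to release a model whose card is incomplete. This is a minimal sketch: the fields mirror the list above, while the `ModelCard` class and `missing_sections` method are hypothetical.

```python
from dataclasses import dataclass, fields
from typing import Dict, List


@dataclass
class ModelCard:
    """Fields mirror the Model Card sections listed above."""
    intended_use: str
    limitations: str
    training_data: str
    performance_by_group: Dict[str, float]  # e.g. {"age<30": 0.91, "age>=30": 0.93}
    ethical_considerations: str

    def missing_sections(self) -> List[str]:
        """Names of empty sections; a release gate could require this to be []."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]
```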

Automation via CI/CD/CT

Governance works best when it is built into the pipeline as automated checks rather than added as a separate manual step.

- Continuous Integration (CI): When a data scientist pushes code, automated tests run to check code quality.

- Continuous Training (CT): When new data arrives, the pipeline automatically retrains the model. If the new model beats the old one and passes bias checks, it moves to the next stage.

- Continuous Deployment (CD): The deployment pipeline requests approval. Once granted, it swaps the API traffic to the new model.
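The Continuous Training rule above (promote only if the new model beats the old one and passes bias checks) reduces to a small gate function. This sketch assumes both models were evaluated on the same holdout set and that AUC and a disparate impact ratio are the chosen metrics; the `min_gain` and `min_di_ratio` values are illustrative policy settings, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    auc: float                     # offline performance on the shared holdout set
    disparate_impact_ratio: float  # min/max group selection rate


def should_promote(candidate: EvalResult, incumbent: EvalResult,
                   min_gain: float = 0.005, min_di_ratio: float = 0.8) -> bool:
    """Promote only if the retrained model is better AND passes the bias check.

    `min_gain` stops the pipeline from promoting on differences that are
    likely just evaluation noise.
    """
    beats_incumbent = candidate.auc >= incumbent.auc + min_gain
    passes_bias = candidate.disparate_impact_ratio >= min_di_ratio
    return beats_incumbent and passes_bias
```

A candidate that passes this gate moves to the next stage; the CD step can still require a human approval before traffic is switched.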

Example: Financial Fraud Detection

In a bank, governance is strict.

- Dev: Scientist builds a fraud model.

- Registry: Model is saved to the registry as v1.2 tagged experimental.

- Validation: Risk team runs v1.2 against historical data. It passes accuracy checks but fails explainability checks (it can’t explain why it flagged a transaction).

- Rejection: The model is rejected.

- Fix: Scientist swaps the algorithm for one with better interpretability.

- Approval: New version v1.3 passes. Risk team digitally signs the artifact.

- Prod: MLOps pipeline deploys v1.3.

6. Monitoring: The Heart of Lifecycle Management

Monitoring ML is different from monitoring traditional software. In software, a 200 OK response generally means the request succeeded. In ML, you can get a 200 OK response and a well-formed prediction that is still completely wrong because the world has changed.

Drift Detection

- Covariate Shift: The distribution of independent variables (inputs) has changed. Example: A temperature forecasting model trained on data from 1950-2000 encounters the heatwaves of 2025.

- Prior Probability Shift: The distribution of the target variable (output) changes. Example: A spam filter suddenly seeing 90% spam instead of the usual 20%.
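Both kinds of shift can be watched by comparing a live sample against the training sample. A widely used statistic is the Population Stability Index (PSI), applied per input feature for covariate shift or to the model's output scores. A commonly quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds should be tuned per model. The sketch below assumes numeric values and quantile bins taken from the training sample.

```python
import bisect
import math
from typing import List, Sequence


def psi(reference: Sequence[float], live: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a reference and a live sample.

    Bin edges are quantiles of the reference (training) sample, so each
    reference bin holds roughly the same share of the data.
    """
    ref_sorted = sorted(reference)
    edges = [ref_sorted[int(len(ref_sorted) * i / bins)] for i in range(1, bins)]

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Bin index = number of edges strictly below v.
            counts[bisect.bisect_left(edges, v)] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p, live_p = shares(reference), shares(live)
    return sum((lp - rp) * math.log(lp / rp) for rp, lp in zip(ref_p, live_p))
```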

Performance Metrics vs. Proxy Metrics

Ideally, you want to measure performance against Ground Truth (was the prediction actually correct?).

- Fast Feedback: In ad-tech, you know instantly if a user clicked an ad. You can monitor accuracy in real-time.

- Delayed Feedback: In credit lending, you may not know whether a borrower defaults for two years or more. Here, you must rely on Proxy Metrics (such as input drift) to estimate whether the model is degrading.

Alert Fatigue

A common mistake is setting alert thresholds too tight. If an alert fires every time the data shifts by 0.1%, engineers will learn to ignore it. Use adaptive thresholds that account for expected seasonality (e.g., shopping data always shifts on Black Friday).
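One simple form of seasonality-aware threshold compares today's drift score with the scores observed in comparable past periods (for example, previous Black Fridays) instead of with an ordinary day. The sketch assumes such history is available; `is_anomalous` and the multiplier `k` are hypothetical choices.

```python
import statistics
from typing import Sequence


def is_anomalous(current: float, same_period_history: Sequence[float], k: float = 3.0) -> bool:
    """Flag a drift score only if it is unusual for this point in the season.

    `same_period_history` holds the score for comparable past periods, so an
    expected seasonal jump does not fire an alert on its own.
    """
    if len(same_period_history) < 2:
        raise ValueError("need at least two comparable past periods")
    mean = statistics.fmean(same_period_history)
    spread = statistics.stdev(same_period_history)
    return abs(current - mean) > k * spread


past_black_fridays = [0.30, 0.34, 0.32]  # illustrative drift scores
print(is_anomalous(0.33, past_black_fridays))  # within the usual seasonal range
```

When too little history exists, the function refuses to guess; a real system would fall back to a static threshold in that case.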

7. Common Pitfalls and Challenges

Even well-intentioned organizations struggle with ML governance.

1. Shadow AI

This occurs when departments spin up ML models using credit cards and SaaS APIs without IT or Risk knowing. These “rogue” models bypass governance, posing massive security and compliance risks.

- Solution: Make the centralized platform easier to use than the rogue alternatives.

2. The “Set and Forget” Mentality

Treating models like static software code. Models are organic; they rot. Failing to budget for ongoing maintenance can lead to silent and sometimes catastrophic failure.

- Solution: Mandatory expiration dates on all deployed models.

3. Over-Governance

Creating so much red tape that data scientists cannot innovate. If it takes 6 months to deploy a simple regression model, your governance has failed.

- Solution: Tiered governance. Low-risk internal models get a “fast lane,” high-risk customer-facing models get the full audit.
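Tiered governance works best when the tiers and their required checks are written down explicitly. The mapping and tiering rule below are illustrative assumptions only; each organization defines its own criteria.

```python
from typing import Dict, List

# Illustrative tiers and checks; each organization defines its own.
REQUIRED_CHECKS: Dict[str, List[str]] = {
    "low": ["unit_tests", "model_card"],
    "medium": ["unit_tests", "model_card", "holdout_evaluation", "drift_monitoring"],
    "high": ["unit_tests", "model_card", "holdout_evaluation", "drift_monitoring",
             "fairness_testing", "independent_validation", "human_approval"],
}


def risk_tier(customer_facing: bool, automated_decision: bool, regulated_domain: bool) -> str:
    """Crude tiering rule: regulated, automated decisions get the full audit."""
    if regulated_domain and automated_decision:
        return "high"
    if customer_facing or automated_decision or regulated_domain:
        return "medium"
    return "low"  # e.g. an internal analytics model reviewed by humans


def outstanding_checks(tier: str, completed: List[str]) -> List[str]:
    """Checks still required before release at this tier."""
    return [check for check in REQUIRED_CHECKS[tier] if check not in completed]
```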

8. Generative AI and LLM Governance

The rise of Large Language Models (LLMs) like GPT and Gemini introduces new governance challenges.

Hallucinations and Factual Accuracy

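Standard accuracy metrics don’t work well on text generation. Governance requires human evaluation of outputs, complemented by automated evaluation in which other LLMs grade responses (often called “LLM-as-a-judge”). Human feedback is also used during training itself, for example through Reinforcement Learning from Human Feedback (RLHF).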

Data IP and Copyright

Training Generative AI on scraped data creates legal risks regarding copyright infringement. Governance frameworks must now track the legal rights associated with training data more rigorously than ever.

Prompt Injection Security

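Lifecycle management for LLMs includes security defenses against prompt injection (malicious instructions hidden in user input or retrieved content that override the system’s intended behavior) and “jailbreaking” (users tricking the AI into doing forbidden tasks). Monitoring must look for adversarial prompt patterns.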

9. Future Trends in ML Governance

As we look toward the future, governance is becoming more automated and integrated.

- Policy-as-Code: Instead of a PDF policy document, policies will be written in code. The deployment pipeline will programmatically check “Does this model have a fairness score > 0.9?” If not, the deployment fails automatically.

- Green AI Governance: Tracking the carbon footprint of model training and inference. Governance policies may soon dictate a “carbon budget” for AI projects.

- Decentralized Governance: Using blockchain or distributed ledgers to create immutable audit trails for high-stakes AI (e.g., autonomous weapons or surgical robots).
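A carbon budget needs an estimate to check against. A rough operational estimate multiplies the energy a training run draws by the carbon intensity of the electricity grid. The sketch below assumes you know the GPU count, run time, average power per GPU, the data center's PUE (power usage effectiveness), and the grid intensity. It ignores embodied emissions from manufacturing hardware, and all inputs shown are hypothetical.

```python
def training_emissions_kg(gpu_count: int, hours: float, avg_power_kw: float,
                          pue: float, grid_kg_co2e_per_kwh: float) -> float:
    """Rough operational emissions estimate for one training run.

    energy (kWh)        = GPUs * hours * average power per GPU (kW) * PUE
    emissions (kg CO2e) = energy * grid carbon intensity (kg CO2e per kWh)
    """
    energy_kwh = gpu_count * hours * avg_power_kw * pue
    return energy_kwh * grid_kg_co2e_per_kwh


def within_budget(emissions_kg: float, budget_kg: float) -> bool:
    """True if the run fits the project's carbon budget."""
    return emissions_kg <= budget_kg


# Hypothetical run: 8 GPUs for 24 h at 0.4 kW each, PUE 1.2, grid at 0.4 kg/kWh.
estimate = training_emissions_kg(8, 24, 0.4, 1.2, 0.4)
print(f"{estimate:.1f} kg CO2e")
```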

Conclusion

ML model governance and lifecycle management are no longer optional “nice-to-haves.” They are the foundational infrastructure required to scale AI safely. Without them, AI remains a science experiment—unpredictable, risky, and difficult to maintain.

By implementing a robust lifecycle that spans from inception to retirement, and wrapping it in a governance framework of people, processes, and technology, organizations can unlock the true value of AI. The goal is to move from “Wild West” experimentation to a mature, industrial AI factory where models are trusted, transparent, and reliable.

Next Steps:

- Audit your current models: Do you know where they are and who owns them?

- Establish a Model Registry: If you haven’t already, implement a tool to track model versions.

- Define your risk tiers: Differentiate between low-risk and high-risk applications to right-size your governance efforts.

FAQs

What is the difference between MLOps and Model Governance?

MLOps focuses on the how—the technical execution, automation, pipelines, and infrastructure to build and run models. Model Governance focuses on the what and why—the policies, compliance, risk management, and accountability standards. MLOps is the engine; governance is the steering wheel and the traffic laws.

How often should a machine learning model be retrained?

There is no single answer; it depends on the “freshness” of the data. A stock trading model might need retraining every minute. A language translation model might only need retraining once a year. The best approach is to monitor drift. When performance drops below a specific threshold, a retraining pipeline should be triggered automatically.

What is a “Model Registry” and why do I need one?

A Model Registry is a centralized repository that stores model artifacts (files), metadata, and version history. It acts like an inventory system for AI. You need one to prevent version conflicts, ensure you can roll back to previous versions if a new one fails, and provide a clear audit trail for regulators.

How does the EU AI Act affect model governance?

The EU AI Act classifies AI systems into risk categories. If your system is “High Risk” (e.g., used in employment, credit scoring, or as a safety component of medical devices), you are legally required to have comprehensive data governance, detailed technical documentation, human oversight, and accuracy and robustness logs. Non-compliance can result in fines of up to €15 million or 3% of worldwide annual turnover for most obligations (higher for prohibited practices), making governance a legal necessity for companies operating in Europe.

Can governance be automated?

Yes, to a large extent. “Policy-as-Code” allows you to automate checks. For example, you can write a script that prevents a model from being deployed if its bias score exceeds a certain limit, or if it lacks a linked dataset in the registry. However, high-risk decisions usually still require a final human approval step.
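The two example policies from this answer can be expressed as code in a few lines. This sketch writes each rule as a plain Python predicate over registry metadata; the metadata keys (`bias_score`, `dataset_id`) and the 0.1 limit are hypothetical. Dedicated policy engines such as Open Policy Agent serve the same purpose with their own policy language.

```python
from typing import Any, Callable, Dict, List, Tuple

# Each policy pairs a human-readable rule with a predicate over registry metadata.
Policy = Tuple[str, Callable[[Dict[str, Any]], bool]]

MAX_BIAS_SCORE = 0.1  # illustrative limit, set by the governance team

POLICIES: List[Policy] = [
    # A missing bias score counts as infinite, so the check fails closed.
    (f"bias score must not exceed {MAX_BIAS_SCORE}",
     lambda m: m.get("bias_score", float("inf")) <= MAX_BIAS_SCORE),
    ("a training dataset must be linked in the registry",
     lambda m: bool(m.get("dataset_id"))),
]


def violations(metadata: Dict[str, Any]) -> List[str]:
    """Every rule the candidate model breaks; an empty list means compliant."""
    return [rule for rule, check in POLICIES if not check(metadata)]


def deploy_gate(metadata: Dict[str, Any]) -> None:
    """Stop the pipeline with a non-zero exit if any policy is violated."""
    broken = violations(metadata)
    if broken:
        raise SystemExit("Deployment blocked: " + "; ".join(broken))
```

Because the rules live in version control next to the pipeline, changes to policy are themselves reviewed and auditable.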

What happens when a model is retired?

Retiring a model involves more than just deleting the file. You must archive the model artifacts and training data for compliance purposes (audit trails). You must also ensure that any applications consuming the model’s API are redirected to a new model or informed of the service shutdown to prevent system crashes.

How do we govern Third-Party or Vendor AI models?

Governance applies even if you didn’t build the model. You must demand “Model Cards” or transparency reports from vendors. You should perform your own validation testing (Black Box testing) on their API to ensure it meets your internal fairness and performance standards before integrating it into your workflows.

What is “Drift” in machine learning?

Drift refers to changes in the data, or in the relationships a model has learned, that cause its performance to degrade over time. Data Drift occurs when the input data distribution changes (e.g., younger users start using your app). Concept Drift occurs when the relationship between input and output changes (e.g., what was considered a “risky” loan 5 years ago is now considered safe). Both require monitoring and retraining.

References

- European Parliament and Council of the European Union. (2024). Regulation (EU) 2024/1689 (Artificial Intelligence Act). Official Journal of the European Union. https://artificialintelligenceact.eu

- Google Cloud. (n.d.). MLOps: Continuous delivery and automation pipelines in machine learning. Google Cloud Architecture Center. https://cloud.google.com/architecture/mlops-continuous-delivery-and-automation-pipelines-in-machine-learning

- Mitchell, M., et al. (2019). Model Cards for Model Reporting. Proceedings of the Conference on Fairness, Accountability, and Transparency. https://arxiv.org/abs/1810.03993

- Databricks. (2023). The Comprehensive Guide to Machine Learning Model Management.

- Microsoft. (2023). Responsible AI Standard v2 General Requirements. Microsoft Azure AI Documentation.

- NIST (National Institute of Standards and Technology). (2023). AI Risk Management Framework (AI RMF 1.0). U.S. Department of Commerce. https://www.nist.gov/itl/ai-risk-management-framework

- Sculley, D., et al. (2015). Hidden Technical Debt in Machine Learning Systems. Advances in Neural Information Processing Systems 28 (NIPS 2015). https://papers.nips.cc/paper/2015/file/86df7dcfd896fcaf2674f757a2463eba-Paper.pdf

- IBM. (n.d.). AI Governance: A holistic approach to implementing AI ethics. https://www.ibm.com/watson/ai-governance