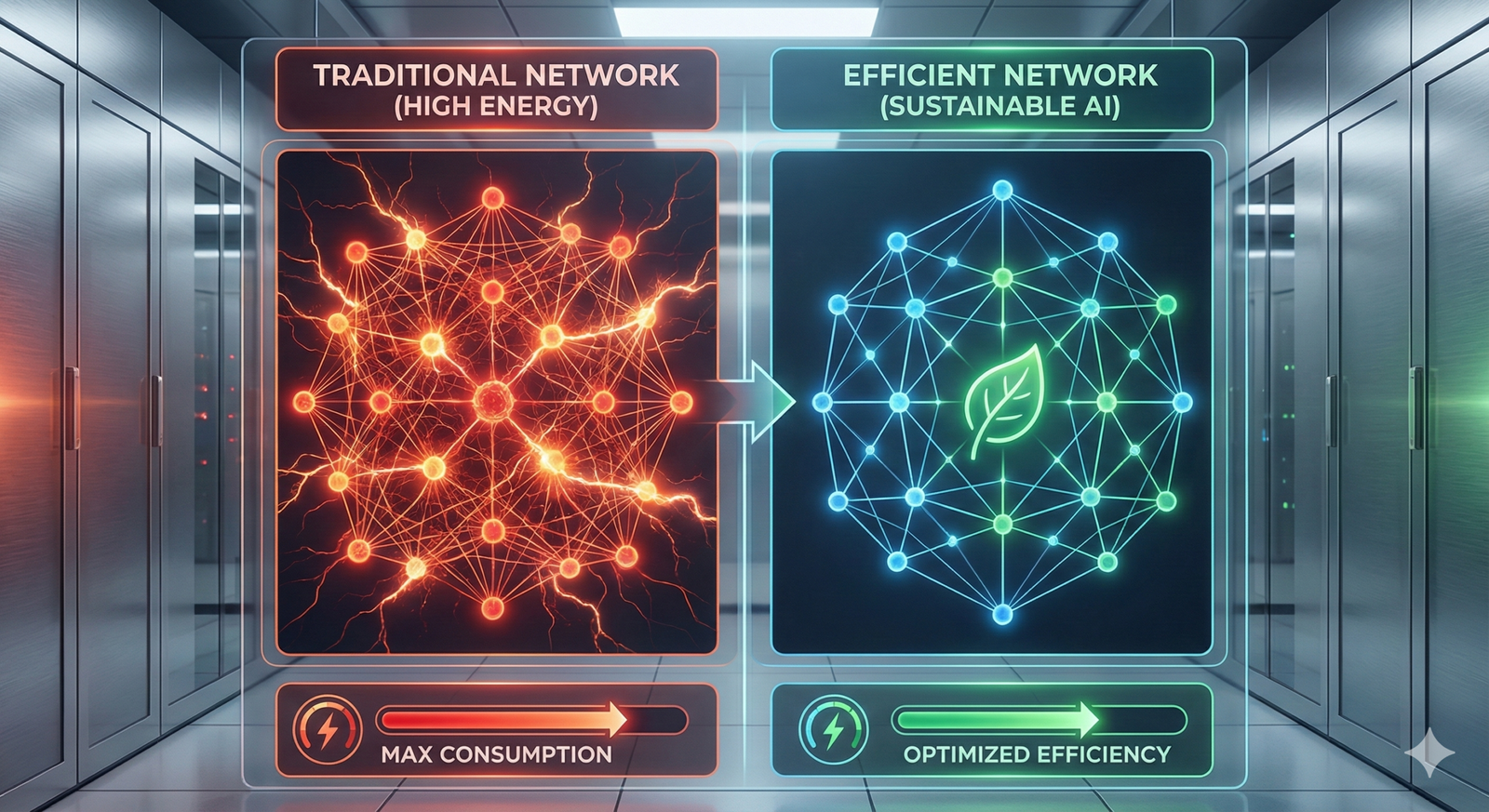

The era of “bigger is better” in artificial intelligence is facing a reckoning. For years, the dominant trend in Deep Learning (DL) has been to scale up parameters, data, and compute to achieve state-of-the-art results. However, this trajectory has led to massive energy consumption, significant carbon footprints, and deployment bottlenecks on edge devices. This is where efficient deep learning steps in—a paradigm shift focused on achieving high performance with fewer resources, lower latency, and reduced environmental impact, often referred to as “Green AI.”

In this comprehensive guide, we explore the core pillars of efficient DL: pruning, quantization, and hardware acceleration. We will examine how these techniques work, how they contribute to sustainability, and how developers can implement them to build smarter, greener AI systems.

Key Takeaways

- Green AI is Essential: As of January 2026, the energy cost of training and running large language models (LLMs) has made efficiency a financial and environmental necessity.

- Pruning Reduces Complexity: By removing redundant connections (weights) or neurons, models become smaller and faster without significant accuracy loss.

- Quantization Lowers Precision: Converting data from 32-bit floating-point to 8-bit integers (or lower) reduces memory usage and increases throughput.

- Hardware Matters: Specialized hardware like TPUs, FPGAs, and neuromorphic chips are designed to exploit sparsity and low-precision arithmetic for maximum efficiency.

- Synergy is Key: The best results come from combining algorithmic optimizations (software) with specialized architecture (hardware).

Scope of This Guide

This article covers the technical mechanisms of model compression and acceleration. We will focus on:

- In Scope: Pruning techniques, quantization schemes, knowledge distillation, hardware architectures (GPU, TPU, FPGA), and environmental metrics.

- Out of Scope: Detailed code tutorials for every specific framework (though we will mention tools like TensorFlow Lite and PyTorch Mobile), or proprietary algorithm secrets of specific tech giants.

The Imperative for Green AI

Before diving into the “how,” we must understand the “why.” The environmental impact of AI is no longer a niche concern; it is a central operational metric.

The Cost of Red AI

“Red AI” refers to the trend of buying performance improvements with massive computational increases. Strubell et al. (2019) estimated that training one large Transformer with neural architecture search can emit roughly as much carbon dioxide as five cars over their lifetimes. This energy usage isn’t limited to training; inference (running the model) is often estimated to account for the bulk of a deployed model’s lifecycle energy consumption, with figures of 80-90% commonly cited.

When models are deployed to millions of users, every joule of energy per query adds up. Efficient deep learning aims to flatten this curve, decoupling AI progress from exponential resource consumption.

Edge Computing and Latency

Beyond sustainability, efficiency unlocks deployment. Mobile phones, IoT sensors, and autonomous drones have strict power and thermal budgets. A model that requires a rack of GPUs is useless on a battery-powered device. Efficient DL enables Edge AI—processing data locally on the device—which improves privacy (data doesn’t leave the device) and reduces latency (no round-trip to the cloud).

Pillar 1: Model Pruning

Pruning is inspired by a biological phenomenon: synaptic pruning in the human brain. As we grow, our brains strengthen useful connections and eliminate unused ones. Similarly, deep learning models often contain “dead weight”—parameters that contribute little to the final output.

How Pruning Works

Pruning involves identifying and removing unimportant weights from a trained neural network. The process typically follows a three-step cycle:

1. Train: Train the model to convergence.

2. Prune: Remove weights that fall below a certain threshold or importance metric.

3. Fine-tune: Retrain the pruned model to recover any lost accuracy.

Unstructured vs. Structured Pruning

The granularity of pruning matters significantly for hardware implementation.

Unstructured Pruning

This method removes individual weights anywhere in the weight matrix, usually setting them to zero.

- Pros: Can achieve very high sparsity ratios (e.g., removing 90% of weights) with minimal accuracy loss.

- Cons: It results in “sparse matrices.” Standard hardware (CPUs/GPUs) is designed for dense matrix multiplication. Without specialized hardware or software support, unstructured pruning often leads to no speedup, as the processor still computes the zeros or struggles with irregular memory access patterns.

Structured Pruning

This method removes entire geometric structures, such as columns, filters, or channels.

- Pros: The resulting model is still a dense matrix, just smaller. This typically yields speedups on standard hardware without requiring special libraries.

- Cons: It is more aggressive and can degrade accuracy faster than unstructured pruning, as you are removing entire features rather than just weak connections.
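The difference in granularity can be seen on a toy weight matrix. The sketch below is illustrative only: the function names are hypothetical, and ranking rows by their L1 norm is one common heuristic assumed here, not a method prescribed by this guide.

```python
def prune_unstructured(matrix, threshold):
    """Zero individual weights with |w| < threshold; the shape is unchanged."""
    return [[w if abs(w) >= threshold else 0.0 for w in row] for row in matrix]


def prune_structured_rows(matrix, keep):
    """Keep the `keep` rows (e.g. output channels) with the largest L1 norm.

    The result is a smaller dense matrix, so ordinary dense kernels apply.
    """
    norms = [sum(abs(w) for w in row) for row in matrix]
    ranked = sorted(range(len(matrix)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:keep])  # preserve the original row order
    return [matrix[i] for i in kept]


weights = [
    [0.9, -0.02, 0.4],
    [0.01, 0.03, -0.02],
    [-0.7, 0.5, 0.05],
]
sparse = prune_unstructured(weights, threshold=0.1)  # still 3x3, with zeros
dense_small = prune_structured_rows(weights, keep=2)  # now 2x3, fully dense
```

The unstructured result keeps its original shape and relies on sparse-aware kernels for any speedup, while the structured result is simply a smaller dense matrix.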

Magnitude-based vs. Hessian-based Pruning

- Magnitude-based: The simplest approach. It assumes that weights with small absolute values are less important. If $|w| < \lambda$, the weight is pruned.

- Hessian-based (e.g., Optimal Brain Damage/Surgeon): A more theoretically rigorous approach that uses the second derivative (Hessian matrix) of the loss function to determine which weights impact the loss the least. This is computationally expensive but often yields better results for sensitive models.
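Magnitude-based pruning is simple enough to sketch in a few lines. In practice the threshold is often chosen implicitly by fixing a target sparsity, which is the assumption made below; the function name and the flat-list representation of the weights are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest |w|.

    Returns the pruned weights and a 0/1 mask (1 = weight kept).
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by ascending magnitude; the first n_prune are removed
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])
    mask = [0 if i in pruned_idx else 1 for i in range(len(weights))]
    pruned = [w if m else 0.0 for w, m in zip(weights, mask)]
    return pruned, mask


pruned, mask = magnitude_prune([0.5, -0.05, 0.2, 0.01, -0.8, 0.1], sparsity=0.5)
```

The returned mask is usually kept around during fine-tuning so that pruned weights stay at zero while the surviving ones are updated.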

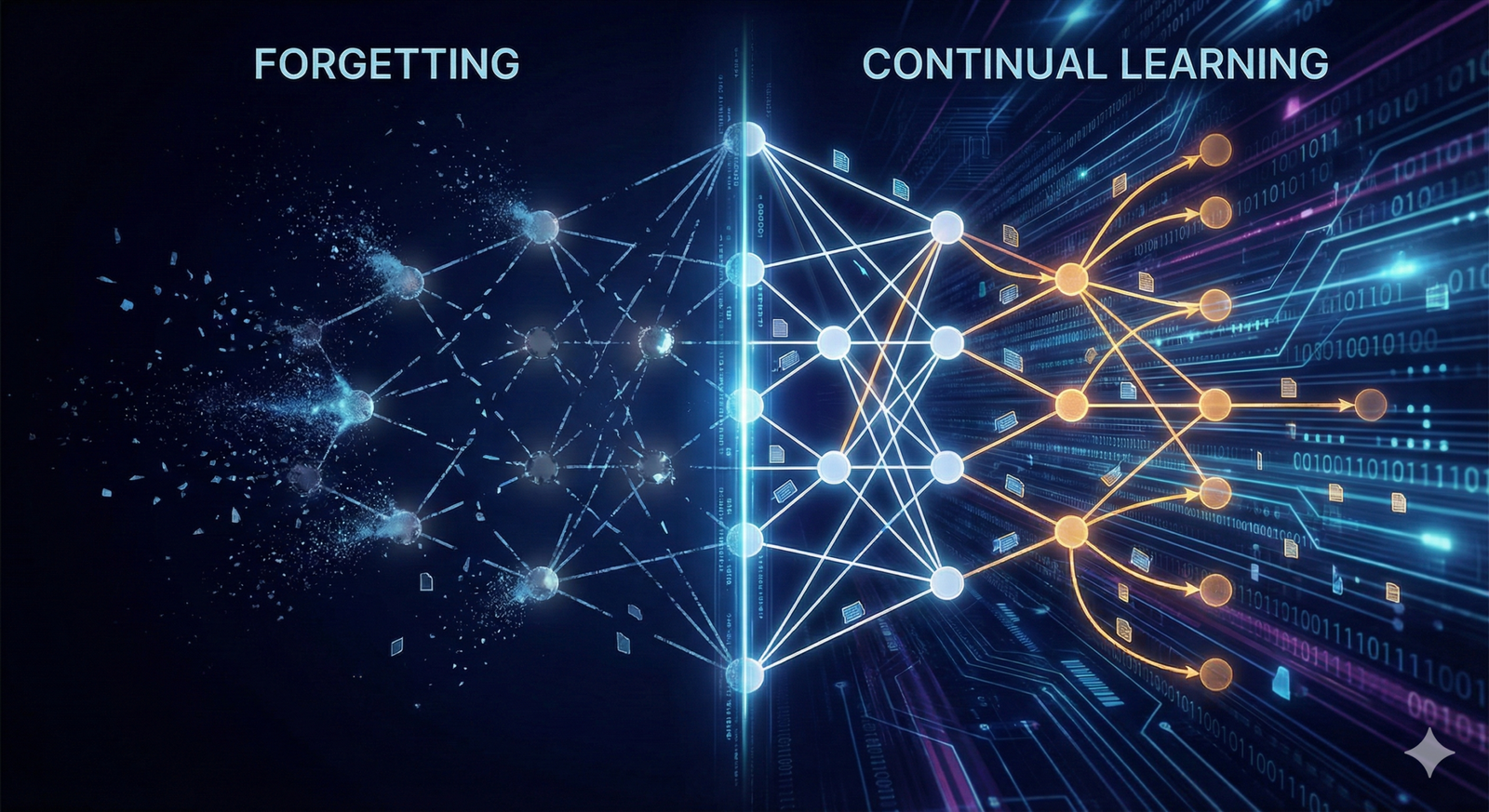

Lottery Ticket Hypothesis

A fascinating concept in efficient DL is the “Lottery Ticket Hypothesis,” which suggests that dense, randomly initialized networks contain subnetworks (“winning tickets”) that, when trained in isolation, reach test accuracy comparable to the original network in a similar number of iterations. Pruning is essentially a method to find these winning tickets.
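One round of the procedure behind this hypothesis can be sketched as follows: pick a mask from the trained weights' magnitudes, then reset the surviving weights to their values at initialization before retraining. The function name and the flat-list representation are hypothetical, and the training steps themselves are omitted.

```python
def winning_ticket(initial, trained, sparsity):
    """Build a candidate 'winning ticket' from one train-and-prune round.

    The mask is chosen from the trained magnitudes but applied to the
    initial weights, so the subnetwork restarts from its original init.
    """
    n_prune = int(len(trained) * sparsity)
    order = sorted(range(len(trained)), key=lambda i: abs(trained[i]))
    pruned = set(order[:n_prune])
    mask = [0 if i in pruned else 1 for i in range(len(trained))]
    ticket = [w0 if m else 0.0 for w0, m in zip(initial, mask)]
    return ticket, mask


ticket, ticket_mask = winning_ticket(
    initial=[0.1, -0.2, 0.3, 0.05],
    trained=[0.9, -0.01, 0.02, -0.7],
    sparsity=0.5,
)
```

The ticket would then be trained in isolation with the mask held fixed, and the round repeated if a higher sparsity is wanted.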

Pillar 2: Quantization

While pruning reduces the number of operations, quantization reduces the cost of each operation. It involves reducing the precision of the numbers used to represent a model’s parameters and activations.

From Floating Point to Fixed Point

Most deep learning training happens in FP32 (32-bit floating-point), which offers high precision and a wide dynamic range. However, neural networks are surprisingly resilient to noise and low precision. Quantization maps these continuous FP32 values to a smaller set of discrete values, typically INT8 (8-bit integers).

The relationship can be expressed as:

$$Q(x, \text{scale}, \text{zero\_point}) = \operatorname{round}\left(\frac{x}{\text{scale}} + \text{zero\_point}\right)$$

Where:

- x is the floating-point value.

- scale is a scaling factor.

- zero_point allows the integer range to align with the floating-point range (asymmetric quantization).
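As a minimal sketch, reading the mapping as round(x / scale + zero_point), quantization and its approximate inverse might look like this. The clamp to a signed 8-bit range and the `dequantize` helper are assumptions added for illustration; they are not part of the formula above.

```python
def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Map a float to an integer code, clamped to [qmin, qmax] (assumed INT8)."""
    q = round(x / scale + zero_point)  # Python rounds exact halves to even
    return max(qmin, min(qmax, q))


def dequantize(q, scale, zero_point):
    """Recover an approximation of the original float value."""
    return scale * (q - zero_point)


q = quantize(3.2, scale=0.5, zero_point=10)
x_hat = dequantize(q, scale=0.5, zero_point=10)
error = abs(3.2 - x_hat)  # at most scale / 2 when no clamping occurs
```

Values outside the representable range saturate at `qmin` or `qmax`, which is why the choice of scale and zero_point matters so much for accuracy.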

Benefits of Quantization

- Memory Reduction: Moving from 32-bit to 8-bit immediately reduces the model size by 4x. This is critical for storing LLMs on mobile devices.

- Bandwidth Efficiency: Moving data from memory to the processor is often the bottleneck (the “memory wall”). Smaller data moves faster and consumes less energy.

- Compute Speed: Integer arithmetic is significantly faster and more energy-efficient than floating-point arithmetic on most processors.
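The memory claim is straightforward arithmetic. The sketch below counts weight storage only (no activations, metadata, or per-tensor scales), and the 7-billion-parameter figure is a hypothetical example, not a reference to a specific model.

```python
def model_size_mb(num_params, bits_per_param):
    """Storage needed for the weights alone, in megabytes (10**6 bytes)."""
    return num_params * bits_per_param / 8 / 1e6


params = 7_000_000_000  # hypothetical parameter count
fp32_mb = model_size_mb(params, 32)
int8_mb = model_size_mb(params, 8)
ratio = fp32_mb / int8_mb
```

Under these assumptions the FP32 weights take 28,000 MB and the INT8 weights 7,000 MB, which is the 4x reduction described above.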

Types of Quantization

1. Post-Training Quantization (PTQ)

This is applied after the model has been fully trained.

- Dynamic Quantization: Weights are quantized ahead of time, but activations are quantized dynamically at inference time. This is easy to implement but offers moderate speedups.

- Static Quantization: Both weights and activations are quantized. This requires a “calibration” step where a small subset of data is run through the model to determine the range (min/max) of the activations.
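The calibration step for static quantization can be sketched as deriving a scale and zero point from the observed activation range. This is a simplified min/max calibrator with hypothetical names; widening the range to include 0.0 is an assumption made here so that zero is exactly representable, and real toolkits often use more robust range estimators.

```python
def calibrate(samples, qmin=-128, qmax=127):
    """Derive (scale, zero_point) from the min/max of observed activations."""
    lo = min(min(samples), 0.0)  # assumption: range always includes 0.0
    hi = max(max(samples), 0.0)
    if hi == lo:
        return 1.0, 0  # degenerate case: all activations were zero
    scale = (hi - lo) / (qmax - qmin)
    zero_point = round(qmin - lo / scale)
    return scale, zero_point


# Activations recorded while running a small calibration set through the model
observed = [-1.0, 0.5, 3.0]
scale, zero_point = calibrate(observed)
```

With these parameters the smallest observed value maps to `qmin` and the largest to `qmax`, so the full integer range is used.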

2. Quantization-Aware Training (QAT)

PTQ can sometimes hurt accuracy, especially in smaller networks (like MobileNets). QAT simulates the effects of quantization during the training process (forward pass simulates low precision, backward pass uses high precision). This allows the model to learn parameters that are robust to the quantization noise, usually yielding higher accuracy than PTQ.
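The simulation in QAT is commonly implemented as "fake quantization": the forward pass rounds values to the integer grid and immediately converts them back to float, while the backward pass pretends the rounding was the identity. The sketch below assumes that straight-through estimator, which is a common choice but not the only one; the function names are hypothetical.

```python
def fake_quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Forward pass: quantize, clamp, then dequantize, staying in float."""
    q = round(x / scale + zero_point)
    q = max(qmin, min(qmax, q))
    return scale * (q - zero_point)


def fake_quantize_grad(x, scale, zero_point, qmin=-128, qmax=127):
    """Backward pass (straight-through estimator, assumed here).

    The gradient is passed through unchanged inside the representable
    range and blocked where the value was clamped.
    """
    q = x / scale + zero_point
    return 1.0 if qmin <= q <= qmax else 0.0


y = fake_quantize(0.3, scale=0.25, zero_point=0)
g_in = fake_quantize_grad(0.3, scale=0.25, zero_point=0)
g_out = fake_quantize_grad(100.0, scale=0.25, zero_point=0)
```

Because the model sees the rounding error during training, it can shift its weights toward values that survive quantization well.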

Extreme Quantization: Binary and Ternary Networks

Pushing the limit, researchers explore Binarized Neural Networks (BNNs), where weights are only +1 or -1 (1-bit). While extremely efficient (replacing multiplications with bitwise XNOR operations), they often suffer from significant accuracy drops and are difficult to train for complex tasks.
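To see why binarization is so cheap, consider a dot product of two vectors whose entries are all +1 or -1. If +1 is stored as bit 1 and -1 as bit 0 (an encoding assumed here), the dot product reduces to an XNOR followed by a bit count. The helper names are hypothetical.

```python
def pack_bits(values):
    """Pack a list of +1/-1 values into an int (bit i set means +1)."""
    word = 0
    for i, v in enumerate(values):
        if v == 1:
            word |= 1 << i
    return word


def binary_dot(a_bits, b_bits, n):
    """Dot product of two n-element +1/-1 vectors stored as bit masks."""
    mask = (1 << n) - 1
    xnor = ~(a_bits ^ b_bits) & mask  # 1 wherever the two signs agree
    matches = bin(xnor).count("1")    # popcount
    return 2 * matches - n            # agreements minus disagreements


a = [1, -1, 1, 1]
b = [1, 1, -1, 1]
fast = binary_dot(pack_bits(a), pack_bits(b), len(a))
reference = sum(x * y for x, y in zip(a, b))
```

Both `fast` and `reference` compute the same value, but the bitwise version needs no multiplications at all.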

Pillar 3: Knowledge Distillation

Pruning and quantization modify an existing architecture. Knowledge Distillation (KD) transfers the “intelligence” of a large model into a smaller, different architecture.

Teacher-Student Architecture

In KD, you start with a large, pre-trained Teacher model (e.g., BERT-base) and a smaller Student model (e.g., DistilBERT).

- The Student is trained not just to predict the true labels (hard targets) but to mimic the probability distribution output by the Teacher (soft targets).

- The “dark knowledge”—the relationships learned by the Teacher (e.g., knowing that an image of a “dog” is somewhat similar to a “cat” but very different from a “car”)—is transferred to the Student.
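A distillation objective along these lines can be sketched as a weighted sum of a hard-label cross-entropy term and a soft-target term computed at a raised temperature, following the general recipe of Hinton et al. (2015). The temperature and weighting values below are illustrative assumptions, and the function names are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperatures give flatter outputs."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """alpha * CE(student, label) + (1 - alpha) * T^2 * KL(teacher || student)."""
    hard = -math.log(softmax(student_logits)[label])
    t_soft = softmax(teacher_logits, temperature)
    s_soft = softmax(student_logits, temperature)
    soft = sum(t * math.log(t / s) for t, s in zip(t_soft, s_soft) if t > 0)
    # T^2 keeps the soft-term gradients on a comparable scale
    return alpha * hard + (1 - alpha) * temperature ** 2 * soft


teacher = [2.0, 1.0, 0.1]
loss_match = distillation_loss([2.0, 1.0, 0.1], teacher, label=0)
loss_mismatch = distillation_loss([0.1, 1.0, 2.0], teacher, label=0)
```

When the Student reproduces the Teacher's logits exactly, the soft term vanishes and only the hard-label term remains; any disagreement raises the loss.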

Implementation in Practice

Distillation is frequently combined with quantization and pruning. For instance, a common workflow for mobile deployment might be:

1. Train a large Teacher model.

2. Distill it into a smaller Student architecture.

3. Prune the Student model.

4. Quantize the Pruned Student model for the target hardware.

Pillar 4: Hardware Acceleration

Software optimization can only go so far. To truly unlock Green AI, the hardware must be designed to support efficient operations.

The Evolution of AI Chips

- CPUs (Central Processing Units): Optimized for serial processing and complex logic (latency-oriented). Generally inefficient for the massive parallel matrix multiplications of DL.

- GPUs (Graphics Processing Units): The workhorse of modern AI. Optimized for parallel throughput. While powerful, they can be power-hungry.

- TPUs (Tensor Processing Units): Google’s ASICs (Application-Specific Integrated Circuits). Designed specifically for low-precision matrix multiplication using Systolic Arrays, which allow data to flow through the chip in a rhythmic, wave-like fashion, maximizing data reuse and minimizing energy-intensive memory access.

- FPGAs (Field-Programmable Gate Arrays): “Rewritable” chips. They offer a middle ground—more efficient than GPUs for specific tasks and more flexible than ASICs. They are excellent for custom quantization schemes (e.g., using exactly 7 bits if that’s optimal).

Hardware Support for Sparsity

Historically, hardware disliked sparsity (unstructured pruning). However, modern chips like NVIDIA’s Ampere architecture introduced support for N:M sparsity (e.g., 2:4 sparsity), where the hardware accelerates matrix math if every block of 4 weights contains at least 2 zeros. This bridges the gap between unstructured and structured pruning, allowing software to prune and hardware to accelerate.
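The 2:4 pattern can be illustrated with a short sketch that keeps the two largest-magnitude weights in every group of four. This only shows the sparsity pattern itself; the function name is hypothetical, and real toolchains also handle fine-tuning and the compressed storage format.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude weights in every group of four."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    out = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest magnitudes survive
        ranked = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)
        keep = set(ranked[:2])
        out.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return out


row = [0.1, -0.9, 0.4, 0.05, 0.3, 0.2, -0.6, 0.7]
sparse_row = prune_2_of_4(row)
```

Every group of four in `sparse_row` now holds exactly two zeros, which is the regularity the hardware relies on.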

Neuromorphic Computing

Looking further ahead, neuromorphic engineering aims to mimic the biological brain’s structure in silicon. Chips like Intel’s Loihi use Spiking Neural Networks (SNNs), where neurons only fire (consume energy) when there is a spike in input signal. This event-driven processing offers theoretical energy efficiency orders of magnitude better than standard deep learning hardware, particularly for temporal data.
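The event-driven behaviour can be illustrated with a leaky integrate-and-fire neuron, a textbook spiking model used here as an assumption for illustration rather than a description of any specific chip. The threshold and leak values are arbitrary.

```python
def simulate_lif(inputs, threshold=1.0, leak=0.9):
    """Return a 0/1 spike train for one leaky integrate-and-fire neuron."""
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = leak * potential + current  # decay, then integrate
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


spike_train = simulate_lif([0.0, 0.0, 0.6, 0.6, 0.0, 0.0])
```

The neuron emits a single spike once enough input has accumulated and stays silent otherwise, so downstream work is only triggered when something changes.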

Metric Matters: Measuring Green AI

To manage efficiency, you must measure it. Relying solely on “accuracy” is no longer sufficient.

Standard Metrics

- FLOPs (Floating Point Operations): A theoretical measure of compute complexity. While useful, it doesn’t always correlate perfectly with latency or energy due to memory access costs.

- Latency (ms): The time taken to process a single input. Crucial for real-time applications.

- Throughput (images/sec or tokens/sec): The volume of data processed per unit of time.

- Model Size (MB): Physical storage required.
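Latency and throughput are best measured directly on the target device. The following is a minimal timing harness using only the standard library; the function name, warm-up count, and run count are hypothetical choices, and `fn` stands in for whatever inference call is being measured.

```python
import statistics
import time


def benchmark(fn, example, warmup=3, runs=20):
    """Measure median single-input latency and the implied throughput."""
    for _ in range(warmup):  # warm caches before timing
        fn(example)
    latencies_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(example)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    median_ms = statistics.median(latencies_ms)
    throughput = 1000.0 / median_ms if median_ms > 0 else float("inf")
    return {"median_ms": median_ms, "throughput_per_s": throughput}


# Stand-in workload; replace the lambda with a real model call
result = benchmark(lambda xs: sum(x * x for x in xs), list(range(1000)))
```

Reporting the median rather than the mean keeps occasional scheduling hiccups from distorting the number.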

Green Metrics

- Energy (Joules): The total energy consumed to perform a task.

- Power (Watts): The rate of energy consumption.

- Carbon Intensity (gCO2eq/kWh): The carbon emissions associated with each unit of electricity used; multiplying it by the energy consumed gives total emissions in gCO2eq. This varies heavily by region (e.g., a data center in a region powered by coal vs. hydro).
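These three quantities are linked by simple arithmetic: energy is average power multiplied by time, and emissions are energy multiplied by the grid's carbon intensity expressed per kilowatt-hour. The sketch below uses hypothetical figures (300 W for one hour on a 400 gCO2eq/kWh grid) purely to show the unit conversions.

```python
JOULES_PER_KWH = 3.6e6


def energy_joules(avg_power_watts, seconds):
    """Energy = average power x time."""
    return avg_power_watts * seconds


def emissions_g(energy_j, intensity_g_per_kwh):
    """Emissions in gCO2eq for a given energy and grid carbon intensity."""
    return energy_j / JOULES_PER_KWH * intensity_g_per_kwh


energy = energy_joules(300.0, 3600.0)  # hypothetical: 300 W for one hour
grams = emissions_g(energy, 400.0)     # hypothetical grid: 400 gCO2eq/kWh
```

Under these assumptions the job uses 1.08 MJ (0.3 kWh) and emits about 120 gCO2eq; the same job on a cleaner grid would emit proportionally less.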

Tools for Measurement

- Carbon Tracker / CodeCarbon: Python libraries that estimate the carbon footprint of your training run based on hardware and location.

- nvidia-smi (NVIDIA System Management Interface): Command-line utility to monitor GPU power draw in real time.

- MLPerf: An industry-standard benchmark suite that now includes power measurement categories.

Efficient DL in Practice: Implementation Workflows

How does a team actually implement these strategies? Here is a typical workflow for deploying a Green AI model.

Phase 1: Model Selection & Training

- Start Small: Don’t default to the largest model. Try architectures designed for efficiency, like MobileNetV3, EfficientNet, or TinyBERT.

- Neural Architecture Search (NAS): Use NAS algorithms to automatically discover architectures that fit your specific latency/power constraints.

- Train with Distillation: If a larger model is needed for accuracy, use it as a Teacher to train your efficient Student.

Phase 2: Compression

- Pruning: Apply magnitude pruning. Start conservatively (e.g., 50% sparsity). If accuracy holds, increase.

- Calibration: Run a calibration dataset to determine quantization parameters.

- Quantization: Convert weights to INT8. Check accuracy. If the drop is >1%, perform Quantization-Aware Training (QAT).

Phase 3: Deployment & Acceleration

- Runtime Selection: Use an optimized inference engine, for example:

  - TensorRT (NVIDIA): Aggressively optimizes graphs for NVIDIA GPUs.

  - TFLite / CoreML: Standard for mobile deployment on Android/iOS.

  - OpenVINO: Optimized for Intel CPUs/VPUs.

- Hardware Mapping: Ensure your sparsity pattern matches your hardware (e.g., use 2:4 sparsity if deploying on Ampere GPUs).

Common Mistakes and Pitfalls

Achieving efficiency often comes with trade-offs. Here are common errors to avoid.

1. The “False Economy” of FLOPs

Reducing FLOPs doesn’t always reduce latency. If you replace a standard convolution with a complex, fragmented sparse operation, the memory access overhead might make the “lighter” model run slower. Always measure wall-clock time on the target hardware.

2. Over-Pruning

Pruning too aggressively can destroy the model’s ability to generalize, even if training accuracy looks okay. It creates a “fragile” model. Always evaluate on a held-out test set and consider robustness metrics.

3. Ignoring the “Long Tail”

Quantization can disproportionately affect rare classes or outliers in the data distribution. An 8-bit model might recognize the average case perfectly but fail catastrophically on edge cases that a 32-bit model would handle.

4. Hardware Mismatch

Optimizing a model for an FPGA requires different strategies than optimizing for a mobile CPU. Developing an efficient model in a vacuum without knowing the target deployment environment often leads to wasted effort.

The Future: Automating Efficiency

As of January 2026, the frontier of Efficient DL is moving toward automation. We are seeing the rise of AutoML for Embedded Systems (e.g., MCUNet), where the AI designs the AI to fit onto microcontrollers with kilobytes of RAM.

Furthermore, co-design is becoming the standard. Instead of designing software and hardware separately, future systems will evolve together. The algorithm will adjust its sparsity to match the chip, and the chip will dynamically adjust its voltage and frequency to match the algorithm’s needs.

Collaborative Robots and Green AI

In the context of robotics, efficiency translates to battery life. Collaborative robots (cobots) that work alongside humans need to process visual and sensor data in real-time. Efficient DL allows these robots to operate untethered for longer shifts, reducing downtime for charging and lowering the overall energy footprint of automated warehouses and factories.

Conclusion

Efficient Deep Learning is no longer an optional “nice-to-have” optimization; it is a fundamental requirement for the sustainable and scalable future of Artificial Intelligence. By mastering pruning, quantization, and hardware acceleration, we can transition from the era of “Red AI”—characterized by brute force and massive carbon footprints—to “Green AI,” where intelligence is ubiquitous, low-power, and environmentally responsible.

For developers and organizations, the path forward is clear: measure your energy, optimize your architectures, and view efficiency as a primary design constraint, equal in importance to accuracy.

Next Steps

- Audit your current models: Use tools like CodeCarbon to establish a baseline energy consumption.

- Experiment with PTQ: Try applying Post-Training Quantization to a non-critical model to observe the size/accuracy trade-off.

- Explore Inference Engines: If you are deploying on specific hardware, move beyond standard frameworks and try TensorRT or OpenVINO to see immediate speedups.

FAQs

Q: Does pruning always improve inference speed? Not always. Unstructured pruning creates sparse matrices. If the hardware (like a standard CPU) isn’t optimized for sparsity, it may process zeros just as slowly as non-zeros. Structured pruning (removing whole channels) is more likely to yield speedups on standard hardware, because the result is a smaller dense model.

Q: How much accuracy is lost with quantization? It depends on the technique and the model. INT8 quantization typically incurs a negligible accuracy drop (often <1%) for many standard CNNs and Transformers. However, going lower (INT4 or binary) usually requires advanced techniques like Quantization-Aware Training to maintain usability.

Q: What is the difference between Edge AI and Green AI? They are related but distinct. Edge AI refers to where the processing happens (locally on a device). Green AI refers to the goal of reducing the carbon/energy footprint of AI. Edge AI is often a strategy used to achieve Green AI, but Green AI also applies to optimizing massive data centers.

Q: Can I use quantization on any model? Yes, most modern frameworks (PyTorch, TensorFlow) support quantization for standard layers (Conv2d, Linear, LSTM, etc.). However, custom layers or very deep, unstable networks might be harder to quantize without significant engineering effort.

Q: Why are FPGAs considered efficient for Deep Learning? FPGAs can be configured to have the exact data path required for a specific model. Unlike a GPU which has a fixed architecture, an FPGA can be “rewired” (logically) to support custom bit-widths (e.g., 3-bit) or custom data flows, eliminating the overhead of general-purpose logic.

Q: Is knowledge distillation only for compressing models? Primarily, yes, but it can also be used to improve the performance of a model of the same size. A student model of the same size as the teacher, trained via distillation, often outperforms a model trained solely on the raw data (labels), because the “soft targets” from the teacher provide richer training signals.

Q: What is “mixed-precision” training? Mixed-precision training uses a combination of 16-bit (FP16 or BF16) and 32-bit (FP32) floating-point types. It speeds up training on modern GPUs (such as NVIDIA’s Volta and Ampere architectures) and reduces memory usage, while maintaining the numeric stability of FP32 where necessary. It is a standard practice for training large models efficiently.

Q: How does Green AI impact the bottom line? Directly. Cloud computing costs are largely driven by compute time and energy. Reducing model size and latency reduces the instance size required or the hours billed. For battery-powered devices, it extends product life and improves user experience.

References

- Schwartz, R., et al. (2020). “Green AI.” Communications of the ACM. Discusses the trade-off between Red AI (accuracy at all costs) and Green AI (efficiency).

- Han, S., Mao, H., & Dally, W. J. (2016). “Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding.” ICLR. A foundational paper on modern model compression techniques.

- Hinton, G., Vinyals, O., & Dean, J. (2015). “Distilling the Knowledge in a Neural Network.” NIPS Deep Learning Workshop. The seminal paper introducing Knowledge Distillation.

- NVIDIA. “NVIDIA Ampere Architecture Whitepaper.” Detailed documentation on hardware support for sparsity (A100 GPUs) and mixed-precision training.

- Google. “TensorFlow Model Optimization Toolkit.” Official documentation and guides for pruning and quantization in TensorFlow.

- Frankle, J., & Carbin, M. (2019). “The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks.” ICLR. Explores the theoretical underpinnings of why pruning works.

- MIT HAN Lab. “MCUNet: Tiny Deep Learning on IoT Devices.” Research on bringing deep learning to microcontrollers via co-design of NAS and inference engines.

- Patterson, D., et al. (2021). “Carbon Emissions and Large Neural Network Training.” arXiv. Analysis of the carbon footprint of training large language models.

- Gholami, A., et al. (2021). “A Survey of Quantization Methods for Efficient Neural Network Inference.” arXiv. Comprehensive overview of different quantization schemes.

- Strubell, E., Ganesh, A., & McCallum, A. (2019). “Energy and Policy Considerations for Deep Learning in NLP.” ACL. Highlighted the massive energy costs of Transformer models.