In the early days of artificial intelligence, models were specialists living in silos. A computer vision model could identify a cat in a photo but couldn’t read a description of a cat. A natural language processing (NLP) model could write poetry but was blind to the visual world. Today, that barrier is crumbling. Vision-language transfer learning—the ability to transfer knowledge between the visual domain (pixels) and the textual domain (words)—has become one of the most transformative frontiers in deep learning.

This guide explores how machines are learning to “see” language and “read” images by transferring knowledge across these distinct modalities. We will unpack the mechanisms behind this convergence, explore the architectures that make it possible, and examine the real-world applications reshaping industries from e-commerce to robotics.

In this guide, “transfer learning across domains” specifically refers to multimodal learning that bridges computer vision and natural language processing (vision ↔ text), rather than single-domain transfer (e.g., vision-to-vision).

Key Takeaways

- Breaking Silos: Modern AI no longer treats vision and text as separate disciplines; it learns them jointly to create a shared understanding of the world.

- The Power of Zero-Shot: By connecting vision to language, models can recognize objects they were never explicitly trained to classify, simply by reading a description of them.

- Joint Embeddings: The core mechanism involves mapping images and text into a shared mathematical space where “dog” (the word) and a picture of a dog are located close together.

- Efficiency: Transfer learning allows us to leverage massive pre-trained models (foundation models) for downstream tasks without training from scratch, saving immense computational resources.

- Real-World Impact: This technology powers everything from advanced image search and automated content moderation to accessibility tools for the visually impaired.

1. The Convergence of Vision and Language

For decades, Computer Vision (CV) and Natural Language Processing (NLP) evolved on parallel tracks. CV focused on Convolutional Neural Networks (CNNs) to process pixel grids, while NLP relied on Recurrent Neural Networks (RNNs) and later Transformers to process sequential text.

The paradigm shift occurred when researchers realized that language provides a powerful supervision signal for vision. Instead of relying on expensive, human-labeled datasets (like ImageNet, where humans manually tag millions of images), models could learn from the limitless supply of image-text pairs available on the internet.

Why Transfer Learning Matters Here

Transfer learning typically involves taking a model trained on Task A and repurposing it for Task B. In the vision-text context, it goes deeper:

- Semantic Richness: Text offers a richer, more nuanced description of the visual world than simple categorical labels. A label says “dog.” A caption says “a golden retriever catching a frisbee in a park.” Transferring this textual nuance to visual models makes them smarter.

- Generalization: By learning the relationship between words and pixels, models acquire “common sense” reasoning that allows them to perform tasks they weren’t explicitly trained for.

As of January 2026, multimodal models have moved from research prototypes into production systems that routinely handle text and images together.

Scope of This Guide

- IN Scope: Multimodal architectures (CLIP, ALIGN, etc.), zero-shot classification, image captioning, visual question answering (VQA), cross-modal retrieval.

- OUT of Scope: Purely generative image models (like diffusion) unless discussed in the context of semantic understanding, pure NLP tasks, or audio-video transfer.

2. How It Works: The Mechanics of Vision-Language Transfer

To understand how knowledge is transferred between vision and text, we must look at the underlying architecture. The goal is to create a Joint Embedding Space.

The Concept of Embeddings

In deep learning, data is converted into vectors (lists of numbers) called embeddings.

- Text Embedding: The word “Apple” might be converted into a vector [0.1, 0.5, -0.2].

- Image Embedding: An image of an apple might be converted into a vector [0.9, 0.1, 0.4].

If the text encoder and the image encoder are trained separately, the vectors for “Apple” (text) and “Apple” (image) will have no mathematical relationship. Vision-language transfer learning trains the two encoders together so that these vectors align.
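To make "no mathematical relationship" concrete, the sketch below measures cosine similarity between the two toy vectors from the bullets above and then against a third vector. The third vector, `image_apple_aligned`, is a made-up illustration of what an aligned image embedding might look like; it does not come from any real model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy vectors from the text: encoders trained separately.
text_apple = [0.1, 0.5, -0.2]
image_apple = [0.9, 0.1, 0.4]

# Hypothetical image vector after joint training (invented for illustration).
image_apple_aligned = [0.12, 0.45, -0.25]

unaligned = cosine_similarity(text_apple, image_apple)
aligned = cosine_similarity(text_apple, image_apple_aligned)
print(f"unaligned: {unaligned:.3f}, aligned: {aligned:.3f}")
```

The unaligned pair scores close to zero while the aligned pair scores close to one, which is the property a joint embedding space is trained to produce.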

Contrastive Learning (The “Matching Game”)

The most successful approach to aligning these domains is Contrastive Learning. Models like OpenAI’s CLIP (Contrastive Language-Image Pre-training) revolutionized this space.

Here is the simplified process:

- Input: The model receives a batch of N images and their N corresponding text captions.

- Encoding: An Image Encoder (e.g., a Vision Transformer) converts the images into vectors, and a Text Encoder (e.g., a Transformer) converts the captions into vectors.

- The Objective: Computing the similarity (usually cosine similarity) between every image and every caption in the batch gives an N×N matrix. The model is trained to maximize the similarity of the N correct image-text pairs (the diagonal of that matrix) and minimize the similarity of the N² − N incorrect pairs.

- Result: The model learns a shared space where the vector for an image of a cat lies close to the vector for the word “cat.”
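The objective described above can be sketched as a symmetric cross-entropy over the batch similarity matrix. This is a minimal illustration in plain Python, not CLIP's actual training code: the function names, the toy 2-dimensional vectors, and the fixed `temperature=0.07` are assumptions for the example (real systems typically learn the temperature and use a tensor library).

```python
import math

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy_row(logits, target):
    """Softmax cross-entropy for one row of logits (numerically stable)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def contrastive_loss(image_vecs, text_vecs, temperature=0.07):
    """Symmetric contrastive loss over N matched image-text pairs."""
    images = [l2_normalize(v) for v in image_vecs]
    texts = [l2_normalize(v) for v in text_vecs]
    n = len(images)
    # N x N matrix: entry [i][j] is the scaled cosine similarity of image i and caption j.
    logits = [[sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
              for img in images]
    # Image -> text: each row should put its probability mass on the diagonal.
    loss_i2t = sum(cross_entropy_row(logits[i], i) for i in range(n)) / n
    # Text -> image: the same requirement applied to the columns.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_t2i = sum(cross_entropy_row(columns[j], j) for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2

# Toy batch of two pairs (made-up embeddings).
images = [[1.0, 0.1], [0.1, 1.0]]
matched_texts = [[0.9, 0.2], [0.2, 0.9]]
swapped_texts = [matched_texts[1], matched_texts[0]]

loss_matched = contrastive_loss(images, matched_texts)
loss_swapped = contrastive_loss(images, swapped_texts)
print(f"matched: {loss_matched:.4f}, swapped: {loss_swapped:.4f}")
```

When captions sit next to their own images the loss is near zero; swapping the captions makes it large, and that gap is the signal gradient descent uses to pull correct pairs together.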

Tokenization and Patching

To make this transfer feasible, the inputs must be structurally compatible.

- Text: Broken down into tokens (words or sub-words).

- Vision: Images are broken down into “patches” (e.g., 16×16 pixel squares). These patches are treated like “visual words,” allowing the Transformer architecture—originally designed for text—to process images. This structural unification is key to efficient transfer.
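As a rough illustration of patching, the sketch below cuts a single-channel image into non-overlapping 16×16 squares and flattens each one into a vector. The 224×224 input size and the helper name `split_into_patches` are assumptions chosen for the example; a real Vision Transformer would also apply a learned linear projection and add position embeddings.

```python
def split_into_patches(image, patch_size):
    """Split a 2D grid (list of rows) into flattened, non-overlapping square patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches

# Dummy 224x224 grayscale image (assumed size for illustration).
image = [[(r * 224 + c) % 256 for c in range(224)] for r in range(224)]
patches = split_into_patches(image, 16)
print(len(patches), len(patches[0]))  # prints: 196 256
```

Each of the 196 patches plays the role a token plays in text, so the image becomes a sequence of 196 "visual words" of 256 values each.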

3. Key Architectures Enabling Transfer

Several foundational architectures have paved the way for effective vision-language transfer. Understanding these helps in selecting the right tool for a specific application.

CLIP (Contrastive Language-Image Pre-training)

CLIP is perhaps the most famous example of vision-language transfer. Trained on 400 million image-text pairs, it demonstrated that a model could “read” an image without ever being trained on specific object classes.

- Transfer Mechanism: It aligns the visual encoder and text encoder.

- Strength: Incredible zero-shot performance. You can classify images using categories the model has never seen before by simply providing the class names as text.

ALIGN (A Large-scale ImaGe and Noisy-text embedding)

Google’s ALIGN scaled up the data even further, using over one billion noisy image-text pairs. It proved that the sheer scale of data could overcome noise (bad captions, irrelevant text), leading to robust transfer learning capabilities even with imperfect data.

ViT (Vision Transformer)

While not strictly a multimodal model on its own, ViT applies the Transformer architecture (native to text) to images. This architectural transfer allowed the vision community to inherit the massive advancements made in NLP, such as scaling laws and attention mechanisms.

Multimodal Large Language Models (MLLMs)

As of early 2026, the cutting edge involves models like GPT-4V and Gemini. These are not just aligning embeddings; they are Large Language Models (LLMs) that have been given “eyes.” They process visual tokens alongside text tokens, allowing for complex reasoning. For example, you can show an MLLM a picture of a broken bike and ask, “How do I fix this?” The model transfers its textual knowledge of bike repair to the specific visual context of the image.

4. Zero-Shot Learning: The Holy Grail of Transfer

The most significant benefit of vision-language transfer is Zero-Shot Learning.

Definition

Zero-shot learning refers to a model’s ability to perform a task without having seen any specific examples of that task during training.

How Transfer Enables Zero-Shot

In traditional Computer Vision (supervised learning), if you wanted a model to recognize a “platypus,” you had to gather 1,000 photos of platypuses and train the model.

With vision-language transfer (e.g., CLIP), the model has already encountered text about platypuses paired with images during pre-training, so it has learned to associate that textual concept with visual features.

- In Practice: You provide a text prompt for each candidate class, such as “a photo of a platypus.” The model converts the image into an embedding, compares it with the embedding of each prompt, and predicts the class whose prompt is most similar. No new training data is required.
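The zero-shot procedure can be sketched as follows. The embeddings here are invented 3-dimensional stand-ins for what an image encoder and text encoder would output, and the `scale=100.0` multiplier applied before the softmax is an assumed value for the example.

```python
import math

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def zero_shot_classify(image_vec, prompt_vecs, scale=100.0):
    """Pick the prompt whose embedding is most similar to the image embedding."""
    image = l2_normalize(image_vec)
    labels = list(prompt_vecs)
    sims = [sum(a * b for a, b in zip(image, l2_normalize(prompt_vecs[label])))
            for label in labels]
    probs = softmax([scale * s for s in sims])
    best = max(range(len(labels)), key=probs.__getitem__)
    return labels[best], dict(zip(labels, probs))

# Hypothetical embeddings; a real system would get these from the two encoders.
prompt_vecs = {
    "a photo of a platypus": [0.8, 0.1, 0.6],
    "a photo of a beaver": [0.7, 0.6, 0.1],
    "a photo of a duck": [0.1, 0.9, 0.4],
}
image_vec = [0.75, 0.15, 0.65]

label, probs = zero_shot_classify(image_vec, prompt_vecs)
print(label)
```

Adding a new class only requires adding another prompt to the dictionary; nothing is retrained.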

Benefits

- Adaptability: Systems can be updated to recognize new objects instantly without retraining.

- Cost Reduction: Eliminates the need for massive, labeled datasets for every new niche category.

- Long-Tail Recognition: Capable of identifying rare objects that don’t have enough training images to train a traditional CNN.

5. Core Tasks and Applications

Vision-language transfer learning is not just theoretical; it powers diverse practical applications.

Image Captioning and Accessibility

- The Task: Generating a descriptive sentence for an image.

- Transfer: The model sees visual features (shapes, colors, actions) and transfers them into a coherent linguistic structure (grammar, vocabulary).

- Use Case: Automated alt-text generation for the visually impaired. A screen reader can describe an image on a social media feed in detail, rather than just saying “image.”

Visual Question Answering (VQA)

- The Task: Answering natural language questions about an image.

- Transfer: Requires complex reasoning. If asked “Is the traffic light red?”, the model must identify the traffic light (vision), understand the concept of “red” (vision/text link), and formulate a yes/no answer (text).

- Use Case: Robotics and autonomous agents. A robot can be asked, “Where did I leave my keys?” and it can scan the room to answer.

Cross-Modal Retrieval (Semantic Search)

- The Task: Searching for images using text queries, or finding text using images.

- Transfer: This relies entirely on the shared embedding space.

- Use Case: E-commerce. A user types “vintage floral summer dress,” and the search engine finds images that match that semantic description, even if the vendor never tagged the product with those specific keywords.

Content Moderation

- The Task: Identifying hate speech, violence, or explicit content.

- Transfer: Often, hate speech involves a benign image coupled with hateful text (memes), or vice versa. Unimodal models miss this. Multimodal transfer models understand the context created by the intersection of text and vision.

- Use Case: Social media platforms filtering toxic memes.

6. Practical Implementation: How to Do It

For developers and data scientists looking to leverage vision-language transfer, the workflow typically involves using pre-trained foundation models rather than training from scratch.

Step 1: Choose a Foundation Model

- For Retrieval/Classification: CLIP is a widely used choice for lightweight, fast embedding generation.

- For Reasoning/Captioning: BLIP (Bootstrapping Language-Image Pre-training) or LLaVA (Large Language-and-Vision Assistant) are excellent open-source choices.

Step 2: Fine-Tuning (Optional but Recommended)

While zero-shot is powerful, transfer learning really shines when you fine-tune the model on your specific domain.

- Linear Probing: Freeze the heavy pre-trained model and train a small, simple classifier on top of the embeddings. This is computationally cheap and effective.

- LoRA (Low-Rank Adaptation): A technique to fine-tune massive models by adjusting only a tiny fraction of the parameters. This allows you to adapt a general vision-text model to specialized domains (e.g., medical imaging or satellite analysis) without breaking the bank.
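To show why LoRA touches only a small fraction of the parameters, here is a minimal sketch of the low-rank update for a single weight matrix, written as W' = W + (alpha / rank) · B A with W frozen. The matrix sizes, the `alpha` value, and the helper names are assumptions for illustration; in practice a fine-tuning library would handle this for you.

```python
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def lora_effective_weight(w_frozen, lora_b, lora_a, alpha, rank):
    """W' = W + (alpha / rank) * B @ A. Only B and A would receive gradients."""
    delta = matmul(lora_b, lora_a)
    scale = alpha / rank
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(w_frozen, delta)]

def lora_param_counts(d_out, d_in, rank):
    """Return (parameters in the full matrix, trainable parameters with LoRA)."""
    return d_out * d_in, rank * (d_out + d_in)

# Toy layer (assumed sizes): A starts small and random, B starts at zero.
d_out, d_in, rank, alpha = 8, 8, 2, 4
rng = random.Random(0)
w_frozen = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
lora_a = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]
lora_b = [[0.0] * rank for _ in range(d_out)]

# With B at zero the adapted layer starts out identical to the pre-trained one.
w_start = lora_effective_weight(w_frozen, lora_b, lora_a, alpha, rank)

full, trainable = lora_param_counts(768, 768, 8)
print(full, trainable, f"{trainable / full:.2%}")
```

For a 768×768 projection with rank 8, the trainable parameters are roughly 2% of the full matrix, which is where the cost savings come from.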

Step 3: Prompt Engineering for Vision

Just as with ChatGPT, how you phrase the text matters.

- Example: Instead of checking similarity against the word “dog,” check against “a photo of a dog, a type of pet.”

- Ensembling: Average the embeddings of multiple prompts (“a photo of a big dog,” “a zoomed-in shot of a dog”) to get a more robust text representation for the transfer.
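Prompt ensembling can be sketched as averaging in embedding space: normalize each prompt embedding, take the mean, and normalize again. The three vectors below are invented placeholders for the text encoder's output on three phrasings of "dog."

```python
import math

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def ensemble_prompts(prompt_vecs):
    """Average unit-length prompt embeddings, then renormalize the mean."""
    unit = [l2_normalize(v) for v in prompt_vecs]
    dim = len(unit[0])
    mean = [sum(v[i] for v in unit) / len(unit) for i in range(dim)]
    return l2_normalize(mean)

# Hypothetical embeddings for three prompts about the same class.
dog_prompts = [
    [0.9, 0.1, 0.3],   # "a photo of a dog, a type of pet"
    [0.8, 0.3, 0.2],   # "a photo of a big dog"
    [0.85, 0.2, 0.4],  # "a zoomed-in shot of a dog"
]
dog_class_vec = ensemble_prompts(dog_prompts)
print([round(x, 3) for x in dog_class_vec])
```

The resulting vector is used in place of a single prompt embedding when computing similarity, so inference cost stays the same once the averaged vector is cached.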

Example Workflow: Building a Semantic Image Search

- Load Model: Load a pre-trained CLIP model.

- Index Images: Pass your image database through the visual encoder to get vectors. Store these in a vector database (like FAISS or Pinecone).

- Query: Pass the user’s text query through the text encoder to get a query vector.

- Search: Perform a nearest neighbor search in the vector database.

- Result: Return images with the highest cosine similarity.
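The workflow above can be sketched end to end with a brute-force in-memory index standing in for the vector database. Everything model-related is assumed: the file names and 3-dimensional vectors are made up, and in a real build the vectors would come from a pre-trained CLIP image encoder and text encoder.

```python
import math

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

class InMemoryIndex:
    """Brute-force stand-in for a vector database such as FAISS or Pinecone."""

    def __init__(self):
        self._items = []  # list of (item_id, unit_vector)

    def add(self, item_id, vector):
        self._items.append((item_id, l2_normalize(vector)))

    def search(self, query_vector, k=3):
        """Return the k items with the highest cosine similarity to the query."""
        query = l2_normalize(query_vector)
        scored = [(item_id, sum(q * x for q, x in zip(query, vec)))
                  for item_id, vec in self._items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]

# Index step: hypothetical image embeddings keyed by file name.
index = InMemoryIndex()
index.add("dress_floral.jpg", [0.9, 0.1, 0.2])
index.add("sneaker.jpg", [0.1, 0.9, 0.1])
index.add("dress_plain.jpg", [0.7, 0.2, 0.6])

# Query step: hypothetical text embedding for "vintage floral summer dress".
query_vec = [0.85, 0.05, 0.25]
results = index.search(query_vec, k=2)
for item_id, score in results:
    print(item_id, round(score, 3))
```

Brute-force search is fine for a few thousand images; the approximate nearest neighbor indexes in dedicated vector databases become worthwhile as the collection grows.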

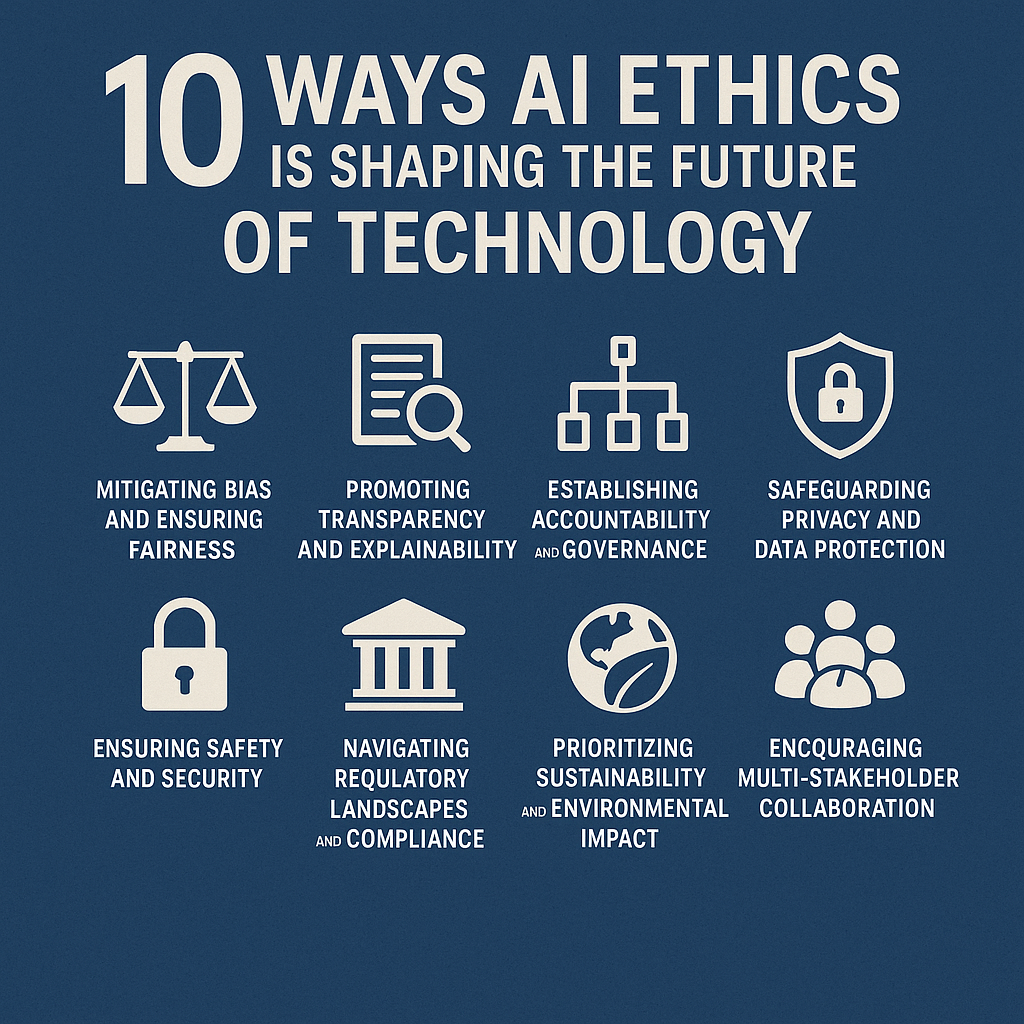

7. Challenges and Common Pitfalls

Despite the progress, transferring knowledge between vision and text is fraught with challenges.

The “Bag of Features” Problem

Models like CLIP sometimes act like a “bag of features.” They recognize that an image contains “grass,” “person,” and “sky,” but they might fail to understand the relationship (e.g., distinguishing “person sitting on grass” vs. “grass on person”). They transfer concepts well, but transfer compositionality poorly.
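The failure mode is easiest to see in its most extreme form. The sketch below is a deliberate caricature, not how CLIP's text encoder works: it embeds a caption by averaging made-up word vectors, which discards word order entirely, so two captions with opposite meanings collapse to the same point.

```python
def bag_of_words_embedding(caption, word_vectors):
    """Average the vectors of the words in a caption, ignoring their order."""
    words = caption.lower().split()
    dim = len(next(iter(word_vectors.values())))
    return [sum(word_vectors[w][i] for w in words) / len(words) for i in range(dim)]

# Invented one-hot word vectors for illustration only.
word_vectors = {
    "person": [1.0, 0.0, 0.0],
    "on": [0.0, 1.0, 0.0],
    "grass": [0.0, 0.0, 1.0],
}

person_on_grass = bag_of_words_embedding("person on grass", word_vectors)
grass_on_person = bag_of_words_embedding("grass on person", word_vectors)
print(person_on_grass == grass_on_person)  # prints: True
```

A real Transformer encoder can represent order, but when contrastive training rarely needs order to tell captions apart, the learned embeddings can drift toward this behavior.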

Bias Amplification

Because these models are trained on internet data, they inherit internet biases.

- The Risk: If the internet mostly contains images of men associated with “doctor” and women with “nurse,” the model transfers this bias. In a zero-shot classification task, it might misclassify a female doctor as a nurse simply due to this learned prior.

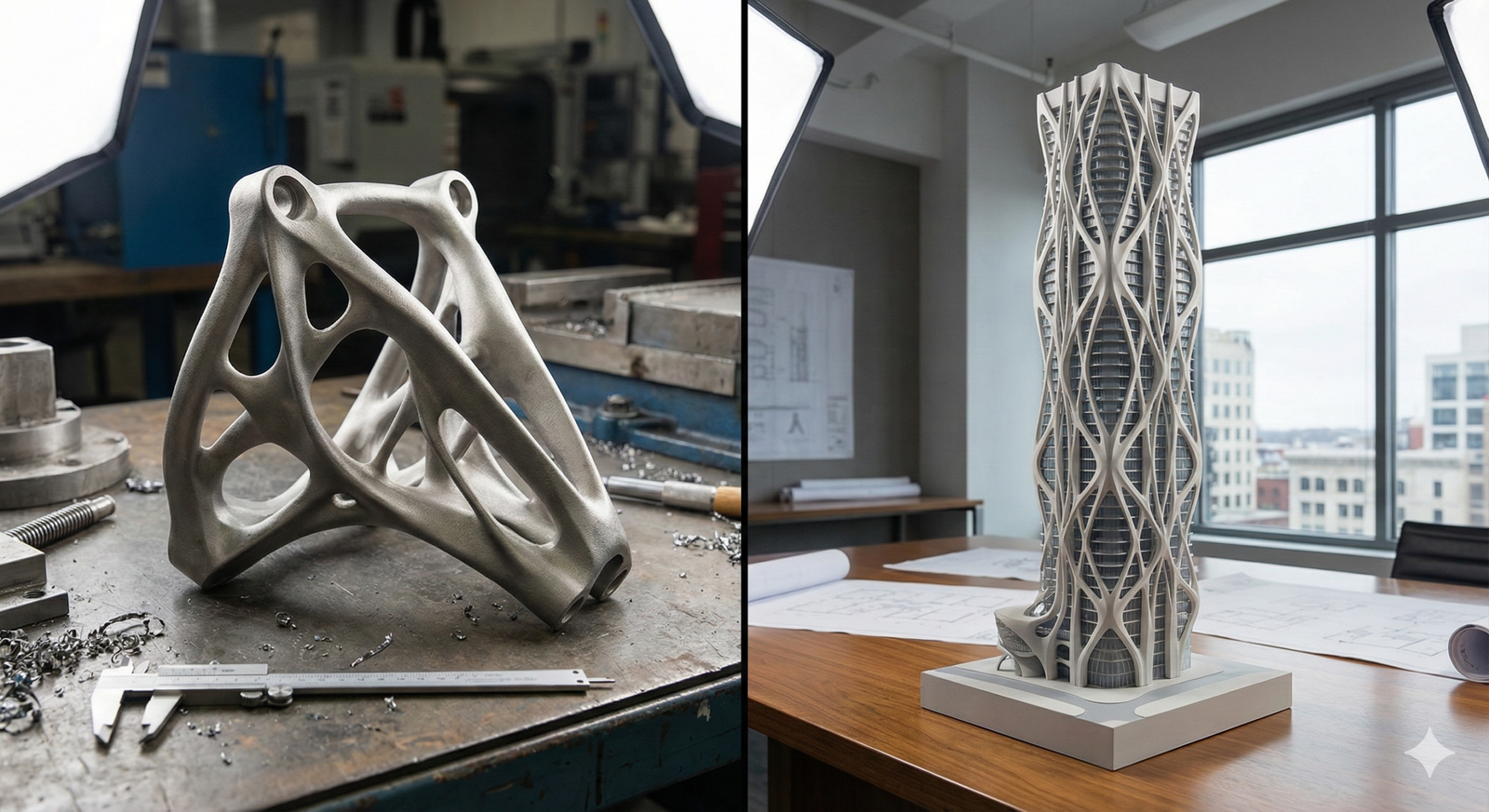

Domain Shift

Transfer learning struggles when the target domain is vastly different from the training domain.

- Example: A model trained on natural images (photos of dogs/cats) transfers poorly to medical X-rays or satellite imagery without significant fine-tuning. The “vision” language of an X-ray is fundamentally different from the “vision” language of a photograph.

Computational Cost

Processing multimodal data is expensive. Encoding high-resolution images requires significantly more VRAM and compute than processing text alone, and the added latency can be a bottleneck for real-time applications on edge devices.

8. Hardware Acceleration and Efficiency

To make vision-language transfer viable for production, hardware optimization is critical.

Quantization

Converting model weights from 32-bit floating-point numbers to 8-bit or 4-bit integers reduces model size and speeds up inference, often with only a small loss in accuracy. This is essential for running vision-text models on memory-constrained devices such as mobile phones.
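A minimal sketch of the idea, assuming symmetric per-tensor 8-bit quantization (one of several schemes in use): each weight is divided by a shared scale, rounded to an integer in [-127, 127], and multiplied back at inference time. The weight values are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Map integers back to approximate float weights."""
    return [q * scale for q in quantized]

weights = [0.42, -1.27, 0.003, 0.88, -0.5]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, f"max error: {max_error:.4f}")
```

Storage drops from four bytes per weight to one, and the rounding error for any weight is bounded by half the scale. Very small weights such as 0.003 round to zero, which is one source of the accuracy loss.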

Distillation

Knowledge distillation involves training a smaller “student” model to mimic the behavior of a massive “teacher” model. You can distill a huge CLIP model into a tiny version that runs on a Raspberry Pi, retaining much of the transfer learning capability.
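One common way to make a student "mimic" a teacher is to match their output distributions at a raised temperature. The sketch below assumes that formulation (KL divergence on temperature-softened softmax outputs, scaled by the squared temperature); the logits are made-up numbers standing in for, say, an image's similarity scores against three captions, and other distillation objectives such as matching embeddings directly are equally plausible.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by temperature squared."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

teacher = [4.0, 1.0, 0.5]         # large model's scores (invented)
close_student = [3.8, 1.1, 0.4]   # small model that roughly agrees
far_student = [0.5, 1.0, 4.0]     # small model that disagrees

loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
print(f"close: {loss_close:.4f}, far: {loss_far:.4f}")
```

Minimizing this loss pushes the student toward the teacher's full ranking of alternatives, not just its top answer, which is why a small model can retain much of the larger model's behavior.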

Specialized Hardware

As of 2026, Neural Processing Units (NPUs) in consumer electronics are increasingly optimized for Transformer architectures, supporting the specific matrix operations required for both vision and text encoders efficiently.

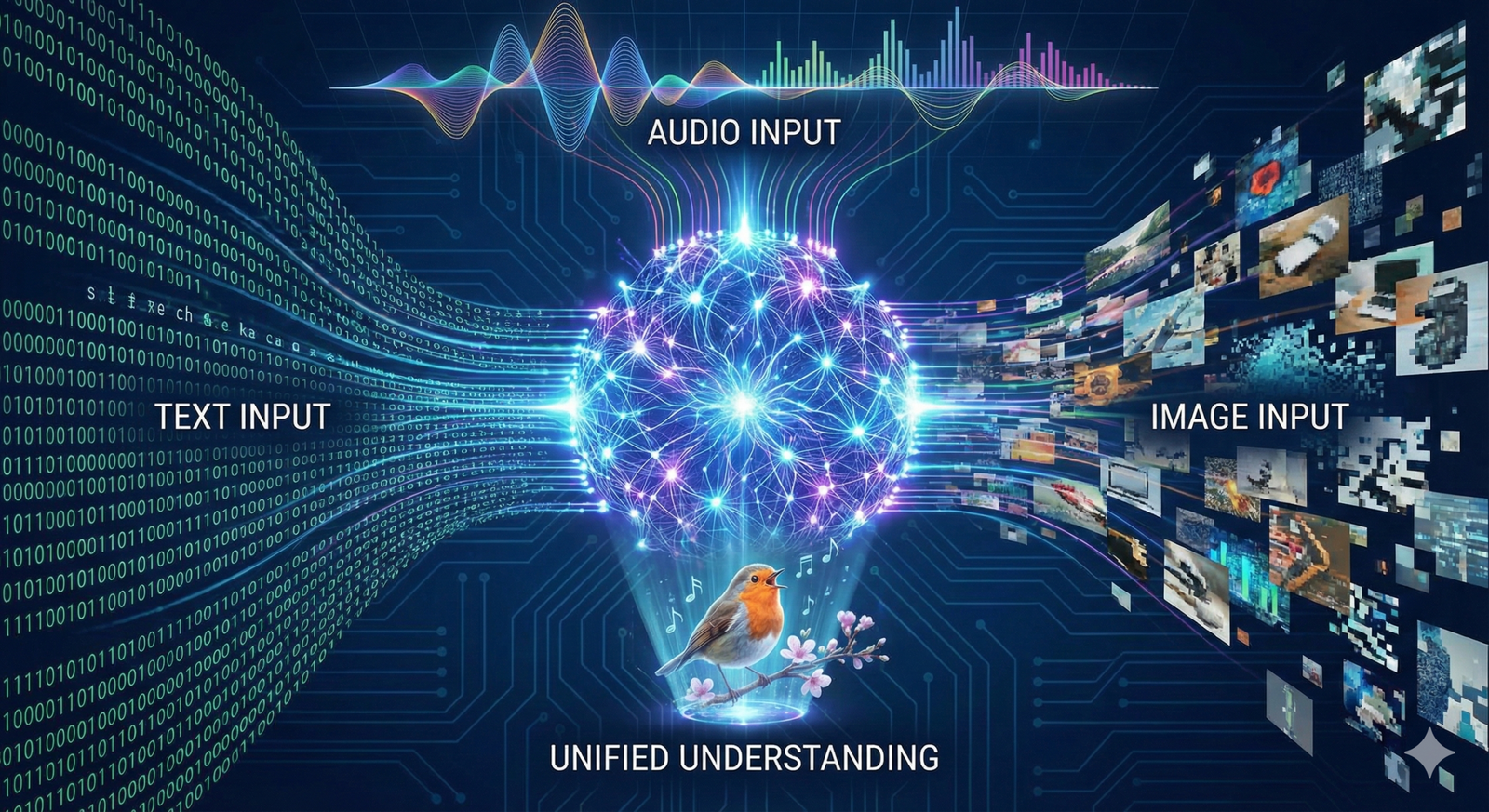

9. Future Trends: Expanding the Modalities

The future of transfer learning is not stopping at vision and text.

Audio and Video

The next frontier is integrating audio. Models are beginning to learn correlations between the sound of a crash, the visual of a car accident, and the text “traffic collision.” This creates a tripartite transfer learning system where any two modalities can inform the third.

Embodied AI

Transfer learning is moving from static datasets to the physical world. Robots are using vision-language models to navigate. A command “go to the kitchen and find the apple” requires transferring the semantic concept of “kitchen” and “apple” to the 3D visual data streaming from the robot’s cameras.

Generalized Perception

We are moving toward “Generalist Agents”—single models capable of handling text, images, video, depth, and thermal data simultaneously. In these systems, transfer learning isn’t a specialized technique; it’s the default mode of operation.

10. Who This Is For (and Who It Isn’t)

This guide is for:

- Data Scientists & ML Engineers: Looking to implement semantic search, zero-shot classification, or multimodal systems.

- Product Managers: Seeking to understand how AI can improve user experience through better search, content moderation, or accessibility features.

- Researchers: Exploring the intersection of NLP and Computer Vision.

This guide is NOT for:

- Pure NLP Specialists: If you only care about text-to-text generation without any visual component.

- Traditional CV Engineers: If you are strictly focused on classical photogrammetry or hardware-level signal processing without semantic interpretation.

11. Related Topics to Explore

For those interested in diving deeper, consider exploring these related concepts:

- Foundation Models: The broad category of models (like GPT-4, Claude, Gemini) that serve as the base for transfer learning.

- Vector Databases: The infrastructure required to store and query the embeddings generated by these models.

- Self-Supervised Learning: The training paradigm that allows models to learn from unlabeled data, fueling the success of transfer learning.

- Prompt Engineering for Images: The art of crafting text inputs to get the best visual outputs or classifications.

Conclusion

Transfer learning across domains—specifically bridging the gap between vision and text—represents a maturation of artificial intelligence. We have moved from models that simply “look” or “read” to models that understand. By mapping pixels and words into a shared semantic space, we unlock capabilities that were previously impossible: searching for images with natural language, classifying objects without training data, and building robots that understand verbal commands.

While challenges regarding bias, compositionality, and computational cost remain, the trajectory is clear. The silos are gone. The future of AI is multimodal, interconnected, and fundamentally based on the fluid transfer of knowledge between our visual and linguistic worlds.

Next Step: If you are a developer, try implementing a simple image search using Hugging Face’s Transformers library and a CLIP model to see the power of joint embeddings in action.

FAQs

Q: What is the main advantage of vision-language transfer learning? A: The primary advantage is Zero-Shot Learning. It allows models to recognize objects or concepts they were never explicitly trained on, simply by understanding the textual description of those concepts. This drastically reduces the need for labeled data.

Q: How does CLIP differ from traditional image classification? A: A traditional classifier (like ResNet) is trained to predict a fixed set of labels (e.g., 1,000 categories). CLIP is trained to match images to free-form text. This means CLIP is not limited to a fixed set of categories and can classify images against any set of text prompts provided at runtime.

Q: Can I use vision-language models for medical imaging? A: Yes, but with caution. While general models like CLIP have some knowledge, they often fail on specialized domains like radiology due to Domain Shift. Effective use in medicine usually requires fine-tuning the general model on medical image-text pairs (e.g., X-rays and radiology reports).

Q: What is a “Joint Embedding Space”? A: It is a mathematical vector space where different types of data (images and text) are mapped. In this space, similarity is measured by distance. If the model works well, the vector for an image of a cat will be very close to the vector for the word “cat,” allowing the model to understand they represent the same concept.

Q: Is fine-tuning always necessary? A: No. For general tasks (like classifying common objects or searching stock photos), pre-trained models often perform well “out of the box” (zero-shot). However, for specialized tasks (like identifying specific machine parts or rare biological species), fine-tuning typically boosts performance significantly.

Q: What computational resources are needed? A: Inference (running the model) can be done on modern consumer GPUs or even optimized CPUs for smaller models. However, training a vision-language model from scratch requires massive compute clusters. Most practitioners rely on pre-trained models.

Q: Does this work for languages other than English? A: Yes, provided the model was trained on multilingual data. Some modern multimodal models are trained on datasets covering more than 100 languages, allowing for cross-lingual, cross-modal transfer (e.g., searching for an image using a query in Spanish).

Q: What is the difference between multimodal learning and transfer learning? A: Multimodal learning is the type of model (using multiple modes of data). Transfer learning is the technique of applying knowledge from one context to another. Vision-language models utilize multimodal learning architecture to enable transfer learning capabilities (like zero-shot classification).

References

- OpenAI. (2021). Learning Transferable Visual Models From Natural Language Supervision (CLIP). https://openai.com/research/clip

- Google Research. (2021). ALIGN: Scaling Up Visual and Vision-Language Representation Learning With Noisy Text Supervision. https://ai.googleblog.com/2021/05/align-scaling-up-visual-and-vision.html

- Hugging Face. (n.d.). Tasks: Zero-Shot Image Classification. Hugging Face Documentation. https://huggingface.co/tasks/zero-shot-image-classification

- Dosovitskiy, A., et al. (2020). An Image is Worth 16×16 Words: Transformers for Image Recognition at Scale (ViT). ICLR 2021. https://arxiv.org/abs/2010.11929

- Meta AI. (2023). Segment Anything Model (SAM). https://segment-anything.com/

- Liu, H., et al. (2023). Visual Instruction Tuning (LLaVA). NeurIPS 2023. https://llava-vl.github.io/

- Salesforce Research. (2022). BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation. https://github.com/salesforce/BLIP

- DeepMind. (2022). Flamingo: a Visual Language Model for Few-Shot Learning. https://www.deepmind.com/blog/tackling-multiple-tasks-with-a-single-visual-language-model