Blockchains promise open access, tamper-resistance, and programmable value. Yet building a network that is fast, safe, and truly open at the same time is hard. This tension is often summarized as the “blockchain trilemma”: optimizing for scalability, security, and decentralization simultaneously is difficult, and most real-world designs prioritize two at the expense of the third.

This article unpacks that trade space in plain language. You’ll learn what each pillar means, why they pull against each other, and how modern designs—from rollups to modular data layers—work to bend the triangle rather than break it. You’ll also get a practical framework you can apply to your own product: requirements, metrics, step-by-step implementation guidance, a four-week starter plan, and a troubleshooting checklist.

Disclaimer: This article is for educational purposes only and does not constitute financial, investment, or legal advice. Always consult qualified professionals for decisions affecting your organization or capital.

Key takeaways

- Scalability is about throughput, latency, and cost. It’s the user experience pillar.

- Security is about adversaries, incentives, and fault tolerance. It’s the resilience pillar.

- Decentralization is about who can participate, who can censor, and how upgrades happen. It’s the legitimacy pillar.

- No single design “solves” the trilemma. The goal is to choose trade-offs explicitly and mitigate the downsides with architecture, incentives, and measurement.

- Layer-2 systems, sharding, and modular data availability can raise throughput without surrendering core guarantees—if you operate them with sound assumptions and governance.

- Success requires a procedure, not a slogan: define SLOs, map threats, pick an architecture, iterate in testnets, measure, and adjust.

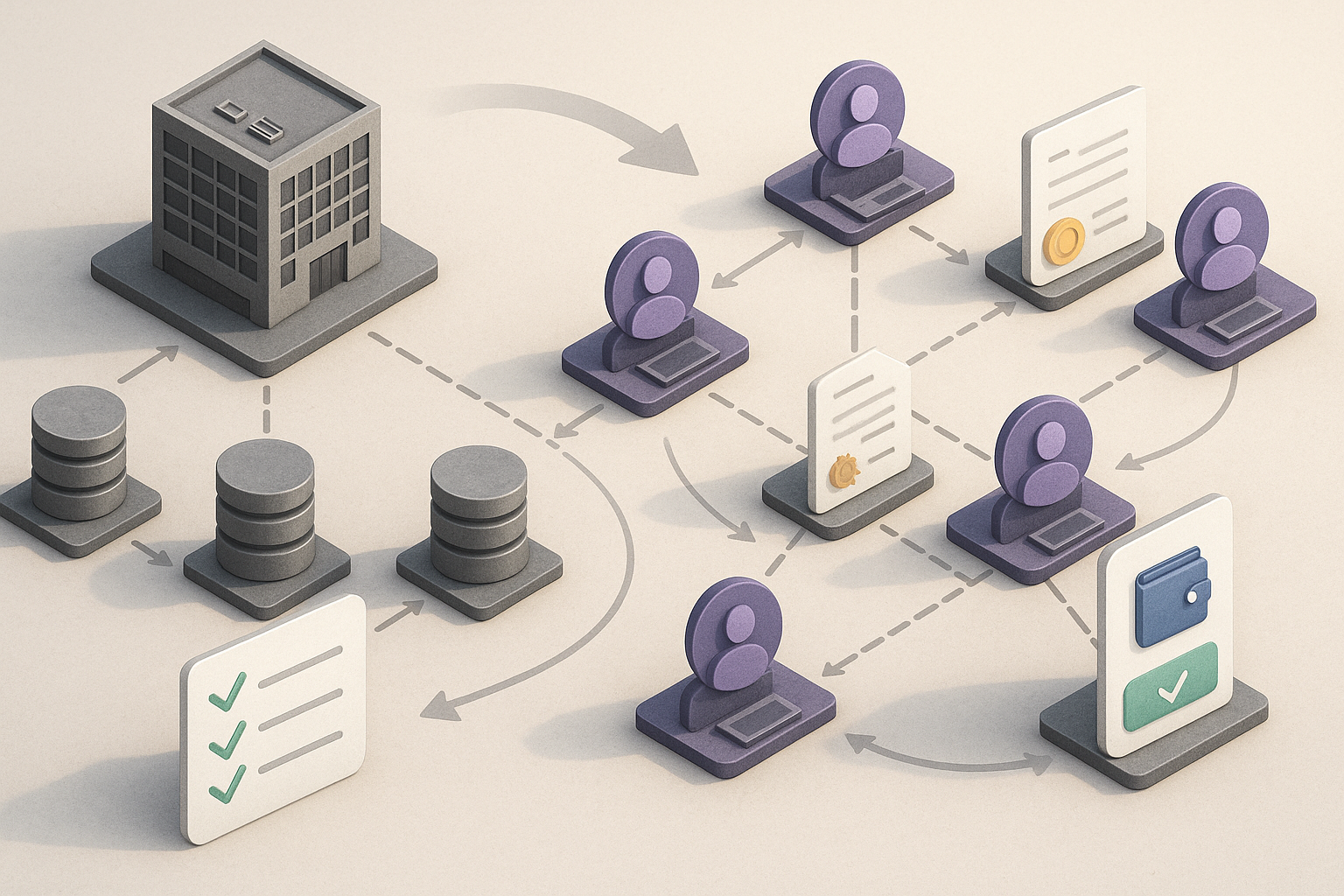

The trilemma in practice: a map for real decisions

What it is

The trilemma states that blockchain designs must trade off among scalability, security, and decentralization. Picture a triangle:

- Scalability: How many transactions the system can process, how quickly it finalizes, and what users pay.

- Security: How hard it is for attackers to rewrite history, steal funds, or halt the chain.

- Decentralization: How many independent parties verify and govern the system, and how easy it is to join or exit without permission.

Why these pillars pull against each other

- Bigger blocks or heavier validator requirements can improve throughput but raise hardware costs, reducing the number of people who can run nodes.

- Ultra-tight participation rules can strengthen safety in some designs but limit openness and concentrate control.

- Fully open participation can raise the burden of Sybil resistance and coordination overhead, affecting performance.

A practical way to think about it

You are not picking two and abandoning the third. You are setting target levels for all three that meet your product’s mission. For a payments wallet, sub-second latency and low fees may be primary. For a settlement chain, censorship resistance and verifiable finality may dominate. For a community network, broad participation may be paramount.

Requirements & prerequisites

- Skills: Product management, threat modeling, basic cryptography, smart-contract development, and ops.

- Equipment/software: Client software for your target chain(s), a devnet or testnet, monitoring and analytics, and a key management solution.

- Cost: Cloud or on-prem nodes, audit budget, bug bounty allocation, and ongoing observability.

- Low-cost alternative: Start on a mature Layer-2 (L2) to inherit base-layer security and keep infra lean.

Step-by-step (beginner-friendly)

- Define service-level objectives (SLOs) for each pillar (e.g., average fee target, time-to-finality, minimum validator count).

- Map your threats: double-spends, censorship, key compromise, contract bugs.

- Pick an architecture: monolithic L1, L2 rollup, app-chain, or hybrid modular stack.

- Prove your assumptions in a testnet: load tests, adversarial scenarios, and chaos experiments.

- Instrument everything: latency distribution, reorg depth, client diversity, validator concentration, MEV patterns.

- Plan for upgrades: governance rules, emergency procedures, and backwards compatibility.

Beginner modifications & progressions

- Start simple: deploy the first version on a popular rollup to minimize base-layer complexity.

- Progress: graduate to your own app-chain or modular stack only after measuring real bottlenecks (fees, throughput, or governance friction).

Frequency, duration, and metrics

- Cadence: monthly architecture reviews; weekly performance dashboards; per-release security reviews.

- Core KPIs: median/95th percentile confirmation time, effective throughput under load, fee variability, validator/client diversity, and objective decentralization metrics (e.g., distribution of stake).

Safety, caveats, and common mistakes

- Treat slogans as assumptions, not facts. Write them down and try to break them.

- Don’t conflate many nodes with meaningful decentralization if those nodes are controlled by a few operators.

- Avoid “premature optimization” that prices out small validators/users.

Mini-plan (example)

- Step 1: Set SLOs: fees <$0.10, median finality <10s, no single operator >10% of validation.

- Step 2: Choose L2 rollup, instrument the dApp, and design a transparent upgrade path.
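The SLOs in Step 1 can be written down as data and checked against measurements. The following is a minimal Python sketch, not a monitoring product: the `Slos` class, the `check_slos` function, and the operator names are hypothetical, and only the three thresholds come from the mini-plan.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slos:
    """Targets copied from the mini-plan; adjust them to your own product."""
    max_fee_usd: float = 0.10            # fees must stay below this
    max_median_finality_s: float = 10.0  # median finality must stay below this
    max_operator_share: float = 0.10     # no operator may exceed this share


def check_slos(slos: Slos, fee_usd: float, median_finality_s: float,
               operator_shares: dict[str, float]) -> list[str]:
    """Return human-readable SLO violations; an empty list means all pass."""
    violations = []
    if fee_usd >= slos.max_fee_usd:
        violations.append(
            f"fee {fee_usd:.2f} USD is not below {slos.max_fee_usd:.2f} USD")
    if median_finality_s >= slos.max_median_finality_s:
        violations.append(
            f"median finality {median_finality_s:.1f}s is not below "
            f"{slos.max_median_finality_s:.1f}s")
    for operator, share in sorted(operator_shares.items()):
        if share > slos.max_operator_share:
            violations.append(
                f"operator {operator} holds {share:.0%} of validation")
    return violations
```

Calling `check_slos(Slos(), 0.04, 6.0, {"op-a": 0.08})` returns an empty list; any breached target comes back as a message you can route to a dashboard or an alert.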

Scalability: making blockchains feel instant

What it is and why it matters

Scalability determines whether users experience the network as snappy and affordable or slow and expensive. It encompasses throughput, latency, finality, and cost per transaction.

Core benefits:

- Better user experience and higher conversion.

- Room for complex apps: gaming, social, or high-frequency DeFi.

- Reduced congestion spillovers and fee spikes.

Requirements/prerequisites

- A performance-aware mindset: benchmarking, profiling, and capacity planning.

- Access to a testnet and load generation tools.

- Understanding of batching, compression, and L2 mechanics.

- Budget for monitoring and stress testing.

Low-cost alternative: Leverage L2s or sidechains and use batching (e.g., bundling transfers) to reduce per-user cost without heavy infra.

Step-by-step implementation

- Profile your workload

  - Classify transactions: transfers, swaps, NFT mints, oracle updates.

  - Identify write-amplification: how many state reads/writes per action?

- Reduce on-chain footprint

  - Use efficient data encodings.

  - Batch operations and compress calldata.

  - Offload non-critical data to decentralized storage or specialized data-availability layers, anchoring commitments on-chain.

- Choose the right scaling lane

  - L2 rollups: inherit base-layer security; rely on validity proofs or on fraud proofs submitted within a challenge window.

  - State channels and payment channels: many off-chain updates, infrequent on-chain settlement.

  - App-specific chains: full control over parameters; trade easier scaling for extra ops and security responsibilities.

- Engineer for finality

  - Target predictable confirmation times.

  - Gate user actions on finality rather than mere inclusion if your risk tolerance is low.

- Run controlled load tests

  - Ramp traffic gradually.

  - Track p50/p95/p99 latencies, fee curves, and error budgets.

- Optimize client and node settings

  - Use recommended hardware or cloud instances.

  - Tune mempool and peer settings, and keep clients updated.

Beginner modifications & progressions

- Starter mode: build on an L2 with audited bridges; batch user actions; publish minimal data commitments on L1.

- Advanced mode: move to a modular stack where execution, settlement, and data availability are separate layers.

Recommended frequency/duration/metrics

- Quarterly: full capacity tests simulating peak events.

- Weekly: check cost per action and failed tx rates.

- Metrics: throughput under sustained load, median finality, cost stability, and revert rates.
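The latency and revert metrics above can be computed from raw samples with a few lines of Python. This sketch uses the nearest-rank percentile definition, which is one of several conventions; the function names are illustrative, and the input is assumed to be a list of confirmation times in seconds collected by your own load test.

```python
import math


def percentile(samples: list[float], p: int) -> float:
    """Nearest-rank percentile: smallest sample with at least p% at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def latency_summary(samples: list[float]) -> dict[str, float]:
    """Median and tail latencies for one test run."""
    return {f"p{p}": percentile(samples, p) for p in (50, 95, 99)}


def revert_rate(reverted: int, total: int) -> float:
    """Share of submitted transactions that failed or reverted."""
    if total <= 0:
        raise ValueError("total must be positive")
    return reverted / total
```

Tracking p95 and p99 next to the median matters because congestion usually shows up in the tail first; a healthy median can hide a poor experience for one user in twenty.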

Safety, caveats, and common mistakes

- Bigger blocks ≠ free scaling: they may centralize validators.

- Ignoring fees under stress: design for bad days, not good ones.

- Assuming L2s are “set and forget”: track your bridge assumptions and fraud-proof or validity-proof properties.

Mini-plan (example)

- Step 1: Move minting to a rollup and compress metadata; use scheduled batch settlement every 10 minutes.

- Step 2: Enforce UI rules that wait for finalized blocks before high-value actions.
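The UI rule in Step 2 reduces to a small check: low-value actions may proceed once a transaction is included, high-value actions wait until the including block is finalized. A minimal sketch follows; the function name, the 1,000 USD threshold, and the idea that your node client reports a latest finalized block number are all assumptions for illustration.

```python
from typing import Optional


def may_proceed(value_usd: float,
                tx_block: Optional[int],
                finalized_block: int,
                high_value_threshold_usd: float = 1_000.0) -> bool:
    """Decide whether the UI should unlock the next step for a transaction.

    tx_block is the block that included the transaction (None while pending).
    finalized_block is the latest block the chain reports as finalized.
    """
    if tx_block is None:
        return False  # not even included yet
    if value_usd < high_value_threshold_usd:
        return True   # inclusion is treated as enough for small amounts
    return tx_block <= finalized_block  # large amounts wait for finality
```

Where the threshold sits is a risk decision rather than a technical one: it is the amount you are willing to lose if an included but unfinalized block is reorganized away.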

Security: making blockchains hard to break

What it is and why it matters

Security is the assurance that history can’t be rewritten cheaply, funds can’t be stolen without detection, and contracts behave as intended. It spans consensus safety, economic incentives, software correctness, key management, and operational discipline.

Core benefits:

- Users can trust finality and balances.

- The system can withstand rational or irrational attackers.

- Incidents, when they happen, are contained and recoverable.

Requirements/prerequisites

- Threat modeling experience or templates.

- Audit and testing budget (unit tests, fuzzing, formal methods where appropriate).

- Key management and secrets rotation.

- Monitoring for slashing risks, client bugs, and liveness issues.

Low-cost alternative: Start with conservative contract patterns, well-maintained libraries, and a staged rollout with capped TVL.

Step-by-step implementation

- Define your adversaries

  - Consider hashpower/stake majority attacks, censorship coalitions, oracle manipulation, reentrancy, and governance capture.

- Harden your smart contracts

  - Automated testing: unit tests, property-based tests, fuzzing.

  - Static analysis and linters.

  - Audits ahead of mainnet launch; treat findings as learning, not box-checking.

  - Bug bounties with clear scope and safe-harbor rules.

- Secure your keys and ops

  - Use hardware security modules or hardware wallets for deployer and treasury keys.

  - Employ multi-sig for upgrades and emergency controls, with published timelocks where possible.

  - Practice key rotation and incident runbooks.

- Increase consensus robustness

  - Aim for client diversity to avoid single-implementation bugs.

  - Monitor validator performance and slashing conditions.

  - Favor finality gadgets and liveness safeguards provided by your base layer.

- Plan for failure

  - Establish circuit breakers for on-chain modules with clear thresholds.

  - Pre-agree governance processes for pauses and restarts.
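A circuit breaker "with clear thresholds" can be as simple as a cap on outflow within a rolling time window. The Python model below is a sketch of that logic only, assuming a hypothetical `CircuitBreaker` class and caller-supplied timestamps; an on-chain version would live in your contract language and use block timestamps, with `reset` restricted to the governance process you agreed in advance.

```python
from collections import deque


class CircuitBreaker:
    """Pause a module when outflow in a rolling window exceeds a threshold."""

    def __init__(self, max_outflow: float, window_s: float):
        self.max_outflow = max_outflow
        self.window_s = window_s
        self.tripped = False
        self._events = deque()  # (timestamp, amount) pairs, oldest first

    def allow(self, amount: float, now: float) -> bool:
        """Return True if the withdrawal may proceed; trip the breaker if not."""
        if self.tripped:
            return False
        # Drop withdrawals that have aged out of the window.
        while self._events and self._events[0][0] <= now - self.window_s:
            self._events.popleft()
        outflow = sum(a for _, a in self._events) + amount
        if outflow > self.max_outflow:
            self.tripped = True  # stays paused until governance resets it
            return False
        self._events.append((now, amount))
        return True

    def reset(self) -> None:
        """Resume operation; in practice this is a governed action."""
        self.tripped = False
        self._events.clear()
```

The breaker deliberately stays tripped after the window passes. Automatic recovery would let an attacker simply wait and resume, so resuming is left to the pause-and-restart process described above.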

Beginner modifications & progressions

- Starter: deploy minimal contracts; cap per-user risk; run a public bounty.

- Progression: layer formal verification on critical modules; diversify clients; add real-time anomaly detection.

Recommended frequency/duration/metrics

- Before each major release: audit and threat-model review.

- Continuous: monitoring for reorgs, finality delays, slashing events, gas spikes.

- Metrics: economic security (stake or work at risk), time to finality, fraction of network on top two clients, upgrade timelock adherence.

Safety, caveats, and common mistakes

- Security theater: audits without tests or follow-ups.

- Overpowered upgrade keys: a single key or small group that can change anything becomes a central point of failure.

- Ignoring social attack surfaces: phishing, governance proposals that sneak in traps.

Mini-plan (example)

- Step 1: Introduce a 48-hour timelock on upgrades governed by a multi-sig with doxxed signers and published policies.

- Step 2: Launch a bounty and integrate fuzzing into CI with coverage targets.
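The timelocked multi-sig in Step 1 combines two independent conditions: enough distinct signers have approved, and the delay has elapsed since the proposal was queued. The Python model below sketches that rule; `TimelockedMultisig`, `Proposal`, and the signer names are hypothetical, and a real deployment would rely on an audited contract rather than this toy.

```python
from dataclasses import dataclass, field

TIMELOCK_S = 48 * 60 * 60  # the 48-hour delay from the mini-plan


@dataclass
class Proposal:
    description: str
    queued_at: float
    approvals: set = field(default_factory=set)


class TimelockedMultisig:
    """Upgrades need `threshold` distinct signers AND an elapsed delay."""

    def __init__(self, signers: set[str], threshold: int,
                 delay_s: float = TIMELOCK_S):
        if not 0 < threshold <= len(signers):
            raise ValueError("threshold must be between 1 and len(signers)")
        self.signers = set(signers)
        self.threshold = threshold
        self.delay_s = delay_s

    def queue(self, description: str, now: float) -> Proposal:
        """Publish a proposal; the timelock starts counting from `now`."""
        return Proposal(description=description, queued_at=now)

    def approve(self, proposal: Proposal, signer: str) -> None:
        """Record one signer's approval; repeated approvals count once."""
        if signer not in self.signers:
            raise PermissionError(f"{signer} is not an authorized signer")
        proposal.approvals.add(signer)

    def can_execute(self, proposal: Proposal, now: float) -> bool:
        """True only when both the quorum and the delay are satisfied."""
        enough_signers = len(proposal.approvals) >= self.threshold
        delay_elapsed = now - proposal.queued_at >= self.delay_s
        return enough_signers and delay_elapsed
```

The delay is what gives users time to review a queued upgrade and exit if they disagree, which is why approvals alone never make a proposal executable here.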

Decentralization: making blockchains fair and durable

What it is and why it matters

Decentralization is about who gets to participate, how easy it is to verify, and how upgrades happen. A decentralized network resists censorship, earns community legitimacy, and reduces single points of failure.

Core benefits:

- Credible neutrality and long-term durability.

- Lower governance capture risk.

- More eyes on code and behavior, which can surface bugs and abuses.

Requirements/prerequisites

- Accessible node requirements: ordinary hardware can verify the chain.

- Transparent governance: documented processes and recorded votes.

- Distribution: broad token or validator distribution where relevant.

- Independent clients: multiple implementations validated against specs.

Low-cost alternative: Encourage light clients for users, home staking where supported, and community-run RPCs to reduce reliance on centralized gateways.

Step-by-step implementation

- Lower the verification bar

  - Keep base-layer data minimal enough that affordable hardware can verify it.

  - Support stateless or light clients where possible.

- Broaden participation

  - Reduce minimum stake and hardware needs for validators (if you control parameters).

  - Offer delegations that don’t create kingmakers; cap validator concentration with incentive curves if the protocol supports it.

- Diversify clients and operators

  - Provide grants and recognition for teams maintaining alternative clients.

  - Promote geographic and jurisdictional distribution to limit correlated risks.

- Design resilient governance

  - Publish a constitution or upgrade playbook.

  - Separate emergency powers from routine parameter changes.

  - Require open review periods and quorums.

Beginner modifications & progressions

- Starter: run at least one independently managed node; document your upgrade policy; encourage users to verify.

- Progression: seed grants for client diversity; incentivize small validators; fund community infrastructure.

Recommended frequency/duration/metrics

- Monthly: track validator concentration and client market share.

- Quarterly: review governance participation and proposal quality.

- Metrics: Nakamoto coefficient or similar concentration metrics, Herfindahl-Hirschman index on stake, nodes per autonomous system, share of non-custodial participants.
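Two of the metrics above are easy to compute from a stake table. The Nakamoto coefficient is the smallest number of entities whose combined share exceeds a chosen threshold, and the Herfindahl-Hirschman index (HHI) is the sum of squared shares. The sketch below assumes stake is already aggregated per independent operator, which is the hard part in practice; the one-third default threshold is a common choice for BFT-style chains, not a universal rule.

```python
def nakamoto_coefficient(stakes: dict[str, float],
                         threshold: float = 1 / 3) -> int:
    """Smallest number of operators whose combined stake exceeds `threshold`."""
    total = sum(stakes.values())
    if total <= 0:
        raise ValueError("total stake must be positive")
    running = 0.0
    # Greedily add the largest operators first.
    for count, stake in enumerate(sorted(stakes.values(), reverse=True), 1):
        running += stake
        if running > threshold * total:
            return count
    return len(stakes)


def hhi(stakes: dict[str, float]) -> float:
    """Herfindahl-Hirschman index on a 0..1 scale (1.0 = one operator holds all)."""
    total = sum(stakes.values())
    if total <= 0:
        raise ValueError("total stake must be positive")
    return sum((s / total) ** 2 for s in stakes.values())
```

A higher Nakamoto coefficient and a lower HHI both indicate wider dispersion. Publish the threshold and the way you grouped validators into operators alongside the numbers, since those choices drive the result.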

Safety, caveats, and common mistakes

- Node count vanity: thousands of nodes run by a handful of providers do not amount to meaningful dispersion.

- Governance capture: low quorum thresholds or rushed votes.

- Blocking verifiability: heavy state growth without light-client paths.

Mini-plan (example)

- Step 1: Publish a transparent, timelocked upgrade process with community review periods.

- Step 2: Sponsor a community program that covers hardware costs for new independent validators.

Pattern catalog: ways to bend (not break) the trilemma

Use these patterns to lift scalability while protecting the core of security and decentralization your project needs.

Rollups (optimistic and zero-knowledge)

What it is & benefits

Rollups execute transactions off-chain and post compressed transaction data, together with state commitments or proofs, to a base layer. Optimistic variants assume posted results are correct unless someone submits a fraud proof during a challenge window; zero-knowledge variants submit succinct validity proofs with each batch. Benefits include lower fees and higher throughput while inheriting base-layer security assumptions.
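The optimistic flow can be illustrated with a toy model: a sequencer applies a batch off-chain and posts a commitment to the resulting state, and any challenger who has the batch data can re-execute it and compare. This Python sketch makes heavy simplifications, all of them assumptions: state is a plain balance table, the commitment is a SHA-256 hash of its JSON encoding, and the "fraud proof" is full re-execution, whereas production rollups use Merkle-style state roots and more efficient dispute protocols.

```python
import hashlib
import json

Transfer = tuple[str, str, int]  # (sender, recipient, amount)


def state_root(balances: dict[str, int]) -> str:
    """Toy commitment: hash of the canonically ordered balance table."""
    encoded = json.dumps(balances, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


def apply_batch(balances: dict[str, int],
                batch: list[Transfer]) -> dict[str, int]:
    """Deterministically execute a batch; invalid transfers are skipped."""
    state = dict(balances)  # never mutate the caller's pre-state
    for sender, recipient, amount in batch:
        if amount <= 0 or state.get(sender, 0) < amount:
            continue
        state[sender] -= amount
        state[recipient] = state.get(recipient, 0) + amount
    return state


def is_fraudulent(pre_state: dict[str, int], batch: list[Transfer],
                  claimed_root: str) -> bool:
    """Challenger's check: re-execute the batch and compare commitments."""
    return state_root(apply_batch(pre_state, batch)) != claimed_root
```

The check only works if the challenger can obtain the batch, which is why data availability is part of the security argument and not just a cost line. A validity-proof rollup replaces the re-execution step with a succinct proof verified on the base layer.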

Requirements/prerequisites

- Familiarity with your rollup’s proof and bridge model.

- Integration with sequencers and bridges.

- Testing for finality and withdrawal timing.

Steps

- Pick a rollup with the programming model you need.

- Deploy contracts and bridge adapters; batch transactions.

- Expose UX that reflects finality/withdrawal periods or proof latencies.

- Monitor data availability and proof submission.

Beginner modifications

- Start with a managed rollup stack; use audited token bridges.

Metrics

- Effective cost per action, challenge/validity proof rates, L1 data-availability costs, withdrawal times.

Safety & pitfalls

- Bridge risk is real; treat bridges as critical infrastructure.

- Understand censorability when sequencers are centralized; consider escape hatches.

Mini-plan

- Integrate your dApp with a major rollup; route heavy actions there and settle summaries on the base layer.

Sharding and data availability sampling

What it is & benefits

Sharding splits data across many parts so no single node must process everything, while sampling lets nodes verify that data was published without downloading it all. The aim is to scale data throughput without requiring supercomputer-class nodes.
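The intuition behind sampling can be put in numbers. If the data is erasure-coded so that any half of the chunks is enough to rebuild it, an attacker must withhold at least half of the chunks to make it unrecoverable, so each uniformly random sample has at least a 50% chance of hitting a missing chunk. The sketch below computes the resulting detection confidence under those assumptions (independent uniform samples, a rate-1/2 code); other coding schemes change the withheld fraction, and the function names are illustrative.

```python
def detection_confidence(samples: int, withheld_fraction: float = 0.5) -> float:
    """Probability that at least one of `samples` random queries hits a
    withheld chunk, assuming independent uniform sampling."""
    if samples < 0:
        raise ValueError("samples must be non-negative")
    if not 0.0 < withheld_fraction <= 1.0:
        raise ValueError("withheld_fraction must be in (0, 1]")
    return 1.0 - (1.0 - withheld_fraction) ** samples


def samples_needed(target_confidence: float,
                   withheld_fraction: float = 0.5) -> int:
    """Smallest number of samples reaching the target detection confidence."""
    if not 0.0 <= target_confidence < 1.0:
        raise ValueError("target_confidence must be in [0, 1)")
    k = 0
    while detection_confidence(k, withheld_fraction) < target_confidence:
        k += 1
    return k
```

Under these assumptions ten samples already give better than 99.9% confidence, which is why a light node can check availability while downloading only a small part of the data.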

Requirements

- Client support for sharded data and sampling.

- Strong networking and anti-eclipse measures.

Steps

- Ensure your clients support the sharding roadmap and sampling.

- Update infrastructure and monitoring for multiple data segments.

- Test resilience to partial unavailability.

Beginner modifications

- If sharding is not available on your target chain, use data-availability layers that expose similar guarantees and post commitments to your settlement chain.

Metrics

- Data throughput, sampling success, and finality under stress.

Safety & pitfalls

- Avoid assumptions that every node has everything; think in terms of verifiable availability, not omniscience.

Mini-plan

- Prototype on a testnet with sampling enabled; measure light-node verification cost.

State/payment channels

What it is & benefits

Channels allow parties to transact off-chain with instant updates, settling occasionally on-chain. Great for bilateral, high-frequency interactions.

Requirements

- Channel libraries, watchtowers or monitoring services, and on-chain dispute resolution contracts.

Steps

- Open a channel with an on-chain transaction.

- Exchange signed updates off-chain.

- Close or re-balance periodically; dispute on-chain if needed.
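The dispute step is what keeps off-chain updates safe: each update carries an increasing nonce and both parties' signatures, and on-chain settlement honors the highest-nonce state that both signed. The toy model below shows that rule in Python. It uses HMAC tags as a stand-in for signatures purely so the sketch runs on the standard library; real channels use public-key signatures, so the settlement contract never sees secret keys. All names here are hypothetical.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelState:
    nonce: int      # increases with every off-chain update
    balance_a: int
    balance_b: int

    def encode(self) -> bytes:
        return f"{self.nonce}:{self.balance_a}:{self.balance_b}".encode()


def sign(key: bytes, state: ChannelState) -> bytes:
    """Stand-in for a signature (HMAC-SHA256; NOT how real channels sign)."""
    return hmac.new(key, state.encode(), hashlib.sha256).digest()


def verify(key: bytes, state: ChannelState, tag: bytes) -> bool:
    return hmac.compare_digest(sign(key, state), tag)


def settle(key_a: bytes, key_b: bytes, capacity: int,
           submissions: list[tuple[ChannelState, bytes, bytes]]) -> ChannelState:
    """Pick the final state: the highest nonce among submissions that are
    signed by both parties and conserve the channel's capacity."""
    valid = [
        state for state, tag_a, tag_b in submissions
        if verify(key_a, state, tag_a) and verify(key_b, state, tag_b)
        and state.balance_a >= 0 and state.balance_b >= 0
        and state.balance_a + state.balance_b == capacity
    ]
    if not valid:
        raise ValueError("no valid state submitted")
    return max(valid, key=lambda s: s.nonce)
```

If one party submits a stale state, the other party (or a watchtower acting for them) answers with a newer co-signed state before the dispute window closes. That is why an unmonitored channel is risky: the newer state only wins if someone actually submits it.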

Beginner modifications

- Use a gateway service for routing until you learn to manage channels yourself.

Metrics

- Liquidity utilization, failed route frequency, average settlement intervals.

Safety & pitfalls

- Keep channels monitored; unattended channels can be exploited.

Mini-plan

- Pilot a micro-payments feature via channels for a subset of users; settle daily.

Sidechains and app-chains

What it is & benefits

Independent chains tailored for a specific app or ecosystem, bridged to a larger base layer. They give parameter control, custom fee markets, and governance autonomy.

Requirements

- Validators, bridge contracts, monitoring, and an upgrade policy.

- Security budget to defend the chain’s consensus.

Steps

- Launch an app-chain with conservative parameters and a small validator set.

- Bridge assets with minimal trust assumptions you can support.

- Grow the set of validators and decentralize operations as adoption grows.

Beginner modifications

- Start as an L2 or on a shared security pool before moving to an independent chain.

Metrics

- Validator concentration, time to finality, bridge volume versus TVL.

Safety & pitfalls

- Don’t overstate security equivalence with your settlement chain; be precise.

Mini-plan

- Begin on a shared security framework; move to an app-chain only when governance and ops are mature.

Modular stacks (execution, settlement, and data availability split)

What it is & benefits

A modular approach separates execution (running transactions), settlement (dispute resolution/finality), and data availability (ensuring data was published). This enables specialization and scaling each layer independently.

Requirements

- Clear assumptions between layers and robust proofs.

- Tooling to post commitments and verify data availability.

Steps

- Choose an execution environment and a settlement layer with mature proof systems.

- Use a data-availability layer with sampling guarantees.

- Build monitoring that correlates events across layers.

Beginner modifications

- Start with a managed modular stack to avoid integrating proofs yourself.

Metrics

- Cross-layer latency, DA costs, proof verification time, escape-hatch functionality.

Safety & pitfalls

- Complexity increases cross-layer risk; document emergency exits carefully.

Mini-plan

- Stand up a test app using a modular DA provider and measure cost per kilobyte of posted data.

A decision framework you can run this quarter

- State your mission: Payments wallet? Trading venue? On-chain game? Community governance?

- Set SLOs: choose concrete targets for fee, finality, minimum validator dispersion, and upgrade transparency.

- Pick an architecture: rollup vs app-chain vs hybrid modular; write down the assumptions you inherit.

- Design failure modes: what you will pause, how you will upgrade, and how users can self-custody or exit.

- Instrument everything: dashboards for performance, security, and decentralization; publish them.

- Stage rollout: devnet → testnet with public incentives → canary mainnet → general availability.

- Review quarterly: revisit SLOs and risks as usage shifts.

Example

- A gaming studio prioritizes low fees and fast UX but still needs credible settlement. It ships gameplay state on an L2, stores assets in a compressed format with periodic on-chain commitments, and routes large marketplace transactions through finality-aware flows on the base layer. Governance upgrades use timelocks and public votes, with emergency pause powers limited by quorum and transparency.

Quick-start checklist

- Define SLOs for fee, finality, validator/client diversity, and governance transparency.

- Choose your architecture (L2, app-chain, modular).

- Write a one-page threat model and a one-page governance plan.

- Stand up monitoring for latency, reorgs/finality, and validator concentration.

- Cap TVL and per-user risk during the first month.

- Schedule an external audit and launch a bug bounty.

- Publish an upgrade playbook and a user exit strategy.

Troubleshooting & common pitfalls

- Fees spiking under load → Batch more aggressively, compress calldata, or migrate hot paths to an L2 or modular DA.

- Finality delays → Review client versions, network health, and consensus parameters; surface finality in the UI.

- Validator concentration creeping up → Adjust incentives, court new operators, or add caps if protocol supports them.

- Bridge anxiety → Diversify routes, monitor proof windows, and communicate withdrawal timelines clearly.

- Governance apathy → Lower proposal friction but keep quorums; add phased rollouts and open discussion windows.

- Security regressions after upgrades → Enforce timelocks and checks; use feature flags and circuit breakers.

How to measure progress (make it visible)

Scalability KPIs

- Median and 95th percentile confirmation time.

- Effective throughput under sustained load.

- Cost per user action and its variance.

- Failed or reverted transaction ratio.

Security KPIs

- Time to finality versus target.

- Share of network on top two clients.

- Bug bounty participation and time-to-patch.

- Incidents per quarter and blast radius.

Decentralization KPIs

- Validator/operator concentration (e.g., top-N share).

- Objective dispersion metrics (e.g., Nakamoto coefficient or Herfindahl-Hirschman index).

- Geographic and AS-level node distribution.

- Governance participation rates and proposal transparency.

Publish these KPIs publicly. Sunlight strengthens legitimacy.

A simple 4-week starter plan

Week 1 — Define and design

- Write your mission, SLOs, and threat model.

- Pick architecture and target networks.

- Draft upgrade and emergency procedures.

Week 2 — Prototype and instrument

- Deploy a minimal dApp on a testnet or L2.

- Add metrics: latency, cost, error rates, and validator/client diversity snapshots.

- Set TVL caps and per-user risk limits.

Week 3 — Adversarial testing

- Run load tests at and beyond target throughput.

- Conduct a red-team review: censorship scenarios, key leak rehearsal, governance capture thought experiment.

- Launch a private bug bounty (expands to public next month).

Week 4 — Canary launch

- Deploy to mainnet/rollup with caps and feature flags.

- Publish dashboards and upgrade timeline.

- Schedule an external audit; open a public forum for feedback.

FAQs

1) Is the trilemma a hard law or just a rule of thumb?

It’s a design heuristic, not a mathematical impossibility theorem. But in practice, resource limits, network physics, and incentive design create real tensions that behave like a triangle.

2) Can’t faster hardware solve scalability without hurting decentralization?

Better hardware helps, but if minimum requirements climb, fewer people can verify or validate, which reduces openness and resilience. Good designs scale verification as well as execution.

3) Are rollups as secure as the base layer?

They aim to inherit base-layer security through proofs and data availability. The fine print matters: bridge risk, sequencer censorship, and fraud-proof challenge windows or validity-proof latency all affect your risk profile.

4) Do more validators always mean more decentralization?

Not necessarily. What matters is independence and distribution. If many validators are controlled by a few operators or hosted in the same jurisdiction or cloud, the system is fragile.

5) How should I choose between optimistic and zero-knowledge rollups?

Consider withdrawal/finality needs, proof system maturity, workloads (general computation vs specific VMs), and operational complexity. Test both with your app’s real transactions.

6) Are sidechains “worse” than L2s?

They’re different. Sidechains have their own security and may not inherit guarantees from a base layer. They can be great for specific use cases if you accept and manage those assumptions.

7) What metrics best capture decentralization?

Use concentration metrics on stake/validators, client diversity, geographic dispersion, and the minimum coalition able to halt or censor. Publish the methodology to keep the debate honest.

8) How do I keep users safe during upgrades?

Use timelocks, staged rollouts, clear communications, and reversible switches. Separate emergency powers from routine parameter changes and document who holds what keys.

9) Won’t future sharding and data sampling make the trilemma irrelevant?

They help by scaling data availability and verification, but trade-offs remain: network complexity, honest majority assumptions, and governance choices still matter.

10) What if I’m building a tiny app with a small team?

Start on a mature L2, set strict TVL caps, use audited libraries, and focus on UX and observability. You can always evolve toward more autonomy as your needs crystallize.

11) How do I explain these trade-offs to non-technical stakeholders?

Use the triangle metaphor with clear SLOs: “We’re targeting sub-10s finality and <$0.10 fees while keeping any single operator below 10% control and publishing open audits.”

12) What’s the single most important habit for success?

Write assumptions down and measure them publicly. Openness about trade-offs builds trust and guides smart iteration.

Conclusion

The power of the trilemma isn’t in limiting you—it’s in forcing clarity. By defining the levels of scalability, security, and decentralization your product truly needs, and by choosing architectures that make those trade-offs explicit, you can build systems that feel fast, act safely, and remain legitimate over time. Treat the triangle as a compass, not a cage; measure relentlessly; and evolve in the open.

Call to action: Define your SLOs for all three pillars this week, and run your first trilemma review with your team.

