Imagine trying to learn a new language from a dictionary with every definition blacked out, or learning to identify animals from photos with no captions. It sounds impossible, yet it is close to how humans actually learn: we pick up most of what we know about the world through observation and interaction, not because someone labels every object we see. For years, however, Artificial Intelligence (AI) relied heavily on the opposite approach: supervised learning, where every piece of data requires a human-annotated label.

Enter self-supervised learning (SSL). This paradigm has emerged as one of the most transformative shifts in modern AI, powering the Large Language Models (LLMs) and computer vision systems that define today’s technology. By enabling machines to generate their own supervision signals from the data itself, SSL makes it possible to train on massive, unstructured datasets, such as web-scale collections of text and images, without the bottleneck of human annotation.

In this comprehensive guide, we will explore what self-supervised learning is, how it differs from other learning methods, the intricate mechanisms that make it work, and why it is considered the “dark matter” of intelligence that could bridge the gap to human-level AI.

Key Takeaways

- Data Autonomy: Self-supervised learning allows models to learn from vast amounts of unlabeled data by creating their own labels from the data structure (e.g., predicting the next word in a sentence).

- The “Cake” Analogy: AI pioneer Yann LeCun famously described SSL as the “cake” (the bulk of learning), while supervised learning is merely the “icing” and reinforcement learning the “cherry.”

- Two Main Flavors: SSL generally falls into two categories: generative (predicting missing parts of data) and contrastive (learning to distinguish between similar and dissimilar data points).

- Efficiency at Scale: While pre-training requires massive compute, the resulting “foundation models” are highly label-efficient: they can be fine-tuned for specific downstream tasks with comparatively few labeled examples.

- Universal Application: Originally dominant in Natural Language Processing (NLP), SSL is now revolutionizing Computer Vision, Robotics, and Audio processing.

What is Self-Supervised Learning?

At its core, self-supervised learning is a machine learning technique where a model learns representations of data by solving a “pretext task.” Instead of relying on external labels provided by humans (like tagging a picture as “cat” or “dog”), the system derives supervisory signals directly from the data itself.

The most common recipe is to hide part of the input data and ask the model to predict the missing piece. To predict the missing part accurately, the model has to capture the underlying structure, context, and semantic meaning of the data.
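The hide-and-predict idea can be sketched in a few lines. This is a minimal illustration rather than how any particular model is trained: the function name `make_pretext_pairs` and the `<HIDDEN>` placeholder are hypothetical, and a real system would operate on tokens or image patches instead of whole words.

```python
def make_pretext_pairs(sequence):
    """Turn one unlabeled sequence into (visible_context, hidden_target) pairs.

    Each position is hidden in turn; the hidden item becomes the label.
    """
    pairs = []
    for i, target in enumerate(sequence):
        visible = sequence[:i] + ["<HIDDEN>"] + sequence[i + 1:]
        pairs.append((visible, target))
    return pairs


# No human labels are involved: every target is copied from the input itself.
raw = ["William", "invaded", "England", "in", "1066"]
pairs = make_pretext_pairs(raw)
context, target = pairs[-1]
print(context, "->", target)
```

One unlabeled sentence of five words yields five training examples, which is why raw data goes so much further under SSL than under manual labeling.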

The “Student Practice” Analogy

Think of a student preparing for a history exam.

- Supervised Learning: A teacher asks, “When was the Battle of Hastings?” and immediately provides the answer: “1066.” The student memorizes the pair.

- Unsupervised Learning: The student is given a pile of history books and told to “find patterns.” They might stack books by color or size but might not learn the actual history.

- Self-Supervised Learning: The student takes a textbook, covers up the dates with their thumb, and tries to guess them based on the surrounding context (e.g., “William the Conqueror invaded…”). They check their answer by lifting their thumb. They are supervising themselves using the structure of the existing content.

Who This Is For (and Who It Isn’t)

This guide is designed for:

- Tech enthusiasts and students looking to understand the mechanics behind models like GPT-4 and Gemini.

- Business leaders and decision-makers evaluating AI strategies and data requirements.

- Data scientists and developers seeking a conceptual framework before diving into code.

This guide is not a full coding tutorial. We will focus on concepts, architectures, and strategic implications rather than production Python implementations or PyTorch syntax.

Supervised vs. Unsupervised vs. Self-Supervised Learning

To truly grasp the value of SSL, we must situate it within the broader machine learning landscape. While the lines can sometimes blur, the distinctions in data requirements are crucial.

1. Supervised Learning

- Mechanism: Inputs (x) are mapped to targets (y).

- Data Requirement: Requires a dataset of (x,y) pairs. Every image needs a label; every email needs a “spam/not spam” tag.

- Limitation: Labeling is expensive, slow, and unscalable. There are only so many humans available to label data, and human bias can creep into the labels.

2. Unsupervised Learning

- Mechanism: The model looks for hidden structures in input (x) without any specific target (y).

- Data Requirement: Raw data only.

- Common Tasks: Clustering (grouping similar customers) or dimensionality reduction.

- Limitation: While it uses cheap data, standard unsupervised methods (like K-means clustering) often fail to learn rich, high-level features useful for complex tasks like language understanding.

3. Self-Supervised Learning

- Mechanism: The model generates its own labels (y) from the input (x). It turns an unsupervised problem into a supervised one by framing it as a completion or discrimination task.

- Data Requirement: Raw, unlabeled data.

- Advantage: It combines the scalability of unsupervised learning (using raw data) with the powerful performance of supervised learning architectures (like deep neural networks).

The “LeCun Cake” Analogy

Yann LeCun, Chief AI Scientist at Meta and a Turing Award winner, popularized the “cake” analogy to explain the importance of SSL:

- The Cherry: Reinforcement Learning (the training signal is a sparse reward, so the model receives very little feedback per sample).

- The Icing: Supervised Learning (each sample provides a moderate amount of feedback: one human-supplied label).

- The Cake (Sponge): Self-Supervised Learning (each sample provides a large, dense amount of feedback, because the model predicts many parts of its own input). LeCun argues that most human learning is self-supervised: we learn how the world works (gravity, object permanence) just by observing, long before we are told the names of things.

How It Works: The Pretext Task

The magic of self-supervised learning lies in the Pretext Task. This is a task designed solely to force the model to learn good representations of the data. The model isn’t necessarily meant to be good at the pretext task forever; the goal is the knowledge gained while solving it.

Once the model is pre-trained on this pretext task, the knowledge is transferred to a Downstream Task (the actual problem you want to solve, like sentiment analysis or cancer detection), usually via a process called fine-tuning.

Common Pretext Tasks in Different Domains

In Natural Language Processing (NLP)

- Masked Language Modeling (MLM): The technique behind BERT. You take a sentence, mask about 15% of its tokens, and ask the model to fill in the blanks.

  - Input: “The quick [MASK] fox jumps over the [MASK] dog.”

  - Target: “brown”, “lazy”.

- Next Token Prediction (Causal Language Modeling): The technique behind GPT. The model sees a sequence of tokens and must predict the next one.

  - Input: “The quick brown…”

  - Target: “fox”.
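Both NLP pretext tasks amount to a small data-preparation step. The sketch below shows one way to build the training pairs, under some simplifying assumptions: tokens are whitespace-separated words rather than subword tokens, the helper names `mask_tokens` and `next_token_pairs` are hypothetical, and the masking is simpler than BERT's actual procedure, which also swaps some selected tokens for random or unchanged ones.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Masked language modeling: hide a random subset of tokens.

    Returns the corrupted input and a dict {position: original_token}
    holding the targets the model would be trained to recover.
    """
    rng = random.Random(seed)
    # Mask at least one token so short sentences still yield a training signal.
    n_mask = max(1, round(len(tokens) * mask_prob))
    positions = sorted(rng.sample(range(len(tokens)), n_mask))
    corrupted = list(tokens)
    targets = {}
    for pos in positions:
        targets[pos] = tokens[pos]
        corrupted[pos] = MASK
    return corrupted, targets


def next_token_pairs(tokens):
    """Causal language modeling: each prefix predicts the token after it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


sentence = "The quick brown fox jumps over the lazy dog".split()
corrupted, targets = mask_tokens(sentence)
prefix_pairs = next_token_pairs(sentence)
print(corrupted, targets)
print(prefix_pairs[2])  # the prefix "The quick brown" paired with "fox"
```

The key difference is visible in the data itself: masked modeling lets the model see context on both sides of the blank, while next-token prediction only ever sees what came before.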

In Computer Vision

- Jigsaw Puzzle: An image is chopped into a 3×3 grid of tiles. The tiles are shuffled, and the model must predict the correct arrangement. To do this, the model must understand that “heads” go above “bodies” and “sky” goes above “ground.”

- Colorization: The model is given a grayscale photo and must predict the color version. To succeed, it must recognize objects (e.g., knowing that grass is usually green and the sky is blue).

- Rotation Prediction: An image is rotated by 0, 90, 180, or 270 degrees. The model must predict the rotation angle. This forces the model to learn the canonical orientation of objects (e.g., trees grow upwards).

- Inpainting: A patch of the image is removed, and the model must generate the missing pixels based on the surroundings.
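Vision pretext tasks generate their labels the same way. As a minimal sketch, here is how rotation-prediction examples could be produced, assuming an image is represented as a plain list of pixel rows; `rotate90` and `make_rotation_example` are hypothetical helper names, and a real pipeline would use an image library instead.

```python
import random


def rotate90(grid):
    """Rotate a 2D grid (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def make_rotation_example(image, rng):
    """Pick one of four rotations; the rotation index is the free label."""
    k = rng.randrange(4)  # 0, 1, 2, 3 correspond to 0, 90, 180, 270 degrees
    rotated = image
    for _ in range(k):
        rotated = rotate90(rotated)
    return rotated, k


image = [[1, 2],
         [3, 4]]
rng = random.Random(0)
rotated, label = make_rotation_example(image, rng)
print(rotated, "was rotated by", label * 90, "degrees")
```

The label costs nothing to produce because the training code applied the rotation itself, so it already knows the answer the model is asked to predict.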

Core Architectures: Contrastive vs. Generative

As of 2026, most self-supervised learning methods fall into two dominant families, Contrastive Learning and Generative (or Masked) Learning, with joint-embedding predictive architectures emerging as a third.

1. Contrastive Learning (The Discriminative Approach)

Contrastive learning teaches the model to understand what makes two images (or data points) similar or different. It does not try to reconstruct pixel details; it tries to map the data into an “embedding space” where similar items sit close together and dissimilar items sit far apart.

- How it works:

  - Take an image of a dog.

  - Create two “augmented” versions of it (e.g., crop it, change the color, flip it). These are a positive pair.

  - Take a completely different image (e.g., a chair). This is a negative sample.

  - Train the neural network to pull the representations of the positive pair together and push the negative sample away.

- Famous Models: SimCLR (Simple Framework for Contrastive Learning of Visual Representations) and MoCo (Momentum Contrast).

- Why it matters: It is incredibly effective for classification and retrieval tasks because it teaches the model to be invariant to noise (lighting changes, cropping) while preserving semantic identity.
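The pull-together, push-apart objective is usually written as an InfoNCE-style loss, the family of objectives that SimCLR and MoCo build on. The sketch below is a toy version in plain Python: the two-dimensional embeddings are made-up values standing in for the output of a neural network, the `temperature` of 0.1 is an illustrative choice, and real implementations compute this over large batches on a GPU.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, temperature=0.1):
    """Cross-entropy over similarities, with the positive as the correct class."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    # Numerically stable log-sum-exp over all candidates.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum - logits[0]


# Made-up embeddings: two augmented views of a dog, and a chair.
dog_view_a = [0.9, 0.1]
dog_view_b = [0.8, 0.2]
chair = [0.1, 0.9]

good = info_nce(dog_view_a, dog_view_b, [chair])  # positive pair is close
bad = info_nce(dog_view_a, chair, [dog_view_b])   # roles swapped
print(good, bad)  # the first loss is much smaller than the second
```

Minimizing this loss rewards the network for placing the two dog views near each other and the chair far away, which is exactly the geometry described in the steps above.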

2. Generative / Masked Modeling (The Reconstructive Approach)

This approach dominated NLP first (BERT) and has since become a leading approach in Computer Vision as well. Instead of comparing images, the model tries to reconstruct the hidden parts of its input.

- How it works: A large portion of the data (e.g., 75% of an image’s patches in MAE) is masked out. The model uses an “encoder” to look at the visible parts and a “decoder” to predict the missing parts: raw pixels in MAE, discrete visual tokens in BEiT.

- Famous Models: MAE (Masked Autoencoders) and BEiT (Bidirectional Encoder representation from Image Transformers).

- Why it matters: This forces a deeper understanding of the scene. You cannot reconstruct a dog’s hidden tail unless you recognize the visible part is a dog and understand dog anatomy.
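The masking step itself is simple to sketch. The example below only selects which patches are hidden and does not include the encoder or decoder. The helper name `random_patch_mask` is hypothetical, and the 224x224 image with 16x16 patches is an assumed, commonly used Vision Transformer setting rather than something this guide prescribes.

```python
import random


def random_patch_mask(num_patches, mask_ratio=0.75, seed=0):
    """Split patch indices into (visible, masked) lists.

    The encoder would see only `visible`; the decoder would be asked to
    reconstruct the patches listed in `masked`.
    """
    rng = random.Random(seed)
    indices = list(range(num_patches))
    rng.shuffle(indices)
    n_masked = int(num_patches * mask_ratio)
    masked = sorted(indices[:n_masked])
    visible = sorted(indices[n_masked:])
    return visible, masked


# Assumed setup: a 224x224 image cut into 16x16 patches gives 14 * 14 = 196 patches.
visible, masked = random_patch_mask(num_patches=14 * 14)
print(len(visible), "visible patches,", len(masked), "masked patches")
```

Because the encoder processes only the visible quarter of the patches, a high mask ratio makes the task harder and reduces the encoder's workload at the same time.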

3. Joint Embedding Architectures

A newer frontier, championed by Yann LeCun, is the Joint Embedding Predictive Architecture (JEPA). This approach avoids generating pixels (which is computationally expensive and prone to focusing on irrelevant details) and avoids relying on negative pairs (which are tricky to engineer). Instead, it predicts the representation of the missing part in an abstract embedding space.
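To make "predicting in abstract space" concrete, here is a deliberately tiny sketch. Everything in it is an assumption made for illustration: the encoders are hand-written linear maps instead of trained networks, the names `latent_prediction_loss` and `ema_update` are hypothetical, and the slowly updated target encoder follows the style of published JEPA variants rather than anything specified in this guide.

```python
def linear(weights, x):
    """Apply a tiny linear 'network': one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def mse(pred, target):
    """Mean squared error between two equal-length vectors."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def latent_prediction_loss(context_patch, hidden_patch,
                           context_enc, target_enc, predictor):
    """Predict the hidden patch's embedding from the visible patch's embedding."""
    context_repr = linear(context_enc, context_patch)
    predicted_repr = linear(predictor, context_repr)
    target_repr = linear(target_enc, hidden_patch)  # treated as a fixed target
    return mse(predicted_repr, target_repr)


def ema_update(target_enc, context_enc, momentum=0.99):
    """Assumed training detail: the target encoder slowly tracks the context encoder."""
    return [[momentum * t + (1 - momentum) * c for t, c in zip(t_row, c_row)]
            for t_row, c_row in zip(target_enc, context_enc)]


# Toy encoders keep the first two "pixels" and ignore the last two.
encoder = [[1.0, 0.0, 0.0, 0.0],
           [0.0, 1.0, 0.0, 0.0]]
identity_predictor = [[1.0, 0.0],
                      [0.0, 1.0]]

context_patch = [0.2, 0.4, 0.6, 0.8]
hidden_patch = [0.2, 0.4, 0.1, 0.9]  # differs only in the ignored pixels

latent_loss = latent_prediction_loss(context_patch, hidden_patch,
                                     encoder, encoder, identity_predictor)
pixel_loss = mse(context_patch, hidden_patch)
print(latent_loss, pixel_loss)  # zero in latent space, nonzero in pixel space
```

The two patches differ only in details the encoder discards, so the latent loss is zero even though a pixel-level loss would still penalize the prediction. That is the intuition behind avoiding pixel generation.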

Benefits of Self-Supervised Learning

Why are tech giants like Google, Meta, and Microsoft investing billions into this specific paradigm? The return on investment comes from three strategic advantages.

1. Unlocking “Dark Data”

Commonly cited industry estimates suggest that 80-90% of the world’s data is unstructured and unlabeled. Supervised learning can use only the small fraction that is labeled; SSL unlocks the rest. It allows models to train on the entire corpus of Wikipedia, large public code collections such as those on GitHub, or millions of hours of YouTube video.

2. Transfer Learning and Few-Shot Capability

A model pre-trained via SSL learns a robust “world view.” When you need it to do a specific task (e.g., detecting tumors in X-rays), you only need to show it a handful of labeled examples to “fine-tune” it. This is crucial for industries where data is scarce or privacy is paramount (like healthcare).

3. Robustness and Generalization

Because SSL models learn from diverse, messy, real-world data rather than curated, clean datasets, they are often more robust to noise and variations. A supervised model trained only on sunny road images might fail in the rain. An SSL model trained on all road videos has likely “seen” rain and understands the continuity of the road regardless of weather.

Challenges and Pitfalls

Despite its promise, self-supervised learning is not a magic wand. It introduces complex engineering and ethical challenges.

1. The Computational Cost

SSL requires processing massive datasets. Training a frontier model like GPT-4 or a state-of-the-art vision transformer with SSL can require thousands of GPUs running for weeks or months. The energy consumption and carbon footprint are significant concerns.

2. Data Bias and Toxicity

Because SSL ingests raw data from the internet without human filtering, it ingests all the biases, stereotypes, and toxicity present in that data. If the internet contains gender bias in job descriptions, the self-supervised model will learn that bias as a “fact” of language structure.

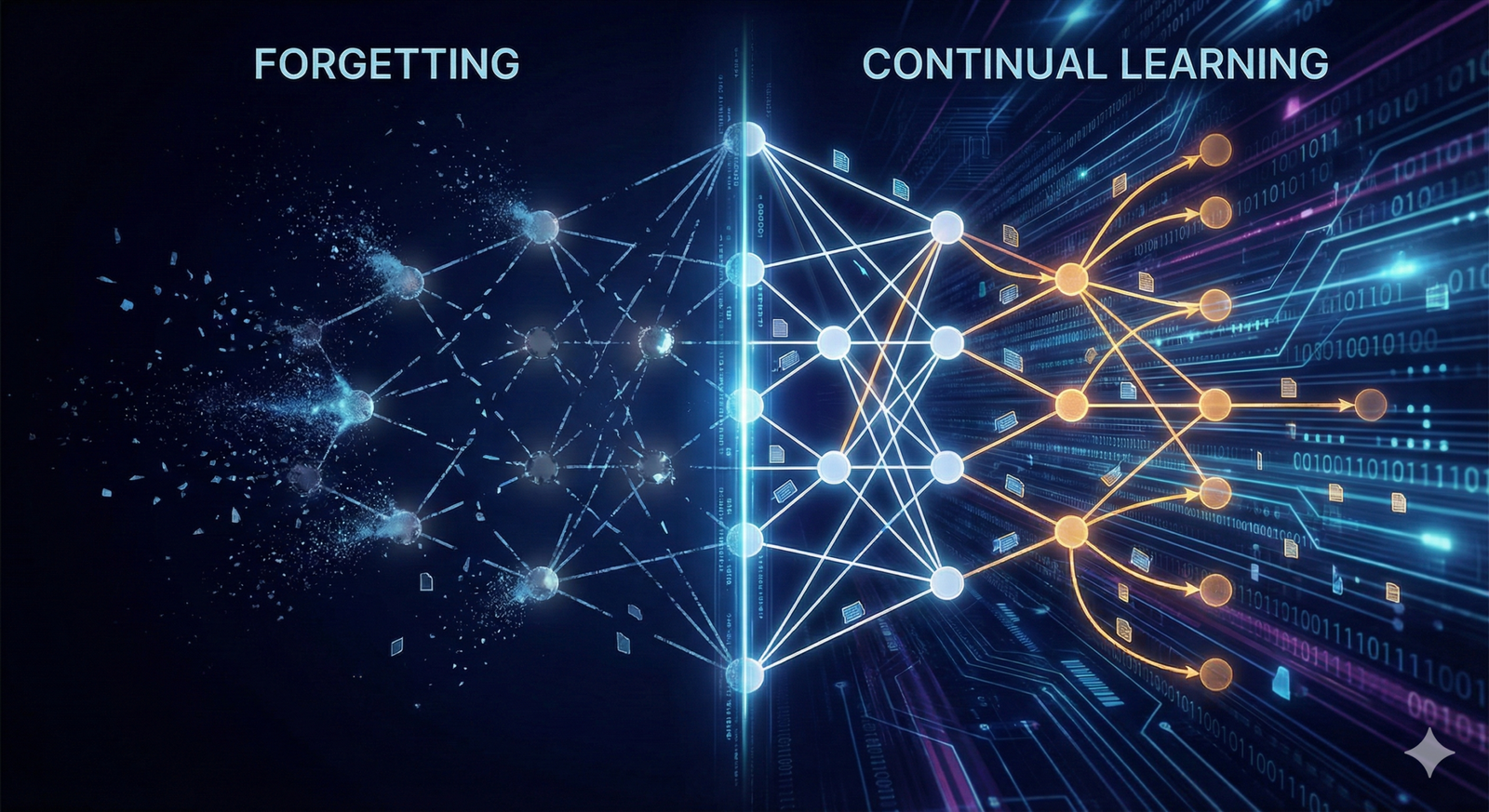

3. “Collapse” in Contrastive Learning

In contrastive and related joint-embedding methods, there is a risk of representation collapse (sometimes loosely called “mode collapse”), where the model cheats by mapping every input to the same representation, which trivially minimizes the distance between positive pairs. Researchers use techniques such as negative sampling, momentum encoders, or stop-gradient operations to prevent this.
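A simple way to watch for collapse during training is to check whether a batch of embeddings still varies across samples. The sketch below is one possible diagnostic, not a standard API: the function names and the `threshold` value are assumptions, and the embeddings are made-up numbers.

```python
import statistics


def per_dimension_std(embeddings):
    """Standard deviation of each embedding dimension across a batch."""
    dims = zip(*embeddings)  # transpose: one tuple of values per dimension
    return [statistics.pstdev(d) for d in dims]


def looks_collapsed(embeddings, threshold=1e-3):
    """Flag a batch whose embeddings barely vary in every dimension."""
    return all(s < threshold for s in per_dimension_std(embeddings))


healthy = [[0.9, 0.1], [0.1, 0.8], [-0.7, 0.3]]
collapsed = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]

print(looks_collapsed(healthy))    # False
print(looks_collapsed(collapsed))  # True
```

If every input maps to the same point, the positive pairs are trivially "close", but the representation carries no information, which is why a falling loss alone is not enough to trust.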

4. Evaluation Difficulty

In supervised learning, you know the model is improving if accuracy goes up. In SSL, “accuracy” on the pretext task (e.g., predicting the missing word) doesn’t always correlate perfectly with performance on the downstream task (e.g., answering questions). This makes tuning these models difficult.
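One common workaround is to freeze the pre-trained model and measure how well a very simple classifier performs on its embeddings, for example k-nearest neighbours. The sketch below assumes the embeddings have already been computed; the values, labels, and function names are made up for illustration.

```python
import math
from collections import Counter


def knn_predict(query, train_embeddings, train_labels, k=3):
    """Label a query by majority vote among its k nearest frozen embeddings."""
    distances = sorted(
        (math.dist(query, emb), label)
        for emb, label in zip(train_embeddings, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


def knn_accuracy(test_embeddings, test_labels, train_embeddings, train_labels, k=3):
    """Fraction of test embeddings whose k-NN prediction matches the true label."""
    correct = sum(
        knn_predict(q, train_embeddings, train_labels, k) == y
        for q, y in zip(test_embeddings, test_labels)
    )
    return correct / len(test_labels)


# Made-up frozen embeddings from a hypothetical pre-trained encoder.
train_emb = [[0.0, 0.0], [0.1, 0.2], [0.2, 0.1],
             [1.0, 1.0], [0.9, 1.1], [1.1, 0.9]]
train_lbl = ["cat", "cat", "cat", "dog", "dog", "dog"]
test_emb = [[0.1, 0.1], [1.0, 0.9]]
test_lbl = ["cat", "dog"]

accuracy = knn_accuracy(test_emb, test_lbl, train_emb, train_lbl)
print(accuracy)
```

Because the classifier has almost no capacity of its own, its accuracy mostly reflects the quality of the representation, which gives a more direct signal than the pretext-task loss.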

Real-World Examples and Applications

Self-supervised learning has moved out of the research lab and into the products we use daily.

Natural Language Processing (NLP)

- Translation and Search: Google Search uses models like BERT (trained via SSL) to understand the intent behind a query rather than just matching keywords.

- Code Generation: GitHub Copilot relies on models trained on billions of lines of public code, predicting the next tokens of code from the preceding context, which is a classic SSL task.

Computer Vision

- Medical Imaging: Hospitals often have thousands of scans but few radiologists to label them. SSL models can pre-train on the raw scans to learn the anatomy, requiring only a few labeled examples to learn to spot rare diseases.

- Content Moderation: Self-supervised vision models (such as Meta’s DINOv2) can serve as general-purpose feature extractors for flagging images and videos that violate policy, without needing a human to label every variation of a prohibited image.

Robotics

- Sensorimotor Learning: Robots collect massive amounts of data just by moving around. SSL allows them to predict the outcome of their movements (“If I move my arm this way, the camera view should shift that way”). If the prediction fails, they update their internal model, learning to navigate without explicit rewards.

Audio and Speech

- wav2vec: Meta’s wav2vec 2.0 uses SSL to learn speech representations from audio alone. This has enabled high-quality speech recognition for low-resource languages (languages with very little labeled training data).

The Future of SSL: As of 2026

The field is moving fast. As of early 2026, research is shifting away from pure text or image models toward Multimodal Self-Supervised Learning.

Multimodal Synergy

Current models are learning from video, audio, and text simultaneously. For example, a model might watch a YouTube video (visuals), listen to the narration (audio), and read the subtitles (text). The correlation between the word “dog,” the sound of barking, and the image of a dog provides a triangulation signal that is far richer than any single modality could provide.

The Rise of World Models

The ultimate goal, championed by researchers like Yann LeCun, is to build “World Models.” These are systems that learn the physics of the world (cause and effect) through SSL. Instead of just predicting the next word, an AI would predict the next state of the world in a video, understanding that if a glass is pushed off a table, it will fall and break. Proponents view this step as essential for achieving Artificial General Intelligence (AGI).

Related Topics to Explore

- Foundation Models: The large-scale models resulting from SSL training.

- Transfer Learning: The process of moving knowledge from the pretext task to the target task.

- Vision Transformers (ViT): The architecture that has largely replaced CNNs in modern SSL computer vision.

- Reinforcement Learning from Human Feedback (RLHF): The process often used after SSL to align the model with human values.

- Dimensionality Reduction: Classic unsupervised techniques like PCA and t-SNE.

Conclusion

Self-supervised learning represents a fundamental maturation of Artificial Intelligence. By moving away from the “spoon-feeding” of supervised labels and toward the “observation” of raw data, AI systems are becoming more autonomous, efficient, and capable of generalizing to new tasks.

While challenges in compute efficiency and bias remain, the trajectory is clear: the future of AI lies in learning without labels. For organizations and developers, the shift means focusing less on labeling massive datasets and more on curating high-quality, diverse, unstructured data streams that allow these powerful algorithms to teach themselves.

Next Steps: If you are looking to implement SSL, start by exploring the Hugging Face libraries for NLP (Transformers) or PyTorch Image Models (timm) for vision to see pre-trained SSL checkpoints in action.

FAQs

1. Is self-supervised learning the same as unsupervised learning? Technically, SSL is a subset of unsupervised learning because it uses unlabeled data. However, the industry distinguishes them because SSL uses the data to generate supervised-style signals (labels) internally, whereas traditional unsupervised learning (like clustering) does not use labels at all.

2. Does self-supervised learning eliminate the need for human labels entirely? Not exactly. It eliminates the need for massive labeled datasets for pre-training. However, for the final “fine-tuning” step where the model is specialized for a specific task (like legal contract review), a small set of high-quality human labels is still usually required.

3. What is the most famous example of self-supervised learning? BERT (Bidirectional Encoder Representations from Transformers) by Google and the GPT (Generative Pre-trained Transformer) series by OpenAI are the most famous examples. They reshaped the entire NLP landscape by learning from unlabeled text.

4. Can I use self-supervised learning on small datasets? SSL generally shines with massive datasets. On small datasets, supervised learning (with data augmentation) often performs better because SSL models need vast variety to learn robust features. However, you can use a model pre-trained via SSL on a large dataset and fine-tune it on your small dataset.

5. What is “contrastive learning”? Contrastive learning is a type of SSL where the model learns by comparing things. It pulls similar items (like two photos of the same cat) close together in its internal understanding and pushes dissimilar items (a cat and a car) apart.

6. Is SSL only for images and text? No. SSL is being applied to time-series data (for finance), graph data (for social networks), protein sequences (for biology), and audio waveforms.

7. Why is SSL called the “dark matter” of intelligence? The phrase comes from a 2021 Meta AI blog post by Yann LeCun and Ishan Misra. It refers to the idea that self-supervised learning accounts for the vast majority of the information we absorb. Just as dark matter makes up most of the universe’s mass but is invisible, background knowledge learned through self-supervision makes up the bulk of “intelligence,” supporting the visible sliver of supervised task performance.

8. What hardware do I need to train SSL models? Training an SSL model from scratch usually requires high-performance computing clusters (dozens or hundreds of GPUs). However, using or fine-tuning an existing SSL model can often be done on a single consumer-grade GPU or even a CPU.

9. How does SSL handle privacy? SSL does not guarantee privacy on its own, but it combines well with privacy-preserving techniques such as Federated Learning, where training happens on data that never leaves the device. Because SSL needs no human annotators, sensitive data (like phone typing history) does not have to be sent to a central labeling team, so it can remain private while still contributing to training.

10. What is a “pretext task”? A pretext task is the dummy assignment given to an AI to force it to learn. For example, “rotate this image and tell me the angle” is a pretext task. The AI doesn’t care about rotation angles; the goal is for it to learn what objects look like so it can determine the angle.

References

- LeCun, Y. (2022). A Path Towards Autonomous Machine Intelligence. OpenReview. (Foundational paper on World Models and JEPA architecture).

- Devlin, J., et al. (2018). BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. Google AI Language. (The seminal paper on Masked Language Modeling).

- Chen, T., et al. (2020). A Simple Framework for Contrastive Learning of Visual Representations (SimCLR). Google Research. (Key paper on Contrastive Learning).

- He, K., et al. (2022). Masked Autoencoders Are Scalable Vision Learners. Meta AI. (Seminal paper on Generative/Masked modeling for vision).

- Brown, T., et al. (2020). Language Models are Few-Shot Learners. OpenAI. (The GPT-3 paper demonstrating the scale of next-token prediction).

- Meta AI. (2023). DINOv2: Learning Robust Visual Features without Supervision. Meta AI Research Blog. https://ai.meta.com

- Radford, A., et al. (2021). Learning Transferable Visual Models From Natural Language Supervision (CLIP). OpenAI.

- Baevski, A., et al. (2020). wav2vec 2.0: A Framework for Self-Supervised Learning of Speech Representations. Facebook AI.

- Bengio, Y., LeCun, Y., & Hinton, G. (2021). Deep Learning for AI. Communications of the ACM.

- Hugging Face. (2024). Transformers Documentation: Task Summary. https://huggingface.co/docs/transformers (Official documentation for implementing SSL models).