Secure coding practices are the repeatable behaviors, design choices, and controls that prevent common software vulnerabilities from ever reaching production. In plain terms: you build security into how you plan, write, review, test, and deliver code. This tutorial gives you a clear, step-by-step path to make that happen in an engineering organization of any size. At a glance, the steps are: set a baseline; threat model; define secure requirements; validate and encode data; harden auth and sessions; enforce authorization; manage secrets; secure your supply chain; log safely; harden configuration; automate gates; and review and remediate quickly. Follow them and you’ll reduce risk, increase delivery speed, and pass audits with far less stress. Because this topic affects safety and compliance, treat the guidance as educational; for high-stakes decisions, consult qualified security professionals.

1. Set a Security Baseline and Shared Policy

A strong security program starts by deciding what “good” looks like and writing it down. Your baseline should define the minimum secure coding practices every service and library must meet before shipping. Begin with a short, human-readable policy: what standards you follow, who owns which decisions, which tools are mandatory, and what you will block at release time. The aim isn’t bureaucracy; it’s consistency. When everyone agrees on guardrails—password hashing approach, logging rules, dependency policies—you avoid ad-hoc choices that create risk. Treat the baseline as a living artifact tied to training, code review checklists, and CI rules. Keep it short enough to be memorable and specific enough to be testable. Finally, publish it where developers actually work (in the repo or monorepo docs) and make it easy to find and update.

How to do it

- Write a one-page baseline that links to deeper standards (e.g., input validation, auth, logging).

- Define ownership: a security lead per product area plus engineering managers as enforcers.

- Create a lightweight exception process with time-bound waivers and clear compensating controls.

- Map the baseline to tangible checks in CI (e.g., SAST must pass with no high findings).

- Incorporate the baseline into onboarding and quarterly training roadmaps.

Numbers & guardrails

- Training: aim for at least 4 hours of focused secure coding learning per engineer per cycle.

- Policy scope: cover at minimum data handling, secrets, authN/authZ, dependencies, logging, and CI gates.

- Exceptions: require a written risk statement and a planned remediation date before merge.
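
To make the exception rule checkable before merge, waivers can be stored as small structured records. This is a minimal sketch: the `Waiver` fields and the 90-day cap (`MAX_WAIVER_DAYS`) are hypothetical choices, not part of any standard, so substitute your own policy values.

```python
from dataclasses import dataclass
from datetime import date, timedelta

MAX_WAIVER_DAYS = 90  # hypothetical policy: waivers must expire within 90 days


@dataclass
class Waiver:
    finding_id: str
    risk_statement: str
    compensating_control: str
    remediation_date: date


def waiver_problems(w: Waiver, today: date) -> list[str]:
    """Return reasons this waiver should block the merge (empty list means OK)."""
    problems = []
    if not w.risk_statement.strip():
        problems.append("missing written risk statement")
    if not w.compensating_control.strip():
        problems.append("missing compensating control")
    if w.remediation_date <= today:
        problems.append("remediation date must be in the future")
    elif w.remediation_date > today + timedelta(days=MAX_WAIVER_DAYS):
        problems.append(f"waiver exceeds {MAX_WAIVER_DAYS} days")
    return problems
```

A CI step could load waiver files from the repo and fail the build whenever `waiver_problems` returns anything.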

Close by making the baseline visible in your developer workflow. When the standard is part of the build, security becomes default, not optional.

2. Threat Model Early and Often

Threat modeling is the structured way you anticipate how systems could be attacked before code is written. Done well, it answers three questions: what are you building, what can go wrong, and what will you do about it? Start with a simple diagram of data flows, trust boundaries, and external dependencies. Use well-known lenses such as STRIDE (Spoofing, Tampering, Repudiation, Information disclosure, Denial of service, Elevation of privilege) to systematically brainstorm threats. You don’t need a dedicated team to get value—thirty minutes at kickoff and at major changes often prevents weeks of rework later. Document the findings as actionable requirements (e.g., “enforce server-side authorization for report exports” rather than “consider privilege issues”). Revisit the model when you add new integrations, expose new endpoints, or handle sensitive data.

How to do it

- Draw a quick data flow diagram for the feature, highlighting trust boundaries and secrets.

- Run a STRIDE pass and capture top threats with proposed mitigations.

- Convert each mitigation into a trackable ticket with an acceptance test.

- Add a checklist item to PR templates asking authors to confirm whether the threat model needs updating.

- Keep models in the repo so developers can evolve them alongside code.

Numbers & guardrails

- Cadence: at least once per significant feature, and whenever a trust boundary changes.

- Outcomes: each session should yield 3–10 concrete mitigations tied to owners and tests.

- Timebox: aim for 30–60 minutes; longer sessions tend to drift without more value.
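
Because models live in the repo, their findings can be stored in a structured form and checked like code. This is a minimal sketch under assumed conventions: the `Mitigation` record is hypothetical, and the check simply encodes the guardrails above (3–10 mitigations per session, each with an owner and an acceptance test).

```python
from dataclasses import dataclass
from enum import Enum


class Stride(Enum):
    SPOOFING = "S"
    TAMPERING = "T"
    REPUDIATION = "R"
    INFO_DISCLOSURE = "I"
    DENIAL_OF_SERVICE = "D"
    ELEVATION_OF_PRIVILEGE = "E"


@dataclass
class Mitigation:
    threat: str
    category: Stride
    requirement: str           # actionable, e.g. "enforce server-side authz on export"
    owner: str = ""
    acceptance_test: str = ""  # name of the test that proves the mitigation works


def review_session(mitigations: list[Mitigation]) -> list[str]:
    """Flag gaps against the guardrails: 3-10 mitigations, each owned and tested."""
    issues = []
    if not 3 <= len(mitigations) <= 10:
        issues.append(f"expected 3-10 mitigations, got {len(mitigations)}")
    for m in mitigations:
        if not m.owner:
            issues.append(f"no owner: {m.requirement}")
        if not m.acceptance_test:
            issues.append(f"no acceptance test: {m.requirement}")
    return issues
```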

Threat modeling builds shared understanding and turns abstract risks into crisp tasks, which is exactly what teams need to ship safely.

3. Translate Security into Requirements and Architecture

Security is not a post-hoc test; it’s an architectural property you specify up front. Convert threats and policy into non-functional requirements: encryption at rest, least privilege, immutable infrastructure, and explicit ownership of keys and secrets. Document allowed patterns—such as token-based stateless sessions or centralized identity—and disallowed ones—like storing credentials in cookies. Make tradeoffs visible: where you accept additional latency for stronger encryption or choose a more restrictive design to limit data access. When you record these decisions in an architecture decision record, you create a durable contract the team can test. The payoff is predictability: features land faster because the hard choices are already made.

How to do it

- Write one-sentence security requirements per concern (e.g., “All PII is encrypted at rest and in transit”).

- Choose approved building blocks (identity provider, key vault, message broker, secrets library).

- Define trust boundaries explicitly and place controls at each one (tokens validated, mTLS enforced).

- Create an architecture decision record for each major security tradeoff with rationale and fallback plan.

- Provide reference implementations or “golden paths” for common patterns to reduce guesswork.

Numbers & guardrails

- Privilege: minimize service account scopes to only required actions; verify with least-privilege tests.

- Boundary count: keep the number of trust boundaries each request crosses intentionally small; flows with 3–5 well-defined boundaries are easier to defend than sprawling ones.

- Data classes: tag sensitive data fields and ensure handling rules exist for each class.
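
One way to make data classes enforceable is to attach a classification to each model field and fail a test when a field is untagged or its class has no handling rule. The class names, the `HANDLING_RULES` table, and the `Customer` model are hypothetical examples:

```python
from dataclasses import dataclass, field, fields
from enum import Enum


class DataClass(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    PII = "pii"
    SECRET = "secret"


# Handling rules per class (hypothetical); a missing entry is a policy gap.
HANDLING_RULES = {
    DataClass.PUBLIC: {"encrypt_at_rest": False, "loggable": True},
    DataClass.INTERNAL: {"encrypt_at_rest": True, "loggable": True},
    DataClass.PII: {"encrypt_at_rest": True, "loggable": False},
    DataClass.SECRET: {"encrypt_at_rest": True, "loggable": False},
}


def tagged(cls: DataClass, default: str = ""):
    """Declare a dataclass field together with its data classification."""
    return field(default=default, metadata={"data_class": cls})


@dataclass
class Customer:
    customer_id: str = tagged(DataClass.INTERNAL)
    email: str = tagged(DataClass.PII)
    display_name: str = tagged(DataClass.PUBLIC)


def unclassified_or_unruled(model) -> list[str]:
    """List fields with no classification, or whose class has no handling rule."""
    gaps = []
    for f in fields(model):
        cls = f.metadata.get("data_class")
        if cls is None or cls not in HANDLING_RULES:
            gaps.append(f.name)
    return gaps
```

Running `unclassified_or_unruled` over every model in a unit test turns a missing classification into a failing build rather than a review comment.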

When security becomes part of the architecture vocabulary, later steps—testing, reviews, and operations—become simpler and more reliable.

4. Validate Inputs and Encode Outputs

Most injection and XSS issues start with untrusted data crossing trust boundaries. Your default stance should be to distrust all inputs and to encode outputs for their destination. Validate early using allowlists (what is permitted) rather than denylists (what is forbidden). Constrain types, lengths, and formats; reject anything that doesn’t match, and sanitize only where you must accept rich input. On the output side, encode for the context (HTML, JavaScript, or URL) using the framework’s safe helpers rather than hand-rolled logic. SQL is the exception: don’t escape values into query strings; pass them as bound parameters. Treat client-side checks as helpful UX but never sufficient; enforce the same rules on the server. Finally, rate limit and throttle resource-intensive operations to protect them from abuse.

How to do it

- Use schema validators (e.g., JSON Schema, Joi, Zod) to define and enforce input contracts.

- Prefer parameterized queries or ORM bindings for database access to avoid injection.

- Encode outputs for the target sink: HTML templates, JSON serialization, CSV exports.

- Add server-side rate limits and size caps for uploads and expensive endpoints.

- Include fuzz and property-based tests that try malformed and boundary inputs.
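
The three core ideas (allowlist validation, parameterized queries, and context-specific output encoding) fit in a few lines of standard-library Python. This is a minimal sketch, not a substitute for the schema validators listed above; the username rule and the `users` table are assumptions for illustration:

```python
import html
import re
import sqlite3

# Allowlist (assumed rule): 3-32 letters, digits, underscores, dots, or hyphens.
USERNAME_RE = re.compile(r"[A-Za-z0-9_.-]{3,32}")


def validate_username(raw: str) -> str:
    """Reject anything outside the allowlist instead of trying to repair it."""
    if not USERNAME_RE.fullmatch(raw):
        raise ValueError("invalid username")
    return raw


def find_user(conn: sqlite3.Connection, username: str):
    # Bound parameter: the driver sends username as data, never as SQL text.
    cur = conn.execute("SELECT id, bio FROM users WHERE username = ?", (username,))
    return cur.fetchone()


def render_bio(bio: str) -> str:
    # Encode for the HTML sink at output time; quote=True also escapes ' and ".
    return "<p>" + html.escape(bio, quote=True) + "</p>"
```

The string `x' OR '1'='1` fails the allowlist, and even if it reached `find_user`, the placeholder would treat it as a literal value that matches no row.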

Numeric mini case

A file upload endpoint accepts images. Set limits: type (JPEG/PNG, identified from the file’s content rather than its extension), size (≤ 5 MB), dimensions (≤ 4,096×4,096), and rate (≤ 10 uploads per minute per user). Enforce them at the edge and again in the service. With these simple constraints, you close off a wide class of attacks (oversized payloads, unexpected formats, resource exhaustion) without harming legitimate use.
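
The mini case’s limits translate into a small service-side check. This sketch assumes the caller has already read the image’s width and height from its header (for example with an imaging library) before fully decoding it; it identifies the type from the file’s magic bytes rather than trusting the extension or `Content-Type`, and uses an in-memory sliding-window limiter that a multi-instance service would replace with a shared store:

```python
import time
from collections import defaultdict, deque
from typing import Optional

MAX_BYTES = 5 * 1024 * 1024      # 5 MB
MAX_DIM = 4096                   # pixels per side
MAX_UPLOADS_PER_MIN = 10

# File signatures: JPEG starts with FF D8 FF, PNG with its fixed 8-byte header.
MAGIC = {b"\xff\xd8\xff": "jpeg", b"\x89PNG\r\n\x1a\n": "png"}


def sniff_type(data: bytes) -> Optional[str]:
    for signature, kind in MAGIC.items():
        if data.startswith(signature):
            return kind
    return None


class SlidingWindowLimiter:
    """Allow at most `limit` events per user in any `window_s`-second window."""

    def __init__(self, limit: int, window_s: float = 60.0):
        self.limit = limit
        self.window_s = window_s
        self.events = defaultdict(deque)  # user_id -> recent timestamps

    def allow(self, user_id: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        q = self.events[user_id]
        while q and now - q[0] >= self.window_s:
            q.popleft()  # forget events that fell out of the window
        if len(q) >= self.limit:
            return False
        q.append(now)
        return True


limiter = SlidingWindowLimiter(MAX_UPLOADS_PER_MIN)


def check_upload(user_id: str, data: bytes, width: int, height: int,
                 now: Optional[float] = None) -> str:
    """Return 'ok' or the first rule the upload violates."""
    if len(data) > MAX_BYTES:
        return "too large"
    if sniff_type(data) is None:
        return "unsupported type"
    if width > MAX_DIM or height > MAX_DIM:
        return "dimensions too large"
    if not limiter.allow(user_id, now):
        return "rate limited"
    return "ok"
```

In this ordering only uploads that pass validation count toward the rate limit; counting every attempt is an equally defensible choice if you want to throttle probing as well.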

When you normalize and constrain inputs while encoding outputs by context, you remove the fuel that powers common exploits.

5. Harden Authentication and Session Management

Authentication and session handling are frequent sources of severe issues, so standardize rather than invent. Use a well-maintained identity provider for login, federated identity, and multi-factor authentication (MFA). For passwords, employ modern password hashing (e.g., Argon2id, scrypt, or bcrypt) with parameters tuned so a single verification takes roughly 100–250 milliseconds on your server hardware. Session tokens should be unguessable, short-lived, and bound to device/browser context where possible. Set secure cookie attributes (Secure, HttpOnly, SameSite) and rotate sessions after privilege changes. Avoid long-lived static API keys in favor of short-lived tokens with refresh flows. Finally, instrument suspicious sign-in behaviors—sudden location changes, impossible travel, repeated MFA failures—and respond automatically.

How to do it

- Centralize login via an identity provider; enable MFA and step-up auth for sensitive actions.

- Use modern password hashing with calibrated cost; never store plaintext or reversible passwords.

- Set cookie flags correctly and enforce short token lifetimes with rotation.

- Implement device binding or sender-constrained tokens (e.g., mTLS-bound or DPoP tokens) where supported to reduce token replay risk.

- Monitor auth telemetry and trigger challenges or temporary lockouts on anomalous patterns.
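
Where a service manages its own session cookie instead of delegating to a framework, the cookie flags and the rotate-on-privilege-change rule can be sketched with the standard library. The in-memory `SessionStore` is hypothetical; a real deployment would use a shared, server-side store:

```python
import secrets
from http.cookies import SimpleCookie

SESSION_TTL_S = 12 * 3600  # interactive sessions <= 12 hours


class SessionStore:
    def __init__(self):
        self._data = {}  # session_id -> session attributes

    def create(self, user_id: str, role: str) -> str:
        sid = secrets.token_urlsafe(32)  # 32 random bytes from a CSPRNG
        self._data[sid] = {"user_id": user_id, "role": role}
        return sid

    def rotate(self, old_sid: str, **changes) -> str:
        """Issue a new ID after a privilege change and invalidate the old one."""
        attrs = self._data.pop(old_sid)
        attrs.update(changes)
        new_sid = secrets.token_urlsafe(32)
        self._data[new_sid] = attrs
        return new_sid

    def get(self, sid: str):
        return self._data.get(sid)


def session_cookie_header(sid: str) -> str:
    """Build the Set-Cookie value with the recommended attributes."""
    cookie = SimpleCookie()
    cookie["session"] = sid
    morsel = cookie["session"]
    morsel["secure"] = True      # only sent over HTTPS
    morsel["httponly"] = True    # not readable from JavaScript
    morsel["samesite"] = "Lax"   # withheld on most cross-site requests
    morsel["path"] = "/"
    morsel["max-age"] = SESSION_TTL_S
    return morsel.OutputString()
```

Calling `rotate` whenever a user’s role or authentication level changes defeats session fixation, because any session ID planted before the change stops working.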

Numbers & guardrails

- Password hashing: target a 100–250 ms verification window; adjust cost as hardware evolves.

- Session lifetime: interactive sessions ≤ 12 hours; sensitive admin sessions much shorter.

- Lockouts: temporary user lock after 5–10 failed attempts, with incremental backoff.
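
Argon2id is not in Python’s standard library, so this sketch swaps in scrypt via `hashlib.scrypt` (available when Python is built against OpenSSL 1.1 or newer) to illustrate calibration: it doubles the cost parameter `n` until one hash meets the time target on the machine running it. The `scrypt$n$salt$hash` storage format and the fixed `r=8, p=1` are assumptions; in production, prefer a maintained password-hashing library or your identity provider:

```python
import base64
import hashlib
import hmac
import os
import time

R, P = 8, 1                   # scrypt block size and parallelism (common choice)
MAXMEM = 256 * 1024 * 1024    # memory use is roughly 128 * R * n bytes


def _scrypt(password: str, salt: bytes, n: int) -> bytes:
    return hashlib.scrypt(password.encode(), salt=salt, n=n, r=R, p=P,
                          maxmem=MAXMEM, dklen=32)


def calibrate_n(target_ms: float = 100.0, max_log2_n: int = 17) -> int:
    """Smallest power-of-two n whose single hash takes at least target_ms here."""
    salt = os.urandom(16)
    for log2_n in range(10, max_log2_n + 1):
        n = 1 << log2_n
        start = time.perf_counter()
        _scrypt("calibration-input", salt, n)
        if (time.perf_counter() - start) * 1000 >= target_ms:
            return n
    return 1 << max_log2_n


def hash_password(password: str, n: int) -> str:
    salt = os.urandom(16)  # fresh random salt per password
    digest = _scrypt(password, salt, n)
    salt_b64 = base64.b64encode(salt).decode()
    digest_b64 = base64.b64encode(digest).decode()
    return f"scrypt${n}${salt_b64}${digest_b64}"


def verify_password(password: str, stored: str) -> bool:
    _, n, salt_b64, digest_b64 = stored.split("$")
    candidate = _scrypt(password, base64.b64decode(salt_b64), int(n))
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(candidate, base64.b64decode(digest_b64))
```

You would run `calibrate_n()` on production-class hardware and store the result; because each hash records its own `n`, you can raise the cost later and re-hash a password on the user’s next successful login.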

Treat identity as a platform capability, not a per-app task, and you’ll collapse a major risk area into a manageable service.

6. Enforce Authorization with Least Privilege

Authorization determines what an authenticated user or service can do. Implement it centrally and consistently, using role-based access control (RBAC) for coarse-grained decisions and attribute-based access control (ABAC) where you need fine-grained ones. The key rule is to enforce on the server; never rely on client state alone. Build a policy engine or use an existing one to avoid duplicating logic across services. Deny by default, and log explicit reasons for access denials without leaking sensitive details. Test authorization like any feature: unit tests for policy outcomes, integration tests around trust boundaries, and regression tests for privilege escalation paths. Pair authorization checks with data-level filtering (for example, scoping every query to the caller’s tenant or ownership) to prevent insecure direct object references and confused-deputy problems.

How to do it

- Centralize authorization decisions with a policy layer or service; expose a simple “can X do Y to Z?” API.

- Default to deny; grant narrowly scoped permissions and review them regularly.

- Test permission edges (e.g., ownership changes, role revocation, expired sessions).

- Use context (tenant, region, device posture) for step-up checks when risk is higher.

- Log policy decisions with correlation IDs to support audit and forensics.
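
A central “can X do Y to Z?” check can start as a single deny-by-default function. This sketch, with hypothetical action names and an assumed 15-minute MFA freshness window, combines a tenant check, a grant check, and a context-based step-up, and logs each decision with its correlation ID:

```python
import json
import logging
from dataclasses import dataclass

log = logging.getLogger("authz")

STEP_UP_ACTIONS = {"billing:update", "user:delete"}  # hypothetical high-risk actions
MAX_MFA_AGE_S = 15 * 60                               # assumed freshness window


@dataclass(frozen=True)
class Request:
    user_id: str
    user_tenant: str
    resource_tenant: str
    action: str
    granted_actions: frozenset  # resolved from the user's roles elsewhere
    mfa_age_s: float            # seconds since the user last completed MFA
    correlation_id: str


def decide(req: Request) -> str:
    """Return 'allow', 'deny', or 'step_up'. Anything unmatched is denied."""
    if req.user_tenant != req.resource_tenant:
        decision, reason = "deny", "cross_tenant"
    elif req.action not in req.granted_actions:
        decision, reason = "deny", "no_grant"
    elif req.action in STEP_UP_ACTIONS and req.mfa_age_s > MAX_MFA_AGE_S:
        decision, reason = "step_up", "stale_mfa"
    else:
        decision, reason = "allow", "grant"
    # Record who, what, and why with the correlation ID; no payload data.
    log.info(json.dumps({"event": "authz_decision", "decision": decision,
                         "reason": reason, "user": req.user_id,
                         "action": req.action, "correlation_id": req.correlation_id}))
    return decision
```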

Numeric mini case

A reporting service exposes “download CSV”. Define permissions:

- viewer: can view the dashboard but not export.

- analyst: can export non-sensitive reports.

- admin: can export all reports.

Enforce server-side checks before generating the export. In tests, simulate role changes mid-session and verify that access is revoked within 60 seconds.
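
The export rules can be expressed as a role table consulted on every request. Looking the role up from the authoritative store each time, rather than trusting a role cached in the session, is one way to make revocation take effect well inside 60 seconds; a cache with a short TTL is another. `RoleStore` and `export_report` are hypothetical names:

```python
import csv
import io

# Permission table from the mini case; unknown roles get an empty set (deny).
EXPORT_RULES = {
    "viewer": {"view"},
    "analyst": {"view", "export:non_sensitive"},
    "admin": {"view", "export:non_sensitive", "export:sensitive"},
}


class Forbidden(Exception):
    pass


class RoleStore:
    """Hypothetical stand-in for the authoritative role source (IdP, database)."""

    def __init__(self):
        self.roles = {}

    def role_of(self, user_id: str):
        return self.roles.get(user_id)


def export_report(store: RoleStore, user_id: str, report: dict) -> str:
    role = store.role_of(user_id)  # fresh lookup per request
    needed = "export:sensitive" if report["sensitive"] else "export:non_sensitive"
    if needed not in EXPORT_RULES.get(role, set()):
        raise Forbidden(f"{needed} denied for role {role!r}")
    # Authorization passed; only now do the export work.
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["id", "total"])
    writer.writerows([row["id"], row["total"]] for row in report["rows"])
    return buf.getvalue()
```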

Clarity in authorization models eliminates fragile one-off checks and shuts down escalation routes.

7. Manage Secrets Safely

Secrets—API keys, database passwords, tokens—do not belong in source code, config files, or chat threads. Store them in a dedicated secrets manager and fetch them at runtime with least privilege. Rotate secrets on a defined cadence and on every suspected exposure. Use per-environment, per-service secrets so a single leak doesn’t compromise everything. Build detection into your workflow: pre-commit hooks and CI checks that scan for accidental secrets in code and history. When a secret is exposed, treat rotation as an incident with a checklist: revoke, reissue, update consumers, verify, and document. Finally, prefer workload identity (short-lived, automatically rotated credentials) over long-lived static secrets wherever your platform supports it.

How to do it

- Use a secrets manager and reference secrets via environment variables or secure mounts.

- Grant narrow read permissions to services; prohibit humans from reading production secrets by default.

- Automate rotation and notify owners when rotations fail or drift.

- Add secret scanning to pre-commit and CI to catch leaks before merge.

- Maintain an emergency rotation runbook with owners and on-call procedures.
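
At runtime, a service only needs to read what the platform mounts or injects. This sketch assumes the common (but not universal) convention that `NAME_FILE` points at a mounted secret file and `NAME` holds the value directly; `DB_PASSWORD` in the usage note is a hypothetical name:

```python
import os
from pathlib import Path


class MissingSecret(RuntimeError):
    pass


def load_secret(name: str) -> str:
    """Prefer a mounted file (NAME_FILE), fall back to an env var (NAME); fail closed."""
    file_path = os.environ.get(f"{name}_FILE")
    if file_path:
        value = Path(file_path).read_text(encoding="utf-8").strip()
    else:
        value = os.environ.get(name, "")
    if not value:
        # Name the secret, never its value, in errors and logs.
        raise MissingSecret(f"secret {name} is not configured")
    return value
```

A service would call `load_secret("DB_PASSWORD")` at startup and refuse to start if it raises, rather than falling back to a default credential.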

Numbers & guardrails

- Rotation: set intervals by risk, rotating critical tokens more often than low-risk keys.

- Blast radius: scope secrets per service and per environment to cap exposure.

- Scanning: block merges if any high-confidence secret signature is detected.
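
Dedicated scanners ship hundreds of tuned rules, but the blocking logic is simple to illustrate. This sketch uses three well-known high-confidence signatures (AWS access key IDs, PEM private key headers, and classic GitHub personal access tokens) and returns a nonzero exit code when anything matches; treat it as an illustration, not a substitute for a maintained tool:

```python
import re

# A few well-known, high-confidence signatures. Real scanners ship many more
# rules plus entropy and context checks.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC |DSA |OPENSSH )?PRIVATE KEY-----"),
    "github_classic_pat": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
}


def scan_text(path: str, text: str) -> list[tuple[str, int, str]]:
    """Return (path, line_number, rule) per match; the secret itself is never echoed."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                hits.append((path, lineno, rule))
    return hits


def main(paths: list[str]) -> int:
    """Scan files and return an exit code: nonzero blocks the commit or merge."""
    hits = []
    for p in paths:
        with open(p, encoding="utf-8", errors="ignore") as fh:
            hits.extend(scan_text(p, fh.read()))
    for path, lineno, rule in hits:
        print(f"{path}:{lineno}: possible {rule}; remove it and rotate the credential")
    return 1 if hits else 0
```

A pre-commit hook script would call `sys.exit(main(sys.argv[1:]))` with the staged file paths.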

When secrets are invisible in code and easy to rotate, accidental leaks become survivable rather than catastrophic.

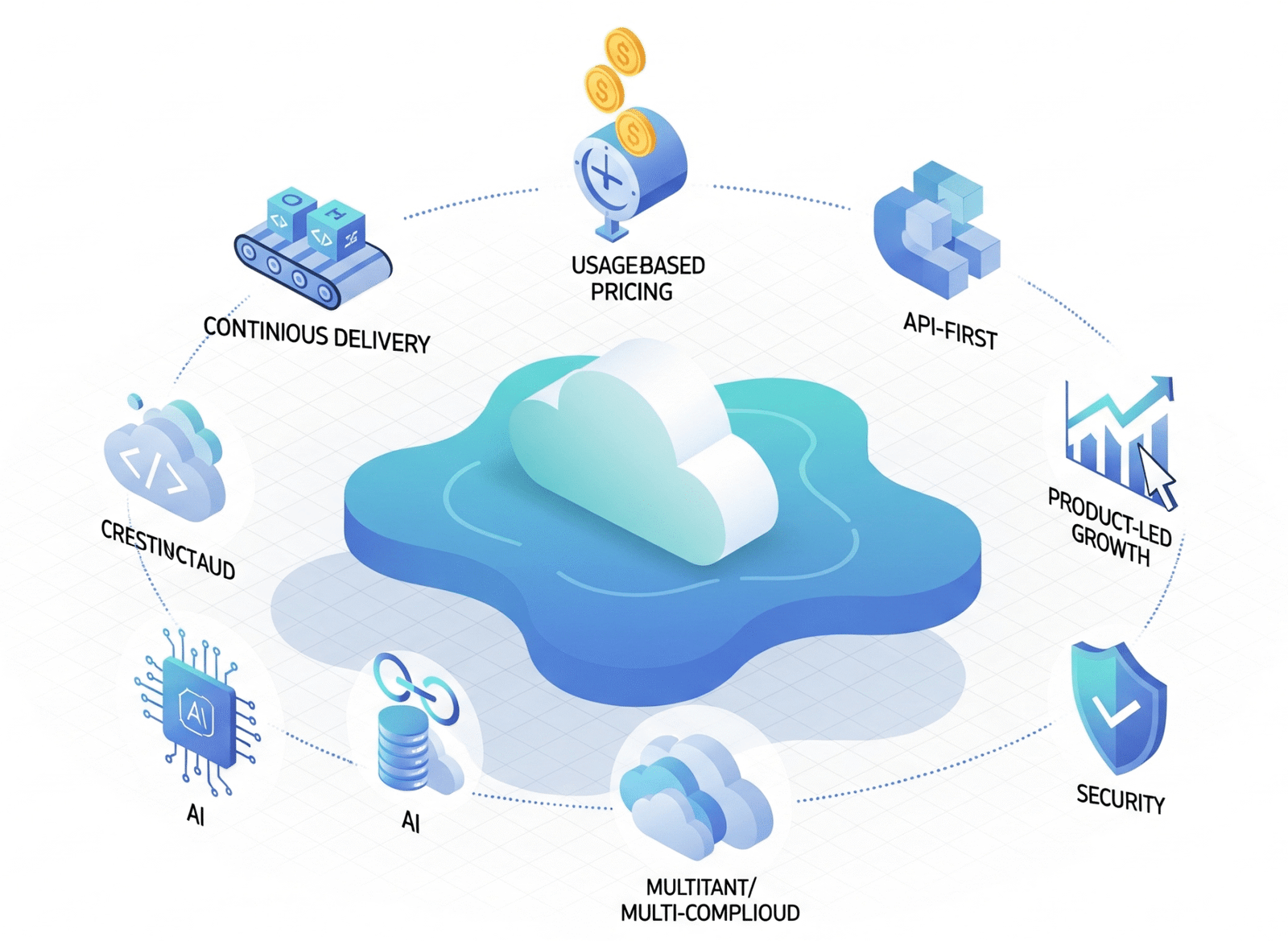

8. Secure Your Software Supply Chain

Modern applications often rely on hundreds or thousands of transitive dependencies and external build steps. Control this by maintaining a software bill of materials (SBOM) and scanning dependencies for known vulnerabilities. Pin exact versions, and pin container images and other fetched artifacts to content digests where possible, to keep builds reproducible. Verify the integrity of artifacts with signatures, and require provenance for critical components. Lock down CI/CD: protect runners, isolate secrets, and require review on pipeline changes. Treat dependency updates as a routine, automated process rather than a quarterly scramble. For internal packages, apply the same scrutiny: signed releases, clear ownership, and documented support levels. The goal is not zero dependencies, but transparent, verifiable ones.

How to do it

- Generate an SBOM during builds and store it with artifacts.

- Run software composition analysis (SCA) on every change; fail builds on critical issues.

- Pin dependency versions; prefer checksums or signature verification for fetched artifacts.

- Require code review for pipeline definitions and restrict who can modify them.

- Automate routine upgrades with bots and weekly merge windows.
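
Checksum pinning for a fetched artifact reduces to streaming the file through SHA-256 and comparing the result with the digest recorded when the version was pinned. A minimal sketch; the pinned digest would come from your lockfile or manifest, and signature verification is a separate, stronger control not shown here:

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 16) -> str:
    """Hex SHA-256 of a file, read in chunks so large artifacts stay out of memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, pinned_sha256: str) -> None:
    """Raise if the artifact does not match the digest recorded when it was pinned."""
    actual = sha256_file(path)
    if actual != pinned_sha256.strip().lower():
        raise ValueError(f"{path.name}: SHA-256 mismatch, refusing to use artifact")
```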

Numeric mini case

Adopt gating rules:

- Critical CVEs: block merges until fixed or mitigated.

- High CVEs: require fix or explicit waiver with compensating control.

- Transitive updates: auto-merge patch-level updates that pass tests and change fewer than 200 lines.
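
The gating rules can be encoded as pure functions that a CI job calls with scanner output. The `Finding` shape, the waiver-ticket field, and the numeric `MAJOR.MINOR.PATCH` parsing (no pre-release tags) are assumptions layered on the rules above:

```python
from dataclasses import dataclass


@dataclass
class Finding:
    cve: str
    severity: str            # "critical" | "high" | "medium" | "low"
    mitigated: bool = False  # fix applied or compensating control recorded
    waiver_ticket: str = ""  # required for unresolved highs


def merge_gate(findings: list[Finding]) -> tuple[bool, list[str]]:
    """Return (allowed, reasons). Criticals block; highs need a fix or a waiver."""
    reasons = []
    for f in findings:
        if f.mitigated:
            continue
        if f.severity == "critical":
            reasons.append(f"{f.cve}: critical, fix or mitigate before merge")
        elif f.severity == "high" and not f.waiver_ticket:
            reasons.append(f"{f.cve}: high, needs fix or waiver with compensating control")
    return (not reasons, reasons)


def is_patch_bump(old: str, new: str) -> bool:
    """True when only the patch component of MAJOR.MINOR.PATCH increases."""
    o, n = [tuple(int(x) for x in v.split(".")) for v in (old, new)]
    return o[:2] == n[:2] and n[2] > o[2]


def can_auto_merge(old: str, new: str, tests_passed: bool, lines_changed: int) -> bool:
    return is_patch_bump(old, new) and tests_passed and lines_changed < 200
```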

Visible supply chain metadata and consistent gates transform “dependency risk” from a guess into a managed process.

9. Log Safely, Handle Errors Wisely, and Monitor What Matters

Logging and error handling should illuminate behavior without exposing secrets or personal data. Decide which events matter—auth changes, permission denials, configuration changes, and sensitive data access—and log them with consistent structure and correlation IDs. Avoid logging secrets, tokens, or full payloads; redact or hash where necessary. Surface user-facing errors that are helpful but not revealing; detailed traces belong to internal logs. Pair logs with alerts: rate-limited failures, spikes in denials, or unusual data export volumes should notify the right team. Retain logs long enough to investigate incidents while respecting privacy regulations and storage costs.

How to do it

- Define a minimal event taxonomy and a consistent JSON log format across services.

- Redact secrets and personal data; log references, not raw sensitive content.

- Separate user messages from developer diagnostics; show friendly, generic errors to users.

- Correlate requests across services using IDs passed via headers.

- Wire alerts to on-call rotations with runbook links and escalation paths.
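
With Python’s standard `logging` module, a JSON formatter plus a redaction step covers structured events, redaction, and correlation IDs. The field names, the `SENSITIVE_KEYS` set, and passing context through `extra={"ctx": ...}` are choices made for this sketch, not a standard:

```python
import json
import logging

SENSITIVE_KEYS = {"password", "token", "authorization", "api_key", "ssn"}


def redact(data: dict) -> dict:
    """Replace sensitive values (including nested ones) with a fixed marker."""
    clean = {}
    for key, value in data.items():
        if key.lower() in SENSITIVE_KEYS:
            clean[key] = "[REDACTED]"
        elif isinstance(value, dict):
            clean[key] = redact(value)
        else:
            clean[key] = value
    return clean


class JsonFormatter(logging.Formatter):
    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "ts": self.formatTime(record),
            "level": record.levelname,
            "event": record.getMessage(),
            "correlation_id": getattr(record, "correlation_id", None),
        }
        entry.update(redact(getattr(record, "ctx", {})))
        return json.dumps(entry)


def get_logger(name: str) -> logging.Logger:
    logger = logging.getLogger(name)
    if not logger.handlers:  # avoid duplicate handlers on repeated calls
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
    return logger
```

A call such as `get_logger("auth").info("login_failed", extra={"correlation_id": "req-123", "ctx": {"user_id": "u1", "password": "..."}})` emits one JSON line with the password replaced by `[REDACTED]`.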

Numbers & guardrails

- Retention: choose a retention period that balances forensics needs with regulatory limits.

- Rate limits: cap repeated identical error alerts to avoid alert fatigue.

- Sampling: use sampling for high-volume debug logs while keeping security events at full fidelity.

Thoughtful logging and error handling shorten incident timelines and reduce the chance of leaking sensitive information during failures.

10. Harden Configuration and Security Headers

Secure defaults reduce the chance of accidental exposure. Start with infrastructure: least-privilege IAM roles, network segmentation, and encrypted storage. At the application layer, enforce HTTPS, prefer strong cipher suites, and set modern security headers. Content Security Policy (CSP) restricts where scripts and resources load from; HTTP Strict-Transport-Security (HSTS) ensures browsers stick to HTTPS; X-Content-Type-Options, X-Frame-Options, and Referrer-Policy close common holes. Disable directory listings and verbose error pages in production. Maintain configuration as code with environment-specific overlays so reviews catch risky changes. Document a standard hardening checklist and apply it uniformly across services.

How to do it

- Enforce TLS end-to-end and keep certificates managed automatically.

- Apply a baseline set of headers: HSTS, CSP, X-Content-Type-Options, X-Frame-Options, Referrer-Policy.

- Lock down admin surfaces with IP allowlists or step-up authentication.

- Store config in version control; require review and automated linting for risky toggles.

- Periodically run configuration scans to detect drift from the baseline.

Numeric mini case

An example header baseline:

- HSTS: include subdomains and preloading where appropriate.

- CSP: start with a strict script-src that avoids 'unsafe-inline' and 'unsafe-eval'; monitor violations through CSP reporting.

- Referrer-Policy: strict-origin-when-cross-origin.

Pair this with TLS across all environments and automated certificate renewal that completes at least 7 days before expiry, so the old and new certificates overlap and expiry gaps are avoided.
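
In a Python WSGI application, the header baseline can be applied in one middleware so no route forgets it. The exact values below (a one-year HSTS `max-age`, a self-only CSP, and the `/csp-report` path) are illustrative assumptions; add `preload` to HSTS only after confirming every subdomain serves HTTPS:

```python
SECURITY_HEADERS = [
    ("Strict-Transport-Security", "max-age=31536000; includeSubDomains"),
    ("Content-Security-Policy",
     "default-src 'self'; script-src 'self'; object-src 'none'; "
     "base-uri 'self'; frame-ancestors 'none'; report-uri /csp-report"),
    ("X-Content-Type-Options", "nosniff"),
    ("X-Frame-Options", "DENY"),
    ("Referrer-Policy", "strict-origin-when-cross-origin"),
]


class SecurityHeadersMiddleware:
    """WSGI middleware that adds baseline headers unless the app already set them."""

    def __init__(self, app):
        self.app = app

    def __call__(self, environ, start_response):
        def start_with_headers(status, headers, exc_info=None):
            present = {name.lower() for name, _ in headers}
            headers = list(headers) + [
                (k, v) for k, v in SECURITY_HEADERS if k.lower() not in present
            ]
            return start_response(status, headers, exc_info)

        return self.app(environ, start_with_headers)
```

Letting a route override a header (for example, an embeddable widget setting its own `X-Frame-Options`) keeps exceptions explicit and reviewable instead of weakening the global default.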

When configuration is code-reviewed and enforced by automation, drift and accidental exposure become rare.

11. Automate Testing and CI/CD Security Gates

Automation is how you scale secure coding practices without slowing teams. Add static analysis (SAST) and secret scanning to pull requests for immediate feedback. Run dependency and container scanning on every build. Schedule dynamic testing (DAST) against deployed environments, and run infrastructure-as-code (IaC) checks before provisioning. Use pre-commit hooks for linting and quick checks; reserve heavier scans for CI to keep local loops fast. Make failures explain why they failed and how to fix them; developers act when guidance is clear. Finally, track trends rather than raw counts: you want risk moving down and time-to-fix trending shorter, even if absolute numbers fluctuate.

How to do it

- Add SAST and secret scanning to PRs; fail on high severity or confirmed secrets.

- Run SCA, container, and IaC scans in CI; store reports with artifacts.

- Schedule DAST against staging and production replicas with authentication configured.

- Use pre-commit hooks to catch simple issues before they hit CI.

- Publish a dashboard with trends on findings, fix times, and coverage.

Numbers & guardrails

- Build budget: keep per-PR checks under 10 minutes; shift heavier scans to nightly runs.

- Gating: block merges on critical findings; allow waivers only with a ticket and time-bound plan.

- Coverage: aim for SAST coverage across all primary languages in the codebase.
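
Many SAST tools can emit SARIF, a standard JSON format in which each result carries a `level` of `error`, `warning`, `note`, or `none`. Treating only `error` as blocking is an assumption you would align with your own severity mapping; the sketch prints each blocking finding with its location and rule ID so the failure explains what to fix:

```python
import json


def blocking_results(sarif: dict, blocking_levels=frozenset({"error"})) -> list[str]:
    """Return human-readable lines for SARIF results at a blocking level."""
    out = []
    for run in sarif.get("runs", []):
        for result in run.get("results", []):
            # "warning" is SARIF's default when level is omitted
            # (rule-level default configurations are ignored here).
            level = result.get("level", "warning")
            if level not in blocking_levels:
                continue
            loc = (result.get("locations") or [{}])[0].get("physicalLocation", {})
            uri = loc.get("artifactLocation", {}).get("uri", "?")
            start = loc.get("region", {}).get("startLine", "?")
            msg = result.get("message", {}).get("text", "")
            out.append(f"{uri}:{start} [{result.get('ruleId', '?')}] {msg}")
    return out


def gate(path: str) -> int:
    """Print blocking findings from a SARIF file; return a process exit code."""
    with open(path, encoding="utf-8") as fh:
        problems = blocking_results(json.load(fh))
    for line in problems:
        print(line)
    print(f"{len(problems)} blocking finding(s)")
    return 1 if problems else 0
```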

Automated gates that are fast, clear, and fair become part of the developer experience, not a roadblock to shipping.

12. Review Code Thoroughly and Remediate Fast

Peer review is where subtle security issues are often caught. Make security a first-class dimension of review by providing a checklist and training reviewers to spot risky patterns: input handling, auth and authorization, insecure defaults, and data exposure. Require at least one security-aware reviewer for sensitive areas. Pair reviews with a triage-to-fix workflow: classify findings by severity and exploitability, then assign owners and deadlines. Track time-to-fix as a metric, and close the loop by adding regression tests and updating the baseline if the issue reveals a pattern. Write post-incident retros that improve process rather than blame individuals.

How to do it

- Use a lightweight secure review checklist in PR templates to prompt focused scrutiny.

- Route risky changes (auth, crypto, secrets, data flows) to trained reviewers automatically.

- Classify findings and create tickets with clear, testable acceptance criteria.

- Add regression tests for each fix; prevent re-introductions.

- Hold short, blameless retros for significant issues and update standards accordingly.

Numeric mini case

Set service-level targets:

- Critical: fix or mitigate within 24–72 hours.

- High: fix within 7–14 days.

- Medium/Low: schedule in planned work, but never ignore.

Track time-to-fix and reopen rate; celebrate improvements and learn from outliers.
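
Time-to-fix, SLA breaches, and reopen rate are straightforward to compute once each finding records when it was opened and closed. This sketch takes the upper end of each target range above (72 hours for critical, 14 days for high) as the deadline, which is an assumption; medium and low findings have no hard deadline here:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

SLA = {"critical": timedelta(hours=72), "high": timedelta(days=14)}


@dataclass
class Finding:
    severity: str
    opened: datetime
    closed: Optional[datetime] = None
    reopened: bool = False


def breached(f: Finding, now: datetime) -> bool:
    limit = SLA.get(f.severity)
    if limit is None:
        return False  # medium/low: scheduled work, no hard deadline
    end = f.closed or now
    return end - f.opened > limit


def metrics(findings: list[Finding], now: datetime) -> dict:
    closed = [f for f in findings if f.closed]
    hours = [(f.closed - f.opened).total_seconds() / 3600 for f in closed]
    return {
        "median_hours_to_fix": median(hours) if hours else None,
        "open_breaches": sum(1 for f in findings if not f.closed and breached(f, now)),
        "reopen_rate": sum(f.reopened for f in closed) / len(closed) if closed else 0.0,
    }
```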

A predictable review and remediation loop turns security into a habit. Over time, your backlog shrinks and your release confidence rises.

Security Gate Summary (Quick Reference)

| Gate | Typical guardrail |
|---|---|
| Secrets scanning | Block merge on any high-confidence secret |
| SAST | No critical findings; highs require fix or waiver |
| SCA | Block on criticals; review highs |
| DAST | No exploitable highs on release branch |
| IaC | Deny public buckets and open security groups |
| Dependencies | Pinned versions; signed artifacts; SBOM generated |

The table reinforces policy in a scannable way; keep it alongside your CI configuration for easy updates.

Conclusion

Implementing secure coding practices is less about heroics and more about steady, visible habits built into everyday work. You defined a baseline, modeled threats, translated them into architecture, validated and encoded data, solidified identity, enforced authorization, and tamed secrets. You then stitched security into the supply chain, logging, configuration, automation, and reviews. The throughline is consistency: the same simple rules applied at each boundary reduce surprises and keep new risks from slipping in. Start small—pick two or three steps where your team feels the most pain—and make them automatic. Then iterate: measure, refine, and expand. The payoff is real: fewer incidents, faster delivery, happier auditors, and less cognitive load on developers. Adopt the first step this week, and commit to the rest over the next cycles—future you will thank you.

FAQs

What are secure coding practices in plain language?

Secure coding practices are the habits and controls that make vulnerabilities unlikely: validating inputs, encoding outputs, protecting identity and permissions, managing secrets outside code, and automating checks in CI. Instead of adding security at the end, you design and test for it throughout the lifecycle so issues are caught early when they’re cheap to fix.

How do I start if we’ve never done this before?

Pick one quick win and one structural win. Quick win: enable secrets scanning and block merges on leaks. Structural win: create a one-page baseline that lists required checks (SAST, SCA, PR review) and add them to CI. Announce the plan, provide examples, and measure improvements in time-to-fix and number of high-severity findings.

Is threat modeling only for complex systems?

No. Even small services benefit from a short session where you sketch data flows and ask what can go wrong. The goal is to discover obvious risks—like missing authorization or overly broad roles—and turn them into requirements before you write code. Short, frequent models beat occasional heavyweight exercises.

Which tools should we adopt first?

Start with those that catch the most issues with the least friction: secret scanning, SAST on pull requests, and dependency scanning on every build. Add IaC scanning if you manage cloud resources, and schedule DAST once you have stable staging environments. Choose tools your language and platform communities support well.

How do we choose password hashing parameters?

Tune them on your production server hardware so a single verification takes roughly 100–250 milliseconds. That keeps sign-ins responsive while making offline cracking materially harder. Re-evaluate periodically as hardware changes, and benchmark on representative production machines rather than developer laptops, since the hash runs on the server, not on the user’s device.

What’s the difference between authentication and authorization?

Authentication answers “who are you?” and is implemented with logins, tokens, and MFA. Authorization answers “what are you allowed to do?” and is enforced with policies like RBAC or ABAC. You need both; a strong login won’t help if any user can call admin endpoints, and perfect policies won’t help if tokens can be forged.

How should we handle third-party dependencies safely?

Generate an SBOM, scan dependencies continuously, and pin versions. Block merges on critical vulnerabilities and review highs with context. Prefer signed artifacts and repositories with strong provenance. Automate updates so you’re not forced into risky, last-minute upgrades when a severe issue is disclosed.

What’s a reasonable logging strategy that won’t leak data?

Log events, not raw payloads. Use structured logs with correlation IDs, redact secrets, and keep personal data out unless absolutely needed. Focus on security-relevant events: authentication changes, permission denials, configuration updates, and data exports. Set alerts on unusual patterns and keep retention aligned with privacy obligations.

Do we need a dedicated security team?

A centralized security function helps with guidance, tooling, and incident response, but every engineer owns security decisions. Start by enabling champions within squads who care about the topic and give them time to improve tooling and documentation. Over time, decide whether a specialized team makes sense for your scale.

How do we keep from slowing down delivery?

Make the secure path the fastest path. Provide templates, golden paths, and pre-approved components. Add fast, actionable gates that explain exactly what to fix. Track delivery metrics like lead time and compare before and after; most teams find that fewer break-fix cycles and clearer requirements actually speed things up.
