Disclaimer: This article provides general information regarding the use of Artificial Intelligence in Human Resources and recruitment. It does not constitute legal advice. Employment laws and regulations regarding AI, such as the EU AI Act and local US laws, are subject to change. HR professionals and business leaders should consult with qualified legal counsel to ensure compliance with applicable jurisdiction-specific regulations.

The promise of Artificial Intelligence in recruitment is undeniable: faster screening, reduced time-to-hire, and the potential to uncover hidden talent in vast pools of data. However, as organizations increasingly delegate gatekeeping decisions to algorithms, the risks of systemic bias, legal liability, and reputational damage have grown in tandem. Responsible AI in hiring is no longer just an ethical preference; it is a business imperative and, increasingly, a legal requirement.

For HR leaders, the challenge lies in balancing the efficiency of automation with the nuance of human judgment. How do you ensure your shiny new resume parser isn’t rejecting qualified candidates based on zip codes or gendered language? How do you audit a “black box” algorithm? This guide explores the frameworks, strategies, and practical steps necessary to deploy AI tools that enhance, rather than compromise, the integrity of your hiring process.

Key Takeaways

- Human Oversight is Mandatory: “Human-in-the-loop” isn’t just a buzzword; it is a critical safeguard. AI should function as a decision-support tool, never the final decision-maker for high-stakes employment outcomes.

- Compliance is Evolving: From New York City’s Local Law 144 to the EU AI Act, regulatory bodies are targeting automated employment decision tools (AEDTs). Ignorance of these laws is a liability.

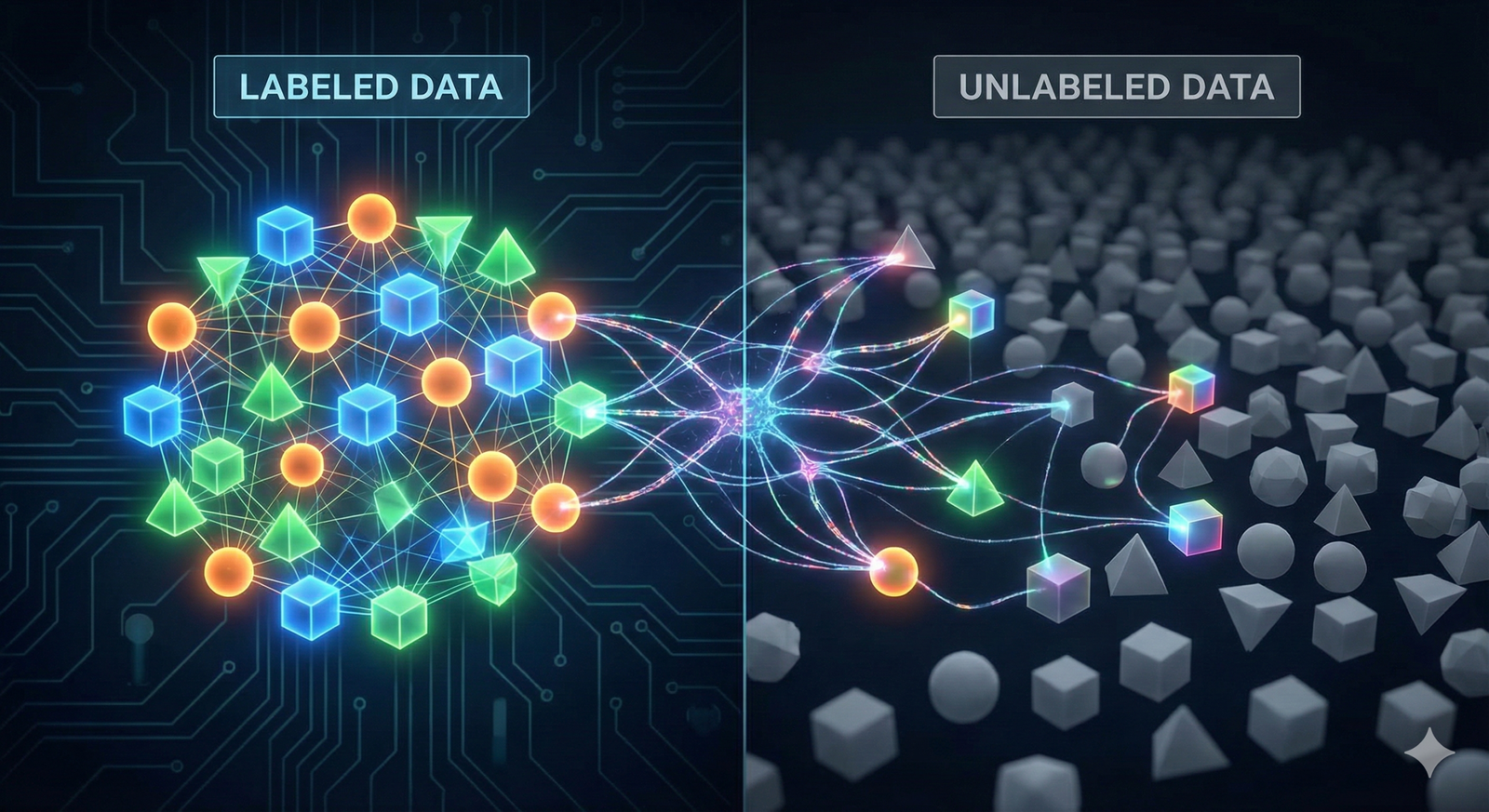

- Data Provenance Matters: An algorithm is only as good as the data it was trained on. If your historical hiring data contains bias, your AI will replicate and scale that bias.

- Vendor Accountability: You cannot outsource liability. HR leaders must rigorously vet vendors, demanding transparency reports, adverse impact studies, and explainability statements.

- Transparency Builds Trust: Candidates have a right to know when they are being evaluated by AI. Clear communication regarding how data is used improves candidate experience and brand perception.

Who This Is For (And Who It Isn’t)

This guide is designed for:

- HR Directors and CHROs: Who are setting the strategic vision for talent acquisition and need to understand the governance risks of AI adoption.

- Talent Acquisition Managers: Who are evaluating, purchasing, and using AI tools for sourcing, screening, and interviewing.

- Compliance and Legal Officers: Who need to understand the intersection of employment law (like the EEOC guidelines) and algorithmic accountability.

- DE&I Leaders: Who want to ensure technology supports diversity goals rather than hindering them.

This guide is NOT for:

- AI Engineers: While we touch on technical concepts, this is not a coding tutorial for building neural networks.

- General Staff: This focuses on high-level decision-making and implementation strategies rather than end-user tutorials for specific software platforms.

Defining Responsible AI in the Context of HR

Responsible AI in hiring refers to the practice of designing, developing, and deploying AI systems with the specific intent to empower employees and businesses, and to affect customers and society fairly. In the context of HR, this means using tools that are:

- Fair and Unbiased: The system does not discriminate against candidates based on protected characteristics (race, gender, age, disability, etc.), either directly or through proxy variables.

- Explainable and Transparent: The logic behind an algorithmic recommendation can be understood by humans. HR teams should be able to explain why a candidate was ranked a certain way.

- Secure and Private: Candidate data is handled in strict compliance with privacy laws (GDPR, CCPA), ensuring that sensitive personal information is not misused.

- Accountable: There is a clear line of responsibility. When an AI tool makes an error, there is a human process to rectify it.

The “Black Box” Problem

One of the central challenges in responsible AI in hiring is the “black box” nature of many machine learning models, particularly deep learning. In these systems, data goes in and a prediction comes out, but the complex internal processing that links the two is often opaque, even to the developers.

In hiring, a black box is dangerous. If a tool rejects a candidate because they attended a women’s college (a proxy for gender) or live in a specific neighborhood (a proxy for race), and you cannot see that logic, you are unknowingly committing discrimination. Responsible AI demands “glass box” approaches or rigorous post-hoc auditing to uncover these correlations.

The Business Case for Ethical AI Implementation

Beyond the moral imperative to treat people fairly, there is a hard-nosed business case for investing in responsible AI in hiring.

1. Mitigating Legal and Regulatory Risk

As of January 2026, the regulatory landscape is tightening. In the United States, the Equal Employment Opportunity Commission (EEOC) has made it clear that employers can be held responsible for violations of Title VII of the Civil Rights Act, even if the discrimination is caused by a third-party vendor’s software.

Specifically, automated tools that result in a “disparate impact”—where members of a protected class are selected at a significantly lower rate than others—can trigger federal investigations. Furthermore, jurisdictions like New York City (via Local Law 144) require employers to conduct annual impartial bias audits of their Automated Employment Decision Tools (AEDTs) and publish the results. Failing to adhere to responsible practices is now a direct path to litigation and fines.

2. Protecting Brand Reputation

In an era of social media transparency, a “biased algorithm” scandal can cause irreparable harm to an employer brand. Candidates talk. If your application process is perceived as dehumanizing, unfair, or discriminatory, top talent will go elsewhere. Conversely, a transparent, fair process enhances your Employer Value Proposition (EVP), signaling that you value merit and inclusivity.

3. Improving Quality of Hire

Bias is, fundamentally, a mathematical error in evaluating talent. If your AI screens out candidates based on irrelevant factors (like the font on their resume or a gap year taken for caregiving), you are shrinking your talent pool. Responsible AI focuses on predictive validity—ensuring the tool actually measures job-relevant competencies rather than historical artifacts. By removing bias, you widen the funnel and access a richer diversity of skills.

The Legal Landscape: Compliance as of 2026

Navigating the compliance requirements for AI in HR requires awareness of both established employment laws and emerging AI-specific regulations.

The EEOC and “Disparate Impact”

The guiding principle in the US remains the Uniform Guidelines on Employee Selection Procedures. The EEOC applies the “four-fifths rule” (or 80% rule) as a benchmark. If the selection rate for a certain group (e.g., women) is less than 80% of the selection rate for the group with the highest rate (e.g., men), that gap is generally treated as evidence of adverse impact.

Modern AI tools can easily violate this if not audited. For example, if an AI is trained on successful resumes from the past ten years, and the company predominantly hired men during that time, the AI might downgrade resumes containing the word “women’s” (as in “Women’s Chess Club”) because those terms do not statistically correlate with the training set of “successful” hires.
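The four-fifths comparison described above comes down to simple arithmetic. The following is a minimal sketch, not a compliance tool: the function name and the applicant counts are hypothetical and exist only to show the calculation.

```python
def impact_ratios(selected, applicants):
    """Divide each group's selection rate by the highest group's rate."""
    rates = {group: selected[group] / applicants[group] for group in applicants}
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("No group has any selections; the ratio is undefined.")
    return {group: rate / highest for group, rate in rates.items()}


# Hypothetical counts, invented purely for illustration.
applicants = {"men": 200, "women": 180}
selected = {"men": 60, "women": 36}

ratios = impact_ratios(selected, applicants)
# Groups whose ratio falls below four-fifths warrant a closer look.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(ratios)
print("Below four-fifths:", flagged)
```

In this made-up example, men are selected at 30% and women at 20%, so the ratio for women is roughly 0.67, which falls under the 0.8 benchmark.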

NYC Local Law 144

This landmark legislation, which set a precedent for other municipalities, mandates that employers in NYC cannot use an AEDT to screen candidates for employment or employees for promotion unless:

- The tool has been the subject of a bias audit conducted by an independent auditor no more than one year prior to its use.

- A summary of the audit results is made publicly available on the employer’s website.

- Candidates are given notice 10 business days prior that an AEDT will be used and are informed of the specific qualifications or characteristics the tool will assess.

The EU AI Act

For organizations with operations in Europe, the EU AI Act classifies AI systems used in employment, worker management, and access to self-employment as “High Risk.” This designation imposes strict obligations regarding data governance, record-keeping, transparency, human oversight, and accuracy. Non-compliance can lead to substantial fines, set as a fixed amount or a percentage of worldwide annual turnover, whichever is higher.

How AI Enters the Recruitment Funnel

To manage AI responsibly, you must identify where it sits in your tech stack. AI is rarely a single tool; it is a suite of capabilities embedded throughout the funnel.

1. Sourcing and Programmatic Advertising

AI algorithms determine who sees your job ads on platforms like LinkedIn or Google.

- The Risk: If the ad delivery algorithm optimizes for “lowest cost per click” or “highest engagement,” it might show executive roles primarily to men or younger people, effectively excluding older or female candidates before they even apply.

- Responsible Action: Audit your ad delivery settings. Ensure you are not using exclusionary targeting criteria (e.g., “Lookalike Audiences” based on a non-diverse current workforce).

2. Resume Screening and Parsing

These tools analyze text to rank candidates against a job description.

- The Risk: Keyword stuffing and format bias. An AI might reject a PDF that it can’t parse correctly or penalize a candidate for using different terminology for the same skill (e.g., “Client Relations” vs. “Customer Success”).

- Responsible Action: Use tools that utilize semantic matching (understanding meaning) rather than strict keyword matching. Regularly test the parser with varied resume formats.

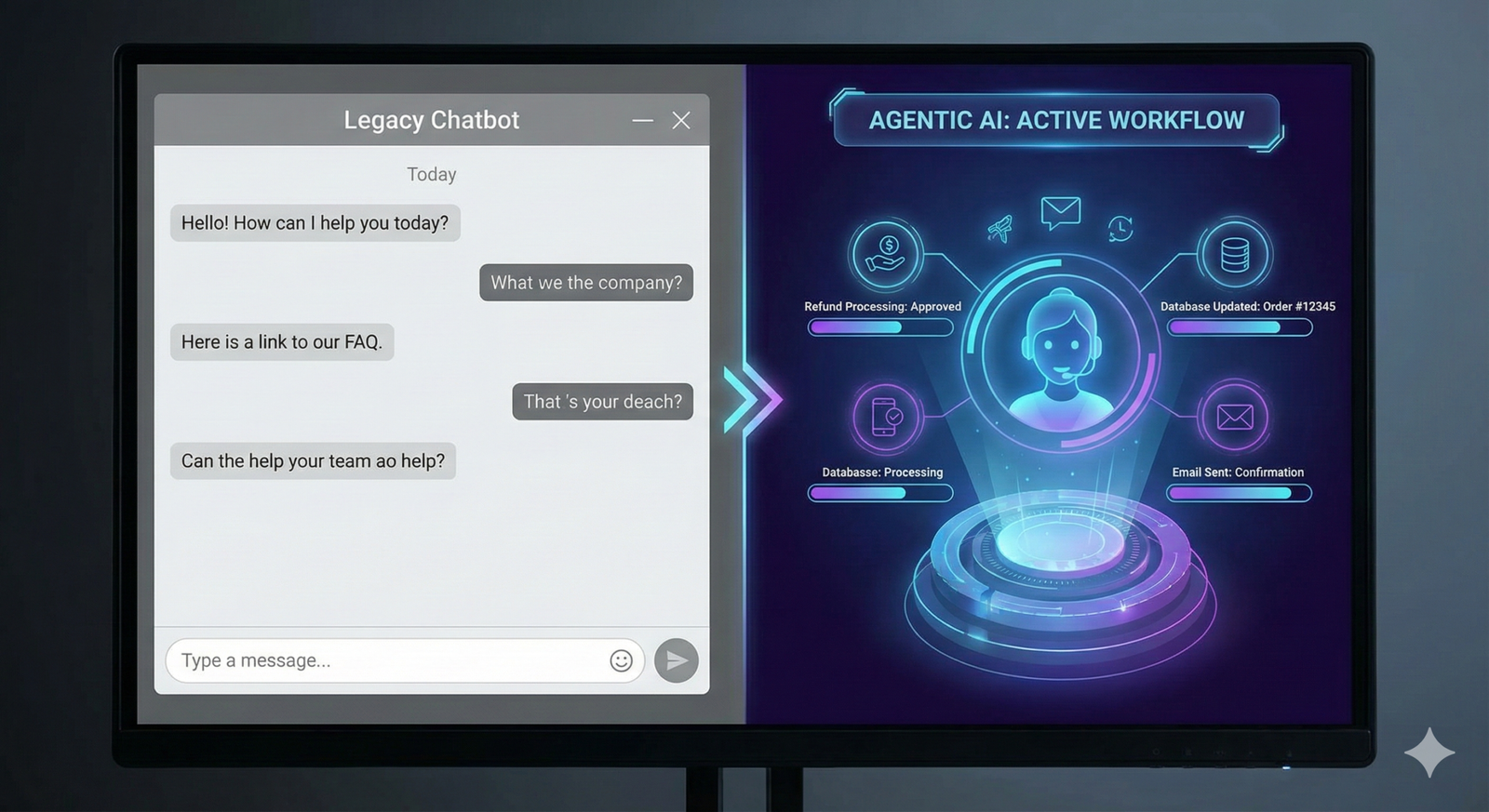

3. Chatbots and Conversational AI

Used for initial engagement, scheduling, and answering FAQs.

- The Risk: Accessibility. If a chatbot cannot understand non-native English speakers or dialects, it creates a barrier to entry.

- Responsible Action: Ensure chatbots have “off-ramps” to human recruiters. Test the Natural Language Processing (NLP) capabilities across different linguistic demographics.

4. Psychometric and Gamified Assessments

Games or tests that measure cognitive ability, personality traits, or risk tolerance.

- The Risk: Neurodiversity bias. A game that requires fast reaction times might unfairly penalize candidates with certain disabilities or older candidates, even if reaction time is irrelevant to the job.

- Responsible Action: Ensure alternative assessment methods are available. Validate that the traits being measured (e.g., “risk-taking”) strictly correlate with job performance.

5. Video Interview Analysis

AI that analyzes facial expressions, tone of voice, and word choice during video interviews.

- The Risk: This is one of the most controversial areas. “Affect recognition” (analyzing emotions from faces) is scientifically contested and often biased against different races, cultures, and people with disabilities (e.g., stroke survivors or those with facial paralysis).

- Responsible Action: Proceed with extreme caution. Many experts and jurisdictions recommend banning facial analysis for hiring entirely. Focus AI analysis on the content of the answer (transcripts), not the visual delivery, and always offer an audio-only or text-based alternative.

Mechanisms of Algorithmic Bias

Understanding how bias creeps into the machine is the first step toward mitigation. It usually stems from the data, not the code itself.

Historical Bias (The “Garbage In, Garbage Out” Principle)

If a company has historically hired mostly white men from Ivy League universities, the “training data” reflects this. The AI identifies patterns in this data—such as “attended Harvard” or “played lacrosse”—and correlates them with “success.” It will then downgrade candidates who attended HBCUs or state colleges, not because the code is racist, but because the code is accurately replicating a biased history.

Proxy Variables

You might remove “race” and “gender” from the dataset, but the AI can find “proxies.”

- Zip Code: Highly correlated with race and socioeconomic status.

- Employment Gaps: Highly correlated with gender (maternity leave/caregiving).

- Vocabulary: Certain words or phrasing can indicate gender or cultural background.

A responsible AI system actively scans for and suppresses the weight of these proxy variables.
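One way an analyst might scan for proxies is to measure how strongly a candidate feature is associated with a protected characteristic. The sketch below uses Cramér's V, a standard association measure for categorical data that ranges from 0 (no association) to 1 (perfect association). The choice of statistic, the sample data, and the idea of comparing against a review threshold are all assumptions made for illustration; the demographic labels are assumed to come from voluntary self-identification collected separately from the screening data.

```python
from collections import Counter
from math import sqrt


def cramers_v(feature_values, protected_values):
    """Cramér's V between two categorical lists of equal length."""
    n = len(feature_values)
    joint = Counter(zip(feature_values, protected_values))
    feature_counts = Counter(feature_values)
    protected_counts = Counter(protected_values)

    # Chi-squared statistic: observed vs. expected counts under independence.
    chi2 = 0.0
    for f, n_f in feature_counts.items():
        for p, n_p in protected_counts.items():
            expected = n_f * n_p / n
            observed = joint.get((f, p), 0)
            chi2 += (observed - expected) ** 2 / expected

    k = min(len(feature_counts), len(protected_counts)) - 1
    return sqrt(chi2 / (n * k)) if k > 0 else 0.0


# Hypothetical data: zip code per applicant and a self-reported group label.
zip_codes = ["10001"] * 4 + ["10456"] * 4
groups = ["a", "a", "a", "b", "b", "b", "b", "a"]

association = cramers_v(zip_codes, groups)
print(f"Association between zip code and group: {association:.2f}")
```

A high value does not prove discrimination on its own, but it signals that the feature could let a model reconstruct the protected attribute and deserves scrutiny before it is used for ranking.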

Label Bias

This occurs when the target variable (what the AI is trying to predict) is subjective. If the AI is trained to predict “Manager Approval,” it will learn the biases of the managers. If managers consistently rate introverts poorly despite good performance, the AI will learn to screen out introverts.

Strategies for Mitigating Bias and Ensuring Fairness

Achieving responsible AI in hiring requires a multi-layered defense strategy involving data hygiene, testing, and continuous monitoring.

1. Data Hygiene and Pre-Processing

Before training a model (or before a vendor trains theirs), the data must be scrubbed. This involves:

- De-identification: Removing names, photos, and explicit demographic markers.

- Reweighing: Adjusting the weights of training examples to ensure equal representation of different groups.

- Sampling: Ensuring the dataset is large enough and diverse enough. If you are hiring for a global role, do not train the AI solely on resumes from San Francisco.
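To make the reweighing step more concrete, here is a minimal sketch of one common formulation from the fairness literature, often attributed to Kamiran and Calders. Each training example receives the weight P(group) × P(label) / P(group, label), so that group membership and the outcome label look statistically independent in the weighted data. The article does not specify which reweighing method a vendor uses, so treat this as an assumption; the data is invented.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Hypothetical history: 1 = hired, 0 = not hired.
groups = ["m", "m", "m", "m", "f", "f", "f", "f"]
hired = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, hired)
for g, y, w in zip(groups, hired, weights):
    print(g, y, round(w, 3))
```

In this toy history, under-represented combinations (such as hired women) receive weights above 1 and over-represented combinations receive weights below 1. Many training libraries accept per-example weights, which is where values like these would be passed.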

2. Adversarial Testing

This involves trying to “break” the AI. Create synthetic resumes that are identical in skills and experience but differ in names (e.g., “John” vs. “Jamal”) or gender pronouns. Feed them into the system. If the ranking changes based solely on the name, the system is biased. This “audit study” method is the gold standard for detecting discrimination.
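The name-swap test described above can be sketched as a small harness. Everything here is hypothetical: `score_fn` stands in for whatever scoring interface your vendor exposes, and `toy_biased_scorer` is a deliberately flawed stand-in written only so the example runs.

```python
def name_swap_gaps(score_fn, resume_template, names):
    """Score one resume under each name; report each score minus the first name's score."""
    scores = {name: score_fn(resume_template.format(name=name)) for name in names}
    baseline = scores[names[0]]
    return {name: score - baseline for name, score in scores.items()}


def toy_biased_scorer(text):
    # Deliberately flawed stand-in for a real model: it rewards listed skills
    # but also penalizes one specific name, which is the defect we want to catch.
    score = 50 + 10 * sum(skill in text for skill in ("SQL", "Python"))
    return score - 15 if "Jamal" in text else score


template = "Name: {name}\nSkills: SQL, Python\nExperience: 5 years in analytics"
gaps = name_swap_gaps(toy_biased_scorer, template, ["John", "Jamal"])
print(gaps)
```

Because the two resumes differ only in the name, any non-zero gap is attributable to the name alone. In practice you would run many templates and name pairs rather than a single one, since one pair says little about overall behavior.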

3. Adverse Impact Analysis

Regularly run statistical tests on the outcomes of the AI. Compare the pass rates of different demographic groups. If the pass rate for a protected group falls below 80% of the rate for the highest-passing group (or breaches a stricter internal standard), halt the use of the tool immediately and investigate.
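A ratio alone does not tell you whether a gap could be due to chance, especially with small applicant pools. One option is to pair the ratio check with a two-proportion z-test, sketched below. The choice of test is an assumption rather than something any regulation cited here prescribes, and the counts are hypothetical.

```python
from math import erfc, sqrt


def two_proportion_z_test(pass_a, n_a, pass_b, n_b):
    """Return (z, two-sided p-value) for the difference between two pass rates."""
    rate_a, rate_b = pass_a / n_a, pass_b / n_b
    pooled = (pass_a + pass_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("Pass rates are all 0% or all 100%; the test is undefined.")
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value under the standard normal distribution.
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Hypothetical outcomes: 60 of 200 in group A passed, 36 of 180 in group B.
z, p_value = two_proportion_z_test(60, 200, 36, 180)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A small p-value suggests the gap is unlikely to be random noise. A large one does not prove fairness, particularly when sample sizes are small, so it is best read alongside the pass-rate ratio rather than instead of it.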

4. The “Human-in-the-Loop” Framework

Automation should never be fully autonomous in hiring. A common responsible structure is:

- AI as Sorter: The AI groups candidates into “High Match,” “Medium Match,” and “Low Match.”

- Human as Reviewer: A human recruiter reviews the “High Match” group to confirm. Crucially, a human also spot-checks the “Low Match” group to ensure qualified candidates aren’t being wrongly rejected (False Negatives).

- AI as Advisor: The AI provides a score + a reason code (e.g., “Score: 85/100. Reason: Strong match on SQL and Python skills”). The human uses this information to aid their interview prep, not to make the final hire/no-hire call.
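The sorter-and-reviewer structure above can be sketched as a routing step that builds a human review queue. The score thresholds, the spot-check rate, and the decision to send “Medium Match” candidates to full review are assumptions made for illustration, not values from any standard.

```python
import math
import random


def bucket(score, high=75, low=50):
    """Sort a 0-100 score into a tier. Thresholds are hypothetical."""
    if score >= high:
        return "High Match"
    if score >= low:
        return "Medium Match"
    return "Low Match"


def build_review_queue(candidates, spot_check_rate=0.2, seed=0):
    """Queue every High/Medium candidate plus a random sample of Low Match ones."""
    tiers = {"High Match": [], "Medium Match": [], "Low Match": []}
    for candidate in candidates:
        tiers[bucket(candidate["score"])].append(candidate)

    low = tiers["Low Match"]
    sample_size = math.ceil(spot_check_rate * len(low))
    # A seeded generator keeps the sample reproducible for audit purposes.
    spot_checks = random.Random(seed).sample(low, sample_size)
    return tiers["High Match"] + tiers["Medium Match"] + spot_checks


candidates = [{"id": i, "score": s} for i, s in enumerate([90, 80, 60, 40, 30, 20, 10])]
queue = build_review_queue(candidates, spot_check_rate=0.5)
print([c["id"] for c in queue])
```

Note that this sketch only decides who a human looks at. It does not reject anyone, which keeps the final call with the recruiter.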

Evaluating AI Vendors: A Checklist for Buyers

Most HR departments do not build their own AI; they buy it. This makes vendor selection the most critical control point. Do not accept marketing claims like “bias-free” at face value—mathematically, no system is 100% bias-free.

Ask these questions during the RFP process:

1. Data Provenance:

- “What data was this model trained on?”

- “Is the training data representative of our specific industry and geography?”

- “How often is the model retrained?”

2. Bias Testing:

- “Do you conduct disparate impact analyses? Can we see the most recent technical report?”

- “How do you handle proxy variables in your dataset?”

- “Have you undergone a third-party audit (e.g., NYC Local Law 144 compliance)?”

3. Explainability:

- “Can the system explain why a specific candidate was rejected?”

- “Does the tool provide ‘feature importance’ lists (showing which skills weighed most heavily)?”

4. Liability and Privacy:

- “Does your contract indemnify us against EEOC claims resulting from the use of your tool?”

- “How is candidate data stored, and for how long?”

5. Accommodation:

- “How does the tool handle candidates who request accommodations (e.g., extra time on a test due to dyslexia)?”

Implementation Strategy: A Phased Approach

Rolling out AI in hiring should be a careful, staged process, not a sudden switch flip.

Phase 1: Preparation and Governance

- Form a Committee: Assemble a cross-functional team (HR, Legal, IT, DE&I).

- Define Principles: Establish an ethical charter for AI use.

- Audit Current State: Map out where AI is currently used (you might be surprised by “shadow IT”).

Phase 2: Pilot and Validation

- Select a Pilot Role: Choose a high-volume role (e.g., Customer Service Rep) for a pilot.

- Run Parallel Processes: Have the AI score candidates, but have humans score them manually as well without seeing the AI score.

- Compare Results: Analyze the correlation. Did the AI disadvantage a group that the humans didn’t? Did the AI find quality candidates the humans missed?
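For the comparison step in a parallel pilot, one simple starting point is to measure how often the AI and the human reviewers agree beyond what chance would produce, and then to pull out the disagreements for manual review. The sketch below uses Cohen's kappa, a standard agreement statistic. The labels and data are hypothetical, and kappa is only one of several measures you could choose.

```python
from collections import Counter


def cohens_kappa(ai_labels, human_labels):
    """Agreement between two raters, corrected for chance agreement."""
    n = len(ai_labels)
    observed = sum(a == h for a, h in zip(ai_labels, human_labels)) / n
    ai_counts, human_counts = Counter(ai_labels), Counter(human_labels)
    expected = sum(ai_counts[c] * human_counts[c] for c in ai_counts) / n**2
    if expected == 1:
        return 1.0  # Both raters used the same single label throughout.
    return (observed - expected) / (1 - expected)


# Hypothetical pilot decisions for six candidates.
ai = ["advance", "advance", "reject", "reject", "advance", "reject"]
human = ["advance", "reject", "reject", "reject", "advance", "advance"]

kappa = cohens_kappa(ai, human)
disagreements = [i for i, (a, h) in enumerate(zip(ai, human)) if a != h]
print(f"kappa = {kappa:.2f}; review candidates at positions {disagreements}")
```

The disagreement list is usually the more useful output for HR teams: breaking it down by demographic group shows whether the AI and the humans diverge more for some groups than for others.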

Phase 3: Deployment and Monitoring

- Go Live with Guardrails: Implement the tool with human oversight protocols in place.

- Candidate Notification: Update privacy policies and job descriptions to inform candidates about the use of AI.

- Quarterly Audits: Schedule regular reviews of the hiring data to check for adverse impact drift.

Common Mistakes and Pitfalls

Even with good intentions, organizations often stumble when adopting hiring AI.

- The “Set It and Forget It” Fallacy: AI models drift. A model trained on 2023 market data might be irrelevant in 2026. Without continuous monitoring, a functional model can degrade into a biased one.

- Over-Reliance on Efficiency: Prioritizing speed over quality. If you tune the AI to screen out 90% of applicants instantly, you are likely generating massive False Negatives (rejecting good people).

- Ignoring the “Rejected” Pile: Most organizations only audit the people they hired. To truly understand your AI, you must audit the people you didn’t hire. Periodically review the “rejected” stack to see if the AI is consistently filtering out qualified candidates from specific demographics.

- Lack of Accommodation Workflows: Failing to provide a non-AI alternative for candidates with disabilities. If a candidate cannot undergo a biometric video interview due to a medical condition, legal compliance requires an alternative format.

Related Topics to Explore

- Explainable AI (XAI) in HR: Deep dive into the technologies that make neural networks transparent.

- Neurodiversity and Algorithmic Testing: How to ensure gamified assessments don’t discriminate against autistic or ADHD candidates.

- The Future of Resume-Less Hiring: Using skills-based inference engines instead of document parsing.

- Global Data Privacy Standards: Navigating the differences between GDPR, CCPA, and emerging Asian privacy laws in recruitment.

- Generative AI in Interviewing: The rise of AI avatars conducting first-round interviews and the ethical implications.

Conclusion

Responsible AI in hiring is a journey, not a destination. It requires a fundamental shift in mindset from viewing AI as a “magic wand” that solves recruitment to viewing it as a powerful, yet fallible, tool that requires constant supervision.

As of 2026, the excuse of “the algorithm did it” is no longer acceptable to regulators, the public, or candidates. By adhering to strict data governance, maintaining human oversight, and rigorously auditing for bias, HR leaders can harness the power of AI to create a hiring landscape that is not only more efficient but also more equitable. The goal is not to replace the recruiter, but to elevate the recruiter—freeing them from administrative drudgery so they can focus on the distinctly human art of connection, empathy, and judgment.

FAQs

1. Is it legal to use AI to reject candidates automatically? While technically legal in many jurisdictions if disclosed, it is highly risky. Regulations like the GDPR give individuals the right not to be subject to a decision based solely on automated processing. Best practice—and often the only legally defensible path—is to ensure a “human-in-the-loop” reviews the decision, especially for rejections.

2. Can AI completely remove bias from hiring? No. AI can eliminate inconsistent human noise (e.g., a recruiter being tired on a Friday afternoon), but it cannot eliminate systemic bias present in training data. It can also introduce new forms of technical bias. The goal is bias mitigation, not total elimination.

3. What is the difference between predictive AI and generative AI in hiring? Predictive AI analyzes historical data to forecast outcomes (e.g., “How likely is this candidate to stay for 2 years?”). Generative AI creates new content (e.g., writing a job description or generating interview questions). Both have risks, but predictive AI carries higher risks regarding discrimination in selection decisions.

4. How often should we audit our hiring algorithms? At a minimum, audits should be conducted annually. However, if you make significant changes to the model or the job requirements, or if you notice a shift in applicant demographics, an immediate audit is recommended. NYC Local Law 144 requires an annual independent audit.

5. What is a “proxy variable” in AI hiring? A proxy variable is a seemingly neutral data point that correlates strongly with a protected characteristic. For example, “years of experience” can be a proxy for age. A zip code can be a proxy for race. Responsible AI must identify and neutralize these proxies to prevent indirect discrimination.

6. Do we need to tell candidates we are using AI? Yes. Transparency is a core tenet of responsible AI. Beyond being a legal requirement in places like NYC and the EU, it builds trust. Candidates should know if a machine is parsing their CV or analyzing their video interview.

7. How does the EEOC view AI in hiring? The EEOC views AI selection tools as subject to the same anti-discrimination laws (Title VII) as traditional tests. They focus on outcomes. If the tool causes a disparate impact on a protected class, the employer is liable unless they can prove the tool is job-related and consistent with business necessity, and that no less discriminatory alternative exists.

8. Can we use ChatGPT to screen resumes? Using a public Large Language Model (LLM) like ChatGPT for screening poses privacy and consistency risks. Public LLMs can hallucinate (make up facts) and may not be compliant with data retention laws. Specialized, private/enterprise AI tools designed for HR are safer and more reliable for this purpose.

9. What should small businesses do if they can’t afford expensive audits? Small businesses should rely on reputable vendors who provide audit certifications for their tools. Do not build custom models unless you have the resources to audit them. Stick to “off-the-shelf” tools that have publicized their compliance and validity studies.

10. What is “adverse impact”? Adverse impact refers to employment practices that appear neutral but have a discriminatory effect on a protected group. In AI, this happens if the algorithm selects one group at a significantly lower rate than another, even if the algorithm was not explicitly programmed to discriminate.

References

- U.S. Equal Employment Opportunity Commission (EEOC). (2023). Select Issues: Assessing Adverse Impact in Software, Algorithms, and Artificial Intelligence Used in Employment Selection Procedures under Title VII of the Civil Rights Act of 1964.

- New York City Department of Consumer and Worker Protection. (2023). Automated Employment Decision Tools (AEDT). https://www.nyc.gov/site/dca/about/automated-employment-decision-tools.page

- European Parliament. (2024). EU AI Act: first regulation on artificial intelligence. https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence

- Society for Human Resource Management (SHRM). (n.d.). Artificial Intelligence in HR.

- National Institute of Standards and Technology (NIST). (2023). AI Risk Management Framework (AI RMF 1.0). https://www.nist.gov/itl/ai-risk-management-framework

- Data & Society. (2024). Algorithmic Accountability in the Workplace.

- World Economic Forum. (2023). Human-Centred Artificial Intelligence in Human Resources: A Toolkit for HR Professionals.

- Information Commissioner’s Office (ICO). (2023). Guidance on AI and Data Protection. https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/artificial-intelligence/guidance-on-ai-and-data-protection/

- Harvard Business Review. (2023). Using AI to Hire? Here’s How to Do It Without Bias.

- The White House. (2022). Blueprint for an AI Bill of Rights. https://www.whitehouse.gov/ostp/ai-bill-of-rights/