Generative AI has fundamentally shifted the creative landscape from a discipline of manual construction to one of curation, direction, and synthesis. As of January 2026, the technology has graduated from experimental novelty to a core infrastructure powering the global media supply chain. For creative professionals, the question is no longer if they should use these tools, but how to integrate them without losing the human nuance that defines art.

In this guide, “generative AI for multimedia creativity” refers to the use of machine learning models (specifically diffusion models, transformers, and neural audio synthesizers) to produce or modify visual and auditory assets. This transformation is not merely about speed; it is about the democratization of high-fidelity production and the emergence of entirely new “multimodal” workflows in which a single creator can orchestrate images, video, and sound simultaneously.

Key Takeaways

- From Creation to Direction: The artist’s role is evolving into that of a creative director, guiding AI models through prompting and iterative refinement rather than manually rendering every pixel or waveform.

- Multimodal Convergence: Distinct silos of production (graphic design, video editing, sound engineering) are collapsing into unified workflows where text or image prompts drive outputs across all three mediums.

- Control is the New Currency: The focus of AI development has moved from random generation to precise controllability (e.g., preserving character consistency in video or specific instrumentation in music).

- Ethical Friction: Copyright, consent, and the “black box” nature of training data remain the most significant hurdles for enterprise adoption.

- Hybrid Workflows: The most successful creators are not replacing traditional tools but augmenting them—using AI for texturing, storyboarding, and rough cuts while retaining manual control for final polishing.

Scope of This Guide

This article covers the impact of generative AI on static imagery (photography, illustration), moving images (video, animation), and audio (music, voice). It is designed for creative professionals, agency leaders, and independent producers looking to understand the mechanics, applications, and implications of these technologies. We will not cover code generation or pure text-writing AI except where they serve as prompts for multimedia.

The Mechanics of the Shift: How We Got Here

To understand the transformation of multimedia creativity, one must understand the underlying shift in technology. We have moved from “discriminative” AI (which categorizes data) to “generative” AI (which creates data).

The Rise of Diffusion and Transformers

Two primary architectures drive the current multimedia revolution:

- Diffusion Models (Images & Video): These models work by adding noise (static) to an image until it is unrecognizable, and then learning to reverse the process to reconstruct a clear image from pure noise. When guided by a text prompt, the model “denoises” the static into a visual representation of the text. This is the engine behind the massive leap in visual fidelity seen in the last three years.

- Transformers (Text & Music): Originally designed for language, transformer architectures analyze sequences of data to predict what comes next. In music and video, they typically operate on sequences of compressed audio tokens or video frame patches rather than raw samples, predicting coherent continuations and enabling a degree of temporal consistency that earlier approaches struggled to achieve.
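To make the “add noise, then learn to reverse it” idea concrete, here is a minimal sketch of the forward (noising) half of a DDPM-style diffusion process. It assumes a linear noise schedule with illustrative values and a toy “image” made of four pixel intensities; real models work on large tensors and train a neural network to predict the added noise so the process can be run in reverse.

```python
import math
import random


def linear_betas(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Per-step noise variances for an assumed linear schedule (illustrative values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bars(betas: list[float]) -> list[float]:
    """Cumulative product of (1 - beta_t): how much original signal survives by step t."""
    out, running = [], 1.0
    for b in betas:
        running *= 1.0 - b
        out.append(running)
    return out


def add_noise(x0: list[float], t: int, abar: list[float], rng: random.Random) -> tuple[list[float], list[float]]:
    """Jump straight to step t: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    signal, noise = math.sqrt(abar[t]), math.sqrt(1.0 - abar[t])
    xt = [signal * p + noise * e for p, e in zip(x0, eps)]
    return xt, eps  # a denoising network is trained to predict eps from (xt, t)


betas = linear_betas(1000)
abar = alpha_bars(betas)
rng = random.Random(0)
image = [0.8, -0.2, 0.5, 0.1]  # toy "pixels" scaled to [-1, 1]
early, _ = add_noise(image, 10, abar, rng)   # still close to the original
late, _ = add_noise(image, 999, abar, rng)   # almost pure noise
print(f"signal kept at t=10:  {math.sqrt(abar[10]):.3f}")
print(f"signal kept at t=999: {math.sqrt(abar[999]):.3f}")
```

Generation runs this in reverse: starting from pure noise, a trained model repeatedly estimates and removes the noise, with the text prompt steering each step.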

Latent Space: The New Canvas

For the modern digital artist, the “canvas” is no longer a blank raster grid; it is latent space. This is a multi-dimensional mathematical representation of all the concepts the AI model has learned. When a user writes a prompt like “a cyberpunk city in the style of 1980s anime,” they are navigating coordinates in this latent space to locate a specific intersection of visual concepts.

Understanding this concept is crucial because it explains why “prompt engineering” became a skill. It is not just about using fancy words; it is about effectively triangulating coordinates in the model’s latent space to retrieve the exact aesthetic desired.
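The “coordinates” metaphor can be made concrete with a common latent-space technique: spherical linear interpolation (slerp) between two latent vectors, which practitioners use to morph smoothly from one generation to another. This is a minimal sketch that assumes latents are plain lists of floats; in real tools they are large tensors derived from random seeds or image encoders, and the two example vectors below are hypothetical.

```python
import math


def slerp(a: list[float], b: list[float], t: float) -> list[float]:
    """Spherical linear interpolation between latent vectors a and b, for t in [0, 1]."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    # Angle between the two vectors, clamped to avoid domain errors from rounding.
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))
    if omega < 1e-6:
        # Nearly parallel vectors: fall back to ordinary linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    sin_omega = math.sin(omega)
    w_a = math.sin((1 - t) * omega) / sin_omega
    w_b = math.sin(t * omega) / sin_omega
    return [w_a * x + w_b * y for x, y in zip(a, b)]


# Two hypothetical latent "coordinates" (real latents have thousands of dimensions).
city = [1.0, 0.0, 0.0]
anime = [0.0, 1.0, 0.0]
for t in (0.0, 0.5, 1.0):
    print(t, [round(v, 3) for v in slerp(city, anime, t)])
```

Slerp is often preferred over a straight-line average because random Gaussian latents in high dimensions cluster near a shell of roughly constant radius; a straight-line midpoint falls inside that shell, which can produce washed-out results.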

1. Transforming the Visual: Generative AI in Images

The transformation in static imagery is the most mature of the three categories. What began as surreal, dream-like distortions has evolved into photorealistic commercial assets, vector graphics, and precise editing capabilities.

Beyond Text-to-Image: The Era of Control

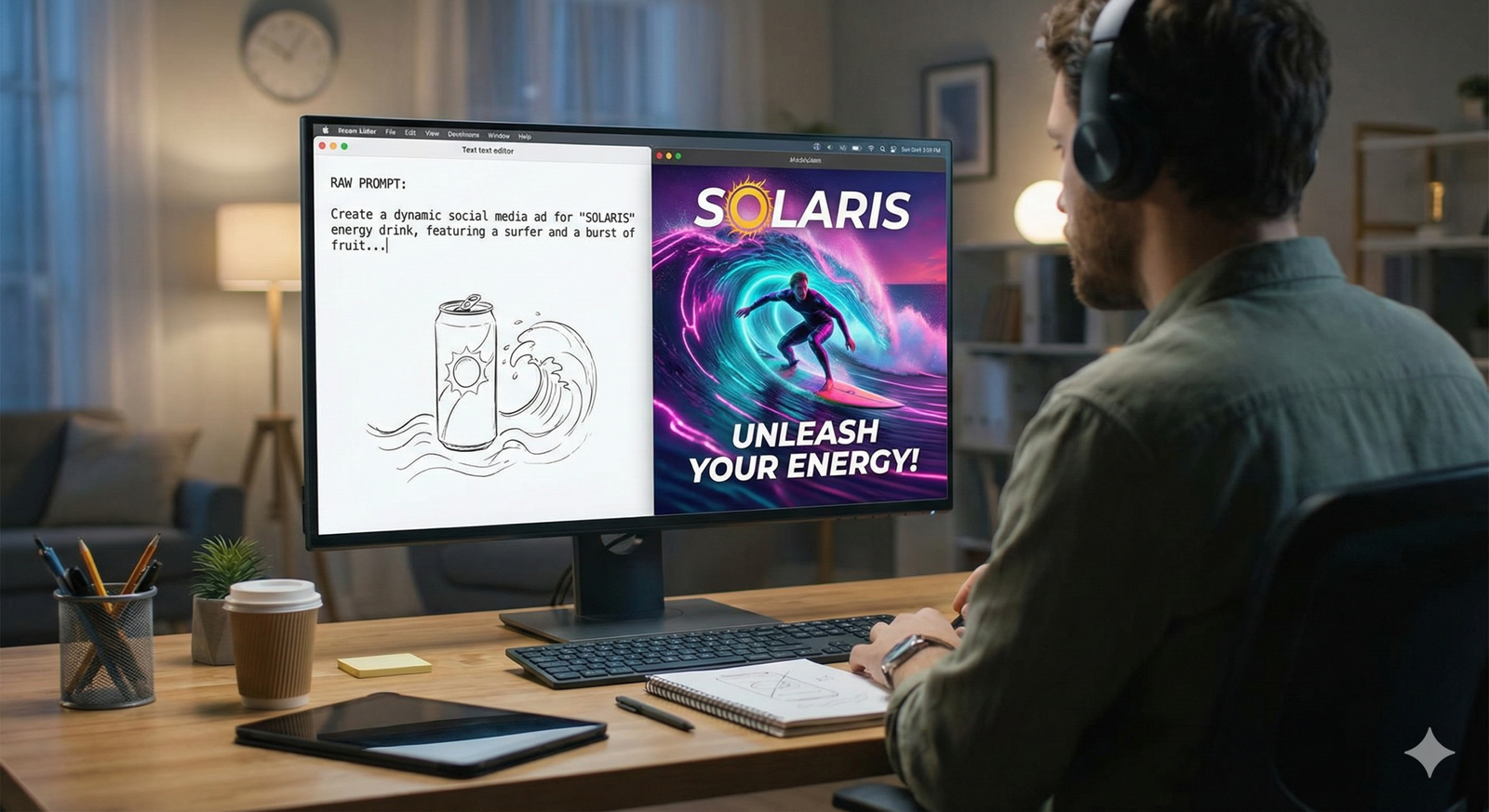

Early generative AI (circa 2022-2023) was chaotic. You rolled the dice and hoped for a good result. Today, the industry standard is controllable generation.

- Structure Guidance (ControlNet/Adapters): Professionals now use “structural references” alongside text prompts. For example, a fashion designer can sketch a rough outline of a dress and ask the AI to render it in denim, silk, or leather. The AI closely follows the geometry of the sketch while generating the texture and lighting. This addresses the “randomness” problem that plagued early adoption.

- In-Painting and Out-Painting: Generative fill technology allows editors to extend the borders of a photograph (out-painting) or replace specific elements within it (in-painting) with context-aware pixels. This has reduced tasks that previously took hours of manual clone-stamping in Photoshop to seconds.

- Style Training (LoRAs): Studios can now train small, lightweight add-on weights (called LoRAs, from Low-Rank Adaptation) on top of a base model, using their specific brand assets or characters. This helps every generated image adhere to the brand’s color palette and character design, a critical requirement for corporate consistency.
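For the in-painting described above, the final compositing step uses the mask to decide which pixels come from the generated result and which are kept from the original. This is a minimal sketch that assumes grayscale pixel grids and a soft mask running from 0 (keep original) to 1 (use generated); actual in-painting models also condition the generation itself on the unmasked surroundings, which this simple blend does not show. The pixel values are hypothetical.

```python
def composite(original: list[list[float]], generated: list[list[float]],
              mask: list[list[float]]) -> list[list[float]]:
    """Per-pixel blend: mask * generated + (1 - mask) * original."""
    return [
        [m * g + (1.0 - m) * o for o, g, m in zip(row_o, row_g, row_m)]
        for row_o, row_g, row_m in zip(original, generated, mask)
    ]


original = [[0.2, 0.2, 0.2], [0.2, 0.9, 0.2]]   # 0.9 marks the object being replaced
generated = [[0.3, 0.3, 0.3], [0.3, 0.6, 0.3]]  # model output for the masked region
mask = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.5]]       # 0.5 = feathered edge of the mask
print(composite(original, generated, mask))
```

Feathered (fractional) mask edges are what let the new content blend into the photo instead of showing a hard seam.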
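The “low-rank” in LoRA describes how the adaptation is stored: instead of retraining a full weight matrix W (d × k), LoRA freezes W and learns two thin matrices B (d × r) and A (r × k), applying W + (alpha / r) · BA as in the original LoRA formulation. This minimal sketch shows the merge on a tiny matrix and the parameter savings for an assumed 1024 × 1024 layer at rank 8; real LoRAs apply this to many layers of a base model, and the sizes here are illustrative.

```python
Matrix = list[list[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain matrix product of x (n × m) and y (m × p)."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def merge_lora(w: Matrix, b: Matrix, a: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * B @ A, where r is the shared inner (rank) dimension."""
    r = len(a)  # A is r × k
    delta = matmul(b, a)
    scale = alpha / r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))] for i in range(len(w))]


def lora_param_count(d: int, k: int, r: int) -> tuple[int, int]:
    """Trainable parameters for full fine-tuning vs. a rank-r LoRA on one d × k layer."""
    return d * k, r * (d + k)


# Tiny worked merge: a 2 × 2 frozen weight with a rank-1 update.
w = [[1.0, 0.0], [0.0, 1.0]]
b = [[1.0], [2.0]]   # d × r = 2 × 1
a = [[0.5, 0.5]]     # r × k = 1 × 2
print(merge_lora(w, b, a, alpha=1.0))  # [[1.5, 0.5], [1.0, 2.0]]

full, lora = lora_param_count(1024, 1024, 8)
print(full, lora, f"{lora / full:.2%}")
```

Because only B and A are saved, a style or character LoRA is a small file that can be swapped in and out of the same base model.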

The Death of “Stock” and the Rise of “Bespoke”

The stock photography market has undergone a radical contraction. Creative directors no longer need to search for hours to find a “business meeting with diversity in a sunlit room.” They simply generate it.

This shift has two profound effects:

- Hyper-Specificity: Brands can create imagery that perfectly matches their campaign without the compromise of generic assets.

- Cost Displacement: Budget previously allocated to licensing fees is shifting toward “AI Operators” or “Synthetic Media Artists” who can generate, upscale, and retouch these assets.

Ethical Considerations in Image Generation

The visual sector faces the fiercest copyright battles. Because many models were trained on billions of scraped images, the legal standing of AI-generated art remains complex.

- Copyrightability: As of early 2026, in many major jurisdictions (including the US), purely AI-generated images without significant human modification cannot be copyrighted. This limits the utility of raw AI output for major intellectual property (IP) creation.

- Opt-Out Mechanisms: New tools and conventions have emerged that let artists “poison” their work or tag it with “do not train” signals to keep it out of training data, though enforcement remains a technological arms race.

2. The Video Revolution: Motion, Physics, and Consistency

If image generation is mature, video generation is the explosive frontier. Moving from static pixels to temporal sequences introduces dramatically more complexity: the AI must understand not just what a cat looks like, but how a cat moves, how light shifts over time, and how object permanence works.

Solving the “Flicker” Problem

For years, AI video suffered from severe temporal instability—objects would morph, backgrounds would boil, and faces would distort from frame to frame. Modern video models have largely solved this through temporal attention mechanisms.

These mechanisms allow the model to “remember” what the subject looked like in frame 1 while generating frame 24. This consistency allows for the creation of:

- coherent character acting, where facial features remain stable during dialogue.

- consistent lighting physics, where shadows move realistically as objects rotate.
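A minimal sketch of the idea behind temporal attention, assuming each frame has already been reduced to a small feature vector: scaled dot-product attention lets the features being generated for one frame draw on other frames, weighted by similarity. Real video models attend over many spatial patches per frame and use learned query, key, and value projections, which are omitted here; the frame features are hypothetical.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: list[float], keys: list[list[float]],
           values: list[list[float]]) -> tuple[list[float], list[float]]:
    """Scaled dot-product attention of one query over a sequence of frames."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    out = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return out, weights


# Hypothetical per-frame features: frames 1 and 3 show the same subject, frame 2 a cut-away.
frames = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
current = [1.0, 0.0]  # features for the frame being generated
out, weights = attend(current, keys=frames, values=frames)
print([round(w, 3) for w in weights])  # the two matching frames receive the most weight
```

The output vector is a similarity-weighted mix of earlier frames, which is how the subject’s appearance in frame 1 can still shape frame 24.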

Text-to-Video vs. Image-to-Video

While “Text-to-Video” gets the headlines, Image-to-Video is the professional workflow of choice.

- The Workflow: A creator generates a perfect “hero frame” using image tools (where control is highest). They then feed this image into a video model to animate it.

- Why it matters: This grants the director control over the composition, lighting, and character design before motion is applied. It bridges the gap between concept art and final animation.

Use Cases in Production

- Pre-visualization (Pre-vis): Directors can generate animated storyboards to communicate camera moves and lighting to the crew before a physical set is built. This can save substantial sums in production logistics.

- B-Roll Generation: Documentary and corporate video editors use AI to generate specific B-roll footage (e.g., “microscope zooming into a cell”) that would be too expensive to shoot or buy.

- VFX and Cleanup: AI is replacing manual rotoscoping (tracing and isolating objects frame by frame). Automated object removal and background replacement are now standard features in editing software.

The “Uncanny Valley” of Motion

Despite progress, AI video still struggles with complex physical interactions. A generated video of a person walking is often convincing; a video of a person interacting with water or complex cloth physics often reveals “hallucinations” where limbs merge with objects. This limitation currently keeps AI video primarily in the realm of short clips, surreal music videos, and background assets rather than full narrative cinema.

3. Composing the Invisible: AI in Music and Audio

Music generation has historically lagged behind visual AI because of the density of high-fidelity audio: 44.1 kHz audio contains 44,100 samples per second per channel, far denser in time than video’s typical 24 to 60 frames per second. However, the gap has closed significantly.
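A quick back-of-the-envelope comparison shows the difference in temporal density. It assumes a three-minute stereo track at 44.1 kHz and a three-minute video at 24 frames per second; both are common settings chosen for illustration.

```python
SAMPLE_RATE_HZ = 44_100  # CD-quality audio, samples per second per channel
CHANNELS = 2             # stereo
FRAME_RATE_FPS = 24      # common cinema frame rate
DURATION_S = 3 * 60      # a three-minute piece

audio_samples = SAMPLE_RATE_HZ * CHANNELS * DURATION_S
video_frames = FRAME_RATE_FPS * DURATION_S

print(f"audio samples: {audio_samples:,}")  # audio samples: 15,876,000
print(f"video frames:  {video_frames:,}")   # video frames:  4,320
print(f"audio time steps per video frame: {SAMPLE_RATE_HZ // FRAME_RATE_FPS:,}")  # 1,837
```

A single video frame carries far more values than a single audio sample, so the comparison is about how many sequential steps a model must keep coherent, not about total data volume.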

From MIDI to Raw Audio

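Older AI music tools generated MIDI (note and timing data, akin to digital sheet music), which often sounded mechanical when played back through virtual instruments. Modern generative audio models work at the audio level: they produce the actual sound, either directly as waveforms or as compressed audio representations that are decoded into waveforms, capturing the breath of a saxophonist, the scratch of a guitar string, and the acoustics of a room.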

The Three Pillars of Audio AI

- Text-to-Music: Users prompt “Lo-fi hip hop beat with a melancholic jazz piano” and receive a fully mixed track.

- Stem Separation: AI can deconstruct a mixed song back into its constituent parts (vocals, drums, bass, instruments). This creates massive opportunities for sampling and remixing but raises significant legal questions regarding derivative works.

- Voice Cloning and Synthesis: Text-to-Speech (TTS) output can now be difficult to distinguish from human speech, including its emotional inflection. Actors can license their voice prints, allowing studios to generate dialogue for video games or dubbing without the actor being present.

The “Suno” Moment in Audio

Just as Midjourney changed images, tools that allow full lyrical and vocal generation have disrupted music. Users can type lyrics, specify a genre, and receive a radio-quality song with vocals.

Who this impacts:

- Background Music (BGM) Libraries: Similar to stock photos, generic BGM is being replaced by custom-generated tracks that fit the exact length and mood of a video.

- Demo Production: Songwriters use AI to rapidly prototype arrangements and melodies before recording with real musicians.

The Authenticity Debate

Music is deeply tied to human connection and performance. While AI can mimic the sound of a blues guitar, it cannot mimic the intent. Consequently, AI music is currently finding its home in functional audio (advertising, background tracks, game loops) rather than replacing artist-driven album releases. Audiences draw a value distinction between “content audio” and “human expression.”

4. Multimodal Workflows: The New Creative Pipeline

The true power of generative AI lies not in using these tools in isolation, but in chaining them together. We are witnessing the rise of the One-Person Studio.

The Integrated Pipeline

A typical multimodal workflow in 2026 looks like this:

- Scripting: An LLM (Large Language Model) helps structure the script and generate scene descriptions.

- Visual Development: An image generator creates character sheets and environment backgrounds based on the script.

- Animation: An image-to-video model animates the static backgrounds and characters.

- Voice: A voice synthesis model reads the dialogue using a specific character persona.

- Lip Sync: A specialized AI tool maps the generated audio to the video character’s mouth movements.

- Scoring: An audio generator creates a musical score that matches the emotional beat of the scene (often analyzing the video content to time the drops).

Efficiency vs. Quality

This pipeline allows a single creator to produce content that would have previously required a team of ten. However, the quality ceiling is often lower than manual production. The skill of the modern creator lies in identifying which parts of the pipeline can be automated (e.g., background textures) and which require manual intervention (e.g., the hero’s emotional monologue).

The Role of Metadata

In these workflows, metadata becomes the glue. Carrying the “seed” numbers and prompt history from the image stage to the video stage helps maintain consistency. Software platforms are increasingly integrating these tools into a single dashboard to manage this data flow, preventing the “broken telephone” effect where the style drifts as assets move between tools.
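A minimal sketch of that handoff, assuming hypothetical stage names and a simple record format rather than any particular platform’s schema: each stage appends its prompt to a shared history and reuses the stored seed, and a seeded random generator reproduces identical starting noise whenever the same seed is used.

```python
import json
import random
from dataclasses import asdict, dataclass, field


@dataclass
class AssetRecord:
    """Hypothetical metadata that travels with an asset between pipeline stages."""
    seed: int
    style_notes: str
    prompt_history: list[dict] = field(default_factory=list)

    def log(self, stage: str, prompt: str) -> None:
        self.prompt_history.append({"stage": stage, "prompt": prompt})


def initial_noise(seed: int, size: int = 4) -> list[float]:
    """Seeded starting noise: the same seed always yields the same values."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


record = AssetRecord(seed=1234, style_notes="muted teal palette, 35mm film grain")
record.log("visual_development", "hero frame: detective under neon sign, " + record.style_notes)
record.log("animation", "slow push-in, rain falling, " + record.style_notes)

# Downstream tools reuse the stored seed instead of drawing a fresh random one.
print(initial_noise(record.seed) == initial_noise(record.seed))  # True
print(json.dumps(asdict(record), indent=2))  # serializable sidecar for the next tool
```

A shared seed only reproduces results when the downstream model and settings are the same; across different models it mainly documents provenance, which is why the prompt history and style notes travel with it.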

5. Tools and Platforms Driving the Change

The ecosystem is vast, but it generally falls into three tiers of tools.

Tier 1: The Foundation Models (The Engines)

These are the raw, powerful models often accessed via Discord, APIs, or web interfaces.

- Visual: Midjourney, OpenAI’s DALL-E, Stability AI’s Stable Diffusion.

- Video: Runway Gen-series, OpenAI’s Sora (and its successors), Luma Dream Machine.

- Audio: Suno, Udio, ElevenLabs.

Pros: Highest quality, cutting-edge features. Cons: Steep learning curve, often lack workflow integration.

Tier 2: The Integrated Suites (The Workstations)

Established creative software giants embedding AI into familiar interfaces.

- Adobe Firefly: Integrated directly into Photoshop and Premiere. Adobe positions it as “commercially safe” because it was trained on licensed content such as Adobe Stock and on public-domain material, making it the default for many enterprises.

- Canva: Focuses on democratizing design with “Magic” tools for non-professionals.

- DaVinci Resolve: Uses “Neural Engine” features for complex video tasks like isolation and speed ramping.

Pros: Seamless workflow, legal safety. Cons: Sometimes lag behind the raw power of Tier 1 models.

Tier 3: The Specialized Utilities (The Surgeons)

Tools built for one specific task.

- Upscalers: Tools like Topaz Labs or Magnific AI that hallucinate detail to turn low-res generations into 4K assets.

- Lip Sync: Tools like HeyGen or Synclabs that specialize purely in matching mouth movement to audio.

6. Ethical Challenges and Copyright

The transformation of multimedia creativity is overshadowed by legal and ethical gray areas.

The “Fair Use” Battleground

As of 2026, the core legal question remains: Is training an AI model on copyrighted work “fair use”?

- Tech Companies argue: It is like a human student studying paintings in a museum to learn to paint. It learns concepts, not copies.

- Artists/Rights Holders argue: It is a commercial compression of their labor that directly competes with them in the marketplace.

Several high-profile lawsuits are ongoing. Many observers expect the outcome to push the industry toward a licensing ecosystem in which AI companies pay royalties to major IP holders (music labels, stock libraries, news publishers) for training data.

Deepfakes and Reality Apathy

In video and audio, the ability to clone voices and likenesses poses severe risks. “Reality Apathy” is a growing phenomenon where audiences become indifferent to video evidence because “it could be AI.”

- Provenance and Watermarking Standards: Initiatives like the C2PA (Coalition for Content Provenance and Authenticity) define cryptographically signed, tamper-evident provenance metadata (“Content Credentials”) that records how a piece of content was created, often complemented by invisible watermarks. Major social platforms now label AI content, either automatically or via mandatory user disclosure.
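C2PA itself relies on certificate-based digital signatures over a structured manifest. The sketch below deliberately swaps in a much simpler technique, an HMAC with a shared secret key from Python’s standard library, purely to illustrate what “tamper-evident” means: any change to the content or to its provenance claims breaks verification. The key, manifest fields, and generator name are hypothetical, and this is not the C2PA format.

```python
import hashlib
import hmac
import json

SECRET_KEY = b"demo-signing-key"  # hypothetical; real provenance systems use private keys and certificates


def sign_manifest(content: bytes, claims: dict) -> dict:
    """Bind provenance claims to a hash of the content, then authenticate both."""
    manifest = {"content_sha256": hashlib.sha256(content).hexdigest(), **claims}
    payload = json.dumps(manifest, sort_keys=True).encode()
    tag = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return {"manifest": manifest, "tag": tag}


def verify(content: bytes, signed: dict) -> bool:
    manifest = signed["manifest"]
    if hashlib.sha256(content).hexdigest() != manifest["content_sha256"]:
        return False  # the pixels or audio changed after signing
    payload = json.dumps(manifest, sort_keys=True).encode()
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signed["tag"])  # False if claims were edited


image_bytes = b"placeholder image bytes"
signed = sign_manifest(image_bytes, {"generator": "example-image-model", "ai_generated": True})
print(verify(image_bytes, signed))            # True
print(verify(image_bytes + b"edit", signed))  # False: content altered
signed["manifest"]["ai_generated"] = False
print(verify(image_bytes, signed))            # False: claim altered
```

Public-key signatures, as used by C2PA, let anyone verify a credential without holding the secret, which a shared-key HMAC cannot offer.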

Bias and Representation

Generative models amplify the biases present in their training data. If the internet contains mostly photos of male CEOs, the AI will default to generating male CEOs unless explicitly prompted otherwise. Creative directors must be hyper-vigilant against “default bias” to ensure inclusive and accurate representation in generated multimedia.

7. The Human Role: Adapting to the AI Studio

Does this make the human artist obsolete? History suggests otherwise. The camera did not kill painting; it freed painting from realism and birthed Impressionism. AI is doing the same for multimedia.

New Job Titles and Skills

- The AI Art Director: Someone who may not be able to draw, but understands composition, lighting, art history, and how to “speak” to the model to extract a specific vision.

- The Editor-Curator: As generation becomes instant, the bottleneck moves to selection. The ability to look at 100 generated variations and instantly identify the one that fits the brand narrative is a high-value skill.

- The Technical Artist (Pipeline Architect): Professionals who can chain together different AI tools, write Python scripts to automate handoffs, and train custom LoRA models.

The “Human Premium”

As synthetic media floods the internet, verified human-made content is accruing a premium status. “Handmade” or “Human-written” is becoming a marketing differentiator, much like “organic” food. In music and film, we are seeing a bifurcation: low-stakes content (social media filler, internal comms) goes AI, while high-stakes content (feature films, albums) leans heavily into human craftsmanship to signal value.

Conclusion

Generative AI is not merely a new tool in the multimedia toolbox; it is a new substrate for creativity. It has lowered the barrier to entry for production, allowing anyone with an idea to visualize it. However, it has raised the barrier for mastery.

The future belongs to the hybrid creative: the illustrator who uses AI to generate textures, the filmmaker who uses AI for pre-vis, and the musician who uses AI for sound design. These professionals treat generative AI not as a replacement for their taste, but as an infinite multiplier of their effort.

As we move forward, the novelty of “look what the AI made” will fade. The focus will return to the fundamentals of storytelling and emotion. The tools have changed, but the mandate remains the same: to make the audience feel something.

Next Steps for Creatives

- Audit Your Workflow: Identify the repetitive, low-value tasks in your creative process (e.g., masking, storyboarding, texture hunting) and test AI tools to automate them.

- Learn the Language: Dedicate time to understanding prompting syntax and parameters (like “guidance scale” in diffusion-based image and video tools, or “temperature” in language and some audio models). This is the new grammar of creation.

- Establish Ethics: Define your personal or agency-level policy on AI usage. Be transparent with clients about when and how AI is used in your deliverables.
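The two parameters named under “Learn the Language” control different things. This is a minimal sketch using toy numbers rather than real model outputs: classifier-free guidance (the usual meaning of “guidance scale” in diffusion tools) pushes the model’s prediction away from an unconditioned guess and toward the prompt-conditioned one, while temperature rescales a model’s scores before sampling, as in language models and many token-based audio models.

```python
import math


def apply_guidance(uncond: list[float], cond: list[float], scale: float) -> list[float]:
    """Classifier-free guidance: uncond + scale * (cond - uncond)."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Lower temperature sharpens the distribution; higher temperature flattens it."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Guidance: scale 1.0 returns the conditioned prediction; larger values exaggerate the prompt's pull.
print(apply_guidance([0.0, 0.0], [0.2, -0.1], 1.0))  # [0.2, -0.1]
print(apply_guidance([0.0, 0.0], [0.2, -0.1], 7.5))

# Temperature: the same scores become more or less "adventurous".
logits = [2.0, 1.0, 0.1]
for t in (0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

In practice, very high guidance tends to trade variety for prompt adherence, and very high temperature trades coherence for surprise, so both are worth sweeping rather than setting once.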

FAQs

1. Can I copyright AI-generated images or music? In the US and many other jurisdictions, you generally cannot copyright content generated purely by AI with a simple prompt. However, if you significantly modify the output using human tools (like Photoshop or manual editing), the human-created elements may be copyrightable. The law is evolving, so consult an IP attorney for specific cases.

2. Will AI replace video editors and graphic designers? It is unlikely to replace the roles, but it will replace the tasks. Junior-level tasks like rote photo manipulation, basic video cutting, and generic asset creation are being automated. Professionals must upskill to become “creative directors” of these tools, focusing on strategy, complex storytelling, and final polish.

3. What is the difference between Midjourney and Stable Diffusion? Midjourney is a closed-source service known for its artistic, painterly aesthetic and ease of use via Discord/Web. Stable Diffusion is an open-weight model that can be installed locally on your own computer. Stable Diffusion offers far more control, privacy, and customization (via extensions like ControlNet) but requires more technical skill to set up.

4. How can I tell if a video or image is AI-generated? Look for visual inconsistencies: hands with too many fingers, text that is gibberish, inconsistent lighting shadows, or “dream-like” blending of objects. In video, watch for “morphing” background details or physics that don’t make sense (e.g., hair moving through a collar). Metadata tools like Content Credentials (CR) can also help identify AI origins.

5. Is it ethical to use an artist’s name in a prompt? This is a contentious ethical issue. While technically possible, many in the creative community consider it “style theft,” especially if you are competing in the same market as that living artist. A more ethical approach is to describe the style (e.g., “impressionist, thick impasto brushstrokes, vibrant pastel colors”) rather than using a specific artist’s name.

6. What hardware do I need to run generative AI locally? To run models like Stable Diffusion or local LLMs efficiently, you need a powerful GPU (Graphics Processing Unit). NVIDIA cards are the industry standard for AI. As of 2026, a card with at least 12GB (preferably 16GB+) of VRAM is recommended for video and high-res image generation.

7. How does AI music generation handle vocals? Modern AI uses neural audio synthesis to generate vocals. It doesn’t “splice” existing recordings; it generates the sound wave of a voice singing specific words based on training data. This allows for creating vocals in specific styles (e.g., “raspy soul singer”) without hiring a vocalist, though emotional nuance often requires many attempts.

8. What is “in-painting” in multimedia? In-painting is the process of using AI to edit a specific area within an existing image or video frame. You mask (highlight) the area you want to change and provide a prompt. For example, you could highlight a vase on a table and type “a bowl of fruit,” and the AI will generate the fruit with the correct lighting and perspective of the original photo.

9. Are there AI tools for 3D modeling? Yes. Generative AI is rapidly expanding into 3D. Tools can now take a text prompt or a 2D image and generate a 3D mesh with textures (Text-to-3D). While still less detailed than manual sculpting, they are excellent for creating background props and game assets quickly.

10. How do I prevent my art from being used to train AI? Tools like “Glaze” and “Nightshade” apply subtle, near-invisible perturbations to your images that disrupt how AI models interpret them: Glaze shields an artwork’s style from mimicry, while Nightshade “poisons” training data (for example, making a dog look like a cat to the model). Additionally, you can check platforms for “Do Not Train” settings, though these rely on the platform’s voluntary compliance.
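The VRAM guidance in the hardware question above can be sanity-checked with simple arithmetic: multiply a model’s parameter count by the bytes per parameter at the precision you run it in. This sketch counts weights only (activations, attention buffers, and video frames add substantially more), and the parameter counts are hypothetical round numbers, not specifications of any named model.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gib(num_params: float, precision: str) -> float:
    """Memory for model weights alone, in GiB (1 GiB = 2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 2**30


for params in (2e9, 8e9):  # hypothetical model sizes
    row = ", ".join(f"{p}: {weights_gib(params, p):.1f} GiB" for p in BYTES_PER_PARAM)
    print(f"{params / 1e9:.0f}B params -> {row}")
```

Halving the bytes per parameter halves the weight footprint, which is one reason reduced-precision and quantized checkpoints are popular on consumer GPUs.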
