In the traditional paradigm of artificial intelligence, data is king, and the castle is a massive, centralized server. For years, the standard approach to training deep neural networks involved collecting raw data from millions of users—photos, voice recordings, text messages—and uploading it all to a central data center. While effective, this model faces growing scrutiny regarding user privacy, data security, and the sheer bandwidth required to move exabytes of information across the globe.

Enter federated deep learning. This paradigm shift flips the standard model on its head: instead of bringing the data to the code, it brings the code to the data. By training models directly on edge devices—smartphones, IoT sensors, and local servers—organizations can build smarter AI systems without ever seeing the raw user data.

Key Takeaways

- Decentralization: Training occurs locally on edge devices rather than a central server.

- Privacy: Raw data never leaves the device; only model updates (weights/gradients) are shared.

- Efficiency: Reduces network bandwidth usage and latency by processing data where it is generated.

- Personalization: Enables models to learn from specific user behaviors without compromising anonymity.

- Challenges: Faces hurdles like device heterogeneity (different chips/batteries) and non-IID data distributions.

Scope of this Guide

In this guide, “federated deep learning” refers specifically to the distributed training of deep neural networks across multiple decentralized edge devices. We will cover the mechanics of the training loop, the privacy technologies that secure it, and the practical challenges of deploying these systems in the real world. We will not cover general cloud computing or centralized machine learning workflows except for comparison.

What is Federated Deep Learning?

Federated Deep Learning (FDL) is a subset of machine learning that enables multiple actors to build a common, robust deep learning model without sharing their raw data. It combines federated learning, the distributed approach to training, with deep learning, which involves multi-layered neural networks capable of learning complex patterns from vast datasets.

The Shift from Centralized to Decentralized

To understand FDL, one must first understand the limitations of centralized training. In a centralized setup, if you want to train a keyboard app to predict the next word a user will type, you must upload their typing history to your servers. This creates a “honey pot” of sensitive data that is attractive to hackers and concerning to privacy advocates.

FDL solves this by keeping the typing history on the phone. The phone downloads a generic model, improves it by learning from the local history, and then sends only the mathematical summary of those improvements (the “weights” or “gradients”) back to the central server. The server aggregates these summaries from millions of phones to create a better global model, which is then sent back to everyone.

Defining Edge Devices in this Context

When we discuss “edge devices” in FDL, we are referring to a broad spectrum of hardware that sits at the periphery of the network, closest to where data is created. This includes:

- Mobile Devices: Smartphones and tablets containing rich personal data (text, photos, location).

- IoT Sensors: Smart thermostats, industrial monitors, and security cameras.

- Wearables: Smartwatches and fitness trackers monitoring biometric data.

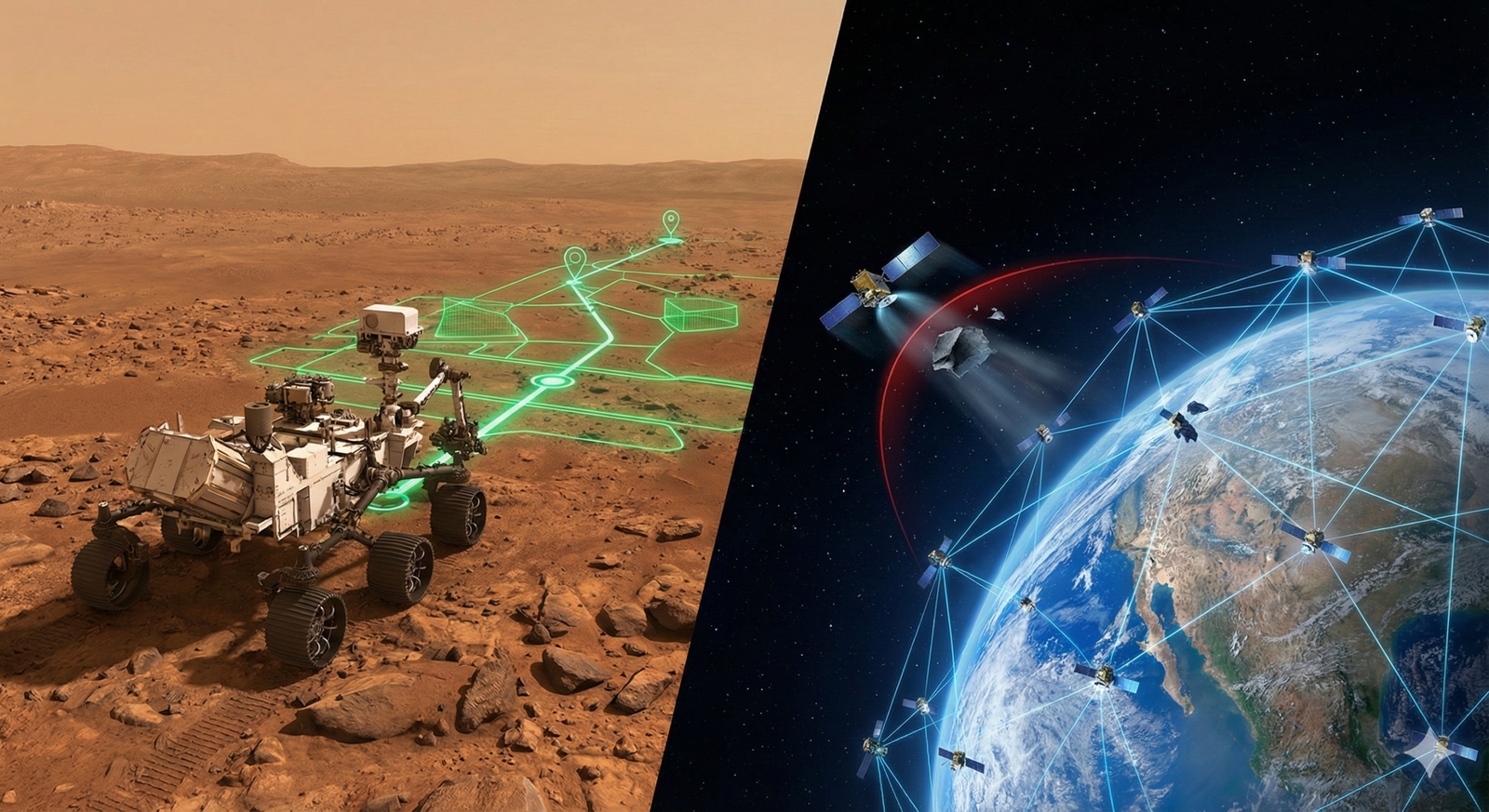

- Autonomous Machines: Drones and self-driving cars that process video feeds in real-time.

- Local Gateways: Small servers in hospitals or retail stores that act as a local hub for sensitive data.

How It Works: The Federated Learning Lifecycle

The process of federated deep learning is a cycle of communication and computation. It typically follows a “hub-and-spoke” topology where a central server orchestrates the training, but does not see the data.

Phase 1: Initialization and Selection

The central server initializes a global deep neural network. This could be a pre-trained model or a fresh model with random weights. The server then selects a subset of eligible edge devices to participate in the current training round. Eligibility is often determined by constraints: is the device plugged in? Is it on Wi-Fi? Is it idle?
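The eligibility check and sampling step can be sketched in a few lines. This is a minimal illustration, not how any particular production system does it: the `DeviceStatus` fields, the `select_clients` helper, and the device names are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class DeviceStatus:
    # Hypothetical status report a device might send when it checks in.
    device_id: str
    is_charging: bool
    on_unmetered_wifi: bool
    is_idle: bool


def is_eligible(device: DeviceStatus) -> bool:
    # A device qualifies only if every constraint holds.
    return device.is_charging and device.on_unmetered_wifi and device.is_idle


def select_clients(devices, clients_per_round, seed=None):
    # Randomly sample from the eligible devices; take all of them if
    # fewer than clients_per_round are eligible.
    rng = random.Random(seed)
    eligible = [d for d in devices if is_eligible(d)]
    k = min(clients_per_round, len(eligible))
    return rng.sample(eligible, k)


devices = [
    DeviceStatus("phone-a", True, True, True),
    DeviceStatus("phone-b", True, False, True),   # on mobile data
    DeviceStatus("phone-c", False, True, True),   # not charging
    DeviceStatus("phone-d", True, True, True),
]
selected = select_clients(devices, clients_per_round=2, seed=0)
print(sorted(d.device_id for d in selected))
```

Random sampling among eligible devices is only one possible policy; a real scheduler might also weigh time zone, model version, or how recently a device last participated.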

Phase 2: Distribution

The server sends the current global model parameters to the selected edge devices. This ensures every device starts the round with the same baseline knowledge.

Phase 3: Local Training (On-Device)

This is where the “deep learning” happens. Each edge device uses its local dataset to train the model. It performs forward and backward propagation, calculating the error (loss) and adjusting the model’s weights to minimize that error. Crucially, this training usually runs for only a few epochs (cycles) to avoid draining the device’s battery or monopolizing its processor.
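As a rough sketch of this phase, the snippet below runs a few epochs of mini-batch gradient descent on one device. A two-parameter linear model stands in for the deep network so the example stays short and runnable with NumPy; the function names, learning rate, and synthetic data are assumptions made for illustration.

```python
import numpy as np


def local_train(global_weights, features, targets,
                epochs=3, lr=0.05, batch_size=8, seed=0):
    # Start from a copy of the global model so the received weights stay intact.
    rng = np.random.default_rng(seed)
    w = global_weights.copy()
    n = len(features)
    for _ in range(epochs):
        order = rng.permutation(n)
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            x, y = features[idx], targets[idx]
            error = x @ w - y                      # forward pass
            grad = 2.0 * x.T @ error / len(idx)    # gradient of mean squared error
            w -= lr * grad                         # weight update
    return w


def mse(w, features, targets):
    return float(np.mean((features @ w - targets) ** 2))


# Synthetic "on-device" data; in practice this never leaves the device.
rng = np.random.default_rng(42)
features = rng.normal(size=(32, 2))
targets = features @ np.array([2.0, -1.0])

global_weights = np.zeros(2)
local_weights = local_train(global_weights, features, targets)
print(mse(global_weights, features, targets), mse(local_weights, features, targets))
```

With a real neural network, the inner loop would be replaced by the training step of whichever framework runs on the device; the structure (copy, a few epochs, return new weights) stays the same.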

Phase 4: Model Update and Upload

Once local training is complete, the device computes the difference between the old model and the new, locally trained model. It creates an “update” packet containing these weight changes (often called a model delta; some systems send gradients or the full updated weights instead). This packet is typically compressed and encrypted before it is sent back to the central server. The raw data remains on the device.

Phase 5: Aggregation

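The central server receives updates from the devices that took part in the round, which can number in the thousands or more in large deployments. It uses an aggregation algorithm, most commonly Federated Averaging (FedAvg), to combine these updates. FedAvg computes a weighted average of the client models, with each client weighted by the number of local training examples it used, to create a new global model that reflects the learnings of all participating devices.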
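The aggregation step can be sketched as follows, assuming each client reports a weight delta together with the number of local examples it trained on, in the spirit of the FedAvg weighting described by McMahan et al. The `fedavg` function name and the sample numbers are illustrative.

```python
import numpy as np


def fedavg(global_weights, client_updates):
    # client_updates: list of (delta, num_examples) pairs, where delta is
    # the locally trained weights minus the global weights.
    total_examples = sum(n for _, n in client_updates)
    if total_examples == 0:
        return global_weights.copy()
    # Each delta counts in proportion to the data behind it.
    avg_delta = sum(delta * (n / total_examples) for delta, n in client_updates)
    return global_weights + avg_delta


global_weights = np.array([1.0, 1.0])
client_updates = [
    (np.array([0.4, 0.0]), 10),   # small local dataset
    (np.array([0.0, 0.8]), 30),   # larger local dataset, three times the weight
]
new_global = fedavg(global_weights, client_updates)
print(new_global)  # [1.1 1.6]
```

Averaging deltas and adding them to the global model gives the same result as averaging the client weights directly when all clients start the round from the same global model.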

Phase 6: Iteration

The new global model replaces the old one, and the cycle repeats. Over many rounds, the model can approach the accuracy that would have been achieved if all the data had been centralized, although highly skewed (non-IID) client data can slow convergence or leave a gap.

The Role of Edge Devices in Modern AI

The proliferation of powerful edge hardware has been the catalyst for FDL. A decade ago, most mobile processors struggled to run even modest neural networks; today, many include dedicated Neural Processing Units (NPUs) and AI accelerators capable of running transformers and convolutional neural networks (CNNs).

Reducing Latency

Lower latency comes from on-device inference (making predictions) rather than from training itself. Training is the heavy lifting, but the ultimate goal is a responsive model. Because FDL keeps an up-to-date copy of the model on the device, predictions can be made locally, reducing the need for a round trip to the server for every prediction.

Bandwidth Conservation

In traditional learning, uploading a user’s entire photo library to the cloud to train a classification model consumes gigabytes of data. In FDL, the device might only send a few megabytes of model weights. For industrial IoT or remote locations with satellite connections, this reduction in bandwidth usage is not just a convenience—it is an operational necessity.
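A back-of-the-envelope comparison makes the difference concrete. Every number below is an assumption chosen for illustration (2,000 photos at 3 MB each, a model with one million 32-bit parameters); real photo libraries and models vary widely.

```python
def payload_megabytes(num_values, bytes_per_value):
    # Size of an uncompressed payload in megabytes (1 MB = 1,000,000 bytes).
    return num_values * bytes_per_value / 1_000_000


# Centralized: upload the raw photo library once (hypothetical sizes).
photo_upload_mb = 2_000 * 3.0

# Federated: upload one model update per round (1M float32 parameters).
update_mb = payload_megabytes(num_values=1_000_000, bytes_per_value=4)

print(photo_upload_mb)               # 6000.0
print(update_mb)                     # 4.0
print(photo_upload_mb / update_mb)   # 1500.0
```

The comparison is per round. A device that participates in many rounds uploads the update each time, so the saving shrinks as the number of rounds grows and as the model gets larger.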

Key Benefits of Decentralized Training

Adopting a federated approach offers strategic advantages beyond just “it’s cool technology.” It addresses fundamental bottlenecks in the scalability of AI.

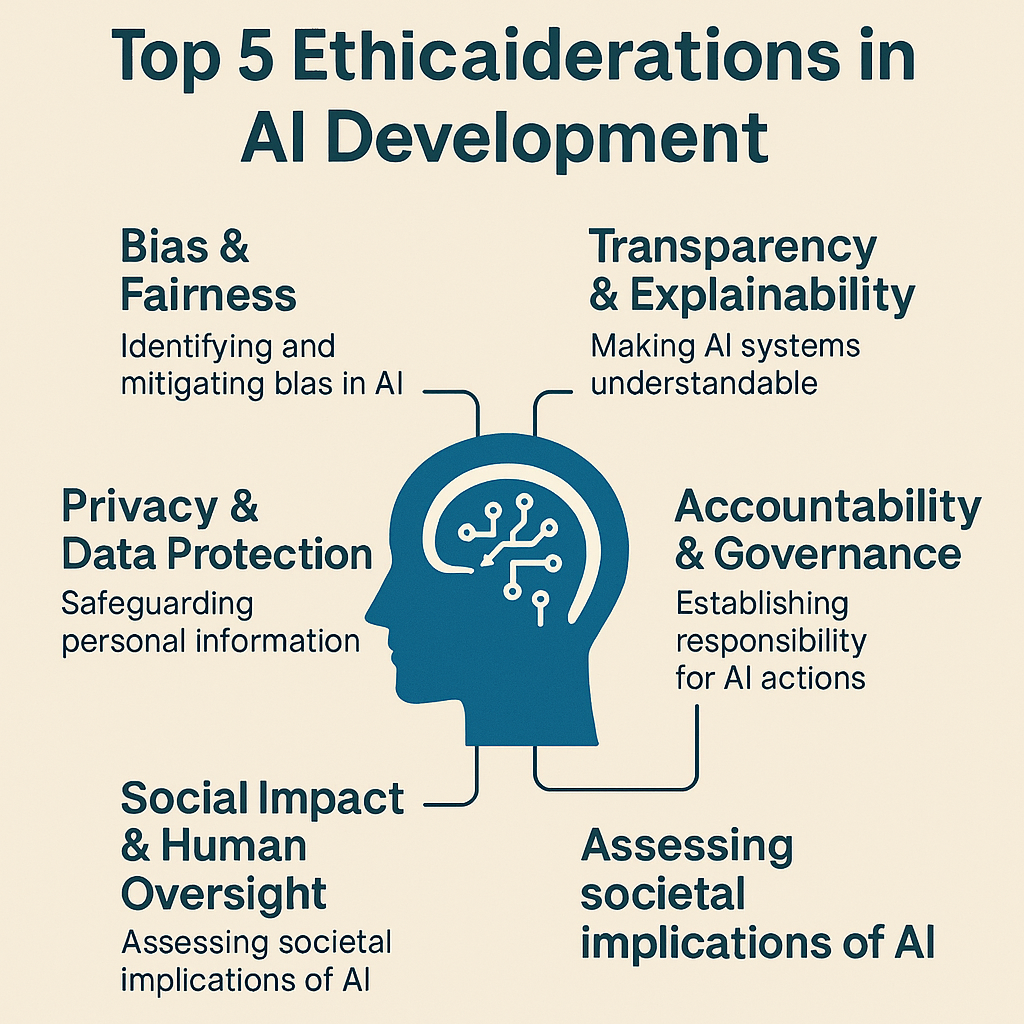

Data Privacy and Security

The most significant driver for FDL is privacy. By design, it minimizes the attack surface. If a central server is compromised, the attacker steals a model, not a database of user secrets. For industries like healthcare and finance, where privacy and data protection regulations (such as GDPR or HIPAA) are strict, FDL offers a pathway to train models on data that cannot readily leave the premises.

Access to Siloed Data

Much of the world’s most valuable data is trapped in silos. Hospitals cannot share patient records with each other; banks cannot share transaction histories. Federated learning allows these institutions to collaborate on a shared model without ever exposing their proprietary or sensitive data to competitors or third parties.

Real-Time Continuous Learning

Centralized models often suffer from “data drift”: the model becomes outdated as the world changes, and retraining requires a massive new data collection effort. FDL enables continuous learning. As user behavior changes (e.g., new slang words appear), edge devices encounter this data immediately, learn from it, and feed it into the global model over the following training rounds.

Privacy Mechanisms Behind the Scenes

While keeping data local is a massive privacy win, it is not a silver bullet. Sophisticated attackers can sometimes “reverse engineer” the raw data by analyzing the model updates sent by a device (known as a model inversion or reconstruction attack). To combat this, FDL is often paired with advanced privacy-enhancing technologies (PETs).

Secure Aggregation

Secure Aggregation is a cryptographic protocol that allows the server to compute the sum of the model updates without seeing the individual updates themselves. Imagine a room of people who want to calculate their average salary without revealing their individual salaries. They could use a protocol where they add random numbers to their salaries in a specific way so that the random numbers cancel out when summed, leaving only the total salary. Secure aggregation ensures the server sees only the aggregate result, not the contribution of any single phone.
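The cancelling-masks idea can be sketched with pairwise random masks over integers modulo a fixed value. This is a simplification under stated assumptions: updates are already quantized to small non-negative integers, a single seeded generator stands in for the pairwise key agreement that real protocols use, and no device drops out. Production protocols such as the one by Bonawitz et al. add secret sharing to cope with dropouts.

```python
import numpy as np

MODULUS = 2**32  # all arithmetic is done modulo this value


def pairwise_masks(num_clients, dim, seed=0):
    # For every pair (i, j), client i adds a shared random vector and
    # client j subtracts it, so all masks sum to zero modulo MODULUS.
    rng = np.random.default_rng(seed)
    masks = np.zeros((num_clients, dim), dtype=np.int64)
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            shared = rng.integers(0, MODULUS, size=dim, dtype=np.int64)
            masks[i] = (masks[i] + shared) % MODULUS
            masks[j] = (masks[j] - shared) % MODULUS
    return masks


# Quantized updates from three devices (hypothetical values).
updates = np.array([[3, 10], [5, 20], [7, 30]], dtype=np.int64)
masks = pairwise_masks(num_clients=3, dim=2)

# Each device uploads only its masked update.
masked = (updates + masks) % MODULUS

# The server sums what it received; the masks cancel out.
server_sum = masked.sum(axis=0) % MODULUS
print(server_sum)  # [15 60]
```

Each masked row looks like random noise on its own, yet the sum equals the sum of the true updates.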

Differential Privacy

Differential Privacy (DP) adds mathematical noise to the data or the model updates. This noise is carefully calibrated to obscure whether any specific individual’s data was included in the training set, while still allowing the overall patterns to remain visible. In FDL, “Local Differential Privacy” involves the device adding noise to its update before sending it, usually after clipping the update so that no single device can have an outsized influence. This provides a mathematical guarantee of privacy, though it comes with a trade-off in model accuracy that grows as the guarantee is tightened.
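A common recipe is to clip each update to a maximum L2 norm and then add Gaussian noise scaled to that norm. The sketch below shows only this mechanical step; the `clip_norm` and `noise_multiplier` values are arbitrary, and the resulting privacy guarantee (the epsilon and delta values) depends on a separate accounting step that is not shown here.

```python
import numpy as np


def privatize_update(update, clip_norm, noise_multiplier, rng):
    # 1. Clip: scale the update down if its L2 norm exceeds clip_norm.
    norm = np.linalg.norm(update)
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    clipped = update * scale
    # 2. Add Gaussian noise whose standard deviation is tied to clip_norm.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=update.shape)
    return clipped + noise


rng = np.random.default_rng(0)
update = np.array([3.0, 4.0])  # L2 norm is 5.0

# With the noise switched off, only the clipping is visible.
print(privatize_update(update, clip_norm=1.0, noise_multiplier=0.0, rng=rng))  # [0.6 0.8]

# With noise on, the device would send this instead of the raw update.
noisy = privatize_update(update, clip_norm=1.0, noise_multiplier=1.0, rng=rng)
print(noisy)
```

Clipping bounds how much any one device can move the model, which is what lets the noise be calibrated in the first place.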

Homomorphic Encryption

Though computationally expensive and less common on battery-powered devices, homomorphic encryption allows computations to be performed on encrypted data without decrypting it first. In an FDL context, this could allow the server to aggregate encrypted updates, ensuring that even if the server is malicious or compromised, it cannot read the incoming weights.
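To make "aggregate encrypted updates" concrete, here is a toy version of the Paillier cryptosystem, an additively homomorphic scheme chosen for this illustration (the text above does not name a specific scheme). The primes are far too small to be secure, updates are assumed to be quantized to small non-negative integers, and everything runs in one script, whereas in a real deployment the aggregating server would not hold the decryption key. It needs Python 3.8 or newer for the modular inverse via `pow`.

```python
import math
import random

# Toy key material: illustrative only, never use primes this small.
P, Q = 1009, 1013
N = P * Q
N_SQ = N * N
PHI = (P - 1) * (Q - 1)
MU = pow(PHI, -1, N)  # modular inverse of PHI modulo N


def encrypt(message, rng):
    # message must be an integer in the range [0, N).
    r = rng.randrange(1, N)
    while math.gcd(r, N) != 1:
        r = rng.randrange(1, N)
    return (pow(N + 1, message, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def add_encrypted(c1, c2):
    # Multiplying ciphertexts adds the underlying plaintexts.
    return (c1 * c2) % N_SQ


def decrypt(ciphertext):
    x = pow(ciphertext, PHI, N_SQ)
    return ((x - 1) // N * MU) % N


rng = random.Random(0)
client_values = [12, 30, 7]  # one quantized weight change per client
ciphertexts = [encrypt(v, rng) for v in client_values]

# The aggregator combines ciphertexts without decrypting any of them.
total = ciphertexts[0]
for c in ciphertexts[1:]:
    total = add_encrypted(total, c)

print(decrypt(total))  # 49
```

Even in this toy form, each ciphertext is a number on the order of N squared for a single small integer, which hints at why the approach is costly for models with millions of parameters.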

Technical Challenges and Constraints

Implementing federated deep learning is significantly more complex than centralized training. Engineers must contend with an environment they do not control.

System Heterogeneity

In a data center, hardware is largely uniform. On the edge, diversity is the rule.

- Hardware diversity: One user has the latest iPhone; another has a five-year-old budget Android. Their computation speeds differ vastly.

- Network diversity: Some devices are on 5G; others are on spotty 3G or restricted corporate Wi-Fi.

- Availability: Devices drop out of the network unpredictably. If a device runs out of battery mid-training, its update is lost. The aggregation algorithm must be robust enough to handle these “stragglers” and dropouts without stalling the global training round.
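One simple way to tolerate stragglers and dropouts is to close the round at a deadline and proceed only if enough devices reported in time. The sketch below assumes a hypothetical `reports` mapping from device name to arrival time in seconds, with `None` for a device that never reported; the deadline and quorum values are arbitrary.

```python
def collect_round(reports, deadline_s, min_reports):
    # Keep only the updates that arrived before the deadline.
    on_time = [
        client_id
        for client_id, arrival_s in reports.items()
        if arrival_s is not None and arrival_s <= deadline_s
    ]
    # Abandon the round if too few devices reported; the server would
    # then keep the current global model and start a new round.
    if len(on_time) < min_reports:
        return None
    return sorted(on_time)


reports = {
    "phone-a": 12.0,
    "phone-b": None,    # battery died mid-training
    "phone-c": 95.0,    # straggler on a slow connection
    "phone-d": 30.0,
}
print(collect_round(reports, deadline_s=60, min_reports=2))  # ['phone-a', 'phone-d']
print(collect_round(reports, deadline_s=60, min_reports=3))  # None
```

Servers often select more devices than they strictly need for a round so that a quorum is still reached after some of them drop out.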

Statistical Heterogeneity (Non-IID Data)

In centralized training, data is usually shuffled so that every batch resembles the overall distribution, which approximates the Independent and Identically Distributed (IID) assumption. In FDL, data is typically non-IID.

- The Problem: Your smartphone’s photo gallery contains photos of your life (e.g., lots of dog photos). Your neighbor’s phone has photos of their life (e.g., landscapes). Neither dataset represents the global distribution of “all photos.”

- The Consequence: If a device trains only on dog photos, its update will pull the global model heavily toward predicting dogs, potentially “forgetting” what a cat looks like. This is known as “client drift.” Algorithms like FedProx or SCAFFOLD are designed specifically to limit this drift.
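FedProx limits client drift by adding a proximal term to the local objective that penalizes the distance between the local weights and the global weights received at the start of the round. The sketch below applies that idea to a small linear model; the function name, the value of `mu`, and the synthetic data are assumptions for illustration.

```python
import numpy as np


def fedprox_local_train(global_weights, features, targets, mu,
                        steps=50, lr=0.05):
    # Local objective: mean squared error + (mu / 2) * ||w - global_weights||^2
    w = global_weights.copy()
    for _ in range(steps):
        grad_loss = 2.0 * features.T @ (features @ w - targets) / len(features)
        grad_prox = mu * (w - global_weights)   # pulls w back toward the global model
        w -= lr * (grad_loss + grad_prox)
    return w


# A device whose local data points far away from the current global model.
rng = np.random.default_rng(0)
features = rng.normal(size=(40, 2))
targets = features @ np.array([3.0, -2.0])
global_weights = np.zeros(2)

plain = fedprox_local_train(global_weights, features, targets, mu=0.0)
prox = fedprox_local_train(global_weights, features, targets, mu=1.0)

drift_plain = np.linalg.norm(plain - global_weights)
drift_prox = np.linalg.norm(prox - global_weights)
print(drift_plain, drift_prox)
```

Setting `mu` to zero recovers ordinary local training; larger values keep the device closer to the global model at the cost of fitting its own data less closely.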

Communication Efficiency

Deep learning models, especially Large Language Models (LLMs) or complex Vision Transformers, can be massive—gigabytes in size. Transmitting these full models back and forth to millions of devices is impractical. Techniques like model compression, quantization (reducing the precision of the numbers), and sparse updates (sending only the most important weight changes) are critical to making FDL viable for large models.
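Two of these techniques, sparse updates and quantization, can be combined in a few lines. The sketch keeps only the `k` largest-magnitude entries of an update and stores them as 8-bit integers with a single scale factor. Function names and sample values are illustrative, and `k` is assumed to be at least 1.

```python
import numpy as np


def top_k_sparsify(update, k):
    # Keep the indices and values of the k largest-magnitude entries.
    idx = np.argsort(np.abs(update))[-k:]
    return idx, update[idx]


def quantize_int8(values):
    # Map floats onto the int8 range [-127, 127] using one shared scale.
    max_abs = float(np.max(np.abs(values)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    return np.round(values / scale).astype(np.int8), scale


def restore(idx, quantized, scale, size):
    # Server side: rebuild a dense update, with zeros where nothing was sent.
    dense = np.zeros(size, dtype=np.float32)
    dense[idx] = quantized.astype(np.float32) * scale
    return dense


update = np.array([0.02, -1.5, 0.3, 0.0, 0.9, -0.05], dtype=np.float32)
idx, values = top_k_sparsify(update, k=2)
quantized, scale = quantize_int8(values)
restored = restore(idx, quantized, scale, size=len(update))

print(sorted(idx.tolist()))  # [1, 4]
print(restored)
```

The device sends two indices, two bytes, and one scale factor instead of six 32-bit floats. The restored update is an approximation, so the compression level has to be balanced against its effect on convergence.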

Frameworks and Tools for Implementation

Several open-source frameworks have emerged to standardize the implementation of FDL, abstracting away the complex networking and cryptography.

TensorFlow Federated (TFF)

Developed by Google, TFF is one of the most mature frameworks. It allows researchers to simulate federated learning on their local machines using existing TensorFlow models. It provides high-level APIs for defining the federated computation and low-level interfaces for implementing custom aggregation algorithms.

PySyft and PyGrid

Created by OpenMined, PySyft is a library for encrypted, privacy-preserving machine learning. It extends PyTorch and allows data owners to keep their data on their own queryable servers. It is heavily focused on the privacy aspect, integrating differential privacy and multi-party computation deeply into the workflow.

Flower (Flwr)

Flower is a friendly, unified approach to federated learning. It is framework-agnostic, meaning it works with PyTorch, TensorFlow, JAX, and scikit-learn. It is designed to be easy to deploy on diverse edge devices, including mobile (iOS/Android) and embedded systems, making it a popular choice for moving from research to production.

NVIDIA FLARE

NVIDIA’s Federated Learning Application Runtime Environment (FLARE) is geared towards enterprise and industrial use cases, particularly in healthcare. It emphasizes security and robust orchestration, supporting complex workflows where different sites might have vastly different compute capabilities.

Real-World Applications and Use Cases

FDL is moving out of the research lab and into the products we use daily.

Predictive Text and Smart Keyboards

The most famous example of FDL is Google’s Gboard (Android keyboard). It uses federated learning to improve next-word prediction and query suggestions. The model learns new words and phrasing trends from millions of users without Google ever reading their text messages. This also extends to emoji prediction and autocorrect personalization.

Healthcare and Medical Imaging

Hospitals generate massive amounts of sensitive data—MRI scans, pathology slides, genomic data. Privacy regulations usually prevent this data from leaving the hospital’s secure servers. FDL allows a consortium of hospitals to collaboratively train a tumor-detection model. Each hospital trains the model locally on its own patients and shares only the learnings. This results in a global model that is more accurate and less biased than any single hospital could produce alone.

Smart Homes and Voice Assistants

Smart speakers and home assistants process voice commands. FDL allows these devices to improve their “wake word” detection (“Hey Siri” or “Alexa”) locally. By learning from the specific acoustics of a user’s living room and their unique accent, the device becomes more responsive without streaming 24/7 audio to the cloud.

Autonomous Vehicles

Self-driving cars generate terabytes of data daily—far too much to upload. However, if one car encounters a rare edge case (e.g., a plastic bag floating in the wind that looks like an obstacle), it can learn from that encounter. Through federated learning, that lesson can be aggregated and shared with the entire fleet, making every car safer based on the experience of one, without transmitting full video logs.

Financial Fraud Detection

Banks use FDL to spot money laundering patterns. Since money launderers often move funds across different banks to hide their tracks, a single bank has a limited view. A federated model across multiple institutions can identify suspicious cross-bank patterns without forcing banks to share their client lists or transaction details with competitors.

Common Pitfalls and How to Avoid Them

Deploying FDL requires a shift in mindset. Here are common traps and solutions.

1. Overlooking the “Cold Start” Problem

An FDL system needs a decent global model to start with. If you send a completely random, untrained model to edge devices, the user experience (inference) will be terrible until several rounds of training occur.

- Solution: Pre-train the model on a small proxy dataset (public data) in the cloud to get it to a baseline level of competence before deploying it to the edge for federated fine-tuning.

2. Underestimating Battery Drain

Users will uninstall an app if it drains their battery. Running deep learning training on a main processor is energy-intensive.

- Solution: Implement strict eligibility checks. Only run training when the device is charging, connected to unmetered Wi-Fi, and the screen is off (idle mode). Use on-device AI accelerators (NPU/DSP) whenever possible.

3. Data Poisoning Attacks

In a decentralized system, a malicious actor could modify their local data (data poisoning) or the update packet itself (model poisoning) to sabotage the global model, for example by teaching a car to ignore stop signs.

- Solution: Use robust aggregation algorithms that detect and discard statistical outliers (updates that look significantly different from the majority) before averaging them into the global model.
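One simple robust aggregator is the coordinate-wise median, which replaces the mean with the median of each weight across clients. It is shown here as one example of the family, not as the recommended choice for every system; the update values are made up.

```python
import numpy as np


def median_aggregate(updates):
    # Take the median of each coordinate across all client updates.
    return np.median(np.stack(updates), axis=0)


updates = [
    np.array([0.10, 0.20]),
    np.array([0.12, 0.18]),
    np.array([0.09, 0.21]),
    np.array([50.0, -50.0]),   # poisoned update from a malicious client
]

mean_result = np.mean(np.stack(updates), axis=0)
median_result = median_aggregate(updates)

print(mean_result)     # dragged far away by the single outlier
print(median_result)   # [0.11 0.19]
```

A single extreme update shifts the mean by a large amount but barely moves the median. The median tolerates outliers only while honest clients remain the majority.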

The Future of Edge AI and Federated Learning

As we look toward the latter half of the 2020s, several trends will accelerate the adoption of FDL.

Hardware Acceleration

Chip manufacturers like Apple (Silicon), Qualcomm (Snapdragon), and Google (Tensor) are dedicating more silicon real estate to NPUs. These specialized cores are vastly more efficient at matrix multiplication (the core math of deep learning) than general CPUs. This will allow for training larger, more complex models on-device without overheating the phone.

Split Learning

Split learning is a variation where the deep neural network is split into two parts. The initial layers (which process raw data) remain on the device, while the deeper layers (which process abstract representations) sit on the server. The device sends “smashed data” (abstract features) to the server. This reduces the computational load on the device while maintaining a degree of privacy, as the server never sees the raw input.
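The forward pass of a split network can be sketched as two functions that run on different machines. The layer sizes and random weights below are arbitrary, and only inference is shown; during training, the server would also send gradients for the smashed data back to the device so that the on-device layers can be updated.

```python
import numpy as np

rng = np.random.default_rng(0)

# On-device layer: 4 raw input features to 3 hidden features.
client_layer = rng.normal(size=(4, 3))
# Server-side layer: 3 hidden features to 2 outputs.
server_layer = rng.normal(size=(3, 2))


def client_forward(raw_input):
    # Runs on the device. The ReLU activations are the "smashed data".
    return np.maximum(raw_input @ client_layer, 0.0)


def server_forward(smashed):
    # Runs on the server, which never sees raw_input.
    return smashed @ server_layer


raw_input = rng.normal(size=(5, 4))   # a batch of 5 private examples
smashed = client_forward(raw_input)   # this is what crosses the network
outputs = server_forward(smashed)

print(smashed.shape, outputs.shape)   # (5, 3) (5, 2)
```

The cut point is a design choice: placing it deeper keeps more computation and more information on the device, while placing it earlier lightens the device's load.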

Personalized Federated Learning

The goal is shifting from “one global model for everyone” to “one personalized model for you.” In this approach, the global federated model serves as a base, but the local device maintains a private set of “personalization layers” that never leave the device. This gives the user the best of both worlds: the general intelligence of the crowd and the specific nuances of their own habits.
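One way to picture this is a model stored as named layers, where some names are marked private. The device uploads only the shared layers and, when a new global model arrives, overwrites only those. The layer names `base` and `head` and the helper functions are hypothetical.

```python
import numpy as np

# Hypothetical names of the layers that never leave the device.
PRIVATE_LAYERS = {"head"}


def shared_part(local_model):
    # What the device uploads: everything except the private layers.
    return {name: w.copy() for name, w in local_model.items()
            if name not in PRIVATE_LAYERS}


def merge_global(local_model, global_shared):
    # Adopt the new global shared layers, keep the private layers as they are.
    merged = dict(local_model)
    for name, w in global_shared.items():
        if name not in PRIVATE_LAYERS:
            merged[name] = w.copy()
    return merged


local_model = {
    "base": np.array([0.5, 0.5]),   # shared feature extractor
    "head": np.array([9.0]),        # personalization layer
}

upload = shared_part(local_model)
print(list(upload))  # ['base']

new_global = {"base": np.array([0.7, 0.3])}
local_model = merge_global(local_model, new_global)
print(local_model["base"], local_model["head"])  # [0.7 0.3] [9.]
```

Local training can then update both parts, while only the shared part ever takes part in aggregation.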

Related Topics to Explore

If you are interested in the broader ecosystem of decentralized AI, consider exploring these related concepts:

- Edge Computing Architecture: Understanding the infrastructure (MEC – Multi-access Edge Computing) that supports low-latency data processing.

- Privacy-Preserving Machine Learning (PPML): A deeper dive into cryptography, multi-party computation (MPC), and trusted execution environments (TEEs).

- On-Device Training: The specifics of optimizing backpropagation algorithms for low-power ARM-based processors.

- Swarm Intelligence: How decentralized agents (like drones) communicate and coordinate without a central commander.

- TinyML: The field of running machine learning on ultra-low-power microcontrollers (devices with kilobytes of RAM).

Conclusion

Federated deep learning represents a necessary evolution in the lifecycle of artificial intelligence. As society becomes increasingly data-conscious and regulations tighten, the era of unrestrained data collection is drawing to a close. By moving the training process to the edge devices where data lives, we can resolve the tension between the need for smarter AI and the right to privacy.

For organizations, the transition to FDL is not just a technical upgrade; it is a commitment to data stewardship. It allows companies to unlock value from data they otherwise could not touch, reduces infrastructure costs, and builds trust with users. While challenges in heterogeneity and communication remain, the maturation of frameworks like TensorFlow Federated and Flower is lowering the barrier to entry. The future of AI is not in a massive server farm; it is in the millions of devices in our pockets, learning collaboratively, privately, and securely.

FAQs

Q: What is the main difference between distributed learning and federated learning? A: In standard distributed learning, data is centrally owned but processing is split across multiple servers in a data center to speed up training. In federated learning, the data is decentralized and owned by the edge devices (users), and the processing happens locally to preserve privacy.

Q: Does federated learning drain my phone battery? A: It can, but well-designed systems prevent this. Most production FDL systems are configured to run only when the device is plugged into a power source, connected to Wi-Fi, and currently idle (not being used by you).

Q: Can federated learning prevent all privacy leaks? A: Not automatically. While it prevents direct access to raw data, skilled attackers can sometimes infer information from model updates. Combining FDL with Differential Privacy and Secure Aggregation provides much stronger protection against these inference attacks.

Q: Is federated learning slower than centralized training? A: Generally, yes. The process of convergence takes longer because communication between millions of devices is slower and less reliable than communication between servers in a data center. However, the total time to deployment can be shorter because you skip the massive data collection and cleaning phase.

Q: What happens if a device drops offline during training? A: Federated learning protocols are designed to be resilient to dropouts. If a device disconnects, the server simply ignores that device for the current round and proceeds with the updates from the devices that successfully finished the task.

Q: Can I use federated learning for any type of AI model? A: Theoretically yes, but it is best suited for Deep Learning models (neural networks) where the model is defined by weights. It is less common for models like Decision Trees or k-Nearest Neighbors, which require different aggregation methods.

Q: Do I need specialized hardware to run federated learning? A: For the central server, standard GPUs are fine. For edge devices, having a dedicated Neural Processing Unit (NPU) or GPU helps significantly with speed and efficiency, but modern CPUs can also handle smaller models.

Q: Who owns the model in a federated learning setup? A: Typically, the entity that orchestrates the training (e.g., the app developer or service provider) owns the global model. However, the data used to train it remains the property of the individual users.

References

- McMahan, B., et al. (2017). “Communication-Efficient Learning of Deep Networks from Decentralized Data.” Proceedings of the 20th International Conference on Artificial Intelligence and Statistics (AISTATS). Available at: https://proceedings.mlr.press/v54/mcmahan17a.html

- Google AI Blog. (2017). “Federated Learning: Collaborative Machine Learning without Centralized Training Data.” Available at: https://ai.googleblog.com/2017/04/federated-learning-collaborative.html

- Li, T., et al. (2020). “Federated Learning: Challenges, Methods, and Future Directions.” IEEE Signal Processing Magazine. Available at: https://arxiv.org/abs/1908.07873

- Kairouz, P., et al. (2021). “Advances and Open Problems in Federated Learning.” Foundations and Trends® in Machine Learning. Available at: https://arxiv.org/abs/1912.04977

- TensorFlow. “TensorFlow Federated: Machine Learning on Decentralized Data.” Official Documentation. Available at: https://www.tensorflow.org/federated

- Bonawitz, K., et al. (2017). “Practical Secure Aggregation for Privacy-Preserving Machine Learning.” CCS ’17: Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security. Available at: https://dl.acm.org/doi/10.1145/3133956.3133982

- OpenMined. “PySyft: A library for encrypted, privacy-preserving deep learning.” Official Documentation. Available at: https://github.com/OpenMined/PySyft

- Yang, Q., et al. (2019). “Federated Machine Learning: Concept and Applications.” ACM Transactions on Intelligent Systems and Technology (TIST). Available at: https://dl.acm.org/doi/10.1145/3298981

- NVIDIA. “NVIDIA FLARE: Federated Learning Application Runtime Environment.” Official Documentation. Available at: https://developer.nvidia.com/flare

- Flower. “A Friendly Federated Learning Framework.” Official Documentation. Available at: https://flower.dev/docs/