Customer onboarding is the end-to-end process that helps a new user reach first value fast, then builds the habits that make your product part of their daily work. In plain terms: you show people how to succeed, not just how to click buttons. Done well, onboarding turns signups into active accounts, shortens time to value (TTV), and lifts expansion and retention. This guide gives you a practical path to plan, launch, and iterate onboarding with confidence. Short disclaimer: this article offers general, educational guidance; if you’re making legal, financial, or employment decisions, consult qualified professionals.

Quick answer: effective customer onboarding defines a clear activation milestone, minimizes signup friction, designs a helpful first-run experience, supports learning with contextual guidance and lifecycle messages, measures the right metrics, and improves continuously through experiments.

Fast skim—10 steps you’ll implement:

- Define activation and time-to-value.

- Map journeys and segment by jobs-to-be-done.

- Reduce signup friction with progressive profiling.

- Craft a first-run experience that sets users up to win.

- Use in-app guides and tours purposefully (not everywhere).

- Orchestrate a concise, personalized welcome series.

- Instrument analytics and track onboarding KPIs.

- Build in-product help and human support where it matters.

- Nudge with social proof, reminders, and smart defaults.

- Close the loop with feedback and structured experiments.

1. Lock Your Activation Milestone and Time-to-Value

Your onboarding should start from a single, specific definition of activation—the moment a new user reliably experiences core value. By naming that moment and measuring time to reach it (TTV), you convert fuzzy intentions into a target that design, product, success, and marketing can rally around. For many SaaS products, activation is a short sequence, not one event: import data, invite a teammate, complete a key setup, and perform the first instance of the product’s core action. The right definition depends on your product’s promise, not vanity metrics. If you can’t describe activation in one sentence and instrument it with one metric, it’s not ready. A good heuristic is “the smallest set of actions that predicts retained usage in the next period.” Product-analytics primers define activation as the “aha” moment when users orient to product value and complete a milestone event—use that as your north star while tailoring to your context.

How to define it

- Write a one-line statement: “A user is activated when they ______.”

- Tie it to behavior, not pageviews: “Created 1 project and shared it,” not “visited dashboard.”

- Use cohorts to validate: Activated users should retain at materially higher rates in the next period.

- Limit to ≤3 milestone events: Too many steps dilute focus.

- Set a TTV target: e.g., “80% of signups activate within 48 hours,” then iterate.
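
To make the one-line definition and the TTV target concrete, here is a minimal Python sketch that computes the activation rate within a 48-hour window and the median TTV from raw events. It assumes a hypothetical two-event milestone (`project_created` and `project_shared`, mirroring “created 1 project and shared it”) and a simple in-memory event list; your analytics tool will have its own schema, so treat every name here as a placeholder.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median

# Hypothetical activation milestones ("created 1 project and shared it").
# Replace with the stable event names from your own tracking plan.
ACTIVATION_EVENTS = {"project_created", "project_shared"}


@dataclass
class Event:
    user_id: str
    name: str
    at: datetime


def time_to_value(signups: dict[str, datetime], events: list[Event]) -> dict[str, timedelta]:
    """Return TTV for each signup that completed every activation event."""
    first_seen: dict[str, dict[str, datetime]] = {}
    for e in events:
        if e.name in ACTIVATION_EVENTS and e.user_id in signups:
            seen = first_seen.setdefault(e.user_id, {})
            # Keep the earliest occurrence of each milestone event.
            seen[e.name] = min(seen.get(e.name, e.at), e.at)
    return {
        # A user activates when the *last* required milestone is reached.
        user: max(seen.values()) - signups[user]
        for user, seen in first_seen.items()
        if ACTIVATION_EVENTS.issubset(seen)
    }


def activation_summary(
    signups: dict[str, datetime],
    events: list[Event],
    window: timedelta = timedelta(hours=48),
) -> tuple[float, timedelta | None]:
    """Activation rate within `window`, plus median TTV among activated users."""
    ttv = time_to_value(signups, events)
    on_time = sum(1 for t in ttv.values() if t <= window)
    rate = on_time / len(signups) if signups else 0.0
    return rate, (median(ttv.values()) if ttv else None)


t0 = datetime(2024, 1, 1, 9, 0)
signups = {"a": t0, "b": t0, "c": t0}
events = [
    Event("a", "project_created", t0 + timedelta(minutes=10)),
    Event("a", "project_shared", t0 + timedelta(minutes=30)),
    Event("b", "project_created", t0 + timedelta(hours=1)),
    Event("b", "project_shared", t0 + timedelta(hours=72)),
    Event("c", "project_created", t0 + timedelta(minutes=5)),
]
rate, med = activation_summary(signups, events)
print(f"{rate:.0%} activated within 48h; median TTV {med}")
```

Median TTV is taken over activated users only; users who never activate lower the rate but do not appear in the median.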

Numbers & guardrails

- Event precision: Each activation event must be uniquely tracked with a stable name.

- TTV ceiling: If median TTV exceeds one typical working session, redesign the setup to compress it.

- Predictive check: The activated cohort should retain at a clearly higher rate in the following weeks than non-activated cohorts; if the gap is small or unstable, your activation definition probably isn't predictive yet.

Synthesis: When activation is crisp and instrumented, every later decision—from copy to emails—serves a measurable goal instead of vibes.

2. Map Real Journeys and Segment by Jobs-to-Be-Done

Start by mapping how different personas actually reach value. Two customers can sign up for identical software but need very different first steps because their job-to-be-done (JTBD) and context differ. A solo operator might want a fast setup with sensible defaults; a manager may need to invite a team and connect systems before doing anything meaningful. Journey maps should include entry channel, first run, key dependencies (data, integrations, permissions), and the smallest path to activation. Segmenting by JTBD prevents a “one-size-fits-none” onboarding that overwhelms some users and under-teaches others. Treat segmentation as a service, not a barrier: ask for the minimum inputs you need to tailor the experience and defer the rest until after first value. Remember, onboarding isn’t limited to first-time users; new features and redesigns also need micro-onboarding designed with the same care (Nielsen Norman Group).

Mini-checklist

- Identify 2–4 primary JTBD segments (not 12).

- For each, sketch the shortest path to activation.

- Decide what you must ask at signup vs. what can wait.

- List blockers per segment (data access, approvals, SSO).

- Pair each segment with a first-run checklist and messaging variant.
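
One lightweight way to pair each segment with a first-run checklist and messaging variant is a small lookup table keyed by the single purpose answer collected at signup. The sketch below assumes two hypothetical segments (`solo` and `team_lead`) and made-up variant names; the fallback keeps users who skip the question or give an unexpected answer on a sensible default path instead of blocking them.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class OnboardingPath:
    checklist: tuple[str, ...]  # shortest path to activation for this segment
    email_variant: str          # welcome-series variant to enroll the user in


# Hypothetical JTBD segments (keep it to 2-4) and their paths.
PATHS: dict[str, OnboardingPath] = {
    "solo": OnboardingPath(("Create project", "Publish first report"), "welcome_solo"),
    "team_lead": OnboardingPath(
        ("Create project", "Invite teammate", "Connect data"), "welcome_team"
    ),
}
DEFAULT_SEGMENT = "solo"


def path_for(purpose: str | None) -> OnboardingPath:
    """Map the signup purpose answer to a path; missing or unknown answers get the default."""
    return PATHS.get(purpose or DEFAULT_SEGMENT, PATHS[DEFAULT_SEGMENT])


print(path_for("team_lead").checklist)
```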

Why it matters

- Cognitive load: People understand faster when steps match their task language.

- Speed: Fewer irrelevant steps means faster TTV.

- Signal: Segmentation improves downstream personalization in product and emails.

Synthesis: Journey-by-segment planning turns onboarding into a set of tailored ramps instead of a single staircase, which is how you make speed and clarity feel effortless.

3. Reduce Signup Friction with Progressive Profiling

High-friction signup flows kill momentum before it starts. Ask only for what you need to deliver value now, and progressively profile later. Long forms, forced phone verification, and early payment demands all depress completion rates; even one extra field can be the difference between a trial and a bounce. Form-usability research shows the count and complexity of fields—not just steps—drives abandonment; simplifying, grouping, and deferring fields improves performance. Translate that into your SaaS: capture email and password (or SSO), perhaps one purpose selector for segmentation, and move the rest to the first-run flow. If you need billing for a trial, consider delaying it until the user approaches a meaningful limit (seats, projects, minutes) while clearly communicating the policy.

Common mistakes

- Everything upfront: Asking company size, phone number, role, and use case before value.

- Captcha overkill: Heavy anti-bot steps for low-risk trials.

- Static forms: Same fields for every persona and plan.

- Hidden costs: Surprising users with credit-card walls after a multi-step form.

Numbers & guardrails (illustrative)

- Field count target: Try to keep visible fields ≤5 on the first screen; reduce optional fields to zero.

- SSO adoption: Offer at least one common SSO or passwordless option to cut drop-off.

- Microcopy: Test a one-line privacy assurance near sensitive fields.
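
Progressive profiling can be expressed as a plan that attaches each profile field to the earliest stage where it is actually needed. This is an illustrative sketch, not a prescribed schema: the field names and the stages (`signup`, `after_activation`, `near_limit`) are hypothetical, and authentication (password or SSO) is assumed to be handled separately.

```python
from __future__ import annotations

# Hypothetical field plan: (field, earliest stage at which we ask for it).
FIELD_PLAN = [
    ("email", "signup"),
    ("purpose", "signup"),                # one selector for segmentation
    ("company_size", "after_activation"),
    ("role", "after_activation"),
    ("billing_details", "near_limit"),    # only as a trial limit approaches
]
STAGES = ["signup", "after_activation", "near_limit"]


def fields_to_ask(profile: dict[str, str], stage: str) -> list[str]:
    """Fields due at or before `stage` that the user hasn't answered yet."""
    reached = set(STAGES[: STAGES.index(stage) + 1])
    return [f for f, due in FIELD_PLAN if due in reached and f not in profile]


print(fields_to_ask({}, "signup"))
```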

Synthesis: Every field must earn its place at signup; if it doesn’t help the user reach value immediately, it belongs later (Baymard Institute).

4. Design a First-Run Experience that Sets Users Up to Win

First-run is the bridge from “I’ve signed up” to “I’ve done the first meaningful thing.” A great first-run experience removes blank-page anxiety, supplies just-enough guidance, and makes the next best action obvious. Replace empty dashboards with empty-state designs that teach and invite action: show what success looks like, provide a sample object (a demo project, a template dataset), and include a clear CTA to the first step on the activation path. Use a lightweight checklist to show progress and create a sense of momentum. Make settings discoverable but not required; a user shouldn’t have to rename their workspace to create their first item. Empty-state and first-run research consistently recommends communicating system status, offering direct pathways to key tasks, and using meaningful, actionable CTAs that reduce uncertainty.

How to do it

- Seed with defaults: Provide starter templates or demo data users can immediately edit.

- One path forward: Offer a single prominent CTA aligned to activation (e.g., “Create your first invoice”).

- Inline help: Use contextual tips adjacent to fields rather than modal walls.

- Visible progress: A small checklist with 3–5 items (auto-check what the user already did).
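
Auto-checking what the user already did and choosing the single prominent CTA can both come from one mapping of checklist items to tracked events. A minimal sketch, assuming hypothetical event names that you would replace with your own:

```python
from __future__ import annotations

# Hypothetical checklist items mapped to the tracked event that completes each.
CHECKLIST = [
    ("Create project", "project_created"),
    ("Invite teammate", "teammate_invited"),
    ("Connect data", "data_source_connected"),
]


def checklist_state(done_events: set[str]) -> list[tuple[str, bool]]:
    """Auto-check items the user has already completed, in display order."""
    return [(label, event in done_events) for label, event in CHECKLIST]


def primary_cta(done_events: set[str]) -> str | None:
    """The one next step to promote; None once the checklist is complete."""
    for label, done in checklist_state(done_events):
        if not done:
            return label
    return None


done = {"project_created"}
for label, checked in checklist_state(done):
    print(f"[{'x' if checked else ' '}] {label}")
print("Next:", primary_cta(done))
```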

Mini case

- Teams that added a one-screen checklist (“Create project,” “Invite teammate,” “Connect data”) often saw more users complete the second and third steps because the path was explicit; instrument your own baseline and test variations.

Synthesis: First-run should immerse new users in doing, not reading, and your empty states should nudge them straight to value.

5. Use In-App Guides and Tours Purposefully (Not Everywhere)

In-app guides are powerful when they remove ambiguity at the exact moment of need; they’re annoying when they preempt action with a lecture. Usability experts warn that generic tutorials are often forgotten and can interrupt flow, while contextual help and just-in-time prompts work better. Treat tooltips, hotspots, and walkthroughs as precision instruments: target them by segment and behavior, keep copy short, and give users control to dismiss or revisit. Define a governance model so guides don’t multiply unchecked: decide who can publish, how you test, and when guides expire. Vendor resources emphasize concise copy, relevant CTAs, and measurement for continual iteration; build these into your playbook from day one.

Practical tips

- Target by behavior: Show a guide only after someone reaches the confusing step.

- Keep it skimmable: 1–2 short sentences per step; link to deeper docs.

- Offer a “Need help?” affordance: Let users pull guidance when they want it.

- Expire content: Retire or refresh guides after a defined threshold of views or time.

Numbers & guardrails

- Guide length: Keep walkthroughs to ≤5 steps; beyond that, split by task.

- Dismiss rates: If >60% dismiss the first step, revisit copy or trigger logic.

- A/B test impact: Compare activation or task-completion rates with/without a guide per segment.
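
The length and dismiss-rate guardrails are easy to turn into a periodic check over guide analytics. This sketch assumes you can export, per guide, its step count plus first-step views and dismissals (the `GuideStats` shape is hypothetical); it flags guides longer than five steps or with more than 60% first-step dismissals.

```python
from __future__ import annotations

from dataclasses import dataclass

# Thresholds taken from the guardrails above.
MAX_STEPS = 5
MAX_FIRST_STEP_DISMISS_RATE = 0.60


@dataclass
class GuideStats:
    guide_id: str
    steps: int
    first_step_views: int
    first_step_dismissals: int


def review_guides(guides: list[GuideStats]) -> dict[str, list[str]]:
    """Return guide_id -> issues, for guides that break a guardrail."""
    flags: dict[str, list[str]] = {}
    for g in guides:
        issues = []
        if g.steps > MAX_STEPS:
            issues.append("too long: split by task")
        if g.first_step_views:  # skip guides nobody has seen yet
            rate = g.first_step_dismissals / g.first_step_views
            if rate > MAX_FIRST_STEP_DISMISS_RATE:
                issues.append(f"{rate:.0%} dismiss step 1: revisit copy or trigger")
        if issues:
            flags[g.guide_id] = issues
    return flags


print(review_guides([GuideStats("setup-tour", 7, 100, 70), GuideStats("import-tip", 2, 80, 12)]))
```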

Synthesis: In-app guidance should feel like a friendly colleague pointing at the next button: timely, brief, and optional (Nielsen Norman Group).

6. Orchestrate a Concise, Personalized Welcome Series

Not everyone logs back in immediately. A targeted welcome series (a handful of short, helpful messages across email or in-app) bridges gaps and reinforces the next action. Start with a simple structure: Day 0 “welcome + first step,” then 2–3 follow-ups anchored to user behavior (e.g., hasn’t created a first project; created a project but didn’t invite a teammate). Keep copy plain and human. Real personalization goes beyond a first name: reference the task the user is trying to do, not just their attributes. Industry resources recommend clear objectives, segment-specific content, and succinct writing, and published benchmarks give typical open and click ranges you can use as initial guardrails while you develop your own (intercom.com).

Mini-checklist

- Each message = one action: “Import your data,” not a catalog of features.

- Short subject lines: State the benefit, avoid cleverness that obscures value.

- Behavioral triggers: Send based on what users did or didn’t do, not on calendar days alone.

- Deliverability basics: Authenticate your sending domain (SPF, DKIM, DMARC) and ramp up sending volume gradually from a new domain or IP.

Numbers & guardrails

- Starting targets: Use published industry benchmarks (such as Mailchimp’s) as your initial range for open rates, expect clicks of a few percent, then tighten to your product’s reality; track by segment and message type.

- Cadence: 3–5 emails over the first few sessions or days is usually enough to reinforce action without fatigue.
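
Behavior-based sending boils down to a small decision function evaluated per user, rather than a fixed calendar. The sketch below is one possible shape, assuming hypothetical message IDs, a one-day stall before the first nudge, and the five-message cap from the cadence guardrail; it returns at most one message per evaluation so users never receive a batch at once.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import timedelta

MAX_EMAILS = 5                          # cadence guardrail: 3-5 messages
STALL_BEFORE_NUDGE = timedelta(days=1)  # assumption: tune to your product


@dataclass
class UserState:
    since_signup: timedelta
    created_project: bool = False
    invited_teammate: bool = False
    sent: set[str] = field(default_factory=set)


def next_email(u: UserState) -> str | None:
    """Pick at most one onboarding message based on what the user did or didn't do."""
    if len(u.sent) >= MAX_EMAILS:
        return None
    if "welcome" not in u.sent:
        return "welcome"  # Day 0: welcome + first step
    if (
        not u.created_project
        and u.since_signup >= STALL_BEFORE_NUDGE
        and "create_first_project" not in u.sent
    ):
        return "create_first_project"
    if u.created_project and not u.invited_teammate and "invite_teammate" not in u.sent:
        return "invite_teammate"
    return None  # nothing useful to say right now


print(next_email(UserState(timedelta(days=2), created_project=True, sent={"welcome"})))
```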

Synthesis: A good welcome series feels like a courteous guide, not a campaign: short, specific nudges tied to what the user is trying to accomplish (intercom.com).

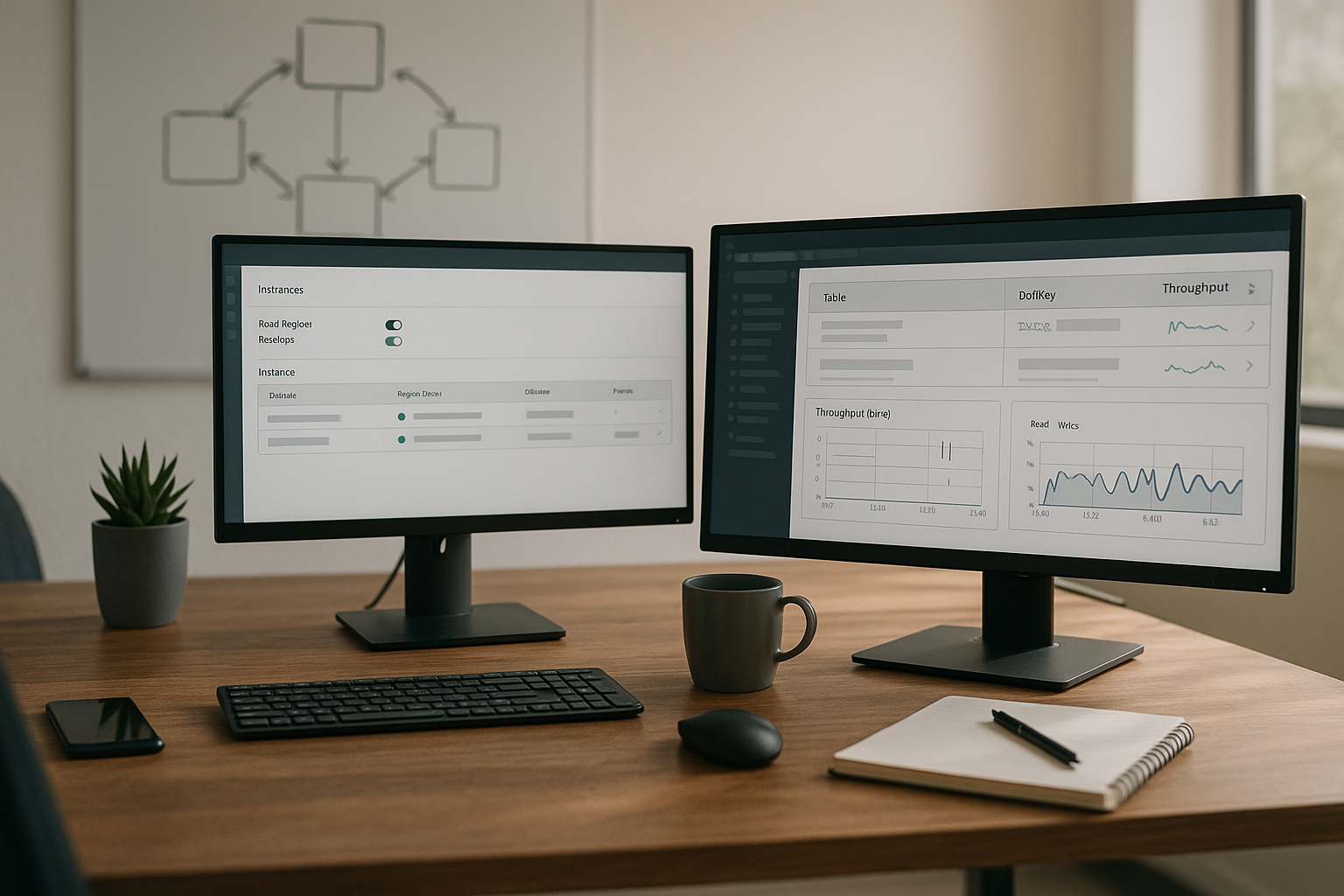

7. Instrument Analytics and Track Onboarding KPIs

If you can’t measure onboarding, you can’t improve it. Instrument the funnel from signup to activation with a product-analytics tool, define trustworthy events, and build a concise dashboard your team reviews regularly. Critical metrics include activation rate, time to value, new-user retention, task success for first-run workflows, and message engagement. Use cohort analysis to see whether changes actually produce durable adoption, not just first-day activity. Vendor guides frame product analytics as using behavioral data to put customers at the center; adopt that stance and you’ll ask better questions and ship more useful iterations (amplitude.com).

Numbers & guardrails

| KPI | Definition | Practical guardrail |
|---|---|---|
| Activation rate | % of new users who reach your activation milestone | Set a baseline, then target a step-change uplift per quarter (e.g., +10–20% relative). |
| Time-to-value (TTV) | Median time from signup to activation | Keep within one typical working session; compress via templates and defaults. |
| Day-N retention | % of signups active again by a chosen future day/period | Compare activated vs. non-activated cohorts; the gap should be material. |
| First-run task success | Completion rate for key setup tasks | Watch drop-offs by step; eliminate the worst offenders first. |
| Message engagement | Open and click rates for onboarding messages | Compare to industry ranges initially; optimize to your own benchmarks. |

Tools/Examples

- Use Amplitude or Mixpanel for event tracking and cohorts; define stable, human-readable event names.

- Create a single “Onboarding” dashboard with 5–8 charts max.

- Review key metrics weekly with product, success, and marketing.
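
To check that activation actually predicts retention, compare Day-N (or week-N) retention for activated and non-activated cohorts. Here is a minimal sketch over plain Python dicts, assuming you can export each user’s signup date and the set of dates they were active; product-analytics tools typically offer retention charts that do this for you, so treat this as an illustration of the calculation rather than a replacement.

```python
from __future__ import annotations

from datetime import date, timedelta


def retention(
    users: list[str],
    signup_day: dict[str, date],
    active_days: dict[str, set[date]],
    n: int,
    window_days: int = 1,
) -> float:
    """Share of `users` active on any day in [signup + n, signup + n + window_days)."""
    if not users:
        return 0.0
    kept = 0
    for u in users:
        start = signup_day[u] + timedelta(days=n)
        window = {start + timedelta(days=k) for k in range(window_days)}
        if active_days.get(u, set()) & window:
            kept += 1
    return kept / len(users)


def retention_by_cohort(
    signup_day: dict[str, date],
    active_days: dict[str, set[date]],
    activated: set[str],
    n: int,
    window_days: int = 1,
) -> tuple[float, float]:
    """(activated, non-activated) retention; a material gap suggests activation is predictive."""
    act = [u for u in signup_day if u in activated]
    non = [u for u in signup_day if u not in activated]
    return (
        retention(act, signup_day, active_days, n, window_days),
        retention(non, signup_day, active_days, n, window_days),
    )


d0 = date(2024, 1, 1)
signup_day = {u: d0 for u in "abcd"}
active_days = {"a": {date(2024, 1, 8)}, "b": {date(2024, 1, 10)}, "c": {date(2024, 1, 8)}}
# Week-2 retention (days 7-13 after signup) for activated users {a, b} vs. the rest.
print(retention_by_cohort(signup_day, active_days, {"a", "b"}, n=7, window_days=7))
```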

Synthesis: Tight instrumentation turns opinions about onboarding into testable hypotheses, so each change builds on the last instead of landing at random (amplitude.com).

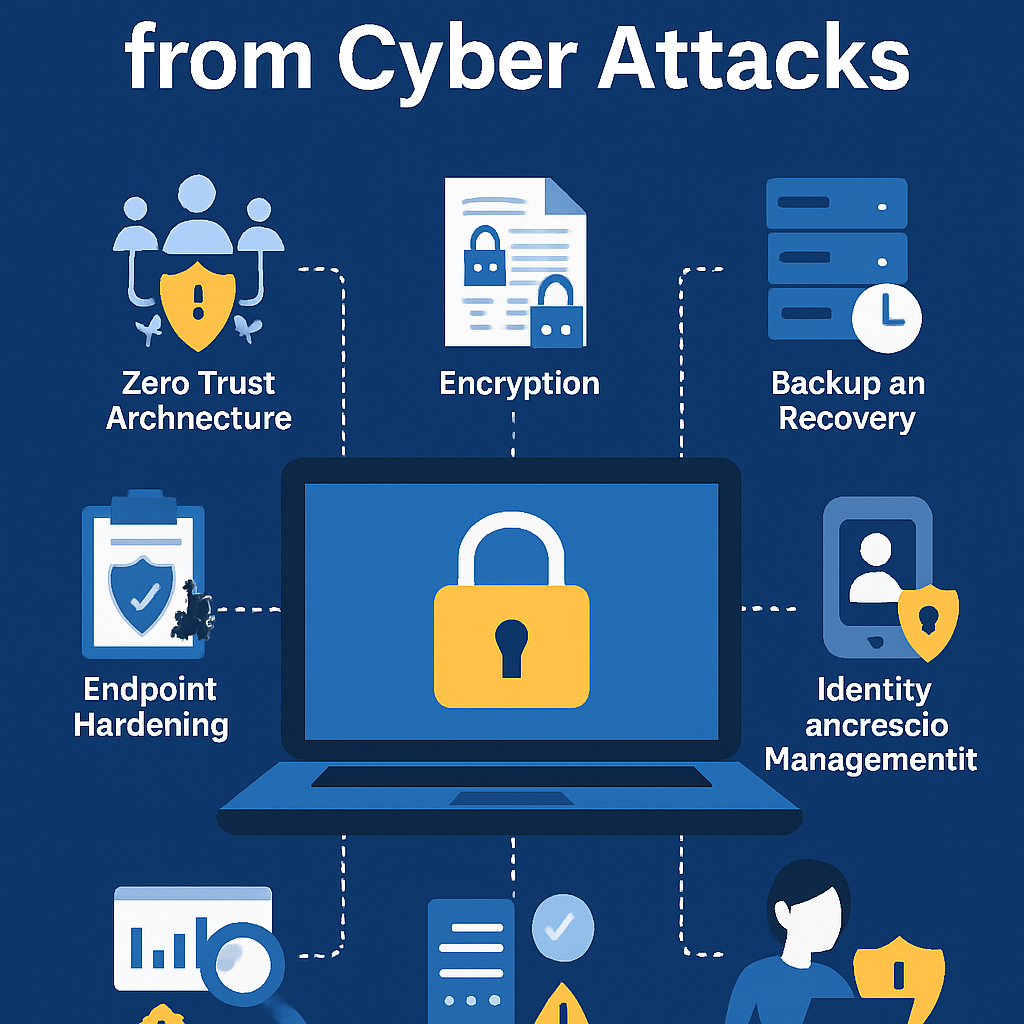

8. Build In-Product Help and Human Support Where It Matters

Users will get stuck. The question is whether they can recover inside the product or must leave to hunt for help. The best onboarding blends embedded help (searchable docs, contextual links, micro-videos) with lightweight human support (chat, office hours for complex setups). Place help exactly where confusion peaks—on empty states, complex forms, or integration screens. Keep help content short and action-oriented; link to a longer knowledge-base article only when necessary. In-app guidance platforms highlight best practices like simple, direct language and relevant CTAs; adopt a content style guide so tips feel consistent across your product. When an issue truly requires a human, set expectations: show typical response windows and provide a transcript so users can reference advice later.

Mini-checklist

- Add “Need help?” links next to complex fields, not just in the header.

- Include a search box in the help panel and return task-specific results.

- Record 30–60 second micro-videos for the top 3 confusing tasks.

- Provide a low-friction handoff to chat or a calendar link for thorny setups.

Region-specific notes

- If support or onboarding involves personal data, align with the privacy rules that apply to your users. Regulators and guidance bodies (such as the European Data Protection Board) publish practical overviews of lawful processing and communication norms; review them with counsel before launch (edpb.europa.eu).

Synthesis: Treat help as part of the product, not an external manual; when assistance is one click away, people keep moving toward value.

9. Nudge with Social Proof, Reminders, and Smart Defaults

Nudges accelerate habit formation when they reduce friction or uncertainty without coercion. Social proof (e.g., “teams like yours often invite one collaborator next”), unobtrusive reminders (e.g., a gentle banner if data hasn’t been connected), and smart defaults (prefilled templates that match the segment) can all lift completion rates. Use these ethically: the goal is to help users achieve their goals, not to trick them into vanity actions. Keep copy honest and specific; “Finish setup to publish your first report” is more useful than “Complete your profile.” Draw inspiration from e-commerce research on reducing effort (simpler forms and fewer decisions tend to increase completion) and adapt it to SaaS setup tasks. Track the cumulative effect of nudges; too many banners and badges become noise and erode trust (Baymard Institute).

How to implement nudges

- Smart defaults: Preselect options based on segment (e.g., “solo consultant” gets simpler template).

- Just-in-time reminders: Trigger only if the user stalls on a critical step for a reasonable interval.

- Positive reinforcement: Celebrate the first successful core action with a subtle in-product moment.
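
A just-in-time reminder needs only a few inputs to stay respectful: whether the step is done, when the user last made progress, and whether they have already seen or dismissed the reminder. A hypothetical sketch, assuming a 24-hour stall interval and at most one reminder per step (both values you should tune):

```python
from __future__ import annotations

from datetime import datetime, timedelta

# Assumptions: tune the stall interval per step; show each reminder at most once.
STALL_INTERVAL = timedelta(hours=24)
MAX_REMINDERS_PER_STEP = 1


def should_show_reminder(
    step_done: bool,
    last_progress_at: datetime,
    now: datetime,
    reminders_shown: int,
    dismissed: bool,
) -> bool:
    """Show a banner only if a critical step has stalled and the user hasn't declined it."""
    if step_done or dismissed:
        return False
    if reminders_shown >= MAX_REMINDERS_PER_STEP:
        return False
    return now - last_progress_at >= STALL_INTERVAL


t = datetime(2024, 1, 1, 9, 0)
print(should_show_reminder(False, t, t + timedelta(hours=25), reminders_shown=0, dismissed=False))
```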

Mini case

- After adding a single, targeted reminder banner for “Connect your data,” one team saw connection completion rise noticeably while keeping dismissals low—proof that well-timed nudges can be both respectful and effective; measure your own baseline to validate.

Synthesis: Nudges should make the right path the easy path; if you need a dozen prompts, the underlying flow is probably too hard.

10. Close the Loop with Feedback and Structured Experiments

Onboarding is never “done.” Build a closed loop: capture feedback, prioritize issues, run experiments, and roll out improvements with instrumentation in place. Mix qualitative signals (interviews, open-ended survey responses, support tickets) with quantitative metrics (activation, TTV, retention). Use CES (Customer Effort Score) to assess how easy core tasks feel and NPS/CSAT for broader sentiment. Then design experiments in which each variant is anchored to an explicit hypothesis (“If we provide a default template, TTV will shrink”). Keep experiments lean but disciplined: change one thing at a time so the result is easy to read. When you email users during onboarding, respect regional requirements for consent, sender identity, and opt-out. In the US, the FTC’s CAN-SPAM guidance sets out core principles for commercial messages, such as truthful headers, non-deceptive subject lines, and a working unsubscribe mechanism; in the EU and UK, privacy and electronic-communications rules (PECR in the UK) govern consent for electronic marketing. Build compliance into your definition of “done.”

Mini-checklist

- Instrument before you test: Ensure events and goals are trustworthy.

- Start where pain is highest: Fix the steepest drop-offs first.

- Limit concurrency: Run fewer, clearer tests to avoid noise.

- Share learnings: Add a one-page experiment log so the team doesn’t repeat work.

Numbers & guardrails

- Practical sample sizing: Even small teams can run directional tests if the effect size is meaningful; prioritize big, user-visible changes over micro-tweaks.

- Ethical boundaries: Don’t withhold essential guidance just to test novelty.
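
For practical sample sizing, the standard normal-approximation formula for comparing two proportions shows why big, user-visible changes are easier to test than micro-tweaks, and a two-proportion z-test gives a quick read on the result. This is a textbook sketch using only the Python standard library, not a replacement for your experimentation platform’s statistics; the 30% baseline activation rate in the example is illustrative.

```python
from __future__ import annotations

from math import ceil, erfc, sqrt
from statistics import NormalDist


def sample_size_per_arm(
    p_base: float, p_target: float, alpha: float = 0.05, power: float = 0.8
) -> int:
    """Users per variant to detect p_base -> p_target (two-sided, normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_base * (1 - p_base) + p_target * (1 - p_target)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p_target - p_base) ** 2)


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (lift in activation rate, two-sided p-value) for variant B vs. A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("both arms are all-or-nothing; nothing to test")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    return p_b - p_a, erfc(abs(z) / sqrt(2))


# A big, user-visible change needs far fewer users than a micro-tweak.
print(sample_size_per_arm(0.30, 0.36), sample_size_per_arm(0.30, 0.31))
print(two_proportion_z_test(300, 1000, 360, 1000))
```

With a 30% baseline, detecting a six-point lift needs roughly a thousand users per arm, while a one-point lift needs tens of thousands.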

Synthesis: Feedback plus disciplined experiments compound into a smoother path to value—and a calmer team that knows why something worked.

Conclusion

Onboarding is the deliberate craft of turning a promise into a habit. When you lock a clear activation milestone, tailor journeys by job, remove signup friction, and design a first-run experience that gets people doing rather than reading, you compress time to value and make users feel competent. Purposeful in-app guidance and a short, behavior-based welcome series reinforce the next action without overwhelming anyone, while instrumentation lets you replace hunches with evidence. Pull help into the product, add nudges that reduce effort, and treat privacy and consent as non-negotiable foundations rather than afterthoughts. Finally, close the loop with feedback and experiments so onboarding gets smoother every week. Put these steps into practice, measure honestly, and you’ll welcome new users in a way that earns their trust and retains their business—starting today.

CTA: Save this guide and block time to implement Step 1 this week—define activation, instrument it, and share the target with your team.

FAQs

1) What’s the difference between customer onboarding and user onboarding?

Customer onboarding spans the entire account, including commercial and administrative steps like plan selection, billing, and stakeholder alignment. User onboarding focuses on the individual’s path to first value—what they must see and do in the interface to succeed. In many products, you need both: the account needs the right plan and permissions, while each person needs guidance to complete the first meaningful task. Treat them as two layers of the same outcome: successful adoption.

2) How many emails should be in a welcome series?

Most teams find that three to five concise emails—anchored to behavior, not a calendar—strike a balance between helpful and noisy. Start with Day 0 “welcome + first step,” then follow-ups based on what the user did or didn’t do (e.g., created a project but didn’t invite a teammate). Measure opens and clicks, but optimize for the downstream metric you care about: activation. Keep copy short, specific, and focused on one action.

3) Do I need a product tour?

Not always. Tours can help on complex workflows, but generic, linear tours are often ignored and forgotten. Favor contextual help that appears where confusion actually occurs and let users pull guidance on demand. If you do create a tour, keep steps few, copy brief, and provide an exit. Always A/B test whether the tour improves task completion and activation for the segments you target.

4) What is a reasonable activation target?

A useful starting point is to define activation precisely and set a target like “80% of signups activate within 48 hours,” then refine with your own data. The important part is the definition and the measurement: your activation metric should predict retention and expansion better than superficial indicators. Revisit your definition as your product and customers evolve.

5) How do I personalize onboarding without asking too many questions?

Use progressive profiling. Capture only the essentials at signup (email, password or SSO, one purpose selector), then ask for more context in the product once the user has experienced value. Combine this with behavioral data to tailor content—for instance, show templates relevant to the actions they’ve taken rather than their job title alone.

6) Which onboarding metrics matter most?

Start with activation rate, TTV, early retention, first-run task completion, and message engagement. Build a small dashboard that the whole team can interpret. Use cohort analysis to validate that improvements persist beyond day one; it’s easy to inflate click metrics without creating lasting adoption. Instrument first, then iterate.

7) How should I handle onboarding for enterprise customers?

Enterprise onboarding often adds integrations, security reviews, SSO, and change management. Provide a dedicated path: a project plan template, named owner, and office hours. Break integration steps into small, verifiable tasks with status indicators and environment checks. Keep stakeholders aligned with a single view that shows progress to activation and the next decision required from IT or security.

8) What’s the role of Customer Success during onboarding?

Customer Success ensures the account reaches value and knows how to sustain it. Even in product-led motions, Success can provide playbooks for complex setups, run short kickoff calls, and track risk signals (stalled setup, low engagement). Success should partner with Product and Marketing to turn repeated manual explanations into built-in guidance or docs so assistance scales without burning out humans.

9) How can I reduce drop-off between signup and first action?

Audit every step from the Thank-You screen to the first core action. Replace blank pages with empty-state guidance and provide a single prominent CTA toward the first meaningful task. Defer non-essential steps, introduce templates, and add a short checklist to show progress. Test small nudges like a reminder banner if the user stalls and confirm via analytics that each change moves activation in the right direction.

10) How do regulations affect onboarding emails?

If you send commercial emails, follow core principles such as truthful headers, accurate subject lines, a physical address, and easy opt-out. In many regions you’ll also need valid consent before marketing to individuals, particularly in the EU and UK under privacy and electronic communications rules. Consult your counsel and adopt a compliance checklist so each new message is both helpful and lawful.

References

- How to measure acquisition and activation, Amplitude — https://amplitude.com/books/mastering-acquisition/how-to-measure-acquisition-and-activation

- The Amplitude Guide to Product Metrics (PDF), Amplitude — https://info.amplitude.com/rs/138-CDN-550/images/The%20Amplitude%20Guide%20to%20Product%20Metrics.pdf

- Onboarding Tutorials vs. Contextual Help, Nielsen Norman Group — https://www.nngroup.com/articles/onboarding-tutorials/

- Designing Empty States in Complex Applications, Nielsen Norman Group — https://www.nngroup.com/articles/empty-state-interface-design/

- Email Marketing Benchmarks & Industry Statistics, Mailchimp — https://mailchimp.com/resources/email-marketing-benchmarks/

- About Open and Click Rates, Mailchimp — https://mailchimp.com/help/about-open-and-click-rates/

- Checkout Optimization: Minimize Form Fields, Baymard Institute — https://baymard.com/blog/checkout-flow-average-form-fields

- The dos and don’ts of building strategic in-app guides, Pendo — https://www.pendo.io/resources/going-beyond-gut-instinct-the-dos-and-donts-of-strategic-in-app-guides/

- Create an effective onboarding series, Intercom Help Center — https://www.intercom.com/help/en/articles/421-create-an-effective-onboarding-series

- CAN-SPAM Act: A Compliance Guide for Business, Federal Trade Commission — https://www.ftc.gov/business-guidance/resources/can-spam-act-compliance-guide-business

- Electronic and telephone marketing (PECR), Information Commissioner’s Office (UK) — https://ico.org.uk/for-organisations/direct-marketing-and-privacy-and-electronic-communications/guide-to-pecr/electronic-and-telephone-marketing/