Blockchain scalability is a system’s ability to handle more transactions, larger state, and heavier applications without giving up the core guarantees of security and decentralization. In plain terms: can your chain serve more users at lower fees while keeping its trust assumptions explicit and acceptable? This guide maps the space (sharding, sidechains, and off-chain approaches such as rollups and channels) so you can choose the right tool for your use case. At a glance, sharding splits work across the network, sidechains move activity to separate blockchains linked by bridges, and off-chain solutions shift computation or data off the base layer while keeping results verifiable on it. The payoff: higher throughput, faster confirmations, and a better user experience.

Quick plan you can follow now:

- Diagnose your bottleneck (execution, data availability, or latency).

- Choose your trust model (L1 security, committee, or standalone).

- Pick a path (shard, sidechain, rollup, channel).

- Design data availability (on-chain blobs vs external DA).

- Plan bridges and exits before launch.

- Monitor costs, latency, and security assumptions.

1. Execution Sharding: Split Computation to Multiply Throughput

Execution sharding divides the transaction-processing workload into parallel “shards,” so not every node executes every transaction. This targets a core scalability constraint: a single global computer that every node must replicate is a bottleneck, while many coordinated shard computers can work in parallel. Accounts and contracts are partitioned across shards, and cross-shard messaging preserves composability, though asynchronously rather than within a single block. Throughput can scale with the number of shards, but complexity rises around cross-shard ordering and atomicity. For builders, the message is simple: if your chain’s bottleneck is compute, sharding can unlock parallelism while keeping a unified network. The trade-off is extra protocol machinery and a new class of consistency bugs to guard against.

How it works

- The network defines multiple shards; each proposes and executes its own block stream.

- Cross-shard transactions rely on asynchronous messages or receipts.

- Validators or committees rotate so no shard becomes a permanent trust hotspot.

- Light clients verify shard commitments via succinct proofs.
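A minimal sketch of the asynchronous receipt pattern from the list above, in Python. It assumes a hypothetical hash-based account-to-shard mapping and a toy balance model; `shard_of`, `Shard`, and `Receipt` are illustrative names, not any protocol’s API. The key point: a cross-shard transfer debits the sender in one block and credits the recipient only when the destination shard processes its inbox in a later block.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Optional

NUM_SHARDS = 4  # hypothetical shard count


def shard_of(account: str, num_shards: int = NUM_SHARDS) -> int:
    """Deterministically assign an account to a shard by hashing its name."""
    digest = hashlib.sha256(account.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


@dataclass
class Receipt:
    """Cross-shard message: evidence that the source shard already debited the sender."""
    dest_shard: int
    to: str
    amount: int


@dataclass
class Shard:
    shard_id: int
    balances: Dict[str, int] = field(default_factory=dict)
    inbox: List[Receipt] = field(default_factory=list)  # delivered, not yet applied

    def transfer(self, sender: str, to: str, amount: int) -> Optional[Receipt]:
        """Execute in this shard's current block; returns a receipt if `to` lives elsewhere."""
        if shard_of(sender) != self.shard_id:
            raise ValueError("sender is not on this shard")
        if self.balances.get(sender, 0) < amount:
            raise ValueError("insufficient balance")
        self.balances[sender] -= amount
        dest = shard_of(to)
        if dest == self.shard_id:  # same shard: synchronous, like a normal chain
            self.balances[to] = self.balances.get(to, 0) + amount
            return None
        return Receipt(dest, to, amount)

    def apply_inbox(self) -> None:
        """Run at the start of this shard's next block: credit delivered receipts."""
        for receipt in self.inbox:
            self.balances[receipt.to] = self.balances.get(receipt.to, 0) + receipt.amount
        self.inbox.clear()
```

Between `transfer` and `apply_inbox` the funds are in flight and visible on neither shard’s balances. That window is why the guardrails below call for multi-block finalization, and a real protocol must also stop a receipt from being applied twice.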

Numbers & guardrails

- Throughput scaling is typically near-linear with the number of shards when workloads are independent; cross-shard calls reduce realized gains.

- Cross-shard latency adds at least one extra block interval for message delivery and confirmation; design for multi-block finalization.

- State growth per shard must remain within consumer-hardware limits.

Common mistakes

- Assuming atomic, same-block composability across shards.

- Underestimating implications for wallet UX when users span multiple shards.

- Ignoring cross-shard MEV and ordering externalities.

In practice, execution sharding is most compelling when your workload naturally partitions—think gaming realms, regional payments, or app silos—so you capture parallelism without drowning in cross-shard calls.

2. Data Sharding & Data Availability Sampling: Scale the “Data Plane”

Even if computation moves off-chain, scalability collapses when every node must download all of the data. Data sharding splits the data plane, and data availability sampling (DAS) lets light nodes check that all of a block’s data was published without downloading it all. Because the data is erasure-coded, a block producer must withhold a large fraction of it to make the block unrecoverable. Each node requests a few random pieces, and if all of its samples come back, the chance that data is being withheld shrinks exponentially with the number of samples. Across many nodes, the collected samples also cover enough pieces to reconstruct the full block. DAS reduces per-node bandwidth while letting the protocol raise data throughput safely. For builders, this means cheaper batches for rollups and more room for blobs, lowering end-user fees without sacrificing verifiability.

How it works

- Blocks include erasure-coded data “blobs.”

- Nodes request small, randomly chosen shares; if every sample is returned, the node concludes with high probability that the full data is available.

- Consensus only accepts blocks whose data passes availability checks.

Numbers & guardrails

- Each node samples only a handful of random shares per block, a tiny fraction of the data, yet confidence grows exponentially with the number of samples and compounds across many peers.

- Raising blob counts increases network throughput without forcing every node to download all blobs.
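The confidence claim can be made concrete with a short Python calculation. This sketch assumes a simplified one-dimensional erasure code at rate 1/2, where any half of the extended shares reconstructs the block. Under that assumption an adversary must serve fewer than half of the shares to make the data unrecoverable, so each uniformly random sample succeeds with probability below 1/2. Production designs use two-dimensional codes with different thresholds, so treat the numbers as illustrative. The function names are hypothetical.

```python
import math
import random


def undetected_withholding_bound(samples: int, available_fraction: float = 0.5) -> float:
    """Upper bound on P(every sample succeeds) when at most `available_fraction`
    of the extended shares are served. Samples are drawn independently."""
    if samples < 0 or not 0.0 < available_fraction < 1.0:
        raise ValueError("samples must be >= 0 and 0 < available_fraction < 1")
    return available_fraction ** samples


def samples_needed(target: float, available_fraction: float = 0.5) -> int:
    """Fewest samples whose bound is at or below `target` (0 < target < 1)."""
    return math.ceil(math.log(target) / math.log(available_fraction))


def simulate(samples: int, total_shares: int = 512, trials: int = 20_000, seed: int = 1) -> float:
    """Monte Carlo check against an adversary serving just under half the shares."""
    rng = random.Random(seed)
    served = set(rng.sample(range(total_shares), total_shares // 2 - 1))
    fooled = sum(
        all(rng.randrange(total_shares) in served for _ in range(samples))
        for _ in range(trials)
    )
    return fooled / trials
```

Under this assumption, 30 samples already push the chance of being fooled below one in a billion, which is why per-node sampling can stay small while blocks grow.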

Tools & examples

- Protocol research and docs formalize DAS as a prerequisite for high-throughput data layers and lightweight clients.

The practical upshot: if your fees are dominated by data posting, embrace ecosystems that support DAS-backed blob space so your batches stay cheap and verifiable.

3. Sidechains: Independent Blockchains Connected by Bridges

A sidechain is a separate blockchain that runs alongside the main chain and connects via a two-way bridge. Sidechains can choose their own consensus, block times, and fee markets, often achieving higher throughput and lower costs than the base chain. The trade-off is security: a sidechain does not automatically inherit the main chain’s consensus guarantees, so users accept the sidechain’s validator set and bridge risk. Sidechains shine for high-volume apps that value speed and low fees over the strongest L1 security, such as social, gaming, or micro-commerce.

How it works

- Assets move via a bridge that locks them on L1 and mints or credits them on the sidechain; the return trip burns them on the sidechain and unlocks them on L1.

- The sidechain runs its own consensus (often PoS), block production, and gas token.

- Periodic checkpoints can be posted to L1 for auditability.
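A toy model of the lock-and-mint bridge flow described above, in Python. It collapses both chains into one object and mints immediately, which hides the relayer or validator attestation a real bridge needs; `LockMintBridge` and its methods are hypothetical. The useful part is the solvency invariant: wrapped supply on the sidechain must never exceed what is locked on L1.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class LockMintBridge:
    """Toy two-way peg: lock on L1 -> mint on the sidechain; burn -> unlock on L1."""
    l1_balances: Dict[str, int] = field(default_factory=dict)
    side_balances: Dict[str, int] = field(default_factory=dict)
    locked: int = 0  # funds held by the (hypothetical) L1 bridge contract

    def deposit(self, user: str, amount: int) -> None:
        if amount <= 0 or self.l1_balances.get(user, 0) < amount:
            raise ValueError("invalid amount or insufficient L1 balance")
        self.l1_balances[user] -= amount
        self.locked += amount
        # A real sidechain mints only after its validators or relayers attest
        # to the L1 lock event; this toy mints immediately.
        self.side_balances[user] = self.side_balances.get(user, 0) + amount

    def withdraw(self, user: str, amount: int) -> None:
        if amount <= 0 or self.side_balances.get(user, 0) < amount:
            raise ValueError("invalid amount or insufficient sidechain balance")
        self.side_balances[user] -= amount  # burn on the sidechain
        # L1 releases funds on the sidechain's word that the burn happened:
        # this is where validator-set and bridge risk lives.
        self.locked -= amount
        self.l1_balances[user] = self.l1_balances.get(user, 0) + amount

    def is_solvent(self) -> bool:
        """Invariant: wrapped supply on the sidechain never exceeds locked L1 funds."""
        return sum(self.side_balances.values()) <= self.locked
```

If the sidechain’s validators collude and mint without a matching lock, `is_solvent` turns false; in a bridge that relies on sidechain attestations, L1 typically has no independent way to detect that, which is the trust assumption this section describes.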

Numbers & guardrails

- Latency is determined by the sidechain’s block time (often sub-second to a few seconds) but finality depends on its consensus.

- Bridge withdrawal times range from minutes to hours depending on design.

- Validator decentralization is crucial; aim for a committee size and distribution that aligns with your threat model.

Tools & examples

- Polygon PoS is a widely used EVM-compatible sidechain with documented architecture and node roles.

Synthesis: sidechains deliver raw speed and low fees; just be explicit about the distinct trust assumptions and set user expectations around bridges.

4. Plasma: Off-Chain Chains with On-Chain Commitments

Plasma proposed scaling by creating child chains anchored to the base chain, where operators periodically commit Merkle roots of transactions to L1. Users could exit to L1 using fraud-proof-style challenges if operators misbehaved. Plasma could raise throughput dramatically, but limited support for general-purpose smart contracts and data availability constraints held back adoption. It remains instructive: Plasma clarified how to anchor an off-chain ledger to L1 security with exit games and dispute periods. For payments or UTXO-like assets with simple logic, Plasma-style designs can still be compelling under the right conditions.

How it works

- Operator runs a high-throughput chain; periodically posts commitments to L1.

- Users keep proofs needed to exit in case of data withholding.

- Disputes are resolved on L1 within a challenge window.
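The commitment-and-exit mechanism rests on Merkle proofs: the operator posts only a root to L1, and each user keeps the path that proves their transaction is included. A minimal Python sketch using SHA-256 with leaf/node domain separation follows. The function names are hypothetical, and real Plasma variants differ in tree layout and in what a leaf encodes.

```python
import hashlib
from typing import List, Tuple

Proof = List[Tuple[bytes, bool]]  # (sibling hash, sibling is on the right)


def _leaf(data: bytes) -> bytes:
    return hashlib.sha256(b"\x00" + data).digest()  # prefix separates leaves from nodes


def _node(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()


def root_and_proof(leaves: List[bytes], index: int) -> Tuple[bytes, Proof]:
    """Operator side: compute the root to post on L1 and the proof for one leaf."""
    if not leaves:
        raise ValueError("need at least one leaf")
    level = [_leaf(x) for x in leaves]
    proof: Proof = []
    while len(level) > 1:
        if len(level) % 2:  # odd level: duplicate the last hash (toy padding rule)
            level.append(level[-1])
        sibling = index ^ 1
        proof.append((level[sibling], sibling > index))
        level = [_node(level[i], level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof


def verify(leaf: bytes, proof: Proof, root: bytes) -> bool:
    """User / L1 side: recompute the path from the leaf up to the committed root."""
    node = _leaf(leaf)
    for sibling, sibling_on_right in proof:
        node = _node(node, sibling) if sibling_on_right else _node(sibling, node)
    return node == root
```

In an exit game, the user submits `leaf` and `proof` against a root already on L1. If the operator withheld the block, that stored proof is the only way out, which is the wallet storage burden noted below.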

Numbers & guardrails

- Exit data overhead falls on end users; wallets must store or retrieve proofs.

- Challenge windows add withdrawal latency (hours to days depending on design).

- Throughput is high while the operator is honest and data is available.

Tools & examples

- Historic Plasma research and implementations informed modern rollup designs and DA priorities (Medium).

Bottom line: Plasma’s lessons underpin today’s scaling—treat it as a conceptual foundation, especially for payments-centric systems.

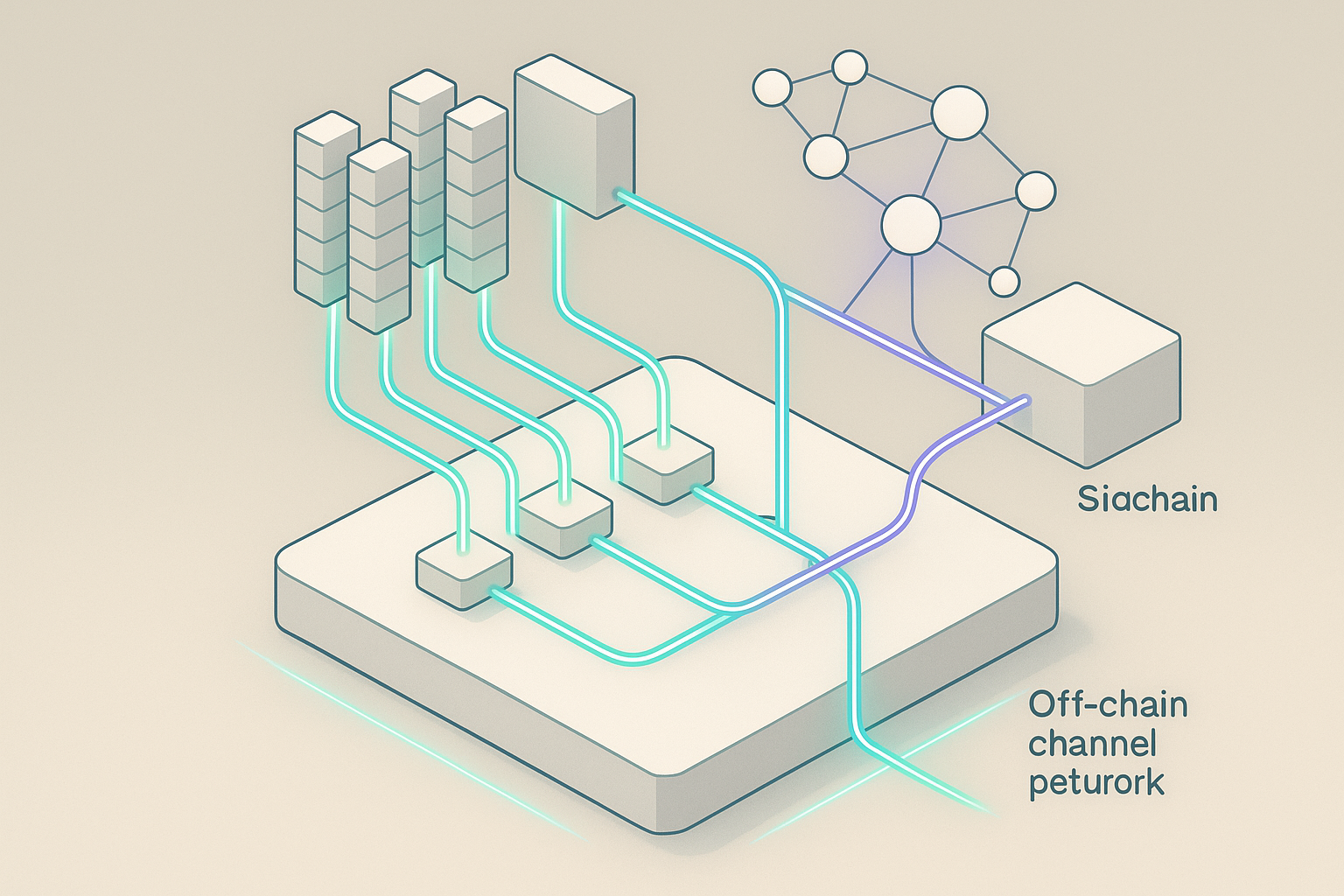

5. State Channels & Payment Channels: Instant Off-Chain UX

State channels lock funds or state on L1 and then allow parties to exchange signed messages off-chain. Only the final state settles on-chain, slashing latency and fees for repeated interactions between known participants. Payment channels, a specific form, are ideal for streaming micropayments or high-frequency commerce. Channels work best when participant sets are small and mostly online. They’re less ideal for massive open participation, but they deliver unbeatable user experience where they fit.

How it works

- Parties open a channel by depositing into a smart contract.

- They exchange signed updates; the latest valid state supersedes prior ones.

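- On closing, the contract enforces the final split only after a time-locked challenge period, during which either party can submit a newer co-signed state to defeat an attempt to settle on a stale one.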
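A toy adjudicator for the open/update/close cycle above, in Python. HMAC with per-party keys stands in for digital signatures purely to keep the sketch dependency-free; a real contract verifies public-key signatures such as ECDSA and never holds secrets. `ChannelContract`, `ChannelState`, and the block-based window are hypothetical. The sketch shows the two rules that make channels safe: a higher nonce supersedes a lower one, and funds are released only after the challenge window closes.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Dict, Optional

# HMAC keys stand in for signatures so the sketch has no dependencies. A real
# channel contract checks public-key signatures (e.g. ECDSA) and holds no secrets.
KEYS = {"alice": b"alice-secret", "bob": b"bob-secret"}


@dataclass(frozen=True)
class ChannelState:
    nonce: int  # strictly increasing; a higher nonce supersedes a lower one
    alice: int  # Alice's balance in this state
    bob: int    # Bob's balance in this state

    def encode(self) -> bytes:
        return f"{self.nonce}:{self.alice}:{self.bob}".encode()


def sign(party: str, state: ChannelState) -> bytes:
    return hmac.new(KEYS[party], state.encode(), hashlib.sha256).digest()


class ChannelContract:
    """Toy on-chain adjudicator; time is measured in L1 block heights."""

    def __init__(self, deposit_alice: int, deposit_bob: int, challenge_blocks: int = 100):
        self.total = deposit_alice + deposit_bob
        self.best = ChannelState(0, deposit_alice, deposit_bob)  # opening state
        self.challenge_blocks = challenge_blocks
        self.closes_at: Optional[int] = None

    def _valid(self, state: ChannelState, sigs: Dict[str, bytes]) -> bool:
        co_signed = all(
            hmac.compare_digest(sigs.get(p, b""), sign(p, state)) for p in ("alice", "bob")
        )
        conserves = state.alice + state.bob == self.total and min(state.alice, state.bob) >= 0
        return co_signed and conserves

    def submit(self, state: ChannelState, sigs: Dict[str, bytes], now: int) -> None:
        """First call starts the close; later calls inside the window are challenges."""
        if self.closes_at is not None and now >= self.closes_at:
            raise RuntimeError("challenge window has ended")
        if not self._valid(state, sigs) or state.nonce <= self.best.nonce:
            raise ValueError("state is not co-signed, not balanced, or not newer")
        self.best = state
        if self.closes_at is None:
            self.closes_at = now + self.challenge_blocks

    def payout(self, now: int) -> ChannelState:
        """Release funds per the newest state seen before the window ended."""
        if self.closes_at is None or now < self.closes_at:
            raise RuntimeError("channel is open or still in its challenge window")
        return self.best
```

For example, if Alice tries to close on an old state that favors her, Bob submits the newer co-signed state before the window ends and the payout follows his version.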

Numbers & guardrails

- Per-payment fees approach zero; you pay only for opens/closes.

- Liquidity must be pre-funded; allocate channels for expected flow (e.g., 0.1–5 units for retail, more for hubs).

- Routing across a network adds hops and potential failure; success rates improve with well-funded hubs.

Tools & examples

- Payment channel protocols like Lightning demonstrate multi-hop routed payments with hashed time-locks and dispute-safe settlement (lightning.network).

In short, channels trade global liveness for local speed—perfect for interactive apps, subscriptions, or games with tight loops.

6. Optimistic Rollups: Scale by Assuming Honesty, Then Checking

Optimistic rollups execute transactions off-chain, post compressed data back to L1, and assume batches are valid unless challenged. Anyone can submit a fraud proof during a dispute window; if the batch is invalid, it’s reverted and the challenger is rewarded. Optimistic rollups inherit L1 security for data and settlement while unlocking big throughput gains and EVM-level programmability. The cost model is dominated by data posting (calldata or blobs), which becomes cheaper as the DA layer scales.

How it works

- A sequencer orders transactions and produces L2 blocks.

- Batch data is posted to L1; a challenge period allows fraud proofs.

- Withdrawals wait out the challenge window; fast bridges use liquidity providers.
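A minimal Python sketch of the optimistic flow above: a batch is posted with a claimed post-state root, anyone holding the batch data can re-execute it during the dispute window, and withdrawals wait for finality. Real systems narrow a dispute down to a single step with interactive bisection rather than re-running the whole batch, and the seven-day window here is an assumed parameter. All names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict, List, Tuple

Tx = Tuple[str, str, int]  # (sender, recipient, amount)
DISPUTE_WINDOW = 7 * 24 * 60 * 60 // 12  # assumed: about 7 days of 12-second L1 blocks


def state_root(balances: Dict[str, int]) -> str:
    """Stand-in for a Merkle state root: hash of the canonical JSON encoding."""
    return hashlib.sha256(json.dumps(balances, sort_keys=True).encode()).hexdigest()


def apply_batch(balances: Dict[str, int], txs: List[Tx]) -> Dict[str, int]:
    """Deterministic state transition; invalid transfers are skipped, not fatal."""
    state = dict(balances)
    for sender, recipient, amount in txs:
        if 0 < amount <= state.get(sender, 0):
            state[sender] -= amount
            state[recipient] = state.get(recipient, 0) + amount
    return state


@dataclass
class PostedBatch:
    pre_root: str
    txs: List[Tx]      # full batch data sits on L1, so anyone can re-execute it
    claimed_root: str  # sequencer's claimed post-state root
    posted_at: int     # L1 block height
    rejected: bool = False


def challenge(batch: PostedBatch, pre_state: Dict[str, int], now: int) -> bool:
    """Re-execute the batch; mark it rejected if the claimed root is wrong."""
    if now >= batch.posted_at + DISPUTE_WINDOW:
        return False  # too late: the batch is final
    if state_root(pre_state) != batch.pre_root:
        raise ValueError("pre_state does not match the batch's pre_root")
    if state_root(apply_batch(pre_state, batch.txs)) != batch.claimed_root:
        batch.rejected = True
        return True
    return False


def is_final(batch: PostedBatch, now: int) -> bool:
    """Withdrawals in this batch may be released only once this returns True."""
    return not batch.rejected and now >= batch.posted_at + DISPUTE_WINDOW
```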

Numbers & guardrails

- Throughput scales with batch size; thousands of tx per batch are common.

- Withdrawal times are tied to the dispute window (often measured in days); fast bridges can settle in minutes using liquidity and risk pricing.

- Costs fall when data moves to blob space; ABI compression and calldata optimizations help further (ethereum.org).

Tools & examples

- Optimism and similar stacks document bridges and batch-posting flows; data lands on L1 for full reconstructability (ethereum.org).

Synthesis: optimistic rollups offer developer-friendly scaling with strong security; plan user flows around challenge-window exits.

7. Zero-Knowledge Rollups: Validity First, Finality Fast

ZK-rollups bundle transactions and submit a succinct validity proof showing the state transition is correct. Because the proof verifies all constraints, L1 can accept the new state immediately on proof verification—no challenge window is required. ZK-rollups deliver faster finality and stronger safety against invalid execution. The trade-off is prover complexity and hardware requirements, though modern systems are steadily optimizing. For high-frequency trading, payments, and privacy-sensitive apps, ZK-rollups set a high bar for security and UX.

How it works

- The sequencer executes off-chain; a prover generates a SNARK/STARK.

- L1 verifies the succinct proof and applies the state update.

- Users can withdraw as soon as the proof covering their withdrawal is verified; there is no challenge window to wait out.

Numbers & guardrails

- Proof generation time and cost determine batch frequency; many systems target seconds to minutes per batch.

- Throughput in practice reaches thousands of tx per batch; data posting is still the primary fee component.

- Hardware: provers benefit from GPUs or specialized accelerators.
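Why data still dominates fees even when proofs are expensive comes down to amortization: the fixed proof-verification cost is shared by every transaction in a batch, while data cost is paid per transaction. The Python sketch below uses placeholder gas figures, which are assumptions rather than measurements of any specific verifier; `gas_per_byte=16` mirrors standard calldata pricing for non-zero bytes but is still a parameter to tune, since blob data is priced by a separate fee market. `BatchCostModel` is a hypothetical name.

```python
from dataclasses import dataclass


@dataclass
class BatchCostModel:
    """Per-transaction L1 gas for a proof-verified batch. All defaults are placeholders."""
    verify_gas: int = 300_000   # assumed cost to verify one succinct proof on L1
    overhead_gas: int = 50_000  # assumed fixed bookkeeping per batch (roots, events)
    bytes_per_tx: int = 100     # assumed compressed data per transaction
    gas_per_byte: float = 16.0  # mirrors calldata pricing for non-zero bytes; tune for blobs

    def per_tx_gas(self, batch_size: int) -> float:
        if batch_size < 1:
            raise ValueError("batch_size must be at least 1")
        fixed_share = (self.verify_gas + self.overhead_gas) / batch_size
        return fixed_share + self.bytes_per_tx * self.gas_per_byte

    def data_share(self, batch_size: int) -> float:
        """Fraction of the per-transaction cost that is data posting."""
        return self.bytes_per_tx * self.gas_per_byte / self.per_tx_gas(batch_size)
```

With these placeholder numbers, a one-transaction batch pays 351,600 gas, while a 1,000-transaction batch pays about 1,950 gas per transaction, roughly 82% of it for data. Larger batches amortize the proof, which is why proving time and cost set batch frequency.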

Tools & examples

- Core docs outline that ZK-rollups increase throughput while posting minimal summary data plus a cryptographic proof.

In sum, ZK-rollups trade prover complexity for immediate, mathematically enforced correctness—excellent for low-latency withdrawals and exchange-like workloads.

8. Validium & Volition: ZK Security with Off-Chain Data

Validiums publish validity proofs to L1 but keep most transaction data off-chain, often secured by a data availability committee (DAC). This slashes data costs while maintaining correctness via proofs; however, if the DAC withholds data, users may be unable to reconstruct the state or build the proofs they need to withdraw, even though invalid state transitions remain impossible. Volition lets users or assets choose between rollup mode (on-chain data) and validium mode (off-chain data), mixing cost savings with optional L1 DA. These modes are powerful for apps with diverse security needs: for example, high-value assets in rollup mode and high-volume trades in validium mode.

How it works

- The prover posts proof to L1; DA is handled by a committee or external layer.

- In volition, users select DA per asset or transaction type.

- Applications can later migrate assets between modes when priorities shift.
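A sketch of the acceptance rule described above, in Python: the validity proof is always required, and validium-mode batches additionally need a threshold of committee attestations that the data is stored. HMAC again stands in for signatures, and the 5-of-7 committee, `DAMode`, `ASSET_MODE`, and `accept_batch` are hypothetical. Note what the threshold does and does not buy: it bounds how many members must collude to withhold data, but it cannot force attesting members to actually serve it later.

```python
import hashlib
import hmac
from enum import Enum
from typing import Dict, Optional


class DAMode(Enum):
    ROLLUP = "rollup"      # batch data published on L1
    VALIDIUM = "validium"  # batch data held off-chain by a committee


# Hypothetical 5-of-7 committee; HMAC keys stand in for member signatures.
COMMITTEE = {f"member{i}": f"key{i}".encode() for i in range(7)}
THRESHOLD = 5

# Volition: a hypothetical per-asset policy choosing where data lives.
ASSET_MODE = {"governance_token": DAMode.ROLLUP, "game_item": DAMode.VALIDIUM}


def mode_for(asset: str) -> DAMode:
    return ASSET_MODE.get(asset, DAMode.ROLLUP)  # default to the stronger guarantee


def attest(member: str, data: bytes) -> bytes:
    """A member vouches that it stores the batch data and will serve it."""
    return hmac.new(COMMITTEE[member], hashlib.sha256(data).digest(), hashlib.sha256).digest()


def accept_batch(mode: DAMode, data: bytes, proof_ok: bool,
                 attestations: Optional[Dict[str, bytes]] = None) -> bool:
    """L1 acceptance rule; `proof_ok` is the result of verifying the validity proof."""
    if not proof_ok:
        return False  # correctness always comes from the proof
    if mode is DAMode.ROLLUP:
        return True  # the data is part of the L1 submission itself
    valid = sum(
        1 for member, sig in (attestations or {}).items()
        if member in COMMITTEE and hmac.compare_digest(sig, attest(member, data))
    )
    return valid >= THRESHOLD
```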

Numbers & guardrails

- Fee reduction from off-chain DA can be substantial when batches are data-heavy.

- DAC size and independence are critical; larger, diverse committees reduce withholding risk.

- User choice in volition adds UX complexity; communicate guarantees clearly (docs.starkware.co).

These designs let you target a precise cost/security point instead of a one-size-fits-all posture.

9. Sovereign Rollups & Modular DA Layers: Keep Settlement Flexible

A sovereign rollup posts its data to a data availability layer but does not rely on a separate settlement layer to define validity rules; instead, the rollup’s own consensus rules determine its canonical state. This grants autonomy (your chain can fork independently) while leveraging an external network for scalable data. Modular DA layers make this practical by providing cheap, verifiable blob space that light clients can check via DAS. For teams that want their own execution rules but don’t want to run a full L1 validator set, sovereign rollups can be a sweet spot.

How it works

- The rollup posts data to a DA layer; light clients verify availability via sampling.

- The rollup defines its own fork choice and validity rules—no external settlement required.

- Bridges to other ecosystems are built on top using proofs or trusted relays.
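A minimal Python sketch of how sovereign rollup nodes derive state: they replay blobs in the order the DA layer published them and apply their own validity rules, skipping invalid transactions instead of relying on a settlement contract to reject them. The transfer format, `rules_v1`/`rules_v2`, and the upgrade point are hypothetical. Because the rules live in node software, a rule change is a fork: nodes running different rules derive different states from identical data.

```python
import json
from typing import Callable, Dict, List, Tuple

Tx = Tuple[str, str, int]  # (sender, recipient, amount)
Rule = Callable[[Dict[str, int], Tx], bool]


def rules_v1(state: Dict[str, int], tx: Tx) -> bool:
    sender, _, amount = tx
    return 0 < amount <= state.get(sender, 0)


def rules_v2(state: Dict[str, int], tx: Tx) -> bool:
    """Hypothetical upgrade adopted by the community: cap single transfers at 1,000."""
    return rules_v1(state, tx) and tx[2] <= 1_000


def derive_state(da_blobs: List[bytes], genesis: Dict[str, int],
                 rule_at: Callable[[int], Rule]) -> Dict[str, int]:
    """Replay blobs in DA-layer order (blob index stands in for height)."""
    state = dict(genesis)
    for height, blob in enumerate(da_blobs):
        rule = rule_at(height)
        for sender, recipient, amount in json.loads(blob):
            if rule(state, (sender, recipient, amount)):  # the DA layer never checked this
                state[sender] -= amount
                state[recipient] = state.get(recipient, 0) + amount
    return state
```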

Numbers & guardrails

- DA costs are typically lower than posting to a congested L1, especially when blobs scale with DAS.

- Security hinges on an honest majority of your rollup validators for validity and on the DA layer for data.

- Interoperability requires careful bridge design since you don’t inherit a settlement layer’s guarantees.

Tools & examples

- Vendor docs and blogs explain how rollups inherit DA from an external network while maintaining sovereignty.

Takeaway: sovereignty buys flexibility; pair it with robust DA and a conservative bridge strategy.

10. App-Specific L2s and L3s: Vertical Scale for Focused Workloads

Instead of sharing a general-purpose L2, teams can launch app-specific L2s or even L3s layered on top of L2s. The idea is to isolate congestion, tune gas economics, and optimize the stack (VM, prover, fee market) for a single application or family of apps. This concentrates sequencer resources and simplifies MEV mitigation for your domain. The trade-off is more infrastructure to operate and additional bridging surfaces. L3s can be compelling for games, exchanges, or high-throughput dapps that want near-instant confirmations and predictable costs (yellow.com).

How it works

- Deploy your own rollup instance (L2 or L3) with custom parameters.

- Reuse settlement and DA from a parent layer, or choose external DA.

- Bridge users and liquidity from parent layers; aggregate proofs where possible.

Numbers & guardrails

- Latency targets can be aggressive (sub-second local confirmations) with batched proofs to parent layers.

- Cost control improves when you own the sequencer fee market.

- Security depends on your proof system and DA; keep auditor and monitoring budgets realistic.
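The latency tiers above can be estimated with simple arithmetic. The Python sketch below assumes a hypothetical parameter set (block time, blocks per batch, and parent-layer finality); `AppChainParams` is an illustrative name, not any rollup framework’s configuration API. For an L3, the parent-finality term should include the L2’s own settlement to L1, not just the L2’s block time.

```python
from dataclasses import dataclass


@dataclass
class AppChainParams:
    """Hypothetical tuning knobs for an app-specific rollup; values are illustrative."""
    block_time_s: float = 0.25       # local (sequencer) block time
    blocks_per_batch: int = 240      # local blocks bundled into one post to the parent
    parent_finality_s: float = 60.0  # assumed time for the parent to finalize a post

    def soft_confirmation_s(self) -> float:
        """Worst case until the sequencer includes a transaction: one local block."""
        return self.block_time_s

    def worst_case_settlement_s(self) -> float:
        """A tx at the start of a batch waits for the whole batch, then parent finality."""
        return self.block_time_s * self.blocks_per_batch + self.parent_finality_s

    def posts_per_hour(self) -> float:
        return 3600 / (self.block_time_s * self.blocks_per_batch)
```

With these defaults, users see inclusion within 0.25 s, the chain posts 60 batches per hour, and a transaction may wait up to 120 s for parent-layer settlement. Shrinking `blocks_per_batch` cuts that wait at the cost of more parent-layer fees.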

Mini-checklist

- Define your hottest code paths and tailor the VM/prover.

- Reserve bandwidth with a conservative batch schedule.

- Publish operational metrics and incident response runbooks.

For focused workloads, app-chains and L3s deliver bespoke UX without fighting for shared blockspace (docs.espressosys.com).

11. Shared Sequencers & Proof Aggregation: Reduce Fragmentation

Multiple rollups can coordinate through a shared, decentralized sequencer to align ordering, reduce cross-rollup MEV, and enable atomic multi-rollup transactions. Separately, proof aggregation merges many rollup proofs into a single succinct object, lowering on-chain verification costs. Together, these approaches help the ecosystem scale without every chain reinventing the wheel. For builders, shared sequencing simplifies interop and reduces latency for cross-rollup flows; proof aggregation cuts fees when many small batches would otherwise be expensive.

How it works

- Rollups submit transactions to a common sequencer protocol.

- The sequencer outputs a consistent ordering across participants.

- Aggregators combine multiple SNARKs/STARKs into one proof for L1 verification.
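A toy Python model of shared ordering: transactions for several rollups enter one sequencer, a multi-rollup bundle is placed in a slot whole or deferred, and each rollup reads its own slice of the common order. The class names and the greedy slot-filling policy are hypothetical. This gives atomic inclusion; whether both legs also execute successfully still depends on each rollup’s state, so execution atomicity needs additional machinery.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union


@dataclass(frozen=True)
class Tx:
    rollup: str   # which rollup this transaction targets
    payload: str


@dataclass(frozen=True)
class Bundle:
    """Transactions for several rollups that must be ordered together or not at all."""
    txs: Tuple[Tx, ...]


class SharedSequencer:
    def __init__(self, max_txs_per_slot: int):
        self.max_txs = max_txs_per_slot
        self.pending: List[Bundle] = []

    def submit(self, item: Union[Tx, Bundle]) -> None:
        bundle = item if isinstance(item, Bundle) else Bundle((item,))
        if len(bundle.txs) > self.max_txs:
            raise ValueError("bundle can never fit in a slot")
        self.pending.append(bundle)

    def build_slot(self) -> List[Tx]:
        """One ordering for all rollups; greedy fill, bundles are never split."""
        slot: List[Tx] = []
        leftover: List[Bundle] = []
        for bundle in self.pending:
            if len(slot) + len(bundle.txs) <= self.max_txs:
                slot.extend(bundle.txs)
            else:
                leftover.append(bundle)  # waits, whole, for the next slot
        self.pending = leftover
        return slot


def split_by_rollup(slot: List[Tx]) -> Dict[str, List[str]]:
    """Each rollup executes only its own transactions, in the shared order."""
    view: Dict[str, List[str]] = {}
    for tx in slot:
        view.setdefault(tx.rollup, []).append(tx.payload)
    return view
```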

Numbers & guardrails

- Cross-rollup atomicity becomes feasible when participants share ordering.

- Fee savings from aggregated proofs grow with the number of participating rollups.

- Decentralization of the sequencer set matters—avoid recreating a central chokepoint.

Common mistakes

- Treating shared sequencing as a silver bullet; you still need sound DA and bridge design.

- Ignoring liveness assumptions—consider fallback paths if a sequencer set stalls.

Use shared sequencing to smooth UX across rollups, then amplify savings by aggregating proofs where your stack supports it.

12. Hybrid Off-Chain Compute & Order Flow: Commit On-Chain, Scale Off-Chain

Not every operation needs full on-chain execution. Hybrid designs run heavy computation or order matching off-chain and commit results on-chain with proofs or verifiable logs. Examples include off-chain order books that settle on-chain, oracle-driven computations, or zk-coprocessors that crunch large datasets. The principle is simple: keep the chain as a verifiable arbiter while pushing transients off-chain. Success hinges on determinism, transparent logs, and crisp failure modes. For DEXs and data-hungry apps, this can unlock large UX wins without sacrificing auditability.

How it works

- Off-chain engine computes results; an on-chain contract verifies proofs or signatures.

- Batches of fills, updates, or results are committed periodically.

- Disputes fall back to on-chain re-execution or challenge logic.
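A compact Python sketch of the pattern: a deterministic off-chain matcher with price-time priority produces fills, the operator posts only a hash commitment, and anyone holding the published order log can replay the matcher to check it. The order format and function names are hypothetical, and a production system would also sign commitments and handle balances and custody separately.

```python
import hashlib
import heapq
import json
from typing import List, Tuple

Order = Tuple[int, str, int, int]  # (order_id, "buy" | "sell", limit price, quantity)
Fill = Tuple[int, int, int, int]   # (buy_id, sell_id, price, quantity)


def _crosses(side: str, best_key: int, limit: int) -> bool:
    # asks are keyed by price, bids by -price, so both heaps pop the best first
    return best_key <= limit if side == "buy" else -best_key >= limit


def match(orders: List[Order]) -> List[Fill]:
    """Deterministic price-time priority matching; the resting order sets the price."""
    bids: list = []  # heap of (-price, arrival, order_id, remaining)
    asks: list = []  # heap of (price, arrival, order_id, remaining)
    fills: List[Fill] = []
    for arrival, (oid, side, limit, qty) in enumerate(orders):
        book = asks if side == "buy" else bids
        while qty > 0 and book and _crosses(side, book[0][0], limit):
            key, seq, rid, remaining = heapq.heappop(book)
            traded = min(qty, remaining)
            price = key if side == "buy" else -key
            fills.append((oid, rid, price, traded) if side == "buy" else (rid, oid, price, traded))
            qty -= traded
            if remaining > traded:  # partially filled resting order keeps its time priority
                heapq.heappush(book, (key, seq, rid, remaining - traded))
        if qty > 0:  # rest the unfilled remainder on its own side
            own_key = -limit if side == "buy" else limit
            heapq.heappush(bids if side == "buy" else asks, (own_key, arrival, oid, qty))
    return fills


def commitment(fills: List[Fill]) -> str:
    """What the operator posts on-chain per batch: a hash of the fills."""
    return hashlib.sha256(json.dumps(fills).encode()).hexdigest()


def verify_by_replay(orders: List[Order], posted: str) -> bool:
    """Dispute fallback: anyone with the published order log re-runs the matcher."""
    return commitment(match(orders)) == posted
```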

Numbers & guardrails

- Throughput scales with off-chain infra; on-chain costs scale with batch frequency and proof size.

- Latency can approach web-native speeds between commits; finality equals on-chain verification time.

- Data retention: persist logs for audit; prune wisely to control storage.

Mini-checklist

- Publish verifiable logs and replay tools.

- Define challenge flows and time-outs.

- Separate price-time priority rules from custody logic.

Hybrid architectures are pragmatic: they reserve on-chain trust for settlement while delivering responsive, web-grade UX for users.

One compact comparison (for quick scanning)

| Solution | Trust & DA model | Typical latency | Best for |
|---|---|---|---|
| Execution sharding | L1 consensus; data split across shards | Multi-block across shards | Apps with natural partitions |
| Data sharding + DAS | L1 consensus; erasure-coded blobs checked by sampling | L1 block time | Data-heavy rollups, light clients |
| Sidechains | Independent consensus; bridged assets | Sidechain block time | High-throughput, lower-security apps |
| Plasma | Operator with L1 commitments; exit games | Fast in-chain; slow exits | Payments/UTXO flows |
| State/payment channels | Bilateral/multilateral contracts | Near-instant | Repeated interactions |
| Optimistic rollups | L1 DA + fraud proofs | Batch time + dispute window for exits | General-purpose apps |
| ZK-rollups | L1 DA + validity proofs | Batch time; fast withdrawals | Trading, payments |
| Validium/Volition | Off-chain DA committee or optional L1 DA | Batch time; depends on mode | Cost-sensitive, mixed needs |
| Sovereign rollups | Own validity rules + external DA | Depends on stack | Autonomous appchains |
| App-specific L2s/L3s | Parent-layer settlement; parent or external DA | Sub-second local; batched settlement | Games, exchanges, focused dapps |
| Shared sequencers | Cross-rollup ordering; L1 DA | Batch time; cross-rollup atomicity | Interop, MEV mitigation |
| Hybrid off-chain compute | Off-chain engines + on-chain commits | Web-grade between commits | Order books, data-heavy apps |

Conclusion

Scalability is not a single feature but a lattice of design choices spanning execution, data, and trust. Sharding tackles parallel compute and data availability at the protocol level. Sidechains deliver speed by shifting security to an independent validator set. Off-chain families (channels, rollups, validium, volition, sovereign rollups, and hybrid compute) let you pick exactly where to spend gas, time, and trust. The right answer depends on your bottleneck: choose sharding when you can partition workloads; choose sidechains when you can accept different security for better UX; choose rollups or validium when you want L1-anchored guarantees and modular costs; add shared sequencing and proof aggregation as your footprint grows. Start with your users: target the UX you need, then work backward to the minimum trust you can accept. Your next step: map your app’s hottest paths, pick one scaling path from the twelve above, and pilot it with real users.

FAQs

1) What’s the quickest way to lower fees for my dapp without a full rewrite?

Move execution to a rollup that fits your needs. If long exit times are acceptable, an optimistic rollup reduces fees immediately; if you need fast withdrawals and strong correctness, a ZK-rollup is attractive. In both cases, fees are dominated by data posting, so pick ecosystems with ample blob space or external DA to keep costs stable.

2) How do state channels differ from rollups for a simple game or subscription app?

Channels are best when the same participants interact repeatedly and can stay online; they’re near-instant and nearly free between opens/closes. Rollups suit open participation and on-chain composability at the cost of higher per-tx fees and batch latency. Many teams prototype with channels for head-to-head modes and adopt a rollup for open lobbies and marketplaces (ethereum.org).

3) Are sidechains safe for high-value assets?

They can be, but security is independent from the main chain. You rely on the sidechain validator set and bridge design. For very high-value assets, teams often prefer L2s that inherit L1 DA and settlement, or validium/volition with clear DA committees and exit guarantees.

4) When should I consider validium or volition over a rollup?

Choose validium/volition when your gas costs are dominated by data but your application can tolerate DA risk from a committee. Volition is particularly flexible: keep core assets in rollup mode and move high-volume, lower-value flows to validium mode to cut costs. Document guarantees clearly in your UI.

5) What does “sovereign rollup” really buy me?

Autonomy. Your chain defines its own validity rules and fork choice while outsourcing data availability to a specialized layer. This reduces the need to run a full validator set and lets you evolve the protocol without depending on a separate settlement layer’s governance. Bridges and interop require extra care.

6) How does data availability sampling affect end-user fees?

By making blob space verifiable without full downloads, DAS allows networks to raise data throughput safely. Rollups and other batch posters then fit more data per block at lower marginal cost, which typically translates to lower per-transaction fees for users (Ethereum Foundation Blog).

7) Can shared sequencers solve cross-rollup MEV and failed atomic swaps?

They help. A shared, decentralized sequencer provides a common ordering across multiple rollups, enabling atomic multi-rollup transactions and curbing cross-domain MEV. You still need robust DA and fallback liveness paths, but shared ordering reduces fragmentation.

8) Do ZK-rollups always beat optimistic rollups?

Not universally. ZK-rollups excel at fast finality and correctness, but provers can be heavy and complex. Optimistic rollups are simpler and more mature for EVM-level contracts, though they impose withdrawal delays. Pick based on your latency targets, developer tooling, and operating budget.

9) Is Plasma obsolete now that rollups exist?

Plasma’s general-purpose limitations reduced adoption, but the model taught the ecosystem about exit games, operator incentives, and DA risks. For payments-focused cases, Plasma-like architectures can still make sense if users can handle exit mechanics and proof storage (Haseeb Qureshi).

10) What should I measure to know if scaling “worked”?

Track end-to-end effective cost per successful interaction, p50/p95 latency from user action to finality, reorg/rollback incidence, bridge failure rate, and sequencer liveness. Add user-centric metrics like completion rate and churn. Scaling isn’t just TPS; it’s consistent, predictable UX under load.
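The measurements above are straightforward to compute from per-interaction records. This Python sketch uses the nearest-rank percentile and spreads total spend, including failed attempts, over successful interactions; the record layout and function names are assumptions.

```python
import math
from typing import Dict, List, Sequence


def percentile(values: Sequence[float], p: float) -> float:
    """Nearest-rank percentile, 0 < p <= 100."""
    if not values or not 0 < p <= 100:
        raise ValueError("need at least one value and 0 < p <= 100")
    ordered = sorted(values)
    return ordered[math.ceil(p / 100 * len(ordered)) - 1]


def scaling_report(latency_s: List[float], cost: List[float],
                   succeeded: List[bool]) -> Dict[str, float]:
    """Summarize per-interaction records; raises ValueError if nothing succeeded."""
    ok = [i for i, s in enumerate(succeeded) if s]
    ok_latency = [latency_s[i] for i in ok]  # latency from user action to finality
    return {
        "p50_latency_s": percentile(ok_latency, 50),
        "p95_latency_s": percentile(ok_latency, 95),
        # failed attempts still cost money, so spread all spend over successes
        "cost_per_success": sum(cost) / len(ok),
        "completion_rate": len(ok) / len(succeeded),
    }
```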

References

- “Scaling,” Ethereum.org — ethereum.org

- “Optimistic Rollups,” Ethereum.org — ethereum.org

- “Zero-Knowledge Rollups,” Ethereum.org — ethereum.org

- “Data Availability & DAS,” Ethereum.org — ethereum.org

- “Danksharding,” Ethereum.org — ethereum.org

- “Validium,” Ethereum.org — ethereum.org

- “Sidechains,” Ethereum.org — ethereum.org

- “Rollup, Validium, Volition—Where Is Your Data Stored?,” StarkWare — StarkWare

- “Data Availability FAQ,” Celestia Docs — Celestia Docs

- “Rollups as Sovereign Chains,” Celestia Blog — Celestia Blog

- “The Espresso Sequencer,” HackMD — HackMD

- “Polygon PoS,” Polygon Docs — Polygon Knowledge Layer