The video game industry has always been a frontier for technological innovation, but the integration of artificial intelligence (AI) is pushing boundaries that were once thought to be the exclusive domain of human creativity. We are moving past the era of pre-scripted events and predictable enemy patterns into a new age of AI in gaming: one defined by more immersive experiences and dynamic storytelling that reacts to the player in real time.

For decades, “AI” in games referred to simple scripts—finite state machines that told a guard to patrol a hallway or a ghost to chase Pac-Man. Today, the term encompasses machine learning, large language models (LLMs), and generative systems that can build worlds, write dialogue, and adapt gameplay mechanics on the fly. This shift promises to transform games from static entertainment products into living, breathing ecosystems.

Key Takeaways

- From Scripted to Systemic: AI is evolving from rigid “if-this-then-that” logic to adaptive systems that learn and react to player behavior in organic ways.

- Dynamic Storytelling: Generative AI enables narratives that are not just branching but unique to every playthrough, effectively acting as an infinite Dungeon Master.

- Smarter NPCs: Non-Player Characters are gaining the ability to hold natural conversations, remember past interactions, and exhibit complex emotional states.

- Procedural Mastery: Beyond just terrain, AI can now generate quests, lore, items, and entire civilizations, ensuring no two gaming experiences are identical.

- Personalized Difficulty: Adaptive systems can analyze player skill and engagement in real time to adjust challenges, keeping players in the “flow state.”

Who This Is For (And Who It Isn’t)

This guide is designed for gamers curious about the future of their hobby, game developers looking to understand the landscape of AI tools, and investors or tech enthusiasts analyzing the trajectory of interactive media.

It is not a technical coding tutorial for implementing neural networks in Unity or Unreal Engine, nor is it a dry academic paper on algorithm optimization. It is a comprehensive exploration of how AI impacts the user experience and the creative process.

1. The Evolution of AI in Gaming: Beyond the Script

To understand where AI in gaming is going, we must first appreciate where it began. The “AI” found in classic games is fundamentally different from the “AI” driving ChatGPT or Midjourney.

Traditional Game AI: The Illusion of Intelligence

For most of gaming history, AI has been a sleight of hand. It relied on Finite State Machines (FSMs) and Behavior Trees. An FSM consists of predefined states—like “Idle,” “Chase,” or “Attack”—and the rules for switching between them. If the player enters a specific radius, the enemy switches from “Idle” to “Chase.”
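
To make the idea concrete, here is a minimal Python sketch of such a state machine. The three states come from the description above; the radii, including the extra `LOSE_RADIUS` that stops the enemy from flickering between states, are illustrative assumptions rather than values from any shipped game.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    CHASE = auto()
    ATTACK = auto()


# Hypothetical tuning values, chosen only for illustration.
CHASE_RADIUS = 10.0   # player closer than this wakes an idle enemy
ATTACK_RADIUS = 2.0   # player closer than this triggers an attack
LOSE_RADIUS = 15.0    # a chasing enemy gives up beyond this distance


def step(state: State, distance: float) -> State:
    """Return the next state given the current state and distance to the player."""
    if state is State.IDLE:
        return State.CHASE if distance <= CHASE_RADIUS else State.IDLE
    if state is State.CHASE:
        if distance <= ATTACK_RADIUS:
            return State.ATTACK
        if distance > LOSE_RADIUS:
            return State.IDLE
        return State.CHASE
    # state is ATTACK: fall back to chasing once the player steps out of reach
    return State.ATTACK if distance <= ATTACK_RADIUS else State.CHASE
```

Notice that a distance of 12 keeps a chasing enemy chasing but leaves an idle enemy idle. The response depends on the current state as well as the input, which is what separates a state machine from a simple lookup.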

While effective for gameplay mechanics (players need predictable patterns to learn and master a game), this approach has limitations:

- Repetition: Once a player figures out the pattern, the challenge diminishes.

- Brittleness: If a player does something the developer didn’t anticipate, the AI often breaks or behaves absurdly (e.g., an NPC running continuously into a wall).

- Lack of Memory: Traditional NPCs rarely “remember” interactions beyond simple reputation meters.

The New Era: Machine Learning and Generative AI

The modern revolution involves Machine Learning (ML) and Generative AI. Instead of following a strict script, these systems analyze vast amounts of data to make decisions or create new content.

- Reinforcement Learning: Agents learn by playing enormous numbers of matches, often against copies of themselves, and can discover strategies no developer explicitly programmed (as seen in DeepMind’s AlphaStar for StarCraft II, which combined imitation of human replays with league-based self-play).

- Generative Models: These systems can create assets, dialogue, and textures from scratch based on prompts or context, allowing for infinite variations.

This transition marks the difference between a game that simulates a response and a game that understands the context of that response.

2. Dynamic Storytelling: The AI Game Master

One of the most exciting promises of AI in gaming is the concept of dynamic storytelling. In traditional narrative games, writers create a “dialogue tree.” Players might choose between three options (Good, Neutral, Bad), leading to three pre-written outcomes. While this offers agency, it is still a “Choose Your Own Adventure” book with invisible walls.

The Rise of Emergent Narrative

AI allows for emergent narrative, where the story arises from the interaction of game systems rather than a pre-written script.

- The AI Director: Games like Left 4 Dead pioneered this with a “Director” AI that controlled enemy pacing based on player health and stress levels. Modern iterations can go much further, altering the plot itself.

- Context-Aware Plotting: Imagine a mystery game where the culprit changes based on the clues you find first, or a role-playing game (RPG) where a faction war evolves differently depending on who you helped in a side quest 20 hours ago.
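
The pacing idea behind a Director-style system can be sketched in a few lines of Python. This is not Valve’s implementation: the `PacingDirector` class, its constants, and the damage-based stress estimate are all assumptions made for illustration. The sketch only shows the general loop of building tension, backing off at a peak, and resuming once players have recovered.

```python
from dataclasses import dataclass

# Hypothetical tuning constants for illustration only.
DAMAGE_WEIGHT = 0.01     # intensity gained per point of damage taken
DECAY_PER_SECOND = 0.1   # intensity lost per second of game time
PEAK = 0.8               # at or above this, stop spawning so players can recover
CALM = 0.2               # at or below this, resume spawning


@dataclass
class PacingDirector:
    intensity: float = 0.0   # 0.0 (calm) to 1.0 (overwhelmed)
    relaxing: bool = False   # True while the director is holding enemies back

    def update(self, damage_taken: float, dt: float) -> bool:
        """Advance the stress estimate; return True if spawning is allowed."""
        raised = self.intensity + damage_taken * DAMAGE_WEIGHT
        decayed = raised - DECAY_PER_SECOND * dt
        self.intensity = max(0.0, min(1.0, decayed))
        if self.intensity >= PEAK:
            self.relaxing = True
        elif self.intensity <= CALM:
            self.relaxing = False
        return not self.relaxing
```

The two thresholds create a deliberate gap: once the director backs off, it stays quiet until intensity has fallen all the way to `CALM`, which produces the rise-and-fall rhythm rather than a constant trickle of enemies.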

Generative Dialogue and Quest Design

With the integration of Large Language Models (LLMs), games can theoretically offer infinite dialogue options. Instead of selecting from a list, a player could type or speak a question to an NPC, and the NPC would generate a response consistent with the game’s lore and their character profile.

- Dynamic Quests: An AI system could analyze a player’s playstyle. If the player prefers stealth, the AI generates a heist mission. If they prefer combat, it generates a fortress assault.

- Coherent Lore Generation: The system ensures that generated quests fit the world’s history, checking against a “world bible” to prevent contradictions (hallucinations).
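
The two bullets above can be combined into a small sketch: pick a quest type from the player’s dominant playstyle, then reject anything that contradicts the world bible. Everything named here (`QUEST_TEMPLATES`, `WORLD_BIBLE`, the characters and locations) is hypothetical, and a production system would check far richer facts than a pair of sets.

```python
from collections import Counter

# Hypothetical quest templates keyed by playstyle tag.
QUEST_TEMPLATES = {"stealth": "heist", "combat": "fortress assault"}

# Hypothetical "world bible": facts that generated content must not contradict.
WORLD_BIBLE = {"dead": {"King Aldric"}, "destroyed": {"Northwatch Keep"}}


def dominant_style(action_log: list[str]) -> str:
    """Return the playstyle tag that appears most often in the player's log."""
    return Counter(action_log).most_common(1)[0][0]


def contradicts_lore(quest: dict) -> bool:
    """A quest is invalid if its giver is dead or its location no longer exists."""
    return (quest["giver"] in WORLD_BIBLE["dead"]
            or quest["location"] in WORLD_BIBLE["destroyed"])


def propose_quest(action_log: list[str], giver: str, location: str):
    """Build a quest matching the player's style, or None if it breaks the lore."""
    quest = {
        "type": QUEST_TEMPLATES[dominant_style(action_log)],
        "giver": giver,
        "location": location,
    }
    return None if contradicts_lore(quest) else quest
```

For example, `propose_quest(["stealth", "stealth", "combat"], "Mira", "Harbor")` yields a heist, while any quest handed out by the late King Aldric is rejected before the player ever sees it.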

Challenges in Narrative AI

While promising, dynamic storytelling faces significant hurdles:

- Tonal Consistency: AI might generate dialogue that is technically correct but tonally jarring (e.g., a gritty soldier using modern slang in a medieval setting).

- Narrative Arcs: Human writers are masters of pacing, foreshadowing, and emotional payoff. AI struggles to maintain a coherent three-act structure over a 40-hour game.

- Safety Bounds: Developers must implement strict guardrails to prevent AI characters from generating offensive or inappropriate content.

3. Immersive Experiences: The Next Generation of NPCs

Immersion is the holy grail of game design: the feeling of being truly transported into a virtual world. Nothing breaks immersion faster than a robotic NPC repeating the same line, such as Skyrim’s infamous “arrow in the knee.”

The “Smart” NPC

AI-driven NPCs are moving toward “Smart Agents” capable of perception, memory, and planning.

- Perception: Using computer vision and audio processing within the game engine, NPCs can “see” what the player is wearing or holding and “hear” if the player is sneaking.

- Memory (Vector Databases): Advanced NPCs can have long-term memory. If you saved a villager in the tutorial, they might remember you when you return to the village at the end of the game, not because a boolean flag was switched, but because the interaction was stored in their semantic memory.

- Goal-Oriented Action Planning (GOAP): Instead of following a script, NPCs act to fulfill needs. A hungry NPC might decide to hunt, trade, or steal food based on their morality stats and the environment.
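
The memory bullet is easier to picture with code. The sketch below stores each interaction as a vector and recalls the closest match to a query. It is a toy under loud assumptions: `embed` is a bag-of-words stand-in for a learned embedding model, and the in-memory list in the hypothetical `NpcMemory` class stands in for a real vector database.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class NpcMemory:
    """Stores past interactions and recalls the ones most similar to a query."""

    def __init__(self) -> None:
        self._entries: list[tuple[str, Counter]] = []

    def remember(self, text: str) -> None:
        self._entries.append((text, embed(text)))

    def recall(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self._entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```

The point of the design is that nothing is keyed by a hand-written flag such as `saved_villager = True`. The NPC retrieves whichever stored memory is most similar to the current situation, and that text can then be handed to a dialogue model as context.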

Natural Language Processing (NLP) Integration

Companies like NVIDIA (with their Avatar Cloud Engine, or ACE) and various startups are building middleware that allows developers to drop LLM-powered brains into 3D models.

- Voice-to-Voice Interaction: Players can speak into their microphone, and the NPC understands the intent and responds with synthesized speech that carries emotional inflection (anger, whisper, joy) matching the context.

- Lip Sync and Animation: AI models can now generate lip-syncing and facial animation in real time to match the generated audio, reducing the “ventriloquist dummy” effect of older games.

The “Uncanny Valley” of Behavior

As NPCs become more realistic, they risk falling into the Uncanny Valley—where they are close to human but “off” enough to be creepy. AI in gaming must balance hyper-realism with stylized consistency to maintain player comfort.

4. Procedural Content Generation (PCG) on Steroids

Procedural Content Generation (PCG) is not new; games like Rogue (1980) and Minecraft use algorithms to generate maps. However, traditional PCG is often based on noise functions (like Perlin noise), which can result in “oatmeal” content—infinite bowls of oatmeal are technically unique, but they all taste the same.
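
For readers who have never seen noise-based generation, here is a minimal sketch. It uses 1D value noise, a simpler relative of Perlin noise, not Perlin noise itself: random heights are placed at evenly spaced lattice points and blended linearly in between. The function name and the `cell` parameter are assumptions made for this example.

```python
import random


def value_noise_1d(seed: int, length: int, cell: int = 4) -> list[float]:
    """Return `length` terrain heights in [0, 1] from seeded, interpolated noise."""
    rng = random.Random(seed)
    # One random height per lattice point, plus padding for the final cell.
    lattice = [rng.random() for _ in range(length // cell + 2)]
    heights = []
    for x in range(length):
        i, offset = divmod(x, cell)
        t = offset / cell  # position within the cell, from 0.0 up to (not including) 1.0
        heights.append(lattice[i] * (1 - t) + lattice[i + 1] * t)
    return heights
```

Every seed gives a different skyline, yet every skyline has the same statistical character, which is exactly the “oatmeal” problem described above.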

AI-Assisted World Building

Modern AI uses machine learning to understand the rules of good design, not just the math of randomness.

- Texture and Asset Synthesis: Instead of an artist hand-painting every rock, AI can generate thousands of unique rock variations that all fit the specific geological biome of the level.

- Layout Logic: Machine learning models trained on well-designed architectural blueprints can generate dungeon layouts that make logical sense (e.g., kitchens near dining halls, guard posts near entrances), solving the chaotic randomness of older PCG.

Infinite Replayability vs. Curated Design

The debate in the industry is often “Hand-crafted vs. Procedural.” The future is likely hybrid.

- The “Sandwich” Method: Human designers create the “bread” (the start and end, key narrative beats) and the “meat” (core mechanics), while AI generates the “salad and sauce” (terrain between cities, loot distribution, civilian NPC faces).

- Runtime Generation: Some games are experimenting with generating content as you play. A horror game could analyze what scares you (e.g., do you run away from spiders but freeze when you hear whispers?) and procedurally generate the next room to exploit that specific fear.

5. Adaptive Difficulty and Personalized Gameplay

One of the oldest problems in game design is balancing difficulty. A game that is too hard causes frustration; a game that is too easy causes boredom. The ideal zone between them, where challenge matches skill, is where players can enter what psychologists call flow, or the “flow state.”

Dynamic Difficulty Adjustment (DDA)

Traditional DDA is simple: if the player dies three times, lower enemy health. AI-driven DDA aims to be subtle enough that the player never notices it.

- Behavioral Analysis: The AI tracks metrics like reaction time, accuracy, inventory usage, and movement patterns.

- Micro-Adjustments: Instead of just giving enemies less health, the AI might make enemies slightly less aggressive, drop more health potions, or subtly adjust the aim-assist friction.

- Pacing Control: In a survival horror game, an AI manager (like the director system in Alien: Isolation) can make the enemy appear to learn the player’s hiding spots and habits. If the player always hides in lockers, the Alien starts checking lockers. This forces the player to adapt, keeping the tension high.
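
A minimal sketch of the micro-adjustment idea follows. The `DifficultyTuner` class, its thresholds, and its step sizes are hypothetical; a real system would draw on many more signals than deaths and accuracy. What it illustrates is the shape of the approach: small, clamped nudges to several knobs instead of one large change to enemy health.

```python
from dataclasses import dataclass


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


@dataclass
class DifficultyTuner:
    enemy_aggression: float = 1.0   # multiplier read by a (hypothetical) enemy AI
    potion_drop_rate: float = 0.10  # chance of a potion per defeated enemy

    def update(self, recent_deaths: int, accuracy: float) -> None:
        """Nudge settings one small step toward the player's comfort zone."""
        if recent_deaths >= 2 or accuracy < 0.3:      # player is struggling
            direction = -1
        elif recent_deaths == 0 and accuracy > 0.7:   # player is cruising
            direction = 1
        else:
            return  # performance is in the target band; change nothing
        self.enemy_aggression = clamp(self.enemy_aggression + 0.05 * direction, 0.7, 1.3)
        self.potion_drop_rate = clamp(self.potion_drop_rate - 0.01 * direction, 0.05, 0.20)
```

The clamps matter as much as the adjustments. Without hard limits, a long losing streak could quietly turn the game into something the designers never intended.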

Accessibility and Inclusion

AI plays a massive role in accessibility.

- Visual Assistance: Real-time object recognition can describe the scene for visually impaired players.

- Input Adaptation: AI can learn a player’s physical limitations (e.g., tremors or slower reaction times) and filter input signals to interpret the player’s intent rather than their raw input, effectively stabilizing controls for players with motor disabilities.
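
The simplest possible version of input filtering is a fixed low-pass filter, sketched below as an exponential moving average over joystick samples. This is a stand-in, not a learning system: an adaptive approach of the kind described above would tune the hypothetical `alpha` parameter per player instead of hard-coding it.

```python
def smooth_axis(samples: list[float], alpha: float = 0.2) -> list[float]:
    """Exponential moving average over raw stick samples in [-1, 1].

    Lower alpha means heavier smoothing (steadier but less responsive).
    """
    smoothed = []
    value = samples[0] if samples else 0.0  # start from the first reading
    for sample in samples:
        value = alpha * sample + (1 - alpha) * value
        smoothed.append(value)
    return smoothed
```

Fed a jittery signal that bounces between 0.3 and 0.7, the output stays close to the 0.5 the player presumably intended. The trade-off is latency, which is why the smoothing strength should be a per-player setting.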

6. The Developer’s Toolkit: How AI Helps Make Games

While players see the result, AI is radically changing the production of games. Game development has become prohibitively expensive and time-consuming. AI tools are becoming force multipliers for developers.

Asset Creation and Prototyping

- Concept Art: Tools like Midjourney and Stable Diffusion allow art directors to generate hundreds of mood boards and concept iterations in hours rather than weeks.

- 3D Modeling: Photogrammetry combined with AI (NeRFs and Gaussian Splatting) allows devs to scan real-world objects and turn them into 3D assets far faster than manual modeling, although cleanup and optimization are usually still needed before they are game-ready.

- Coding Assistants: AI coding partners (like GitHub Copilot) help programmers write boilerplate code, debug scripts, and optimize shaders, freeing them to work on complex logic.

QA and Playtesting

Playtesting is a bottleneck. You need hundreds of humans to play the game to find bugs and balance issues.

- AI Bots as Testers: Developers can unleash thousands of AI agents into a level. These agents play the game 24/7, trying to walk into every wall, jump on every object, and break every mechanic. They generate heatmaps showing where players get stuck or where the level is too easy.

- Balancing Economies: In complex MMOs (Massively Multiplayer Online games), AI simulations can predict how changes to item drop rates will affect the in-game economy months down the line, preventing inflation or market crashes.
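
The tester-bot idea can be sketched with nothing more than random walkers on a grid. The `LEVEL` layout and function names below are made up for the example, and real test agents are far smarter than a random walk, but the output is the same kind of artifact: a per-tile visit count that can be rendered as a heatmap.

```python
import random
from collections import Counter

# Hypothetical level: '#' is a wall, 'S' the spawn point, '.' and 'E' walkable.
LEVEL = [
    "#######",
    "#S...E#",
    "#.###.#",
    "#.....#",
    "#######",
]
MOVES = [(0, 1), (0, -1), (1, 0), (-1, 0)]


def run_bot(seed: int, steps: int = 200) -> Counter:
    """Random-walk one bot from the spawn point and count visits per tile."""
    rng = random.Random(seed)
    row, col = next((r, c) for r, line in enumerate(LEVEL)
                    for c, ch in enumerate(line) if ch == "S")
    visits = Counter()
    for _ in range(steps):
        visits[(row, col)] += 1
        d_row, d_col = rng.choice(MOVES)
        if LEVEL[row + d_row][col + d_col] != "#":  # bump into walls, stay put
            row, col = row + d_row, col + d_col
    return visits


def heatmap(n_bots: int = 100, steps: int = 200) -> Counter:
    """Aggregate visit counts over many independently seeded bots."""
    total = Counter()
    for seed in range(n_bots):
        total.update(run_bot(seed, steps))
    return total
```

Tiles with very high counts are places where agents linger or get stuck; walkable tiles with zero visits are areas the bots never reached, which is often a sign of a blocked path.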

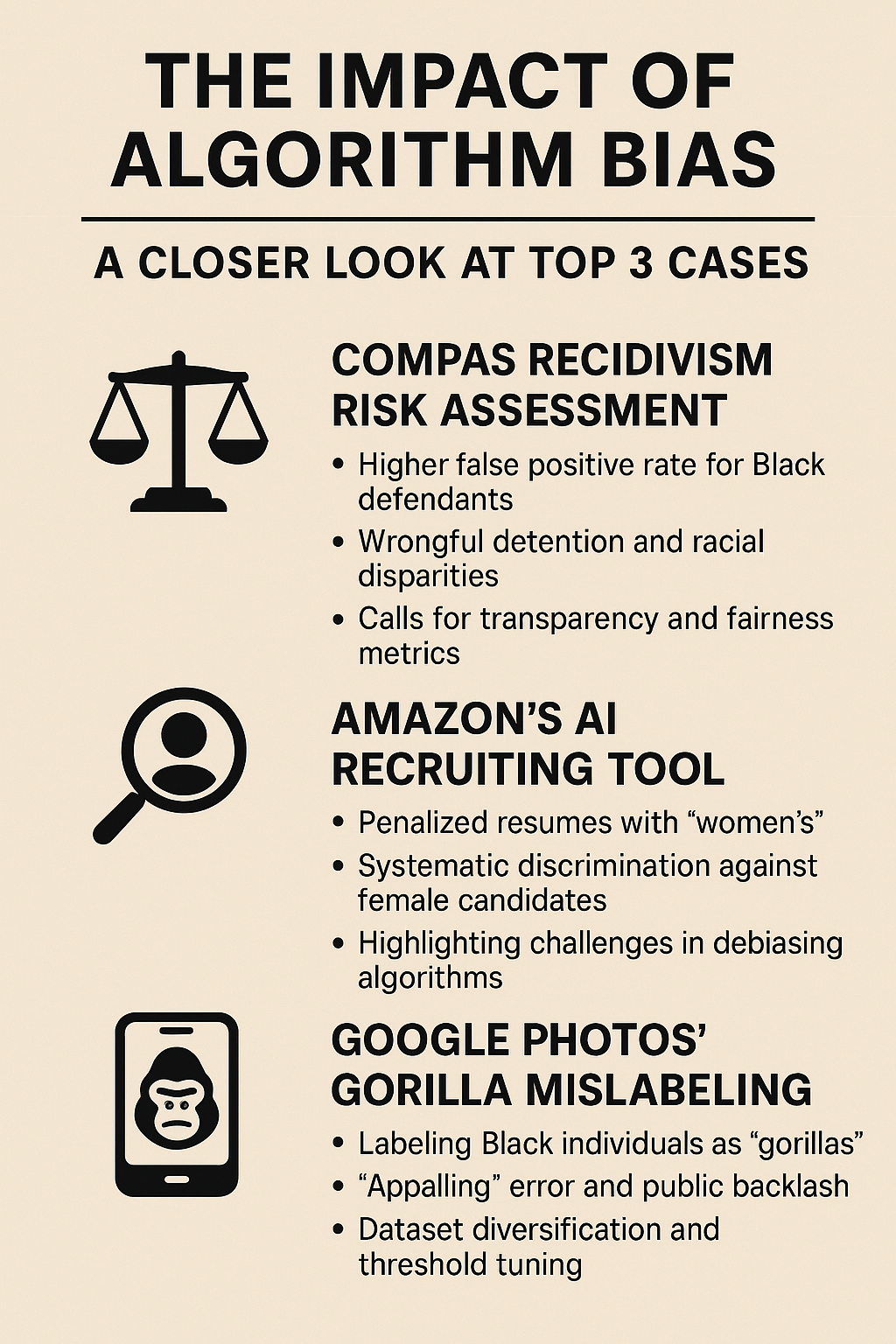

7. Ethical Considerations and Industry Challenges

The integration of AI in gaming is not without controversy. As we embrace these tools, we must address the human and ethical costs.

The “Human Touch” vs. Automation

There is a fear that AI will lead to “soulless” games. Players often value the intentionality behind a level design or a line of dialogue. If an AI generates it, does it carry the same artistic weight?

- The Consensus: Most experts argue AI should be a tool for augmentation, not replacement. It handles the drudgery so humans can focus on the “soul.”

Job Displacement

The fears of voice actors, writers, and concept artists are valid.

- Voice Acting: The ability to clone voices or generate performances raises legal and ethical questions regarding consent and compensation. Unions like SAG-AFTRA are actively defining the boundaries of AI usage in performance.

- Copyright: Using generative AI trained on copyrighted art or writing without permission remains a legal grey area that studios are navigating cautiously.

Toxicity and Moderation

In multiplayer games, AI is increasingly used as a first line of defense against toxicity.

- Real-time Moderation: AI models (like Modulate’s ToxMod) analyze voice chat in real time to detect hate speech, harassment, and grooming, handling context better than simple keyword filters.

- Bias: Developers must ensure their AI systems are not biased against specific dialects or accents when moderating voice chat.

8. Case Studies: AI in Action

To see these concepts in practice, we can look at current titles and tech demos that are pioneering AI integration.

No Man’s Sky (Procedural Generation)

While an older example, No Man’s Sky remains the benchmark for PCG. It uses mathematical formulas to generate 18 quintillion planets. The “AI” here is the set of rules that keeps each planet’s atmosphere, flora, and fauna internally consistent so that they follow biological logic (mostly). It demonstrated the scale that algorithmic generation can achieve.
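
The trick that makes this scale possible is determinism: a planet is never stored, only regenerated from its seed. The figure of 18 quintillion is consistent with a 64-bit seed, since 2^64 is roughly 1.8 × 10^19. The sketch below is not Hello Games’ algorithm; `generate_planet`, the biome list, and the consistency rule are assumptions chosen to show the principle.

```python
import random

# Hypothetical biome list for illustration.
BIOMES = ["lush", "barren", "frozen", "toxic"]


def generate_planet(seed: int) -> dict:
    """Derive every planet attribute from the seed; nothing is saved to disk."""
    rng = random.Random(seed)
    biome = rng.choice(BIOMES)
    return {
        "biome": biome,
        "radius_km": rng.randint(2000, 9000),
        # Consistency rule: only lush worlds are breathable and rich in fauna.
        "breathable": biome == "lush",
        "fauna_species": rng.randint(5, 20) if biome == "lush" else rng.randint(0, 3),
    }
```

Calling `generate_planet` twice with the same seed returns the same planet, so two players who visit the same coordinates see the same world without the game shipping or syncing any planet data.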

The Last of Us Part II (Enemy Coordination)

Naughty Dog’s enemies are not using LLMs, but they use advanced systemic AI. They communicate. If you kill an enemy named “David,” his ally will scream “David!” and become more aggressive. They flank, suppress, and communicate based on the player’s last known position. This creates an illusion of high intelligence through complex behavior trees and “barks” (audio cues).

AI Dungeon (Generative Narrative)

AI Dungeon was one of the first games to rely entirely on a large language model (initially GPT-2, later GPT-3) to act as a text-based Dungeon Master. It showed both the potential (infinite freedom) and the pitfalls (incoherence and memory loss). It serves as a prototype for the future of RPGs.

9. Future Trends: What Lies Ahead?

The convergence of gaming and AI is accelerating. Here is what the next 5-10 years might look like.

Neuro-Gaming

Neuro-gaming connects Brain-Computer Interfaces (BCIs) with AI. The AI would read your EEG signals to estimate your emotional state (bored, excited, scared) and adjust the game in real time. If you aren’t scared enough in a horror game, the AI dims the lights and spawns a monster behind you.

The Metaverse and “Living” Worlds

These are persistent virtual worlds where NPCs continue their lives even when you log off. AI agents running on cloud servers would simulate economies, ecosystems, and social structures that evolve without player intervention, creating a world that feels genuinely alive.

Text-to-Game

We are approaching a point where a user could type “Make me a platformer where I play as a cybernetic squirrel in a neon Tokyo,” and the AI generates the assets, code, and levels instantly. This democratizes game creation, blurring the line between player and developer.

Related Topics to Explore

- Generative Adversarial Networks (GANs): One of the techniques behind image generation, alongside the diffusion models used by tools such as Stable Diffusion.

- Reinforcement Learning (RL): How AI learns to play games better than humans.

- The Turing Test in Gaming: Can players distinguish between human and AI opponents?

- Ethics of AI Art: The legal landscape of using AI assets in commercial games.

- Adaptive Music Systems: How AI composes soundtracks that react to gameplay intensity.

Conclusion

AI in gaming is reshaping the medium from a static loop of input-and-response to a dynamic dialogue between player and system. By leveraging machine learning for immersive experiences and dynamic storytelling, developers are creating worlds that are more responsive, accessible, and vast than ever before.

However, the technology is merely a canvas. The masterpiece requires the human intent of designers, writers, and artists to guide the AI, ensuring that efficiency does not come at the cost of the emotional core that makes gaming so special. As we move forward, the best games will not be those made by AI, but those made with AI to amplify human creativity.

Next Steps: If you are a gamer, pay attention to the “adaptive” settings in your next game menu. If you are a developer, start experimenting with tools like Unity Muse or GitHub Copilot to see how they can streamline your workflow today.

FAQs

How does AI improve storytelling in video games?

AI improves storytelling by enabling emergent narratives. Instead of following a fixed script, AI systems can generate dialogue, quests, and plot twists in real time based on the player’s choices, playstyle, and past interactions, making every playthrough unique.

What is the difference between NPC AI and Generative AI?

Traditional NPC AI uses pre-defined scripts and decision trees to control character behavior (e.g., pathfinding, combat tactics). Generative AI uses large models to create new content from scratch, such as generating unique dialogue lines, textures, or even character backstories that didn’t exist in the game files previously.

Can AI make video games too difficult?

It can, but the goal is usually the opposite. AI is used for Dynamic Difficulty Adjustment (DDA), which analyzes a player’s performance in real time to adjust the challenge. If a player is struggling, the AI might subtly reduce enemy accuracy; if they are breezing through, it might increase enemy aggression to maintain engagement.

Will AI replace human game developers?

Unlikely. AI is viewed as a productivity multiplier. It can handle repetitive tasks like asset generation, bug testing, and coding boilerplate, allowing human developers to focus on high-level creative direction, narrative design, and unique mechanics.

What is Procedural Content Generation (PCG)?

PCG is a method where content is created algorithmically rather than manually. In gaming, PCG is used to create vast terrain, endless dungeon layouts, unique weapons, or entire planets (like in No Man’s Sky) automatically, and AI can guide it toward results that feel designed. This keeps the game world massive and varied without requiring an army of artists.

Are there ethical concerns with AI in gaming?

Yes. Major concerns include the potential displacement of jobs (voice actors, concept artists), copyright issues regarding the data used to train AI models, and the risk of toxic or biased content being generated by AI in real-time interactions with players.

What is an “AI Game Master”?

An AI Game Master is a system that oversees the game state, similar to a Dungeon Master in Dungeons & Dragons. It controls the pacing, spawns enemies, distributes loot, and alters the narrative flow based on player stress levels and performance to ensure a dramatic and enjoyable experience.

How do NPCs remember player interactions?

Modern AI NPCs can use vector databases to store memories. When a player interacts with an NPC, the interaction is converted into an embedding, a numeric vector that captures its meaning. Later, the NPC can query this database for the stored interactions most similar to the current situation and so “recall” what the player said or did previously, allowing for long-term continuity in relationships.

References

- Unity Technologies. (n.d.). Unity Muse: AI capabilities for game creation. Unity. https://unity.com/products/muse

- NVIDIA. (2023). NVIDIA ACE (Avatar Cloud Engine) for Games. NVIDIA Developer. https://developer.nvidia.com/ace

- Yannakakis, G. N., & Togelius, J. (2018). Artificial Intelligence and Games. Springer. https://gameaibook.org/

- Valve Corporation. (2009). The AI Systems of Left 4 Dead. Presented at the Game Developers Conference (GDC). https://steamcdn-a.akamaihd.net/apps/valve/2009/ai_systems_of_l4d_mike_booth.pdf

- Park, J. S., et al. (2023). Generative Agents: Interactive Simulacra of Human Behavior. Stanford University / Google Research. https://arxiv.org/abs/2304.03442

- SAG-AFTRA. (2024). AI & Digital Voice Protections. SAG-AFTRA Official Site. https://www.sagaftra.org/

- Riedl, M. O., & Bulitko, V. (2013). Interactive Narrative: An Intelligent Systems Approach. AI Magazine, 34(1), 67-77. https://ojs.aaai.org/index.php/aimagazine/article/view/2449

- Hello Games. (2016). No Man’s Sky: Technology of a Procedural Universe. GDC Vault. https://www.gdcvault.com/play/1024265/No-Man-s-Sky-Technology