The era of passive learning—clicking through static slides or watching pre-recorded videos—is rapidly fading in the face of a technological convergence that is reshaping how humans acquire skills. AI for immersive training represents the fusion of Extended Reality (XR)—including Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR)—with advanced Artificial Intelligence. This combination moves simulation beyond mere visualization into the realm of intelligent, adaptive, and responsive experiences.

For decades, simulations were scripted and linear. If a pilot in a flight simulator made a specific error, the system responded with a pre-programmed outcome. Today, AI agents and machine learning algorithms drive these environments, allowing for infinite variability, realistic conversation with virtual characters, and environments that evolve based on the learner’s stress levels and performance.

In this guide, AI for immersive training refers to the integration of machine learning, natural language processing, and computer vision within 3D simulated environments to create educational experiences that mimic the complexity and unpredictability of the real world.

Key Takeaways

- Dynamic Adaptation: Unlike static simulations, AI-driven systems adjust the difficulty and complexity of a scenario in real-time based on the learner’s performance.

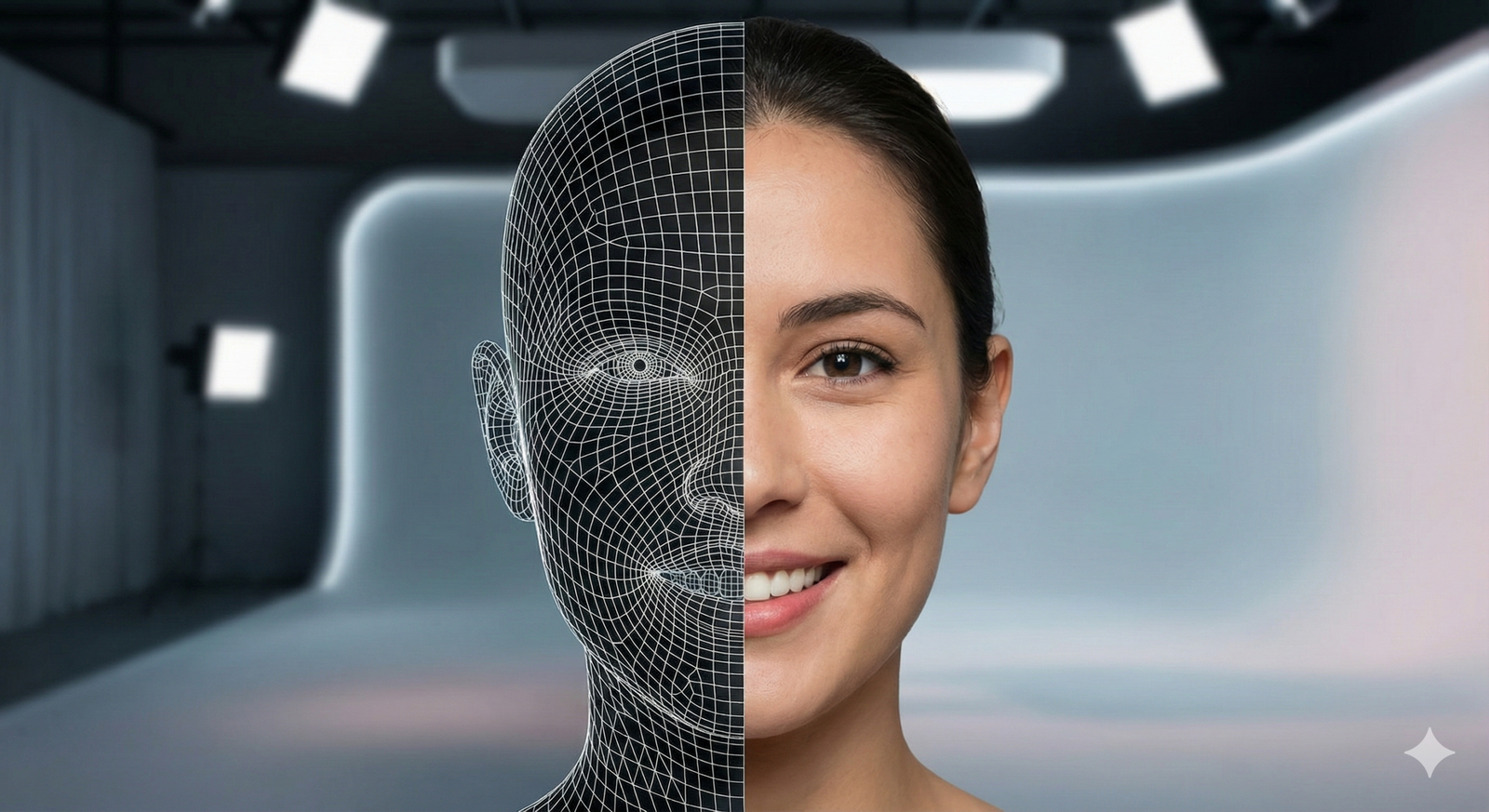

- Intelligent Virtual Agents: Large Language Models (LLMs) now power avatars, enabling trainees to have natural, open-ended voice conversations for soft skills and leadership training.

- Cost Efficiency at Scale: Generative AI dramatically reduces the cost of building 3D environments, making immersive training accessible to more industries.

- Enhanced Safety: Trainees can practice high-risk procedures—from surgery to hazardous waste disposal—with zero physical risk but high psychological fidelity.

- Deep Analytics: AI analyzes biometric data (eye tracking, hesitation, voice stress) to provide objective feedback on confidence and competence, not just test scores.

Who This Is For (And Who It Isn’t)

This guide is designed for:

- L&D Managers and HR Directors looking to modernize corporate training programs and improve employee retention.

- Operations Leaders in high-stakes industries (manufacturing, energy, healthcare, aviation) seeking safer ways to train staff.

- Educators and Instructional Designers interested in the technical integration of AI into curriculum design.

- Tech Enthusiasts wanting to understand the mechanics behind the “Industrial Metaverse” and spatial computing.

This guide is NOT for:

- Readers looking for a simple list of VR headsets (we focus on the software/AI layer).

- Those seeking purely theoretical academic papers on neural networks without practical application.

The Convergence: Where AI Meets Extended Reality (XR)

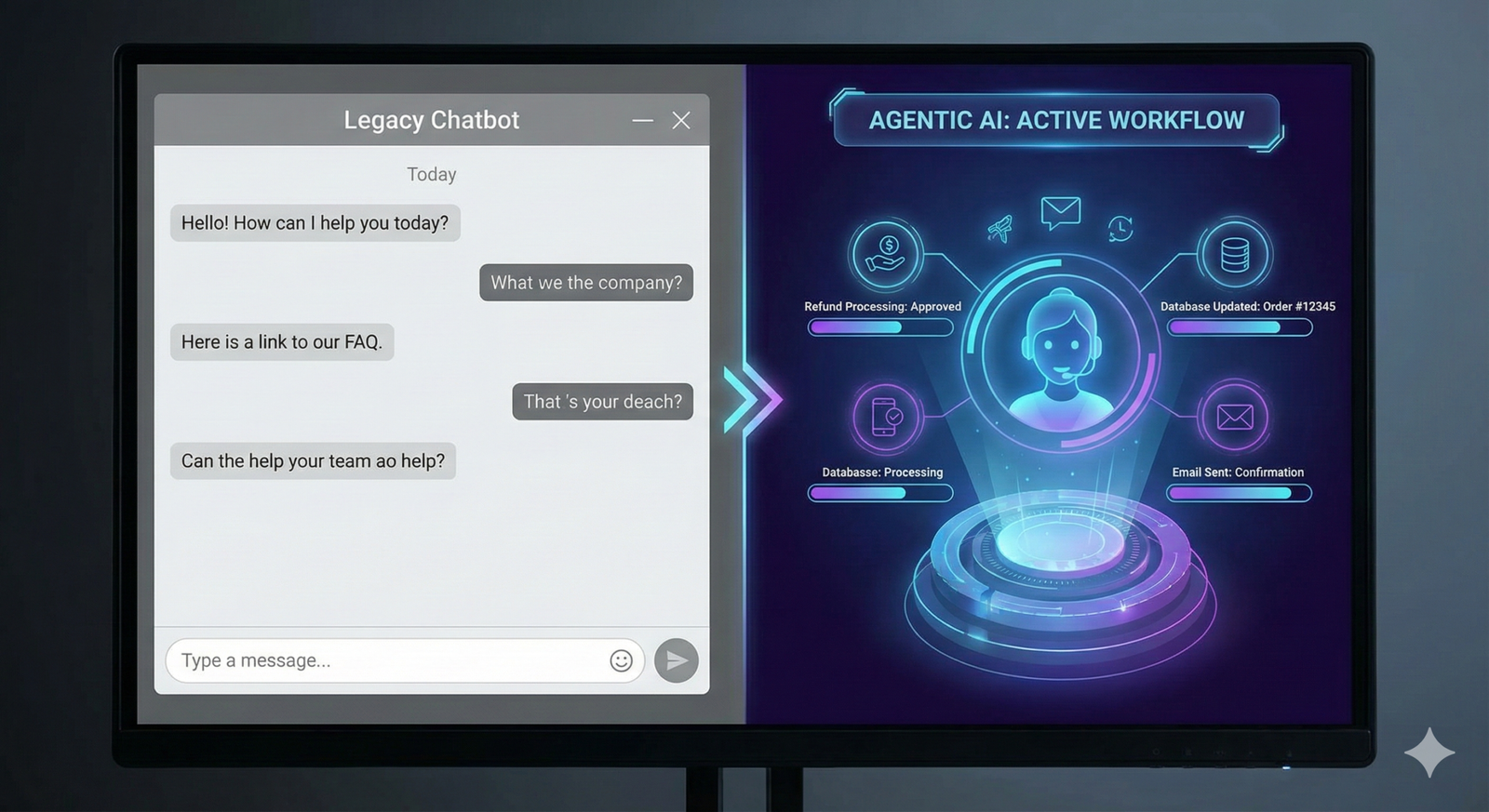

To understand why AI for immersive training is transformative, we must first look at the limitations of traditional VR. Standard VR is a powerful visualization tool—it puts you in a 3D world. However, without AI, that world is often a “ghost town” or a rigid movie set. You can look around, but the interactions are limited to what a developer explicitly coded.

AI acts as the “brain” for this virtual body. It transforms a static 3D environment into a living, breathing ecosystem.

The Three Pillars of AI-Driven Immersion

- Generative AI for Content Creation: Instead of artists manually modeling every tree or building, AI generates complex environments instantly. This allows for specific scenario generation—e.g., “Create a warehouse floor with a spilled chemical hazard and low lighting.”

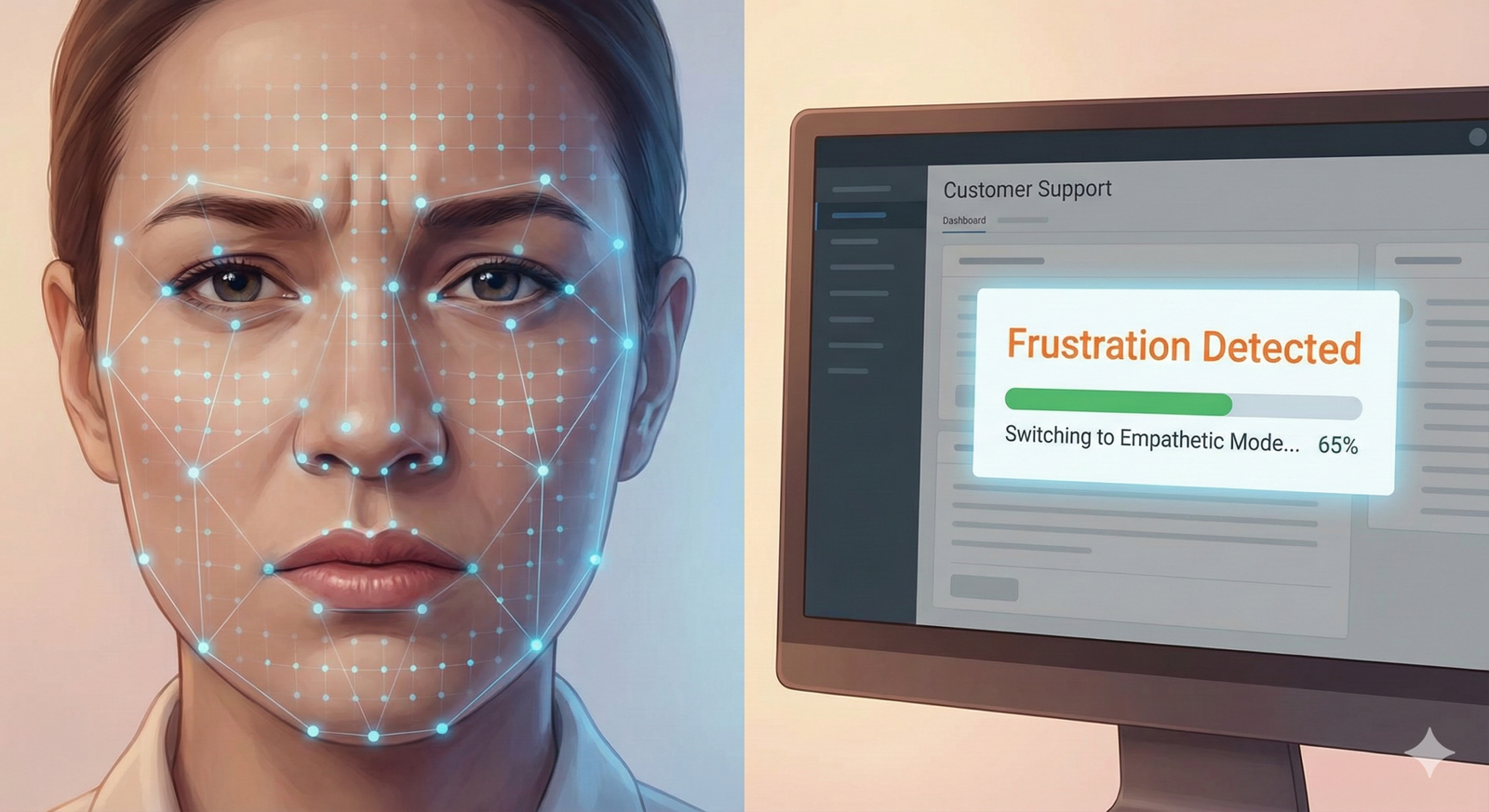

- Natural Language Processing (NLP) for Interaction: Trainees speak naturally to virtual patients, customers, or suspects. The AI understands context, sentiment, and intent, responding vocally in real-time.

- Computer Vision and Biometrics for Assessment: The system “sees” what the user is doing. It tracks hand movements, gaze fixation, and even posture to judge not just what decision was made, but how confidently it was executed.

How AI Enhances Simulation Mechanics

The primary value of AI in this context is the shift from scripted logic to probabilistic reasoning. In a scripted simulation, interactions are decision trees (If A, then B). In an AI simulation, interactions are fluid.

1. Intelligent Virtual Agents (IVAs)

In traditional role-play training, a human actor or peer is required, which is expensive and inconsistent. AI-driven avatars use LLMs to simulate distinct personalities.

- In Practice: A retail employee can train on “de-escalating an angry customer.” One run-through might feature a customer who is angry about a return policy; the next might feature a customer shouting because of a perceived insult. The AI randomizes the trigger and reacts dynamically to the employee’s tone of voice. If the employee sounds sarcastic, the AI avatar gets angrier.
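The escalation behavior described above can be sketched as a small state update. This is a minimal illustration, not a description of any particular product: the `CustomerAvatar` class, the `tone` score (assumed to come from an upstream voice or sentiment model), and the thresholds are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CustomerAvatar:
    """Hypothetical angry-customer persona; anger is kept in [0.0, 1.0]."""
    trigger: str
    anger: float = 0.6

    def react(self, tone: float) -> str:
        """Update anger from the trainee's tone and return the avatar's mood.

        tone is an assumed score from an upstream model:
        -1.0 = sarcastic or hostile, +1.0 = calm and empathetic.
        """
        # A hostile tone raises anger; an empathetic tone lowers it.
        self.anger = min(1.0, max(0.0, self.anger - 0.3 * tone))
        if self.anger >= 0.8:
            return "escalating"
        if self.anger <= 0.3:
            return "calming"
        return "tense"


avatar = CustomerAvatar(trigger="return policy")
print(avatar.react(-1.0))  # a sarcastic reply pushes the avatar toward escalation
```

In a real system the returned mood would typically be fed into the LLM prompt that drives the avatar's next line, rather than used on its own.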

2. Adaptive Learning Algorithms

Adaptive systems rely on what is often called “stealth assessment”: the AI continuously measures the trainee’s performance against a baseline without interrupting the task.

- The Mechanism: If a medical student is breezing through a virtual surgery, the AI injects complications—an unexpected hemorrhage or a drop in patient blood pressure—to test critical thinking under pressure. Conversely, if a novice is struggling, the AI simplifies the scenario, offering scaffolding hints to prevent frustration.

- Flow State: The goal is to keep the learner in the “Zone of Proximal Development,” where the task is challenging but achievable.
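The adjustment loop described above can be sketched as a simple threshold rule over recent scores. The function name, the 0.5 and 0.85 thresholds, and the 1 to 10 difficulty scale are illustrative assumptions; production systems may use richer learner models.

```python
def adjust_difficulty(level: int, recent_scores: list[float],
                      low: float = 0.5, high: float = 0.85,
                      min_level: int = 1, max_level: int = 10) -> int:
    """Return the next difficulty level from a rolling window of scores in [0, 1]."""
    if not recent_scores:
        return level  # no evidence yet, so keep the current level
    avg = sum(recent_scores) / len(recent_scores)
    if avg > high:
        # Learner is breezing through: inject a complication.
        return min(max_level, level + 1)
    if avg < low:
        # Learner is struggling: simplify and offer scaffolding.
        return max(min_level, level - 1)
    return level  # challenging but achievable, so stay put


print(adjust_difficulty(3, [0.9, 0.95, 1.0]))  # steps up one level
print(adjust_difficulty(3, [0.2, 0.4]))        # steps down one level
```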

3. Procedural Environment Generation

Generative AI allows for “infinite” scenarios.

- Example: In firefighter training, the layout of the burning building, the source of the fire, and the structural integrity can be randomized every time the simulation loads. This prevents learners from simply memorizing the map; they must learn the principles of navigation and safety.
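A seeded random generator is one simple way to get this kind of variability while keeping each run reproducible for review. The sketch below only randomizes a few scenario parameters; the `FireScenario` fields and the value ranges are hypothetical, and a real pipeline would hand these parameters to the engine or a generative model to build the actual geometry.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class FireScenario:
    floors: int
    fire_origin: str
    weakened_floor: int   # floor with compromised structural integrity
    visibility_m: float   # smoke-limited visibility in metres


def generate_scenario(seed: int) -> FireScenario:
    """Build a randomized scenario; the same seed always yields the same scenario."""
    rng = random.Random(seed)  # local RNG, so global random state is untouched
    floors = rng.randint(1, 5)
    return FireScenario(
        floors=floors,
        fire_origin=rng.choice(["kitchen", "electrical room", "garage", "stairwell"]),
        weakened_floor=rng.randint(1, floors),
        visibility_m=round(rng.uniform(0.5, 6.0), 1),
    )


print(generate_scenario(seed=7))
```

Logging the seed alongside the trainee's results lets an instructor replay the exact layout during the debrief.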

4. Biometric Feedback Loops

Modern headsets (like the Apple Vision Pro or Meta Quest Pro) contain eye-tracking and facial sensors.

- Data Usage: AI analyzes this data to infer cognitive load. If a pilot’s pupils dilate significantly and their gaze darts erratically across the dashboard, the AI infers panic or confusion. It can then pause the simulation to provide immediate feedback or tag that moment for the post-simulation review.
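As a rough sketch of the inference described above, the heuristic below flags a time window when pupil diameter rises well above the trainee's baseline and gaze positions scatter widely. The function name and both thresholds are assumptions chosen for illustration, not validated values; pupil size also responds to scene brightness, so a real system would need to control for that.

```python
from statistics import mean, pstdev


def cognitive_load_flag(pupil_mm, gaze_points, baseline_pupil_mm,
                        dilation_ratio=1.25, dispersion_limit=0.2):
    """Return True when a window shows both dilated pupils and scattered gaze.

    pupil_mm: pupil diameters (mm) sampled over the window.
    gaze_points: (x, y) gaze positions in normalized screen coordinates [0, 1].
    """
    dilated = mean(pupil_mm) > dilation_ratio * baseline_pupil_mm
    xs = [x for x, _ in gaze_points]
    ys = [y for _, y in gaze_points]
    # Sum of per-axis standard deviations as a crude measure of gaze scatter.
    dispersion = pstdev(xs) + pstdev(ys)
    return dilated and dispersion > dispersion_limit


calm = cognitive_load_flag([3.0, 3.1, 3.0],
                           [(0.50, 0.50), (0.51, 0.50), (0.50, 0.49)], 3.0)
stressed = cognitive_load_flag([4.2, 4.4, 4.3],
                               [(0.1, 0.9), (0.9, 0.1), (0.2, 0.2), (0.8, 0.8)], 3.0)
print(calm, stressed)
```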

Key Use Cases by Industry

AI for immersive training is not a futuristic concept; it is currently deployed across major sectors to solve specific, high-value problems.

Healthcare: Surgical Precision and Bedside Manner

Medical errors are a leading cause of accidental injury. AI simulations allow practitioners to fail safely.

- Surgical Robotics Training: Surgeons use VR to operate robotic arms. AI analyzes the telemetry of their hand movements, scoring them on smoothness, efficiency, and tremor control.

- Virtual Patients: Doctors practice delivering bad news to AI-driven patient avatars. The AI evaluates the doctor’s empathy, choice of words, and ability to maintain eye contact, providing a “bedside manner score.”
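One common way to quantify the "smoothness" of hand movement mentioned above is jerk, the third derivative of position. The sketch below estimates mean squared jerk from a one-dimensional position trace using finite differences; it is an illustrative metric under the assumption of evenly spaced samples, not the scoring method of any specific surgical platform.

```python
def mean_squared_jerk(positions, dt):
    """Mean squared jerk of a 1-D position trace sampled every dt seconds.

    Lower values indicate smoother motion. Uses the third finite difference
    x[i+3] - 3*x[i+2] + 3*x[i+1] - x[i], divided by dt**3.
    """
    if len(positions) < 4:
        raise ValueError("need at least 4 samples for a third difference")
    jerks = [
        (positions[i + 3] - 3 * positions[i + 2] + 3 * positions[i + 1] - positions[i]) / dt ** 3
        for i in range(len(positions) - 3)
    ]
    return sum(j * j for j in jerks) / len(jerks)


steady = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]   # constant velocity
shaky = [0.0, 1.2, 1.9, 3.3, 3.8, 5.1]    # same path with tremor
print(mean_squared_jerk(steady, dt=1.0) < mean_squared_jerk(shaky, dt=1.0))
```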

Defense and Law Enforcement: Situational Awareness

For soldiers and police officers, split-second decisions determine survival.

- Shoot/Don’t Shoot Scenarios: Instead of video projection screens, officers enter VR scenes where AI suspects exhibit ambiguous behaviors (e.g., reaching for a wallet vs. a weapon). The AI varies the suspect’s behavior based on the officer’s verbal commands.

- Tactical Strategy: AI controls enemy combatants in war games, using reinforcement learning to adapt to the squad’s tactics. If the human squad always flanks left, the AI enemy learns to set traps on the left.
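The flanking example can be illustrated with something much simpler than full reinforcement learning: an opponent that counts which route the squad has used and defends the most frequent one. This frequency-counting stand-in is a deliberate simplification, and the class and route names are hypothetical.

```python
from collections import Counter


class AdaptiveOpponent:
    """Defends whichever approach route the human squad has used most often."""

    def __init__(self, routes=("left", "center", "right")):
        self.routes = routes
        self.observed = Counter()

    def observe(self, squad_route):
        """Record the route the squad took in the last engagement."""
        self.observed[squad_route] += 1

    def choose_defense(self):
        """Pick the route to trap; default to the middle route with no data."""
        if not self.observed:
            return self.routes[len(self.routes) // 2]
        return max(self.routes, key=lambda r: self.observed[r])


opponent = AdaptiveOpponent()
for route in ["left", "left", "right", "left"]:
    opponent.observe(route)
print(opponent.choose_defense())  # the squad's habitual flank
```

A learned policy would also weigh how successful each defense was, which is where reward-driven methods come in.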

Manufacturing and Heavy Industry: The Industrial Metaverse

Safety and efficiency are paramount in environments with heavy machinery.

- Digital Twins: Companies create a perfect digital replica (Twin) of a factory. New hires train in the Twin using VR. AI simulates equipment failures—like a steam valve bursting—to train emergency response protocols without shutting down the real plant.

- Assembly Training: AR glasses highlight parts in the real world. AI verifies if the worker has attached the correct bolt with the correct torque (using computer vision verification) before letting them proceed.

Corporate Enterprise: Soft Skills and Leadership

This is the fastest-growing sector for AI immersive training.

- DEI (Diversity, Equity, and Inclusion): Users embody an avatar of a different gender or race to experience microaggressions in a virtual workplace. AI characters react to the user’s interventions (or lack thereof), teaching bystander intervention skills.

- Public Speaking: A user speaks to a virtual audience. AI analyzes pacing, filler words (“um,” “uh”), and eye contact distribution, while the virtual audience reacts—leaning in when interested or looking at phones when bored.
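The pacing and filler-word analysis described above can be approximated from a transcript and its duration. This sketch assumes a speech-to-text step has already produced the transcript; the filler list and the function name are illustrative.

```python
import re

FILLERS = {"um", "uh", "er"}  # illustrative list; extend per language and audience


def speech_metrics(transcript, duration_s):
    """Compute pacing and filler-word statistics for one spoken segment."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    words = re.findall(r"[a-z']+", transcript.lower())
    fillers = sum(1 for w in words if w in FILLERS)
    return {
        "words_per_minute": len(words) * 60 / duration_s,
        "filler_count": fillers,
        "filler_ratio": fillers / len(words) if words else 0.0,
    }


print(speech_metrics("Um, so our revenue, uh, grew this quarter", duration_s=6.0))
```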

The Technology Stack: What Powers AI Simulations?

Understanding the “how” requires looking at the software and hardware stack that makes these experiences possible.

1. The Physics and Rendering Engine

The foundation is usually a game engine like Unity or Unreal Engine. These provide the visual fidelity and physics (gravity, collision).

- AI Integration: Developers use SDKs (Software Development Kits) to plug AI models directly into these engines. For example, NVIDIA’s Omniverse allows for physically accurate, AI-enhanced simulations.

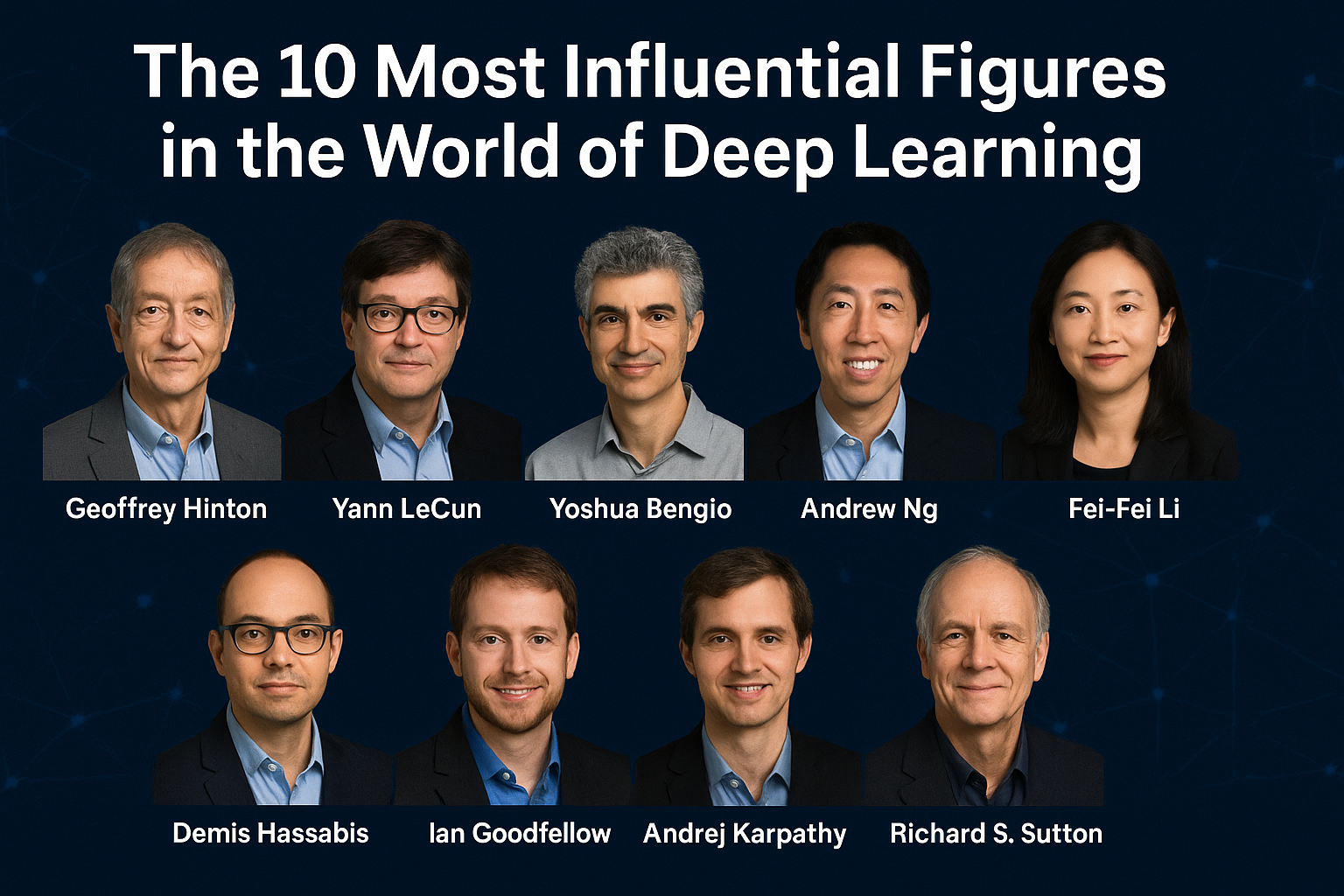

2. The Brain: Large Language Models (LLMs)

To power conversation, training platforms integrate models like GPT-4, Llama 3, or proprietary enterprise models.

- Latency Challenges: In a voice conversation, a 3-second delay breaks immersion. “Edge AI” (processing data on the device rather than in the cloud) is increasingly used to reduce this lag.
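To see where conversational delay comes from, it helps to add up the stages of a single voice turn. The stage names and every timing below are made-up placeholders for illustration, not measurements; the point is only that removing the network round trip and shortening model response time is how on-device processing shrinks the total.

```python
# All timings are hypothetical placeholders in milliseconds, not benchmarks.
CLOUD_PIPELINE_MS = {
    "speech_to_text": 300,
    "network_round_trip": 200,
    "llm_first_token": 900,
    "text_to_speech": 400,
}
EDGE_PIPELINE_MS = {
    "speech_to_text": 250,
    "network_round_trip": 0,
    "llm_first_token": 500,
    "text_to_speech": 250,
}


def turn_latency_ms(stages):
    """Total delay between the trainee finishing a sentence and the avatar replying."""
    return sum(stages.values())


def slowest_stage(stages):
    """Name of the stage contributing the most delay."""
    return max(stages, key=stages.get)


for name, stages in [("cloud", CLOUD_PIPELINE_MS), ("edge", EDGE_PIPELINE_MS)]:
    print(name, turn_latency_ms(stages), "ms; bottleneck:", slowest_stage(stages))
```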

3. Neural Radiance Fields (NeRFs) and Gaussian Splatting

These are AI techniques used to create realistic 3D environments from 2D photos.

- Application: An instructor can walk through a real factory floor recording a video on their phone. AI converts that video into a fully navigable 3D training environment in minutes, rather than the weeks it used to take manual 3D modelers.

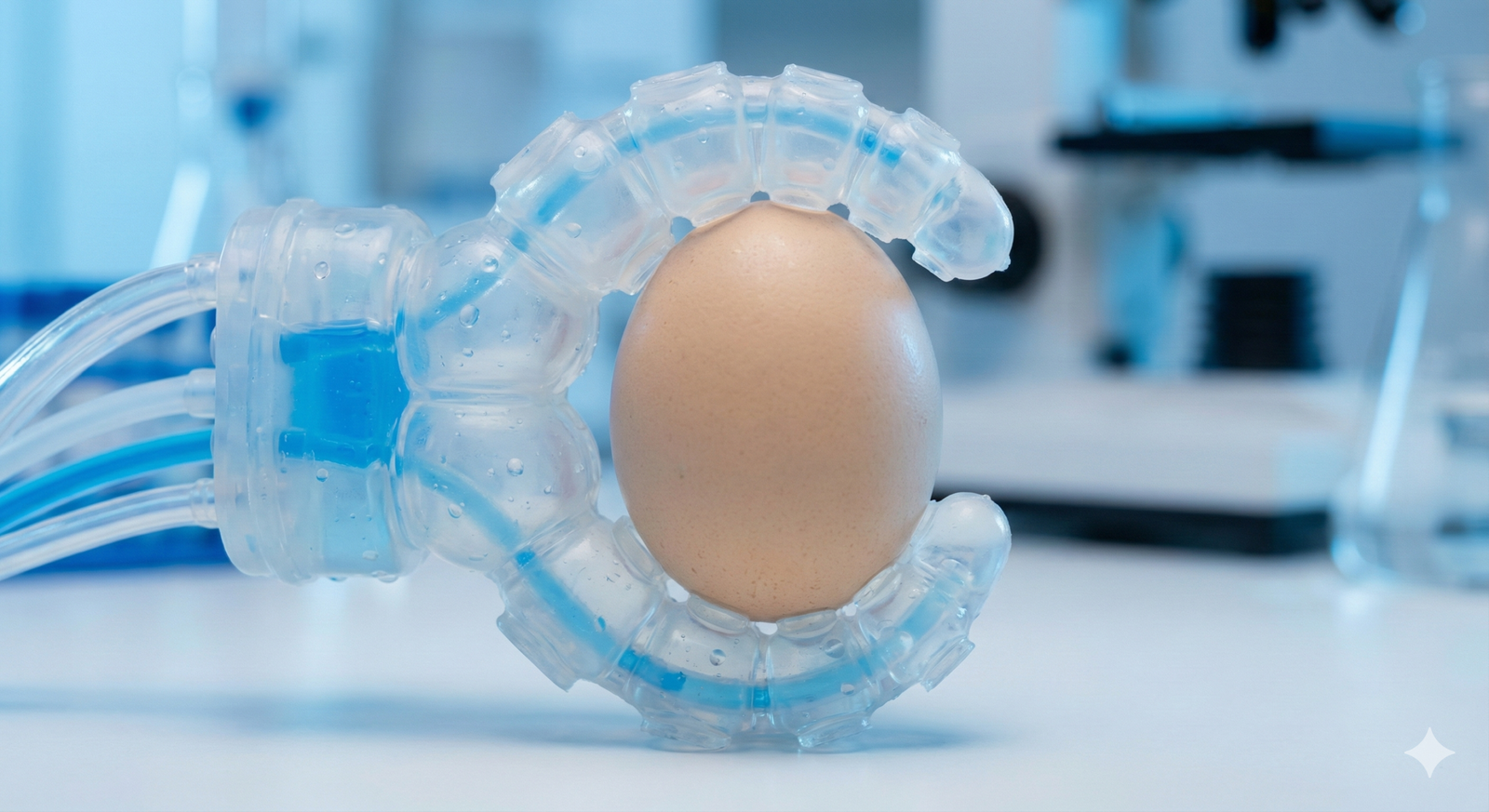

4. Haptics and Sensory Feedback

While visual and auditory AI is advanced, touch is the next frontier.

- AI-Driven Haptics: Gloves and vests use AI to interpret interaction data and trigger vibrations or resistance. If a mechanic strips a screw in VR, the controller resistance changes instantly to mimic the sensation of metal shearing.

Benefits vs. Traditional Training Methods

Why are companies investing millions into this technology? The ROI (Return on Investment) comes from specific comparative advantages.

| Feature | Traditional Classroom/E-Learning | AI-Driven Immersive Training |
| --- | --- | --- |
| Engagement | Passive; low retention rates. | Active; “learning by doing” drives muscle memory. |
| Scalability | High for video, low for hands-on mentorship. | High; one AI mentor can train thousands simultaneously. |
| Safety | Safe but theoretical. | Safe and practical; allows failure without consequence. |
| Feedback | Delayed (grades returned days later). | Instantaneous; real-time correction during the task. |
| Personalization | One-size-fits-all curriculum. | Hyper-personalized; adapts to individual learning curves. |

The “Retention” Argument

Studies (such as those by PwC) have shown that VR learners can train up to 4x faster than classroom learners and are up to 275% more confident in applying the skills they learned. AI amplifies this by ensuring the training is relevant to the user’s specific gaps in knowledge.

Implementation Guide: Adopting AI Immersive Training

For organizations looking to deploy this technology, the path forward involves strategic planning.

Phase 1: Needs Analysis and Use Case Definition

Do not buy headsets looking for a problem to solve. Identify a bottleneck.

- Criteria: Is the skill hard to simulate? Is it dangerous? Is it expensive to organize (e.g., flying teams to a central site)? Is it rare (e.g., responding to a specific disaster)?

Phase 2: Hardware Selection

- Standalone VR (e.g., Meta Quest 3, HTC Vive Focus): Best for scalable, wireless training. Sufficient for most soft skills and procedural tasks.

- PC-Tethered VR (e.g., Varjo, Pimax): Necessary for ultra-high-fidelity simulations like flight or detailed surgical planning where graphical precision is non-negotiable.

- AR/MR (e.g., Microsoft HoloLens, Apple Vision Pro): Best for “heads-up” training where the user needs to see their real hands and tools.

Phase 3: Software and AI Integration

- Off-the-Shelf vs. Custom: Many vendors offer pre-built AI modules for leadership or safety. For proprietary machinery, custom development using Unity/Unreal is required.

- Data Privacy Setup: Ensure the AI models (especially if cloud-based) comply with GDPR or enterprise security standards. Voice data and biometric data are highly sensitive.

Phase 4: Pilot and Iterate

- The “Motion Sickness” Check: Run a small pilot to test comfort levels. AI can help here by stabilizing frame rates (using techniques like DLSS – Deep Learning Super Sampling) to reduce nausea.

- Baseline Calibration: Use the first cohort to train the AI. Gather data on “average” performance to help the algorithms distinguish between a novice and an expert.
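Baseline calibration can be as simple as recording the pilot cohort's results and comparing later trainees against that distribution. The sketch below uses a z-score on task completion time and assumes that faster completion indicates more expertise; that assumption, the labels, and the threshold of one standard deviation are all illustrative.

```python
from statistics import mean, stdev


def build_baseline(cohort_times_s):
    """Summarize the pilot cohort's completion times as (mean, standard deviation)."""
    return mean(cohort_times_s), stdev(cohort_times_s)


def classify(time_s, baseline, threshold=1.0):
    """Label a trainee by how far their time sits from the cohort average."""
    mu, sigma = baseline
    if sigma == 0:
        return "typical"  # cohort had no spread, so nothing can be distinguished
    z = (time_s - mu) / sigma
    if z <= -threshold:
        return "expert-like"    # markedly faster than the cohort
    if z >= threshold:
        return "needs support"  # markedly slower than the cohort
    return "typical"


baseline = build_baseline([100, 110, 120, 130, 140])
print(classify(95, baseline), classify(120, baseline), classify(150, baseline))
```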

Phase 5: Deployment and Analytics

- LMS Integration: The VR system must talk to the company’s Learning Management System (LMS).

- Dashboarding: Operations managers should have a dashboard showing not just “completion,” but “competency heatmaps” generated by the AI.
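One widely used format for sending simulation results to an LMS or learning record store is an xAPI statement, a JSON object with an actor, a verb, and an object. The article does not name a specific protocol, so treating xAPI as the integration format is an assumption here, and the activity URL, email address, and helper name are hypothetical.

```python
import json


def build_statement(learner_email, activity_id, scaled_score, passed):
    """Build an xAPI-style statement describing one completed simulation run."""
    return {
        "actor": {"objectType": "Agent", "mbox": f"mailto:{learner_email}"},
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/completed",
            "display": {"en-US": "completed"},
        },
        "object": {"objectType": "Activity", "id": activity_id},
        "result": {
            "score": {"scaled": scaled_score},  # scaled scores range from -1 to 1
            "success": passed,
            "completion": True,
        },
    }


statement = build_statement(
    "trainee@example.com",
    "https://example.com/activities/valve-failure-drill",  # hypothetical activity ID
    scaled_score=0.82,
    passed=True,
)
print(json.dumps(statement, indent=2))
```

Per-skill scores sent this way are the raw material a dashboard would aggregate into a competency heatmap.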

Common Mistakes and Pitfalls

While promising, AI immersive training is not without risks.

1. The “Uncanny Valley” and AI Hallucinations

If an AI character looks realistic but moves jerkily or says something factually incorrect (hallucination), it breaks immersion and trust.

- Mitigation: Use “Bounded” AI agents. Restrict the LLM to a specific knowledge base (RAG – Retrieval-Augmented Generation) so a virtual safety inspector doesn’t start discussing philosophy or inventing safety regulations.
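The bounding idea can be sketched without any model at all: retrieve a passage from an approved knowledge base first, and only build a prompt if something relevant was found. The keyword-overlap retrieval below is a simplified stand-in for the embedding search a real RAG system would use, and the knowledge base entries are placeholder text, not actual regulations.

```python
import re

# Placeholder content for illustration only; not real safety guidance.
KNOWLEDGE_BASE = {
    "lockout tagout": "Isolate and lock the energy source before servicing equipment.",
    "chemical spill": "Evacuate the area, then report the spill to the shift supervisor.",
}
REFUSAL = "I can only answer questions about this site's safety procedures."


def retrieve(question, min_overlap=2):
    """Return the best-matching passage, or None if nothing is relevant enough."""
    q_words = set(re.findall(r"[a-z]+", question.lower()))
    best_topic, best_overlap = None, 0
    for topic in KNOWLEDGE_BASE:
        overlap = len(q_words & set(topic.split()))
        if overlap > best_overlap:
            best_topic, best_overlap = topic, overlap
    return KNOWLEDGE_BASE[best_topic] if best_overlap >= min_overlap else None


def build_prompt(question):
    """Return a grounded prompt for the LLM, or None when the agent should refuse."""
    passage = retrieve(question)
    if passage is None:
        return None  # caller replies with REFUSAL; the LLM is never invoked
    return f"Answer using only this passage:\n{passage}\n\nQuestion: {question}"


print(build_prompt("What do I do after a chemical spill?"))
print(build_prompt("What is the meaning of life?") or REFUSAL)
```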

2. Biometric Privacy Concerns

Collecting data on how a user’s eyes move or how their hands tremble is invasive.

- Ethical Guardrails: Data must be anonymized. Employees must be explicitly informed what is being tracked and why. It should be used for development, not punitive performance reviews.
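A minimal sketch of one such guardrail: before biometric records leave the training system, direct identifiers are dropped and the employee ID is replaced with a keyed hash. Strictly speaking this is pseudonymization rather than full anonymization, because whoever holds the key can re-link records. The field names and helper functions are hypothetical.

```python
import hashlib
import hmac

IDENTIFYING_FIELDS = {"employee_id", "name", "email"}  # assumed record schema


def pseudonymize(employee_id: str, secret_key: bytes) -> str:
    """Replace an employee ID with a keyed SHA-256 digest (stable per key)."""
    return hmac.new(secret_key, employee_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identity(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and attach a pseudonymous subject token."""
    cleaned = {k: v for k, v in record.items() if k not in IDENTIFYING_FIELDS}
    cleaned["subject"] = pseudonymize(record["employee_id"], secret_key)
    return cleaned


key = b"store-this-key-outside-the-analytics-system"
raw = {"employee_id": "E1042", "name": "A. Trainee", "gaze_dispersion": 0.31}
print(strip_identity(raw, key))
```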

3. Over-Engineering

Not every training program needs AI or VR.

- Rule of Thumb: If it can be learned effectively via a PDF or a Zoom call, do not build a VR simulation for it. Save AI immersion for complex, behavioral, or spatial skills.

4. Technical Debt and Maintenance

Hardware creates friction. Managing a fleet of 500 headsets—keeping them charged, updated, and sanitized—is a logistical challenge often underestimated.

- Solution: Mobile Device Management (MDM) software specifically for XR is essential for scaling.

Future Trends: What to Expect Next

As of early 2026, the trajectory for AI in immersive training points toward deeper integration of biological data and seamless generation.

Brain-Computer Interfaces (BCI)

Early experimentation is combining lightweight EEG sensors with VR headsets.

- Potential: The AI could literally read “focus” or “frustration” brainwaves directly, adjusting the simulation before the user even physically reacts.

Hyper-Realistic Avatars

Advancements in Gaussian Splatting and AI rigging will make avatars indistinguishable from video. This is crucial for high-stakes empathy training, such as suicide prevention or hostage negotiation, where micro-expressions matter.

Automated Content Generation (Text-to-World)

Instructional designers will soon be able to type: “Generate a hospital room scenario with a patient suffering from cardiac arrest, surrounded by three panicked family members,” and the AI will build the 3D assets, script the agents, and set the lighting in seconds.

Related Topics to Explore

- Digital Twins in Industry 4.0: How mirroring physical assets in digital space optimizes operations.

- The Ethics of AI in Education: Balancing personalized learning with data privacy.

- Haptic Technology Evolution: Moving beyond vibration to thermal and resistance feedback.

- Retrieval-Augmented Generation (RAG): How to keep AI agents accurate in corporate settings.

- Spatial Computing Hardware: A comparison of AR vs. VR headsets for enterprise.

Conclusion

AI for immersive training is more than a technological upgrade; it is a paradigm shift in how we transfer knowledge. By moving from passive observation to active, AI-guided participation, organizations can compress the time to competency and produce a workforce that is more resilient, empathetic, and skilled.

The synergy of AI and XR solves the “scalability vs. efficacy” paradox. Historically, highly effective training (like 1-on-1 mentorship) was not scalable, and scalable training (like videos) was not highly effective. AI-driven simulation breaks this trade-off, offering personalized mentorship at infinite scale.

Next Steps: If you are considering implementing this technology, start by identifying your “high-consequence, low-frequency” events—situations that are rare but catastrophic if mishandled. These are your highest ROI candidates for an AI immersive training pilot.

FAQs

1. What is the difference between VR training and AI immersive training? Standard VR training places the user in a 3D environment, but the interactions are often static or scripted (e.g., clicking buttons). AI immersive training adds intelligence to the environment, allowing for adaptive difficulty, realistic conversations with avatars, and dynamic scenarios that change based on user behavior.

2. Is AI immersive training expensive to implement? Initially, yes, due to hardware and software development costs. However, Generative AI is rapidly lowering the cost of content creation. Over time, it is often cheaper than physical training, especially for industries requiring expensive equipment, travel, or raw materials (e.g., welding or flight training).

3. Can AI training replace human instructors? No, it is best used as an augmentation tool. AI handles the repetitive “drills” and baseline knowledge transfer, allowing human instructors to focus on advanced coaching, nuance, and emotional support. It acts as a “force multiplier” for instructors.

4. How does the AI measure “soft skills” in VR? AI measures soft skills by analyzing voice modulation (tone, volume, speed), choice of words (sentiment analysis), eye contact (gaze tracking), and body language. It compares these metrics against a database of “successful” interactions to score the user on empathy, confidence, and clarity.

5. Does AI immersive training cause motion sickness? It can, but AI is helping to solve this. AI upscaling technologies (like DLSS) help sustain higher frame rates, and low or unstable frame rates are a major contributor to nausea. Furthermore, better headset tracking and optimized software design have significantly reduced motion sickness in modern applications.

6. What hardware is needed for AI immersive training? It ranges from standalone headsets like the Meta Quest 3 (good for general training) to high-end PC-VR setups for complex simulations. Some lightweight AR applications can even run on tablets or smartphones, though they offer less immersion than headsets.

7. Is data collected during training secure? Security is a major concern. Reputable enterprise platforms encrypt biometric and performance data. Organizations should ensure their vendors are SOC 2 or ISO 27001 compliant and that AI processing happens locally (on-device) or in a secure private cloud, not on public servers.

8. Can I use AI immersive training for office workers? Absolutely. It is widely used for leadership development, public speaking, negotiation, and diversity training. It provides a safe space for managers to practice difficult conversations before having them with real employees.

9. How long does it take to build a custom AI simulation? Traditionally, this took months. With new Generative AI tools assisting in coding and 3D asset creation, simple scenarios can be built in weeks. Complex, verified digital twins for engineering still require several months of development and validation.

10. What is “adaptive learning” in this context? Adaptive learning means the simulation changes based on your performance. If you are solving a problem too easily, the AI introduces a new variable or time constraint to challenge you. If you are failing, it slows down or provides hints, ensuring personalized pacing.

References

- PwC. (2022). The Effectiveness of Virtual Reality Soft Skills Training in the Enterprise. PricewaterhouseCoopers. https://www.pwc.com/us/en/tech-effect/emerging-tech/virtual-reality-study.html

- Deloitte. (2023). The Spatial Computing Era: How XR and AI are transforming the workforce. Deloitte Insights.

- Unity Technologies. (2024). AI and Machine Learning in Real-Time 3D. Unity. https://unity.com/solutions/artificial-intelligence

- NVIDIA. (2025). Omniverse and the Industrial Metaverse: Simulating Reality with AI. NVIDIA Developer Zone. https://developer.nvidia.com/omniverse

- Microsoft. (2024). Mixed Reality and AI on the Edge: HoloLens 2 Capabilities. Microsoft Learn. https://learn.microsoft.com/en-us/windows/mixed-reality/

- Journal of Medical Internet Research (JMIR). (2023). Artificial Intelligence and Virtual Reality in Surgical Training: A Systematic Review. JMIR Serious Games. https://games.jmir.org/

- IEEE. (2024). Standards for Biometric Privacy in Extended Reality. IEEE Standards Association. https://standards.ieee.org/

- Harvard Business Review. (2023). How Generative AI Will Change Learning and Development. HBR.org. https://hbr.org/