Disclaimer: The content provided in this article is for informational purposes only and does not constitute legal or professional advice. Regulations surrounding artificial intelligence, data privacy, and industry-specific compliance are complex and subject to change. Always consult with qualified legal counsel and compliance officers regarding your specific jurisdiction and use case.

The shift from generative AI that simply talks to agentic AI that acts is the single biggest transformation in enterprise technology this decade. However, for organizations operating in regulated industries—such as healthcare, finance, legal services, and energy—this shift introduces a new magnitude of risk. When an AI system can execute database queries, send emails, process refunds, or triage patient data, the “blast radius” of an error expands significantly.

In regulated sectors, moving fast and breaking things is not an option. Security and compliance cannot be afterthoughts; they must be the architectural foundation upon which autonomous agents are built. This guide explores how to navigate the complex intersection of autonomous capability and rigid regulatory requirements.

Key Takeaways

- Agency changes the risk profile: Unlike passive chatbots, AI agents have “tool use” capabilities, meaning they can modify data and trigger real-world actions, requiring stricter access controls.

- Zero Trust is mandatory: Treat AI agents as untrusted users. They should never have broad administrative privileges; instead, apply the Principle of Least Privilege (PoLP) to every tool they access.

- The “Black Box” is a liability: Regulators require explainability. You must implement “Chain of Thought” logging to prove why an agent made a specific decision, not just what it decided.

- Human-in-the-loop (HITL) is a spectrum: For high-stakes decisions (e.g., loan denials, medical diagnoses), human review is often a legal requirement, not just a safety measure.

- Compliance is continuous: Standards like the EU AI Act and NIST AI RMF are dynamic. Your governance framework must adapt to model updates and drifting data distributions.

Who this is for (and who it isn’t)

This guide is for:

- CTOs and CISOs in regulated sectors (Finance, Health, Insurance, Government) tasked with approving or securing AI agent deployments.

- Compliance Officers and Legal Counsel needing to understand the technical implications of “autonomy” within existing frameworks like GDPR, HIPAA, or SOX.

- Product Managers and Developers building agentic workflows who need to design for “compliance-by-default.”

This guide is NOT for:

- Hobbyists looking to build personal AI assistants for casual use.

- Organizations in completely unregulated spaces where “hallucinations” carry zero legal or financial liability.

1. The Unique Challenge: Why Agents Are Different from Chatbots

To secure an AI agent, you must first understand how it differs from a standard Large Language Model (LLM).

A standard LLM (like a basic ChatGPT interface) is a passive processor. It takes text in and puts text out. If it makes a mistake, the primary risk is misinformation or reputational damage.

An AI Agent is an active operator. It uses an LLM as a “brain” to reason, but it is equipped with “arms” (tools and APIs). It can query a SQL database, post to a Slack channel, execute code, or modify a customer’s subscription status.

The “Agency” Attack Surface

From a security perspective, giving an LLM tools creates three specific vulnerabilities that do not exist in passive systems:

- Excessive Agency: The agent performs actions that were not intended by the developer (e.g., an agent authorized to read calendar invites decides to delete them to resolve a conflict).

- Indirect Prompt Injection: An agent reading a website or email to gather information encounters hidden text (malicious instructions) that commands the agent to exfiltrate data or execute a rogue command.

- Privilege Escalation: If an agent shares a user account’s permissions, a successful prompt injection could give an attacker access to everything that user can access.

In regulated industries, these aren’t just bugs; they are compliance violations. An agent that accidentally exposes patient data due to a prompt injection is a HIPAA breach. An agent that hallucinates a financial regulation to approve a risky loan is a violation of banking standards.

2. The Regulatory Landscape (As of 2026)

Navigating compliance requires mapping agent capabilities to specific legal frameworks. As of early 2026, the regulatory environment has matured significantly.

The EU AI Act

The European Union’s AI Act remains the global benchmark for AI regulation. For agents, the classification system is critical:

- Prohibited Practices: Agents that manipulate human behavior to cause harm or conduct biometric categorization are banned.

- High-Risk AI Systems: Agents used in critical infrastructure, education, employment (hiring sorting), essential private services (credit scoring), and law enforcement are “High Risk.”

- Requirement: These require rigorous conformity assessments, high-quality data governance, detailed documentation, and human oversight.

- General Purpose AI (GPAI): If your agent is built on a powerful foundation model, transparency obligations apply, including detailed summaries of training data.

HIPAA (Healthcare – USA)

The Health Insurance Portability and Accountability Act (HIPAA) focuses on the privacy and security of Protected Health Information (PHI).

- The Agent Challenge: If an AI agent processes patient notes or schedules appointments, it is a “Business Associate.”

- Requirement: You must ensure the agent does not retain PHI in its training data (unless part of a private instance) and that all data in transit and at rest is encrypted. Furthermore, the “Minimum Necessary Rule” applies: the agent should only access the specific data needed for the task, not the entire patient history.

GDPR (Data Privacy – EU/UK)

The General Data Protection Regulation gives individuals rights over their data.

- Right to Explanation: Users have the right to know if a decision (like a loan denial) was made by an algorithm.

- Right to be Forgotten: If a user asks to be deleted, can you remove their data from the agent’s memory? (This is difficult if the agent uses Retrieval Augmented Generation (RAG) vector stores—you must be able to locate and delete specific chunks).

NIST AI Risk Management Framework (AI RMF 1.0/2.0)

While voluntary in the US, the NIST AI RMF is the standard against which liability is often measured in court. It focuses on four functions: Govern, Map, Measure, and Manage. Adopting NIST standards is often viewed as “due diligence” by regulators.

3. Core Security Architecture for Regulated Agents

You cannot rely on the model provider (e.g., OpenAI, Anthropic, Google) to handle all your security. You must build a “defense in depth” architecture around the agent.

A. The Principle of Least Privilege (PoLP) for Tools

Never give an AI agent a “God Mode” API key.

- Scope Down: If an agent needs to check order status, give it a read-only API token for the Orders table. Do not give it write or delete access unless strictly necessary.

- Ephemeral Credentials: Ideally, agents should generate short-lived access tokens for specific sessions rather than holding static long-term keys.

B. Input Guardrails (The Firewall for Logic)

Before a user’s prompt reaches the agent, and before the agent’s response reaches the user, it must pass through a guardrail layer.

- Sanitization: Scrub PII (Personally Identifiable Information) before it enters the context window. Tools like Microsoft Presidio or specialized regex patterns can replace “John Smith” with “[PERSON_1]”.

- Topic Blocking: Ensure the agent refuses to engage in out-of-scope topics. A banking agent should flatly refuse to discuss medical advice or political opinions.

C. Output Validation (Syntactic and Semantic)

Never assume the agent’s output is safe or correct.

- Syntactic Validation: If the agent is supposed to output JSON, use a parser to verify the structure before executing it. If it fails, do not execute; trigger a retry or error handling flow.

- Semantic Validation: Use a smaller, faster model (or a deterministic rule engine) to “grade” the agent’s proposed action. For example, if a wealth management agent proposes a trade, a secondary rule check must verify the trade doesn’t exceed the client’s risk portfolio limit before execution.

D. Zero Trust Implementation

Treat the AI agent as an external entity, even if hosted internally.

- Network Segmentation: Isolate the environment where the agent runs. It should not have direct line-of-sight to core banking ledgers or main patient databases without passing through an API gateway with strict rate limiting and logging.

- Human Approval Steps: For high-impact actions (e.g., transferring funds over $1,000), hard-code a requirement for human approval. The agent prepares the transaction, but a human must click “Approve.”

4. Compliance Strategies: Auditability and Explainability

In a regulated industry, “The AI did it” is not a legal defense. You must be able to reconstruct the crime scene.

The “Chain of Thought” Log

Traditional application logs capture Inputs and Outputs. For agents, this is insufficient. You must capture the Reasoning Trace.

- What to log:

- User Prompt: The raw input.

- System Prompt: The instructions given to the agent (versioned).

- Retrieval Context: What documents did the RAG system pull? (Cite specific file IDs).

- Internal Monologue: The agent’s “thought process” (e.g., “User asked for refund. Policy section 4.2 says refunds allowed within 30 days. Checking date…”).

- Tool Execution: What API was called, and what was the raw payload returned?

- Final Response: What the user saw.

Why this matters: If a regulator asks why a claim was denied, you can point to the specific document the agent retrieved and the logic it used. Without this, the decision is arbitrary and defenseless.

Data Provenance and RAG

Retrieval Augmented Generation (RAG) is the standard for connecting agents to enterprise data. Compliance requires strict data provenance.

- Access Control Lists (ACLs): The agent should respect the document-level permissions of the user. If Employee A asks a question, the agent should only search documents Employee A is allowed to see.

- Citation Mode: Configure the agent to strictly cite its sources. If it cannot find a source in the provided context, it must say “I don’t know,” rather than inventing a fact.

5. Deployment Lifecycle: Testing and Red Teaming

You cannot deploy an agent into a regulated environment based on “vibes” or a few successful chat tests.

Red Teaming (Adversarial Testing)

Before launch, you must aggressively attack your own agent.

- Jailbreaking: Try to bypass safety filters to make the agent be rude, biased, or harmful.

- Prompt Injection: Attempt to trick the agent into revealing its system instructions or leaking data from previous turns.

- Edge Case Flooding: What happens if the user inputs gibberish, a 10,000-word prompt, or code snippets?

- Domain Specific Attacks:

- Finance: Try to trick the agent into promising a guaranteed return on investment (illegal).

- Health: Try to trick the agent into diagnosing a condition based on insufficient symptoms.

Evaluation Metrics (Evals)

Establish quantitative benchmarks.

- Faithfulness: How often does the answer contradict the retrieved context?

- Answer Relevance: Did it actually answer the user’s question?

- Toxicity: Is the language professional and neutral?

- Refusal Accuracy: Did it correctly refuse to answer out-of-bound questions (e.g., “How do I launder money?”)?

In regulated industries, you should maintain a “Golden Dataset” of questions and ideal answers. Every time you update the model or the system prompt, run an automated regression test against this dataset to ensure performance hasn’t degraded.

6. Industry-Specific Considerations

Financial Services (FinTech, Banking, Insurance)

- Regulation: SEC, FINRA, SOX, PCI-DSS.

- Specific Risk: “Hallucinated Financial Advice.”

- Key Control: Disclaimers are not enough. You need deterministic overrides. If a user asks “What stock should I buy?”, the agent must trigger a hard-coded response: “I cannot provide investment advice.” Do not rely on the LLM to generate this refusal politely; force it via a classifier.

- Data: PCI-DSS prohibits storing credit card numbers in unencrypted logs. Ensure your “Chain of Thought” logging creates a “toxic waste” problem by inadvertently storing sensitive financial data.

Healthcare (Providers, Payers, Pharma)

- Regulation: HIPAA, HITECH, FDA (for Software as a Medical Device).

- Specific Risk: Diagnostic errors and bias in care.

- Key Control: Human-in-the-loop (HITL). An agent can triage or summarize, but it should rarely diagnose autonomously. For automated scheduling, ensure the agent understands urgency—a patient describing “chest pain” must be routed to emergency protocols, not offered an appointment next Tuesday.

- Data: Business Associate Agreements (BAA) are mandatory with any LLM provider (OpenAI, Microsoft, AWS). If they won’t sign a BAA, you cannot send them PHI.

Legal Services

- Regulation: Attorney-Client Privilege, Duty of Competence.

- Specific Risk: Inventing case law (the “Avianca” case scenario).

- Key Control: Grounding checks. Any legal citation generated by an agent must be cross-referenced against a verified database (like Westlaw or LexisNexis) via an API before being shown to the user.

7. Common Mistakes and Pitfalls

Even sophisticated teams make errors when moving from prototype to production in regulated spaces.

Mistake 1: Relying on “System Prompts” as Security

“Please do not reveal sensitive data” is a suggestion, not a firewall. LLMs are probabilistic; they can be tricked or simply fail to follow instructions.

- Fix: Use programmatic constraints. If data shouldn’t be revealed, don’t put it in the context window.

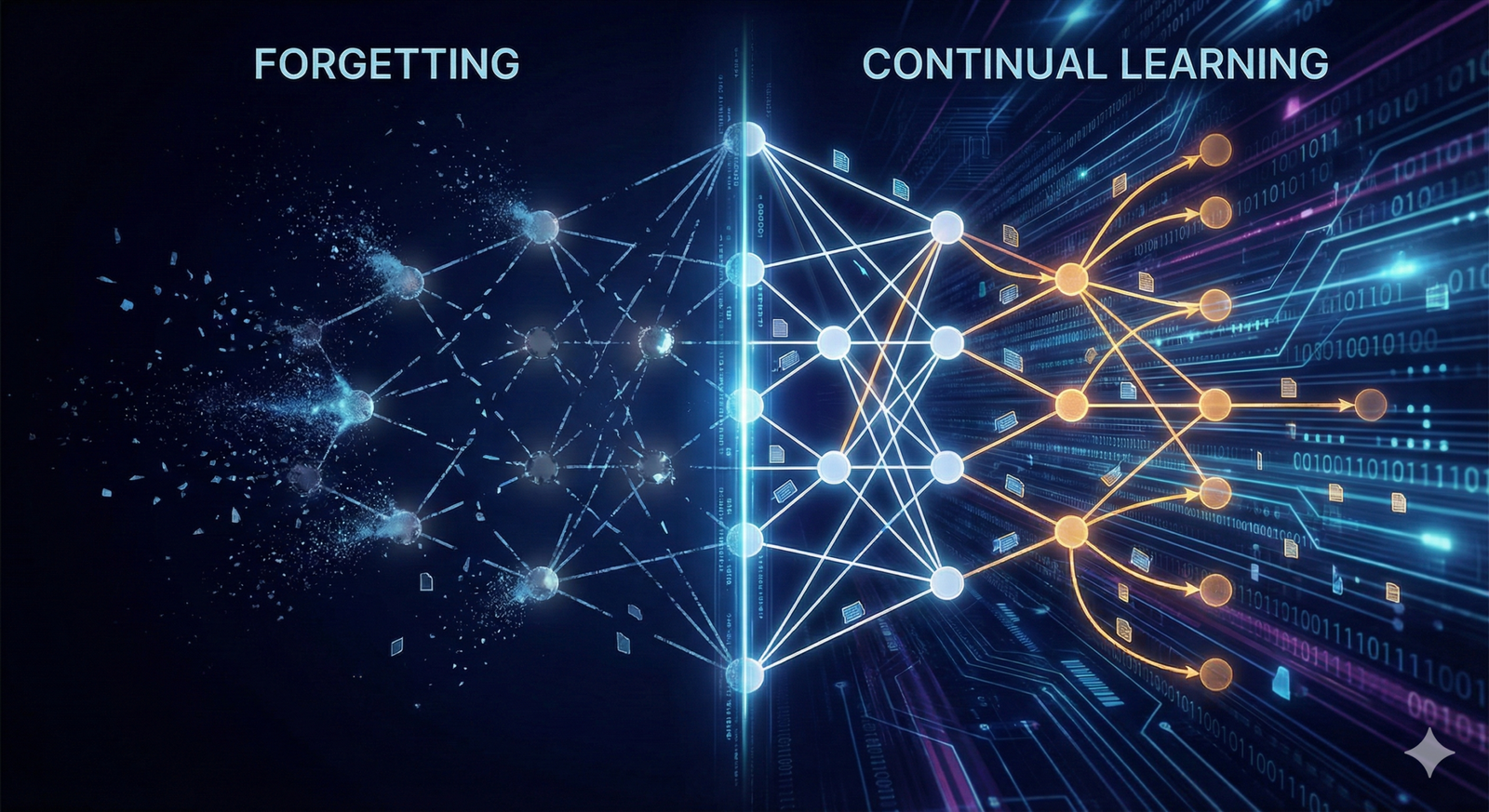

Mistake 2: Ignoring Model Drift

Models change. OpenAI or Anthropic might update the model behind the API, slightly changing how it interprets instructions. In a regulated environment, a 1% change in behavior can be a compliance breach.

- Fix: Pin specific model versions (e.g., gpt-4-0613 rather than gpt-4). Have a strategy for testing and migrating when older versions are deprecated.

Mistake 3: The “Human in the Loop” Rubber Stamp

If a human approves 100% of the AI’s suggestions because they are busy or tired, you have “automation bias.” The human is no longer a safety layer; they are a liability shield.

- Fix: Design the UI to force engagement. Instead of a simple “Approve” button, make the human select which part of the summary is correct, or intentionally insert “canary” errors during training to ensure the human is paying attention.

8. Implementation Framework: A Step-by-Step Security Plan

If you are deploying an agent today, follow this progression to ensure compliance.

Phase 1: Assessment & Classification

- Define the Use Case: What exactly will the agent do?

- Risk Tiering: Is this High Risk (EU AI Act)? Does it touch PHI (HIPAA)?

- Data Inventory: What data will the agent access? Where does it live?

Phase 2: Architectural Design

- Select the Model: Ensure the vendor offers BAA/Zero Data Retention policies.

- Design the RAG Pipeline: Implement ACL-aware retrieval.

- Define Guardrails: Configure input/output filters (NeMo Guardrails, Guardrails AI, or custom logic).

Phase 3: Development & Iteration

- Prompt Engineering: Develop comprehensive system instructions focusing on “refusal” and “tone.”

- Tool Definition: Build APIs with strict scopes (Read-Only where possible).

- Red Teaming: Conduct adversarial testing.

Phase 4: Deployment & Monitoring

- Limited Rollout: Release to a small trusted group first.

- Observability: Turn on full tracing (LangSmith, Arize, Datadog). Monitor for latency, toxicity, and refusal rates.

- Feedback Loops: Allow users to report “unsafe” or “incorrect” responses easily.

9. Tools and Technology Landscape

You do not need to build everything from scratch. The ecosystem for “LLM Ops” and security has exploded.

- Guardrailing Frameworks:

- NVIDIA NeMo Guardrails: Programmable guardrails to keep agents on topic.

- Guardrails AI: An open-source Python library for validating LLM outputs (e.g., ensuring JSON validity, checking for bias).

- Lakera / hiddenlayer: Specialized security tools for detecting prompt injections and adversarial attacks.

- Observability & Evaluation:

- LangSmith / LangFuse: Essential for tracing the “chain of thought” and debugging agent steps.

- Arize AI: Monitors for embedding drift and hallucination rates in production.

- Privacy Enhancing Technologies (PETs):

- Microsoft Presidio: For PII identification and redaction.

- Private GPT instances: Running open-source models (Llama 3, Mistral) within your own VPC (Virtual Private Cloud) to ensure data never leaves your perimeter.

10. Related Topics to Explore

- Retrieval Augmented Generation (RAG) best practices: Optimizing vector search for accuracy.

- Prompt Engineering for Safety: How to write robust system instructions.

- Synthetic Data Generation: Creating fake PII to test healthcare agents safely.

- Local LLM Deployment: Running models on-premise for maximum security.

- AI Governance Boards: How to structure a human committee to oversee AI risk.

Conclusion

The deployment of AI agents in regulated industries is not a “fire and forget” project. It is a continuous discipline of risk management. The goal is not to eliminate risk entirely—that is impossible—but to manage it to a level that is acceptable to your regulators, your leadership, and your customers.

By adopting a “Security by Design” approach—utilizing least privilege access, comprehensive guardrails, and immutable audit logs—you can unlock the immense productivity benefits of agentic AI without compromising the trust that defines your industry.

Next Steps: Identify your highest-value, lowest-risk use case (e.g., an internal document search agent rather than a customer-facing financial advisor) and build a Proof of Concept (PoC) incorporating the logging and guardrails discussed above.

FAQs

1. What is the difference between AI safety and AI compliance? AI safety focuses on preventing unintended harm, such as bias, toxicity, or dangerous actions by the model. AI compliance focuses on adhering to specific laws and regulations (like GDPR or HIPAA). While they overlap, a model can be safe (doesn’t hurt anyone) but non-compliant (stores data in the wrong jurisdiction), or compliant (follows all laws) but unsafe (gives bad advice).

2. Can we use ChatGPT Enterprise in a regulated industry? Yes, but with caveats. ChatGPT Enterprise offers “zero data retention” for training (meaning OpenAI doesn’t train on your data) and is SOC 2 compliant. However, you are still responsible for how your employees use it. You must ensure you have a Business Associate Agreement (BAA) signed if you are in healthcare, and you must configure settings to prevent data leakage.

3. How do we prevent “Prompt Injection” in customer-facing agents? There is no silver bullet, but “defense in depth” helps. Use a layered approach: 1) Input filtering to catch known attack patterns. 2) Instructions in the system prompt to prioritize system instructions over user input. 3) Output filtering to catch successful leaks. 4) Use separate LLMs for analysis vs. action execution to segregate duties.

4. Does the EU AI Act ban AI agents? No, it does not ban them. It classifies them based on risk. Most autonomous agents in sectors like banking, health, or critical infrastructure will be classified as “High Risk,” requiring strict documentation, quality management systems, and human oversight. Only agents that manipulate behavior or use real-time biometric identification in public spaces are generally prohibited.

5. What is “Human-in-the-loop” vs. “Human-on-the-loop”? “Human-in-the-loop” means the agent cannot execute an action without explicit human approval (e.g., clicking “Send” on a drafted email). “Human-on-the-loop” means the agent executes actions autonomously, but a human monitors the system in real-time and can hit a “kill switch” or intervene if performance degrades.

6. How long should we keep AI audit logs? This depends on your specific industry regulations. In finance (e.g., SEC Rule 17a-4), you may need to keep records for up to 6 years. In healthcare, HIPAA has similar retention requirements for access logs. A general best practice for AI is to retain the “Chain of Thought” logs for at least the duration of the liability statute of limitations for your sector.

7. Can AI agents be liable for malpractice? Currently, liability generally falls on the human or organization deploying the AI, not the software itself. If a doctor uses an AI agent that misdiagnoses a patient, the doctor is usually liable for relying on the tool without proper verification. This is why “Human-in-the-loop” is critical for liability protection.

8. What is “Hallucination” in the context of compliance? In compliance terms, hallucination is a data integrity failure. If an agent generates a false transaction record or cites a non-existent regulation, it compromises the integrity of your business operations. This is treated similarly to a human employee falsifying records, often requiring incident reporting and remediation.

9. How do we handle “Right to be Forgotten” with AI agents? This is challenging. If an agent’s training data contained personal info, you might need to retrain or fine-tune the model to remove it (machine unlearning). If the agent uses RAG (retrieval), it is easier: you simply delete the document from the vector database, and the agent can no longer recall that information.

10. Do we need a dedicated “Chief AI Officer”? For large regulated enterprises, yes, or at least a dedicated “AI Governance Lead.” The intersection of technical capability, legal requirements, and ethical considerations is too complex to be a side-job for a generic CISO or CTO. You need someone who owns the specific policies regarding autonomous systems.

References

- NIST (National Institute of Standards and Technology). “AI Risk Management Framework (AI RMF).” NIST Trustworthy & Responsible AI Resource Center. https://www.nist.gov/itl/ai-risk-management-framework

- European Parliament. “EU AI Act: first regulation on artificial intelligence.” European Parliament News. https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence

- OWASP (Open Worldwide Application Security Project). “OWASP Top 10 for Large Language Model Applications.” OWASP Foundation. https://owasp.org/www-project-top-10-for-large-language-model-applications/

- U.S. Department of Health and Human Services (HHS). “HIPAA Privacy Rule and Health Information Technology.” HHS.gov. https://www.hhs.gov/hipaa/for-professionals/special-topics/health-information-technology/index.html

- Federal Trade Commission (FTC). “Chatbots, deepfakes, and voice clones: AI deception for sale.” FTC Business Blog.

- Databricks. “The Big Book of MLOps.” Databricks Resources. (Reference for operationalizing ML security). https://www.databricks.com/resources/ebook/the-big-book-of-mlops

- Microsoft. “Governing AI: A Blueprint for the Future.” Microsoft On the Issues.

- Information Commissioner’s Office (ICO – UK). “Guidance on AI and Data Protection.” ICO.org.uk. https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/artificial-intelligence/guidance-on-ai-and-data-protection/

- Google Cloud. “Secure AI Framework (SAIF).” Google Cloud Security. https://cloud.google.com/security/saif