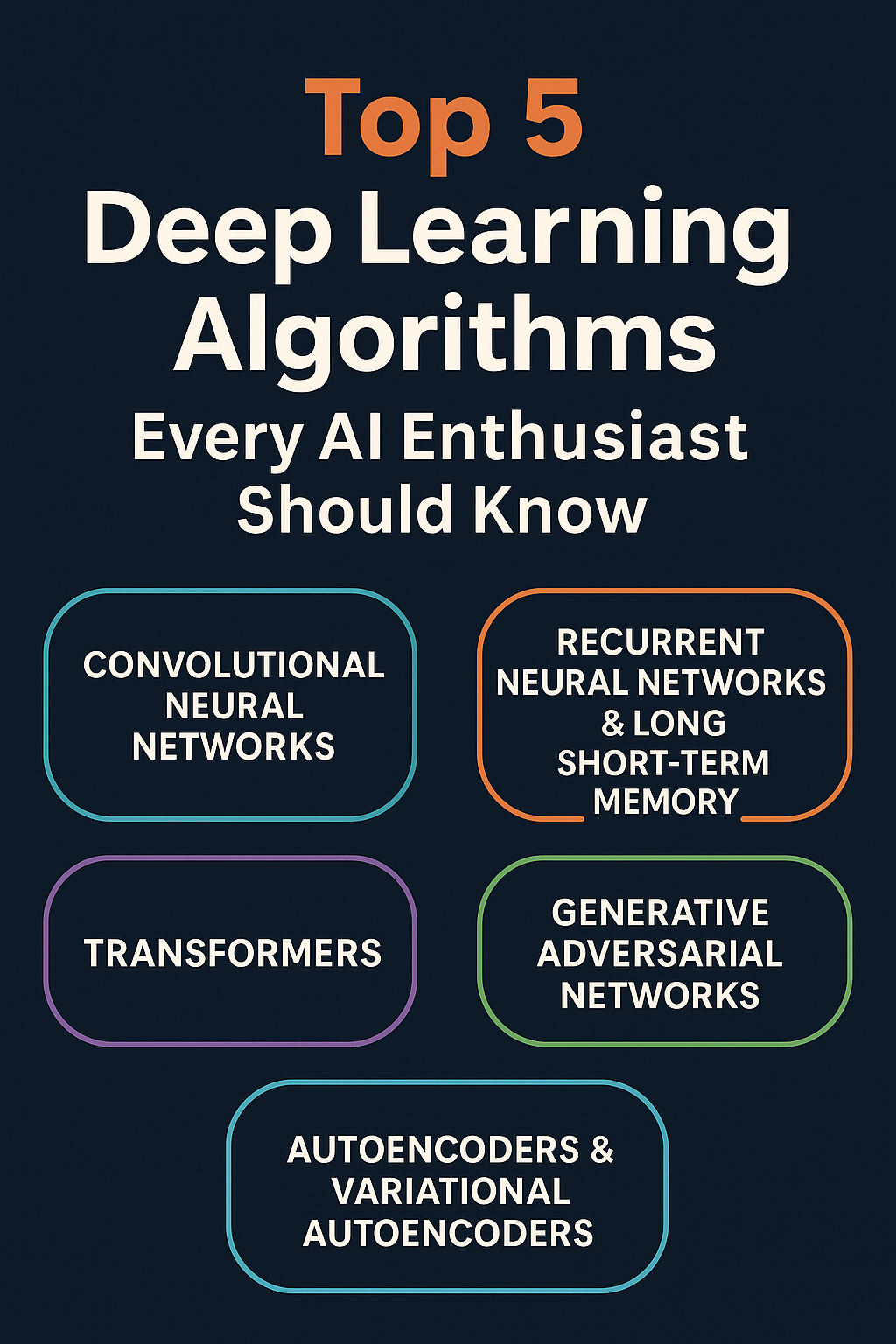

Deep learning has transformed artificial intelligence, driving major advances in speech recognition, natural language processing, computer vision, and more. By training large neural networks with many layers, deep learning learns hierarchical representations of data automatically. Whether you are a hobbyist or work in AI research or engineering, knowing the most important deep learning algorithms is essential for staying competitive and building state-of-the-art systems.

This in-depth post covers the Top 5 Deep Learning Algorithms that everyone interested in AI should know:

- Convolutional Neural Networks (CNNs)

- Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM)

- Transformers

- Generative Adversarial Networks (GANs)

- Autoencoders and Variational Autoencoders (VAEs)

For each one, we’ll explain how it works, its strengths and weaknesses, and how it is applied in the real world.

What Is Deep Learning?

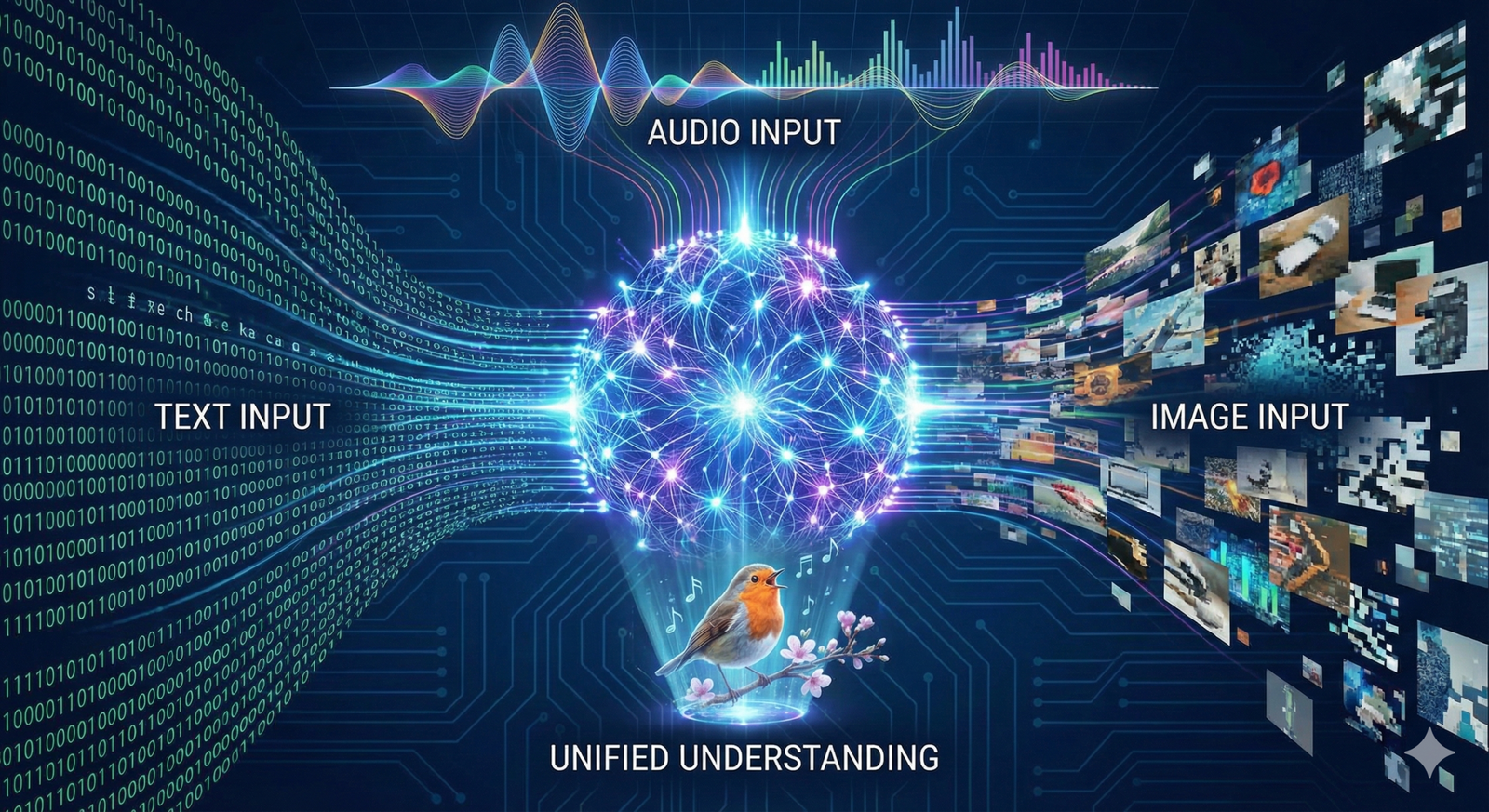

Deep learning is a subset of machine learning that uses neural networks with multiple hidden layers to uncover complex patterns in data. Unlike traditional algorithms, which rely on hand-engineered features, deep learning methods learn hierarchical representations directly from raw inputs such as images, audio waveforms, or text.

Key characteristics include:

- Layered Architecture: Stacked layers extract features at progressively higher levels of abstraction.

- Backpropagation: Gradient-based optimization adjusts millions (or billions) of weights.

- Massive Data and Compute: Training deep networks typically requires large labeled datasets and GPUs or TPUs.
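To make the backpropagation idea concrete, here is a minimal sketch: a single linear neuron fitted with plain gradient descent, with the gradients derived by hand using the chain rule. The toy data (points on y = 2x + 1), the learning rate, and the function name `train_linear_neuron` are illustrative assumptions; deep learning frameworks compute these gradients automatically across many layers.

```python
def train_linear_neuron(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w * x + b by minimizing mean squared error with gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: prediction errors for the current weights.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Backward pass: dL/dw and dL/db for L = mean(error^2), via the chain rule.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Gradient step: move each weight against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b = train_linear_neuron(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")  # w = 2.000, b = 1.000
```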

Deep learning has driven breakthroughs in many domains, from image classification (e.g., on ImageNet) to language modeling (e.g., GPT-4). Understanding the core algorithms equips you to tackle a wide range of AI problems.

Why These Algorithms Matter

These five algorithms form the backbone of today’s AI systems:

- CNNs excel at processing spatial data such as images and videos, thanks to convolution operations.

- RNNs and LSTMs handle sequential data such as language, time series, and speech, modeling how it changes over time.

- Transformers revolutionized NLP with self-attention and now lead in tasks such as translation, summarization, and code generation.

- GANs generate realistic images and other synthetic data that can be tailored to different needs.

- Autoencoders and VAEs enable unsupervised representation learning, anomaly detection, and data compression.

Knowing how these algorithms work, what their key hyperparameters are, and how best to apply them will help you choose the right one for each task.

1. Convolutional Neural Networks (CNNs)

1.1 Overview

Convolutional Neural Networks (CNNs) are neural networks designed for grid-structured data such as images. Yann LeCun pioneered CNNs in the late 1980s, and they rose to prominence in 2012 thanks to AlexNet. Convolutional layers detect local patterns such as edges and textures, pooling layers downsample the resulting feature maps, and fully connected layers perform the final classification.

1.2 Key Components

- Convolutional Layer: Applies learnable filters (kernels) across the input to produce feature maps.

- Activation Function: Functions such as ReLU and Leaky ReLU introduce nonlinearity, letting the network model complex patterns.

- Pooling Layer: Downsamples feature maps (max or average pooling), reducing computation and providing a degree of translation invariance.

- Fully Connected Layer: Takes the flattened feature maps and produces the final classification or regression output.
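The first three layer types can be sketched in plain Python: a single-channel 2D convolution (stride 1, no padding, computed as cross-correlation, as most deep learning libraries do), followed by ReLU and 2×2 max pooling. The hand-picked vertical-edge kernel and the function names are illustrative assumptions; in a real CNN the kernel values are learned.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation of a single-channel image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Element-wise ReLU nonlinearity."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2x2(fmap):
    """2x2 max pooling with stride 2 (drops a trailing odd row/column)."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


# 6x6 image: dark left half (0), bright right half (1), i.e. one vertical edge.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
# Hand-picked vertical-edge detector (a learned kernel would play this role).
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

features = max_pool2x2(relu(conv2d(image, kernel)))
print(features)  # [[3, 3], [3, 3]]
```

The 6×6 input shrinks to a 4×4 feature map after the 3×3 convolution and to 2×2 after pooling; the filter responds only where the edge is.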

1.3 Notable Architectures

- LeNet-5: A pioneering CNN for handwritten digit recognition.

- AlexNet: Won the ImageNet 2012 challenge with a deeper architecture and GPU-accelerated training.

- VGGNet: Showed that stacking small (3×3) filters to build deeper networks improves accuracy.

- ResNet: Introduced residual (skip) connections, enabling very deep networks (up to 152 layers on ImageNet).

- MobileNet: A lightweight CNN designed for mobile and embedded devices.
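The idea behind ResNet’s skip connections can be sketched in a few lines: the block outputs ReLU(F(x) + x), so its layers only need to learn a residual correction to the input, and the identity path gives gradients a direct route through deep stacks. The tiny dense layers and weights below are hypothetical stand-ins; ResNet itself uses convolutional layers with batch normalization inside F.

```python
def dense(x, weights, bias):
    """Fully connected layer: y_i = sum_j W[i][j] * x[j] + b[i]."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def residual_block(x, w1, b1, w2, b2):
    """y = ReLU(F(x) + x), where F is two dense layers with a ReLU in between."""
    f = dense(relu(dense(x, w1, b1)), w2, b2)
    return relu([fi + xi for fi, xi in zip(f, x)])  # shortcut adds the input back


x = [1.0, 2.0]
zeros = [[0.0, 0.0], [0.0, 0.0]]
# With all-zero weights F(x) = 0, so the block passes non-negative inputs through unchanged.
print(residual_block(x, zeros, [0.0, 0.0], zeros, [0.0, 0.0]))  # [1.0, 2.0]
```

This is why adding layers to a ResNet is unlikely to hurt much: a block can fall back to the identity simply by driving F toward zero.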

1.4 Uses

- Image Classification: Assigning labels to entire images.

- Object Detection: Locating and classifying objects, e.g., with YOLO or Faster R-CNN.

- Semantic Segmentation: Labeling every pixel, used in autonomous driving and medical imaging.

- Style Transfer: Using CNN feature maps to render one image in the artistic style of another.

1.5 Pros and Cons

Strengths

- Excellent at processing visual data.

- Parameter sharing reduces the total number of weights.

- A mature ecosystem of pretrained models.

Limitations

- Not naturally suited to sequence modeling without architectural changes.

- Requires large amounts of labeled data.

- Can miss global context if the receptive field is too small.

2. Long Short-Term Memory (LSTM) and Recurrent Neural Networks (RNNs)

2.1 Overview

Recurrent Neural Networks (RNNs) process sequential data by maintaining a hidden state that is updated at each time step. However, vanilla RNNs suffer from vanishing and exploding gradients over long sequences. Long Short-Term Memory (LSTM) cells, introduced by Hochreiter and Schmidhuber in 1997, use gates to control the flow of information, which mitigates this problem.
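Below is a minimal sketch of the vanilla RNN recurrence h_t = tanh(w_x·x_t + w_h·h_{t-1} + b), using scalar weights chosen purely for illustration. It also accumulates ∂h_T/∂h_0, the product of the per-step factors w_h·(1 − h_t²). When those factors are below 1 in magnitude, as here, the product shrinks geometrically with sequence length, which is the vanishing-gradient problem; factors above 1 would make it explode instead.

```python
import math


def rnn_forward(xs, w_x=0.5, w_h=0.8, b=0.0):
    """Scalar vanilla RNN. Returns the hidden states and d h_T / d h_0."""
    h = 0.0
    hs = []
    grad = 1.0  # running product of d h_t / d h_{t-1}
    for x in xs:
        h = math.tanh(w_x * x + w_h * h + b)
        # Chain rule: d h_t / d h_{t-1} = tanh'(pre-activation) * w_h = (1 - h_t^2) * w_h
        grad *= (1.0 - h * h) * w_h
        hs.append(h)
    return hs, grad


for steps in (5, 20, 50):
    _, g = rnn_forward([1.0] * steps)
    print(f"{steps:>2} steps: dh_T/dh_0 = {g:.2e}")
```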

2.2 The Structure of an LSTM Cell

- Forget Gate: Decides which information to discard from the cell state.

- Input Gate: Decides which new information to store.

- Cell State: Carries long-term memory across time steps.

- Output Gate: Controls what is emitted as the hidden state at each time step.
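One LSTM time step can be sketched with scalar inputs and states so that each gate is easy to follow. The equations follow the widely used formulation with a forget gate; the weight values and the `params` layout are illustrative assumptions, and real implementations use vectors and weight matrices (usually computing all four gates in one matrix multiply).

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One scalar LSTM step. p maps each gate name to (w_x, w_h, b)."""
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))       # forget gate: how much old cell state to keep
    i = sigmoid(pre("input"))        # input gate: how much of the candidate to write
    g = math.tanh(pre("candidate"))  # candidate values for the cell state
    o = sigmoid(pre("output"))       # output gate: how much cell state to expose
    c = f * c_prev + i * g           # additive cell update carries long-term memory
    h = o * math.tanh(c)             # hidden state / output at this time step
    return h, c


params = {
    "forget": (0.0, 0.0, 5.0),       # bias 5 -> f ≈ 0.993, so memory is mostly kept
    "input": (1.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 0.0),
}
h, c = 0.0, 0.0
for x in [1.0, 0.0, 0.0, 0.0]:  # a single pulse, then silence
    h, c = lstm_step(x, h, c, params)
    print(f"x={x:.0f}  c={c:.3f}  h={h:.3f}")
```

Because the forget gate stays close to 1, the cell state written by the first input is still largely present three steps later, which is the behavior the gates are designed to allow.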

2.3 Variants

- Gated Recurrent Unit (GRU): A simplified LSTM with fewer gates that often trains faster.

- Bidirectional RNNs: Process sequences in both directions to capture both past and future context.

2.4 Uses

- Language Modeling: Predicting the next word in a sequence, capturing context that static word embeddings such as word2vec cannot.

- Machine Translation: Attention-based Seq2Seq models.

- Speech Recognition: Converting audio into text.

- Time Series Forecasting: Predicting quantities such as weather or stock prices.

2.5 Pros and Cons

Strengths

- Captures temporal dependencies.

- Handles variable-length sequences.

Limitations

- Computationally expensive on long sequences, since time steps are processed one after another.

- Still struggles with very long-range dependencies without attention mechanisms.

3. Transformers

3.1 Overview

Vaswani et al. introduced Transformers in “Attention Is All You Need” (2017). They replaced recurrence with self-attention, which lets the model process all elements of a sequence in parallel. This breakthrough led to models such as BERT, GPT, and T5, which transformed NLP and many other fields.

3.2 The Self-Attention Mechanism

- Queries, Keys, and Values: Dot products between queries and keys determine how much attention each position pays to every other position; the resulting weights are applied to the values.

- Scaled Dot-Product Attention: Scores are divided by √d_k and passed through a softmax to produce attention weights.

- Multi-Head Attention: Several attention heads attend to different relationships in parallel.
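Scaled dot-product attention, softmax(QKᵀ/√d_k)·V as defined by Vaswani et al., can be written out in plain Python for small matrices stored as lists of rows. The toy Q, K, and V values are made up for illustration; multi-head attention runs several such computations in parallel on learned projections of the input and concatenates the results.

```python
import math


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Return (output, weights) for Q: n x d_k, K: m x d_k, V: m x d_v."""
    d_k = len(K[0])
    # Scores: dot product of each query with each key, scaled by sqrt(d_k).
    scores = [[sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K]
              for q_row in Q]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    # Output: attention-weighted average of the value vectors.
    output = [[sum(w * v_row[j] for w, v_row in zip(w_row, V)) for j in range(len(V[0]))]
              for w_row in weights]
    return output, weights


Q = [[1.0, 0.0]]                 # one query
K = [[1.0, 0.0], [0.0, 1.0]]     # two keys
V = [[10.0, 0.0], [0.0, 10.0]]   # two values
out, w = scaled_dot_product_attention(Q, K, V)
print(w)    # the query attends more to the first key, which it matches
print(out)
```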

3.3 The Transformer Structure

- Encoder-Decoder: Stacked layers of multi-head attention and feedforward networks.

- Positional Encoding: Adds information about token order, which self-attention alone does not capture.
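The sinusoidal positional encoding from the original Transformer paper sets PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). A minimal sketch follows; `d_model = 4` is chosen only to keep the output small, and many later models learn positional embeddings instead.

```python
import math


def positional_encoding(num_positions, d_model):
    """Sinusoidal positional encodings as a num_positions x d_model list of rows."""
    pe = []
    for pos in range(num_positions):
        row = []
        for k in range(d_model):
            i = k // 2  # dimensions 2i and 2i+1 share the same frequency
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if k % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


for row in positional_encoding(3, 4):
    print([round(v, 3) for v in row])
```

Each position gets a distinct pattern, and lower dimensions oscillate faster than higher ones, so the encoding is added to token embeddings to make order visible to attention.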

3.4 Key Models

- BERT: Bidirectional Encoder Representations from Transformers, pre-trained with masked language modeling.

- GPT Series: Autoregressive language models for text generation.

- T5: The Text-to-Text Transfer Transformer, which frames many NLP tasks as text-to-text problems.

3.5 Uses Outside of NLP

- Computer Vision: Vision Transformers (ViT) apply self-attention to image patches.

- Speech: Transformers for automatic speech recognition (ASR).

- Reinforcement Learning: Transformers are used as world models.

3.6 Pros and Cons

Strengths

- Excellent at modeling long-range dependencies.

- Highly parallelizable, which speeds up training.

Limitations

- The cost of self-attention grows quadratically with sequence length.

- Large models require vast amounts of data and compute.

4. Generative Adversarial Networks (GANs)

4.1 Overview

Ian Goodfellow and colleagues introduced GANs in 2014. Two networks, a generator and a discriminator, compete in a minimax game: the generator tries to produce fake data that looks real, while the discriminator tries to tell real samples from fakes.

4.2 Parts of a GAN

- Generator (G): Maps random noise to synthetic samples.

- Discriminator (D): Distinguishes real samples from generated ones.

Training alternates between updating G (to fool D) and updating D (to detect forgeries).
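The alternating objectives can be made concrete by computing both losses from discriminator outputs. This sketch uses binary cross-entropy for D and the "non-saturating" generator loss -log D(G(z)), which Goodfellow et al. suggest in place of minimizing log(1 - D(G(z))) early in training. The probabilities fed in are made-up numbers; in practice D and G are neural networks whose weights are updated by backpropagating these losses.

```python
import math


def discriminator_loss(d_real, d_fake):
    """BCE loss for D: push D(x) toward 1 on real data and D(G(z)) toward 0 on fakes.

    Assumes equally sized batches of real and generated samples.
    """
    n = len(d_real)
    return -sum(math.log(r) + math.log(1.0 - f) for r, f in zip(d_real, d_fake)) / n


def generator_loss(d_fake):
    """Non-saturating loss for G: push D(G(z)) toward 1, i.e. fool the discriminator."""
    return -sum(math.log(f) for f in d_fake) / len(d_fake)


# Hypothetical discriminator outputs (probability that a sample is real).
d_real = [0.9, 0.8]   # D on real samples
d_fake = [0.1, 0.3]   # D on generated samples G(z)

print(f"D loss: {discriminator_loss(d_real, d_fake):.3f}")  # ≈ 0.395
print(f"G loss: {generator_loss(d_fake):.3f}")              # ≈ 1.753
```

Here D confidently rejects the fakes, so its loss is low and G’s loss is high; a training step on G would move these numbers in the opposite direction.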

4.3 Variants

- DCGAN: Deep convolutional GAN for image generation.

- CycleGAN: Image-to-image translation without paired training images.

- StyleGAN: High-fidelity face generation.

4.4 Uses

- Image Synthesis: Generating photorealistic images.

- Data Augmentation: Expanding datasets when data is scarce.

- Super-Resolution: Increasing image resolution and sharpness.

- Art and Design: Creating novel, creative content.

4.5 Pros and Cons

Strengths

- Generates highly realistic data.

- A versatile framework applicable to many domains.

Limitations

- Unstable training and mode collapse.

- Sensitive to architecture and hyperparameter choices.

5. Autoencoders and Variational Autoencoders (VAEs)

5.1 Overview

Autoencoders are neural networks that learn compact encodings of data without supervision, by learning to reconstruct their inputs through a bottleneck layer. Variational Autoencoders (VAEs) go a step further by modeling the latent variables probabilistically, which enables generative modeling.

5.2 Architecture

- Encoder: Maps the input to a lower-dimensional latent representation.

- Decoder: Reconstructs the input from the latent code.

- Bottleneck: Constrains the size of the representation, forcing the network to learn useful features.

In VAEs, the encoder outputs the mean and variance of a distribution over the latent variables, and training minimizes the sum of a reconstruction loss and a KL divergence term.
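For a VAE with a diagonal Gaussian encoder N(μ, σ²) and a standard normal prior, the KL term has a closed form, and sampling uses the reparameterization trick z = μ + σ·ε with ε ~ N(0, 1), so gradients can flow through μ and σ (Kingma & Welling, 2014). This sketch shows only those pieces with hand-picked μ and log σ² values; the squared-error reconstruction term is one common choice (corresponding to a Gaussian decoder), not the only one.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), keeping the noise outside mu/sigma."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def vae_loss(x, x_recon, mu, log_var):
    """Squared-error reconstruction term plus the KL regularizer."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return recon + kl_to_standard_normal(mu, log_var)


rng = random.Random(0)
mu, log_var = [0.5, -0.2], [0.0, -1.0]
z = reparameterize(mu, log_var, rng)
print("z sample:", [round(v, 3) for v in z])
print("KL:", round(kl_to_standard_normal(mu, log_var), 4))
print("loss:", round(vae_loss([1.0, 0.0], [0.9, 0.1], mu, log_var), 4))
```

The KL term is zero only when μ = 0 and σ = 1 in every dimension, which is the pressure that keeps the latent space close to the prior and makes sampling from it meaningful.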

5.3 Variants and Extensions

- Denoising Autoencoders: Learn to reconstruct clean inputs from corrupted ones, yielding more robust features.

- Sparse Autoencoders: Impose sparsity constraints to obtain more interpretable features.

- β-VAE: Encourages disentangled latent factors.

5.4 Uses

- Dimensionality Reduction: A nonlinear alternative to PCA for learning embeddings.

- Anomaly Detection: Flagging inputs that are poorly reconstructed (high reconstruction error).

- Generative Modeling: Sampling from a learned latent distribution.

- Recommendation Systems: Collaborative filtering using latent factors.
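Anomaly detection by reconstruction error can be sketched with the smallest possible autoencoder: a linear encoder that compresses 2-D points to a single number and a linear decoder that maps it back, trained by gradient descent on toy "normal" data lying on one line. The data, learning rate, epoch count, and threshold are illustrative assumptions. A linear autoencoder like this learns essentially the same subspace as PCA; practical autoencoders add nonlinear layers.

```python
def train_linear_autoencoder(data, lr=0.05, epochs=500):
    """Fit x_hat = d * (e . x) (2-D input, 1-D bottleneck) by gradient descent on MSE."""
    e = [0.1, 0.1]  # encoder weights (input -> 1-D code)
    d = [0.1, 0.1]  # decoder weights (code -> reconstruction)
    n = len(data)
    for _ in range(epochs):
        grad_e, grad_d = [0.0, 0.0], [0.0, 0.0]
        for x in data:
            z = e[0] * x[0] + e[1] * x[1]              # encode
            r = [d[0] * z - x[0], d[1] * z - x[1]]     # reconstruction residual
            d_dot_r = d[0] * r[0] + d[1] * r[1]
            for k in range(2):
                grad_d[k] += 2.0 * r[k] * z / n        # dL/dd
                grad_e[k] += 2.0 * d_dot_r * x[k] / n  # dL/de (chain rule through z)
        e = [e[k] - lr * grad_e[k] for k in range(2)]
        d = [d[k] - lr * grad_d[k] for k in range(2)]
    return e, d


def reconstruction_error(x, e, d):
    z = e[0] * x[0] + e[1] * x[1]
    return sum((d[k] * z - x[k]) ** 2 for k in range(2))


# "Normal" data lies on the line x2 = 2 * x1; the bottleneck learns that direction.
normal = [[t, 2.0 * t] for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]
e, d = train_linear_autoencoder(normal)

threshold = 0.1  # hypothetical cutoff; in practice chosen from validation errors
for x in ([0.75, 1.5], [2.0, -1.0]):
    err = reconstruction_error(x, e, d)
    print(x, f"error={err:.4f}", "ANOMALY" if err > threshold else "normal")
```

The point on the learned line is reconstructed almost perfectly, while the off-line point cannot pass through the 1-D bottleneck and is flagged.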

5.5 Pros and Cons

Strengths

- Unsupervised learning of compact representations.

- A probabilistic interpretation (in VAEs) enables sampling new data.

Limitations

- Outputs are often blurrier than those from GANs.

- Balancing reconstruction quality against latent regularization can be difficult.

Best Practices and Tips

Data Preprocessing

- Normalize inputs, for example to zero mean and unit variance.

- Use data augmentation (noise, flips, rotations) to improve robustness.
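Standardizing a feature to zero mean and unit variance takes only a few lines; the detail worth showing is that the statistics are computed on the training split only and then reused for validation and test data. The function names and numbers are illustrative.

```python
import statistics


def fit_standardizer(train_values):
    """Compute mean and population standard deviation from training data only."""
    mean = statistics.fmean(train_values)
    std = statistics.pstdev(train_values)
    if std == 0.0:
        std = 1.0  # constant feature: avoid division by zero
    return mean, std


def standardize(values, mean, std):
    return [(v - mean) / std for v in values]


train = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
test = [3.0, 6.0]
mean, std = fit_standardizer(train)    # mean = 5.0, std = 2.0
train_z = standardize(train, mean, std)
test_z = standardize(test, mean, std)  # reuse the training statistics
print(train_z)
print(test_z)
```

Fitting the statistics on the full dataset instead would leak information from the test set into training.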

Transfer Learning

- Fine-tune pretrained models such as ResNet or BERT for your specific task.

Hyperparameter Tuning

- Experiment with learning rates, batch sizes, and optimizers such as Adam and SGD with momentum.

- Use learning rate schedules such as cosine annealing with warm restarts.
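Cosine annealing with warm restarts (the SGDR schedule) decays the learning rate along a half cosine from lr_max to lr_min, then jumps back to lr_max, optionally lengthening each cycle. The sketch below computes the rate for a given step; the parameter names and defaults are illustrative assumptions. PyTorch provides a ready-made version as `torch.optim.lr_scheduler.CosineAnnealingWarmRestarts`.

```python
import math


def cosine_with_restarts(step, lr_max=0.1, lr_min=0.0, period=10, period_mult=2):
    """Learning rate at `step` under cosine annealing with warm restarts."""
    cycle_len = period
    t = step
    # Locate the position within the current cycle; each restart multiplies the cycle length.
    while t >= cycle_len:
        t -= cycle_len
        cycle_len *= period_mult
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * t / cycle_len))


schedule = [cosine_with_restarts(s) for s in range(30)]
print([round(lr, 4) for lr in schedule[:12]])
```

With these defaults the first cycle covers steps 0 to 9, the rate restarts at 0.1 on step 10, and the second cycle lasts 20 steps.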

Regularization

- Use dropout, weight decay, and batch normalization to reduce overfitting.
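Dropout is simple enough to sketch directly. This is the "inverted" variant used by most frameworks: during training each activation is zeroed with probability p and survivors are scaled by 1/(1 − p), so the layer is an identity at inference time. The function signature is a hypothetical stand-in for a framework layer such as PyTorch’s `torch.nn.Dropout`.

```python
import random


def dropout(activations, p, training, rng):
    """Inverted dropout: zero each unit with probability p, scale survivors by 1/(1-p)."""
    if not training or p == 0.0:
        return list(activations)  # identity at inference time
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(42)
x = [1.0] * 10
print(dropout(x, p=0.5, training=True, rng=rng))   # roughly half zeros, the rest 2.0
print(dropout(x, p=0.5, training=False, rng=rng))  # unchanged
```

The rescaling keeps the expected value of each activation the same in training and inference, so no adjustment is needed when the model is deployed.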

Debugging and Monitoring

- Track training and validation losses and metrics regularly.

- Visualize attention weights, feature maps, or embeddings to understand model behavior.

Efficiency and Scaling

- Use distributed training across multiple GPUs or TPUs.

- Use mixed-precision training (e.g., FP16) to reduce memory usage.

Ethics and Bias Mitigation

- Audit models for unfair biases.

- Use diverse datasets and apply fairness constraints.

Frequently Asked Questions (FAQs)

Q1: Which algorithm should I start with as a beginner? A. For vision problems, start with CNNs, whose convolutional structure is intuitive. For sequence data, try LSTMs or GRUs first. TensorFlow and PyTorch both provide tutorials to get you started.

Q2: How do I choose between RNNs and Transformers? A. Transformers generally outperform RNNs on long sequences and benefit from parallel processing. Use RNNs or LSTMs when data or compute is limited.

Q3: Are GANs suitable for beginners? A. GANs can be difficult to work with because their training is often unstable. Start with a DCGAN on a small dataset such as MNIST before moving on to high-resolution image synthesis.

Q4: What sets VAEs apart from autoencoders? A. Autoencoders map inputs to latent codes deterministically, whereas VAEs model latent variables probabilistically, which lets them generate new data by sampling.

Q5: How important is hyperparameter tuning? A. Very. Even small changes to the learning rate, batch size, or architecture can significantly affect model performance. Tools such as Optuna or Ray Tune can automate the search.

In conclusion, deep learning is evolving rapidly, but these core algorithms remain essential:

- CNNs for spatial feature extraction

- RNNs and LSTMs for sequence modeling

- Transformers for attention-based learning

- GANs for generative modeling

- Autoencoders and VAEs for unsupervised representation learning

By mastering these algorithms, along with best practices for data preparation, regularization, and ethical AI, you’ll be well placed at the cutting edge of AI innovation. Explore the references below, experiment with open-source code, and get involved in this exciting field.

References

- LeCun, Y., Bottou, L., Bengio, Y., & Haffner, P. (1998). Gradient‑based learning applied to document recognition. Proceedings of the IEEE, 86(11), 2278–2324. https://doi.org/10.1109/5.726791

- Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems, 25. https://dl.acm.org/doi/10.1145/3065386

- He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 770–778. https://arxiv.org/abs/1512.03385

- Hochreiter, S., & Schmidhuber, J. (1997). Long Short‑Term Memory. Neural Computation, 9(8), 1735–1780. https://www.bioinf.jku.at/publications/older/2604.pdf

- Vaswani, A., Shazeer, N., Parmar, N., et al. (2017). Attention Is All You Need. Advances in Neural Information Processing Systems, 30. https://arxiv.org/abs/1706.03762

- Goodfellow, I., Pouget‑Abadie, J., Mirza, M., et al. (2014). Generative Adversarial Nets. Advances in Neural Information Processing Systems, 27. https://arxiv.org/abs/1406.2661

- Kingma, D. P., & Welling, M. (2014). Auto‑Encoding Variational Bayes. International Conference on Learning Representations. https://arxiv.org/abs/1312.6114

- Vincent, P., Larochelle, H., Bengio, Y., & Manzagol, P.-A. (2008). Extracting and Composing Robust Features with Denoising Autoencoders. Proceedings of the 25th International Conference on Machine Learning, 1096–1103.