Artificial intelligence (AI) has become a game-changer in many fields during a period of rapid technological growth. AI systems are changing the world quickly, making smart cities, personalized healthcare, self-driving cars, and new financial services possible. But these changes raise ethical questions that will determine whether AI proves beneficial or harmful to people in the long run. AI ethics, the study and application of moral principles in the design and use of AI, is no longer an afterthought. It is now a core part of how future technology is built.

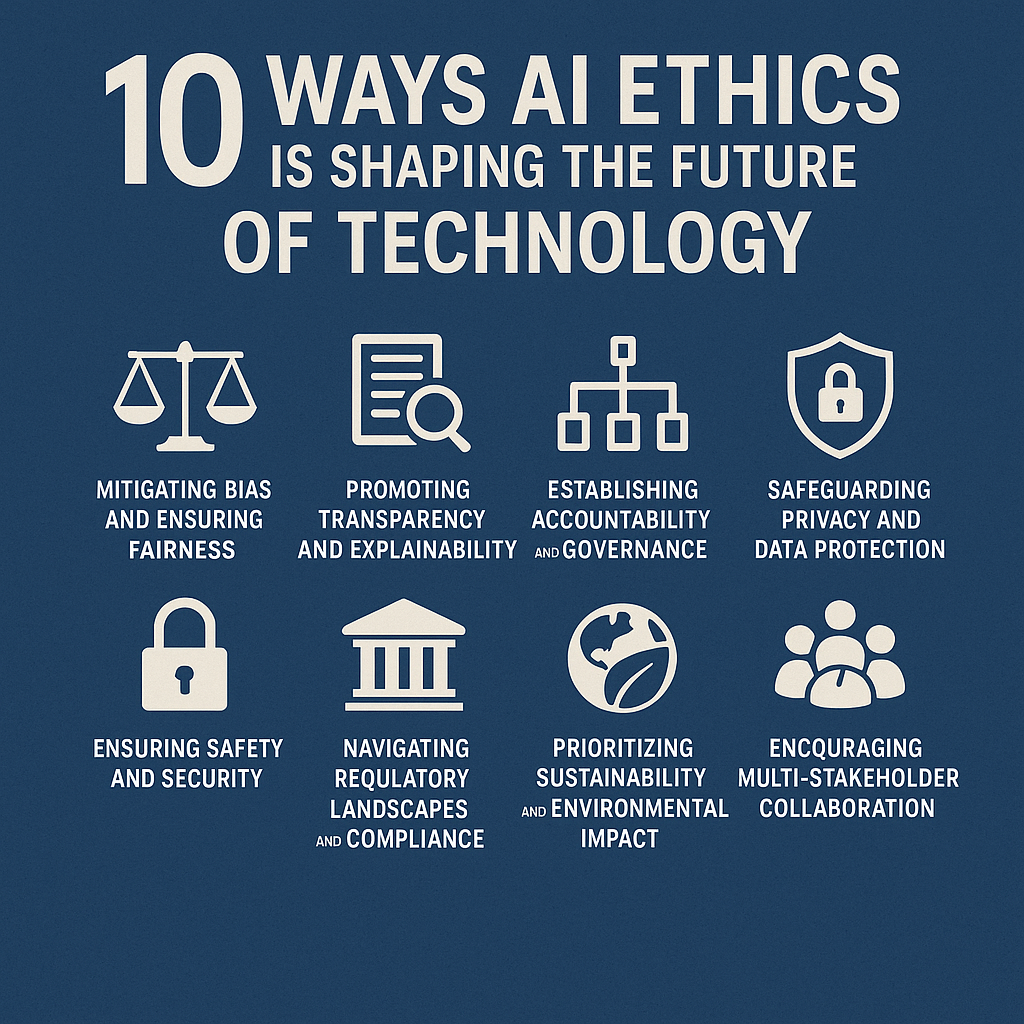

10 Ways AI Ethics is Shaping the Future of Technology

This article examines each area of AI ethics in detail, presents real-world examples, and discusses what each means for the future.

1. Promoting fairness and reducing bias

Why it matters: AI models trained on historical data can reproduce or amplify societal prejudices in areas such as hiring, lending, and criminal justice, producing outcomes that discriminate against minorities.

Detecting and auditing bias: One technique for checking bias is "counterfactual fairness," which asks whether a model's decision would change if a sensitive attribute, such as race or gender, were different. Regular audits by independent outside experts can also surface bias.
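The attribute-swap check described above can be sketched in a few lines of Python. This is a minimal illustration, assuming the model is exposed as a plain function over dictionaries; the names `flip_test` and `biased_model` and the applicant data are hypothetical. It is a simplified "flip test": it changes only the sensitive attribute and holds everything else fixed, whereas counterfactual fairness in the strict sense also models how that attribute causally influences other features.

```python
from typing import Callable, Dict, List

Record = Dict[str, object]


def flip_test(model: Callable[[Record], int], records: List[Record],
              attribute: str, values: List[object]) -> float:
    """Fraction of records whose prediction changes when only `attribute`
    is swapped to another value from `values`; all other fields stay fixed."""
    if not records:
        return 0.0
    changed = 0
    for record in records:
        original = model(record)
        for value in values:
            if value == record[attribute]:
                continue
            counterfactual = {**record, attribute: value}
            if model(counterfactual) != original:
                changed += 1
                break  # count each record at most once
    return changed / len(records)


# Hypothetical model that (wrongly) uses gender directly.
def biased_model(r: Record) -> int:
    return int(r["income"] > 50_000 and r["gender"] != "F")


applicants = [
    {"income": 60_000, "gender": "M"},
    {"income": 60_000, "gender": "F"},
    {"income": 30_000, "gender": "M"},
]
print(flip_test(biased_model, applicants, "gender", ["M", "F"]))  # 2 of 3 decisions flip
```

A result above zero means the sensitive attribute alone can change outcomes, which is a signal worth escalating to the kind of external audit mentioned above.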

Collecting representative data: Datasets must cover a broad range of demographics to avoid skewed results. For example, healthcare AI projects now need data from people of diverse ages, ethnicities, and regions to avoid diagnostic errors.
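A simple first step toward representative data is to measure how each expected group is covered before training. The sketch below assumes each sample carries a single group label; the `coverage_gaps` function, the age bands, and the 10% minimum share are hypothetical choices for illustration, not an established standard.

```python
from collections import Counter
from typing import Dict, Iterable, List


def coverage_gaps(group_labels: Iterable[str], expected_groups: List[str],
                  min_share: float) -> Dict[str, float]:
    """Return each expected group whose share of the dataset is below
    `min_share`, including groups that are missing entirely (share 0.0)."""
    counts = Counter(group_labels)
    total = sum(counts.values())
    gaps = {}
    for group in expected_groups:
        share = counts.get(group, 0) / total if total else 0.0
        if share < min_share:
            gaps[group] = share
    return gaps


# Hypothetical age bands for 100 patients in a diagnostic dataset.
ages = ["18-39"] * 70 + ["40-64"] * 25 + ["65+"] * 5
print(coverage_gaps(ages, ["0-17", "18-39", "40-64", "65+"], min_share=0.10))
# {'0-17': 0.0, '65+': 0.05}
```

Passing the list of expected groups explicitly matters: a plain frequency count would never report a group that is absent altogether.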

For instance, in 2023 the U.S. Equal Employment Opportunity Commission (EEOC) issued guidance stating that employers who use AI tools in hiring remain responsible for assessing whether those tools have a discriminatory impact.
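One screening heuristic employers can use for such an assessment is the "four-fifths rule" from the U.S. Uniform Guidelines on Employee Selection Procedures, which the EEOC's 2023 guidance also discusses: a group whose selection rate is less than 80% of the highest group's rate is generally treated as showing possible adverse impact. It is a rule of thumb, not a definitive legal test. This sketch assumes per-group counts of selected candidates and applicants are already available; the function names and numbers are hypothetical.

```python
from typing import Dict, List, Tuple


def impact_ratios(outcomes: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """`outcomes` maps group -> (selected, applicants). Returns each group's
    selection rate divided by the highest group's selection rate."""
    rates = {g: selected / applicants for g, (selected, applicants) in outcomes.items()}
    best = max(rates.values())
    if best == 0:
        return {g: 1.0 for g in rates}  # nobody selected: no disparity to measure
    return {g: rate / best for g, rate in rates.items()}


def flag_adverse_impact(outcomes: Dict[str, Tuple[int, int]],
                        threshold: float = 0.8) -> List[str]:
    """Groups whose impact ratio falls below the four-fifths threshold."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)


# Hypothetical screening results from an AI resume filter.
results = {"group_a": (48, 80), "group_b": (12, 40)}
print(impact_ratios(results))        # group_b's rate is half of group_a's
print(flag_adverse_impact(results))  # ['group_b']
```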

In the future, AI systems may earn "Energy Star"-style fairness ratings, much as appliances carry efficiency labels today. Such ratings would make people more likely to trust and adopt these systems.

2. Encouraging transparency and explainability

Why it matters: "Black-box" AI does not reveal how decisions are made, which makes accountability harder and can erode user trust.

Explainable AI (XAI) techniques: LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) break down model outputs to show which features influenced a particular decision.
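SHAP builds on Shapley values from game theory. For a model with only a few features, those values can be computed exactly by enumerating every coalition of features, replacing "absent" features with baseline values. The sketch below does exactly that; it is not the `shap` library's API, the baseline-substitution choice is an assumption, and its cost grows as 2^n, which is why practical tools rely on approximations.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def shapley_values(model: Callable[[Sequence[float]], float],
                   x: Sequence[float], baseline: Sequence[float]) -> List[float]:
    """Exact Shapley attribution of model(x) - model(baseline) to each feature.
    Features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition: set) -> float:
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical credit score, linear in two features (arbitrary units).
def score(z: Sequence[float]) -> float:
    return 2 * z[0] + 3 * z[1] + 1


print(shapley_values(score, x=[1, 2], baseline=[0, 0]))  # [2.0, 6.0]
```

The attributions always add up to the change in output relative to the baseline (here 9 - 1 = 8), which is what lets SHAP-style explanations say how much each feature pushed a decision up or down.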

Plain-language explanations: Alongside technical explanations, easy-to-understand summaries (such as "Your loan was approved because your income is above threshold X") help non-technical users understand AI decisions.
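A plain-language summary like the loan example can be generated directly from the factors behind a decision. In this minimal sketch the factors come from an explicit rule set; the thresholds, function name, and wording are hypothetical. For a black-box model, the same template could instead be filled from the largest feature attributions.

```python
from typing import List, Tuple

# Hypothetical policy thresholds, for illustration only.
MIN_INCOME = 40_000
MAX_DEBT_RATIO = 0.35


def decide_with_reasons(income: float, debt_ratio: float) -> Tuple[bool, str]:
    """Apply simple rules and return (approved, plain-language summary)."""
    passed: List[str] = []
    failed: List[str] = []
    if income >= MIN_INCOME:
        passed.append(f"your income ({income:,.0f}) is at or above the {MIN_INCOME:,} threshold")
    else:
        failed.append(f"your income ({income:,.0f}) is below the {MIN_INCOME:,} threshold")
    if debt_ratio <= MAX_DEBT_RATIO:
        passed.append(f"your debt-to-income ratio ({debt_ratio:.0%}) is within the {MAX_DEBT_RATIO:.0%} limit")
    else:
        failed.append(f"your debt-to-income ratio ({debt_ratio:.0%}) exceeds the {MAX_DEBT_RATIO:.0%} limit")
    # Cite only the reasons that actually drove the outcome.
    if failed:
        return False, "Your loan was declined because " + " and ".join(failed) + "."
    return True, "Your loan was approved because " + " and ".join(passed) + "."


print(decide_with_reasons(52_000, 0.20)[1])
print(decide_with_reasons(30_000, 0.20)[1])
```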

Regulatory requirements: The EU's AI Act requires high-risk AI systems to be transparent. Before placing such systems on the market, providers must supply clear instructions for use and documentation that users can understand (European Commission, 2024).

In the future, transparency will become a competitive differentiator. Vendors that offer "fully interpretable" solutions may be able to charge a premium and win more business customers.

3. Establishing accountability and governance

Why it matters: If no clear person or organization is accountable for AI failures, such as misdiagnoses or self-driving car accidents, the people affected may have no way to obtain redress.

AI governance bodies: Many companies now have AI ethics committees, drawing on legal, technical, and domain expertise, that oversee projects at every stage of their life cycle.

Model cards and datasheets: Standardized reporting templates document a model's intended use, performance, limitations, and potential for misuse (Mitchell et al., 2019).
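A model card can be kept as structured data so it is versioned alongside the model and checked automatically. The dataclass below is a simplified sketch: its field names are our own, loosely following the sections proposed by Mitchell et al. (2019), and the example model and metric value are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class ModelCard:
    """Simplified model card; field names are illustrative, not a standard schema."""
    model_name: str
    intended_use: str
    metrics: Dict[str, float] = field(default_factory=dict)
    # Performance broken down by group, e.g. {"age 65+": {"recall": 0.81}}.
    subgroup_metrics: Dict[str, Dict[str, float]] = field(default_factory=dict)
    limitations: List[str] = field(default_factory=list)
    potential_misuse: List[str] = field(default_factory=list)

    def missing_sections(self) -> List[str]:
        """Names of sections left empty, so reviewers can spot gaps."""
        return [name for name, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


card = ModelCard(
    model_name="chest-xray-triage-v2",  # hypothetical model
    intended_use="Prioritize radiology worklists; not for standalone diagnosis.",
    metrics={"auroc": 0.91},  # illustrative value
    limitations=["Not validated on pediatric patients."],
)
print(card.missing_sections())  # ['subgroup_metrics', 'potential_misuse']
print(card.to_json())
```

A governance committee could, for example, refuse to approve a release while `missing_sections()` is non-empty.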

Liability frameworks: Some jurisdictions are considering strict liability for AI developers, similar to product liability law, so that people harmed by AI systems can obtain compensation.

In the future, federated governance approaches, supported by international bodies similar to the International Atomic Energy Agency, may ensure that audits are conducted consistently and that responsibility is shared.

4. Protecting privacy and data

Why it matters: AI needs large amounts of data to perform well, but failing to protect personal information can violate privacy regulations and erode consumer trust.

Privacy by Design: Building data-masking and data-minimization mechanisms in from the start, including techniques such as differential privacy, reduces privacy risks.
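Differential privacy can be illustrated with its classic building block, the Laplace mechanism: answer a query, then add random noise calibrated to how much any single person could change the answer. This is a toy sketch using Python's standard `random` module and hypothetical names and data; production systems typically rely on audited libraries, because naive floating-point noise generation can leak information.

```python
import random
from typing import Callable, Iterable, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample: the difference of two independent
    exponential variables with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values: Iterable, predicate: Callable[[object], bool],
                  epsilon: float, rng: Optional[random.Random] = None) -> float:
    """Epsilon-differentially private count. Adding or removing one person
    changes a count by at most 1 (sensitivity 1), so Laplace noise with
    scale 1/epsilon is enough."""
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)


# Hypothetical query: how many patients in a dataset are over 65?
data_rng = random.Random(0)
ages = [data_rng.randint(18, 90) for _ in range(1000)]
print(private_count(ages, lambda a: a > 65, epsilon=0.5, rng=random.Random(7)))
```

A smaller `epsilon` means more noise and stronger privacy; analysts see a slightly perturbed count, while no individual's presence can be reliably inferred from it.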

Federated learning: Companies like Google have shown that models can be trained on users' devices, with only parameter updates (not raw data) sent to a central server, so sensitive data never has to be stored in one place.
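The idea can be sketched with a simplified version of federated averaging (FedAvg): each device runs a few training steps on its own data, and the server only averages the resulting parameters, weighted by how many examples each device holds. This toy uses a one-feature linear model and hypothetical device data; real deployments add components such as secure aggregation and client sampling that are omitted here.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave a device
Params = Tuple[float, float]         # (weight, bias) of y = w * x + b


def local_update(params: Params, data: Dataset, lr: float = 0.1, epochs: int = 5) -> Params:
    """A few steps of gradient descent on mean squared error, run on-device."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = 2 / n * sum((w * x + b - y) * x for x, y in data)
        grad_b = 2 / n * sum((w * x + b - y) for x, y in data)
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_averaging(clients: List[Dataset], rounds: int = 50) -> Params:
    """The server averages client parameters, weighted by local dataset size.
    It only ever sees parameters and counts, never the raw (x, y) pairs."""
    global_params: Params = (0.0, 0.0)
    total = sum(len(d) for d in clients)
    for _ in range(rounds):
        updates = [(local_update(global_params, d), len(d)) for d in clients]
        global_params = (
            sum(w * n for (w, _), n in updates) / total,
            sum(b * n for (_, b), n in updates) / total,
        )
    return global_params


# Two hypothetical devices with different local data, both following y = 3x + 1.
phone_a = [(x, 3 * x + 1) for x in (-1.0, 0.0, 1.0)]
phone_b = [(x, 3 * x + 1) for x in (0.0, 1.0, 2.0)]
print(federated_averaging([phone_a, phone_b]))  # close to (3.0, 1.0)
```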

Regulatory alignment: AI systems deployed all around the world must follow the GDPR, CCPA, and new privacy regulations that have been passed in Africa and Asia.

We expect personal data vaults to become far more common in the future. These platforms help people keep track of their digital footprints and grant AI applications access to specific data points only for a limited time.

5. Promoting human-centered design and agency

Why it matters: AI systems that ignore human needs and values can unintentionally cause harm or frustration.

Ethically designed AI empowers people to make their own choices rather than making choices for them, which helps them accomplish more.

User-in-the-loop: AI works best when people review its outputs, especially for high-stakes decisions such as medical diagnoses.
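One common way to keep a person in the loop is to route predictions by confidence and by stakes: confident, routine results proceed automatically, while uncertain or high-stakes ones go to a human reviewer. In this sketch the 0.95 threshold, the label names, and the `route` function are hypothetical, and it assumes the model's confidence scores are calibrated.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


@dataclass
class Prediction:
    case_id: str
    label: str
    confidence: float  # model's probability for `label`, assumed calibrated


def route(predictions: List[Prediction], auto_threshold: float = 0.95,
          always_review: FrozenSet[str] = frozenset()) -> Tuple[List[Prediction], List[Prediction]]:
    """Split predictions into an auto-accept queue and a human-review queue.
    High-stakes labels go to a person regardless of confidence."""
    auto, review = [], []
    for p in predictions:
        if p.label in always_review or p.confidence < auto_threshold:
            review.append(p)
        else:
            auto.append(p)
    return auto, review


scans = [
    Prediction("scan-1", "normal", 0.99),
    Prediction("scan-2", "normal", 0.80),
    Prediction("scan-3", "suspected tumor", 0.99),
]
auto, review = route(scans, always_review=frozenset({"suspected tumor"}))
print([p.case_id for p in auto])    # ['scan-1']
print([p.case_id for p in review])  # ['scan-2', 'scan-3']
```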

Accessibility standards: AI interfaces should be usable by everyone, including through voice commands and screen readers.

In the future, "value-aligned AI assistants" will help people make decisions consistent with their own values.

6. Ensuring safety and security

Why it matters: Malicious actors can exploit AI weaknesses, for example by poisoning training data or by reverse-engineering models to extract proprietary algorithms.

Adversarial robustness: Defending against adversarial examples, inputs altered just enough to fool a model, is essential, especially for systems such as facial recognition and self-driving cars.
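To see how little it takes to fool a model, the sketch below applies the Fast Gradient Sign Method (FGSM, Goodfellow et al.) to a tiny logistic-regression classifier: every feature is nudged by at most `epsilon` in whichever direction most increases the model's loss. The weights, input, and `epsilon` are hypothetical; defenses such as adversarial training work by generating perturbed examples like these and adding them to the training data.

```python
import math
from typing import List


def predict(w: List[float], b: float, x: List[float]) -> float:
    """Logistic-regression probability of class 1."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 / (1 + math.exp(-z))


def fgsm(w: List[float], b: float, x: List[float], y: int, epsilon: float) -> List[float]:
    """Fast Gradient Sign Method: shift every feature by at most `epsilon`
    in the direction that increases the log-loss for the true label y."""
    p = predict(w, b, x)
    # For logistic regression, d(log-loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * math.copysign(1.0, g) if g != 0 else xi
            for xi, g in zip(x, grad)]


# Hypothetical trained weights and an input the model classifies correctly.
w, b = [2.0, -1.0, 0.5], 0.0
x, y = [0.3, 0.1, 0.2], 1
x_adv = fgsm(w, b, x, y, epsilon=0.25)
# The probability of the true class drops from above 0.5 to below 0.5.
print(round(predict(w, b, x), 3), round(predict(w, b, x_adv), 3))
```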

Secure model hosting: Homomorphic encryption allows computations to be performed directly on encrypted data, helping protect sensitive data and models in untrusted environments.
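The core property of homomorphic encryption can be demonstrated with the Paillier cryptosystem, a partially homomorphic scheme that supports addition on ciphertexts. Fully homomorphic schemes support richer computation but are too involved for a short example, so this sketch swaps in Paillier. The tiny primes make it insecure and purely illustrative, and it assumes Python 3.8+ for `pow(x, -1, n)`.

```python
import math
import random
from typing import Tuple


def keygen(p: int, q: int) -> Tuple[int, Tuple[int, int]]:
    """Toy Paillier key pair from two primes. Real keys use primes of
    1024+ bits; these tiny ones are for illustration only."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # valid because we fix the generator g = n + 1
    return n, (lam, mu)


def encrypt(n: int, m: int, rng: random.Random) -> int:
    n2 = n * n
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = rng.randrange(1, n)
    return pow(n + 1, m, n2) * pow(r, n, n2) % n2


def decrypt(n: int, private_key: Tuple[int, int], c: int) -> int:
    lam, mu = private_key
    n2 = n * n
    return (pow(c, lam, n2) - 1) // n * mu % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the hidden plaintexts (mod n)."""
    return c1 * c2 % (n * n)


rng = random.Random(42)
n, private_key = keygen(61, 53)
c1, c2 = encrypt(n, 120, rng), encrypt(n, 345, rng)
# A server can compute on c1 and c2 without ever seeing 120 or 345.
total = add_encrypted(n, c1, c2)
print(decrypt(n, private_key, total))  # 465
```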

Companies are establishing AI-specific Security Operations Centers (SOCs) to detect, monitor, and respond to AI-related threats in real time.

In the future, dedicated AI security certifications, similar to ISO/IEC 27001 for information security, will codify best practices for designing and deploying secure models.

7. Navigating regulation and compliance

Why it matters: Divergent rules in the EU, the U.S., China, and India make it difficult for global AI companies to operate.

The EU AI Act classifies AI systems into risk tiers and imposes strict requirements on "high-risk" uses such as biometric identification and critical infrastructure (European Commission, 2024).

In the United States, the voluntary NIST AI Risk Management Framework and federal executive orders emphasize innovation-friendly oversight, model transparency, and public-private collaboration (National Institute of Standards and Technology, 2023).

Emerging markets: China's "New Generation AI Development Plan" places heavy emphasis on national leadership in AI research, while China also requires data localization and content regulation.

In the future, regulatory sandboxes will let companies test AI solutions under relaxed rules, helping them innovate faster and confirm compliance before deploying at scale.

8. Putting people and the planet first

Why it matters: Large AI models consume substantial energy to train, raising concerns about their carbon footprint.

Green AI: Researchers argue that AI projects should report not only how well their models perform but also how much energy they use and how much CO2 they emit. Carbon-emission calculators and similar tools help developers estimate their environmental impact.
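Calculators of this kind typically rest on simple arithmetic: energy is hardware power multiplied by runtime, scaled by the data center's power usage effectiveness (PUE), and emissions are that energy multiplied by the carbon intensity of the local grid. The sketch below encodes that back-of-the-envelope estimate; all inputs, including the two grid intensities, are hypothetical placeholders rather than measured values.

```python
def training_footprint(num_gpus: int, avg_power_watts: float, hours: float,
                       pue: float, grid_kg_co2_per_kwh: float):
    """Back-of-the-envelope training footprint; returns (energy_kwh, emissions_kg).

    energy (kWh) = GPUs x average power (kW) x hours x PUE
    emissions (kg CO2e) = energy (kWh) x grid carbon intensity (kg CO2e/kWh)
    PUE (power usage effectiveness) scales IT energy up to whole-facility energy.
    """
    energy_kwh = num_gpus * (avg_power_watts / 1000) * hours * pue
    return energy_kwh, energy_kwh * grid_kg_co2_per_kwh


# Hypothetical run: 8 GPUs at 300 W for 100 hours in a facility with PUE 1.2.
for grid, intensity in [("fossil-heavy grid", 0.7), ("low-carbon grid", 0.05)]:
    kwh, kg = training_footprint(8, 300, 100, 1.2, intensity)
    print(f"{grid}: {kwh:.0f} kWh, {kg:.1f} kg CO2e")
```

The same job uses the same energy on both grids, but its emissions differ by more than an order of magnitude, which is why where and when a model is trained matters as much as its size.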

Efficient models: Model compression and knowledge distillation can shrink models with little loss in performance, for example by producing lighter versions of large architectures such as GPT. These smaller models require far less computing power.
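Knowledge distillation (Hinton et al., 2015) trains a small "student" model to match the softened output distribution of a large "teacher" model, in addition to the true labels. The sketch below computes that combined loss for a single example in plain Python; the temperature of 4 and the 50/50 weighting are illustrative assumptions, and in practice this loss would sit inside a training loop in a framework with automatic differentiation.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      true_label: int, temperature: float = 4.0,
                      alpha: float = 0.5) -> float:
    """alpha * soft-target loss + (1 - alpha) * hard-label cross-entropy."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on softened distributions; the temperature**2
    # factor keeps gradient magnitudes comparable across temperatures.
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    soft_loss = temperature ** 2 * kl
    hard_loss = -math.log(softmax(student_logits)[true_label])
    return alpha * soft_loss + (1 - alpha) * hard_loss


teacher = [4.0, 1.0, 0.5]       # hypothetical logits from a large model
good_student = [3.5, 1.2, 0.4]  # mimics the teacher closely
poor_student = [0.5, 1.0, 4.0]  # disagrees with the teacher
print(distillation_loss(good_student, teacher, true_label=0))
print(distillation_loss(poor_student, teacher, true_label=0))  # much larger
```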

Hyperscale providers are making their data centers more efficient and less harmful to the environment by using renewable energy sources and liquid cooling.

In the future, businesses that minimize the environmental footprint of their models may be able to earn carbon-neutral AI certifications, which could influence purchasing decisions.

9. Making AI work for everyone

Why it matters: Without careful oversight, AI could widen the digital divide, benefiting wealthy countries and large tech companies while leaving communities with limited access to technology further behind.

Open-source ecosystems: Platforms and communities such as Hugging Face and OpenMined enable people to collaborate on projects and make tools and models widely available.

Localized AI solutions: AI applications must be adapted to local contexts, accounting for language, cultural norms, and infrastructure constraints, to be usable and relevant.

Capacity building: In developing countries, public-private partnerships are funding AI literacy initiatives to grow local talent.

In the future, a more diverse AI ecosystem, in which many different communities contribute to building models, will produce solutions to problems around the world, from improving agriculture in sub-Saharan Africa to supporting disaster recovery in Southeast Asia.

10. Fostering multi-stakeholder collaboration

Why it matters: No single field or discipline can address AI ethics on its own.

Public-private forums: The World Economic Forum's Centre for the Fourth Industrial Revolution is one example of a forum where companies, governments, universities, and NGOs work together to shape AI governance.

Standards bodies: Organizations such as IEEE and ISO develop international standards, such as the IEEE 7000 series, that help engineers build ethical AI (IEEE Standards Association, 2022).

Citizen assemblies: Some governments convene "AI Citizen Juries" to gather public views on contested uses of AI, helping ensure that deployments have broad public support and social legitimacy.

In the future, decentralized governance models that use blockchain to keep transparent records could make it easier to update ethical rules in real time as societal values evolve.
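The property that makes blockchain records attractive for governance is tamper evidence: each entry stores a hash of the previous one, so silently rewriting history breaks the chain. The sketch below shows only that hash-chaining idea using Python's `hashlib`; it is not a blockchain (there is no distributed consensus), and the function names and log entries are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: Dict) -> str:
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append(log: List[Dict], change: str, author: str) -> None:
    """Record a rule change, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"index": len(log), "change": change, "author": author, "prev_hash": prev_hash}
    entry["hash"] = _digest(entry)
    log.append(entry)


def verify(log: List[Dict]) -> bool:
    """Recompute every hash and link; any edited entry breaks the chain."""
    prev_hash = GENESIS
    for i, entry in enumerate(log):
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["index"] != i or entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


rules_log: List[Dict] = []
append(rules_log, "Require human review of all credit denials", "ethics-board")
append(rules_log, "Add disability status to protected attributes", "ethics-board")
print(verify(rules_log))  # True
rules_log[0]["change"] = "Remove human review"  # tampering with history
print(verify(rules_log))  # False: the stored hash no longer matches
```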

AI ethics is not just about staying out of trouble; it is the foundation for the next wave of technological progress. By embedding fairness, transparency, accountability, and sustainability at every step, from data collection to deployment, businesses can realize AI's full benefits while respecting human rights, social well-being, and the planet. As regulations converge and collaboration deepens, ethical AI will help build a fair, inclusive, and prosperous future.

Frequently Asked Questions

Q1: What is AI ethics? AI ethics is the set of moral principles and best practices that guide how people design, build, and use AI. These principles help ensure that technologies are fair, transparent, and accountable, and that they respect societal norms.

Q2: Why do businesses need ethical AI? Ethical AI earns greater trust, reduces legal and reputational risk, and often leads to better decisions. People prefer to work for, partner with, and buy from companies that use AI responsibly.

Q3: How can small businesses practice AI ethics without spending a lot? They can use free open-source bias-detection tools such as Aequitas, adopt lightweight governance frameworks, and join community initiatives that share best practices at little or no cost.

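Q4: What happens if you don't comply with AI regulations? Under the EU AI Act, the most serious violations (prohibited AI practices) can draw fines of up to €35 million or 7% of global annual turnover, whichever is higher. Breaches of other obligations, including transparency requirements and the rules for high-risk systems, can draw fines of up to €15 million or 3% of global annual turnover.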

Q5: How do AI ethics and data privacy laws relate? Both emphasize consent, data minimization, and transparency. Privacy by Design is a widely used approach that links ethical AI with regulations such as the GDPR.

Q6: Can AI ever be completely fair? Complete neutrality is very difficult to achieve. The goal is to minimize bias rather than eliminate it entirely, through diverse datasets, careful auditing, and continuous monitoring.

Q7: How can I keep up with changes in AI ethics rules? Follow initiatives such as IEEE TechEthics, subscribe to newsletters from organizations such as the Partnership on AI, and monitor the EU's Digital Strategy site for legal updates.

References

- European Commission. (2024). Regulating Artificial Intelligence. Retrieved from https://digital-strategy.ec.europa.eu/en/policies/regulating-ai

- National Institute of Standards and Technology. (2023). NIST AI Risk Management Framework. Retrieved from https://www.nist.gov/itl/ai

- Mitchell, M., Wu, S., Zaldivar, A., Barnes, P., Vasserman, L., Hutchinson, B., … & Gebru, T. (2019). Model Cards for Model Reporting. FAT* ’19. Retrieved from https://arxiv.org/abs/1810.03993

- IEEE Standards Association. (2022). Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems. Retrieved from https://standards.ieee.org/initiatives/ethics

- Google AI Blog. (2017). Federated Learning: Collaborative Machine Learning without Centralized Training Data. Retrieved from https://ai.googleblog.com/2017/04/federated-learning-collaborative.html

- Holstein, K., Wortman Vaughan, J., Daumé III, H., Dudik, M., & Wallach, H. (2019). Improving Fairness in Machine Learning Systems: What Do Industry Practitioners Need? Retrieved from https://dl.acm.org/doi/10.1145/3351095.3372864

- Bender, E. M., Gebru, T., McMillan‐Major, A., & Shmitchell, S. (2021). On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? FAccT ’21. Retrieved from https://dl.acm.org/doi/10.1145/3442188.3445922

- Jobin, A., Ienca, M., & Vayena, E. (2019). The Global Landscape of AI Ethics Guidelines. Nature Machine Intelligence, 1(9), 389–399. Retrieved from https://www.nature.com/articles/s42256-019-0088-2
