AI has grown from a niche field of study into a powerful force that is reshaping industries, societies, and everyday life. It promises efficiency gains and new ideas in areas such as self-driving cars, virtual assistants, predictive healthcare, and algorithmic stock trading. That power carries responsibility: AI systems can significantly affect people’s rights, social justice, democracy, and world peace.

Developers and businesses should build ethical principles into every step of the AI lifecycle, from gathering data to deploying and monitoring models. This is the only way to make AI that is safe, legal, and good for people. Regulators, investors, customers, and the general public all expect AI projects to be honest, fair, and accountable. Ethical AI is no longer optional.

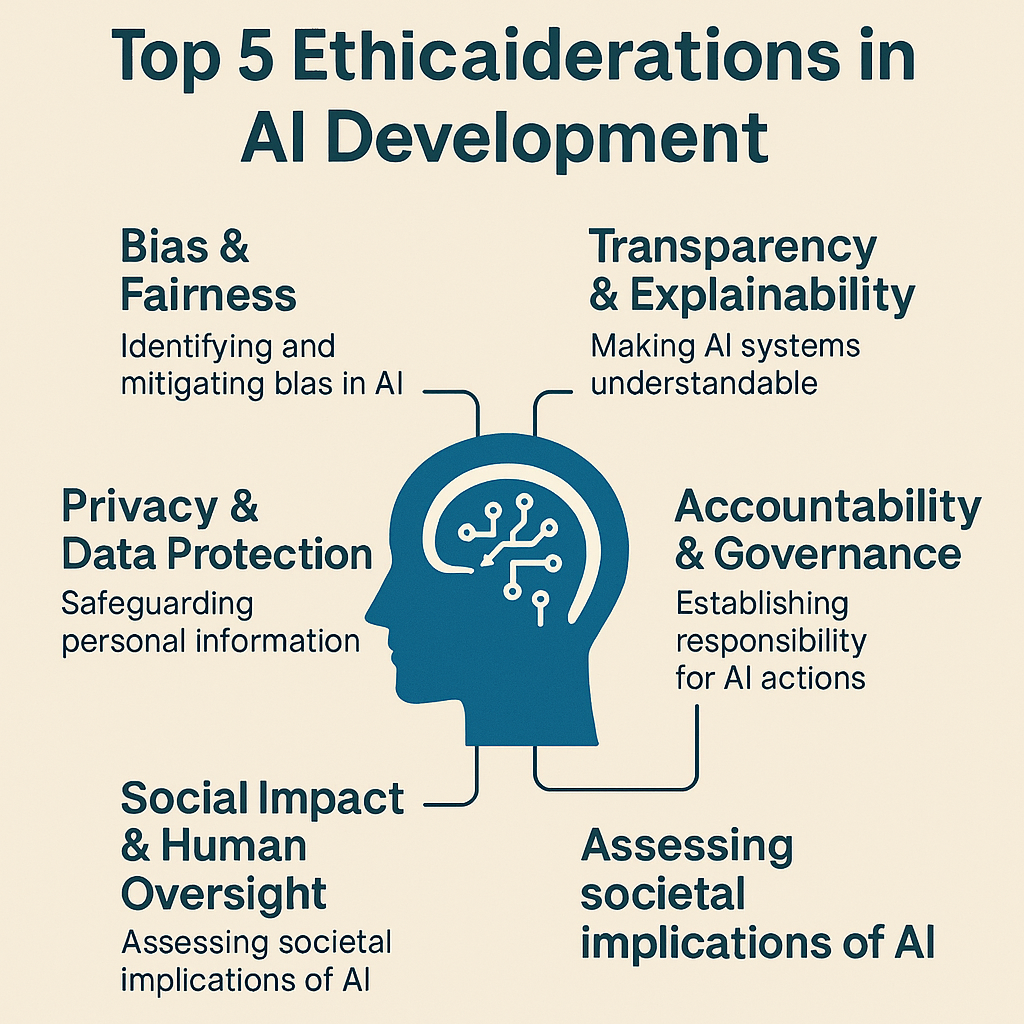

This article examines the five most important ethical issues that come up when building AI. It covers each one in detail, with best practices, real-life examples, and practical tips. We’ll also answer common questions, summarize the main points, and link to further reading.

1. Bias and Fairness

1.1 Understanding Algorithmic Bias

Algorithmic bias occurs when an AI system systematically produces unfair results for certain people or groups. It usually stems from biased training data, flawed model assumptions, or poorly chosen feature representations. For example, some facial recognition systems have performed worse on people with darker skin, and some hiring algorithms have given lower scores to résumés from certain groups.

1.2 Where Bias Comes From

- Historical Bias: Past data that encodes existing social inequities, like the pay gap between men and women at work.

- Sampling Bias: When some groups of people are under-represented or over-represented in datasets.

- Measurement Bias: Using labels that are inaccurate or applied inconsistently.

- Modeling Bias: Choosing algorithms that inherently favor some patterns over others.

1.3 Ways to Lower the Risk

Collecting Diverse Data

When you collect training data, make sure it represents different demographic groups. Oversample groups that are under-represented.

If you fill gaps with synthetic data generation or data augmentation, check that these methods do not introduce artificial artifacts.

Measuring and Auditing Bias

To quantify bias, use fairness metrics such as demographic parity difference and equalized odds.

Audit your algorithms regularly, including reviews by independent third parties.
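The two metrics named above can be computed from nothing more than labels, predictions, and a group attribute. The following is a minimal sketch in plain Python, assuming binary 0/1 labels and predictions; the function names and the toy data are illustrative, not taken from any particular toolkit.

```python
from collections import defaultdict


def _rate(numerator, denominator):
    """Safe division: returns 0.0 when a group has no examples of that kind."""
    return numerator / denominator if denominator else 0.0


def group_rates(y_true, y_pred, groups):
    """Selection rate, true positive rate, and false positive rate per group.

    Assumes y_true and y_pred contain only 0 and 1.
    """
    counts = defaultdict(
        lambda: {"n": 0, "selected": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0}
    )
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["selected"] += pred
        if truth == 1:
            c["pos"] += 1
            c["tp"] += pred
        else:
            c["neg"] += 1
            c["fp"] += pred
    return {
        g: {
            "selection_rate": _rate(c["selected"], c["n"]),
            "tpr": _rate(c["tp"], c["pos"]),
            "fpr": _rate(c["fp"], c["neg"]),
        }
        for g, c in counts.items()
    }


def demographic_parity_difference(rates):
    """Largest gap in selection rate between any two groups."""
    selection = [r["selection_rate"] for r in rates.values()]
    return max(selection) - min(selection)


def equalized_odds_difference(rates):
    """Larger of the TPR gap and the FPR gap between groups."""
    tpr = [r["tpr"] for r in rates.values()]
    fpr = [r["fpr"] for r in rates.values()]
    return max(max(tpr) - min(tpr), max(fpr) - min(fpr))


# Toy data (hypothetical): two groups of four applicants each.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = group_rates(y_true, y_pred, groups)
print(demographic_parity_difference(rates))  # 0.5
print(equalized_odds_difference(rates))      # 0.5
```

A value of 0 on either metric means the groups are treated identically by that measure; what counts as an acceptable gap depends on the application and is a policy decision, not something the code can settle.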

Algorithmic Approaches

- Pre-processing: Reweight or resample the data so that groups are fairly represented.

- In-processing: Add fairness constraints directly to the training objective, for example through adversarial debiasing.

- Post-processing: Adjust the model’s outputs to make them fair, for example by changing decision thresholds.
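Of the three approaches above, pre-processing by reweighting is the simplest to sketch. The example below uses one well-known scheme, often called reweighing, in which each example gets the weight P(group) × P(label) / P(group, label), so that group and label look statistically independent in the weighted data. This is an illustrative sketch with hypothetical data, not the method used in any specific product.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights that make group and label independent when applied."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, target_group):
    """Share of the weighted examples in target_group that carry label 1."""
    total = sum(w for g, w in zip(groups, weights) if g == target_group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == target_group and y == 1
    )
    return positive / total


# Toy data (hypothetical): group "a" has 3 of 4 positive labels, group "b" has 1 of 4.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for g in ("a", "b"):
    print(g, round(weighted_positive_rate(groups, labels, weights, g), 3))
```

After reweighting, both groups show the same weighted positive rate. The weights would then be passed to a learner that accepts per-sample weights.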

Human in the Loop

Include domain experts and ethicists when you annotate datasets and review them for bias.

Give users a way to report behavior that seems wrong.

1.4 Case Study: Loan Approval

A large fintech company found that its loan-approval AI was consistently rejecting applicants who lived in lower-income ZIP codes. By auditing the model and retraining it with more balanced socioeconomic features, the company reduced disparate impact by 42% while maintaining overall predictive accuracy.

2. Transparency and Explainability

2.1 The “Black Box” Issue

Complex models such as deep neural networks are hard to interpret, so people often cannot tell how a decision was made. That opacity can erode trust and complicate compliance, especially with laws like the EU’s General Data Protection Regulation (GDPR), which is widely read as giving people a “right to explanation” for automated decisions.

2.2 Levels of Explainability

- Global Explainability: Understanding how the model behaves as a whole, for example through feature importance rankings.

- Local Explainability: Explaining individual predictions, for example with SHAP values or LIME.

2.3 Best Practices

Model Selection

Where you can, choose inherently interpretable models, like decision trees and linear models, for high-stakes areas.

Explanation Tools

- LIME (Local Interpretable Model-Agnostic Explanations)

- SHAP (SHapley Additive exPlanations)

- Counterfactual methods that show “what if” scenarios.
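To make the idea behind SHAP concrete, the sketch below computes exact Shapley values for a tiny model by averaging each feature’s marginal contribution over every ordering of the features. It is a simplification: "missing" features are replaced by a single baseline value, whereas the SHAP library uses more careful estimators, and the scoring function and numbers here are hypothetical.

```python
from itertools import permutations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values of `instance` relative to `baseline`.

    Enumerates all feature orderings, so it is only practical for a handful
    of features.
    """
    n = len(instance)
    contributions = [0.0] * n
    for order in permutations(range(n)):
        current = list(baseline)
        previous = predict(current)
        for i in order:
            current[i] = instance[i]          # reveal feature i
            value = predict(current)
            contributions[i] += value - previous
            previous = value
    return [c / factorial(n) for c in contributions]


def credit_score(x):
    """Hypothetical linear scoring model: income, debt-to-income ratio, years employed."""
    income, debt_ratio, years = x
    return 0.4 * income - 1.5 * debt_ratio + 0.2 * years


applicant = [3.0, 0.6, 5.0]   # hypothetical applicant
baseline = [2.0, 0.3, 4.0]    # hypothetical "typical" applicant

phi = shapley_values(credit_score, applicant, baseline)
for name, value in zip(["income", "debt_ratio", "years_employed"], phi):
    print(f"{name}: {value:+.3f}")
```

The contributions sum to the difference between the applicant’s score and the baseline score, which is the property that makes Shapley-based explanations additive.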

Documentation and Reporting

- Write model cards that are easy to read and describe the training data, the model’s limitations, and its intended use.

- Create datasheets for datasets that explain how the data was collected, how it was cleaned, and what biases it may contain.
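A model card can start as a small structured record kept next to the model. The sketch below is one possible shape; the field names and example values are assumptions made for illustration, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Minimal, hypothetical model card covering the points listed above."""

    name: str
    intended_use: str
    training_data: str
    limitations: list[str] = field(default_factory=list)
    fairness_metrics: dict[str, float] = field(default_factory=dict)

    def to_json(self) -> str:
        """Serialize the card so it can be versioned alongside the model."""
        return json.dumps(asdict(self), indent=2)


card = ModelCard(
    name="loan-approval-v2",  # hypothetical model name
    intended_use="Rank consumer loan applications for human review.",
    training_data="Applications from 2019 to 2023, rebalanced by region.",
    limitations=["Not validated for business loans."],
    fairness_metrics={"demographic_parity_difference": 0.04},
)
print(card.to_json())
```

Keeping the card as data rather than free text makes it easy to check in review that every model ships with its limitations and intended use filled in.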

User-Facing Transparency

Tell people in plain language why their loan was turned down, for example “Your debt-to-income ratio was too high for us.”

2.4 The Regulatory Landscape

- GDPR Article 22 gives people the right not to be subject to decisions based solely on automated processing when those decisions have legal or similarly significant effects.

- The California Consumer Privacy Act (CCPA) requires businesses to disclose what personal information they collect and how they use it.

- The proposed U.S. Algorithmic Accountability Act, which is still under consideration, would require impact assessments for AI systems that are likely to cause harm.

3. Privacy and Data Protection

3.1 Risks to Personal Data

AI systems often need large amounts of personal information, such as health records, bank statements, and social media activity. If that information is mishandled or shared without permission, the result can be identity theft, discrimination, and a loss of user trust.

3.2 Privacy-Preserving Techniques

Anonymization and Pseudonymization

- Remove or mask direct identifiers like names and Social Security numbers.

- When you combine datasets, watch for re-identification risks.
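One common way to pseudonymize a direct identifier is a keyed hash, which keeps records linkable without storing the original value. The sketch below uses Python’s standard `hmac` and `hashlib` modules; the record layout, field names, and key handling are assumptions for illustration only.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Stable pseudonym for an identifier, derived with HMAC-SHA256."""
    normalized = identifier.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()


def strip_direct_identifiers(record, id_field, drop_fields, secret_key):
    """Drop direct identifiers and replace the ID field with a pseudonym."""
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in drop_fields and key != id_field
    }
    cleaned["pseudonym"] = pseudonymize(record[id_field], secret_key)
    return cleaned


# Hypothetical record and key. In practice the key would come from a secrets
# manager and be stored separately from the data.
key = b"example-key-do-not-use-in-production"
record = {"ssn": "123-45-6789", "name": "Ada Example", "zip": "94110", "income": 52000}

print(strip_direct_identifiers(record, id_field="ssn", drop_fields={"name"}, secret_key=key))
```

Note that the remaining fields (here `zip` and `income`) are quasi-identifiers: combined with another dataset they may still single someone out, which is exactly the re-identification risk mentioned above.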

Differential Privacy

- Add mathematically calibrated noise to aggregate outputs so that individual records cannot be inferred from them.

- Libraries such as Google’s TensorFlow Privacy or IBM’s Diffprivlib implement these techniques.
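The noise-adding idea above can be sketched with the Laplace mechanism applied to a counting query. This is a standard-library illustration rather than the API of either library mentioned; the data and the choice of epsilon are hypothetical.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng):
    """Noisy count of values matching `predicate` under epsilon-differential privacy.

    Adding or removing one person changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical data: patient ages. Smaller epsilon means more noise and more privacy.
ages = [34, 51, 29, 62, 45]
rng = random.Random()
print(private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng))
```

Each query spends part of a privacy budget, so a real deployment would also track the total epsilon used across all released statistics.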

Federated Learning

- Train models on users’ devices and send only model updates, not raw data, to the central server.

- Use secure aggregation protocols so that individual gradients are not exposed.
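The two points above can be illustrated together: clients send weighted model updates hidden behind pairwise random masks, and the server learns only the average because the masks cancel in the sum. This is a toy sketch. A real secure aggregation protocol derives the masks from pairwise key agreement and handles clients that drop out; here a shared random generator stands in for that machinery, and all names and numbers are hypothetical.

```python
import random


def add_pairwise_masks(vectors, rng):
    """For each pair of clients, one adds a random mask and the other subtracts it."""
    masked = [list(v) for v in vectors]
    dim = len(vectors[0])
    for a in range(len(vectors)):
        for b in range(a + 1, len(vectors)):
            mask = [rng.uniform(-100.0, 100.0) for _ in range(dim)]
            for i in range(dim):
                masked[a][i] += mask[i]
                masked[b][i] -= mask[i]
    return masked


def secure_federated_average(updates, sample_counts, rng):
    """Sample-weighted average of client updates, computed from masked vectors only."""
    # Client side: scale each update by the local sample count, then mask it.
    scaled = [[x * n for x in update] for update, n in zip(updates, sample_counts)]
    masked = add_pairwise_masks(scaled, rng)
    # Server side: sum the masked vectors; the pairwise masks cancel out.
    total = sum(sample_counts)
    dim = len(updates[0])
    return [sum(v[i] for v in masked) / total for i in range(dim)]


# Hypothetical updates from three devices with different amounts of local data.
updates = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
sample_counts = [10, 30, 60]

average = secure_federated_average(updates, sample_counts, random.Random(42))
print([round(x, 6) for x in average])
```

The server never sees an unmasked update, yet the result matches the plain weighted average up to floating-point rounding.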

Secure Multi-Party Computation (MPC)

Let multiple parties train models together without revealing their raw data to one another.
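Joint training under MPC is built from simpler primitives. One of the simplest is additive secret sharing, sketched below for a secure sum: each party splits its private number into random shares, and only the total can be reconstructed. The modulus and the inputs are illustrative assumptions, and the sketch simulates all parties in one process.

```python
import secrets

PRIME = 2**61 - 1  # modulus for the shares; any sufficiently large prime works here


def share(secret, n_parties):
    """Split `secret` into n additive shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def reconstruct(shares):
    """Recover the shared value by summing all shares modulo PRIME."""
    return sum(shares) % PRIME


def secure_sum(private_inputs):
    """Sum the inputs without any single party seeing another party's input."""
    n = len(private_inputs)
    # Each owner splits its input and sends one share to every party.
    all_shares = [share(x, n) for x in private_inputs]
    # Each holder adds up the shares it received; this reveals nothing on its own.
    partial_sums = [
        sum(all_shares[owner][holder] for owner in range(n)) % PRIME
        for holder in range(n)
    ]
    # Publishing the partial sums reveals only the total.
    return reconstruct(partial_sums)


# Hypothetical example: three hospitals count patients with a condition.
print(secure_sum([12, 30, 58]))  # 100
```

Any subset of fewer than all the shares of a value is uniformly random, which is why an individual share leaks nothing about the input it came from.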

3.3 Governance and Compliance

- GDPR: Requires data minimization and purpose limitation, and protects the rights of the people whose data is collected.

- HIPAA: U.S. healthcare law that requires protected health information (PHI) to be safeguarded.

- ISO/IEC 27001: International standard for information security management systems.

4. Accountability and Governance

4.1 Defining Accountability

As AI systems become more complex and more autonomous, it gets harder to tell who is responsible for them. If a self-driving car crashes, who is to blame? How should companies remedy the harm caused by biased hiring algorithms?

4.2 Establishing Governance Frameworks

Cross-Functional Ethics Committees

- Bring together a diverse group of people, like engineers, lawyers, ethicists, and community leaders.

- Conduct regular risk and ethics reviews at every stage of development.

Ethical Design Principles

- Adopt established frameworks, like IEEE’s Ethically Aligned Design.

- Align AI work with the company’s values and communicate the AI ethics guidelines to everyone.

Auditing and Monitoring

- Conduct both internal and external audits of high-risk AI systems.

- Involve third-party watchdogs or certification bodies.

Incident Response

- Define clear procedures for AI failures, including how to find the root cause and how to remediate it.

- Be transparent with stakeholders and regulators when things go wrong.

4.3 Legal Liability Models

- Strict Liability: The manufacturer or developer is liable for harm regardless of fault.

- Negligence-Based Liability: The claimant must prove that the developer failed to meet a standard of care.

- Regulatory Penalties: Fines and orders to cease activities that violate AI regulations.

5. Social Impact and Human Oversight

5.1 Societal Impact

AI-powered automation can displace jobs, deepen existing inequality, and concentrate power in tech oligopolies. Used responsibly, AI could also benefit society in areas like education, healthcare, and environmental protection.

5.2 Human-Centered Design and Development

- Invite community groups, civil society organizations, and the people who will be affected to take part in design workshops.

- Use ethnographic research to learn what people really need and how they live.

Continuous Monitoring and Feedback Loops

- Build dashboards that track model performance, drift, and user feedback in real time.

- Add in-app features that let users report incorrect or harmful outputs so you can fix them.
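One common way to quantify the drift such a dashboard would track is the Population Stability Index (PSI), which compares the distribution of a score or feature at training time with the distribution seen in production. The sketch below is a plain-Python illustration; the bin edges and data are hypothetical.

```python
import math


def population_stability_index(expected, actual, bin_edges, floor=1e-6):
    """PSI between a reference sample and a recent sample.

    `bin_edges` are the interior cut points; values below the first edge fall
    in bin 0. Empty bins are floored to avoid taking the log of zero.
    """

    def proportions(values):
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            counts[sum(v >= edge for edge in bin_edges)] += 1
        return [max(c / len(values), floor) for c in counts]

    p = proportions(expected)
    q = proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical model scores: uniform at training time, skewed upward in production.
edges = [0.25, 0.5, 0.75]
training_scores = [0.1] * 25 + [0.3] * 25 + [0.6] * 25 + [0.9] * 25
production_scores = [0.1] * 10 + [0.3] * 20 + [0.6] * 30 + [0.9] * 40

psi = population_stability_index(training_scores, production_scores, edges)
print(f"PSI = {psi:.3f}")
```

A frequently quoted rule of thumb treats a PSI below 0.1 as stable and a PSI above 0.25 as a significant shift, but these cut-offs are conventions and should be tuned to the application.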

Impact Assessments

- Before deployment, conduct a Social Impact Assessment (SIA) and an Algorithmic Impact Assessment (AIA).

- Consider the potential effects on employment, access to services, and social trust.

Reskilling and Workforce Transition

- Work with schools and governments to create reskilling programs.

- Reinvest savings from AI to support workers who have been displaced.

5.3 Case Study: AI in Medical Triage

A hospital deployed an AI-based triage tool to help decide which emergency-room patients should be seen first. The hospital involved stakeholders closely, rolled the tool out in stages, and left final decisions with doctors. Wait times fell by 23% without provoking a backlash.

Frequently Asked Questions (FAQs)

Q1: Why is ethical AI so important?

Ethical AI protects people from harms such as discrimination, privacy violations, and loss of autonomy. It builds public trust, supports regulatory compliance, and keeps technology aligned with human values.

Q2: How can small businesses use AI ethics without spending a lot of money?

Start with a few low-cost steps: collect data from diverse sources, document how your systems work, and have someone outside your team review them. Open-source tools such as IBM AI Fairness 360 (for fairness) and Google’s TensorFlow Privacy (for privacy) can help.

Q3: What’s the difference between transparency and explainability?

Transparency means being open about where a system’s data comes from, how it was built, and how well it performs. Explainability means making specific model decisions understandable, usually to end users or regulators.

Q4: How can I tell if my AI model is fair?

Several metrics are available, including demographic parity (equal positive rates across groups), equalized odds (equal false positive and false negative rates across groups), and predictive parity (equal positive predictive value across groups). Choose a metric based on the legal requirements and the application domain, since no single metric fits every situation.

Q5: Is it possible to get an ethical AI certificate?

Certification schemes are emerging, including conformity assessments under the EU’s AI Act, metrics based on IEEE’s Ethically Aligned Design, and BSI’s AI Management Systems standard. Early adopters can use them to show that they take AI ethics seriously.

Addressing the ethical issues that arise in AI development is both the right thing to do and the smart thing to do. By actively addressing bias and fairness, transparency and explainability, privacy and data protection, accountability and governance, and social impact and human oversight, organizations can create AI that earns the public’s trust, adapts to new rules, and supports long-term innovation.

Keeping AI on track requires continuous oversight: rigorous data governance, thorough documentation, collaboration among many different stakeholders, and constant vigilance. As AI systems become more and more a part of our lives, the stakes will only get higher. By following the principles and best practices described here, developers and leaders can help ensure that AI benefits people and societies instead of harming them.

References

- European Commission. AI Act Proposal. Accessed July 2025. https://commission.europa.eu/ai-act-proposal

- General Data Protection Regulation (GDPR). Regulation (EU) 2016/679. Official Journal of the European Union. https://eur-lex.europa.eu/eli/reg/2016/679/oj

- IBM AI Fairness 360. Open-source toolkit for fairness metrics. https://aif360.mybluemix.net

- Google TensorFlow Privacy. Differential privacy library. https://www.tensorflow.org/privacy

- Molnar, Christoph. Interpretable Machine Learning. 2020. https://christophm.github.io/interpretable-ml-book

- OECD. Recommendation on AI. OECD/LEGAL/0449. 2019. https://www.oecd.org/going-digital/ai/principles/

- IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. Ethically Aligned Design. https://standards.ieee.org/industry-connections/ec/autonomous-systems.html
