The arrival of ultra-fast, ultra-reliable mobile networks is doing more than making phones snappier—it’s rewiring how and where machine learning (ML) gets built and deployed. Thanks to the speed, latency, and device-density characteristics of fifth-generation (5G) networks, organizations can push intelligence out of the data center and into factories, vehicles, hospitals, farms, and city streets. In practical terms, 5G turns everyday environments into data-rich, low-latency “compute fabrics,” where models can learn from streams and act in milliseconds. This article unpacks how 5G accelerates ML end-to-end, and gives you a step-by-step playbook—from architecture choices and edge deployment patterns to federated learning, network slicing, and measurement—so you can move from pilot to production with confidence.

Key takeaways

- 5G is the missing connectivity layer for real-time ML: high throughput, low latency, and massive device density unlock on-device and edge inference at scale.

- Edge + 5G beats cloud-only for time-critical workloads: pairing multi-access edge computing (MEC) with local model serving enables responses in the low tens of milliseconds.

- Federated learning scales with 5G: high-bandwidth uplinks and reliable links support privacy-preserving, on-device training without centralizing raw data.

- Network slicing aligns the network to the model: domain-specific slices guarantee QoS for safety-critical ML, while other slices carry bulk analytics traffic.

- A pragmatic roadmap exists: start with a latency-sensitive “north-star” use case, deploy a small edge zone, instrument KPIs, and iterate weekly.

5G fundamentals that matter for ML

What it is & benefits

5G is the latest generation of cellular standards, designed to deliver far higher data rates, lower latency, and much greater connection density than 4G. Three service pillars matter for ML: enhanced mobile broadband (eMBB, for bandwidth-hungry streams), ultra-reliable low-latency communication (URLLC, for time-critical control), and massive machine-type communications (mMTC, for dense IoT telemetry). The IMT-2020 requirements set peak data-rate targets of 20 Gbit/s downlink and 10 Gbit/s uplink, user-plane latency targets of 1 ms for URLLC and 4 ms for eMBB, and a connection density of up to one million devices per square kilometer. These are design targets rather than what a deployed network typically delivers, but together they reshape how we collect data, place models, and close the loop between sensing and actuation. Global 5G adoption has already reached billions of subscriptions, which is enough coverage to plan real deployments, not just lab demos.

Requirements & prerequisites

- Network access: Confirm 5G Standalone (SA) coverage or deploy a private 5G network for deterministic performance.

- Edge compute: Access to a multi-access edge computing (MEC) zone (public or on-prem) for low-latency model serving.

- Device fleet: 5G-capable gateways/cameras/sensors; choose sub-6 GHz for coverage or mmWave for short-range, high throughput.

- Ops: Foundational MLOps: container registry, CI/CD, experiment tracking, model registry, monitoring.

Step-by-step (beginner-friendly)

- Map workloads to 5G pillars: Tag each candidate use case as throughput-heavy (eMBB), latency-critical (URLLC), or device-dense (mMTC).

- Choose frequency range: Start with FR1 (sub-6 GHz) for coverage, add FR2 (mmWave) later where you need multi-Gbps bursts.

- Place inference: Default to MEC for latency-sensitive models and to cloud for heavy batch training; push tiny models on-device when possible.

- Instrument latency paths: Measure sensor-to-edge, edge-to-actuator, and inference time separately; set SLOs per use case.

- Secure the pipe: Use SIM-based identity, zero-trust edges, and per-slice policies.
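As a rough illustration of the first step above, the sketch below tags candidate use cases with a 5G pillar using simple rules. The `UseCase` fields and every threshold are hypothetical placeholders, not values from any standard; treat them as a starting point to tune against your own requirements.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    max_latency_ms: float    # tightest tolerable end-to-end latency
    uplink_mbps: float       # sustained uplink per device
    devices_per_km2: int     # expected device density


def tag_pillar(uc: UseCase) -> str:
    """Tag a use case with its dominant 5G pillar.

    Rules are checked in priority order (latency, density, throughput).
    All thresholds are illustrative assumptions.
    """
    if uc.max_latency_ms <= 20:
        return "URLLC"
    if uc.devices_per_km2 >= 10_000:
        return "mMTC"
    if uc.uplink_mbps >= 5:
        return "eMBB"
    return "review manually"


candidates = [
    UseCase("robot e-stop", max_latency_ms=10, uplink_mbps=0.1, devices_per_km2=200),
    UseCase("soil sensors", max_latency_ms=5_000, uplink_mbps=0.01, devices_per_km2=50_000),
    UseCase("inspection video", max_latency_ms=150, uplink_mbps=8, devices_per_km2=100),
]

for uc in candidates:
    print(f"{uc.name}: {tag_pillar(uc)}")
```

Use cases that match none of the rules are flagged for manual review rather than forced into a pillar, which keeps ambiguous workloads visible.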

Beginner modifications & progressions

- Simplify: If MEC is not available, emulate with a nearby micro-data center on gigabit fiber and use 5G for the last mile.

- Scale up: Add mmWave in hotspots and deploy GPU-equipped edge nodes for computer vision or speech models.

Recommended metrics

- End-to-end latency (p50/p95), successful transaction ratio, frame drop rate, model accuracy drift, and cost per inference.
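To make the p50/p95 metric concrete, here is a minimal sketch that computes nearest-rank percentiles per latency segment and reports which segment dominates p95. The segment names and sample values are made up for illustration; in practice the samples would come from your own tracing.

```python
import math


def percentile(samples, q):
    """Nearest-rank percentile: the smallest sample with at least q% of values at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical per-segment latency samples in milliseconds.
stage_samples = {
    "sensor_to_edge": [4.1, 3.9, 4.4, 12.0, 4.0, 4.2, 3.8, 4.3, 4.1, 4.0],
    "inference": [7.5, 7.9, 8.1, 7.7, 7.6, 8.0, 7.8, 7.4, 9.5, 7.7],
    "edge_to_actuator": [2.0, 2.1, 1.9, 2.2, 2.0, 2.3, 2.1, 2.0, 1.8, 2.4],
}

report = {
    stage: {"p50": percentile(vals, 50), "p95": percentile(vals, 95)}
    for stage, vals in stage_samples.items()
}

# The segment with the worst p95 is the first candidate for optimization.
bottleneck = max(report, key=lambda stage: report[stage]["p95"])
print(report)
print("optimize first:", bottleneck)
```

Note how the network segment has the lowest median but the worst tail here: a single slow sample is enough to make it the p95 bottleneck, which is why averages alone are misleading.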

Safety, caveats, mistakes

- Don’t design to theoretical peaks; provision to observed latency and jitter.

- Avoid single points of failure at the edge; deploy at least N+1 nodes.

- Check whether your coverage is non-standalone (NSA) or standalone (SA) 5G; only SA unlocks the full feature set, including end-to-end slicing.

Sample mini-plan

- Step 1: Benchmark current network and inference latency.

- Step 2: Stand up a single MEC node, deploy a basic model, and compare before/after KPIs.

Real-time ML at the edge: from camera frames to actions

What it is & benefits

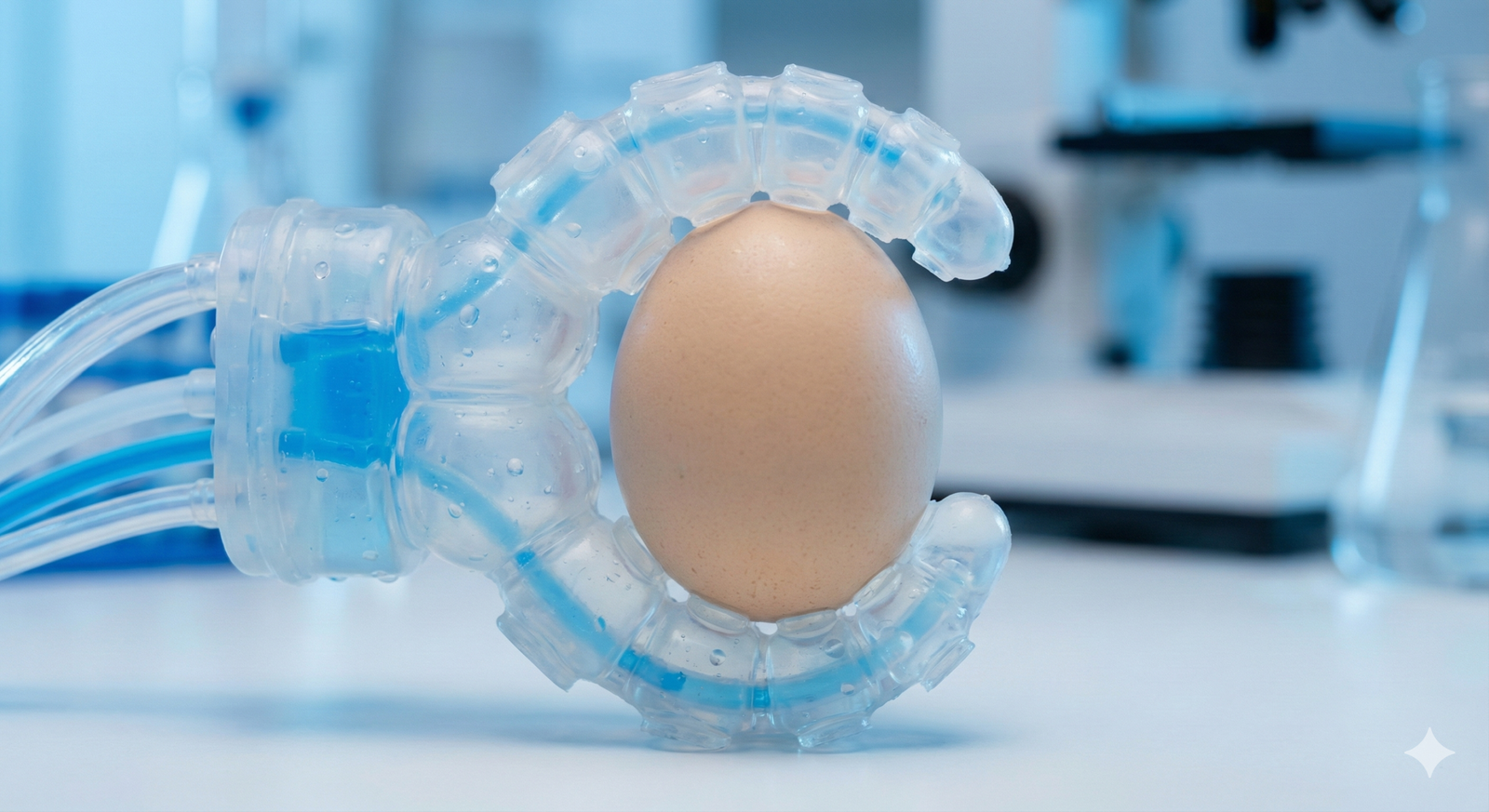

Edge inference means running models (computer vision, speech, anomaly detection) near the data source. With 5G connectivity and MEC, you can process high-rate video streams, detect conditions, and trigger actions in tens of milliseconds. The result is less bandwidth to the cloud, improved privacy, and the responsiveness that safety-critical use cases demand.

Requirements & prerequisites

- Hardware: 5G gateways, PoE cameras/sensors, compact GPU/accelerator at the edge (or CPU for lightweight models).

- Software: Container runtime, model server (Triton, ONNX Runtime, TensorRT, or equivalent), streaming framework (e.g., RTSP→gRPC pipeline).

- Networking: 5G SA SIMs/eSIMs, private APN or private 5G for deterministic QoS.

Step-by-step implementation

- Select a single stream (e.g., one quality-inspection camera) and a small, quantized model.

- Build the pipeline: Ingest → pre-process → infer → post-process → event bus.

- Deploy to edge: Containerize, ship to MEC, expose a minimal API with auth.

- Close the loop: Wire a simple action (e.g., reject a faulty part) to prove end-to-end latency.

- Scale: Add streams, shard across edge nodes, and load-balance.
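The pipeline in the steps above can be sketched in a few lines. This is a toy version under stated assumptions: frames are flat lists of pixel values, the "model" is a stand-in that scores mean brightness, and a standard-library `Queue` plays the role of the event bus. None of these names come from a real model server.

```python
import time
from queue import Queue

event_bus = Queue()  # stand-in for a real message bus


def preprocess(frame):
    """Validate the frame, then scale 0-255 pixel values to the 0-1 range."""
    if not frame or any(not 0 <= p <= 255 for p in frame):
        raise ValueError("malformed frame")
    return [p / 255.0 for p in frame]


def infer(pixels):
    """Placeholder model: mean brightness used as a defect score."""
    return sum(pixels) / len(pixels)


def postprocess(score, threshold=0.5):
    """Turn the raw score into a labeled result."""
    return {"label": "defect" if score >= threshold else "good", "score": round(score, 3)}


def handle_frame(frame):
    """Run one frame through the stages, time each stage, and publish the event."""
    timings = {}
    value = frame
    for name, stage in (("preprocess", preprocess), ("infer", infer), ("postprocess", postprocess)):
        start = time.perf_counter()
        value = stage(value)
        timings[name + "_ms"] = (time.perf_counter() - start) * 1000
    event = {**value, "timings": timings}
    event_bus.put(event)
    return event


bright = handle_frame([200, 220, 240, 210])
dark = handle_frame([10, 20, 30, 40])
print(bright["label"], dark["label"])
```

Timing each stage separately from day one means that when the loop is closed to an actuator, you already know how much of the latency budget the model itself consumes.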

Beginner modifications & progressions

- Start small: 720p streams at 10–15 fps with MobileNet-class models.

- Progress: Add multi-camera fusion, use INT8 quantization, and adopt model-specific accelerators.

Recommended frequency/duration/metrics

- Weekly retraining cadence for drifting environments; daily validation on holdout clips.

- Track p95 end-to-end latency, false positives/negatives, and bandwidth saved vs. cloud-only.
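For the "bandwidth saved vs. cloud-only" metric, a back-of-the-envelope calculation is often enough to start. The sketch below compares uploading every stream in full against sending only small detection events from the edge; the stream bitrate, event rate, and event size are assumed figures, not measurements.

```python
def bandwidth_saved(streams, stream_mbps, events_per_s, event_bytes):
    """Compare cloud-only video upload with edge inference that sends only events.

    Returns (cloud_only_mbps, edge_mbps, fraction_saved).
    """
    cloud_only_mbps = streams * stream_mbps
    edge_mbps = streams * events_per_s * event_bytes * 8 / 1_000_000
    fraction_saved = 1 - edge_mbps / cloud_only_mbps
    return cloud_only_mbps, edge_mbps, fraction_saved


# Assumed: 6 cameras at 4 Mbit/s each, 2 detection events per second per camera,
# 500 bytes per event.
cloud, edge, saved = bandwidth_saved(streams=6, stream_mbps=4.0, events_per_s=2, event_bytes=500)
print(f"cloud-only: {cloud:.1f} Mbit/s, edge: {edge:.3f} Mbit/s, saved: {saved:.1%}")
```

Real savings will be lower if you also upload sample clips for retraining or audits, so count that traffic on the edge side of the comparison.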

Safety and common mistakes

- Validate lighting changes, lens smudges, and motion blur; they cause silent model regressions.

- Don’t skip input validation; malformed frames can crash GPU pipelines.

Sample mini-plan

- Step 1: Edge-deploy a “good/defect” classifier with a 100-image seed set.

- Step 2: Set alert thresholds; trigger operator review for low-confidence cases.
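Step 2 of the mini-plan can be expressed as a small routing function. This is a sketch, assuming a classifier that returns a label and a confidence between 0 and 1; the two thresholds and the action names are hypothetical and should be tuned on holdout clips.

```python
def route_prediction(label, confidence, accept_at=0.90, review_at=0.60):
    """Decide what to do with one classifier output.

    High-confidence results are acted on automatically; everything else goes
    to an operator, and very uncertain frames are also saved for retraining.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if confidence >= accept_at:
        return "auto_reject_part" if label == "defect" else "pass"
    if confidence >= review_at:
        return "operator_review"
    return "operator_review_and_save_frame"


print(route_prediction("defect", 0.97))  # acted on automatically
print(route_prediction("defect", 0.72))  # sent to an operator
print(route_prediction("good", 0.41))    # reviewed and kept as a training sample
```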

Federated learning over 5G: private data, shared models

What it is & benefits

Federated learning (FL) trains a shared model across many devices or sites without moving raw data. Devices compute local updates and send model deltas to an aggregator at the edge or in the cloud. 5G’s bandwidth and reliability reduce the round-trip time for FL rounds and help keep stragglers in sync. The result is better privacy, compliance alignment, and performance on non-IID, real-world data.

Requirements & prerequisites

- Clients: Devices with enough CPU/GPU to train small batches locally.

- Coordinator: Edge aggregator close to clients to minimize communication delay.

- Framework: FL library (Flower, FedML, TensorFlow Federated, PySyft) and secure aggregation.

Step-by-step implementation

- Partition your data by site/device and choose a simple baseline model.

- Stand up the aggregator at MEC; configure secure transport and client authentication.

- Schedule rounds: clients train for N local epochs, send updates, server averages (e.g., FedAvg).

- Monitor heterogeneity: detect stragglers; adapt learning rates or sample subsets.

- Evaluate on a global validation set; promote only if accuracy and fairness thresholds are met.
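The averaging step in a round is simple enough to show directly. Below is a minimal, framework-free sketch of FedAvg-style aggregation, assuming each client reports a flat list of weights plus the number of local examples it trained on. Real FL libraries handle serialization, transport, and secure aggregation on top of this; the data here is invented.

```python
def fed_avg(client_updates):
    """Average client weight vectors, weighting each client by its sample count.

    client_updates: list of (weights, num_examples) pairs, where weights is a
    flat list of floats of identical length across clients.
    """
    if not client_updates:
        raise ValueError("no client updates")
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("total example count must be positive")
    dim = len(client_updates[0][0])
    if any(len(weights) != dim for weights, _ in client_updates):
        raise ValueError("clients reported different model shapes")

    averaged = [0.0] * dim
    for weights, n in client_updates:
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Two hypothetical clients: the second holds three times as much data,
# so it pulls the global model three times as hard.
round_updates = [
    ([1.0, 2.0], 100),
    ([3.0, 4.0], 300),
]
global_weights = fed_avg(round_updates)
print(global_weights)
```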

Beginner modifications & progressions

- Simplify: Start with two or three clients and a small CNN; simulate slower links to stress test.

- Scale: Add hundreds of clients; switch to hierarchical FL (local aggregators per site).

Recommended metrics

- Round time, client participation rate, accuracy/loss vs. centralized baseline, fairness across cohorts.

Safety, caveats, mistakes

- Protect against model inversion and poisoning; use differential privacy and robust aggregation.

- Guard the control plane; FL can fall apart from simple client authentication gaps.
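One simple form of robust aggregation is to clip each client update and then take a coordinate-wise median instead of a mean. The sketch below shows that idea with invented updates. Clipping alone is not differential privacy (that also requires calibrated noise and privacy accounting), so read this only as an illustration of limiting a poisoned client's influence.

```python
import math
from statistics import median


def clip_update(update, max_norm):
    """Scale an update down so its L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    if norm <= max_norm:
        return list(update)
    scale = max_norm / norm
    return [x * scale for x in update]


def median_aggregate(updates):
    """Coordinate-wise median, which tolerates a minority of outlier clients."""
    return [median(column) for column in zip(*updates)]


honest = [[0.10, -0.20], [0.12, -0.18], [0.09, -0.22]]
poisoned = [[50.0, 50.0]]  # one client sends an extreme update

clipped = [clip_update(u, max_norm=1.0) for u in honest + poisoned]
robust = median_aggregate(clipped)
naive_mean = [sum(col) / len(col) for col in zip(*(honest + poisoned))]

print("median after clipping:", robust)
print("plain mean:", naive_mean)
```

The plain mean is dragged far from the honest clients by a single bad update, while the clipped median stays close to them.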

Sample mini-plan

- Step 1: Pilot FL across three stores or wards for demand forecasting or triage classification.

- Step 2: Compare FL model to a centralized model on out-of-distribution data.

Network slicing for ML: match the pipe to the workload

What it is & benefits

Network slicing creates virtual, end-to-end networks on shared infrastructure with guaranteed characteristics. You can run a high-reliability, low-latency slice for control loops, a high-throughput slice for video analytics, and a best-effort slice for software updates—without them interfering.

Requirements & prerequisites

- Support: 5G SA network with slice orchestration and APIs for provisioning.

- Policy: Defined QoS classes per workload; mapping from app to slice.

Step-by-step implementation

- Classify traffic: Control, inference results, bulk media, telemetry.

- Define slices: e.g., URLLC-oriented slice for robot control; eMBB-oriented slice for video.

- Provision via your operator or private core; attach SIM profiles with slice IDs.

- Observe: Collect slice-level metrics; confirm SLA adherence.

- Iterate: Resize bandwidth or priorities as usage changes.
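The classify, define, and observe steps above can be captured in a small mapping. This sketch assumes three slices and uses the standardized 3GPP Slice/Service Type (SST) values 1 (eMBB), 2 (URLLC), and 3 (MIoT); the traffic classes, latency targets, and function names are hypothetical. Actual provisioning goes through your operator or private core, not application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SliceProfile:
    sst: int                      # Slice/Service Type: 1 = eMBB, 2 = URLLC, 3 = MIoT
    p95_latency_target_ms: float  # hypothetical SLA target


SLICES = {
    "urllc": SliceProfile(sst=2, p95_latency_target_ms=10.0),
    "embb": SliceProfile(sst=1, p95_latency_target_ms=100.0),
    "miot": SliceProfile(sst=3, p95_latency_target_ms=1000.0),
}

TRAFFIC_TO_SLICE = {
    "control": "urllc",
    "inference_results": "urllc",
    "bulk_media": "embb",
    "telemetry": "miot",
}


def slice_for(traffic_class):
    """Return the slice name for a traffic class; unknown classes fail loudly."""
    try:
        return TRAFFIC_TO_SLICE[traffic_class]
    except KeyError:
        raise ValueError(f"unclassified traffic: {traffic_class!r}") from None


def sla_breaches(observed_p95_ms):
    """Return the slices whose observed p95 latency exceeds the target."""
    return sorted(
        name for name, p95 in observed_p95_ms.items()
        if p95 > SLICES[name].p95_latency_target_ms
    )


print(slice_for("control"))
print(sla_breaches({"urllc": 14.0, "embb": 60.0, "miot": 300.0}))
```

Raising on unknown traffic classes is deliberate: a silent default is how unclassified flows end up on the wrong slice.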

Beginner modifications & progressions

- Simplify: Use APNs or basic QoS tagging if full slicing isn’t available.

- Progress: Automate slice selection based on app state (e.g., switch to URLLC slice when risk > threshold).
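Automated slice selection benefits from hysteresis, so that a risk score hovering near the threshold does not make the application flap between slices. The sketch below is one way to do it, assuming a risk score between 0 and 1; the class name, slice labels, and both thresholds are hypothetical.

```python
class SliceSelector:
    """Pick a slice from a risk score, with separate enter and exit thresholds."""

    def __init__(self, enter_at=0.7, exit_at=0.5):
        if exit_at >= enter_at:
            raise ValueError("exit_at must be below enter_at")
        self.enter_at = enter_at
        self.exit_at = exit_at
        self.current = "embb"

    def update(self, risk):
        """Feed the latest risk score and return the slice to use."""
        if self.current == "embb" and risk > self.enter_at:
            self.current = "urllc"
        elif self.current == "urllc" and risk < self.exit_at:
            self.current = "embb"
        return self.current


selector = SliceSelector()
history = [selector.update(risk) for risk in (0.2, 0.75, 0.6, 0.55, 0.4)]
print(history)
```

Once the selector has moved to the low-latency slice it stays there while risk sits between the two thresholds, and only drops back after risk falls clearly below the exit level.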

Recommended metrics

- Slice-level p95 latency, packet loss, throughput, and outage minutes.

Safety and mistakes

- Over-fragmenting slices increases management burden; start with two or three.

- Unclear traffic classification causes “rogue” flows to land on the wrong slice.

Sample mini-plan

- Step 1: Put robot control and safety messaging on a low-latency slice.

- Step 2: Move video uploads to a separate high-throughput slice to avoid starving control loops.

Building a 5G-ready MLOps stack

What it is & benefits

A 5G-ready MLOps stack coordinates code, models, data, and infrastructure from cloud to edge. It standardizes builds, deployments, rollbacks, and monitoring across hundreds of sites.

Requirements & prerequisites

- Core: GitOps workflow, container registry, model registry, feature store.

- Edge: Orchestrator (Kubernetes-flavored at the edge), model servers, observability agents.

- Security: PKI, device identity, image signing, SBOMs, and secure boot on edge nodes.

Step-by-step implementation

- Create a single repo per service with build pipelines targeting cloud and edge.

- Adopt a model registry with versioning, lineage, and stage gates.

- Implement canary deployments at the edge; route 1–5% of traffic to the new model.

- Instrument inference latency, confidence, drift, and resource usage.

- Automate rollback on failed SLOs; keep a “golden” model cached on every node.
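Canary routing and SLO-based rollback from the steps above can be sketched without any particular orchestrator. The example assumes each request carries a string ID and that p95 latencies are already collected; the function names, the 5% share, and the 10% tolerance are illustrative choices.

```python
import hashlib


def pick_model(request_id, canary_percent=5):
    """Deterministically route a fixed share of requests to the canary model.

    Hashing the request ID keeps routing stable across retries and nodes.
    """
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return "canary" if bucket < canary_percent else "stable"


def should_roll_back(canary_p95_ms, stable_p95_ms, slo_ms, tolerance=1.10):
    """Roll back if the canary breaks the SLO or is more than 10% slower than stable."""
    return canary_p95_ms > slo_ms or canary_p95_ms > stable_p95_ms * tolerance


routes = [pick_model(f"req-{i}") for i in range(10_000)]
share = routes.count("canary") / len(routes)
print(f"canary share: {share:.1%}")
print("roll back:", should_roll_back(canary_p95_ms=48, stable_p95_ms=40, slo_ms=50))
```

In the last call the canary is still inside the 50 ms SLO but 20% slower than the stable model, so the relative check triggers the rollback before the SLO itself is breached.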

Beginner modifications & progressions

- Simplify: Start with one edge cluster and manual promotion.

- Progress: Multi-region MEC, hierarchical control plane, and policy-driven promotion.

Recommended metrics

- Deployment lead time, change failure rate, mean time to recovery, and p95 inference time per model.

Safety and mistakes

- Don’t ship unpinned dependencies to constrained edge images.

- Avoid shipping logs off the node uncompressed; during incidents, raw log volume can saturate uplinks.

Sample mini-plan

- Step 1: Stand up a minimal model registry and one edge cluster.

- Step 2: Run a weekly release train; require automated checks to promote to edge.

Data pipelines in a 5G world

What it is & benefits

5G encourages streaming data pipelines: transform at the edge, store features locally, and sync compact summaries to the cloud. This reduces bandwidth, improves resilience, and gives models fresh features.

Requirements & prerequisites

- Edge streaming: local message bus and time-series store.

- Transformations: feature engineering close to sensors; schema registry.

- Governance: data retention, PII handling, and lineage.

Step-by-step implementation

- Define schemas for sensor topics; include versioning from day one.

- Compute features at the edge (e.g., rolling means, FFTs) and forward features, not raw feeds.

- Backhaul selectively: send anomalies and model deltas; archive raw only when necessary.

- Monitor drift at the edge; raise flags when distributions shift.

- Sync summarized data to a central lakehouse for retraining.
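Here is a small sketch of "forward features, not raw feeds": a rolling RMS computed at the edge, with the raw window attached only when the value looks anomalous. The window size, anomaly threshold, and readings are invented for illustration.

```python
import math
from collections import deque


class RollingRMS:
    """Root-mean-square over the most recent `window` samples."""

    def __init__(self, window):
        if window <= 0:
            raise ValueError("window must be positive")
        self.samples = deque(maxlen=window)

    def update(self, x):
        self.samples.append(x)
        return math.sqrt(sum(v * v for v in self.samples) / len(self.samples))


def summarize(readings, window=4, anomaly_rms=2.0):
    """Turn raw readings into compact records; only anomalies carry raw samples."""
    rms = RollingRMS(window)
    records = []
    for index, x in enumerate(readings):
        value = rms.update(x)
        record = {"index": index, "rms": round(value, 3)}
        if value > anomaly_rms:
            record["raw_window"] = list(rms.samples)
        records.append(record)
    return records


records = summarize([1.0, -1.0, 1.0, -1.0, 3.0, -3.0, 3.0, -3.0])
anomalies = [r["index"] for r in records if "raw_window" in r]
print(records[-1])
print("anomalous indices:", anomalies)
```

Normal operation sends one small number per reading, and the raw samples travel only when something looks wrong, which is the selective backhaul pattern described in the steps.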

Beginner modifications & progressions

- Simplify: Start with one or two features computed locally.

- Progress: Add per-site feature stores and global catalogs.

Recommended metrics

- Edge-to-cloud egress volume, time-to-feature availability, and drift alerts per week.

Safety and mistakes

- Beware implicit timezones in timestamps; align all clocks and NTP sources.

- Keep a “cold path” for compliance (e.g., events you must retain unmodified).
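One way to avoid the implicit-timezone trap is to normalize every timestamp to UTC at ingress and refuse naive values unless the site's offset is stated explicitly. The sketch below uses only the standard library; the function name and the `assumed_offset_hours` parameter are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def to_utc_iso(ts, assumed_offset_hours=None):
    """Parse an ISO 8601 timestamp and return it as a UTC ISO string.

    Naive timestamps (no offset) are rejected unless the caller supplies the
    site's UTC offset, so a missing timezone never passes silently.
    """
    parsed = datetime.fromisoformat(ts)
    if parsed.tzinfo is None:
        if assumed_offset_hours is None:
            raise ValueError(f"timestamp has no timezone: {ts!r}")
        parsed = parsed.replace(tzinfo=timezone(timedelta(hours=assumed_offset_hours)))
    return parsed.astimezone(timezone.utc).isoformat()


print(to_utc_iso("2025-03-01T12:00:00+02:00"))
print(to_utc_iso("2025-03-01T12:00:00", assumed_offset_hours=-5))
```

This handles representation only; clock skew between devices still has to be addressed by synchronizing the clocks themselves.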

Sample mini-plan

- Step 1: Move one feature (e.g., vibration RMS) to edge computation.

- Step 2: Reduce raw stream upload by 80% while maintaining model accuracy.

Security, privacy, and reliability for 5G-powered ML

What it is & benefits

5G brings stronger identity and encryption by default, plus options like per-slice isolation. Combined with edge-first design and FL, you can meet strict privacy and availability requirements while still shipping high-impact ML.

Requirements & prerequisites

- Identity: SIM/eSIM enrollment tied to device certificates.

- Segmentation: dedicated slices/APNs for sensitive traffic.

- Supply chain: signed images, attestation, and secure boot at the edge.

Step-by-step implementation

- Enroll devices with SIM-based identity linked to your IAM.

- Segment traffic by slice; restrict inter-slice communication.

- Harden edges: enable secure boot, patching windows, image signing.

- Protect models: encrypt at rest/in transit, rate-limit APIs, and watermark outputs where appropriate.

- Exercise failure modes: simulate base-station loss and backhaul degradation; verify graceful fallback.
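Rate-limiting the model API is one of the cheaper protections in the list above. A token bucket is a common way to do it; the sketch below is a single-process version with an injectable clock so it can be tested deterministically. The rate and capacity are placeholder values, and a production setup would usually enforce limits at the gateway and per client identity.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate_per_s` tokens per second."""

    def __init__(self, rate_per_s, capacity, clock=time.monotonic):
        self.rate = rate_per_s
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self):
        """Consume one token if available and report whether the call may proceed."""
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Demo with a fake clock: a burst of 4 calls, then 2 more one second later.
fake_now = [0.0]
bucket = TokenBucket(rate_per_s=2, capacity=3, clock=lambda: fake_now[0])
burst = [bucket.allow() for _ in range(4)]
fake_now[0] = 1.0
later = [bucket.allow() for _ in range(3)]
print(burst, later)
```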

Beginner modifications & progressions

- Simplify: Start with network-level segmentation and basic IAM bindings.

- Progress: Add confidential compute at the edge and verifiable attestation.

Recommended metrics

- Time to patch critical CVEs, certificate expiry alerts, and fraction of endpoints with hardware attestation.

Safety and mistakes

- Don’t transmit model deltas in the clear; secure aggregation is table stakes.

- Never assume “private network” equals “secure”; treat every edge node as internet-adjacent.

Sample mini-plan

- Step 1: Put inference and control plane on separate slices.

- Step 2: Add secure aggregation for federated rounds.

Cost management and ROI: when 5G makes sense

What it is & benefits

5G isn’t “better Wi-Fi.” It shines where mobility, deterministic latency, coverage, and QoS matter. Use a simple decision matrix to validate ROI.

Requirements & prerequisites

- Inputs: current SLA breaches, downtime cost, privacy constraints, and mobility needs.

- Alternatives: Wi-Fi 6/6E, fiber, or LoRa/LPWAN for low-bandwidth telemetry.

Step-by-step implementation

- Score each use case on mobility, latency criticality, device density, and security.

- Compare TCO across options (private 5G vs. Wi-Fi + fiber) including spectrum/licensing.

- Pilot the highest-scoring use case; measure time-to-value and “false downtime” eliminated.
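The scoring step can start as a weighted sum. In this sketch the four criteria come from the list above, while the weights, the 1-to-5 rating scale, and the two example use cases are assumptions chosen purely for illustration.

```python
WEIGHTS = {"mobility": 0.3, "latency": 0.3, "density": 0.2, "security": 0.2}


def score(ratings):
    """Weighted sum of 1-5 ratings; missing or out-of-range criteria raise."""
    total = 0.0
    for criterion, weight in WEIGHTS.items():
        rating = ratings[criterion]  # KeyError if a criterion is missing
        if not 1 <= rating <= 5:
            raise ValueError(f"{criterion} rating must be between 1 and 5")
        total += weight * rating
    return total


use_cases = {
    "AGV fleet control": {"mobility": 5, "latency": 5, "density": 3, "security": 4},
    "office printers": {"mobility": 1, "latency": 1, "density": 2, "security": 2},
}

ranked = sorted(use_cases, key=lambda name: score(use_cases[name]), reverse=True)
for name in ranked:
    print(f"{name}: {score(use_cases[name]):.1f}")
```

A low score is useful information too: it suggests that Wi-Fi or fiber is probably the better fit for that workload.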

Beginner modifications & progressions

- Simplify: Lease a managed private 5G as a service for pilots.

- Progress: Acquire local spectrum or shared access where available.

Recommended metrics

- Incremental revenue or cost avoided per millisecond saved; number of SLA incidents eliminated.

Safety and mistakes

- Over-engineering slices and mmWave small cells for non-critical workloads inflates costs.

- Ignoring device lifecycle (SIM logistics, updates) creates hidden OpEx.

Sample mini-plan

- Step 1: Run a 90-day pilot on a single line or site.

- Step 2: Scale only if KPI uplifts exceed a predefined threshold (e.g., +15% throughput, −50% response time).

Sector blueprints (fast paths to value)

Manufacturing: quality inspection and robot safety

- Requirements: Private 5G, MEC node with GPU, 4–6 cameras per line.

- Steps: (1) Deploy a defect-detection model at the edge. (2) Put robot E-stop telemetry on a low-latency slice. (3) Use FL to adapt to new materials per plant.

- Metrics: False reject rate, cycle time, and p95 control-loop latency.

- Common mistake: Letting video saturate the control slice; keep them separate.

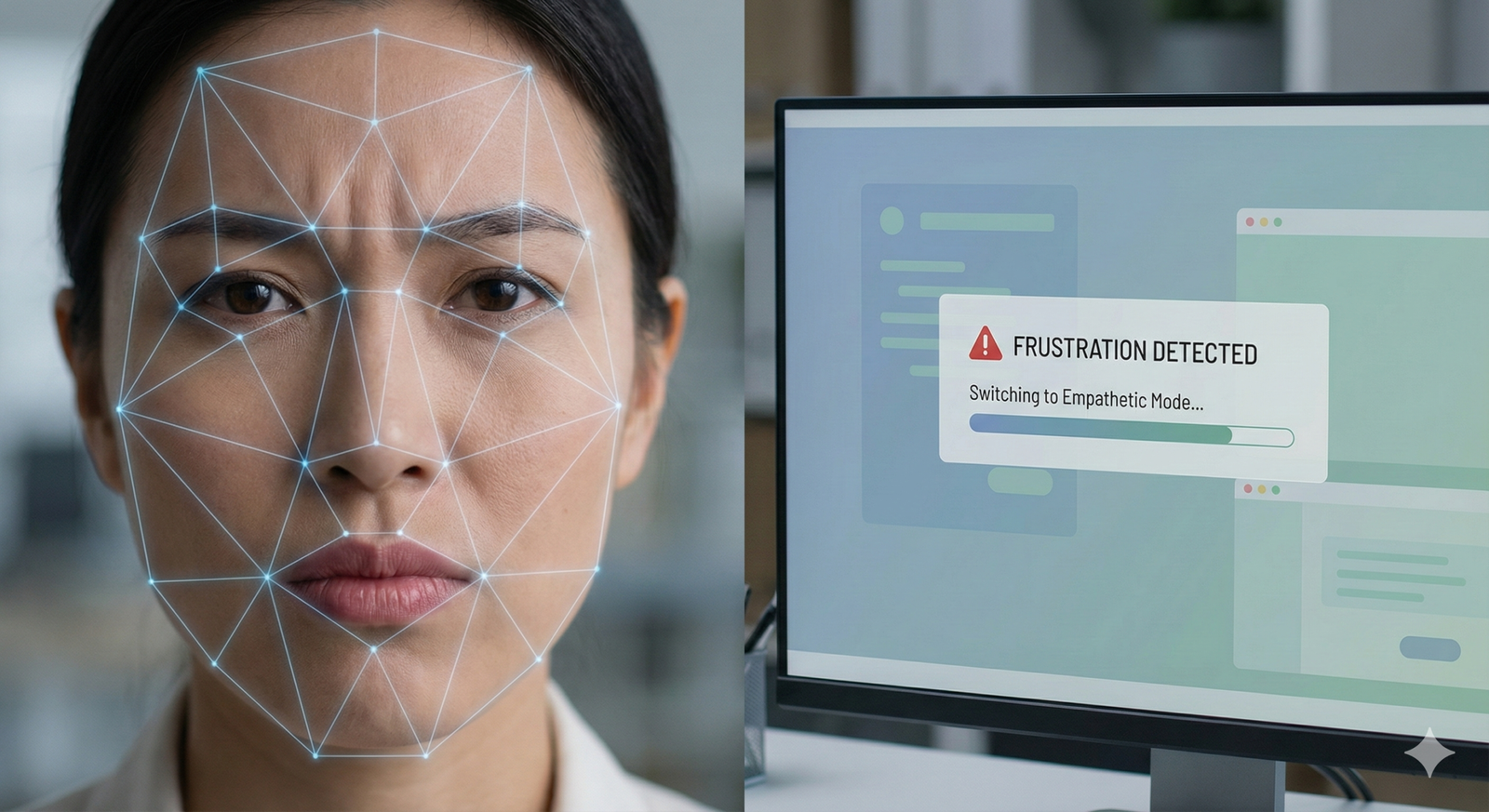

Smart cities: traffic analytics and adaptive signals

- Requirements: City-wide 5G coverage, edge nodes per district, secure camera onboarding.

- Steps: (1) Camera feeds → edge inference; (2) Publish congestion scores; (3) Retrain weekly with weather/events data.

- Metrics: Average delay at intersections, incident detection time.

- Common mistake: No privacy plan for PII; blur faces and license plates at the edge.

Healthcare: remote diagnostics (non-clinical guidance here)

- Requirements: 5G devices, secure edge, compliance guardrails.

- Steps: (1) On-device pre-screening; (2) Edge triage scoring; (3) Federated updates across clinics.

- Metrics: Time-to-triage, dropped-call rate during tele-sessions.

- Common mistake: Uploading raw patient imagery; keep PHI on device/edge where policies require.

(For clinical decisions, consult qualified professionals and your compliance team.)

Quick-start checklist

- One high-value, latency-sensitive use case chosen

- MEC access (public or on-prem) confirmed

- Edge node provisioned with container runtime and model server

- 5G SA connectivity tested; SIM identities enrolled

- Minimal observability: latency, accuracy, and drift dashboards

- Rollback plan for models and pipelines

- Security baseline: signed images, per-slice policies, encrypted transport

Troubleshooting & common pitfalls

- Latency looks fine in the lab, bad in production → Inspect radio conditions, handovers, and jitter; add buffering and adaptive bitrate for inputs.

- Edge nodes keep running out of memory → Cap batch sizes, enable mixed precision, and right-size containers.

- Model accuracy drops after deployment → Track input drift; schedule frequent mini-retrains with recent edge samples.

- FL rounds stall → Quarantine stragglers, lower client fraction per round, or introduce hierarchical aggregation.

- Video floods the network → Transcode at the edge; send only detections or cropped regions to the cloud.

- Slicing policies not respected → Verify SIM profiles and app-to-slice mapping; check if you’re on SA vs. NSA.

How to measure progress and results

- Speed: sensor→decision p50/p95 latency; inference time budget per model.

- Quality: precision/recall, business outcomes (scrap rate, mean time to repair, wait time).

- Reliability: slice SLA adherence, device online %, failover success rate.

- Efficiency: bandwidth saved vs. cloud-only, cost per inference, energy per inference.

- Learning health: model drift rate, FL round time, client participation.

Create a baseline in week 0, then track deltas weekly.
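Tracking deltas against the week-0 baseline can be as simple as the sketch below. The KPI names and values are invented; the only real design decision is recording, per KPI, whether lower or higher is better.

```python
def kpi_deltas(baseline, current, lower_is_better):
    """Percent change per KPI versus baseline, with an improved/regressed flag."""
    deltas = {}
    for name, base in baseline.items():
        if base == 0:
            raise ValueError(f"baseline for {name} is zero")
        change = (current[name] - base) / base * 100
        improved = change < 0 if name in lower_is_better else change > 0
        deltas[name] = {"change_pct": round(change, 1), "improved": improved}
    return deltas


baseline = {"p95_latency_ms": 120.0, "precision": 0.90, "egress_gb_per_day": 400.0}
week_3 = {"p95_latency_ms": 45.0, "precision": 0.93, "egress_gb_per_day": 80.0}

deltas = kpi_deltas(baseline, week_3, lower_is_better={"p95_latency_ms", "egress_gb_per_day"})
for name, result in deltas.items():
    print(name, result)
```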

A simple 4-week starter plan

Week 1 — Design & baseline

- Pick one use case and define success metrics and SLOs.

- Benchmark current network and inference latencies; collect a representative data slice.

- Secure MEC access (public or private) and provision one edge node.

Week 2 — Build a thin slice

- Containerize a small, quantized model; deploy to the edge with a single stream.

- Add observability for latency, accuracy, and resource use.

- Implement a read-only dashboard for stakeholders.

Week 3 — Harden & iterate

- Introduce a second stream and a simple canary; enable automatic rollback.

- Add a basic FL loop with two clients (or simulate) to validate the control plane.

- Segment traffic: put inference vs. bulk uploads on separate QoS classes or slices.

Week 4 — Prove value & plan scale

- Run A/B against the pre-5G/edge workflow; capture KPI deltas and costs.

- Conduct a failure-mode drill (edge node down, backhaul degraded).

- Draft a quarter-scale plan with budget, risks, and required roles.

FAQs

- Do I need 5G Standalone (SA) to benefit?

You can prototype on non-standalone, but SA is recommended for deterministic latency and full features like end-to-end slicing.

- When should I choose mmWave over sub-6 GHz?

Use mmWave in short-range hotspots that need multi-Gbps throughput, such as dense camera clusters; use sub-6 for broad coverage.

- Is edge inference always cheaper than cloud?

Not always. It reduces bandwidth and latency costs but adds edge hardware and ops. Model your total cost per inference and include personnel.

- How does 5G help federated learning?

Faster, more reliable uplinks reduce round time and straggler impact, improving convergence without centralizing raw data.

- Do I still need Wi-Fi?

Often yes. Many sites run a hybrid network: 5G for mobility and QoS-critical traffic; Wi-Fi for best-effort and indoor devices.

- What’s the easiest first use case?

One camera, one model, one decision (e.g., defect detection or occupancy counting) with a clear business KPI.

- How do I guarantee safety for robotics?

Place safety signaling and stop commands on a dedicated low-latency slice; include wired fallbacks and periodic failover drills.

- What about privacy?

Keep PII on device/edge, blur or tokenize at ingress, and use FL or secure aggregation for training.

- How do I avoid vendor lock-in?

Standardize on open model formats (ONNX), containerized deployments, and IaC for reproducibility across operators and clouds.

- Can I train at the edge too?

Yes, for small models or incremental updates. Heavy training still belongs in the cloud or data center; use the edge for fine-tuning.

- What if I don’t have MEC in my region?

Start with a micro-data center near the site on high-speed fiber, and use 5G for last-mile connectivity. Migrate to MEC when available.

- How do I measure if the network or the model is the bottleneck?

Instrument separately: time pre-process, inference, and post-process, and measure network hops. Optimize whichever dominates p95 latency.

Conclusion

5G isn’t just faster connectivity; it’s an architectural catalyst that pulls machine learning into the physical world. With low latency, high throughput, high device density, and programmable slices, you can move models closer to where data is born and decisions matter—on the line, in the street, at the bedside. Start small, measure aggressively, and iterate weekly. The organizations that master 5G-first ML will set the tempo for the next decade of intelligent products and operations.

Call to action: Pick one real-time use case today, stand up a single edge node, and ship your first 5G-powered model within four weeks.

References

- Minimum Technical Performance Requirements for IMT-2020 radio interface(s), ITU-R, 2017. https://www.itu.int/dms_pub/itu-r/opb/rep/R-REP-M.2410-2017-PDF-E.pdf

- Minimum Technical Performance Requirements for IMT-2020 (overview slides), ITU, 2016 (PDF). https://www.itu.int/en/ITU-R/study-groups/rsg5/rwp5d/imt-2020/Documents/S01-1_Requirements%20for%20IMT-2020_Rev.pdf

- Latency Analysis for Mobile Cellular Network uRLLC, TelSoc Journal, 2022 (PDF). https://telsoc.org/sites/default/files/journal_article/447-liang-article-v10n3pp39-57.pdf

- Dynamic Packet Duplication for Industrial URLLC, MDPI/PMC, 2022. https://pmc.ncbi.nlm.nih.gov/articles/PMC8777940/

- Multi-access Edge Computing (MEC) – Technology Overview, ETSI, accessed 2025. https://www.etsi.org/technologies/multi-access-edge-computing

- What is multi-access edge computing (MEC)?, Red Hat, 2022. https://www.redhat.com/en/topics/edge-computing/what-is-multi-access-edge-computing

- Ericsson Mobility Report – November 2024, Ericsson, 2024. https://www.ericsson.com/en/reports-and-papers/mobility-report/reports/november-2024

- Mobile Subscriptions Outlook (data/forecasts), Ericsson, accessed 2025. https://www.ericsson.com/en/reports-and-papers/mobility-report/dataforecasts/mobile-subscriptions-outlook

- Ericsson Mobility Report – Read the latest edition (live highlights), Ericsson, accessed 2025. https://www.ericsson.com/en/reports-and-papers/mobility-report

- 5G Network Slicing (explainer), GSMA, accessed 2025. https://www.gsma.com/solutions-and-impact/technologies/networks/5g-network-technologies-and-solutions/5g-standalone-networks/network-slicing/

- An Introduction to Network Slicing (white paper), GSMA, 2017 (PDF). https://www.gsma.com/futurenetworks/wp-content/uploads/2017/11/GSMA-An-Introduction-to-Network-Slicing.pdf

- NG.135 E2E Network Slicing Requirements, GSMA, 2024 (PDF). https://www.gsma.com/newsroom/wp-content/uploads//NG.135-v4.0.pdf

- 5G Network Slice Management, 3GPP, 2023. https://www.3gpp.org/technologies/slice-management

- Release 18 (5G-Advanced) Overview, 3GPP, accessed 2025. https://www.3gpp.org/specifications-technologies/releases/release-18

- An Overview of AI in 3GPP’s RAN Release 18, IEEE Communications Technology News, 2025. https://www.comsoc.org/publications/ctn/overview-ai-3gpps-ran-release-18-enhancing-next-generation-connectivity

- 5G: NR Frequency Ranges (FR1/FR2) – ETSI 5G Overview, ETSI, accessed 2025. https://www.etsi.org/technologies/5g