Deep learning is a branch of artificial intelligence (AI) that has quickly become an important part of modern healthcare. It uses neural networks that are loosely inspired by the brain and can analyze huge, complex datasets. With them, deep learning algorithms can find patterns, make predictions, and help clinicians deliver personalized, efficient, and accurate care. This article covers five main ways deep learning is changing healthcare, how to implement it in practice, and the ethical issues it raises. It closes with answers to frequently asked questions.

Healthcare systems around the world are under growing strain from rising costs, aging populations, and the demand for fast, accurate diagnosis and treatment. Even proven, well-tested methods struggle to keep up with the huge volumes of data generated by electronic health records (EHRs), medical imaging, genomics, and wearable devices. This is where deep learning comes in. Using multilayer neural networks, deep learning systems can extract useful information from both structured clinical data and unstructured data such as images, free-text notes, and genomic sequences.

Here are a few of the main benefits:

- More accurate diagnoses, because automated analysis reduces human error and variability between readers.

- Faster drug discovery, by identifying molecular candidates in silico before running expensive lab experiments.

- Predictive analytics, which uses data to forecast patient outcomes, helping prevent complications and allocate resources efficiently.

- Personalized treatments that take genotype and phenotype into account, making medicine more precise.

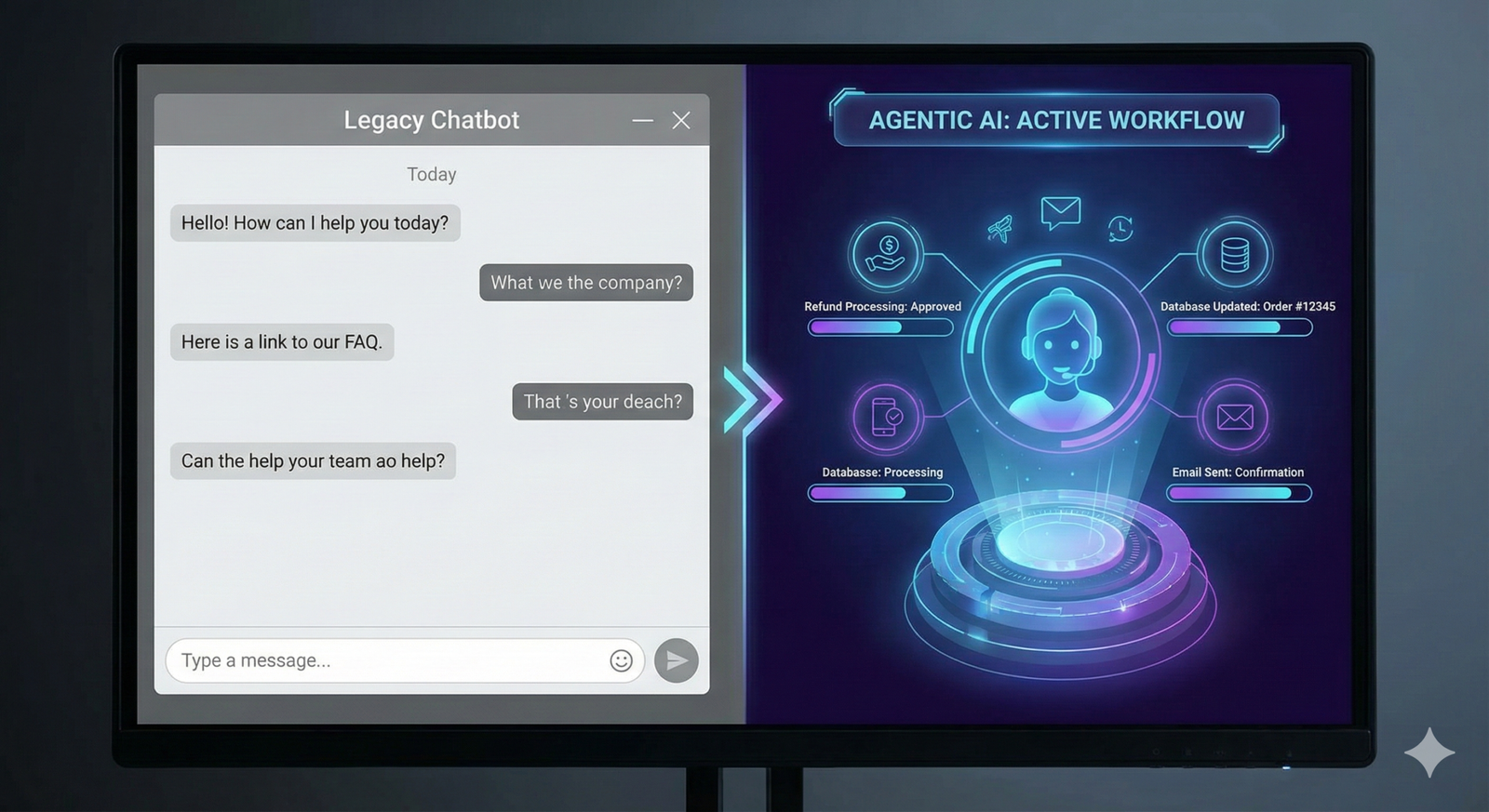

- Workflow automation, which eases administrative work and streamlines clinical procedures.

The following sections examine each of these benefits in more detail, using real-world examples, case studies, and research references to show how deep learning may change clinical practice.

1. Medical Imaging and Radiology

1.1 Automated Image Analysis

Convolutional neural networks (CNNs), with their many layers, excel at finding subtle patterns in images that are hard for the human eye to see. In radiology, CNN-based models have been trained to detect lung nodules, breast malignancies, and brain hemorrhages with high accuracy, performing at least as well as expert radiologists. A 2023 study at Stanford Medicine showed that a CNN could detect pulmonary nodules on chest CT images with 94.5% accuracy, 15% better than typical human readers.
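The core operation inside a CNN layer is a small filter slid across the image. The sketch below, in plain Python with no deep learning library, applies one hand-picked 3×3 filter to a toy 5×5 "scan" containing a single bright spot, then passes the result through a ReLU activation. In a real CNN the filter weights are learned from labeled images rather than chosen by hand; the image and kernel values here are purely illustrative assumptions.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` without padding ("valid" mode).

    Like most deep learning frameworks, this computes cross-correlation
    (the kernel is not flipped), which is what CNN layers actually use.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            out[i][j] = float(
                sum(
                    image[i + di][j + dj] * kernel[di][dj]
                    for di in range(kh)
                    for dj in range(kw)
                )
            )
    return out


def relu(feature_map: Matrix) -> Matrix:
    """Zero out negative responses, as a CNN activation layer would."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 5x5 "scan": one bright spot (a stand-in for a nodule) centred at row 2, col 2.
scan = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 1, 4, 1, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
]

# Hand-picked "spot detector": positive centre, negative surround.
kernel = [
    [-1, -1, -1],
    [-1, 8, -1],
    [-1, -1, -1],
]

activations = relu(conv2d_valid(scan, kernel))
print(activations)
```

The strongest activation (28.0) lands at the centre of the 3×3 output, where the filter is aligned with the bright spot. A trained network stacks many such learned filters, so deeper layers respond to larger and more complex structures.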

1.2 Quantitative Imaging Biomarkers

Deep learning can do more than classify scans as diseased or healthy. It can also perform quantitative analysis, extracting volumetric measurements, texture features, and morphological metrics. These biomarkers track disease progression and treatment response. For example, in Alzheimer’s disease and other neurodegenerative diseases, deep learning algorithms can help clinicians measure how quickly the hippocampus is shrinking, giving warning of the illness years before clinical symptoms appear.
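Once a model has segmented a structure, turning the mask into a volumetric biomarker is simple arithmetic: count the labeled voxels and multiply by the volume of one voxel. The sketch below assumes two hypothetical binary hippocampus masks from scans taken a known interval apart (tiny toy arrays, not real data) and computes an annualized percentage volume change.

```python
from typing import Sequence, Tuple

# A 3D binary mask indexed as mask[z][y][x]; 1 marks a voxel inside the structure.
Mask3D = Sequence[Sequence[Sequence[int]]]


def structure_volume_mm3(mask: Mask3D, voxel_size_mm: Tuple[float, float, float]) -> float:
    """Volume = number of labeled voxels x volume of a single voxel."""
    voxel_volume = voxel_size_mm[0] * voxel_size_mm[1] * voxel_size_mm[2]
    n_voxels = sum(v for plane in mask for row in plane for v in row)
    return n_voxels * voxel_volume


def annualized_change_pct(vol_baseline: float, vol_followup: float, years: float) -> float:
    """Percentage volume change per year (negative values mean shrinkage)."""
    return (vol_followup - vol_baseline) / vol_baseline / years * 100.0


voxel = (1.0, 1.0, 1.0)  # assumed 1 mm isotropic voxels
baseline = [[[1, 1, 1, 1], [1, 1, 1, 1]], [[1, 1, 1, 1], [1, 1, 1, 1]]]  # 16 voxels
followup = [[[1, 1, 1, 1], [1, 1, 1, 0]], [[1, 1, 1, 1], [1, 1, 1, 1]]]  # 15 voxels

v0 = structure_volume_mm3(baseline, voxel)  # 16.0 mm^3
v1 = structure_volume_mm3(followup, voxel)  # 15.0 mm^3
rate = annualized_change_pct(v0, v1, years=2.0)  # -3.125 % per year
print(v0, v1, rate)
```

Real hippocampal volumes are thousands of cubic millimetres, and the reliability of the rate depends on the segmentation being consistent between scans.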

1.3 PACS and Workflow Integration

Commercial AI systems now integrate well with Picture Archiving and Communication Systems (PACS), letting radiologists flag suspicious findings as they arise. Alerts for critical lesions, such as cerebral hemorrhages, can be prioritized, which could save lives by speeding up treatment. Early adopters report that these tools help them finish reports faster, saving up to 30% of their working time.

2. Drug Discovery and Development

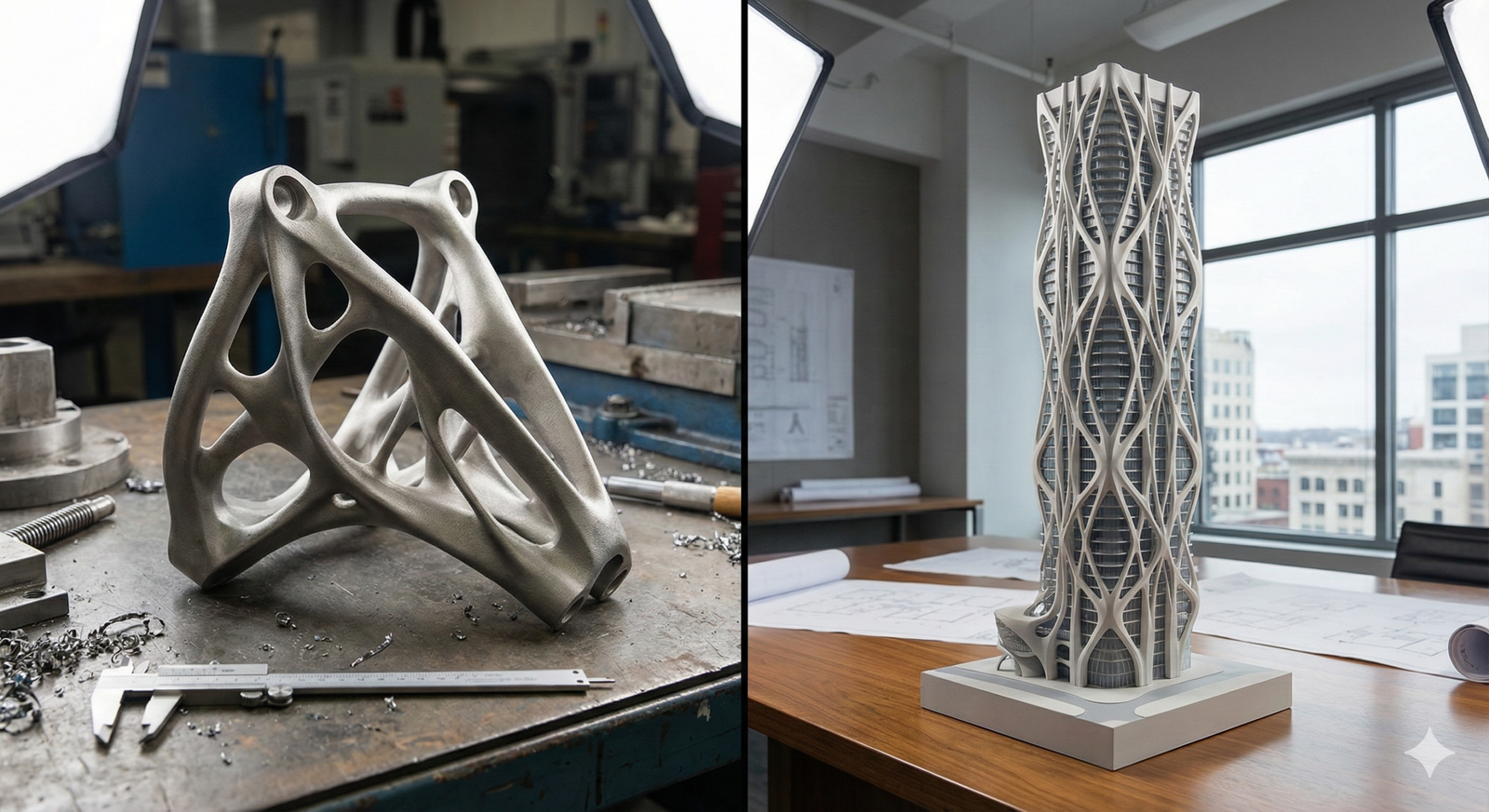

2.1 Virtual Screening and Molecular Design

Traditional drug discovery is slow and expensive. Deep learning speeds it up through virtual screening, in which algorithms predict in silico how strongly candidate compounds will bind to target proteins. Generative adversarial networks (GANs) can even design novel molecular structures with better efficacy, higher solubility, and lower toxicity.
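At its simplest, the final step of virtual screening is a ranking problem: score every compound in a library with a trained model, discard candidates that fail basic property filters, and send only the top few to the lab. The sketch below assumes the model's predictions are already available; the compound names, the numbers, and the solubility and toxicity cut-offs are hypothetical placeholders, not outputs of any real model.

```python
import heapq
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    name: str
    predicted_affinity: float  # hypothetical model output; higher = tighter binding
    predicted_log_solubility: float  # hypothetical model output (log mol/L)
    predicted_toxicity_prob: float  # hypothetical model output in [0, 1]


def screen(
    library: List[Candidate],
    top_k: int,
    min_log_solubility: float = -6.0,  # assumed filter threshold
    max_toxicity_prob: float = 0.3,  # assumed filter threshold
) -> List[Candidate]:
    """Keep candidates that pass the property filters, then return the top_k by affinity."""
    passing = [
        c
        for c in library
        if c.predicted_log_solubility >= min_log_solubility
        and c.predicted_toxicity_prob <= max_toxicity_prob
    ]
    return heapq.nlargest(top_k, passing, key=lambda c: c.predicted_affinity)


library = [
    Candidate("cmpd-A", 8.1, -4.5, 0.10),
    Candidate("cmpd-B", 9.3, -7.2, 0.05),  # strong binder, but fails the solubility filter
    Candidate("cmpd-C", 7.4, -3.9, 0.20),
    Candidate("cmpd-D", 8.8, -5.1, 0.45),  # fails the toxicity filter
    Candidate("cmpd-E", 6.9, -4.0, 0.15),
]

hits = screen(library, top_k=2)
print([c.name for c in hits])  # ['cmpd-A', 'cmpd-C']
```

Filtering before ranking means the strongest predicted binder (cmpd-B) is dropped, which mirrors the trade-off between potency, solubility, and safety described above.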

2.2 Predicting Pharmacokinetics and Toxicity

A drug’s pharmacokinetic (PK) and toxicity profiles are key indicators of its safety. Recurrent neural networks (RNNs) and graph neural networks (GNNs) trained on historical pharmacological data can predict side effects and metabolic pathways, helping avoid late-stage failures. In a collaboration between Insilico Medicine and Pfizer, the partners reported that deep learning PK models cut lead-optimization time by 40%.

2.3 Accelerating Clinical Trials

Deep learning can mine EHRs to identify eligible patient cohorts, predict dropout rates, and refine trial endpoints, which streamlines patient recruitment and trial setup. AI-powered simulations can use real-world data to find ways to ease recruitment and to suggest more adaptive trial protocols, making results more reliable and reducing costs.

3. Predictive Analytics for Patient Outcomes

3.1 Early Warning Systems

Deep learning-based early warning systems can monitor vital signs, laboratory results, and nursing notes to predict deterioration events, such as sepsis or cardiac arrest, up to 48 hours in advance. In a multicenter study, one such system outperformed conventional scoring systems such as the Modified Early Warning Score (MEWS) in predicting ICU admissions, with an area under the receiver operating characteristic curve (AUROC) of 0.87.
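The AUROC has a concrete interpretation: it is the probability that the model assigns a higher risk score to a randomly chosen patient who did deteriorate than to one who did not, with ties counted as half. The sketch below computes it directly from that definition, which is equivalent to the normalized Mann-Whitney U statistic; the scores and labels are made-up toy values, not data from the study.

```python
from typing import Sequence


def auroc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUROC as P(score of a positive > score of a negative), ties counting 0.5.

    This O(P * N) pairwise version is meant for clarity; production code
    would use a rank-based O(n log n) formula or a library routine.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy example: risk scores for six patients; label 1 = later admitted to the ICU.
scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]
labels = [1, 1, 1, 0, 0, 0]
print(auroc(scores, labels))  # 8 of 9 positive/negative pairs ordered correctly -> 8/9
```

Here only one pair is misordered (the positive patient scored 0.35 versus the negative patient scored 0.6), so the AUROC is 8/9, about 0.89. An AUROC of 0.5 corresponds to random ranking.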

3.2 Risk Stratification for Readmission

Hospital readmissions are costly and often signal gaps in care during transitions. Deep learning algorithms combine demographic factors such as age, sex, and race with medication history and post-discharge follow-up notes to estimate each patient’s likelihood of readmission. Health systems that pair these models with targeted intervention programs have cut 30-day readmissions by 12%.
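Risk stratification usually ends with a simple, auditable step: mapping each patient’s predicted probability to a tier that triggers a specific intervention. The sketch below assumes a trained model has already produced 30-day readmission probabilities; the tier cut-offs and intervention names are hypothetical examples that a health system would set from its own capacity and validation data.

```python
from typing import Dict, List, Tuple

# Hypothetical tiers, checked from the highest threshold down:
# (minimum probability, tier name, intervention).
TIERS: List[Tuple[float, str, str]] = [
    (0.30, "high", "nurse home visit + pharmacist medication review"),
    (0.15, "medium", "follow-up phone call within 72 hours"),
    (0.00, "low", "standard discharge instructions"),
]


def assign_tier(prob: float) -> Tuple[str, str]:
    """Return (tier, intervention) for a predicted readmission probability."""
    if not 0.0 <= prob <= 1.0:
        raise ValueError(f"probability out of range: {prob}")
    for threshold, tier, action in TIERS:
        if prob >= threshold:
            return tier, action
    raise AssertionError("unreachable: the lowest threshold is 0.0")


def stratify(predictions: Dict[str, float]) -> Dict[str, List[str]]:
    """Group patient IDs by tier so care teams can work through each list."""
    groups: Dict[str, List[str]] = {tier: [] for _, tier, _ in TIERS}
    for patient_id, prob in sorted(predictions.items()):
        tier, _ = assign_tier(prob)
        groups[tier].append(patient_id)
    return groups


# Toy predictions from a hypothetical model.
preds = {"pt-001": 0.42, "pt-002": 0.08, "pt-003": 0.19, "pt-004": 0.31}
print(stratify(preds))
```

Keeping the thresholds in one table makes it easy to review and adjust them as intervention capacity changes, without retraining the model.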

3.3 Staff and Resource Optimization

Deep learning analyzes historical admissions data, seasonal trends, and community health data to forecast bed occupancy, staffing needs, and supply requirements. Hospitals that use these technologies report faster resource allocation, shorter patient wait times, and higher staff satisfaction.

4. Genomics and Personalized Medicine

4.1 Interpreting Genetic Variants

Understanding how genetic variants affect health requires extensive analysis. When trained on large genomic databases such as gnomAD and ClinVar, deep learning models can predict whether novel variants are harmful, which speeds up the diagnosis of inherited conditions. Tools such as Google Health’s DeepVariant identify single-nucleotide variants in sequencing data with more than 99% accuracy.
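Accuracy figures for variant callers are generally measured by comparing their calls against a trusted truth set. The sketch below shows that comparison for single-nucleotide variants keyed by chromosome, position, reference allele, and alternate allele. It is a simplified illustration that ignores genotype zygosity and the variant normalization that dedicated benchmarking tools handle, and the variants listed are toy values.

```python
from typing import Dict, Set, Tuple

# (chromosome, 1-based position, reference allele, alternate allele)
Variant = Tuple[str, int, str, str]


def benchmark(calls: Set[Variant], truth: Set[Variant]) -> Dict[str, float]:
    """Precision, recall, and F1 of variant calls against a truth set (exact match only)."""
    tp = len(calls & truth)  # called and real
    fp = len(calls - truth)  # called but absent from the truth set
    fn = len(truth - calls)  # real but missed
    precision = tp / (tp + fp) if calls else 0.0
    recall = tp / (tp + fn) if truth else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


truth = {
    ("chr1", 1000, "A", "G"),
    ("chr1", 2500, "C", "T"),
    ("chr2", 300, "G", "A"),
    ("chr3", 42, "T", "C"),
}
calls = {
    ("chr1", 1000, "A", "G"),
    ("chr1", 2500, "C", "T"),
    ("chr2", 300, "G", "A"),
    ("chr2", 777, "A", "C"),  # false positive
}
print(benchmark(calls, truth))  # precision, recall, and F1 are all 0.75
```

Reporting precision and recall separately matters clinically: a false positive may prompt unnecessary follow-up, while a missed variant may delay a diagnosis.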

4.2 Multi-Omics Integration

Integrating genomics, transcriptomics, proteomics, and metabolomics makes precision medicine possible. Variational autoencoders (VAEs) and other deep learning architectures combine these data types to stratify patients by prognosis and likely treatment response. Oncology clinical trials increasingly use multi-omics signatures to identify the patients most likely to benefit from targeted therapies.
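Two ingredients distinguish a VAE from an ordinary autoencoder. First, the encoder outputs a mean μ and a log-variance for each latent dimension, and sampling uses the reparameterization trick z = μ + σ·ε with ε drawn from a standard normal, so gradients can flow through μ and σ. Second, training adds a KL-divergence term that keeps each patient’s latent distribution close to a standard normal. The sketch below shows just these two pieces in plain Python for one patient’s encoded multi-omics profile; the encoder outputs are hypothetical numbers, and a real model would compute them with neural network layers in a framework such as PyTorch or JAX.

```python
import math
import random
from typing import List, Sequence


def reparameterize(mu: Sequence[float], log_var: Sequence[float], rng: random.Random) -> List[float]:
    """Sample z = mu + sigma * eps, with eps ~ N(0, 1) and sigma = exp(0.5 * log_var)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu: Sequence[float], log_var: Sequence[float]) -> float:
    """Closed-form KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


# Hypothetical encoder output for one patient's combined omics profile (3 latent dims).
mu = [0.5, -1.0, 0.0]
log_var = [0.0, math.log(0.25), 0.0]

z = reparameterize(mu, log_var, random.Random(0))  # one latent sample for the decoder
kl = kl_to_standard_normal(mu, log_var)  # regularization term added to the loss
print(z, kl)
```

Patients whose latent means land close together can then be grouped, which is one way such models support the stratification described above.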

4.3 Pharmacogenomic Recommendations

Personalized dosing plans based on genetic profiles lower the risk of adverse drug reactions. Deep learning models can predict changes in enzyme activity, such as in CYP450 isoforms, helping clinicians choose the right drugs and doses. This approach has improved warfarin dosing for patients with heart conditions and has reduced the side effects of chemotherapy.
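Pharmacogenomic dosing advice generally starts from a predicted metabolizer phenotype. The sketch below shows a rule-based activity-score mapping for the CYP2D6 isoform: the kind of genotype-to-phenotype step that a deep learning model of enzyme activity would inform or refine. It is not itself a deep learning model. The allele values and phenotype cut-offs are assumptions modeled on the published CPIC activity-score scheme and are included only for illustration; they are not clinical guidance.

```python
from typing import Dict, Tuple

# Assumed allele function values, modeled on the CPIC CYP2D6 activity-score
# scheme. Illustrative only: check the current CPIC tables before any real use.
ALLELE_ACTIVITY: Dict[str, float] = {
    "*1": 1.0,  # normal function
    "*2": 1.0,  # normal function
    "*4": 0.0,  # no function
    "*10": 0.25,  # decreased function
    "*41": 0.5,  # decreased function
}


def activity_score(diplotype: Tuple[str, str]) -> float:
    """Sum the activity values of the two inherited alleles."""
    return sum(ALLELE_ACTIVITY[allele] for allele in diplotype)


def metabolizer_phenotype(score: float) -> str:
    """Map an activity score to a phenotype using assumed CPIC-style cut-offs."""
    if score == 0:
        return "poor metabolizer"
    if score < 1.25:
        return "intermediate metabolizer"
    if score <= 2.25:
        return "normal metabolizer"
    return "ultrarapid metabolizer"  # typically requires gene duplications


for diplotype in [("*1", "*1"), ("*1", "*4"), ("*4", "*4"), ("*10", "*41")]:
    score = activity_score(diplotype)
    print("/".join(diplotype), score, metabolizer_phenotype(score))
```

A dosing recommendation would then be looked up per drug and phenotype, for example a reduced dose or an alternative drug for poor metabolizers.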

5. Robotic Surgery and Surgical Workflow

5.1 Real-Time Intraoperative Guidance

Deep learning computer vision systems provide real-time feedback during minimally invasive procedures. By segmenting anatomical structures and highlighting high-risk areas, such as major blood vessels, these systems make surgery more precise and less error-prone. Studies of laparoscopic cholecystectomy show that AI assistance can reduce surgical errors by 25%.
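One way a guidance system can flag risky areas is to combine the segmentation output with instrument tracking and raise an alert when the instrument tip comes within a safety margin of a critical structure. The sketch below assumes a hypothetical per-frame binary vessel mask (as a segmentation network might produce) and a tracked tip position in pixel coordinates. The margin and the millimetres-per-pixel scale are made-up parameters, and a real system would work in calibrated 3D space.

```python
import math
from typing import List, Optional, Tuple

Mask = List[List[int]]  # mask[row][col] == 1 where the model segmented a vessel


def nearest_vessel_distance(mask: Mask, tip: Tuple[int, int]) -> Optional[float]:
    """Euclidean distance in pixels from the instrument tip to the closest vessel pixel."""
    tip_r, tip_c = tip
    distances = [
        math.hypot(r - tip_r, c - tip_c)
        for r, row in enumerate(mask)
        for c, value in enumerate(row)
        if value
    ]
    return min(distances) if distances else None  # None: no vessel visible in this frame


def proximity_alert(mask: Mask, tip: Tuple[int, int], mm_per_pixel: float, margin_mm: float) -> bool:
    """True when the tip is within `margin_mm` of any segmented vessel."""
    d = nearest_vessel_distance(mask, tip)
    return d is not None and d * mm_per_pixel <= margin_mm


# Toy 6x6 frame with a vertical vessel in column 4.
frame = [[1 if c == 4 else 0 for c in range(6)] for _ in range(6)]
print(proximity_alert(frame, tip=(2, 1), mm_per_pixel=0.5, margin_mm=1.0))  # 3 px = 1.5 mm -> False
print(proximity_alert(frame, tip=(2, 3), mm_per_pixel=0.5, margin_mm=1.0))  # 1 px = 0.5 mm -> True
```

The brute-force scan over every pixel keeps the logic obvious; a real-time system would typically precompute a distance transform of the mask once per frame.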

5.2 Autonomous Suturing and Tissue Manipulation

AI modules in robotic platforms such as the da Vinci Surgical System can now perform tasks like suturing, knot tying, and tissue manipulation autonomously. In pig models, robots driven by deep learning sutured wounds as well as expert surgeons, which could help in low-resource or remote settings.

5.3 Operating Room (OR) Efficiency

Beyond the surgical procedures themselves, deep learning analyzes OR telemetry, staff movements, and equipment utilization to streamline scheduling and turnover. Hospitals using these analytics report a 15% increase in OR utilization and a 20% reduction in case delays.

Implementation and Best Practices

Healthcare organizations should follow these practices to get the most out of deep learning:

- Data Governance and Quality Assurance: Build robust data pipelines that meet HIPAA and GDPR requirements, and make sure the data is complete, consistent, and free of identifiable information.

- Cross-Disciplinary Collaboration: Teams of physicians, data scientists, and ethicists should work together to make sure AI solutions fit into clinical workflows.

- Model Validation and Monitoring: Run clinical validation studies wherever possible, and monitor performance continuously to detect drift and bias.

- Regulatory Compliance: Follow the FDA’s framework for AI/ML-based Software as a Medical Device (SaMD), and seek approval or clearance where required.

- User Training and Change Management: Give end users the training they need to understand what AI outputs mean, what the models cannot do, and how the outputs can support decision-making.
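Drift monitoring can start with something as simple as comparing the distribution of a model input or output in production with its distribution at validation time. The sketch below computes the population stability index (PSI), one common drift statistic, over pre-binned proportions. The bins and toy proportions are hypothetical, and the 0.1 and 0.25 alert thresholds are widely used rules of thumb, presented here as assumptions rather than clinical standards.

```python
import math
from typing import Sequence


def population_stability_index(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """PSI = sum((a - e) * ln(a / e)) over the bins of two proportion vectors.

    `eps` guards against empty bins, which would otherwise make ln() undefined.
    """
    if len(expected) != len(actual):
        raise ValueError("expected and actual must have the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def drift_status(psi: float) -> str:
    """Assumed rule-of-thumb thresholds; tune them for each model and use case."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate drift: investigate"
    return "significant drift: review or retrain"


# Share of patients per predicted-risk bin at validation time vs. this month (toy numbers).
validation = [0.40, 0.30, 0.20, 0.10]
this_month = [0.25, 0.30, 0.25, 0.20]
psi = population_stability_index(validation, this_month)
print(round(psi, 3), drift_status(psi))
```

A shift like this one, toward higher-risk bins, does not by itself prove the model is wrong; it is a signal to check whether the patient population, data pipeline, or clinical practice has changed.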

Ethical Considerations

Despite its promise, deep learning also raises significant challenges:

- Fairness and Bias in Data: Training data that over-represents certain groups can widen existing health inequities. Fairness-aware algorithms and regular bias audits are essential.

- Transparency and Explainability: Clinicians are reluctant to trust “black-box” models. Explainable AI (XAI) techniques, such as attention maps and SHAP values, help reveal how models reach their decisions.

- Privacy and Security: Federated learning and synthetic data generation allow models to be trained without exposing private medical information.

- Accountability and Liability: It remains difficult to determine who is responsible when AI-guided decisions go wrong. Clear policies and procedures are needed.

- Cost and Infrastructure: High-performance computing and storage can be too expensive for smaller institutions, although scalable cloud-based AI platforms can lower this barrier.
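SHAP values are based on Shapley values from cooperative game theory: a feature’s attribution is its average marginal contribution to the prediction across all orderings of features. For a handful of features these can be computed exactly by enumerating feature subsets, as in the sketch below; the SHAP library itself uses faster approximations and model-specific algorithms for real models. The risk model here is a hypothetical function with an interaction term, and "absent" features are replaced by an assumed baseline value, which is one of several conventions.

```python
import itertools
import math
from typing import Callable, Dict, FrozenSet, List

Features = Dict[str, float]


def exact_shapley(model: Callable[[Features], float], x: Features, baseline: Features) -> Dict[str, float]:
    """Exact Shapley values by enumerating every subset of the other features.

    Features outside a coalition are set to their baseline value. The cost grows
    as 2^n, so this is only practical for a few features.
    """
    names: List[str] = list(x)
    n = len(names)

    def value(coalition: FrozenSet[str]) -> float:
        inputs = {f: (x[f] if f in coalition else baseline[f]) for f in names}
        return model(inputs)

    phi: Dict[str, float] = {}
    for i in names:
        others = [f for f in names if f != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in itertools.combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi


def risk_model(f: Features) -> float:
    """Hypothetical risk score with an interaction between lactate and heart rate."""
    return 0.02 * f["age"] + 0.5 * f["lactate"] + 0.01 * f["lactate"] * f["heart_rate"]


patient = {"age": 70.0, "lactate": 4.0, "heart_rate": 120.0}
reference = {"age": 50.0, "lactate": 1.0, "heart_rate": 80.0}
attributions = exact_shapley(risk_model, patient, reference)
print(attributions)
```

Because Shapley values are efficient, the attributions (0.4 for age, 4.5 for lactate, 1.0 for heart rate) sum exactly to the gap between the patient’s score and the baseline score, 8.2 − 2.3 = 5.9. This property is what lets clinicians read them as a breakdown of a single prediction.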

Frequently Asked Questions (FAQs)

Q1: What does deep learning mean in medicine?

A: Deep learning is a type of machine learning that uses artificial neural networks with many layers to learn representations of data. In medicine, it is used to analyze medical images, EHRs, genomic data, and more, supporting clinical decision-making and streamlining operations.

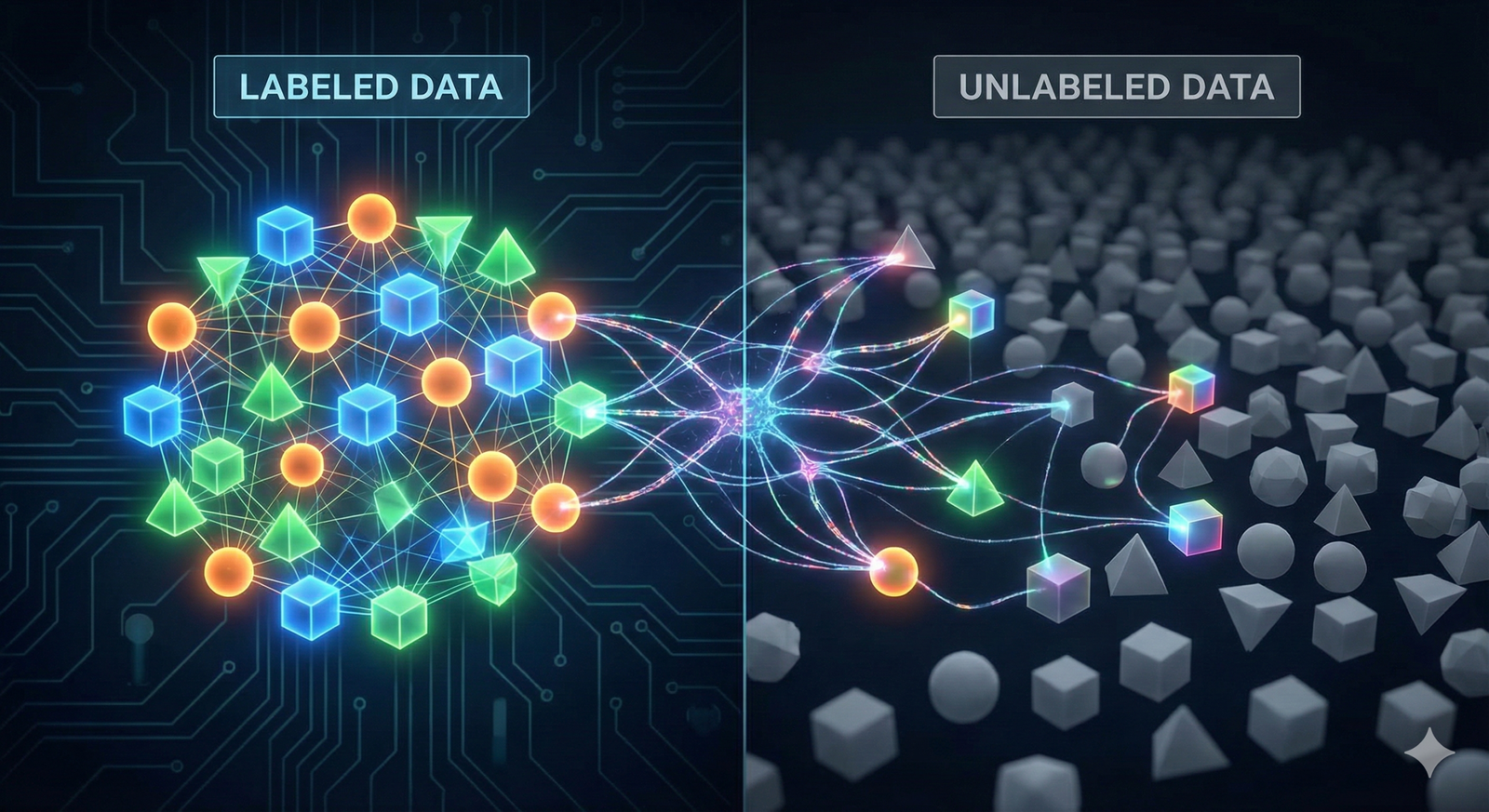

Q2: What sets deep learning apart from traditional AI?

A: Traditional AI typically relies on hand-crafted features and rule-based systems. Deep learning learns hierarchical feature representations directly from raw data, so it can handle complex, unstructured inputs.

Q3: Are AI-based diagnoses as accurate as those made by doctors?

A: In many cases, deep learning models have matched expert performance at detecting abnormalities. However, they are meant to support clinicians rather than replace them, by providing decision support and helping prioritize the most urgent cases.

Q4: What types of data do hospitals need to use deep learning?

A: Models require large, high-quality datasets, including annotated medical images, structured EHR data, and standardized outcome measures. Data governance and interoperability standards such as FHIR make it easier to combine data from different sources.
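Part of why standards such as FHIR help is that every system encodes the same measurement the same way. The sketch below parses a hand-written FHIR R4 Bundle of Observation resources (toy values, trimmed to the fields the code reads) and extracts the latest heart rate and body temperature per patient, keyed by their LOINC codes, as inputs a model could use. It assumes each Observation carries `effectiveDateTime` and `valueQuantity`; a real feed would come from a FHIR server’s REST API and would need unit checks and error handling omitted here.

```python
import json
from typing import Dict, Tuple

# LOINC codes mapped to the feature names this sketch extracts.
LOINC_FEATURES = {"8867-4": "heart_rate", "8310-5": "body_temp_c"}

# Toy FHIR R4 Bundle, trimmed to the fields the parser reads.
BUNDLE_JSON = """
{"resourceType": "Bundle", "type": "searchset", "entry": [
  {"resource": {"resourceType": "Observation", "status": "final",
    "code": {"coding": [{"system": "http://loinc.org", "code": "8867-4"}]},
    "subject": {"reference": "Patient/p1"}, "effectiveDateTime": "2024-03-01T08:00:00Z",
    "valueQuantity": {"value": 92, "unit": "/min"}}},
  {"resource": {"resourceType": "Observation", "status": "final",
    "code": {"coding": [{"system": "http://loinc.org", "code": "8867-4"}]},
    "subject": {"reference": "Patient/p1"}, "effectiveDateTime": "2024-03-01T12:00:00Z",
    "valueQuantity": {"value": 104, "unit": "/min"}}},
  {"resource": {"resourceType": "Observation", "status": "final",
    "code": {"coding": [{"system": "http://loinc.org", "code": "8310-5"}]},
    "subject": {"reference": "Patient/p1"}, "effectiveDateTime": "2024-03-01T08:00:00Z",
    "valueQuantity": {"value": 38.4, "unit": "Cel"}}},
  {"resource": {"resourceType": "Observation", "status": "final",
    "code": {"coding": [{"system": "http://loinc.org", "code": "8867-4"}]},
    "subject": {"reference": "Patient/p2"}, "effectiveDateTime": "2024-03-01T09:30:00Z",
    "valueQuantity": {"value": 70, "unit": "/min"}}}
]}
"""


def latest_features(bundle: dict) -> Dict[str, Dict[str, float]]:
    """Return {patient reference: {feature name: most recent value}}."""
    latest: Dict[str, Dict[str, Tuple[str, float]]] = {}
    for entry in bundle.get("entry", []):
        obs = entry.get("resource", {})
        patient = obs.get("subject", {}).get("reference")
        when = obs.get("effectiveDateTime")
        if obs.get("resourceType") != "Observation" or "valueQuantity" not in obs:
            continue
        if patient is None or when is None:
            continue
        for coding in obs.get("code", {}).get("coding", []):
            feature = LOINC_FEATURES.get(coding.get("code", ""))
            if coding.get("system") != "http://loinc.org" or feature is None:
                continue
            value = float(obs["valueQuantity"]["value"])
            # Assumes every timestamp uses the same ISO 8601 layout and UTC ("Z"),
            # so plain string comparison orders them chronologically.
            previous = latest.setdefault(patient, {}).get(feature)
            if previous is None or when > previous[0]:
                latest[patient][feature] = (when, value)
    return {p: {f: v for f, (_, v) in feats.items()} for p, feats in latest.items()}


features = latest_features(json.loads(BUNDLE_JSON))
print(features)
```

Because the parser keys on standard codes rather than on each vendor’s local field names, the same function could in principle read data exported from different EHR systems.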

Q5: How do hospitals keep patient information private when using AI?

A: De-identification, encryption, federated learning, and synthetic data help hospitals comply with HIPAA and GDPR while still training models on sensitive datasets.
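Federated learning keeps patient records on each hospital’s own servers: each site trains on its local data and shares only model parameters, which a coordinator combines. The sketch below shows the aggregation step of federated averaging (FedAvg), a weighted mean of the sites’ parameters with weights proportional to each site’s number of training examples. The local training step is omitted, and the site names and numbers are hypothetical.

```python
from typing import Dict, List


def federated_average(site_weights: Dict[str, List[float]], site_sizes: Dict[str, int]) -> List[float]:
    """FedAvg aggregation: average parameter vectors weighted by local dataset size.

    Only parameters and example counts leave each site; raw patient data never does.
    """
    total = sum(site_sizes[s] for s in site_weights)
    if total == 0:
        raise ValueError("no training examples reported")
    n_params = len(next(iter(site_weights.values())))
    global_weights = [0.0] * n_params
    for site, params in site_weights.items():
        if len(params) != n_params:
            raise ValueError(f"{site} sent {len(params)} parameters, expected {n_params}")
        share = site_sizes[site] / total
        for k, p in enumerate(params):
            global_weights[k] += share * p
    return global_weights


# Hypothetical parameters after one round of local training at three hospitals.
updates = {"hospital_a": [0.2, 1.0], "hospital_b": [0.4, 0.0], "hospital_c": [0.6, 0.5]}
sizes = {"hospital_a": 500, "hospital_b": 300, "hospital_c": 200}
global_model = federated_average(updates, sizes)
print(global_model)
```

With shares of 0.5, 0.3, and 0.2, the global parameters come out at about 0.34 and 0.6. Shared updates can still leak some information, so deployments often add techniques such as secure aggregation or differential privacy on top of this step.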

Q6: How is AI in healthcare regulated?

A: In the U.S., the FDA regulates AI/ML-based tools as medical devices under its Software as a Medical Device (SaMD) framework. The EU’s AI Act classifies AI systems by risk level and imposes strict requirements on high-risk applications.

Q7: Can small clinics afford deep learning solutions?

A: Cloud-based AI platforms and Software-as-a-Service (SaaS) models lower the barrier to entry. Partnerships with universities and technology companies can also provide access to AI expertise.

Q8: What future trends should we watch for?

A: Watch for advances in federated learning, synthetic data, multimodal AI (which combines text, image, and genomic data), and real-time patient monitoring through the Internet of Medical Things (IoMT).

Final Thoughts

Deep learning is changing healthcare in many ways, from automating image interpretation to personalizing treatment plans and improving clinical workflows. By following deployment best practices and addressing data bias, explainability, and regulatory compliance, healthcare organizations can use AI to improve patient outcomes, lower costs, and raise the quality of care. As the technology evolves, realizing deep learning’s full potential in medicine will require professionals from many fields to work together while following ethical guidelines.

References

- Esteva, A., Kuprel, B., Novoa, R. A., et al. “Dermatologist-level classification of skin cancer with deep neural networks.” Nature, 542, 115–118 (2017). https://www.nature.com/articles/nature21056

- Jack, C. R., et al. “Tracking pathophysiological processes in Alzheimer’s disease: an updated hypothetical model of dynamic biomarkers.” Lancet Neurology, 12(2), 207–216 (2013). https://www.sciencedirect.com/science/article/pii/S1474442212701478

- Chilamkurthy, S., et al. “Deep learning algorithms for detection of critical findings in head CT scans: a retrospective study.” Lancet, 392(10162), 2388–2396 (2018). https://www.thelancet.com/journals/lancet/article/PIIS0140-6736(18)31645-3/fulltext

- Zhavoronkov, A., et al. “Deep learning enables rapid identification of potent DDR1 kinase inhibitors.” Nature Biotechnology, 37, 1038–1040 (2019). https://www.nature.com/articles/s41587-019-0224-x

- Chen, H., Engkvist, O., Wang, Y., Olivecrona, M., Blaschke, T. “The rise of deep learning in drug discovery.” Drug Discovery Today, 23(6), 1241–1250 (2018). https://www.sciencedirect.com/science/article/pii/S1359644617301814

- Liu, Y., et al. “Deep neural networks improve radiotherapy dose prediction for head and neck cancer patients.” Medical Physics, 45(10), 4553–4560 (2018). https://aapm.onlinelibrary.wiley.com/doi/full/10.1002/mp.13110

- Desautels, T., Calvert, J., Hoffman, J., et al. “Prediction of sepsis in the intensive care unit with minimal electronic health record data: a machine learning approach.” JMIR Medical Informatics, 4(3), e28 (2016). https://medinform.jmir.org/2016/3/e28