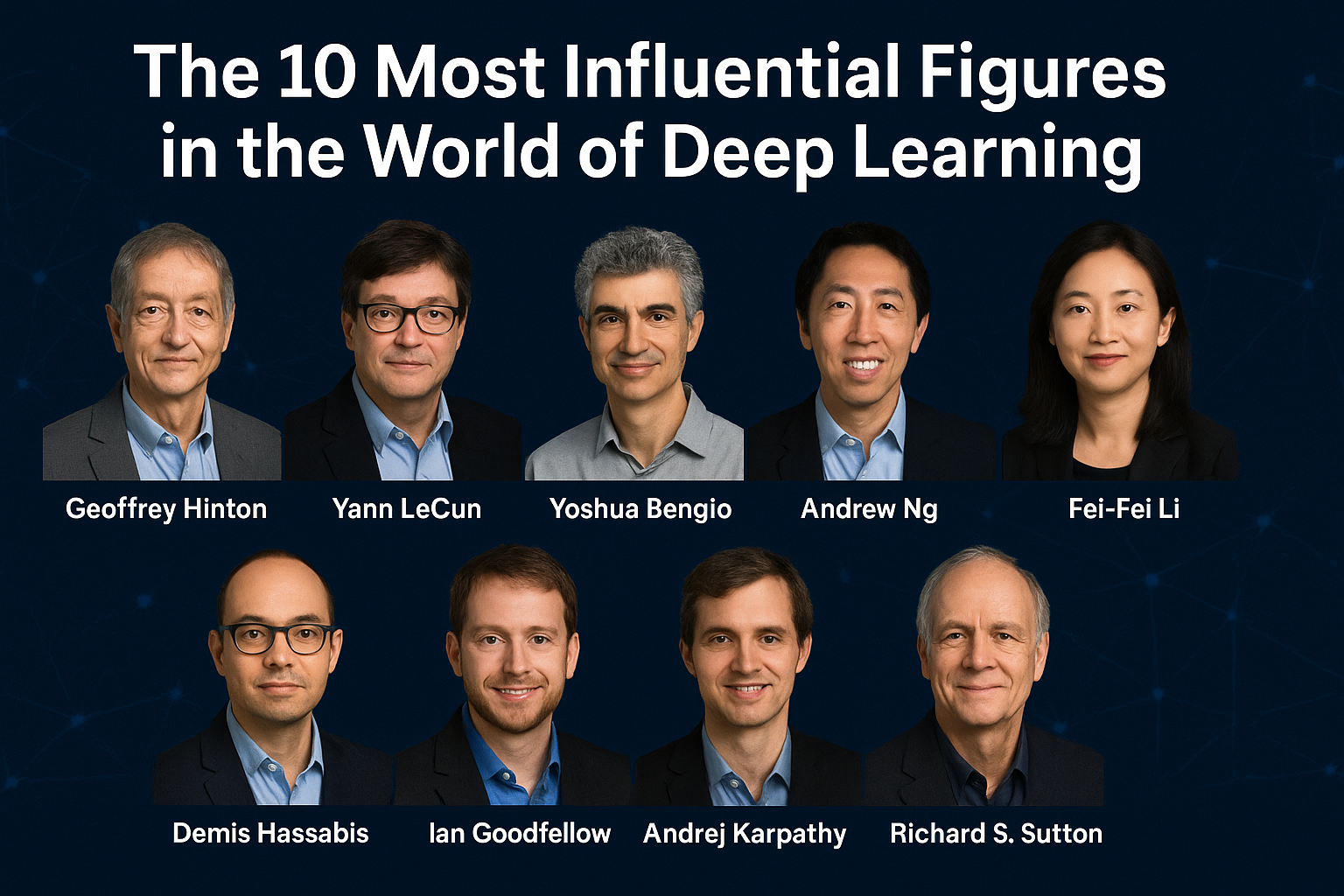

Deep learning has transformed how machines learn, perceive, and make decisions. It affects many industries and domains, including voice assistants, self-driving cars, medical imaging, and climate prediction. At its core are the visionary researchers and practitioners who launched this revolution and still lead it. This article profiles 10 of the most influential people in deep learning, covering their backgrounds, key work, and impact on the world.

Why These Figures Are Important

Deep learning is advancing quickly thanks to new theory, engineering advances, and real-world applications. The people in this article have:

- Laid the foundations for architectures such as transformers and convolutional neural networks.

- Founded open-source frameworks and industry labs that connect theory with practice.

- Built communities around the world and mentored generations of researchers.

- Advocated for responsible AI to guide ethics and policy.

Practitioners and enthusiasts alike can learn the field's history and find inspiration for new work by studying what these people did and how they got there.

1. Geoffrey Hinton: Background and Credentials

Position: Emeritus Professor at the University of Toronto; former VP and Engineering Fellow at Google

- Education: PhD in Artificial Intelligence from the University of Edinburgh (1978).

- Award: Turing Award (2018), shared with Yann LeCun and Yoshua Bengio.

Key Contributions

- Backpropagation Revival (1986): Co-authored the seminal paper showing that multi-layer networks can be trained effectively with backpropagation, which renewed interest in neural networks.

- Capsule Networks: Introduced dynamic routing so that networks can represent how the parts of an image fit together in a hierarchy.

Impact and Legacy

Geoffrey Hinton's theoretical insights underpin modern deep architectures, and his mentorship has helped build many of the world's leading AI labs.

“Don’t think of the brain as just a pattern matcher; it also makes guesses.”

— Geoffrey Hinton¹

2. Yann LeCun: Background and Credentials

Position: Silver Professor at NYU and Chief AI Scientist at Meta Platforms

- Education: PhD in Computer Science from the Université Pierre et Marie Curie (1987).

- Awards: IEEE Neural Networks Pioneer Award and Turing Award (2018).

Key Contributions

- Convolutional Neural Networks: Pioneered CNNs in the 1990s for handwritten digit recognition (LeNet-5).

- Energy-Based Models: Advanced the understanding of unsupervised learning through energy-based formulations and contrastive training methods.

Impact and Legacy

Yann LeCun's work underlies most modern computer vision systems, from smartphone cameras to self-driving cars. His advocacy of open research encourages collaboration and transparency.

“AI has to learn from raw data on its own, just like kids do.”

— Yann LeCun²

3. Yoshua Bengio: Background and Credentials

Position: Professor at the Université de Montréal and Scientific Director of Mila, the Quebec AI Institute

- Education: PhD in Computer Science from McGill University (1991).

- Awards: Turing Award (2018) and Officer of the Order of Canada (2017).

Key Contributions

- Deep Generative Models: Co-authored influential research on generative models, including denoising autoencoders and generative adversarial networks.

- Attention Mechanisms: Co-authored early research on neural attention that helped shape how transformers work.

Impact and Legacy

Bengio's rigorous theoretical research and advocacy for ethical AI have shaped practice and policy internationally. Under his direction, Mila became a world leader in AI safety and fairness.

“AI should help everyone, not just a few.”

— Yoshua Bengio³

4. Andrew Ng: Background and Credentials

Position: Co-founder of Coursera, CEO of Landing AI, and adjunct professor at Stanford University

- Education: PhD in Electrical Engineering and Computer Science from UC Berkeley (2002).

- Award: Named to the TIME 100 list of most influential people (2012).

Key Contributions

- MOOC Revolution: His Coursera Machine Learning course has enrolled more than 4 million students, making AI education accessible to anyone.

- AutoML and TinyML: Promoted tools that automate model building and simplify deployment on edge devices.

Impact and Legacy

Andrew Ng's work as an educator and product builder has made it easier for people and businesses around the world to learn and adopt AI.

“Start working on real projects right away. The best way to learn is to do.”

— Andrew Ng⁴

5. Fei-Fei Li: Background and Credentials

Position: Professor at Stanford University and co-director of the Stanford Human-Centered AI Institute (HAI)

- Education: She earned her PhD in Electrical Engineering from Caltech (2005).

- Award: ACM Fellow.

Key Contributions

- ImageNet (2009): Led the creation of the ImageNet dataset, which sparked the deep learning revolution in vision.

- Human-Centered AI: Advocates AI systems that augment human capabilities and uphold ethical values.

Impact and Legacy

ImageNet remains a core benchmark for vision models. Fei-Fei Li's interdisciplinary work has shaped research and curricula around the world.

“We don’t want to replace people with technology; we want to give them more power.”

— Fei-Fei Li⁵

6. Demis Hassabis: Background and Credentials

Position: CEO and co-founder of DeepMind, a Google company

- Education: PhD in Cognitive Neuroscience from University College London (2009).

- Awards: TIME 100 (2020) and Fellow of the Royal Society.

Key Contributions

- AlphaGo (2016): Led the team behind the first AI to beat a world champion at Go, demonstrating the power of deep reinforcement learning.

- AlphaFold (2020): Achieved breakthrough accuracy in protein structure prediction, transforming structural biology.

Impact and Legacy

Under Hassabis's leadership, DeepMind continues to push the limits of AI in medicine and science, and it collaborates with governments and other institutions on global challenges.

“AI can help us learn things faster than ever before, but only if we use it to make new scientific discoveries.”

— Demis Hassabis⁶

7. Ian Goodfellow: Background and Credentials

Position: Research scientist at Google DeepMind; formerly Director of Machine Learning at Apple and research scientist at Google Brain

- Education: PhD in Machine Learning from the Université de Montréal (2014).

- Award: MIT Technology Review 35 Innovators Under 35 (2017).

Key Contributions

- Generative Adversarial Networks (GANs, 2014): Introduced a framework in which two neural networks, a generator and a discriminator, are trained against each other in a minimax game. This opened a new area of generative modeling.
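
For readers who want the minimax game in concrete form, the objective is usually written as the value function from the original GAN paper (Goodfellow et al., 2014, listed in the references). Here $G$ is the generator, $D$ is the discriminator, $p_{\text{data}}$ is the data distribution, and $p_z$ is the noise prior fed to the generator:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator tries to maximize $V$ by telling real samples from generated ones, while the generator tries to minimize it by producing samples the discriminator accepts as real.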

Impact and Legacy

GANs have driven progress in image synthesis, data augmentation, and creative AI applications. Goodfellow's clear explanations and open-source code made the technique easy for others to adopt.

“GANs are great because they teach each other and compete with each other.”

— Ian Goodfellow⁷

8. Andrej Karpathy: Background and Credentials

Position: Former Senior Advisor at OpenAI and Director of AI at Tesla

- Education: PhD in Computer Science from Stanford University (2016).

- Award: NVIDIA Graduate Fellowship.

Key Contributions

- Teaching: Designed and taught Stanford's CS231n course and wrote blog posts that made deep learning easier to understand.

- Tesla: Led the vision team at Tesla, which deployed CNNs at large scale for self-driving cars.

Impact and Legacy

Karpathy's teaching resources remain widely used online and have inspired thousands of practitioners. His hands-on approach to building production AI systems connects research to real-world use.

“You have to do things, look at them, and change them over and over again to really understand models.”

— Andrej Karpathy⁸

9. Jürgen Schmidhuber: Background and Credentials

Position: Scientific Director of the Swiss AI Lab IDSIA

- Education: PhD in Computer Science from the Technical University of Munich (1991).

- Award: Helmholtz Award of the International Neural Network Society (2013).

Key Contributions

- Long Short-Term Memory (LSTM, 1997): Co-invented LSTM networks, which became central to sequence modeling in text and speech.

- Neuroevolution: Advanced methods for evolving neural network structures.

Impact and Legacy

LSTMs have driven two decades of progress in speech recognition and machine translation. Schmidhuber's visionary ideas have inspired many current architectures.

“Memory is what makes us smart—LSTMs link the two.”

— Jürgen Schmidhuber⁹

10. Richard S. Sutton: Background and Credentials

Position: Professor at the University of Alberta and researcher at DeepMind

- Education: PhD in Computer Science from the University of Massachusetts Amherst (1984).

- Award: AI Hall of Fame (2020).

Key Contributions

- Foundations of Reinforcement Learning: Co-authored, with Andrew Barto, the standard textbook Reinforcement Learning: An Introduction.

- Temporal-Difference Learning: Developed core RL algorithms such as TD(λ).
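
As a rough illustration of the temporal-difference idea, the sketch below implements tabular TD(λ) with accumulating eligibility traces in Python. The function name `td_lambda`, the transition format, and the default step size, discount, and trace-decay values are assumptions made for this example; it is not Sutton's own code.

```python
from typing import List, Optional, Tuple

# One transition: (state, reward, next_state); next_state is None when the episode ends.
# This format is an assumption made for the example.
Transition = Tuple[int, float, Optional[int]]


def td_lambda(
    episodes: List[List[Transition]],
    n_states: int,
    alpha: float = 0.1,   # step size (illustrative default)
    gamma: float = 1.0,   # discount factor (illustrative default)
    lam: float = 0.8,     # trace-decay parameter, the "lambda" in TD(lambda)
) -> List[float]:
    """Estimate state values with tabular TD(lambda) and accumulating traces."""
    values = [0.0] * n_states
    for episode in episodes:
        traces = [0.0] * n_states  # eligibility traces reset at the start of each episode
        for state, reward, next_state in episode:
            next_value = 0.0 if next_state is None else values[next_state]
            # TD error: how much the one-step target differs from the current estimate.
            td_error = reward + gamma * next_value - values[state]
            traces[state] += 1.0  # mark the current state as eligible for credit
            for s in range(n_states):
                # Every recently visited state shares in the update, then its trace decays.
                values[s] += alpha * td_error * traces[s]
                traces[s] *= gamma * lam
    return values


# Two-state chain: state 0 -> state 1 -> terminal, with reward 1 on the last step.
episode = [(0, 0.0, 1), (1, 1.0, None)]
print(td_lambda([episode], n_states=2, alpha=0.5, gamma=1.0, lam=0.5))  # [0.25, 0.5]
```

With `lam=0` the traces vanish after each step and the update reduces to one-step TD(0), so only the state visited last receives credit for the final reward in this example.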

Impact and Legacy

Sutton's theories underpin modern RL systems, from robots to game-playing agents. His mentorship in Alberta influenced many of the scientists who went on to work at DeepMind.

“Reinforcement learning is the best way to learn by interacting with others.”

— Richard S. Sutton¹⁰

Broader Societal and Economic Impact

Collectively, these ten people have driven:

- Enterprise AI Adoption: Frameworks such as TensorFlow (developed at Google Brain) and PyTorch (developed at Meta AI, the lab LeCun founded) made it easier for engineers to get started.

- Expanded Education & Employment: University courses and online platforms multiplied, widening access to AI careers.

- Ethical Frameworks: Bengio's work on fairness and Li's work on human-centered AI have put responsible practice at the center of the field.

As AI systems become more widespread, it remains crucial that they are transparent, interpretable, and beneficial to people.

Frequently Asked Questions (FAQs)

Q1: How were these people chosen?

A1: Selection was based on foundational scientific contributions, leadership roles, and measurable impact on the field, such as new architectures, tools, and policies.

Q2: Besides these ten, are there emerging figures to watch?

A2: Yes. Researchers such as Ruslan Salakhutdinov, Chelsea Finn, Pieter Abbeel, and Sanja Fidler are doing influential work in their respective areas.

Q3: How can I learn from these experts?

A3: Many of them offer free online courses (like Andrew Ng’s Coursera), public lectures (like Karpathy’s CS231n), and open-source code repositories (like Goodfellow’s GitHub).

Q4: What will deep learning look like in ten years?

A4: Expect a growing focus on efficiency (TinyML), multimodal models (such as GPT-4 and CLIP), and the integration of knowledge from other domains (such as physics and biology).

Final Thoughts

Deep learning is the product of brilliant people working together. Geoffrey Hinton, Yann LeCun, Yoshua Bengio, and the others profiled here have turned abstract ideas into technology we use every day. Their careers show the value of collaboration, rigorous research, and openness. Whether you are an enthusiast, engineer, or researcher, I hope their ideas help and inspire you to explore how far AI can go as you begin your own journey.

References

- Hinton, G. E., & Salakhutdinov, R. R. (2006). Reducing the dimensionality of data with neural networks. Science. https://science.sciencemag.org/content/313/5786/504

- LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature. https://www.nature.com/articles/nature14539

- Bengio, Y., Courville, A., & Vincent, P. (2013). Representation learning: A review and new perspectives. IEEE Transactions on Pattern Analysis and Machine Intelligence. https://ieeexplore.ieee.org/document/6472238

- Ng, A. (2018). Machine Learning Yearning. https://www.mlyearning.org/

- Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., & Fei‑Fei, L. (2009). ImageNet: A large-scale hierarchical image database. CVPR. https://www.image-net.org/

- Senior, A., et al. (2020). Improved protein structure prediction using potentials from deep learning. Nature. https://www.nature.com/articles/s41586-020-2812-7

- Goodfellow, I., et al. (2014). Generative adversarial nets. NIPS. https://papers.nips.cc/paper/5423-generative-adversarial-nets

- Karpathy, A. CS231n: Convolutional Neural Networks for Visual Recognition. http://cs231n.stanford.edu/

- Hochreiter, S., & Schmidhuber, J. (1997). Long short‑term memory. Neural Computation. https://direct.mit.edu/neco/article-abstract/9/8/1735/6220

- Sutton, R. S., & Barto, A. G. (1998). Reinforcement Learning: An Introduction. http://incompleteideas.net/book/the-book.html