Artificial intelligence has leapt off the screen and into the physical world. From warehouse floors humming with mobile manipulators to humanoids learning new skills from video and text, five of the most advanced AI robotics technologies are converging to make robots more capable, adaptable, and safe. This guide breaks them down in plain language, with hands-on steps for getting started, pitfalls to avoid, metrics that matter, and a four-week plan you can actually follow.

If you’re a founder, product leader, researcher, or engineer figuring out how to bring intelligent machines into the real world, this is your roadmap. By the end, you’ll know what each technology actually is, what gear and skills you need, how to implement it on a budget, and how to measure progress without getting lost in hype.

Key takeaways

- Foundation models for robots (vision-language-action, or VLA) can control multiple robots with a single policy and adapt quickly to new tasks.

- Diffusion policies have become a leading approach for learning robust, smooth robot actions from demonstrations, with documented performance gains over older methods.

- Whole-body mobile manipulation (e.g., humanoids) is going from lab demo to factory pilot—enabled by better control, simulation, and robot foundation models.

- AI tactile sensing and dexterous hands are closing the gap between “pick-and-place” and real dexterity, thanks to new fingertip sensors and accessible research hands.

- Sim-to-real with high-fidelity digital twins makes fast, safe iteration possible—and it’s rapidly improving with new physics, datasets, and standardized stacks.

1) Vision-Language-Action Foundation Models for Robot Control

What it is and why it matters

Vision-language-action (VLA) models fuse perception, language, and control into a single policy that maps camera frames and natural-language instructions to robot actions. Instead of hand-coding skills robot-by-robot, a VLA can generalize across tasks and embodiments after being trained on large, diverse datasets (including internet-scale image-text pairs plus real robot experience). In practice, this means you can give an instruction such as “wipe up the spill with a paper towel” and, for tasks and settings close to its training data, the robot plans, grasps, and executes without bespoke scripts.

Recent open and commercial efforts show the trend. RT-2 demonstrated how web-scale vision-language pretraining boosts robot generalization. OpenVLA (7B) released an open, cross-embodiment policy trained on ~970k robot episodes from the Open X-Embodiment dataset. Industry efforts such as NVIDIA's Project GR00T are building humanoid-focused foundation models to unify locomotion and manipulation.

Core benefits

- Generalization: Learn once, deploy across new tasks and robots with minimal fine-tuning.

- Instruction following: Use plain language prompts instead of rigid state machines.

- Data leverage: Combine web data, synthetic data, and real trajectories at scale.

Requirements & low-cost alternatives

Hardware:

- RGB-D camera(s), wrist camera optional; commodity GPU workstation for training/fine-tuning; ROS 2-compatible robot arm or mobile manipulator.

Software:

- ROS 2, Python, PyTorch; simulator (Isaac Sim/Isaac Lab) for data generation and safe iteration.

Data:

- Task demonstrations (teleop or kinesthetic), plus open datasets like Open X-Embodiment for pretraining or transfer.

Low-cost path:

- Start in simulation with a virtual arm and gripper, collect scripted demos, and fine-tune an open VLA (e.g., OpenVLA) before touching hardware.

Step-by-step implementation (beginner-friendly)

- Set up the stack: Install ROS 2 and Isaac Sim; verify your robot URDF loads and moves in sim with basic waypoints (ROS Documentation).

- Collect demonstrations: Record 50–200 teleoperated episodes per task (e.g., “pick mug → place on coaster”), logging camera images, the language instruction, and actions.

- Pretrain or fine-tune: Start from an existing VLA checkpoint (e.g., OpenVLA-7B). Fine-tune on your demos with parameter-efficient adapters (e.g., LoRA) so training fits on a single high-memory GPU.

- Evaluate in sim, then on hardware: Use identical code and ROS topics to deploy the policy in sim and real.

- Iterate with hard negatives: Add failure cases to the dataset and fold them into the next scheduled re-train.
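The demonstration-logging step above is easier to get right with a fixed record format from day one. Below is a minimal sketch that logs episodes as JSON Lines, one record per control step. The schema and the names `StepRecord`, `write_episode`, and `read_episodes` are hypothetical. This is not OpenVLA's input format: Open X-Embodiment data is distributed in RLDS, so in practice you would convert to whatever your training code expects.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass
from typing import Dict, List


@dataclass
class StepRecord:
    """One control step of a teleoperated demo (hypothetical schema)."""
    episode_id: str
    step: int
    instruction: str        # natural-language task for the whole episode
    image_path: str         # camera frame stored on disk, referenced by path
    action: List[float]     # e.g. 6 end-effector deltas + 1 gripper command
    assisted: bool = False  # True if a human corrected the robot on this step


def write_episode(path: str, records: List[StepRecord]) -> None:
    """Append one episode to a JSON Lines file, one step per line."""
    with open(path, "a", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(asdict(record)) + "\n")


def read_episodes(path: str) -> Dict[str, List[StepRecord]]:
    """Load the file and group steps back into episodes by episode_id."""
    episodes: Dict[str, List[StepRecord]] = {}
    with open(path, encoding="utf-8") as f:
        for line in f:
            record = StepRecord(**json.loads(line))
            episodes.setdefault(record.episode_id, []).append(record)
    return episodes


if __name__ == "__main__":
    log_path = os.path.join(tempfile.mkdtemp(), "demos.jsonl")
    demo = [
        StepRecord("ep0001", i, "pick mug -> place on coaster",
                   f"frames/ep0001_{i:04d}.png", [0.0] * 7)
        for i in range(3)
    ]
    write_episode(log_path, demo)
    print(len(read_episodes(log_path)["ep0001"]))  # 3
```

The `assisted` flag is included so the same log can later feed the "no-assist" KPI without a second pass over the raw data.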

Beginner modifications & progressions

- Start simple: Single camera, table-top pick-and-place.

- Progress: Add multi-camera inputs, language variations, and clutter; then move to mobile manipulation (base + arm).

- Cross-embodiment: Swap in a different gripper or arm without rebuilding the whole stack (a core promise of VLAs, though expect some fine-tuning on the new embodiment).

Recommended cadence & KPIs

- Cadence: Weekly data refresh; monthly re-train.

- KPIs: Success rate by task; no-assist episodes; time-to-complete; intervention count; generalization to unseen objects/phrases.
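The KPIs above can be computed from per-episode evaluation results. This is a minimal sketch, assuming each evaluation episode is summarized by a hypothetical `EpisodeResult` record. The field names and the choice to average time-to-complete over successful episodes only are illustrative assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class EpisodeResult:
    task: str
    success: bool
    interventions: int      # human takeovers during the episode
    duration_s: float       # wall-clock time for the attempt
    unseen: bool = False    # True for unseen objects or rephrased instructions


def kpi_summary(results: List[EpisodeResult]) -> Dict[str, float]:
    """Aggregate the headline KPIs over a batch of evaluation episodes."""
    if not results:
        raise ValueError("no episodes to summarize")
    n = len(results)
    wins = [r for r in results if r.success]
    unseen = [r for r in results if r.unseen]
    return {
        "success_rate": len(wins) / n,
        # successes that needed zero human help
        "no_assist_rate": sum(1 for r in wins if r.interventions == 0) / n,
        "interventions_per_episode": mean(r.interventions for r in results),
        # time-to-complete is only meaningful for successful episodes
        "mean_time_to_complete_s": mean(r.duration_s for r in wins) if wins else float("nan"),
        "unseen_success_rate": (sum(r.success for r in unseen) / len(unseen)) if unseen else float("nan"),
    }


def success_by_task(results: List[EpisodeResult]) -> Dict[str, float]:
    """Per-task success rate, so one easy task cannot hide a failing one."""
    outcomes: Dict[str, List[bool]] = defaultdict(list)
    for r in results:
        outcomes[r.task].append(r.success)
    return {task: sum(v) / len(v) for task, v in outcomes.items()}
```

Keeping the per-task breakdown separate from the overall summary matters when task mixes change between evaluation rounds.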

Safety, caveats, mistakes to avoid

- Put a safety supervisor in the loop (max forces, joint limits, workspace fences).

- Avoid data leakage (identical scenes in train/test).

- Don’t assume language understanding = safe behavior; always gate actions with constraints.

- Expect prompt sensitivity; codify canonical prompts after validation.
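The safety supervisor from the first bullet above can start as a simple filter between the policy and the robot. This is a minimal sketch, assuming joint-position control and an axis-aligned workspace box. The names `SafetyLimits` and `gate_action` are hypothetical, and `predicted_ee` must come from your own forward kinematics. Force limits are usually enforced lower in the controller or firmware and are not shown.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class SafetyLimits:
    joint_min: Sequence[float]              # rad
    joint_max: Sequence[float]              # rad
    max_step: float                         # max joint change per control tick, rad
    fence_min: Tuple[float, float, float]   # workspace box, metres
    fence_max: Tuple[float, float, float]


def gate_action(
    current: Sequence[float],
    proposed: Sequence[float],
    predicted_ee: Sequence[float],
    limits: SafetyLimits,
) -> Tuple[List[float], bool]:
    """Filter a policy's joint targets before they reach the robot.

    Returns (safe_targets, stop_requested). predicted_ee is the end-effector
    position for `proposed`, computed by your own forward kinematics.
    """
    outside = any(
        not (lo <= p <= hi)
        for p, lo, hi in zip(predicted_ee, limits.fence_min, limits.fence_max)
    )
    if outside:
        return list(current), True  # hold position and escalate to a stop
    safe = []
    for q, q_new, lo, hi in zip(current, proposed, limits.joint_min, limits.joint_max):
        step = max(-limits.max_step, min(limits.max_step, q_new - q))  # rate limit
        safe.append(max(lo, min(hi, q + step)))                        # joint limit
    return safe, False
```

The gate deliberately ignores what the instruction said. It only checks what the proposed motion would do, which is the point of "always gate actions with constraints."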

Mini-plan (example)

- Day 1–2: Load robot in Isaac Sim; teleop 100 demos of “grasp bottle → place in bin.”

- Day 3–5: Fine-tune OpenVLA-7B; validate on 20 novel bottles in sim, then on the real robot.

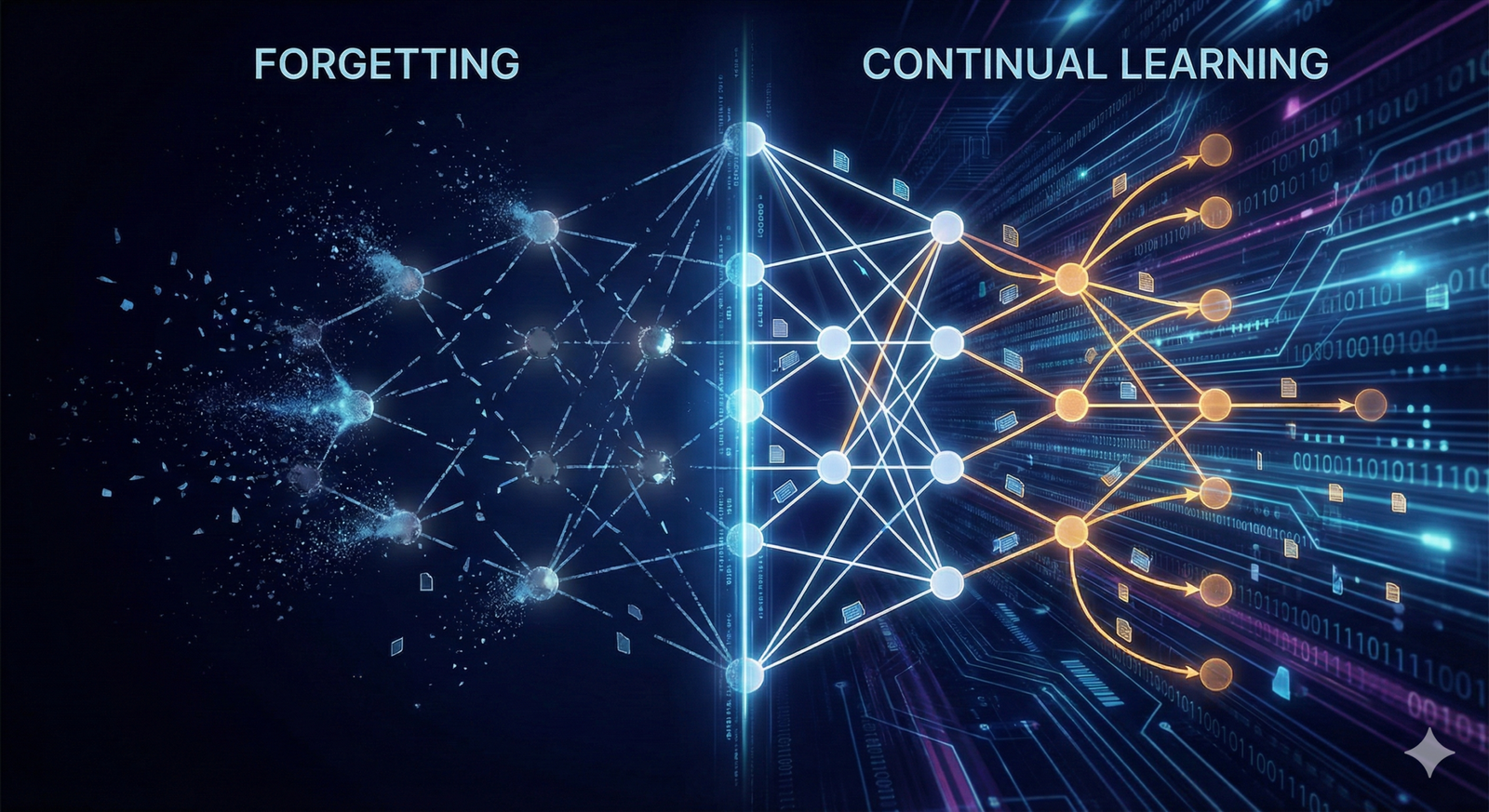

2) Diffusion Policies for Visuomotor Control

What it is and why it matters

Diffusion policies treat action generation as a denoising process. They start from random noise in action space and iteratively “denoise” it toward a feasible trajectory, conditioned on the current observations. Because each sample commits to one of several valid solutions (e.g., several legitimate grasp paths) instead of averaging them, this handles multimodal behavior better than older deterministic policies. In the original Diffusion Policy paper, the method outperformed prior state-of-the-art approaches by an average of 46.9% across 12 tasks from four manipulation benchmarks.
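Here is a toy DDPM-style sampler for a one-dimensional action that shows what "iteratively denoise" means in code. The key assumption is that it does not use a trained network. Instead it uses the exact noise predictor for a known two-mode action distribution (e.g., "pass left" at -1 versus "pass right" at +1), which is only possible because the target is a Gaussian mixture. A real diffusion policy replaces `oracle_eps` with a learned network conditioned on observations. The schedule values are illustrative.

```python
import math
import random
from typing import List, Sequence, Tuple


def make_schedule(num_steps: int = 50, beta_start: float = 1e-4,
                  beta_end: float = 0.2) -> Tuple[List[float], List[float]]:
    """Linear noise schedule: betas and their cumulative products alpha_bar."""
    betas = [beta_start + (beta_end - beta_start) * i / (num_steps - 1)
             for i in range(num_steps)]
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return betas, alpha_bars


def oracle_eps(x: float, alpha_bar: float, modes: Sequence[float], spread: float) -> float:
    """Exact noise prediction for an equal-weight 1-D Gaussian mixture.

    Stand-in for a trained network eps_theta(x_t, t, observation).
    """
    means = [math.sqrt(alpha_bar) * m for m in modes]
    var = alpha_bar * spread ** 2 + (1.0 - alpha_bar)  # variance of each noised component
    logits = [-(x - m) ** 2 / (2.0 * var) for m in means]
    top = max(logits)
    weights = [math.exp(lg - top) for lg in logits]     # numerically stable softmax
    total = sum(weights)
    score = sum(w / total * (m - x) / var for w, m in zip(weights, means))
    return -math.sqrt(1.0 - alpha_bar) * score          # eps = -sqrt(1 - alpha_bar) * score


def sample_action(rng: random.Random, betas: List[float], alpha_bars: List[float],
                  modes: Sequence[float] = (-1.0, 1.0), spread: float = 0.05) -> float:
    """DDPM reverse process: start from pure noise and denoise step by step."""
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(len(betas))):
        beta, alpha_bar = betas[t], alpha_bars[t]
        eps = oracle_eps(x, alpha_bar, modes, spread)
        x = (x - beta / math.sqrt(1.0 - alpha_bar) * eps) / math.sqrt(1.0 - beta)
        if t > 0:  # add posterior noise on every step except the last
            post_var = beta * (1.0 - alpha_bars[t - 1]) / (1.0 - alpha_bar)
            x += math.sqrt(post_var) * rng.gauss(0.0, 1.0)
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    betas, alpha_bars = make_schedule()
    samples = [sample_action(rng, betas, alpha_bars) for _ in range(2000)]
    print(sum(s < 0 for s in samples), sum(s > 0 for s in samples))
```

Roughly half the samples land near -1 and half near +1, with essentially none near 0. A regressor trained with mean-squared error on the same demonstrations would instead predict the average, 0, which is neither valid path.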

Research is moving fast: 3D Diffusion Policy (DP3) adds explicit 3D structure and shows ~85% success with as few as 40 demonstrations per task on real robots; newer work distills multi-step diffusion into one-step generators for real-time control.

Core benefits

- Robustness & smoothness: Natural trajectories; fewer “twitchy” artifacts.

- Data efficiency: Strong results with modest teleop datasets.

- Planning-like behavior: Can emulate multi-modal plans via sampling.

Requirements & low-cost alternatives

Hardware:

- A 6-DoF arm with a wrist camera suffices; a desktop GPU for training, and a Jetson or desktop GPU for inference.

Software:

- Python/PyTorch; a diffusion policy codebase (open implementations available); ROS 2 bridge.

Data:

- 40–200 high-quality demos per skill; augment in sim.

Low-cost path:

- Use Isaac Lab to script/demo tasks and train entirely in sim before trying a physical robot.

Step-by-step implementation

- Collect crisp demos: Fixed camera framing, consistent task resets; log RGB-D frames + action vectors at 10–20 Hz.

- Train a base diffusion policy: Start with action-space diffusion; use receding horizon at inference to replan every 0.2–0.5 s.

- Upgrade to DP3: Feed point clouds; expect better spatial generalization (arXiv).

- Distill for speed: Convert to a one-step policy to hit real-time loop rates on modest hardware.

- Harden with perturbations: Add disturbances, lighting changes; re-train.
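The receding-horizon idea from the training step above works like this: the policy predicts a chunk of future actions, only the first few are executed, and then it replans from a fresh observation. Below is a minimal sketch under stated assumptions. The callables `policy`, `get_observation`, and `send_action` are hypothetical placeholders for your model and your ROS 2 subscribers and publishers. Real-time pacing (sleeping until the next control tick) is omitted.

```python
from typing import Callable, List, Sequence

Action = List[float]


def run_receding_horizon(
    policy: Callable[[Sequence[float]], List[Action]],
    get_observation: Callable[[], Sequence[float]],
    send_action: Callable[[Action], None],
    control_hz: float = 10.0,
    replan_period_s: float = 0.3,
    total_steps: int = 30,
) -> int:
    """Execute only the first few actions of each predicted chunk, then replan.

    Returns the number of policy calls, which sets your inference budget.
    """
    steps_per_plan = max(1, round(control_hz * replan_period_s))
    calls = 0
    step = 0
    while step < total_steps:
        chunk = policy(get_observation())  # e.g. 8-16 future actions
        calls += 1
        if not chunk:
            raise RuntimeError("policy returned an empty action chunk")
        for action in chunk[:steps_per_plan]:
            if step >= total_steps:
                break
            send_action(action)
            step += 1
    return calls
```

At 10 Hz with a 0.3 s replan period, each chunk contributes 3 executed actions. Each policy call must therefore finish within about 0.3 s, which is the latency budget that motivates the distillation step.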

Beginner modifications & progressions

- Start with single-object pick-and-place → category-level generalization → tool use (e.g., open drawer, insert peg).

- Add language conditioning by combining a VLA encoder with a diffusion head.

Recommended cadence & KPIs

- Cadence: Train nightly; evaluate daily.

- KPIs: Task success; trajectory smoothness (jerk); safety violations; contact-rich error rate.
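One way to score the "trajectory smoothness (jerk)" KPI is the mean squared jerk, estimated by finite differences. This is a minimal sketch assuming one joint or Cartesian axis sampled at a fixed period `dt`. Apply it per joint and then average or take the maximum. Because finite differences amplify noise, compute it on commanded actions or filtered positions rather than raw encoder readings.

```python
from typing import List, Sequence


def finite_difference(values: Sequence[float], dt: float) -> List[float]:
    """Forward differences: an estimate of the time derivative."""
    return [(b - a) / dt for a, b in zip(values, values[1:])]


def mean_squared_jerk(positions: Sequence[float], dt: float) -> float:
    """Mean squared jerk (third derivative of position) for one axis sampled every dt seconds."""
    velocity = finite_difference(positions, dt)
    acceleration = finite_difference(velocity, dt)
    jerk = finite_difference(acceleration, dt)
    if not jerk:
        raise ValueError("need at least 4 samples to estimate jerk")
    return sum(j * j for j in jerk) / len(jerk)
```

A "twitchy" trajectory that alternates by only ±1 cm around a smooth path scores orders of magnitude worse than the smooth path. This makes the metric a sensitive regression signal when comparing policy versions.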

Safety, caveats, mistakes to avoid

- Diffusion latency can hurt control loops—use distillation or smaller backbones.

- Ensure action bounds and collision monitors are enforced.

- Don’t overfit to a single camera angle; diversify viewpoints.

Mini-plan (example)

- Week 1: 60 demos of “open drawer, place block inside,” train base diffusion; deploy with 5-step denoising.

- Week 2: Distill to one-step; add clutter; compare success/latency pre- and post-distillation.

3) Whole-Body Mobile Manipulation (Humanoids & Legged Platforms)

What it is and why it matters

“Mobile manipulation” merges locomotion (wheels or legs) with manipulation (arms and hands). The cutting edge combines whole-body control with learned policies so robots traverse clutter, stabilize objects with the torso, and use two hands for sequences like “pick a box, pivot, and shelf it.”

This is moving from research into commercial pilots. Recent milestones include a fully electric next-generation humanoid designed for real-world tasks (Boston Dynamics' Atlas), factories dedicated to humanoid production (such as Agility Robotics' facility for its Digit robot), and industry efforts such as NVIDIA's Project GR00T to build foundation models tailored for humanoids (agilityrobotics.com).

Core benefits

- Human-oriented reach and dexterity: Operate in spaces built for people, such as shelves, door handles, and stairs (Boston Dynamics).

- Task diversity: Combining walking, balancing, and manipulation unlocks many light-industrial and logistics jobs.

- Rapid iteration: Simulation + shared models for locomotion/manipulation accelerate progress.

Requirements & low-cost alternatives

Hardware:

- Humanoid, quadruped-with-arm, or mobile base + arm; force-torque sensors recommended.

Software:

- ROS 2; whole-body controllers; simulator (Isaac Sim) with digital twins.

Data:

- Teleop demos of warehouse tasks, plus synthetic motion data from simulation pipelines.

Low-cost path:

- Start with mobile base + 6-DoF arm in sim; graduate to a small biped or a quadruped with a lightweight arm.

Step-by-step implementation

- Prototype in sim: Build a digital twin of a mock aisle with totes/shelves; practice box lifting and tote hand-off.

- Whole-body control loop: Blend torso and arm motion; add center-of-mass constraints and footstep planners.

- Policy learning: Use Isaac Lab to train walking-plus-grasp policies; schedule curriculum (static → moving target).

- Safety & supervision: Include virtual fences, emergency-stop trees, and force caps.

- Pilot on hardware: Start in caged areas; then move to supervised co-bot modes.
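The center-of-mass constraint in the whole-body control step can be prototyped as a quasi-static check: the ground projection of the combined center of mass (robot plus payload) must stay inside the feet's support polygon, with a safety margin. This is a minimal sketch. It assumes link masses and world-frame positions come from your robot model and kinematics, and the function names are hypothetical. Dynamic walking needs richer criteria such as the zero-moment point or capture point, which are not shown.

```python
from typing import Sequence, Tuple

Point2 = Tuple[float, float]


def center_of_mass(masses: Sequence[float], positions: Sequence[Sequence[float]]) -> Tuple[float, ...]:
    """Mass-weighted average of link (or payload) positions, in metres."""
    total = sum(masses)
    return tuple(sum(m * p[k] for m, p in zip(masses, positions)) / total for k in range(3))


def support_margin(point: Point2, polygon: Sequence[Point2]) -> float:
    """Distance from point to the nearest edge of a convex, counter-clockwise polygon.

    Positive inside, negative outside (the sign is exact; the negative
    magnitude is only a rough measure).
    """
    margin = float("inf")
    for (ax, ay), (bx, by) in zip(polygon, list(polygon[1:]) + [polygon[0]]):
        ex, ey = bx - ax, by - ay
        cross = ex * (point[1] - ay) - ey * (point[0] - ax)  # > 0 when point is left of edge
        margin = min(margin, cross / (ex * ex + ey * ey) ** 0.5)
    return margin


def statically_stable(masses: Sequence[float], positions: Sequence[Sequence[float]],
                      support_polygon: Sequence[Point2], min_margin: float = 0.02) -> bool:
    """Quasi-static check: CoM ground projection inside the support polygon with a margin."""
    com = center_of_mass(masses, positions)
    return support_margin((com[0], com[1]), support_polygon) >= min_margin
```

For example, a 30 kg body over the feet holding a 10 kg box 0.3 m forward puts the CoM 7.5 cm forward. That is inside a 20 cm-long foot polygon with 2.5 cm to spare. A 20 kg box pushes the CoM outside, which is the kind of payload limit the "validate payload limits" caveat refers to.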

Beginner modifications & progressions

- Phase 1: Static manipulations with a mobile base.

- Phase 2: Add stepping and obstacles; then two-handed tasks.

- Phase 3: Introduce foundation-model intent understanding to accept natural language job steps.

Recommended cadence & KPIs

- Cadence: Weekly sim updates; quarterly on-site pilots.

- KPIs: Picks/hour; interventions/hour; mean time between assistance; safe-stop count; fall-free hours.

Safety, caveats, mistakes to avoid

- Don’t skip dynamics modeling: Whole-body contact requires accurate dynamics; validate payload limits.

- Avoid uncontrolled human exposure early; pilot in cordoned zones.

- Beware sim-to-real drift: Re-identify dynamics regularly as hardware wears.

Mini-plan (example)

- Sprint 1: Train “pick tote → place on conveyor” in a virtual aisle; enforce footstep and CoM constraints.

- Sprint 2: Add language prompts (“clear lane 2”), basic obstacle avoidance, and a two-handed lift.

4) AI Tactile Sensing & Dexterous Manipulation

What it is and why it matters

Vision alone struggles with slip, friction, and contact geometry. New vision-based tactile sensors (think tiny cameras under a soft skin) give robots a fingertip-level map of contact forces and textures. Paired with dexterous hands, robots can re-grasp in hand, uncap bottles, or neatly place deformable items—skills that turn “robotic pickers” into true helpers. Recent releases include fingertip sensors with 360-degree coverage and integrated touch platforms for research.

Major research groups have emphasized touch as a key to embodied intelligence, and industry partners are making research-ready hands (e.g., Allegro, Shadow) more accessible and ROS-compatible. Low-cost open designs exist for rapid prototyping (Meta AI).

Core benefits

- Reliable grasps: Detect slip early and adjust.

- In-hand manipulation: Rotate, pivot, and “walk” objects between fingertips.

- Deformable object handling: Towels, bags, cables become tractable.

Requirements & low-cost alternatives

Hardware:

- Tactile fingertips (commercial or DIY) + dexterous hand (4–5 fingers), e.g., Allegro Hand.

Software:

- ROS 2 drivers, tactile calibration, diffusion/VLA policy that ingests touch features.

Data:

- Short in-hand demonstrations; include failure modes (deliberate slips).

Low-cost path:

- Pair a research hand (Allegro) with DIY or lower-cost tactile sensors; or start with a 3D-printed anthropomorphic hand for algorithm prototyping (PMC).

Step-by-step implementation

- Mount & calibrate tactile sensors: Capture dark/flatfield frames; track contact patches per finger.

- Collect in-hand demos: Rotate a cube 90°, unscrew a cap, or reorient a plug.

- Train a touch-augmented policy: Concatenate visual + tactile embeddings; train diffusion/VLA head.

- Close the loop: Add slip detectors to trigger micro-adjustments in grasp.
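The slip detector in the last step can be sketched with a friction-cone rule. If the shear force at a contact approaches the friction limit (μ times the normal force), squeeze slightly harder, but never past a force cap. This assumes your tactile pipeline already produces per-contact normal and shear force estimates; vision-based sensors typically need calibration to do this. The names and default values (`mu`, `margin`, `step_n`, `max_force_n`) are hypothetical and should be measured for your objects and fingertips.

```python
from dataclasses import dataclass


@dataclass
class ContactReading:
    normal_n: float      # estimated normal force at the fingertip, newtons
    tangential_n: float  # estimated shear (tangential) force magnitude, newtons


def grip_adjustment(reading: ContactReading, grip_force_n: float, mu: float = 0.5,
                    margin: float = 0.8, step_n: float = 0.5,
                    max_force_n: float = 10.0) -> float:
    """Return the next commanded grip force.

    Slip risk is flagged when shear exceeds margin * mu * normal, i.e. the
    contact is close to the edge of its friction cone.
    """
    if reading.normal_n <= 0.0:
        return grip_force_n  # no contact: leave re-grasping to the grasp planner
    if reading.tangential_n > margin * mu * reading.normal_n:
        return min(grip_force_n + step_n, max_force_n)  # micro-adjust, capped
    return grip_force_n
```

The cap in the last branch is the "protect fingertips from over-compression" rule expressed in code. Running this check on every tactile frame is also why tactile-to-controller latency matters.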

Beginner modifications & progressions

- Start: Two-finger pinch with tactile thumb only.

- Progress: Add three-finger “tripod” grasps, then five-finger handovers.

Recommended cadence & KPIs

- Cadence: Weekly sensor re-calibration; monthly policy updates.

- KPIs: Slip rate; re-grasp count; force limit violations; in-hand reorientation success.

Safety, caveats, mistakes to avoid

- Protect fingertips from over-compression; respect max force/pressure.

- Keep latency low between tactile events and controller updates.

- Don’t assume symmetry—left/right fingertips often age differently.

Mini-plan (example)

- Day 1–3: Calibrate tactile sensors; record 50 demos of “rotate cube in hand.”

- Day 4–7: Train a diffusion policy with tactile input; target 80% rotation success on novel cubes.

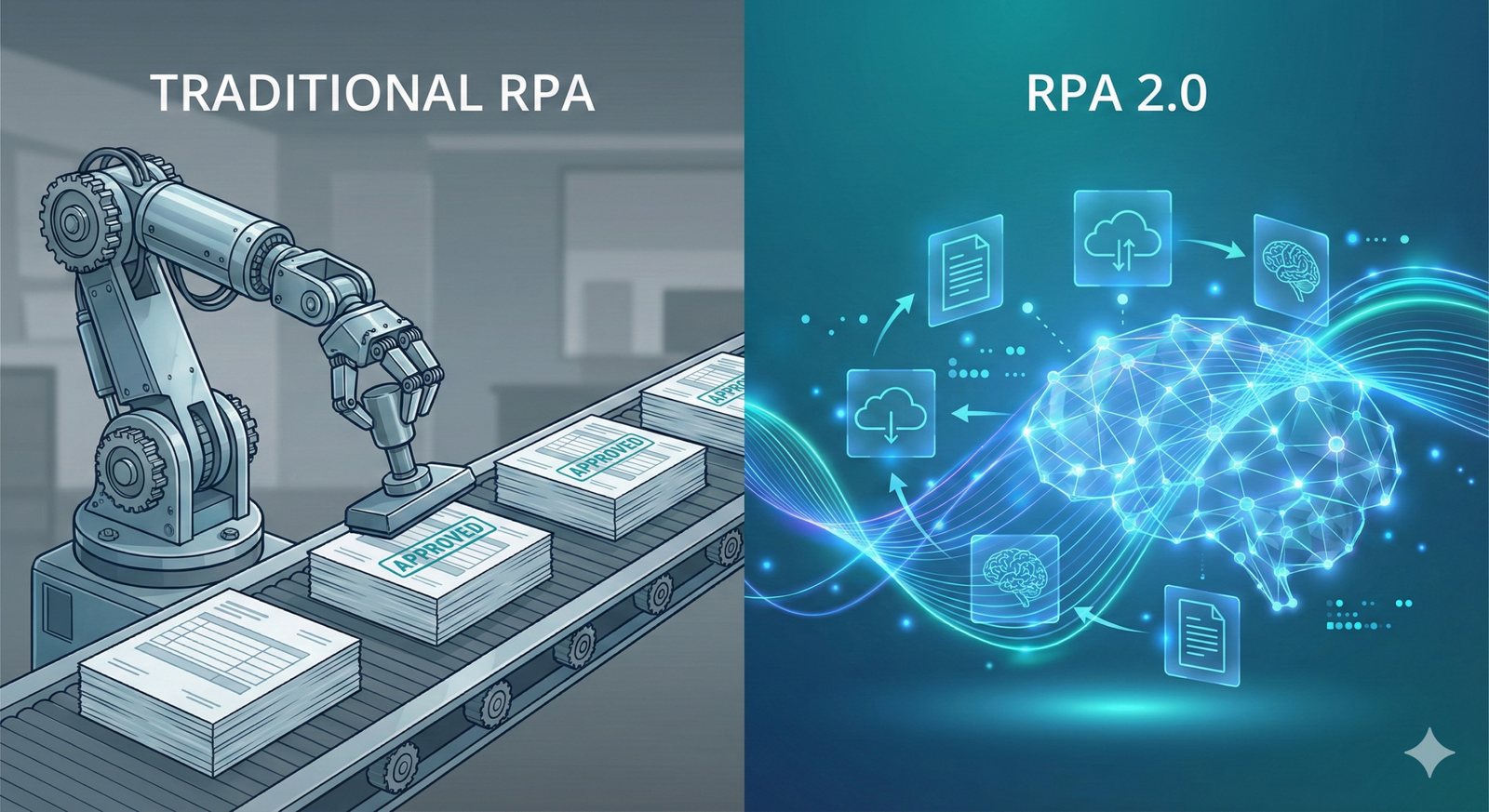

5) Sim-to-Real With Photorealistic Digital Twins & Neural Physics

What it is and why it matters

Robots learn fastest when you can fail a million times safely. That’s the promise of modern simulation: photoreal digital twins, GPU-accelerated physics, and standardized pipelines for synthetic data and policy training. Today’s platforms combine a USD-based scene graph, domain randomization, and robot learning frameworks so you can train controllers and vision models at scale, then deploy on hardware with fewer surprises.

The ecosystem keeps advancing. New Isaac Sim/Lab releases and NVIDIA GTC 2025 announcements, including the Newton physics engine and the GR00T N1 humanoid foundation model, aim to shrink the sim-to-real gap further. Robot manufacturers are also public about adopting these stacks for transfer (NVIDIA Developer; agilityrobotics.com).

Core benefits

- Speed & safety: Train thousands of episodes overnight; test corner cases no human would stage.

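- Repeatability: Seeded, version-pinned runs make regressions easy to track (GPU physics may not be bit-for-bit deterministic, so compare statistics over many episodes rather than single runs).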

- Scale: Synthetic motions and scenes feed foundation models (NVIDIA).

Requirements & low-cost alternatives

Hardware:

- A single RTX-class GPU workstation is enough to start.

Software:

- Isaac Sim/Lab for simulation + learning; ROS 2 for real-world deployment.

Low-cost path:

- Run the official container images; use prebuilt scenes; start with a tabletop arm and camera (NVIDIA NGC Catalog).

Step-by-step implementation

- Build a digital twin: Import CAD/measurements; assemble lighting/materials; set correct mass/inertia.

- Randomize: Vary textures, friction, and camera intrinsics every episode; log domain parameters.

- Train at scale: Use Isaac Lab PPO/IL pipelines and curriculum learning.

- Validate transfer: Zero-shot test on hardware; fine-tune from a handful of real demos if needed.

- Monitor drift: Re-identify dynamics quarterly or after hardware changes.
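The "randomize and log domain parameters" step works best when every episode's parameters can be regenerated from a seed. This is a minimal sketch of per-episode sampling and logging. The parameter ranges are illustrative and should bracket your measured values. The names `DomainParams`, `sample_domain`, and `log_line` are hypothetical. Applying the values inside Isaac Sim/Lab uses that framework's own randomization APIs, which are not shown.

```python
import json
import random
from dataclasses import asdict, dataclass


@dataclass
class DomainParams:
    friction: float         # contact friction coefficient
    mass_scale: float       # multiplier on nominal object mass
    light_intensity: float  # multiplier on nominal lighting
    fx: float               # camera focal lengths, pixels
    fy: float
    cx: float               # principal point, pixels
    cy: float


def sample_domain(episode: int, base_seed: int = 42, nominal_f: float = 600.0,
                  width: int = 640, height: int = 480) -> DomainParams:
    """Draw one episode's physics/rendering parameters from a per-episode seed,
    so any episode can be regenerated exactly from (base_seed, episode)."""
    rng = random.Random(base_seed * 1_000_003 + episode)
    return DomainParams(
        friction=rng.uniform(0.4, 1.2),
        mass_scale=rng.uniform(0.8, 1.2),
        light_intensity=rng.uniform(0.5, 1.5),
        fx=nominal_f * rng.uniform(0.95, 1.05),
        fy=nominal_f * rng.uniform(0.95, 1.05),
        cx=width / 2 + rng.uniform(-10.0, 10.0),
        cy=height / 2 + rng.uniform(-10.0, 10.0),
    )


def log_line(episode: int, base_seed: int, params: DomainParams) -> str:
    """One JSON line per episode for the domain-parameter log."""
    return json.dumps({"episode": episode, "base_seed": base_seed, **asdict(params)})
```

When a hardware test fails, the log lets you check whether the real friction or mass falls outside the sampled range. That is a common cause of the "good sim, bad reality" pattern.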

Beginner modifications & progressions

- Start: Single task in a simple bin.

- Progress: Multi-robot scenes, conveyor belts, deformables, and motion planning with collision-aware policies.

Recommended cadence & KPIs

- Cadence: Nightly sim runs; weekly transfer tests.

- KPIs: Sim success vs. real success; sim-to-real gap (%); number of unsafe behaviors caught in sim before reaching hardware; time-to-first-pass on hardware.

Safety, caveats, mistakes to avoid

- Faithful physics or bust: Wrong friction or inertia silently ruins transfer.

- Avoid over-fitting to a pretty scene—randomize aggressively.

- Keep software versions pinned; record seeds for reproducibility.

Mini-plan (example)

- Week 1: Build a digital twin of your packing cell; run 10k randomized episodes overnight.

- Week 2: Deploy the policy on hardware; measure the gap and fine-tune from 25 real demos.

Quick-Start Checklist

- Choose your anchor technology (VLA vs. diffusion vs. both).

- Set up ROS 2, Isaac Sim/Lab, and a reproducible Python environment.

- Define one narrow task and a pass/fail metric.

- Collect 40–200 clean demos (or synthesize at scale in sim).

- Train, deploy in sim, harden with perturbations, then test on hardware.

- Establish a weekly data + retrain cadence and a “no-assist” KPI.

Troubleshooting & Common Pitfalls

- Good sim, bad reality: Your friction or mass is off—re-estimate with simple system ID; randomize more aggressively.

- Policy looks smart but fails oddly: Check for dataset shortcuts (e.g., object always on the left).

- Latency breaks control: Distill multi-step diffusion to one-step; prune networks; move inference to the edge.

- Slippery grasps: Enable tactile-triggered micro-adjustments; cap forces; re-calibrate sensors weekly.

- Language confusion: Freeze a small set of validated prompts; log prompts with results for auditability.
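For the "dataset shortcuts" pitfall above, a cheap first check is to bucket each demo by a nuisance attribute and flag any bucket that dominates. This is a minimal sketch. It assumes you log the object's lateral position in the robot base frame using the ROS REP 103 convention (x forward, y left). The 80% threshold and function names are hypothetical. The same check applies to lighting, camera pose, or instruction phrasing.

```python
from collections import Counter
from typing import Dict, Iterable


def dominant_values(labels: Iterable[str], threshold: float = 0.8) -> Dict[str, float]:
    """Return any label that covers more than `threshold` of the dataset."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: c / total for label, c in counts.items() if c / total > threshold}


def table_side(lateral_y_m: float) -> str:
    """Bucket an object's y position (base frame, y pointing left) into a coarse label."""
    return "left" if lateral_y_m > 0.0 else "right"
```

A result such as `{"left": 0.9}` means the policy may have learned "reach left" rather than "reach for the object." Add demos on the under-represented side before trusting its success rate.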

How to Measure Progress (Without Fooling Yourself)

- Task success @ N trials (e.g., 100 attempts on a randomized test set).

- No-assist streaks (episodes completed with zero human help).

- Latency & smoothness (ms per control loop; jerk and acceleration).

- Safety metrics (contact force violations, emergency stops).

- Generalization tests (unseen objects, new lighting, rephrased instructions).

- Sim-to-real gap (absolute % difference), trended over time.
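To avoid fooling yourself with small trial counts, report success rates with a confidence interval. This is a minimal sketch using the Wilson score interval, which is a standard choice for binomial proportions; it is not a method the metrics list prescribes. The sketch also includes the absolute sim-to-real gap in percentage points. Function names are hypothetical.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a success rate (z=1.96 gives ~95% confidence)."""
    if trials <= 0 or not 0 <= successes <= trials:
        raise ValueError("need trials > 0 and 0 <= successes <= trials")
    p = successes / trials
    z2 = z * z
    denom = 1.0 + z2 / trials
    center = (p + z2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z2 / (4 * trials * trials)) / denom
    return max(0.0, center - half), min(1.0, center + half)


def sim_to_real_gap_pp(sim_successes: int, sim_trials: int,
                       real_successes: int, real_trials: int) -> float:
    """Absolute difference between sim and real success rates, in percentage points."""
    return 100.0 * abs(sim_successes / sim_trials - real_successes / real_trials)
```

Both 18/20 and 90/100 read as 90% success. However, the 95% interval is roughly 0.70 to 0.97 for 20 trials versus 0.83 to 0.94 for 100. This is why "success @ N trials" should always state N.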

A Simple 4-Week Starter Plan

Week 1 — Foundations

- Install ROS 2 + Isaac Sim/Lab; load your robot URDF; verify joint control.

- Define one task (e.g., “place a bottle upright in a bin”).

- Collect 50 demos via teleop in sim.

Week 2 — First Policy

- Fine-tune a VLA or train a diffusion policy with your demos.

- Validate on 20 novel trials in sim; add domain randomization.

Week 3 — Harden & Transfer

- Add 30 “hard case” demos (clutter, occlusions).

- Deploy on hardware; measure success, latency, and safety events.

Week 4 — Scale & Instrument

- Add tactile input if grasps are unstable.

- Automate nightly training; track KPIs on a dashboard; lock a weekly retrain.

FAQs (Quick, Practical Answers)

1) Do I need a humanoid to benefit from robot foundation models?

No. A 6-DoF arm with a gripper is enough. VLAs are trained across many embodiments, and both VLAs and diffusion policies start paying off even on simple tabletop tasks.

2) How many demos do I really need?

For diffusion policies, many tasks work with ~40–200 high-quality demonstrations; more helps with long-horizon tasks and clutter.

3) Should I start with simulation or go straight to hardware?

Start in simulation to iterate safely and cheaply; then transfer and fine-tune on a small set of real demos (NVIDIA Developer).

4) Are these methods fast enough for real-time control?

Yes, with engineering. Use one-step diffusion or lighter backbones and keep the control loop on-device.

5) Can a single model control different robots?

That’s a core promise of cross-embodiment training and VLAs like OpenVLA—train once, adapt quickly.

6) What if my robot drops or crushes objects?

Add tactile sensing, enforce force/torque limits, and program “slip reactive” moves. Re-calibrate weekly (gelsight.com).

7) Is language control safe?

Treat it like any other input. Validate prompts, gate with motion constraints, and maintain an emergency stop (ROS Documentation).

8) How do I measure progress beyond success rate?

Track no-assist episodes, latency, safety violations, and sim-to-real gap, and trend them weekly (NVIDIA Developer).

9) Are humanoids actually being deployed?

Early pilots exist in controlled environments; the hardware, control, and safety stack are maturing rapidly with new platforms and models for humanoids (Axios; TIME).

10) What about swarms of small robots?

Swarms are viable for certain domains; community stacks for micro-quadrotors exist and can be a cost-effective testbed for coordination and planning research (Crazyswarm).

Conclusion

Robotics is entering its physical-AI era. With VLAs to understand tasks, diffusion policies to execute smooth actions, whole-body control to operate in human spaces, tactile sensing to master contact, and sim-to-real pipelines to iterate safely at scale, the toolset is finally ready for ambitious products—not just demos. Start small, measure relentlessly, and let data—not hype—drive your roadmap.

Ready to put these technologies to work? Pick one pilot task, spin up sim this week, and train your first policy before Friday.

References

- RT-2: New model translates vision and language into action, Google DeepMind Blog, July 28, 2023 — https://deepmind.google/discover/blog/rt-2-new-model-translates-vision-and-language-into-action/

- RT-2 Project Page, Google/Robotics Transformer 2 — https://robotics-transformer2.github.io/

- Open X-Embodiment: Robotic Learning Datasets and RT-X Models, arXiv, Oct 13, 2023 — https://arxiv.org/abs/2310.08864

- Open X-Embodiment & RT-X Project Website, Open X-Embodiment Collaboration — https://robotics-transformer-x.github.io/

- OpenVLA: An Open-Source Vision-Language-Action Model, Project Website, 2024 — https://openvla.github.io/

- openvla/openvla-7b, Hugging Face Model Card, 2024 — https://huggingface.co/openvla/openvla-7b

- PaLM-E: An Embodied Multimodal Language Model, arXiv, Mar 6, 2023 — https://arxiv.org/abs/2303.03378

- PaLM-E Blog, Google Research, 2023 — https://research.google/blog/palm-e-an-embodied-multimodal-language-model/

- NVIDIA Announces Project GR00T Foundation Model for Humanoid Robots, NVIDIA Newsroom (GTC), Mar 18, 2024 — https://nvidianews.nvidia.com/news/foundation-model-isaac-robotics-platform

- Advancing Humanoid Robot Sight and Skill Development with NVIDIA Project GR00T, NVIDIA Developer Blog, 2024 — https://developer.nvidia.com/blog/advancing-humanoid-robot-sight-and-skill-development-with-nvidia-project-gr00t/

- Nvidia CEO Jensen Huang unveils new Rubin AI chips at GTC 2025, Associated Press, Mar 18, 2025 — https://apnews.com/article/457e9260aa2a34c1bbcc07c98b7a0555

- Everything Nvidia announced at its annual developer conference GTC, Reuters, Mar 18, 2025 — https://www.reuters.com/technology/everything-nvidia-announced-its-annual-developer-conference-gtc-2025-03-18/

- Diffusion Policy: Visuomotor Policy Learning via Action Diffusion, arXiv, Mar 7, 2023 (v5 Mar 14, 2024) — https://arxiv.org/abs/2303.04137

- 3D Diffusion Policy (DP3) Project Page, 2024 — https://3d-diffusion-policy.github.io/

- One-Step Diffusion Policy: Fast Visuomotor Policies via Diffusion Distillation, arXiv, Oct 28, 2024 — https://arxiv.org/html/2410.21257v1
