AI is moving from buzzword to backbone, and a handful of ambitious startups are defining what “launch” means in this new era. In this deep dive, we spotlight five standout companies setting the pace with headline-grabbing releases, practical developer tools, and enterprise-ready products. If you’re an executive, product leader, or hands-on builder wondering which horses to bet on—and how to pilot them effectively—this guide gives you the why, what, and how. Within the first 100 words you’ll know where we’re headed: Top 5 AI startups making waves in tech launches, with real steps you can follow today.

Who this is for: CTOs and engineering leaders, founders and product managers, technical marketers and creative teams, and operations leaders looking to design fast, measured AI pilots without getting lost in vendor noise.

What you’ll learn: What makes each startup special, recent product launches that actually matter, realistic prerequisites and costs, beginner-friendly implementation checklists, common pitfalls, and a simple four-week plan to get from curiosity to production signal.

Key takeaways

- Pick momentum, not hype. The most valuable AI launches ship frequently, improve developer velocity, and unlock measurable business outcomes (time saved, quality gain, cost avoided).

- Start small, instrument early. Treat every pilot like an experiment with a documented hypothesis and KPIs. Keep scope tight and deploy where feedback cycles are fast.

- Model choice is a means, not an end. Favor platforms and APIs that match your constraints: data governance, cost, latency, modality (text, image, video), and deployment surface.

- Safety is a product feature. Guardrails, content provenance, role-based access, and red-teaming aren’t optional; they’re table stakes for scale.

- Ship weekly. Use the 4-week plan near the end to move from “we should try AI” to “we shipped, measured, and learned.”

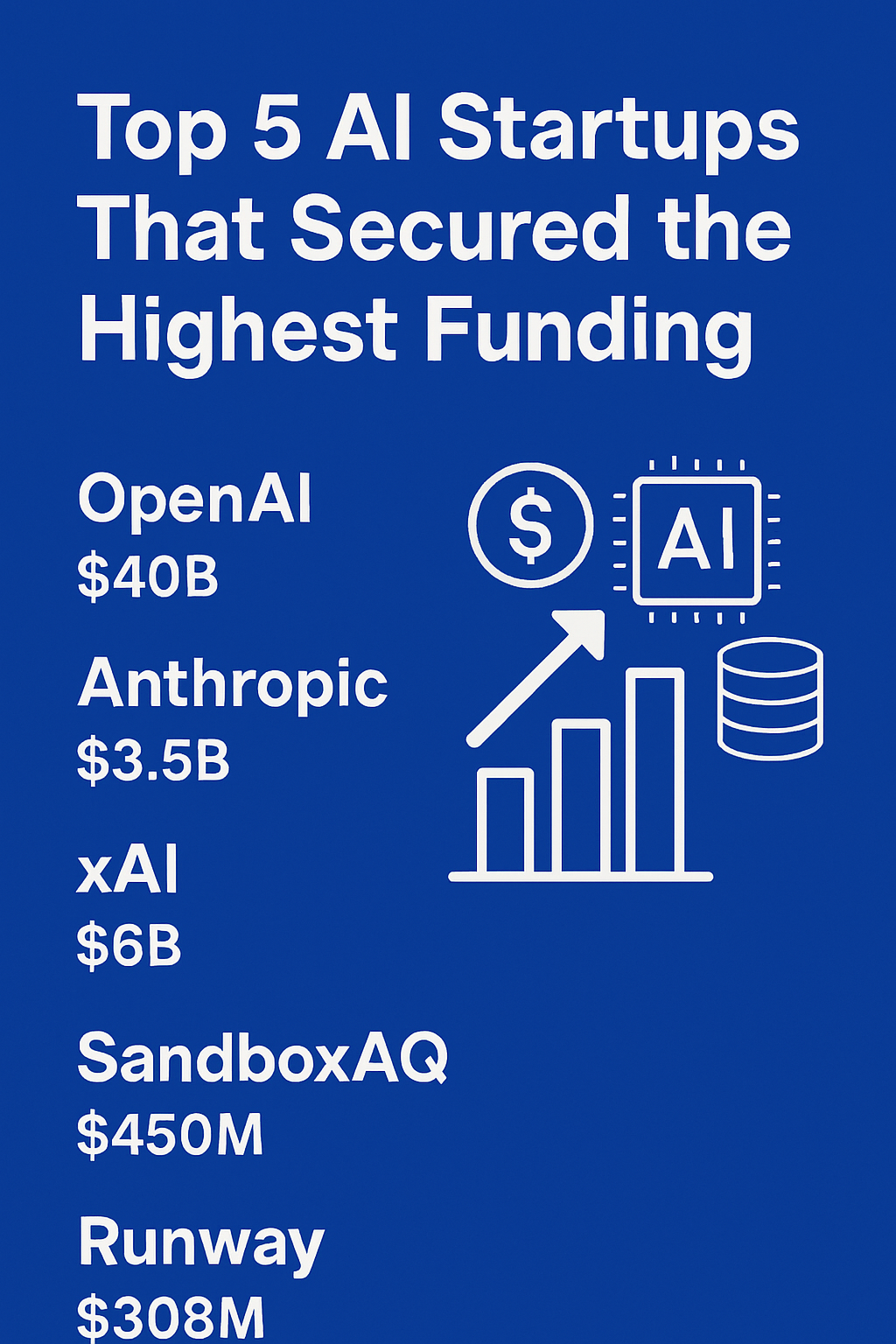

xAI — Shipping frontier reasoning at consumer speed

What it is & why it matters

xAI has been on a rapid cadence of model releases designed around stronger reasoning and native tool use. Recent launches introduced higher-end models and specialized offerings, with a clear emphasis on search integration and agentic capabilities. For teams that need up-to-date answers, long-context analysis, or reasoning-heavy workflows, the newest models and API give a compelling sandbox for experimentation and speed.

Core benefits

- Strong reasoning with native tool use and real-time search.

- Public API with fast iteration for builders.

- Multiple tiers for different compute/latency needs.

Requirements & prerequisites

- Skills: Backend development, prompt design, observability basics (tokens, latency, error handling).

- Accounts/Access: xAI account and API access; secure secrets management.

- Data: Sample tasks and datasets (questions, documents, code snippets).

- Cost: Start with free or trial credits if available; then budget per-request costs.

- Low-cost alternative: Use smaller models or distilled versions for non-critical paths.

Beginner implementation: step-by-step

- Pick a needle-moving use case. Examples: sales research synthesis, support agent drafting, engineering knowledge search.

- Define success. E.g., 20% reduction in time-to-answer on research tickets; <1% rate of critical hallucinations.

- Wire minimal RAG. Connect to a small document set and enable tool use for grounded answers.

- Instrument. Log prompts, responses, token usage, latency, and user feedback.

- Add guardrails. Content filtering and allow-list for tools and domains.

- Run a 1–2 week pilot. Collect qualitative feedback and quantitative metrics.

Beginner modifications & progressions

- Simplify: Start with a single tool (web search) and one team.

- Scale up: Add code-interpreter and custom tools; introduce agents for multi-step tasks.

- Harden: Cache frequent prompts, add prompt templates, and implement human-in-the-loop review for high-risk outputs.

Frequency, duration & KPIs

- Cadence: Weekly model evals; daily pilot reviews in the first week.

- KPIs: Time-to-answer, citation click-through, groundedness score, cost/answer, user satisfaction.

Safety, caveats & common mistakes

- Don’t skip source visibility when using web-connected tools.

- Treat reasoning traces as sensitive logs; secure and purge per policy.

- Avoid over-automating before you have reliable guardrails and escalation paths.

Micro-plan example (2–3 steps)

- Integrate the API with a single internal knowledge base and enable search tool use.

- Route 10% of research requests through the assistant; capture human edits and time saved.

- Promote to 50% after you hit quality and cost thresholds.

Anthropic — Enterprise-friendly launches that push context and collaboration

What it is & why it matters

Anthropic’s recent releases focused on higher-intelligence models, collaboration UX, and agent-adjacent capabilities. Two launches are especially relevant for builders and enterprise teams: a mid-tier model that reset the intelligence/speed bar, and a long-context upgrade enabling million-token workloads. Together with collaboration features and “computer use” capabilities, you get a versatile stack for coding, documents, and team workflows.

Core benefits

- Strong general reasoning and coding; multimodal capabilities where relevant.

- Collaboration UX that goes beyond chat (live workspaces for artifacts).

- Long-context support suitable for codebases and large document sets.

Requirements & prerequisites

- Skills: Prompt engineering, basic evals; for agents, familiarity with tool APIs and desktop automation risks.

- Accounts/Access: Web app or API credentials; optional access via hyperscalers.

- Data: Curated repos or corpora for long-context tasks.

- Cost: Per-token pricing; plan for higher costs with million-token contexts.

- Low-cost alternative: Use lighter models for triage and reserve the top model for final passes.

Beginner implementation: step-by-step

- Choose a collaborative workflow. Example: RFC drafting + code review with live artifacts.

- Stand up prompt templates. Create standard prompts for summarization, code translation, and test generation.

- Pilot long-context. Load a non-sensitive subset of a codebase (or contract set) and evaluate retrieval accuracy and groundedness.

- Automate guardrails. Add prompt-caching, output limits, and red-team tests.

- Introduce “computer use” carefully. Keep it sandboxed; log actions; require approvals for file writes or network changes.

Beginner modifications & progressions

- Simplify: Start with artifacts/workspaces for code snippets and docs.

- Scale up: Add long-context pipelines for entire repos; integrate with CI to propose changes.

- Harden: Add evaluation harnesses (regression suites) and quality gates.

Frequency, duration & KPIs

- Cadence: Weekly artifact reviews; monthly red-team drills.

- KPIs: Edit-acceptance rate, test pass rate for generated code, retrieval precision/recall, context utilization, cost per merged change.

Safety, caveats & common mistakes

- Million-token prompts make it easy to over-stuff context. Measure marginal benefit of added tokens.

- Desktop automation features require strong access controls and audit logs.

- Don’t skip model cards and usage policies when deploying to non-technical teams.

Micro-plan example (2–3 steps)

- Use a collaboration workspace to draft a new feature spec from prior tickets and docs.

- Run a long-context pass to align with historical decisions; generate test scaffolding.

- Gate merges via human review and automated checks.

Mistral — Fast-moving open & premier models with practical tooling

What it is & why it matters

Mistral is shipping a steady stream of open-weight and hosted models spanning text, vision, coding, OCR, and reasoning. Frequent releases to its changelog and model catalog make it a strong option for teams that value cost control, on-prem or self-deployment flexibility, and the ability to mix open models with hosted “premier” tiers and agents.

Core benefits

- Choice: Open weights for edge/on-prem and hosted “premier” models for frontier tasks.

- Breadth: Reasoning, coding, OCR, document AI, audio inputs—plus agents and connectors.

- Pace: Regular updates enable rapid iteration and fine-grained model selection.

Requirements & prerequisites

- Skills: API integration; optional MLOps for self-hosting; tokenization and sampling familiarity.

- Infrastructure: If self-hosting, GPUs/accelerators and observability; otherwise an API account.

- Data: Internal documents/code for evaluation; synthetic prompts for regression testing.

- Cost: Hosted usage is per-token; self-hosting requires capacity planning.

- Low-cost alternative: Start with smaller open models for offline/edge tasks.

Beginner implementation: step-by-step

- Pick the deployment shape. Hosted API first; plan for later self-host if needed.

- Choose a model family. Start with a small model for classification or routing; escalate to a frontier model for complex generation.

- Wire an Agent. Use the agents API to combine function calling with your internal tools.

- Set up evals. Small regression suite across representative prompts; track latency and cost.

- Iterate weekly. Swap models as needed; keep the interface constant.

Beginner modifications & progressions

- Simplify: Single model, single tool, no RAG.

- Scale up: Introduce OCR + document AI for forms; add reasoning models for multi-step tasks.

- Harden: Rate limiting, abuse monitoring, and model-version pinning.

Frequency, duration & KPIs

- Cadence: Daily smoke tests; weekly model comparisons.

- KPIs: Cost per 1k tokens, latency, pass@k on eval prompts, task completion rate, human edit distance.

Safety, caveats & common mistakes

- Model drift across frequent releases—pin versions and maintain a rollback plan.

- For open-weight deployments, compliance and privacy are your responsibility; perform a DPIA where required.

- Don’t test only on “happy paths.” Add adversarial and ambiguous prompts early.

Micro-plan example (2–3 steps)

- Stand up a hosted endpoint with a small model for classification/routing.

- Add a document AI step (OCR + extraction) for one form type.

- Graduate complex tasks to a larger hosted model after you hit accuracy and latency targets.

Perplexity — Research-grade answer engine and API that enterprises actually use

What it is & why it matters

Perplexity has emerged as a go-to AI research interface and API—with real-time browsing, citations, and enterprise features designed for governance and scale. A notable enterprise launch brought auditing, retention controls, user management, and security assurances. The platform’s adoption by high-profile partners underscores its viability as a production-grade search and research layer.

Core benefits

- Real-time, cited answers for defensible research and decision support.

- Enterprise controls (privacy, retention, and user management) designed for org-wide rollout.

- Developer-friendly API to embed “answer engine” functionality in apps.

Requirements & prerequisites

- Skills: API integration or SSO rollout for the web app; prompt and query design.

- Data: Curated internal knowledge sources for secure indexing; clear source allow-lists.

- Cost: Seat-based enterprise plans or API usage; budget for external model calls if used.

- Low-cost alternative: Start with team-level paid plans and graduate to enterprise.

Beginner implementation: step-by-step

- Choose a research workflow. Competitive analysis, sales prospecting, customer support knowledge.

- Connect sources. Start with a few systems (docs/wiki/storage) and set an allow-list for external sites.

- Roll out to a pilot group. Provide prompt recipes and a citation-verification checklist.

- Instrument. Track time saved per task, citation click-through, and research accuracy checks.

- Create a review ritual. Weekly “best answers” session to refine prompts and source coverage.

Beginner modifications & progressions

- Simplify: Begin with web-only answers and manual citation checks.

- Scale up: Add internal integrations and API-based automations; build domain-specific search apps.

- Harden: DLP policies, access tiers, and audit logs; tune source filters for bias control.

Frequency, duration & KPIs

- Cadence: Weekly prompt pack updates; monthly source audits.

- KPIs: Time saved per report, citation verification rate, duplicate work avoided, adoption and satisfaction by role.

Safety, caveats & common mistakes

- Citation isn’t correctness. Require human verification on critical decisions.

- Source bias. Monitor and tune allow-lists/deny-lists to avoid skewed results.

- Privacy. Ensure retention and training policies are configured to your standards.

Micro-plan example (2–3 steps)

- Deploy enterprise access to a single team with a pre-curated source list.

- Build a prompt library for standard research tasks; track time saved.

- Expand to adjacent teams after hitting quality thresholds.

Runway — Creative-first video models with practical control

What it is & why it matters

Runway’s recent video-generation releases prioritize fidelity, motion control, and production-friendliness. The “Gen-3” family emphasized better consistency and camera control, while a companion variant focuses on faster, lower-cost outputs. For creative, marketing, and product teams, these models turn storyboards into draft footage in minutes.

Core benefits

- High-fidelity text-to-video and image-to-video with strong motion control.

- Practical settings for duration, keyframes, and camera behavior.

- Guardrails and provenance features to support responsible use.

Requirements & prerequisites

- Skills: Prompting with cinematic terminology; basic editing.

- Accounts/Access: Web or mobile app; credits plan.

- Assets: Reference images or brand style boards.

- Cost: Credits per second; faster variants reduce cost where quality allows.

- Low-cost alternative: Use shorter durations and recycle keyframes; reserve premium generations for final cuts.

Beginner implementation: step-by-step

- Define the story beat. Write a 1–2 sentence prompt emphasizing camera moves and pacing.

- Pick the model. Use the main video model for text-only prompts; the fast variant for image-seeded shots.

- Set specs. Duration (5–10s), aspect ratio, and keyframes.

- Generate → Extend. Produce a first pass, then extend in short increments.

- Postprocess. Light editing; add captions, music, and brand overlays.

Beginner modifications & progressions

- Simplify: Use image-to-video for brand-consistent shots.

- Scale up: Stitch scenes into a 30–40s sequence; introduce keyframes for continuity.

- Harden: Archive prompts and outputs; implement an asset review flow with legal.

Frequency, duration & KPIs

- Cadence: Daily iteration during campaign sprints.

- KPIs: Storyboard-to-first-cut time, approval cycle length, engagement lift vs. baseline assets, cost per delivered asset.

Safety, caveats & common mistakes

- Over-specifying prompts can produce stiff, unnatural shots—describe camera and mood first.

- Rights & likeness. Follow brand and talent usage rules; use provenance metadata.

- Quality creep. Set “good enough” criteria; don’t spend premium credits on placeholders.

Micro-plan example (2–3 steps)

- Generate three 10-second variations of a product hero shot with different camera moves.

- Extend the best cut to 30–40 seconds and overlay brand elements.

- A/B test against your existing hero video.

Quick-start checklist

- Select one high-leverage workflow per team (research, coding, support, creative).

- Choose one startup per workflow (avoid multi-vendor sprawl at the start).

- Write a one-paragraph pilot plan with clear KPIs and a “stop” condition.

- Create prompt templates and a feedback form (thumbs up/down + free-text).

- Add safety basics: content filters, access tiers, retention policy, and logging.

- Block 30 minutes daily for the pilot owner to review outputs and metrics.

- Schedule a week-2 checkpoint to decide: iterate, scale, or stop.

Troubleshooting & common pitfalls

- “Hallucinations” in answers. Add retrieval grounding; display sources; require human verification for critical outputs.

- Latency spikes. Cache frequent prompts, precompute embeddings, and backoff retries.

- Costs creep up. Set quotas; alert on tokens/job; use small models for triage and large ones for final drafts.

- Stakeholder skepticism. Ship visual wins quickly (before/after examples) and track time saved with simple timers.

- Over-indexing on a single model. Keep your interface model-agnostic so you can switch when a better option arrives.

- Security gaps. Treat prompts and logs as sensitive data; mask secrets; review vendor compliance documents.

How to measure progress (simple instrumentation)

- Time saved per task: Measure with a simple stopwatch plugin or start/stop buttons in your tool.

- Edit distance: Percentage of AI output accepted without edits (or with minor edits).

- Groundedness: Share a short checklist for reviewers to mark source alignment.

- Adoption: Weekly active users, sessions per user, and repeat usage.

- Cost per successful outcome: Tokens/credits divided by “accepted” outputs.

- Quality trend: 5-point Likert score on clarity, correctness, and tone—tracked weekly.

A simple 4-week starter plan (cross-functional)

Week 1 — Scope & setup

- Pick one workflow each for research, coding, and creative.

- Choose a single vendor per workflow.

- Implement minimal logging and create a prompt library.

Week 2 — Pilot & feedback

- Route 10–20% of tasks through the AI path.

- Collect edit-distance, time saved, and groundedness.

- Review daily, adjust prompts, and prune sources.

Week 3 — Harden & expand

- Add guardrails (role-based access, output limits, red-teaming).

- Scale the pilot to 50% of tasks if KPIs are green.

- Introduce long-context or faster variants where relevant.

Week 4 — Decide & document

- Freeze the best configuration; write a 2-page “how we use it” guide.

- Greenlight production rollout or spin up a new experiment for the next workflow.

- Schedule monthly model reviews (new releases, cost, quality).

FAQs

- Which startup should I start with?

Map tool to task: research → Perplexity; heavy reasoning or agents → xAI; long-context coding/docs → Anthropic; cost-flexible hosted/open mix → Mistral; video content → Runway. - How do I keep costs under control?

Use small models for triage, cache frequent prompts, cap max tokens, and alert on spend. Reserve frontier models for final passes or high-stakes tasks. - Is long-context always better?

No. It can add cost and noise. Start with targeted retrieval and only scale context windows when you see accuracy gains that justify cost. - What about data privacy?

Review each vendor’s retention and training policies. Set retention windows, disable training on your data where options exist, and restrict which sources the system can access. - How do I compare models fairly?

Create a 50–200 prompt eval set with hidden answers and score by task success, edit distance, latency, and cost. Keep prompts and scoring constant across runs. - Can non-technical teams adopt these tools?

Yes—with templates, examples, and a clear “safe use” checklist. Start with web apps before moving to API-driven automations. - What’s the risk of vendor lock-in?

Minimize it by separating your orchestration layer (prompts, routing, logging) from specific model APIs. Keep your data and evals portable. - How do I handle hallucinations and bias?

Ground responses with retrieval, show sources, and build allow-/deny-lists. Require human sign-off for critical decisions and log all outputs for audit. - Do I need agents right away?

Not necessarily. Agents add complexity. Prove value with single-step tasks first, then graduate to agentic flows with strict sandboxing and approvals. - When should I scale a pilot?

Scale when you consistently hit KPIs for quality and cost over at least a week, and when stakeholders confirm the workflow actually saves them time.

Conclusion

AI is now a shipping discipline, not a science project. The five startups above are pushing the envelope with launches that matter: better reasoning with tool use, collaborative long-context work, a flexible mix of open and hosted options, research you can cite, and video tools that meet creative teams where they are. Start small, measure the work, and evolve weekly—because in AI, momentum compounds.

Call to action: Pick one workflow and one vendor today; ship a measured pilot this week, learn next week, and scale in a month.

References

- Grok 4, xAI, July 9, 2025 — https://x.ai/news/grok-4

- Announcing Grok for Government, xAI, July 14, 2025 — https://x.ai/news/government

- Grok 3 Beta — The Age of Reasoning Agents, xAI, February 19, 2025 — https://x.ai/news/grok-3

- Claude 3.5 Sonnet, Anthropic, June 21, 2024 — https://www.anthropic.com/news/claude-3-5-sonnet

- Claude Sonnet 4 now supports 1M tokens of context, Anthropic, August 12, 2025 — https://www.anthropic.com/news/1m-context

- Anthropic releases AI to automate mouse clicks for coders, Reuters, October 22, 2024 — https://www.reuters.com/technology/artificial-intelligence/anthropic-releases-ai-automate-mouse-clicks-coders-2024-10-22/

- Changelog, Mistral AI (entries including July 24, 2024; November 18, 2024; August 12, 2025) — https://docs.mistral.ai/getting-started/changelog/

- Models Overview, Mistral AI (catalog including premier and open models; updated 2025) — https://docs.mistral.ai/getting-started/models/models_overview/

- Perplexity launches Enterprise Pro, Perplexity, April 23, 2024 — https://www.perplexity.ai/hub/blog/perplexity-launches-enterprise-pro

- Perplexity Enterprise Pro, Perplexity (product page; enterprise features) — https://www.perplexity.ai/enterprise

- Truth Social’s Perplexity search comes with Trump-friendly media sources, Axios, August 6, 2025 — https://www.axios.com/2025/08/06/trump-truth-social-perplexity

- Introducing Gen-3 Alpha: A New Frontier for Video Generation, Runway, June 17, 2024 — https://runwayml.com/research/introducing-gen-3-alpha

- Creating with Gen-3 Alpha and Gen-3 Alpha Turbo, Runway Help Center (model specs and usage) — https://help.runwayml.com/hc/en-us/articles/30266515017875-Creating-with-Gen-3-Alpha-and-Gen-3-Alpha-Turbo