We are currently navigating a pivotal moment in the history of the internet: the erosion of “seeing is believing.” For decades, video and audio recordings were treated as definitive proof of reality. Today, however, the rapid advancement of generative artificial intelligence (AI) has democratized the ability to create hyper-realistic synthetic media, commonly known as deepfakes. While this technology holds creative promise, it presents a profound threat to personal privacy and digital identity.

The ability to clone a voice from a three-second audio clip or map a face onto a different body in real-time video is no longer the domain of Hollywood studios or state-sponsored hackers. These tools are available as consumer apps, often for free. This shift fundamentally alters the security landscape for individuals, families, and businesses. Protecting your digital identity in this new era requires more than just strong passwords; it demands a fundamental rethink of how we verify reality and how we curate our digital footprints.

In this guide, deepfakes refer specifically to AI-generated or manipulated audio, video, or image content designed to impersonate real individuals or create realistic synthetic personas. We will explore the mechanisms of these threats, the specific risks to your digital identity, and, most importantly, the practical, actionable steps you can take to safeguard your privacy.

Key Takeaways

- The Threat is Personal: Deepfakes are no longer just for celebrities; everyday individuals are increasingly targeted for financial fraud, harassment, and identity theft.

- Biometrics Are Vulnerable: Voice authentication and facial recognition systems can be fooled by sophisticated AI clones, so they need stronger, non-biometric backup verification methods.

- Data Scarcity is Defense: The more high-quality audio and video of yourself you publish publicly, the easier it is to train an AI model on your likeness.

- Verification Over Trust: In high-stakes communications (financial transfers, emergency family calls), emotional trust must be replaced with strict verification protocols.

- Hardware Authentication: Physical security keys and passkeys offer a layer of protection that deepfakes cannot bypass through social engineering.

Understanding the Deepfake Landscape

To protect against deepfakes, one must first understand the technological leap that has occurred. Deepfakes utilize a form of machine learning called “deep learning,” specifically Generative Adversarial Networks (GANs) and, increasingly, diffusion models.

How the Technology Works

In a GAN, two AI models compete: a “generator” creates the fake content, and a “discriminator” attempts to spot the forgery. Training repeats this contest over many iterations, with the generator learning from each failed forgery until the discriminator can no longer reliably tell the fake from the real data.
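For readers who want the formal version, the contest between generator and discriminator is commonly written as a minimax objective. The notation here is the standard textbook one rather than anything specific to a particular deepfake tool: $G$ is the generator, $D$ is the discriminator (outputting the probability that its input is real), $x$ is a real sample, and $z$ is the random noise fed to the generator.

$$
\min_{G} \max_{D} \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to push this value up by scoring real samples near 1 and fakes near 0, while the generator tries to push it down by producing fakes that the discriminator scores as real. In the idealized end state, the discriminator can do no better than guessing and outputs 1/2 for every input.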

Historically, training these models required thousands of images and hours of audio. As of early 2026, “zero-shot” or “few-shot” learning models allow convincing clones to be generated from:

- Audio: As little as 3 to 10 seconds of clear speech.

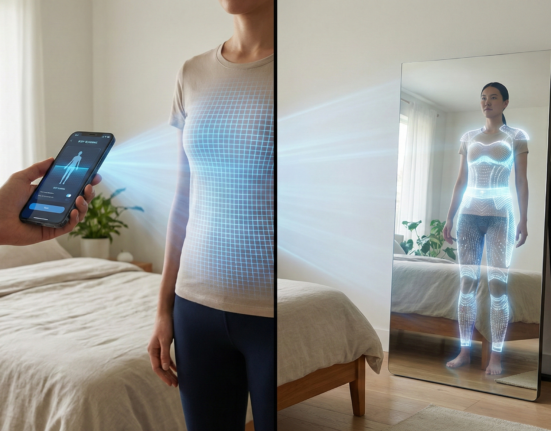

- Video: A single high-resolution photograph can be animated to speak, or a short video clip can be used to train a “face swap” model for live video calls.

The Shift to Real-Time

The most dangerous evolution is real-time deepfakes. Early iterations required hours of rendering time. Now, bad actors can utilize live-streaming tools that overlay a target’s face and voice onto their own during a Zoom call or FaceTime. This capability is the cornerstone of modern “social engineering” attacks, where the attacker convinces a victim they are speaking to a boss, a colleague, or a loved one to extract money or sensitive data.

The Intersection of Deepfakes and Identity Theft

Traditional identity theft usually involves stealing static data: Social Security numbers, dates of birth, or credit card information. Deepfakes give new force to Synthetic Identity Fraud. This is not just stealing data; it is stealing the persona.

Synthetic Identities

Criminals utilize deep learning to combine real and fake information to create a new, synthetic identity. They might use a real Social Security number (often from a child or deceased person) combined with an AI-generated face that doesn’t belong to any real human. Because the face is unique and persistent, it can pass “Know Your Customer” (KYC) checks used by banks and fintech apps that ask users to upload a video selfie.

The “Grandparent Scam” 2.0

One of the most visceral threats to individual privacy is the weaponization of voice cloning in family emergency scams.

- The Scenario: A parent receives a call from an unknown number. When they answer, they hear their child’s voice—panicked, crying, perhaps claiming to be in a car accident or arrested.

- The Mechanism: Scammers scrape audio from the child’s public TikTok, Instagram, or YouTube videos to clone their voice.

- The Outcome: The parent, bypassing logic due to emotional shock and the visceral recognition of the voice, transfers money instantly.

Reputational Hijacking

For professionals, the threat extends to reputation. Deepfakes can be used to fabricate evidence of misconduct, create non-consensual intimate imagery (NCII), or manipulate stock prices by impersonating CEOs. This form of identity attack seeks to destroy credibility rather than steal funds, making it difficult to remediate through traditional banking channels.

Biometric Security in a Synthetic World

For years, security experts advocated for biometrics (fingerprints, face scans, voice recognition) as the gold standard because “you cannot lose your face like you lose a password.” Generative AI challenges this axiom.

Voice Authentication Vulnerabilities

Many financial institutions and service providers implemented “voiceprint” passwords for phone banking. This system is now critically vulnerable. AI voice clones can mimic not just the pitch and tone of a user, but also their cadence and accent.

- Risk Level: High.

- Recommendation: If your bank offers voice authentication as a primary login method, disable it in favor of a PIN or an authenticator app code.

Facial Recognition and Liveness Detection

Facial recognition used to unlock phones (like Apple’s Face ID) builds a 3D map of the face from projected infrared dots, making it very difficult to spoof with a 2D deepfake image. However, facial recognition used for remote identity verification (e.g., verifying a driver’s license online) often relies on standard cameras. Security vendors are racing to improve “liveness detection,” technology that looks for micro-movements, blood flow changes (photoplethysmography), or reflections that indicate a real human presence. These checks help, but this is an arms race: as detection improves, so do the spoofing tools.
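To make the photoplethysmography idea concrete, here is a minimal sketch of the core signal-processing step: given the average green-channel brightness of the face in each video frame, look for a dominant rhythm in the range of a human heartbeat. Everything here is illustrative. The function name `dominant_pulse_bpm`, the 0.7 to 4 Hz band, and the synthetic test signal are assumptions, and real liveness products add face tracking, skin segmentation, filtering, and many other checks that are not shown.

```python
import cmath
import math

def dominant_pulse_bpm(green_means, fps, lo_hz=0.7, hi_hz=4.0):
    """Return the strongest frequency (in beats per minute) found inside
    the assumed heart-rate band of a per-frame brightness signal."""
    n = len(green_means)
    mean = sum(green_means) / n
    centered = [v - mean for v in green_means]  # remove the constant offset
    best_hz, best_power = 0.0, 0.0
    for k in range(1, n // 2 + 1):
        hz = k * fps / n  # frequency represented by bin k
        if not lo_hz <= hz <= hi_hz:
            continue
        # Naive discrete Fourier transform coefficient for bin k.
        coeff = sum(v * cmath.exp(-2j * math.pi * k * i / n)
                    for i, v in enumerate(centered))
        power = abs(coeff) ** 2
        if power > best_power:
            best_hz, best_power = hz, power
    return best_hz * 60.0

# Synthetic 10-second clip at 30 fps with a faint 1.2 Hz (72 bpm) pulse.
fps = 30
signal = [120.0 + 0.5 * math.sin(2 * math.pi * 1.2 * i / fps) for i in range(300)]
bpm = dominant_pulse_bpm(signal, fps)
print(round(bpm))
```

A perfectly flat signal returns 0.0 because no frequency stands out. A plausible pulse is only one hint of liveness, which is why it is combined with other signals in practice.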

Securing Your Digital Footprint: The First Line of Defense

The raw material for a deepfake is data. The more high-quality audiovisual data you have online, the easier you are to clone. While we cannot disappear from the internet, we can curate our “data exhaust.”

The Audio Audit

Audio is currently the most vulnerable vector because it requires the least data to clone effectively.

- Review Social Media: Check your public profiles (Instagram Reels, TikToks, YouTube Shorts). Do you have videos where you are speaking clearly directly into a microphone?

- Limit Availability: Consider restricting these accounts to “Friends Only” or removing older content that is no longer necessary.

- Voicemail Greetings: Generic voicemail greetings are safer than personal recordings. If a scammer calls your phone and hits your voicemail, they can record your greeting to train a model.

Visual Hygiene

- High-Resolution Images: Extremely high-resolution headshots provide the best training data for face-swapping algorithms. When uploading profile pictures to public forums (LinkedIn, Twitter/X), consider using lower-resolution files or applying subtle noise filters (see “Watermarking” below) that disrupt AI training.

- 360-Degree Views: Videos that show your face from multiple angles are gold mines for deepfake training. Be mindful of posting content that provides a complete mapping of your facial structure.

Watermarking and “Poisoning” Tools

Emerging tools allow users to apply invisible changes to their images that are imperceptible to the human eye but confusing to AI models.

- University of Chicago’s “Glaze” and “Nightshade”: These tools were originally designed to help artists protect their style, and similar concepts are being adapted for facial protection. They alter pixels in a way intended to keep models from interpreting the image correctly.

- Invisible Watermarking and Provenance Metadata: Standards bodies like the Coalition for Content Provenance and Authenticity (C2PA) publish cryptographic metadata standards that record where a file came from. While these standards are not yet universally adopted, platforms are beginning to label AI content.

Practical Defense Strategies: Establishing Protocols

Technology alone cannot solve the deepfake problem because the attacks often exploit human psychology (social engineering) rather than software bugs. You must establish human protocols (“wetware” patches) for yourself and your family.

1. The Family “Safe Word”

This is the single most effective low-tech defense against AI voice scams.

- How it works: Agree on a secret word or phrase with your close family members.

- The Rule: If anyone calls claiming to be in distress, arrested, or hurt, and asking for money or information, ask for the safe word.

- Implementation: The word should be simple but unique (e.g., “Purple Elephant” or a specific childhood pet’s name). If the caller cannot provide it, hang up and call the person back on their known number.
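One way to choose a phrase that cannot be guessed from your public profiles is to let a random source pick it. The sketch below is only an illustration: the short `WORDS` list and the function name `make_safe_phrase` are made up for this example, and a real word list should be much longer.

```python
import secrets

# Hypothetical word list for illustration; a real list should be much longer.
WORDS = ["purple", "elephant", "maple", "lantern", "harbor", "velvet",
         "copper", "meadow", "falcon", "ginger", "pebble", "orbit"]

def make_safe_phrase(word_count=2):
    """Pick distinct words using a cryptographically secure random source."""
    pool = list(WORDS)
    chosen = []
    for _ in range(word_count):
        word = secrets.choice(pool)
        pool.remove(word)  # avoid repeating the same word
        chosen.append(word)
    return " ".join(chosen)

phrase = make_safe_phrase()
print(phrase)
```

Whatever method you use, share the phrase in person rather than over text or email, and never post it online.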

2. The “Call Back” Protocol

If you receive a call claiming to be from a known organization (bank, IRS, employer), or a video call from a person who appears to be a colleague:

- Hang Up/Disconnect: Do not provide information.

- Verify: Call the entity back using a trusted phone number found on the back of your credit card or in the official company directory.

- Out-of-Band Verification: If you are on a video call with a “boss” asking for a wire transfer, message them on a separate channel (Slack, Signal, internal email) to confirm the request.

3. Transition to Hardware Authentication

Since deepfakes can bypass biometric verification and phishing attacks can steal SMS codes, the strongest defense for digital identity is a physical security key (e.g., YubiKey, Google Titan).

- Why it helps: Even if a deepfaked video call convinces you to log in to a fake website, a hardware key will not authenticate there, because its response is bound to the domain where the key was registered and a look-alike domain does not match. It anchors your digital identity to a physical object that cannot be cloned by AI.
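The domain check is easier to see in a toy simulation. The sketch below is a deliberately simplified model, not the real protocol. Actual security keys use public-key signatures, whereas this example substitutes a shared secret and HMAC so it can run with only the Python standard library. The class `ToySecurityKey`, the function `site_accepts`, and the `.example` domains are all hypothetical.

```python
import hashlib
import hmac
import secrets

class ToySecurityKey:
    """Toy model of a hardware key: one secret per registered origin."""

    def __init__(self):
        self._secrets = {}  # origin -> per-site secret held by the "device"

    def register(self, origin):
        secret = secrets.token_bytes(32)
        self._secrets[origin] = secret
        # Real keys give the site a public key. Returning the secret is a
        # simplification so this example can use HMAC.
        return secret

    def sign(self, origin, challenge):
        secret = self._secrets.get(origin)
        if secret is None:
            return None  # no credential for this origin, so nothing to sign
        return hmac.new(secret, origin.encode() + challenge, hashlib.sha256).digest()

def site_accepts(site_secret, expected_origin, challenge, response):
    """Server-side check: the response must cover the site's own origin."""
    if response is None:
        return False
    expected = hmac.new(site_secret, expected_origin.encode() + challenge,
                        hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)

key = ToySecurityKey()
bank_secret = key.register("https://bank.example")
challenge = secrets.token_bytes(16)

# Genuine login: the browser reports the real origin to the key.
genuine = site_accepts(bank_secret, "https://bank.example", challenge,
                       key.sign("https://bank.example", challenge))

# Phishing: the victim is on a look-alike domain relaying the bank's challenge.
phished = site_accepts(bank_secret, "https://bank.example", challenge,
                       key.sign("https://bank-login.example", challenge))
print(genuine, phished)
```

The important design point is that the origin is supplied by the browser, not typed or judged by the person, so a convincing voice or face has nothing to persuade.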

Tools and Technologies for Detection

Can we use AI to fight AI? Yes, but with caveats. Detection tools analyze artifacts in media—inconsistent lighting, lack of blinking, unnatural lip-syncing, or digital noise patterns.

Browser Extensions and Software

Several cybersecurity firms are releasing browser extensions that flag potentially synthetic media. These tools analyze the metadata and pixel structure of images on social media feeds.

- Deepware Scanner: A tool allowing users to upload videos to check for manipulation.

- Intel’s FakeCatcher: Analyzes “blood flow” pixels in video to determine liveness.

The Limitation: Detection tools often lag behind generation tools. A detector might have a 95% accuracy rate, but the 5% failure rate makes it unreliable for critical decisions. Never rely solely on software to tell you if something is real; use it as one data point among many.
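A short calculation shows why a 95% figure can mislead. The numbers below are assumptions chosen for illustration: the detector is taken to be right 95% of the time on both real and fake clips, and only 1 in 100 clips it examines is actually fake. The function name is hypothetical.

```python
def probability_flag_is_correct(sensitivity, specificity, fake_share):
    """Bayes' rule: P(clip is actually fake | detector says fake)."""
    true_positives = sensitivity * fake_share
    false_positives = (1.0 - specificity) * (1.0 - fake_share)
    return true_positives / (true_positives + false_positives)

# Assumed inputs: 95% correct on fakes, 95% correct on real clips,
# and 1% of all clips seen are fake.
ppv = probability_flag_is_correct(0.95, 0.95, 0.01)
print(f"{ppv:.1%}")  # prints 16.1%
```

Under these assumptions, roughly five out of six "fake" flags would land on genuine clips, simply because genuine clips vastly outnumber fakes. The ratio improves as fakes become more common, but the example shows why a flag should prompt further verification rather than settle the question.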

Cryptographic Provenance (C2PA)

The long-term solution lies in “content provenance.” This involves embedding cryptographically signed metadata into photos and videos at the moment of capture (via the camera hardware). The digital signature lets a viewer check which device or tool produced the content and whether the file has been changed since it was signed. Major camera manufacturers (Sony, Leica, Nikon) and software companies (Adobe) are adopting the C2PA standard. As of 2026, look for “Content Credentials” icons on images, which allow you to view the history of the file.
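The tamper-evidence idea can be sketched in a few lines. This is not the C2PA format or any vendor's API. It is a toy model in which a signed "manifest" records a hash of the image bytes, so any later change to the bytes is detectable. Real Content Credentials rely on certificate-based public-key signatures; the shared `CAMERA_KEY`, the function names, and the device name `DemoCam` are stand-ins invented for this example.

```python
import hashlib
import hmac
import json

# Stand-in for the camera's signing key. Real Content Credentials use
# certificate-based public-key signatures, not a shared secret.
CAMERA_KEY = b"hypothetical-demo-key"

def sign_at_capture(image_bytes, device):
    """Build a toy manifest that binds a claim to the exact image bytes."""
    claim = {"device": device, "sha256": hashlib.sha256(image_bytes).hexdigest()}
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(CAMERA_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}

def verify(image_bytes, manifest):
    """True only if the manifest is authentic and matches these bytes."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(CAMERA_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the manifest itself was forged or altered
    return manifest["claim"]["sha256"] == hashlib.sha256(image_bytes).hexdigest()

original = b"raw sensor data"
manifest = sign_at_capture(original, "DemoCam")
untouched_ok = verify(original, manifest)
edited_ok = verify(original + b" edited", manifest)
print(untouched_ok, edited_ok)
```

Note what this does and does not establish: a valid manifest shows the bytes are unchanged since signing, while a missing manifest proves nothing either way.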

Corporate and Institutional Responsibilities

If you are a business leader or IT administrator, the deepfake threat vector shifts from personal privacy to corporate security.

The “CFO” Fraud

There have been documented cases where finance workers were duped into transferring millions of dollars after attending a video conference call where the CFO and other colleagues were deepfakes.

Defense:

- Multi-Person Authorization: Require two human approvals for any transfer over a certain threshold.

- Strict Process: Financial requests should never be processed solely based on a video or voice command.
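In a payment system, these two rules might be enforced in code rather than left to judgment in the moment. The following is a minimal sketch under stated assumptions: the `TransferRequest` class, the 10,000 threshold, the rule that smaller transfers need one independent approver, and the employee names are all hypothetical.

```python
from dataclasses import dataclass, field

APPROVAL_THRESHOLD = 10_000  # hypothetical policy limit, in dollars

@dataclass
class TransferRequest:
    amount: float
    requested_by: str
    approvers: set = field(default_factory=set)

    def approve(self, employee):
        # The requester cannot approve their own transfer.
        if employee != self.requested_by:
            self.approvers.add(employee)

    def may_execute(self):
        # Assumed policy: two approvers above the threshold, one otherwise.
        required = 2 if self.amount > APPROVAL_THRESHOLD else 1
        return len(self.approvers) >= required

request = TransferRequest(amount=250_000, requested_by="alice")
request.approve("alice")   # ignored: self-approval
request.approve("bob")
after_one = request.may_execute()
request.approve("carol")
after_two = request.may_execute()
print(after_one, after_two)
```

The control only helps if each approver confirms the request through a channel other than the call on which it was made. Otherwise a single deepfaked meeting could persuade both approvers at once.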

Protecting Employee Identity

Companies often post high-resolution videos of executives and employees for marketing purposes. This inadvertently provides threat actors with training data for “Whaling” attacks (phishing attacks targeting senior executives).

Policy Strategy:

- Assess the necessity of public audiovisual data.

- Train employees specifically on deepfake awareness—teaching them that “seeing” is no longer “knowing.”

Legal Recourse and Ethics

The legal framework surrounding deepfakes is struggling to keep pace with technology. Laws vary significantly by jurisdiction.

Current Legal Landscape (As of 2026)

- Non-Consensual Intimate Imagery (NCII): Many jurisdictions (including the UK via the Online Safety Act and various US states) have criminalized the distribution, and in some cases the creation, of deepfake pornography.

- Right of Publicity: Laws protecting an individual’s likeness are being expanded to cover digital replicas. In the US, the federal “NO FAKES Act” proposal and similar state-level legislation are designed to provide a civil cause of action against unauthorized digital cloning.

- Political Ads: Regulations increasingly require clear labeling/watermarking of AI-generated content in political advertising.

What to Do If You Are Targeted

- Preserve Evidence: Download the video, audio, or image. Take screenshots of the context, timestamps, and user profiles.

- Report to Platform: Use the specific reporting flows for “Impersonation” or “Synthetic Media” on platforms like X, Meta, or TikTok.

- Legal Action: Consult a lawyer specializing in digital privacy or defamation. You may have grounds for a takedown notice based on copyright (if you own the original source material) or right of publicity violations.

- Identity Theft Report: If the deepfake is used for fraud, file a report with your national fraud reporting center (e.g., FTC in the US, Action Fraud in the UK).

Common Mistakes and Pitfalls

In the rush to protect privacy, avoid these common errors:

- Over-reliance on “Glitches”: Early deepfakes had obvious flaws—strange hands, unblinking eyes, robotic voices. Modern deepfakes fix these issues. Do not assume a video is real just because the person is blinking or the lighting looks correct.

- Sharing “Voiceprints” for Fun: Viral social media trends often ask users to “record yourself saying these phrases.” These are effectively data harvesting operations for voice cloning models. Avoid participating in memes that require specific vocal inputs.

- Ignoring the “Low Tech” Deepfake: A threat actor doesn’t need a perfect video clone. A slightly blurry video or a choppy audio connection (blamed on “bad Wi-Fi”) can mask the imperfections of a cheap deepfake model. Skepticism should increase, not decrease, when technical quality is poor.

The Future of Digital Identity: Self-Sovereign Identity (SSI)

Looking ahead, the solution to the deepfake crisis may lie in a complete restructuring of how we handle digital ID. Self-Sovereign Identity (SSI) and decentralized identity frameworks allow individuals to control their own identity verification data without relying on a central database.

In an SSI future, you might verify your identity to a bank or social media platform using a “Zero-Knowledge Proof.” This allows you to prove you are who you say you are (or that you are over 18, or a real human) without actually sharing your biometric data or uploading a video selfie that could be intercepted and reused.
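To give a feel for how proving something without revealing it can work, here is a sketch of the Schnorr identification protocol, a classic textbook building block for zero-knowledge proofs. It is not the API of any SSI framework, and production systems use far more elaborate schemes. The group parameters are toy values that are much too small to be secure, and all function names are hypothetical.

```python
import secrets

# Toy group parameters (far too small to be secure): G generates a
# subgroup of prime order Q in the integers modulo P.
P, Q, G = 23, 11, 2

def keygen():
    secret = secrets.randbelow(Q - 1) + 1   # private value, never shared
    return secret, pow(G, secret, P)        # (secret, public key)

def commit():
    nonce = secrets.randbelow(Q)
    return nonce, pow(G, nonce, P)          # prover sends only the commitment

def respond(secret, nonce, challenge):
    return (nonce + challenge * secret) % Q

def check(public_key, commitment, challenge, response):
    # Verifier's test: G^response == commitment * public_key^challenge (mod P)
    return pow(G, response, P) == (commitment * pow(public_key, challenge, P)) % P

secret, public_key = keygen()
nonce, commitment = commit()
challenge = secrets.randbelow(Q)            # chosen by the verifier
response = respond(secret, nonce, challenge)

honest = check(public_key, commitment, challenge, response)
tampered = check(public_key, commitment, challenge, (response + 1) % Q)
print(honest, tampered)
```

The verifier sees only the commitment, the challenge, and the response, never the secret itself, yet a correct response is convincing evidence that the prover holds it. Because a fresh random nonce is used each time, a recorded exchange cannot simply be replayed against a new challenge, unlike a captured video selfie.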

Until these technologies are ubiquitous, we live in the “Uncanny Valley” of security, a time when our digital representations can be weaponized against us.

Conclusion

The age of deepfakes requires a shift in mindset from “passive trust” to “active verification.” Protecting your digital identity is no longer just about securing your data; it is about securing your likeness and your voice. By minimizing your biometric exposure, establishing family and corporate verification protocols, and utilizing hardware-based authentication, you can build a resilient defense against synthetic media.

The technology will continue to advance, likely becoming indistinguishable from reality to the naked eye. However, the principles of verification remain constant. When in doubt, disconnect and verify through a trusted channel. In a world where anything can be faked, the established, offline connections we have with people and institutions become our most reliable anchors of truth.

FAQs

1. Can deepfakes trick banking facial recognition? Yes, sophisticated deepfakes can potentially trick banking facial recognition, especially systems that lack advanced “liveness detection.” Some systems use 3D mapping technology that is harder to fool, but many remote checks rely on a standard 2D camera, and researchers and criminals have demonstrated methods to bypass these checks using “injection attacks” where the camera feed is replaced with a pre-recorded deepfake video.

2. How can I tell if a video call is a deepfake? Look for subtle “glitches” such as inconsistent lighting on the face versus the background, unnatural eye movements, or lip-syncing that is slightly off, but do not treat their absence as proof that the call is real, because modern deepfakes increasingly avoid them. Ask the person to turn their head sideways (profile view), as many 2D-based models struggle to maintain the illusion at extreme angles. Finally, ask a personal question or use a pre-arranged safe word.

3. Are there laws against creating deepfakes of me? The laws are a patchwork and depend heavily on where you live. Generally, creating a deepfake for the purpose of fraud, defamation, or non-consensual pornography is illegal in many jurisdictions. However, creating a non-harmful deepfake for satire or artistic expression may be protected speech. Legal recourse often relies on harassment, copyright, or “right of publicity” statutes.

4. What is the “Safe Word” strategy? The Safe Word strategy is a low-tech defense for families. You agree on a secret word or phrase that only your family knows. If you receive a distress call (voice or video) from a family member claiming to be in trouble and needing money, you ask for the safe word. If the caller cannot provide it, treat the call as a likely AI clone: hang up and call the person back on their known number.

5. How do I remove my voice data from the internet? Completely removing data is difficult, but you can reduce exposure. Set social media accounts to private. Delete old videos from platforms like YouTube or Facebook where you are speaking clearly for long periods. Request takedowns from data broker sites that might aggregate your personal info. Be cautious about using voice-based authentication for customer service.

6. Can AI detectors accurately spot deepfakes? Not reliably enough to be foolproof. While AI detectors can spot many fakes by analyzing pixel artifacts, they produce both false positives (flagging real video as fake) and false negatives (missing high-quality fakes). They should be used as a tool to flag suspicion, not as the final authority on truth.

7. Is my voicemail greeting a security risk? Yes, a personalized voicemail greeting provides a clean, isolated sample of your voice that scammers can record simply by calling your phone. It is safer to use the default automated greeting provided by your carrier or a generic “text-to-speech” greeting that does not use your actual voice.

8. What is the difference between a “cheapfake” and a “deepfake”? A “deepfake” uses AI and machine learning to generate or manipulate content. A “cheapfake” (or shallowfake) uses conventional editing techniques—like slowing down a video to make someone sound drunk, or splicing audio clips together out of context. Both are used for disinformation, but deepfakes are technically harder to detect.

9. How do “Content Credentials” help fight deepfakes? Content Credentials (based on C2PA standards) act like a digital nutrition label for media. When a photo or video is taken with a compatible camera, it embeds a secure, tamper-evident history of the file. If the file is edited or generated by AI, that information is recorded in the metadata, allowing viewers to verify the origin of the content.

10. What should I do if I find a deepfake of myself online? First, save a copy for evidence. Second, report the content immediately to the platform hosting it (most have specific policies against synthetic media and impersonation). Third, consider consulting a lawyer regarding a cease-and-desist letter or potential civil action based on defamation or right of publicity.
