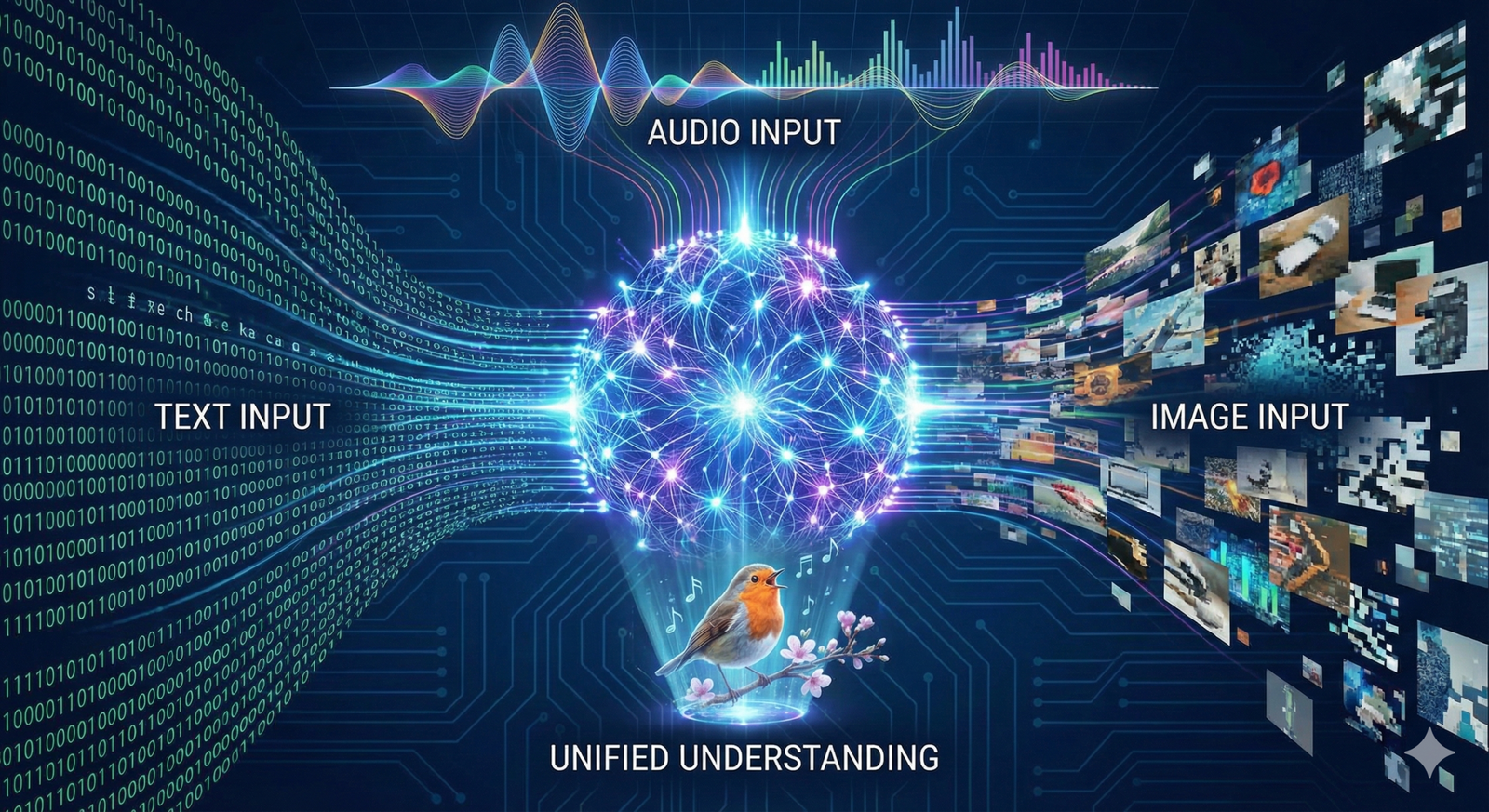

For decades, artificial intelligence existed in silos. Text models processed words, computer vision models analyzed pixels, and audio models deciphered sound waves. They were brilliant specialists but disconnected isolationists. A text model could write a sonnet about a sunset, but it couldn’t recognize one in a photograph. A vision model could spot a cat, but it couldn’t explain why the cat looked angry.

This era of separation is ending. The convergence of multimodal and zero-shot learning represents a fundamental shift in how machines perceive reality. By combining text, images, and audio into unified foundation models, we are moving toward AI that understands the world more like humans do: holistically, contextually, and seamlessly.

In this guide, multimodal zero-shot learning refers to the capability of a single AI model to process and relate information across different types of media (modalities) and perform tasks it was not explicitly trained to do (zero-shot).

Key Takeaways

- Breaking Silos: Multimodal models dissolve the boundaries between text, vision, and audio, allowing for fluid translation and reasoning across different data types.

- Generalization Power: Zero-shot learning enables models to identify objects or perform tasks they haven’t seen during training, relying on semantic relationships rather than labeled examples.

- Efficiency: Instead of training three separate models for three tasks, one multimodal foundation model can often handle them all, though with higher initial computational costs.

- Real-World Impact: From accessibility tools that describe surroundings to the blind, to search engines that find songs by humming, the applications are vast and immediate.

- The “Context” Revolution: These models don’t just match patterns; they appear to understand context, enabling complex reasoning like explaining a meme or analyzing a video clip’s sentiment.

Scope of This Article

What is IN scope:

- Detailed explanations of zero-shot learning and multimodal architecture.

- The mechanisms of combining text, images, and audio (embeddings, transformers).

- Practical applications and use cases in business and technology.

- Overviews of major architectures (like CLIP, Gemini, GPT-4o).

- Ethical considerations and limitations.

What is OUT of scope:

- Deep mathematical proofs of transformer architectures.

- Step-by-step coding tutorials for building a model from scratch (though we discuss high-level implementation).

- Reviews of specific consumer hardware.

1. Defining the Core Concepts

To understand the revolution, we must first deconstruct the terminology. The industry buzzwords often obscure simple, albeit powerful, concepts.

What is Multimodal AI?

Human intelligence is inherently multimodal. When you read the word “apple,” you might simultaneously visualize a red fruit, recall the crunching sound of a bite, and remember the taste. You do not process the word in a vacuum.

Traditional AI was unimodal. A Natural Language Processing (NLP) model dealt only with text. A Convolutional Neural Network (CNN) dealt only with images. Multimodal AI bridges these gaps. It is a system trained on multiple types of data—text, images, audio, video, sensor data—simultaneously.

The goal is to create a shared representation space. In this space, the mathematical representation (vector) of an image of a dog is distinctively close to the mathematical representation of the text string “a loyal canine.” The model understands that these two different inputs refer to the same underlying concept.

What is Zero-Shot Learning?

In traditional supervised learning, if you wanted an AI to recognize a platypus, you had to show it thousands of images labeled “platypus.” If you showed it an echidna, it would fail because it had never seen that specific label.

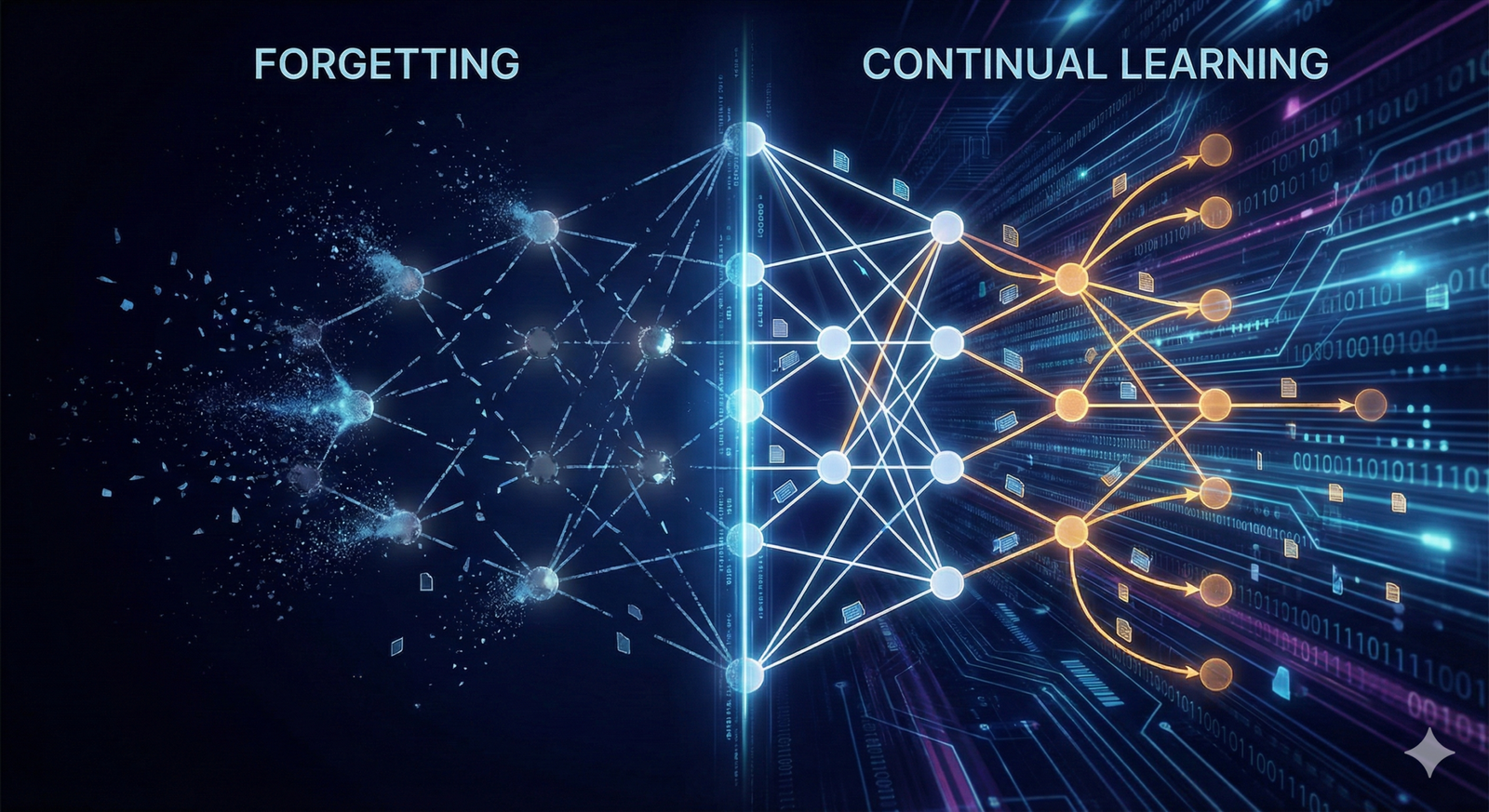

Zero-shot learning (ZSL) breaks this dependency. It allows a model to classify data or perform tasks it has not explicitly seen during training. It does this by leveraging auxiliary information—usually text descriptions.

The “Zebra” Analogy: Imagine you have never seen a zebra. However, you know what a horse looks like, and you have been told that a zebra looks like a “striped horse.” If you walk into a zoo and see a striped horse, you can identify it as a zebra immediately. You utilized zero-shot learning. You combined your visual knowledge (horse) with a semantic concept (striped) to identify a new object without ever having seen a photo of it before.
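The zebra analogy can be sketched as attribute-based classification. This is a minimal illustration, not how production models work: the three attributes, their values, and the `classify` helper are all hypothetical, and a real system would learn such features rather than have them written by hand.

```python
# Toy attribute-based zero-shot classification (all values are illustrative).
# Each class is described by three attributes:
# (horse_like_shape, has_stripes, has_trunk)
CLASS_DESCRIPTIONS = {
    "horse": (1.0, 0.0, 0.0),
    "zebra": (1.0, 1.0, 0.0),     # no example images; known only by description
    "elephant": (0.0, 0.0, 1.0),
}


def squared_distance(a, b):
    """Squared Euclidean distance between two attribute tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(observed_attributes):
    """Return the class whose description is closest to the observation."""
    return min(
        CLASS_DESCRIPTIONS,
        key=lambda name: squared_distance(CLASS_DESCRIPTIONS[name], observed_attributes),
    )


# A hypothetical attribute detector reports: horse-like body, clear stripes, no trunk.
observation = (0.9, 0.8, 0.1)
prediction = classify(observation)
print(prediction)
```

The "zebra" class is never paired with an example here. It is reachable only because its description reuses attributes the system already knows.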

The Intersection: Multimodal Zero-Shot Learning

When we combine these two concepts, we get multimodal zero-shot learning. This is the ability of an AI to take a prompt in one modality (e.g., text: “Find the clip where the glass breaks”) and find the answer in another modality (e.g., audio/video) without having been specifically trained on a dataset of “glass breaking” clips.

This capability is the engine behind modern generative AI and advanced search tools. It is what allows you to type “a futuristic city in the style of synthwave” and get an image, even if the model wasn’t trained on that exact sentence.

2. How It Works: The Mechanics of Convergence

Understanding the “how” requires looking under the hood at how machines translate different realities (pixels, sound waves, characters) into a universal language.

The Universal Language: Embeddings and Vector Spaces

Computers cannot natively understand that a picture of a cat and the word “cat” are related. To bridge this, AI researchers use embeddings.

An embedding is a list of numbers (a vector) that represents a piece of data. High-dimensional vector spaces act as a map of meaning. In a well-trained multimodal model:

- The vector for an image of a King.

- The vector for the word “King.”

- The vector for an audio clip of a royal trumpet fanfare.

These would all cluster together in the vector space, while the vector for a “banana” would be far away.
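"Close" and "far away" are usually measured with cosine similarity. The sketch below uses made-up three-dimensional vectors purely for illustration; real encoders produce vectors with hundreds or thousands of dimensions, and the keys such as `image:king` are hypothetical labels.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, hand-picked so related concepts point the same way.
embeddings = {
    "image:king": [0.9, 0.1, 0.2],
    "text:king": [0.8, 0.2, 0.1],
    "audio:fanfare": [0.7, 0.3, 0.2],
    "text:banana": [0.1, 0.9, 0.1],
}

anchor = embeddings["image:king"]
scores = {name: cosine_similarity(anchor, vec) for name, vec in embeddings.items()}

# Print the items from most to least similar to the image of a king.
for name, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{name:15s} {score:.3f}")
```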

Contrastive Learning: Models like OpenAI’s CLIP (Contrastive Language-Image Pre-training) popularized a technique called contrastive learning. The model is trained on pairs of images and their text captions. It learns to pull the image and its correct caption closer together in vector space, while pushing incorrect captions further away. This alignment is what enables the “zero-shot” magic. Once the model has aligned visual features with language, it can attempt to recognize any concept that can be described in words, although accuracy drops for concepts that were rare or absent in its training data.
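The pull-together, push-apart objective can be written as a symmetric cross-entropy over a similarity matrix. The following is a plain-Python sketch of that idea on toy data, not CLIP's actual training code. The function names are illustrative, and the fixed `temperature=0.07` is an assumption (CLIP learns this value during training).

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def cross_entropy_row(logits, target_index):
    """Negative log-softmax of the target entry, computed stably."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum_exp - logits[target_index]


def contrastive_loss(image_vecs, text_vecs, temperature=0.07):
    """Symmetric contrastive loss; image i and text i form the matching pair."""
    images = [normalize(v) for v in image_vecs]
    texts = [normalize(v) for v in text_vecs]
    n = len(images)
    # logits[i][j]: similarity of image i and text j, sharpened by the temperature.
    logits = [[dot(images[i], texts[j]) / temperature for j in range(n)] for i in range(n)]
    # Each image should pick out its own caption (rows) ...
    image_to_text = sum(cross_entropy_row(logits[i], i) for i in range(n)) / n
    # ... and each caption should pick out its own image (columns).
    text_to_image = sum(
        cross_entropy_row([logits[i][j] for i in range(n)], j) for j in range(n)
    ) / n
    return (image_to_text + text_to_image) / 2


images = [[1.0, 0.0], [0.0, 1.0]]
aligned_texts = [[0.9, 0.1], [0.1, 0.9]]   # caption i points the same way as image i
swapped_texts = [[0.1, 0.9], [0.9, 0.1]]   # captions attached to the wrong images

loss_aligned = contrastive_loss(images, aligned_texts)
loss_swapped = contrastive_loss(images, swapped_texts)
print(loss_aligned, loss_swapped)
```

Training lowers this loss, so correctly paired embeddings (the aligned case) score far better than mismatched ones.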

The Architecture: Transformers for Everything

The Transformer architecture, originally designed for text and later the basis of language models such as Google’s BERT and OpenAI’s GPT series, has proven surprisingly versatile. It excels at processing sequences of data and paying “attention” to different parts of that sequence to derive context.

- Text: A sequence of tokens (words/sub-words).

- Images: Can be broken into a sequence of patches (Vision Transformers or ViT).

- Audio: Can be converted into spectrograms (visual representations of a signal’s frequency content over time) and treated like images or sequences.

Because Transformers can process these different inputs using similar mechanisms, it becomes easier to build a single “brain” that ingests all of them.
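To make the "image as a sequence" idea concrete, here is a small sketch of splitting an image into patches, using a 4x4 grid of numbers as a stand-in for pixels. The `patchify` helper is illustrative. A Vision Transformer would additionally project each patch through a learned linear layer and add position information, which is omitted here.

```python
def patchify(image, patch_size):
    """Split a 2D grid (list of rows) into flattened, non-overlapping patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[top + r][left + c]
                for r in range(patch_size)
                for c in range(patch_size)
            ]
            patches.append(patch)
    return patches


# A tiny 4x4 single-channel "image" with pixel values 0..15.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
tokens = patchify(image, patch_size=2)

print(len(tokens))  # 4 patches, read left to right, top to bottom
print(tokens[0])    # [0, 1, 4, 5]

# The same arithmetic at a commonly used setting: 224x224 image, 16x16 patches.
num_patches_224 = (224 // 16) ** 2
print(num_patches_224)  # 196
```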

Cross-Attention Mechanisms

In a multimodal model, cross-attention is the mechanism that allows one modality to influence the processing of another.

- Text-to-Image: When generating an image, the model uses the text prompt to “attend” to specific visual concepts learned during training. If the prompt says “sunset,” the model focuses on warm color spectrums and horizon lines in its latent space.

- Audio-to-Text: When transcribing a video, visual cues (like a person’s lips moving or a door slamming) can help the model disambiguate unclear audio through cross-modal context.
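At its core, cross-attention is scaled dot-product attention in which the queries come from one modality and the keys and values from another. The sketch below shows only that core step, with hand-picked toy vectors. Real models first pass each input through learned projection matrices, which are left out here, and the variable names are illustrative.

```python
import math


def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention: queries from one modality,
    keys and values from another."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical features: one text-token query attending over three image patches.
text_queries = [[2.0, 0.0]]                          # e.g. the token "sunset"
patch_keys = [[2.0, 0.0], [0.0, 2.0], [0.0, 0.0]]    # patch 0 resembles the query
patch_values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

outputs, weights = cross_attention(text_queries, patch_keys, patch_values)
print(weights[0])  # most of the weight lands on patch 0
```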

3. Deep Dive: Text, Images, and Audio

To appreciate the complexity of multimodal zero-shot learning, we must look at how each modality contributes to the whole.

Text: The Semantic Anchor

Text is currently the “operating system” of multimodal models. It provides the semantic logic and the instruction layer.

- Role: Text acts as the query language. It frames the zero-shot task. We don’t show the model a picture to ask for a picture; we use text to describe the desired output.

- Zero-Shot Capability: Large Language Models (LLMs) provide the encyclopedic knowledge base. They know that “filibuster” relates to politics and “sauté” relates to cooking. This semantic web is what allows the visual or audio components to be labeled correctly without specific training examples.

Images: The Visual Context

Vision provides spatial and physical understanding.

- Role: Images ground the AI in physical reality. They teach the model about shapes, colors, textures, and object relationships.

- Integration: In zero-shot scenarios, the model analyzes the raw pixels and converts them into semantic concepts. It doesn’t just see “Shape A next to Shape B”; it sees “A person holding a smartphone.”

- Challenge: Ambiguity is high. The word “bank” can mean a riverside or a financial institution. Multimodal models use visual context (e.g., is there water or an ATM in the image?) to resolve this.

Audio: The Temporal Dimension

Audio adds a layer of temporal (time-based) and emotive information that images often miss.

- Role: Audio captures tone, urgency, music, and environmental events.

- Speech vs. Non-Speech: While speech-to-text is mature, Audio Event Detection (AED) is the frontier of zero-shot learning. This involves recognizing sounds like “glass breaking,” “baby crying,” or “engine idling.”

- Zero-Shot Audio: A user might prompt, “Find the moment in the podcast where they laugh.” The model must understand the acoustic signature of “laughter” without having been explicitly trained on that specific podcast’s audio tracks.

4. Real-World Applications

The theoretical power of multimodal zero-shot learning translates into transformative practical applications across industries.

1. Advanced Search and Retrieval (Semantic Search)

Traditional search relied on keywords. If an image wasn’t tagged “golden retriever,” a text search wouldn’t find it.

- The Shift: With multimodal embeddings, you can search a video library using natural language: “Show me the scene where the car drives off the cliff.” The model understands the semantic meaning of the video frames and matches it to your text query, even if the video has zero metadata tags.

- Impact: This is revolutionizing media archives, legal discovery, and e-commerce (e.g., “Find a shirt with a pattern that looks like this wallpaper”).

2. Generative Content Creation

This is the most visible application. Tools like Midjourney, DALL-E 3, and Stable Diffusion are multimodal systems.

- Text-to-Audio: Generating sound effects for movies by typing “footsteps on gravel, night time, eerie.”

- Image-to-Text (Captioning): Automatically generating descriptive alt-text for millions of images on a website to improve SEO and accessibility.

- Zero-Shot Editing: “Make the dog in this photo look like a painting by Van Gogh.” The model applies a style transfer based on its zero-shot understanding of “Van Gogh style” without needing a specific dataset of “Van Gogh Dogs.”

3. Accessibility and Assistive Tech

Multimodal AI is a game-changer for the visually or hearing impaired.

- Visual Assistance: Smart glasses can look at a refrigerator and answer verbal questions: “Do I have any milk left?” The model processes the visual input (image of fridge interior) and the audio query (user voice) to generate a text/speech response.

- Contextual Captioning: For the hearing impaired, AI can describe non-speech sounds in video calls (“doorbell ringing,” “dog barking in background”) which were previously lost in standard subtitles.

4. Healthcare Diagnostics

Medical data is inherently multimodal: patient history (text), X-rays/CT scans (images), and heart/lung sounds (audio).

- Application: A doctor can query an AI: “Based on this X-ray and the patient’s reported symptoms of wheezing, what is the likely diagnosis?”

- Zero-Shot Utility: The model can flag rare anomalies it hasn’t been explicitly trained to diagnose by recognizing deviations from “healthy” baselines described in medical literature.

5. Robotics and Embodied AI

Robots need to hear, see, and read instructions.

- Scenario: You tell a robot, “Pick up the red apple.” The robot must process the audio command, use vision to identify objects, filter for “red” and “apple,” and plan the motor commands needed to grasp it.

- Resilience: If you say, “Pick up the gala apple,” and the robot hasn’t been trained on “gala,” zero-shot learning allows it to infer that the gala is likely the reddish-orange fruit, distinct from the granny smith.

5. Leading Models and Architectures

As of early 2026, the landscape is dominated by a few key architectures that have defined the state of the art.

OpenAI: CLIP and GPT-4o

- CLIP (Contrastive Language-Image Pre-training): The pioneer. CLIP was trained on 400 million text-image pairs. It taught the industry that you don’t need expensive labeled data (like ImageNet); you can scrape the internet for image-text pairs and achieve incredible zero-shot performance.

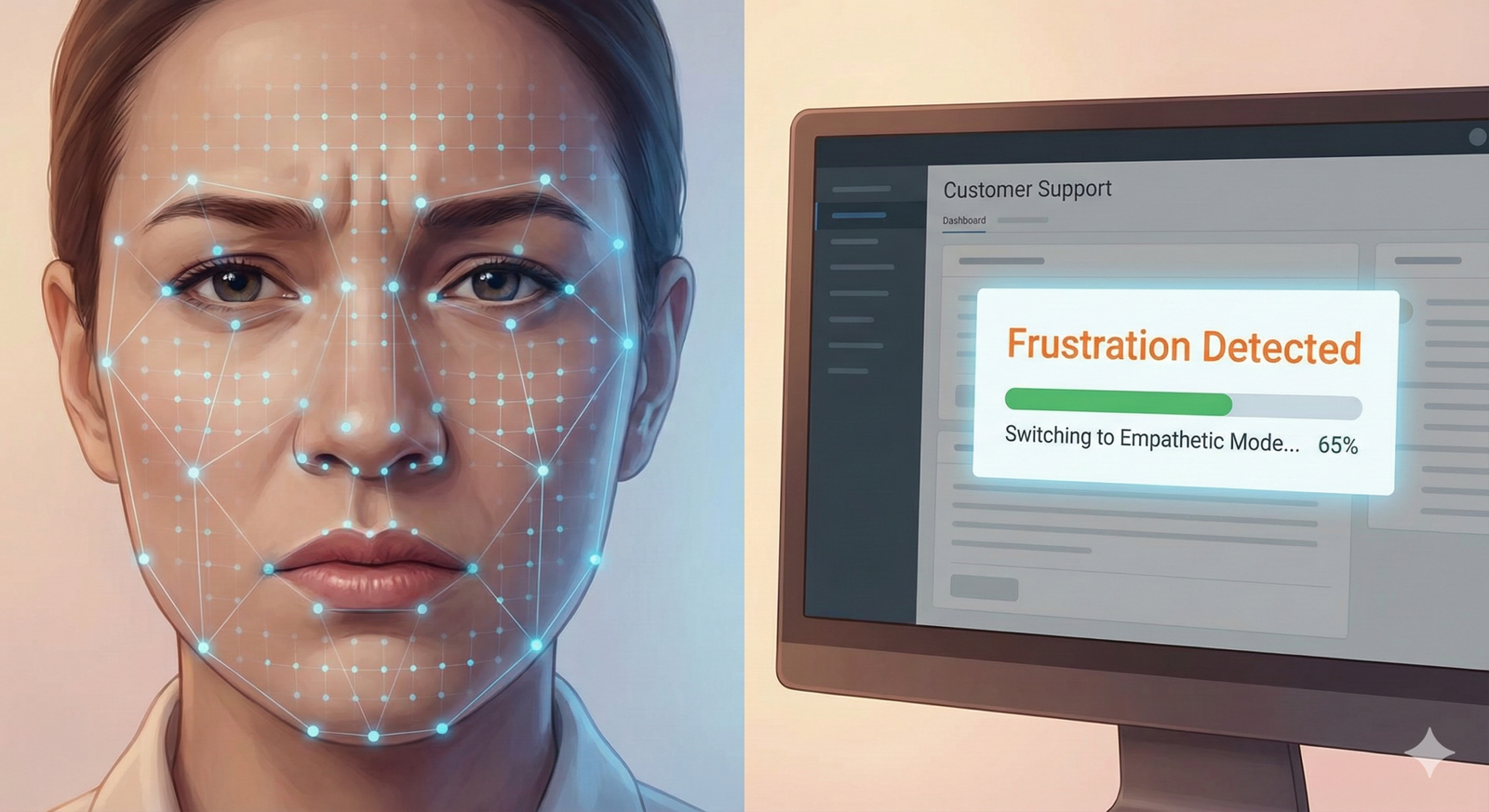

- GPT-4o: A natively multimodal model. Unlike previous iterations that used separate models glued together, GPT-4o (and its successors) is trained on text, vision, and audio jointly. This allows it to detect emotion in a user’s voice and respond with a sympathetic tone, processing the audio inputs directly rather than converting them to text first.

Google: Gemini

Google’s Gemini models were built from the ground up to be multimodal. They can reason across video and text simultaneously.

- Capabilities: Gemini can watch a video of someone drawing a physics problem on a board and solve it in real-time. This demonstrates high-level reasoning across modalities—translating visual handwriting into mathematical logic.

Meta: ImageBind

Meta released ImageBind, often described as an immense step toward “binding” modalities.

- Six Modalities: ImageBind connects text, image/video, audio, depth (3D), thermal (infrared), and IMU (movement sensors).

- The Zero-Shot Implication: Because all these are bound in a single vector space, you can theoretically use audio to generate a thermal map, or use movement data to retrieve images, even without specific training pairs for those obscure combinations.

6. Implementation Strategies for Business

For organizations looking to leverage multimodal zero-shot learning, the barrier to entry has lowered significantly, but strategy is key.

Who This Is For (And Who It Isn’t)

- This IS for: Companies with vast unstructured data (video archives, customer support audio logs, image banks) who want to make that data searchable and actionable without manual tagging.

- This IS for: Developers building “copilot” interfaces where users interact naturally via voice or images.

- This ISN’T for: Highly specialized numerical forecasting (e.g., high-frequency trading) where tabular data dominates and multimodal reasoning adds noise rather than signal.

The “Vector Database” Necessity

Implementing these models requires infrastructure to store embeddings.

- Tools: Vector databases like Pinecone, Weaviate, and Milvus are essential. They store the high-dimensional vectors generated by models like CLIP.

- Workflow:

  - Pass your images/audio through a multimodal encoder.

  - Store the resulting vectors in the database.

  - When a user queries (text or image), encode the query into a vector.

  - Perform a “nearest neighbor” search in the database to find matches.
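The workflow above can be sketched with a tiny in-memory index standing in for a real vector database. Everything here is an assumption made for illustration: the `InMemoryVectorIndex` class is hypothetical and is not the API of Pinecone, Weaviate, or Milvus; the file names are invented; and the vectors are hand-written placeholders for what a multimodal encoder would produce.

```python
import math


class InMemoryVectorIndex:
    """Brute-force cosine-similarity index (a stand-in for a vector database)."""

    def __init__(self):
        self._items = []  # list of (item_id, unit_vector)

    @staticmethod
    def _normalize(vector):
        norm = math.sqrt(sum(x * x for x in vector))
        return [x / norm for x in vector]

    def add(self, item_id, vector):
        self._items.append((item_id, self._normalize(vector)))

    def search(self, query_vector, top_k=2):
        q = self._normalize(query_vector)
        scored = [
            (sum(a * b for a, b in zip(q, vec)), item_id)
            for item_id, vec in self._items
        ]
        scored.sort(reverse=True)  # highest similarity first
        return [(item_id, score) for score, item_id in scored[:top_k]]


# Encode and store: these vectors are assumed to come from a multimodal encoder.
index = InMemoryVectorIndex()
index.add("clip_017.mp4", [0.9, 0.1, 0.0])
index.add("call_204.wav", [0.1, 0.9, 0.1])
index.add("photo_88.jpg", [0.0, 0.1, 0.9])

# Encode the query: assumed output of the same encoder for a user's text query.
query_vector = [0.8, 0.2, 0.1]

# Nearest-neighbor search.
results = index.search(query_vector, top_k=2)
for item_id, score in results:
    print(f"{item_id}: {score:.3f}")
```

A brute-force scan like this is fine for a few thousand items. Dedicated vector databases exist because they use approximate nearest-neighbor indexes to keep search fast at much larger scale.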

Using APIs vs. Fine-Tuning

- Zero-Shot (API): For most general-purpose business cases, off-the-shelf models (via APIs from OpenAI, Google, Anthropic, or Hugging Face) work well. They handle general concepts (cars, people, sentiment) out of the box.

- Fine-Tuning: If your domain is highly specific (e.g., identifying microscopic defects on a specific circuit board brand), zero-shot might struggle. You may need to fine-tune the multimodal model on a small set of your specific data to align its embeddings with your niche vocabulary.

7. Challenges and Ethical Considerations

While the capabilities are dazzling, the risks are equally multidimensional.

The Hallucination Multiplier

We know text models hallucinate (invent facts). Multimodal models can hallucinate across senses.

- Example: A model might describe an image of a generic flower as a specific rare orchid because the text prompt led it astray, or it might “hear” words in a noisy audio clip that weren’t spoken.

- Grounding: Ensuring the model is “grounded” in the input data rather than its training memory is a major area of active research.

Bias Amplification

Data on the internet is biased. Images of “CEOs” in training data are predominantly white men. When a multimodal model generates images or retrieves resumes based on zero-shot descriptions, it often amplifies these stereotypes.

- The Risk: If a recruitment tool uses zero-shot audio analysis to judge “confidence” in a candidate’s voice, it may be biased against certain accents or dialects that were underrepresented in the training audio.

Compute and Environmental Cost

Training these massive foundation models requires enormous energy. Furthermore, inference (running the model) is more expensive for multimodal tasks than for text. Processing video frames and audio spectrograms consumes significantly more GPU cycles than processing text tokens, impacting sustainability goals and cloud bills.
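A rough back-of-the-envelope calculation shows why visual input is heavier than text. The numbers below are assumptions chosen for illustration (224x224 frames, 16x16 patches, one token per patch, ten sampled frames, a 20-token prompt). Actual models and APIs tokenize images and video differently.

```python
def vision_token_count(num_frames, image_size=224, patch_size=16):
    """Tokens needed if every patch of every frame becomes one token."""
    patches_per_frame = (image_size // patch_size) ** 2
    return num_frames * patches_per_frame


text_tokens = 20                                   # assumed short text prompt
video_tokens = vision_token_count(num_frames=10)   # assumed 10 sampled frames
ratio = video_tokens / text_tokens

print(video_tokens)  # 1960
print(ratio)         # 98.0
```

Under these assumptions, ten frames cost roughly a hundred times as many tokens as the prompt that asks about them.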

Copyright and “Style” Theft

Zero-shot models can mimic the style of living artists or the sound of specific musicians.

- Legal Grey Area: Is it fair use to train on copyrighted songs so the model can understand the concept of “jazz”? Is it a violation to generate a new song that sounds exactly like a famous pop star? Courts globally are currently grappling with these questions.

8. The Future: Toward “Any-to-Any” Intelligence

Where is this heading? The trajectory is clear: the dissolution of “modalities” as distinct technical challenges.

Embodied Intelligence

The next frontier is taking these brains out of the server and putting them into bodies (robots). Multimodal zero-shot learning is the prerequisite for general-purpose robots that can navigate messy, noisy, unpredictable homes and factories.

Real-Time, On-Device Processing

Currently, most powerful multimodal models run in the cloud. The push is toward “Edge AI”—running efficient versions of these models on phones and laptops. This would allow for privacy-preserving AI that can see and hear (via your phone camera/mic) without sending data to a central server.

“Any-to-Any” Generation

We are moving toward systems where inputs and outputs are fluid.

- Current: Text-to-Image.

- Future: (Humming + Sketch) -> (Orchestrated Song + Music Video). The ability to combine partial inputs from various senses to create complete, complex outputs.

9. Common Pitfalls to Avoid

When adopting or experimenting with multimodal zero-shot models, avoid these common mistakes:

- Overestimating “Zero-Shot” Accuracy: Just because it can identify objects without training doesn’t mean it’s 100% accurate. Always keep a human in the loop for critical decisions (like medical diagnosis or safety inspections).

- Ignoring Latency: Multimodal inference is slow. Don’t expect real-time video analysis from a massive foundation model without significant optimization; otherwise, plan for noticeable lag.

- Neglecting Data Privacy: Sending audio and video to third-party APIs carries higher privacy risks than text. Biometric data (face, voice) is sensitive and often regulated (e.g., GDPR, CCPA).

- Assuming Text is Enough: Don’t rely solely on text prompts to steer these models. Use “image prompting” (providing a reference image) alongside text to significantly improve accuracy and style control.
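One simple way to keep a human in the loop is to auto-accept only high-confidence predictions and route the rest for review. The sketch below assumes the model returns a probability per label. The `route_prediction` helper and the `0.8` threshold are hypothetical and would need tuning on your own data, since model confidence scores are not guaranteed to be well calibrated.

```python
def route_prediction(probabilities, threshold=0.8):
    """Pick the top label and decide whether a human should check it."""
    label = max(probabilities, key=probabilities.get)
    confidence = probabilities[label]
    decision = "auto_accept" if confidence >= threshold else "human_review"
    return label, confidence, decision


# Hypothetical zero-shot outputs for two inspection images.
clear_case = {"no defect": 0.95, "scratch": 0.03, "crack": 0.02}
unclear_case = {"no defect": 0.45, "scratch": 0.40, "crack": 0.15}

print(route_prediction(clear_case))    # ('no defect', 0.95, 'auto_accept')
print(route_prediction(unclear_case))  # ('no defect', 0.45, 'human_review')
```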

Conclusion

The convergence of text, image, and audio into unified multimodal zero-shot models is not just a technical upgrade; it is a step change in AI utility. We have moved from models that act like calculators—precise but narrow—to models that act like interns—capable of looking, listening, reading, and synthesizing information to handle tasks they haven’t explicitly rehearsed.

For businesses, developers, and creators, the opportunity lies in the “spaces between.” It is no longer about just analyzing text or just classifying images. It is about the rich insights found when you connect a customer’s angry tone (audio) with their frustrated facial expression (video) and their complaint ticket (text).

As of 2026, the technology is accessible, powerful, and rapidly maturing. The next step is yours: identify the siloed data in your world—the lonely audio files, the unsearchable video archives—and explore how multimodal AI can unlock their value.

Next Steps

- Experiment: Try a multimodal API (like OpenAI’s Vision or Google’s Gemini) to analyze a simple set of images or audio files from your business.

- Audit Data: Look at your unstructured data. Do you have video or audio that is currently a “black box”? This is your prime candidate for multimodal zero-shot indexing.

- Learn: Familiarize yourself with vector databases, as they are the infrastructure that makes this technology usable at scale.

FAQs

What is the difference between multimodal and zero-shot learning?

Multimodal learning refers to the type of data an AI uses (e.g., seeing images AND reading text). Zero-shot learning refers to a model’s ability to handle tasks or categories it was not explicitly trained on. Multimodal zero-shot learning combines both: using multiple data types to perform new, untrained tasks.

Can zero-shot models replace supervised models?

Not entirely. Zero-shot models are “generalists.” They are great for broad tasks and handling the unexpected. However, for highly specific, repetitive tasks where 99.9% accuracy is required (like manufacturing defect detection), a specific supervised model (fine-tuned on that exact data) will usually outperform a zero-shot model.

How does audio processing fit into multimodal models?

Audio is typically converted into a visual representation called a spectrogram. The model then processes this spectrogram similarly to how it processes images, looking for patterns. It also uses text descriptions of sounds (e.g., “siren,” “laughter”) during training to learn the relationship between the sound waves and their meaning.
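A spectrogram can be sketched in a few lines: cut the signal into frames and measure how much of each frequency is present in every frame. The example below uses a naive discrete Fourier transform on a synthetic signal. The sample rate, frame size, and tone frequencies are arbitrary choices for illustration, and real audio pipelines typically add windowing, overlapping frames, and a perceptual frequency scale.

```python
import cmath
import math


def dft_magnitudes(frame):
    """Magnitude of each non-negative frequency bin of one frame (naive DFT)."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]


def spectrogram(signal, frame_size, hop):
    """One row of frequency magnitudes per time frame."""
    return [
        dft_magnitudes(signal[start:start + frame_size])
        for start in range(0, len(signal) - frame_size + 1, hop)
    ]


sample_rate = 64   # samples per second (tiny, for readability)
frame_size = 32    # half a second per frame, so each bin spans 2 Hz

# One second of audio: a 4 Hz tone followed by a 12 Hz tone.
signal = [math.sin(2 * math.pi * 4 * t / sample_rate) for t in range(32)]
signal += [math.sin(2 * math.pi * 12 * t / sample_rate) for t in range(32, 64)]

spec = spectrogram(signal, frame_size=frame_size, hop=frame_size)
peak_bins = [row.index(max(row)) for row in spec]
peak_hz = [b * sample_rate / frame_size for b in peak_bins]
print(peak_hz)  # [4.0, 12.0]
```

Each row of `spec` is one time slice and each column one frequency band, which is why the result can be handed to a model as if it were an image.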

Is multimodal AI expensive to run?

Generally, yes. Processing images and audio requires more computation than processing pure text, and on usage-billed APIs these inputs typically count as many more tokens. However, costs are dropping rapidly as models become more efficient and hardware improves.

What are some examples of multimodal zero-shot tasks?

- Visual Q&A: Asking “What color is the car?” about an image the model has never seen.

- Cross-Modal Search: Typing “a happy song with drums” and finding an audio track without any text tags.

- Zero-Shot Classification: Categorizing product images into buckets like “shoes,” “shirts,” and “hats” without training a specific classifier for those categories.
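The zero-shot classification example can be sketched as follows: embed one text prompt per category, embed the image, and turn the similarities into probabilities with a softmax. The vectors are hand-written placeholders for real encoder outputs, the prompt wording is only an example, and `temperature=0.07` is an assumed value.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_classify(image_vector, label_vectors, temperature=0.07):
    """Score an image embedding against one text embedding per candidate label."""
    labels = list(label_vectors)
    logits = [cosine(image_vector, label_vectors[label]) / temperature for label in labels]
    return dict(zip(labels, softmax(logits)))


# Placeholder text embeddings, one per category prompt.
label_vectors = {
    "a photo of shoes": [0.9, 0.1, 0.1],
    "a photo of a shirt": [0.1, 0.9, 0.1],
    "a photo of a hat": [0.1, 0.1, 0.9],
}
# Placeholder embedding of a product image.
image_vector = [0.2, 0.8, 0.2]

probabilities = zero_shot_classify(image_vector, label_vectors)
best_label = max(probabilities, key=probabilities.get)
print(best_label)
```

Adding a new category only requires adding a new prompt to `label_vectors`. No classifier is retrained.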

Do I need to know coding to use these models?

To build them? Yes. To use them? No. Many commercial tools (like ChatGPT Plus or Midjourney) are user-friendly interfaces for multimodal models. However, integrating them into business software usually requires Python programming knowledge and familiarity with APIs.

Why is “grounding” important in multimodal AI?

Grounding ensures the AI’s response is actually based on the image or audio provided, rather than just making things up based on its internal knowledge. For example, if you upload a picture of your own dog, you want the AI to describe that particular dog, not a generic dog recalled from its training memory.

What is CLIP?

CLIP (Contrastive Language-Image Pre-training) is a foundational model developed by OpenAI. It was a breakthrough because it learned to associate images with text captions from the internet, allowing it to perform zero-shot image classification that, on ImageNet, matched a supervised ResNet-50 without using any of that benchmark’s labeled training examples.

References

- OpenAI. (2021). CLIP: Connecting Text and Images. OpenAI Research. https://openai.com/research/clip

- Google DeepMind. (2023). Gemini: A Family of Highly Capable Multimodal Models. Google DeepMind Technical Report. https://deepmind.google/technologies/gemini/

- Meta AI. (2023). ImageBind: Holistic AI Learning Across Six Modalities. Meta AI Research. https://ai.meta.com/blog/imagebind-six-modalities-binding-ai/

- Vaswani, A., et al. (2017). Attention Is All You Need. Advances in Neural Information Processing Systems (NeurIPS). https://arxiv.org/abs/1706.03762

- Radford, A., et al. (2021). Learning Transferable Visual Models From Natural Language Supervision. OpenAI. https://arxiv.org/abs/2103.00020

- Hugging Face. (n.d.). Multimodal Models Task Guide. Hugging Face Documentation. https://huggingface.co/tasks/multimodal

- Anthropic. (2024). Claude 3 Model Family: Multimodal Capabilities. Anthropic Research. https://www.anthropic.com/news/claude-3-family

- Pinecone. (n.d.). What is Vector Search? Pinecone Learning Center. https://www.pinecone.io/learn/vector-search-basics/

- Yin, S., et al. (2023). A Survey on Multimodal Large Language Models. arXiv preprint. https://arxiv.org/abs/2306.13549

- World Economic Forum. (2023). The Future of Jobs Report 2023. https://www.weforum.org/reports/the-future-of-jobs-report-2023/