The narrative surrounding artificial intelligence often oscillates between two extremes: a utopian future where machines do all the work, and a dystopian anxiety where humans are rendered obsolete. However, a third, more pragmatic reality is emerging in the modern workplace—one that doesn’t replace the human, but rather elevates them. This is the era of human-AI collaboration, often realized through the concept of “centaur teams.”

In competitive chess, a “centaur” is a team composed of a human player and an AI program working in tandem. In the mid-2000s, such hybrid teams famously outperformed not only unassisted humans but also strong standalone chess engines. This principle is now migrating from the chessboard to the boardroom, the hospital, and the design studio. As of January 2026, the most successful organizations are not those that automate everything, but those that learn how to combine human intuition, ethics, and strategic context with the raw processing power of AI.

This guide explores the mechanics of centaur teams, explains why they outperform pure automation, and provides a strategic framework for implementing human-AI collaboration in your organization.

Key Takeaways

- Definition: A “centaur team” combines human intuition and strategic oversight with AI’s speed and data processing capabilities.

- Performance: Research indicates that hybrid human-AI teams can outperform both humans alone and AI alone, particularly in tasks requiring high nuance or creativity, though the gain depends heavily on the task and on how the collaboration is structured.

- The “Jagged Frontier”: AI capabilities are uneven; humans are essential for navigating the gaps where AI competence drops off (edge cases, ethical dilemmas).

- Skill Shift: Success requires a shift from rote execution to “prompt engineering,” “output verification,” and high-level strategy.

- Risk Mitigation: Human-in-the-loop workflows can substantially reduce the risks of AI hallucinations, bias, and security failures.

- Implementation: Building a centaur team isn’t just about buying software; it requires redesigning workflows to optimize the handoff between human and machine.

Who This Is For (And Who It Isn’t)

This guide is designed for:

- Business Leaders and Executives looking to integrate AI without displacing their workforce or sacrificing quality.

- Project Managers and Team Leads who need practical frameworks for managing hybrid teams of humans and digital agents.

- Knowledge Workers seeking to understand how to position themselves as “pilots” of AI rather than competitors to it.

- HR Professionals designing upskilling programs for the AI-augmented workforce.

This guide is NOT for:

- Those looking for a technical manual on coding specific neural networks.

- Readers seeking a “set it and forget it” automation solution that removes humans entirely.

What Are “Centaur Teams”?

To understand the future of work, we must look back at a pivotal moment in the history of artificial intelligence. In 1997, world chess champion Garry Kasparov famously lost to IBM’s Deep Blue. For many, this signaled the beginning of the end for human intellectual dominance. However, Kasparov didn’t retreat. Instead, in 1998 he helped launch a format called “Advanced Chess,” in which each human player was paired with a computer during the game.

Later open “freestyle” tournaments took the idea further: players could use any combination of people and machines they wanted. The result was revealing. The winner of a 2005 freestyle tournament was not a grandmaster or a supercomputer, but a pair of amateur chess players using three ordinary computers. Their ability to “coach” the machines, knowing when to trust the computer and when to override it, allowed them to defeat stronger human opponents, including grandmasters who also had computer support. These human-machine pairs came to be known as “Centaurs,” a term Kasparov did much to popularize.

Defining the Modern Centaur

In a modern business context, a centaur team is a workflow structure where humans and AI agents have distinct, complementary roles. It differs significantly from simple automation:

- Automation: The machine replaces the human for a specific task (e.g., a robot arm welding a car part). The human is removed from the loop.

- Centaur (Augmentation): The human remains the “pilot” or “architect.” The AI acts as a “copilot” or “engine.” The human provides the intent, context, and final judgment; the AI provides the generation, calculation, and pattern recognition.

Centaur vs. Cyborg

While often used interchangeably, some researchers distinguish between “Centaurs” and “Cyborgs” based on the style of integration:

- Centaurs tend to divide and conquer. They hand off a clear sub-task to the AI, let it work, and then re-engage to evaluate the result. Ideally, they switch between “human mode” and “AI mode” strategically.

- Cyborgs tend to integrate the AI more continuously, intertwining their thought process with the machine’s output in real time (e.g., a writer who edits a sentence while the AI is simultaneously predicting the next few words).

Both approaches fall under the umbrella of human-AI collaboration, but the “Centaur” model is arguably the more robust framework for enterprise implementation today because it emphasizes accountability and a clear division of labor.

The Limits of Pure Automation: Why We Still Need Humans

The drive for pure automation—removing humans entirely—often hits a wall known as the “Jagged Frontier.”

The Jagged Frontier

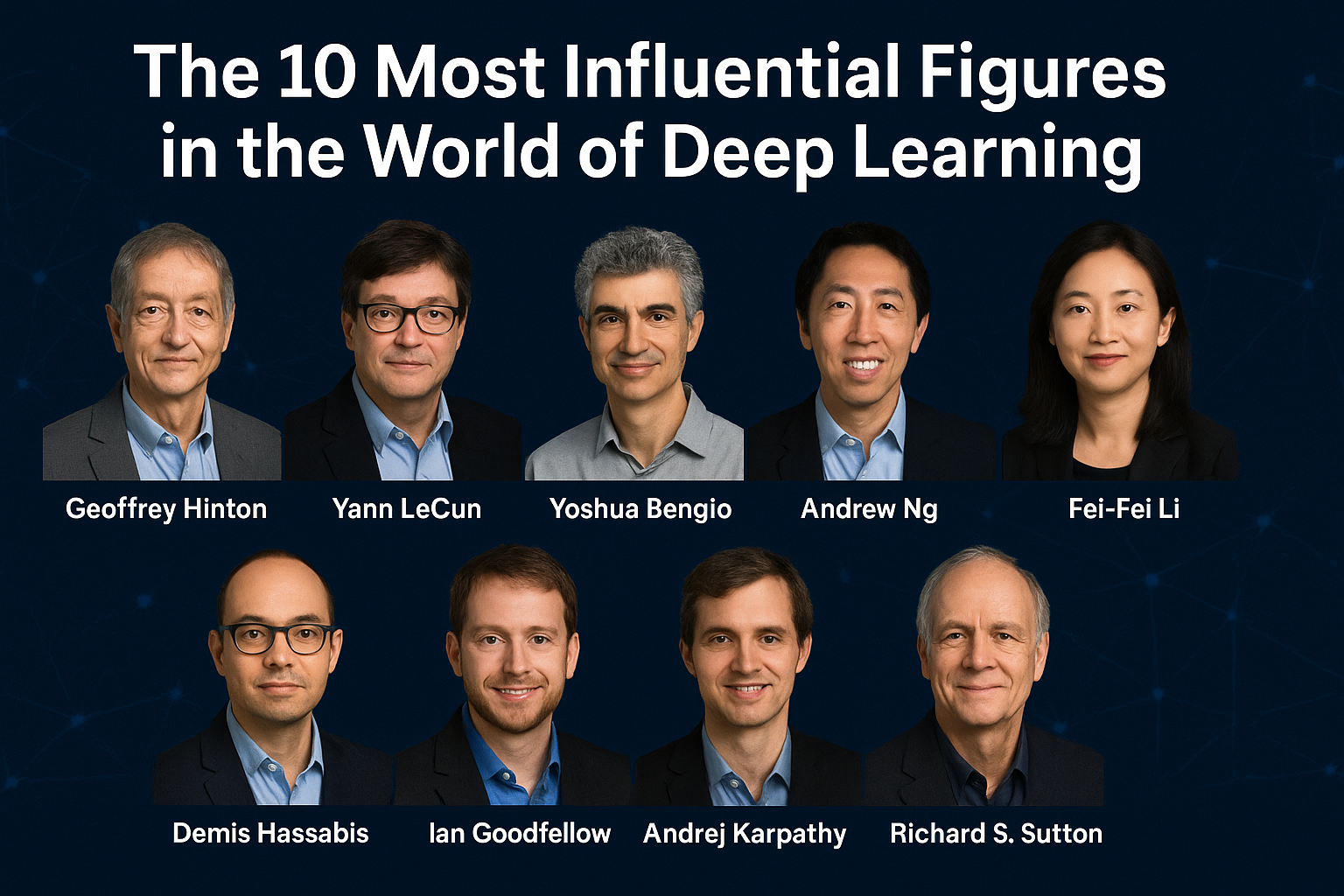

A landmark 2023 field experiment introduced the concept of the “jagged frontier” of AI capabilities. It was run by researchers from Harvard Business School and other institutions, with consultants at the Boston Consulting Group (BCG). Unlike previous technological waves that automated “easy” manual tasks first, generative AI is uneven. It might excel at writing a complex sonnet (a hard task for many humans) but fail at simple counting or at fact-checking current events (easy tasks for humans). In that study, consultants who used AI on a task outside the frontier were less likely to reach a correct answer than those who worked without it.

Because the frontier of capability is jagged and constantly shifting, pure automation is risky. An AI might, for example, perform brilliantly on 90% of tasks and fail catastrophically on the remaining 10% without “knowing” it has failed.

The Context Gap

AI models are probabilistic engines. They predict the next likely token based on training data. They do not “understand” the world in the way humans do. They lack:

- Situational Awareness: An AI customer service agent doesn’t know that a hurricane just hit the customer’s region unless explicitly fed that data. A human operator instinctively understands the emotional context of such an event.

- Institutional Memory & Politics: Unless it is explicitly given that context, an AI doesn’t understand the unspoken political dynamics of a client meeting or the specific “voice” a brand has cultivated over decades.

- Moral Accountability: When an AI makes a biased decision, it cannot be held accountable. A human in the loop provides the necessary ethical backstop.

The Science of Synergy: How Collaboration Boosts Performance

When humans and AI collaborate effectively, they achieve “Hybrid Intelligence,” a state where the collective performance exceeds the capabilities of either entity working alone.

1. Complementary Cognitive Strengths

The most successful centaur teams map tasks to the entity best suited for them.

- AI Strengths:

- Scale: Processing thousands of documents far faster than any person could.

- Generation: Producing 50 variations of an image or headline in seconds.

- Consistency: Following a syntax rule without fatigue.

- Human Strengths:

- Curation: Selecting the best option from the 50 generated variations.

- Context: Understanding why a customer is angry, not just that they are angry.

- Novelty: Connecting two disparate concepts that have never been connected in the training data.

2. Cognitive Offloading

Centaur teams allow humans to offload “low-level” cognitive tasks to the AI, freeing up mental bandwidth for “high-level” strategy.

- Example: A software engineer uses an AI copilot to write boilerplate code (offloading). This preserves their mental energy for designing the system architecture and ensuring security compliance (high-level value).

3. Variance Reduction

Humans are inconsistent: our performance fluctuates with sleep, mood, and hunger. AI is more consistent but prone to specific types of errors, such as hallucinations (confident but false output). By pairing them, the human catches the AI’s hallucinations, and the AI steadies the human’s inconsistency.

Real-World Use Cases of Centaur Teams

Human-AI collaboration is not theoretical; it is already reshaping major industries.

Healthcare: The Radiologist Centaur

In medical imaging, pure automation (AI diagnosing X-rays alone) has faced regulatory and ethical hurdles because of false positives and false negatives. However, a number of studies have found that a radiologist assisted by AI can outperform either one working alone, although results vary across tasks and tools.

- The Workflow: The AI scans the image and highlights “regions of interest” (potential tumors). The human radiologist reviews these highlights.

- The Benefit: The AI reduces the chance that the human misses a subtle anomaly due to fatigue. The human filters out the AI’s “false alarms” that would otherwise cause unnecessary patient anxiety.

Software Development: The 10x Developer?

The adoption of AI coding assistants (like GitHub Copilot or Cursor) is one of the most widespread examples of centaur teams.

- The Workflow: The developer writes a comment describing the function. The AI generates the code block. The developer reviews, tweaks, and integrates it.

- The Benefit: Developers report spending less time searching for syntax on Google and more time solving architectural problems. It turns every developer into a technical lead of their own digital intern.

Marketing & Content: The Creative Director Model

In the past, a copywriter had to write every word. In a centaur team, the copywriter becomes a “Creative Director.”

- The Workflow: The human researches the audience and defines the angle. They prompt the AI to generate five different drafts. The human mixes the best metaphors from Draft A and the structure of Draft B, edits for tone, and finalizes the piece.

- The Benefit: Content velocity increases, but more importantly, “blank page syndrome” is largely eliminated.

Legal Services: The Augmented Associate

Lawyers use AI to review contracts and search for precedents.

- The Workflow: AI scans thousands of pages of discovery documents to flag relevant keywords or clauses. The human lawyer reviews the flagged documents to build the legal argument.

- The Benefit: What used to take a team of junior associates weeks can now take days, allowing the firm to focus on legal strategy rather than document review.

Key Components of Successful Human-AI Collaboration

Building a centaur team requires more than a subscription to an LLM (large language model). It requires supporting infrastructure.

1. Trust Calibration

One of the hardest challenges is getting the human to trust the AI the right amount.

- Under-trust: The human ignores helpful AI suggestions because they distrust the technology, negating the benefit.

- Over-trust (Automation Bias): The human blindly accepts the AI’s output without verifying it. This is dangerous.

- Solution: Training must focus on “skeptical usage.” Users should be taught exactly where the AI tends to fail so they remain vigilant.

2. Frictionless Handoffs

The interface must allow for easy back-and-forth. If a user has to copy and paste text between three different windows to get AI help, the cognitive load is too high. Integrated “copilots” that live inside the working environment (like sidebars in word processors or assistants built into coding IDEs) are essential for seamless collaboration.

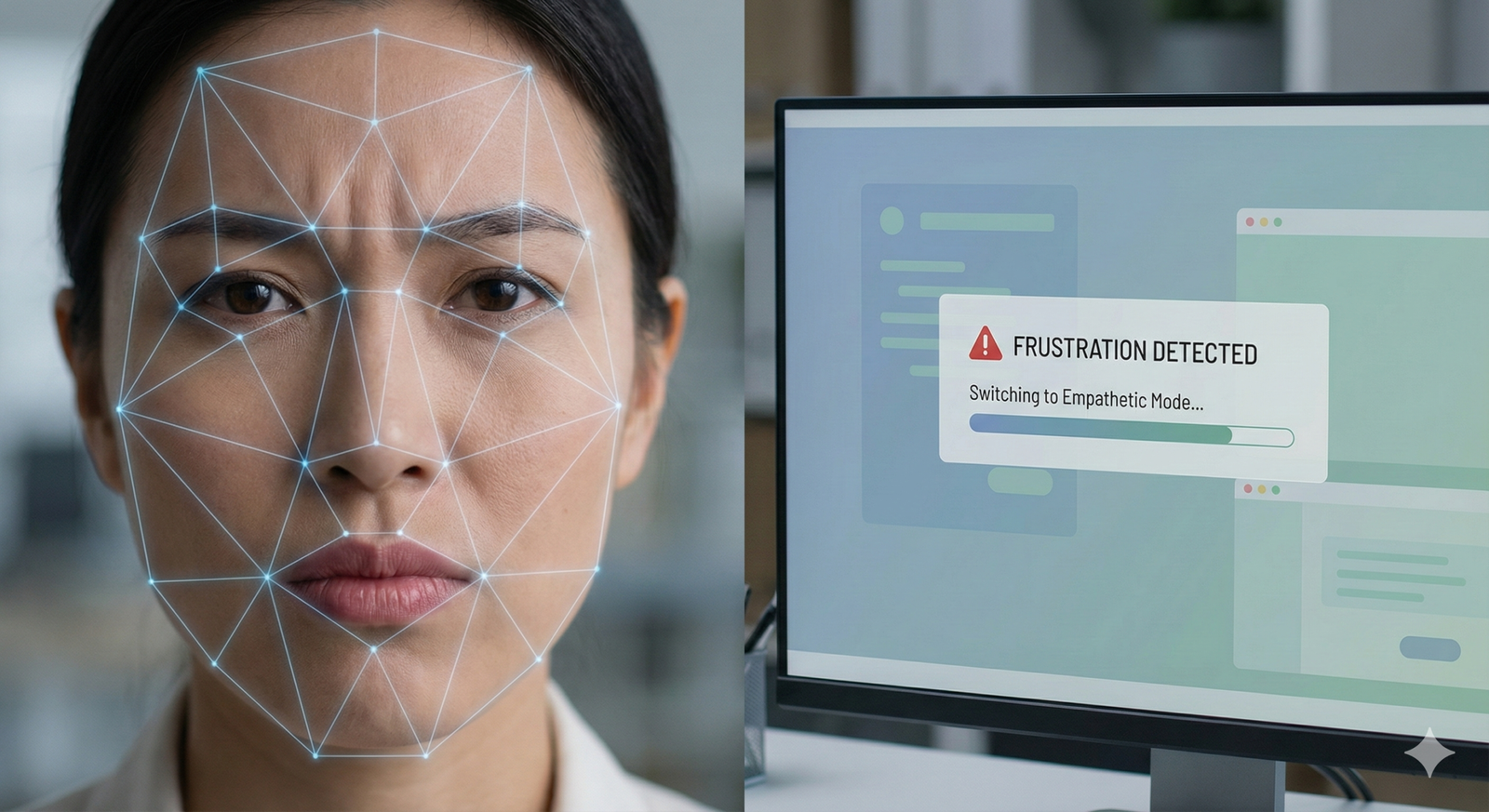

3. The “Human-in-the-Loop” (HITL) Protocol

Standard operating procedures (SOPs) must dictate when a human must intervene.

- Example: In an automated customer support workflow, any conversation in which the system detects anger or a legal threat should be routed automatically to a human agent.
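A rule like this can be expressed as a small routing function. The following is a minimal Python sketch that assumes simple keyword matching. The trigger lists and the `route_message` name are hypothetical. A production system would more likely rely on a trained sentiment or intent classifier.

```python
import re

# Hypothetical trigger patterns; a real deployment would more likely use a
# trained sentiment/intent classifier instead of fixed keyword lists.
ANGER_PATTERNS = [r"\bfurious\b", r"\bunacceptable\b", r"\bworst\b"]
LEGAL_PATTERNS = [r"\blawyer\b", r"\bsue\b", r"\blawsuit\b", r"\blegal action\b"]


def route_message(text: str) -> str:
    """Return "human" if the message trips an escalation rule, else "ai"."""
    lowered = text.lower()
    for pattern in ANGER_PATTERNS + LEGAL_PATTERNS:
        # \b word boundaries keep "sue" from matching inside "issue".
        if re.search(pattern, lowered):
            return "human"
    return "ai"


if __name__ == "__main__":
    print(route_message("Where is my order?"))                  # ai
    print(route_message("I will sue you if this isn't fixed"))  # human
```

Keeping the escalation rule in one explicit function makes the SOP auditable: reviewers can see exactly which signals hand a conversation to a person.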

Strategic Framework for Implementation

If you are a leader looking to build centaur teams, follow this step-by-step framework.

Phase 1: Task Decomposition

Do not try to “apply AI to marketing.” That is too broad. Break jobs down into individual tasks.

- Example Job: Social Media Manager.

- Tasks: 1. Brainstorm ideas. 2. Write captions. 3. Design graphics. 4. Schedule posts. 5. Analyze metrics. 6. Respond to comments.

- Centaur Opportunity: AI can heavily assist with 1, 2, 3, and 5. Humans should lead on 6 (relationship building) and final approval of 2 and 3.
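One way to make a decomposition concrete is to record each task with its assigned roles, so approval gates are explicit rather than implied. Below is a hypothetical Python encoding of the Social Media Manager example. The `Task` fields and helper names are illustrative. Because the text does not assign task 4, it is left without AI assistance here as an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """One task in a decomposed job (hypothetical schema)."""
    name: str
    ai_assist: bool = False       # AI drafts, generates, or analyzes
    human_approval: bool = False  # a human must sign off before it ships
    human_lead: bool = False      # a human owns the task end to end


# The Social Media Manager example from Phase 1.
SOCIAL_MEDIA_MANAGER = [
    Task("Brainstorm ideas", ai_assist=True),
    Task("Write captions", ai_assist=True, human_approval=True),
    Task("Design graphics", ai_assist=True, human_approval=True),
    Task("Schedule posts"),  # not assigned in the text; left unassisted here
    Task("Analyze metrics", ai_assist=True),
    Task("Respond to comments", human_lead=True),
]


def approval_gates(tasks: list[Task]) -> list[str]:
    """Names of AI-assisted tasks whose output needs human sign-off."""
    return [t.name for t in tasks if t.ai_assist and t.human_approval]


def human_led(tasks: list[Task]) -> list[str]:
    """Names of tasks a human should own."""
    return [t.name for t in tasks if t.human_lead]


if __name__ == "__main__":
    print(approval_gates(SOCIAL_MEDIA_MANAGER))  # ['Write captions', 'Design graphics']
    print(human_led(SOCIAL_MEDIA_MANAGER))       # ['Respond to comments']
```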

Phase 2: Tool Selection & Integration

Choose tools that fit the “Centaur” model—those that allow for editing and iteration. Avoid “black box” tools that give you a final result with no ability to modify the steps.

- Criteria: Look for tools with “regenerate,” “edit,” and “explain” features.

Phase 3: Pilot Training (The New Upskilling)

Training employees to use AI is not just about prompt engineering. It covers:

- Decomposition: Teaching staff how to break their own problems down so AI can solve them.

- Verification: Teaching staff how to fact-check AI.

- Iterative Prompting: How to converse with the AI to refine outputs.

Phase 4: Feedback Loops

Create a mechanism where the human corrects the AI, and that correction improves the system.

- Enterprise Level: If a human edits an AI-generated email, that edit can be captured and used to refine prompts or fine-tune the model over time.
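Here is a minimal Python sketch of the capture step, assuming the AI draft and the human's final version are both available as plain text. `capture_edit` and `append_record` are hypothetical helpers built on the standard library's `difflib`. The JSON-lines storage format is also an assumption. How the records later feed prompt revision or fine-tuning is left to the surrounding system.

```python
import difflib
import json
from datetime import datetime, timezone


def capture_edit(ai_draft: str, human_final: str) -> dict:
    """Build a feedback record comparing an AI draft with the human's final text."""
    # ratio() is 1.0 for identical strings and falls toward 0.0 as they diverge.
    similarity = difflib.SequenceMatcher(None, ai_draft, human_final).ratio()
    diff = list(difflib.unified_diff(
        ai_draft.splitlines(), human_final.splitlines(),
        fromfile="ai_draft", tofile="human_final", lineterm=""))
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "ai_draft": ai_draft,
        "human_final": human_final,
        "similarity": round(similarity, 3),
        "edited": ai_draft != human_final,
        "diff": diff,  # line-level changes the human made
    }


def append_record(record: dict, path: str) -> None:
    """Append one record as a JSON line (hypothetical storage format)."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
```

Storing both versions alongside the diff keeps the raw material available whether the team later chooses to tune prompts, build evaluation sets, or fine-tune.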

Common Mistakes and Pitfalls

Even with good intentions, organizations often stumble when implementing hybrid teams.

1. Falling Asleep at the Wheel

When AI is correct 95% of the time, humans tend to zone out. This makes the 5% failure rate catastrophic because the human is not paying attention when it happens.

- Mitigation: Introduce “spot checks” or deliberate friction where the human is forced to actively approve a high-stakes decision.
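Both mitigations can be combined in one review policy. High-stakes outputs always require explicit approval, and a random sample of routine outputs is pulled for spot checks. The Python sketch below is hypothetical: `needs_human_review` and the sampling rate are illustrative choices, not a standard.

```python
import random


def needs_human_review(is_high_stakes: bool, spot_check_rate: float,
                       rng: random.Random) -> bool:
    """Decide whether an AI output must be reviewed by a human before release.

    High-stakes items always need explicit approval (deliberate friction);
    routine items are sampled at spot_check_rate to keep reviewers engaged.
    """
    if not 0.0 <= spot_check_rate <= 1.0:
        raise ValueError("spot_check_rate must be between 0 and 1")
    if is_high_stakes:
        return True  # never auto-release a high-stakes decision
    return rng.random() < spot_check_rate  # random spot check for routine work


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so the audit sample is reproducible
    sampled = sum(needs_human_review(False, 0.1, rng) for _ in range(1000))
    print(f"{sampled} of 1000 routine outputs flagged for spot checks")
```

With a rate of 0.1, roughly one routine output in ten is pulled for review. Because the sample is random, reviewers cannot predict which outputs will be checked.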

2. Skill Atrophy

If junior employees use AI to do all the basic work, they may never develop the deep expertise required to become senior leaders who can evaluate the AI.

- Mitigation: Run “unplugged” training sessions in which juniors perform tasks manually to build foundational understanding.

3. The “Good Enough” Trap

Centaur teams can produce average work at lightning speed. There is a risk that the volume of “okay” content drowns out the need for “exceptional” content.

- Mitigation: Set quality standards higher than before. Since the baseline is now easier to reach, the human’s value-add must be pushing the work from “good” to “great.”

4. Ambiguous Accountability

If a centaur team makes a mistake, who is to blame? The user? The AI vendor? The IT department?

- Mitigation: Establish a clear policy: The human operator is always accountable for the final output. The AI is a tool, like a calculator; if the bridge collapses, you blame the engineer, not the calculator.

Measuring Success in Hybrid Teams

Old metrics like “hours worked” or “lines of code written” are obsolete in the age of human-AI collaboration.

New Metrics to Watch

- Velocity to Value: How fast does an idea become a finished product?

- Correction Rate: How often does the human have to intervene significantly? (A high correction rate might mean the AI tool is poor; a near-zero rate might mean the human is over-trusting).

- Employee Satisfaction: Do employees feel “superpowered” or do they feel like “robot babysitters”? Centaur teams should feel empowering.

- Novelty Score: Is the team producing more innovative solutions than before, or just more of the same solutions?
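The correction rate can be computed from per-output similarity scores between the AI draft and the final version. Such scores could come, for example, from a diff tool like Python's `difflib.SequenceMatcher`. The sketch below is hypothetical. The 0.8 cutoff for a “significant” correction and the over-trust and poor-tool thresholds are assumptions to tune for your own workflow.

```python
def correction_rate(similarities: list[float], threshold: float = 0.8) -> float:
    """Share of AI outputs that a human changed significantly.

    An output counts as significantly corrected when its draft-to-final
    similarity falls below `threshold` (an assumed cutoff).
    """
    if not similarities:
        raise ValueError("no outputs to measure")
    corrected = sum(1 for s in similarities if s < threshold)
    return corrected / len(similarities)


def interpret(rate: float, low: float = 0.02, high: float = 0.5) -> str:
    """Map a correction rate to the readings described above (assumed cutoffs)."""
    if rate <= low:
        return "near zero: check for over-trust"
    if rate >= high:
        return "high: the AI tool may be a poor fit"
    return "in range"


if __name__ == "__main__":
    scores = [1.0, 0.95, 0.6, 0.99, 0.4]  # illustrative similarity scores
    rate = correction_rate(scores)
    print(rate, interpret(rate))  # 0.4 in range
```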

The Future: From Chatbots to Agents

As of 2026, we are moving from “Chatbot” interfaces to “Agentic” workflows. In a chatbot model, the human prompts, and the bot answers. In an agentic model, the human gives a goal (“Plan a marketing campaign for product X”), and the AI agents break it down, research, draft, and present a plan for review.

In this future, the “Centaur” relationship evolves. The human becomes less of a co-author and more of a manager or executive editor. The collaboration shifts from sentence-by-sentence interaction to goal-oriented supervision. However, the core principle remains: The human provides the intent and judgment; the machine provides the execution and scale.
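The supervisory pattern described above can be sketched as a loop: an agent plans and drafts, and a human approves each result. This is a minimal Python sketch under stated assumptions. `plan`, `execute`, and `approve` are hypothetical callables standing in for real agent calls and a human reviewer, and rejected steps are simply returned for revision.

```python
from typing import Callable


def run_agentic_workflow(
    goal: str,
    plan: Callable[[str], list[str]],
    execute: Callable[[str], str],
    approve: Callable[[str, str], bool],
) -> tuple[dict[str, str], list[str]]:
    """Break a goal into steps, draft each step, and gate every draft on approval.

    Returns the approved drafts keyed by step, plus the steps sent back for revision.
    """
    approved: dict[str, str] = {}
    needs_revision: list[str] = []
    for step in plan(goal):       # agent breaks the goal down
        draft = execute(step)     # agent researches and drafts
        if approve(step, draft):  # human acts as executive editor
            approved[step] = draft
        else:
            needs_revision.append(step)
    return approved, needs_revision


if __name__ == "__main__":
    # Stubs standing in for a real agent and a real human reviewer.
    def fake_plan(goal: str) -> list[str]:
        return ["research audience", "draft messaging", "propose budget"]

    def fake_execute(step: str) -> str:
        return f"draft for: {step}"

    def reviewer(step: str, draft: str) -> bool:
        return step != "propose budget"

    ok, redo = run_agentic_workflow(
        "Plan a marketing campaign for product X", fake_plan, fake_execute, reviewer)
    print(sorted(ok))  # ['draft messaging', 'research audience']
    print(redo)        # ['propose budget']
```

The human never writes the drafts here, but nothing leaves the loop without passing through `approve`. That is the intent-and-judgment role described above.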

Related Topics to Explore

- Prompt Engineering for Business: Techniques for getting the best outputs from LLMs.

- AI Ethics and Governance: Frameworks for ensuring responsible AI use.

- The Future of Remote Work: How AI agents impact distributed teams.

- Cognitive Load Theory: Understanding how AI tools affect mental energy.

- Agentic AI Workflows: Moving beyond chat to autonomous agents.

Conclusion

The “Man vs. Machine” narrative is a false dichotomy. The future belongs to the “Man plus Machine.” Centaur teams represent the optimal path forward for organizations that want to harness the explosive power of AI without losing the nuance, accountability, and strategic depth that only humans can provide.

By understanding the limits of pure automation and leaning into the strengths of hybrid intelligence, we can create a workforce that is not only more productive but also more creative and fulfilled. The goal is not to build a machine that can replace a human, but to build a human-machine team that can achieve what neither could alone.

Next Steps for You:

- Audit your current workflows: Identify one process where “pure automation” is failing or where humans are bogged down by rote tasks.

- Run a “Centaur Pilot”: Unlike a software pilot, which tests a tool, this tests a workflow in which a human is explicitly trained to use AI as a partner for one specific task.

- Measure the gap: Compare the output quality and speed of the centaur team against both the unassisted human and the purely automated attempt.

FAQs

What is the difference between “Human-in-the-loop” and a “Centaur Team”?

“Human-in-the-loop” (HITL) is a broad technical term often referring to humans training models or verifying data. A “Centaur Team” is a specific type of HITL workflow focused on real-time problem solving and creativity, where the human and AI work together dynamically to achieve a superior result.

Did Garry Kasparov actually coin the term “Centaur”?

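He is the person most closely associated with the term and did much to popularize it. After losing to Deep Blue, Kasparov launched “Advanced Chess” in 1998, pairing human players with computers. He later cited open “freestyle” tournaments as evidence that human-machine teams (Centaurs) played the highest level of chess, beating both standalone humans and standalone computers.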

Can Centaur teams work in creative fields?

Absolutely. In fact, creative fields are where Centaur teams often shine brightest. Artists and writers use AI to generate raw materials, mood boards, or drafts, which they then refine, curate, and finalize. This allows for higher creative output and exploration of ideas that might be too time-consuming to execute manually.

What is the biggest risk of Centaur teams?

The biggest risk is “automation bias” or complacency. If the AI performs well 99% of the time, the human operator may stop critically evaluating the output, leading to errors slipping through during the 1% of cases where the AI hallucinates or fails.

Do I need to be a programmer to be part of a Centaur team?

No. Modern Generative AI tools interact via natural language. The skill set required is not coding, but “prompt engineering”—the ability to clearly articulate instructions, provide context, and iterate based on feedback.

Will Centaur teams eventually be replaced by pure automation?

For some repetitive, low-stakes tasks, yes. However, for complex, high-stakes, or novel tasks, the “jagged frontier” of AI suggests that humans will remain essential for context, ethics, and strategic direction for the foreseeable future. The role of the human will shift, but not disappear.

How do we handle data privacy in Centaur teams?

Data privacy is critical. Organizations must ensure that the AI tools used by Centaur teams are enterprise-grade and do not train their public models on the company’s proprietary data. Strict data governance policies must be in place.

Is “Centaur” the only term for this?

No. You may also hear terms like “Hybrid Intelligence,” “Augmented Intelligence,” “Symbiotic AI,” or “Human-Machine Teaming.” They all describe roughly the same concept of collaborative effort.

References

- Dell’Acqua, F., McFowland III, E., Mollick, E. R., et al. (2023). Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality. Harvard Business School Working Paper No. 24-013.

- Kasparov, G. (2017). Deep Thinking: Where Machine Intelligence Ends and Human Creativity Begins. PublicAffairs.

- Boston Consulting Group (BCG). (2023). AI at Work: What People Are Saying. BCG Henderson Institute.

- Massachusetts Institute of Technology (MIT). (2024). The Impact of Generative AI on Highly Skilled Work. MIT Sloan Management Review.

- Iansiti, M., & Lakhani, K. R. (2020). Competing in the Age of AI: Strategy and Leadership When Algorithms and Networks Run the World. Harvard Business Review Press.

- Microsoft. (2023). Will AI Fix Work? Work Trend Index Annual Report. Microsoft Corporation.

- Nature Medicine. (2023). Biomedical imaging and the role of artificial intelligence: a hybrid approach.

- Nielsen Norman Group. (2024). AI and the Future of Work: A User Experience Perspective.

- Stanford HAI. (2024). Generative AI: Perspectives from the Stanford Institute for Human-Centered AI.

- World Economic Forum. (2025). The Future of Jobs Report 2025.