Disclaimer: This article explores the technological applications of generative AI in healthcare. It is for informational purposes only and does not constitute medical advice, diagnosis, or treatment. Always seek the advice of a qualified health provider with any questions you may have regarding a medical condition.

The integration of generative AI in healthcare represents a fundamental shift from reactive care to proactive, personalized medicine. While traditional artificial intelligence has long been used to classify data—telling a doctor whether a tumor is benign or malignant—generative AI goes a step further. It has the capacity to create new data, simulate biological scenarios, and synthesize vast amounts of unstructured medical records into coherent, actionable insights. This capability is rapidly transforming how clinicians approach diagnostics and how researchers develop predictive models for complex diseases.

As of January 2026, healthcare systems globally are moving beyond pilot programs to integrate these tools into daily workflows. From reconstructing high-fidelity medical images from low-quality scans to predicting a patient’s unique response to a specific drug therapy, the potential for improved patient outcomes is immense. However, this power comes with significant responsibility regarding patient privacy, algorithmic bias, and clinical accuracy.

This guide provides a comprehensive overview of how generative AI is reshaping the medical landscape, specifically within the domains of personalized diagnostics and predictive medicine.

Key Takeaways

- Beyond Classification: Generative AI creates new insights, such as synthetic patient data or reconstructed imaging, rather than just sorting existing data.

- Personalized Precision: Algorithms can now tailor diagnostic procedures and treatment plans to an individual’s genetic and lifestyle profile.

- Predictive Power: AI models identify early warning signs of conditions like sepsis or heart failure hours or days before clinical symptoms appear.

- Efficiency Gains: Automating clinical documentation and summarizing patient histories frees up significant physician time for direct patient care.

- Human-in-the-Loop: Despite technological advances, physician oversight remains critical to verify AI outputs and prevent “hallucinations.”

- Data Privacy: The use of synthetic data helps researchers train models without compromising real patient confidentiality.

Who This Is For (And Who It Isn’t)

This guide is designed for healthcare professionals, medical researchers, hospital administrators, and med-tech investors who want a deep understanding of the practical applications and strategic implications of generative AI. It is also suitable for informed patients seeking to understand the technology behind modern care.

It is not a technical manual for coding AI algorithms, nor is it a guide to replacing medical professionals. The focus is on augmentation and clinical decision support.

Defining Generative AI in the Medical Context

To understand the impact of generative AI in healthcare, it is necessary to distinguish it from traditional predictive analytics. Many standard machine learning (ML) models are discriminative; they learn a boundary between classes of data points (e.g., “Sick” vs. “Healthy”). Generative AI, driven by architectures like Transformers (used in Large Language Models or LLMs) and Generative Adversarial Networks (GANs), learns the underlying distribution of the data so that it can generate new instances that resemble the original training set.
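The distinction can be illustrated with a toy example. The sketch below is a minimal illustration, not a clinical model: the lab values, the midpoint threshold, and the single-Gaussian fit are all assumptions made up for this example. The discriminative function only decides which side of a boundary a value falls on, while the generative functions estimate a distribution and then sample new, synthetic values from it.

```python
import random
import statistics

# Hypothetical toy data: one lab value per patient, grouped by label.
healthy = [4.8, 5.1, 5.0, 5.3, 4.9, 5.2]
sick = [7.9, 8.4, 8.1, 7.6, 8.3, 8.0]

def discriminative_classify(value, threshold):
    """Discriminative view: only a boundary between the classes is used."""
    return "Sick" if value >= threshold else "Healthy"

def fit_gaussian(samples):
    """Generative view: estimate the distribution the data came from."""
    return statistics.mean(samples), statistics.stdev(samples)

def generate(params, n, rng):
    """Draw new, synthetic values from the fitted distribution."""
    mean, stdev = params
    return [rng.gauss(mean, stdev) for _ in range(n)]

# Boundary placed halfway between the two class means (an arbitrary choice).
threshold = (statistics.mean(healthy) + statistics.mean(sick)) / 2

rng = random.Random(0)  # fixed seed so the sketch is repeatable
sick_params = fit_gaussian(sick)
synthetic_sick = generate(sick_params, 5, rng)

print(discriminative_classify(6.9, threshold))
print([round(v, 2) for v in synthetic_sick])
```

Real generative models replace the single Gaussian with a far richer learned distribution, but the division of labor is the same: one approach sorts existing data, the other produces new data.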

In a medical context, this translates to three core capabilities:

- Generation: Creating synthetic medical images to train other AI models or filling in missing data in patient records.

- Synthesis: Summarizing thousands of pages of unstructured clinical notes into a concise patient history.

- Simulation: Modeling how a specific protein might fold or how a biological system might react to a new drug.

The “generative” aspect is what allows for true personalization. Instead of applying a standard protocol based on population averages, these models can generate a “digital twin” of a patient—a virtual simulation used to test treatments before administering them in the real world.

The Revolution in Medical Imaging and Radiology

One of the most mature applications of generative AI is in medical imaging. Radiologists are often burdened with high volumes of scans, and image quality can be compromised by factors like patient movement or radiation dose limits.

Enhancing Image Quality with GANs

Generative Adversarial Networks (GANs) are being used to “denoise” and reconstruct images. For example, a low-dose CT (LDCT) scan is safer for the patient but often yields a grainier image than a standard-dose scan. Generative AI can take the LDCT data and generate a cleaner version that aims to retain the diagnostic utility of a standard-dose scan without the additional radiation.

This kind of AI-based reconstruction, which is closely related to “super-resolution,” allows for:

- Reduced Radiation Exposure: Critical for pediatric patients and those requiring frequent monitoring.

- Shorter Scan Times: Faster scans reduce the likelihood of motion artifacts (blurring), which AI can also correct post-hoc.

- Modality Transfer: Research is exploring the conversion of MRI images into CT-like images (or vice versa) synthetically, potentially saving patients from undergoing multiple different types of scans.

Synthetic Data for Rare Diseases

Training diagnostic AI requires massive datasets. For common conditions like pneumonia, data is plentiful. For rare diseases, it is scarce. Generative AI can create “synthetic” medical images—fake but statistically accurate scans of rare tumors or anomalies—to train diagnostic algorithms. This allows AI diagnostic tools to learn to recognize rare conditions without compromising the privacy of the few real patients who have them.

Personalized Diagnostics: From Genomics to Pathology

Personalized diagnostics aims to tailor disease identification to the molecular and genetic makeup of the individual. Generative AI accelerates this by analyzing multi-modal data—combining genetics, imaging, and clinical history.

Genomic Interpretation and Variant Prediction

Genomic sequencing generates terabytes of data. Identifying which genetic mutation is responsible for a disease is often like finding a needle in a haystack. Generative models, particularly those trained on vast libraries of genetic sequences (similar to how LLMs are trained on text), can predict the functional impact of genetic variants.

For instance, models like Google DeepMind’s AlphaMissense can predict whether a specific “missense” mutation (a single-letter DNA change that swaps one amino acid in a protein) is likely to be benign or pathogenic. This helps move a variant from “unknown significance” toward “actionable insight,” allowing geneticists to diagnose rare hereditary disorders faster.

Computational Pathology

In pathology, diagnosing cancer involves examining tissue slides under a microscope. Generative AI assists pathologists by:

- Stain Normalization: Different labs use different chemical stains, making slides look different. AI can digitally “re-stain” images to a standard format, reducing error.

- Virtual Staining: AI can take a label-free tissue image and digitally generate the appearance of specific chemical stains, potentially removing the need for expensive and time-consuming chemical processing in the lab.

Multi-Modal Diagnostic AI

The future of generative AI in healthcare lies in multi-modal Large Language Models (LLMs). These systems can ingest a patient’s MRI scan, their genomic report, and ten years of unstructured clinical notes simultaneously. By synthesizing these disparate data streams, the AI can propose a differential diagnosis that considers the holistic view of the patient, rather than just isolated symptoms.

Predictive Medicine: Anticipating Acute Events

Predictive medicine shifts the focus from “what is wrong now?” to “what will go wrong soon?” Generative models are particularly good at identifying subtle, non-linear patterns that precede acute medical events.

Early Warning Systems for Sepsis and Heart Failure

Sepsis is a life-threatening response to infection that moves quickly. Traditional scoring systems often catch it too late. Generative models trained on time-series data (vital signs, labs, fluid inputs) can predict the onset of sepsis hours before clinical deterioration becomes obvious.

Similarly, in cardiology, AI models analyzing ECG data can detect subtle electrical patterns indicative of impending heart failure or atrial fibrillation that are invisible to the human eye. These predictive medicine algorithms serve as a 24/7 monitor, alerting staff only when the probability of an event crosses a high-confidence threshold.
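The alerting logic described above can be sketched in a few lines. This is a minimal illustration that assumes a hypothetical upstream model already produces an hourly risk probability; the function name, the sample scores, and the 0.8 threshold are illustrative assumptions, not clinically validated values. The function fires only when the risk rises across the threshold, so staff are not re-alerted every hour while the score stays high.

```python
def crossing_alerts(risk_scores, threshold=0.8):
    """Return indices where risk rises from below the threshold to at or above it."""
    alerts = []
    above = False  # whether the previous score was already at or above the threshold
    for i, score in enumerate(risk_scores):
        if score >= threshold and not above:
            alerts.append(i)
        above = score >= threshold
    return alerts

# Hypothetical hourly risk probabilities for one patient.
hourly_risk = [0.10, 0.15, 0.40, 0.82, 0.90, 0.60, 0.85]
print(crossing_alerts(hourly_risk))  # [3, 6]
```

Alerting on crossings rather than on every high reading is one simple way to limit alarm fatigue; where to set the threshold is a clinical and operational decision, not a coding one.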

Predicting Progression of Chronic Diseases

For diseases like Alzheimer’s or Diabetic Retinopathy, the rate of progression varies wildly between patients. Generative AI can simulate the likely progression path of a specific patient’s disease based on their baseline data.

- Ophthalmology: AI can analyze retinal scans to predict which diabetic patients are at highest risk of developing blindness in the next 12 months, allowing for prioritized intervention.

- Neurology: By analyzing speech patterns and MRI data, generative models are being tested to predict cognitive decline trajectories in early-stage dementia patients.

Clinical Decision Support and Documentation

While diagnostics and prediction grab headlines, the administrative burden is a primary cause of physician burnout. Generative AI acts as a sophisticated assistant in the exam room.

Ambient Clinical Intelligence

“Ambient listening” tools use generative AI to listen to the doctor-patient conversation (with consent) and automatically generate a structured clinical note (SOAP note). Unlike simple transcription, these models:

- Filter out small talk (“How is the weather?”).

- Extract relevant medical facts (“Patient reports chest pain for 3 days”).

- Map symptoms to medical codes (ICD-10/11) for billing and records.

- Draft a summary for the patient’s portal in plain language.

This allows the physician to maintain eye contact with the patient rather than typing into a computer, restoring the human connection in healthcare.

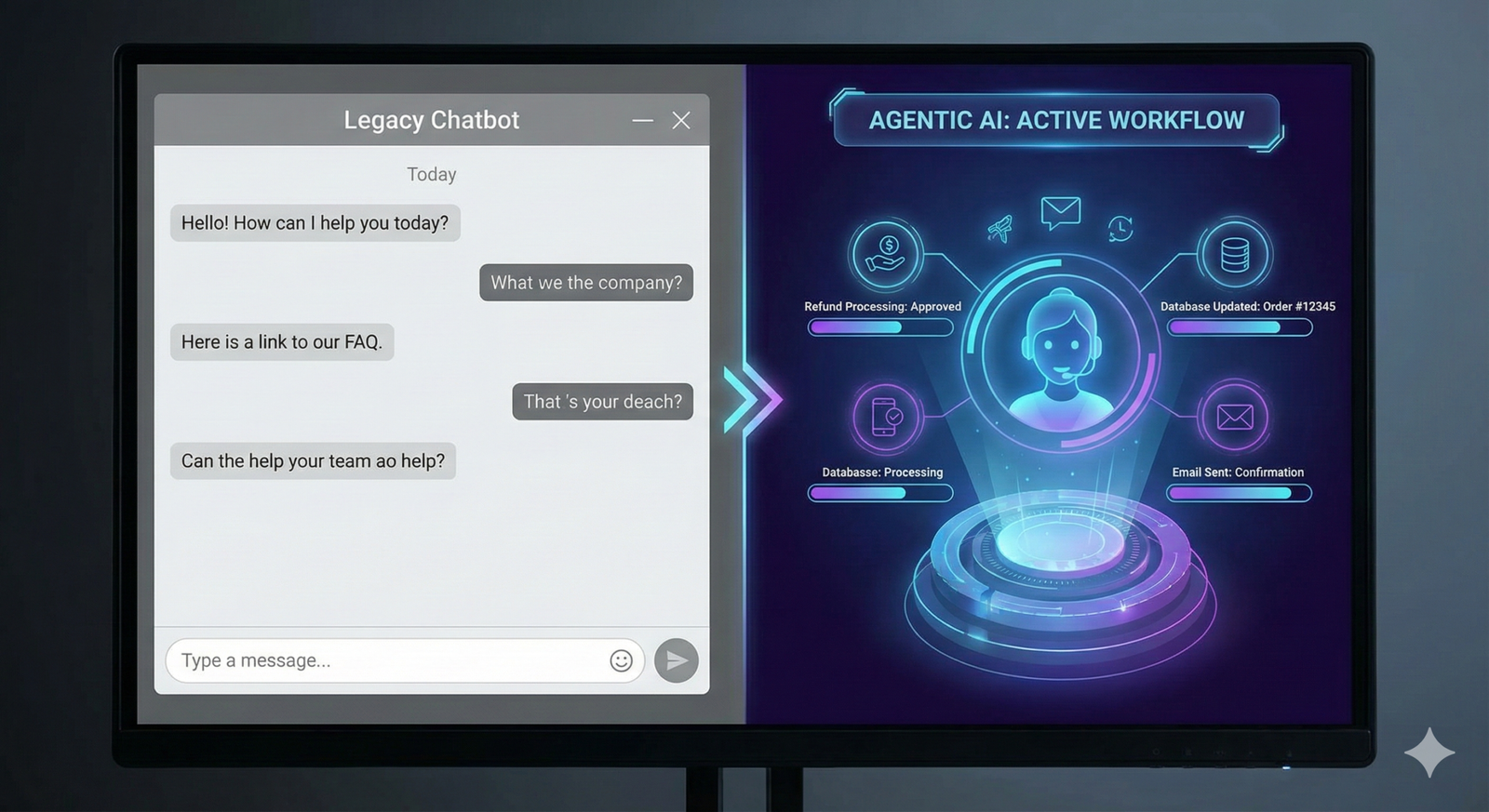

Automated Triage and Summarization

When a patient arrives at the ER or sends a message to their provider, generative AI can summarize their lengthy medical history into a “one-pager” for the clinician. It highlights allergies, recent surgeries, and current medications immediately. In triage settings, chatbots powered by LLMs can gather initial symptom data, rank urgency, and direct patients to the appropriate level of care (e.g., “Call 911” vs. “Schedule routine appointment”).

Drug Discovery and Pharmacogenomics

Developing a new drug takes over a decade and costs billions. Generative AI is compressing this timeline by proposing plausible molecular structures that do not yet exist in nature but might bind to a specific disease target.

De Novo Protein Design

Tools like AlphaFold have made highly accurate protein structure prediction widely available, and newer generative models are now designing entirely new proteins de novo (from scratch). Researchers describe the desired properties of a drug (e.g., “binds to receptor X but avoids receptor Y”), and the AI generates candidate structures that fit those constraints. This is particularly promising for “undruggable” targets in oncology and neurology.

Pharmacogenomics: The Right Drug for the Right Person

Adverse drug reactions are a leading cause of hospitalization. Pharmacogenomics studies how a person’s genes affect their response to drugs, including how quickly those drugs are metabolized. Generative AI combines genetic data with pharmacokinetic models to predict:

- Efficacy: Will this drug work for this specific patient?

- Toxicity: Is this patient at high risk for severe side effects?

- Dosage: What is the optimal dose based on the patient’s metabolic rate?

This moves prescribing away from “trial and error” toward data-driven precision.

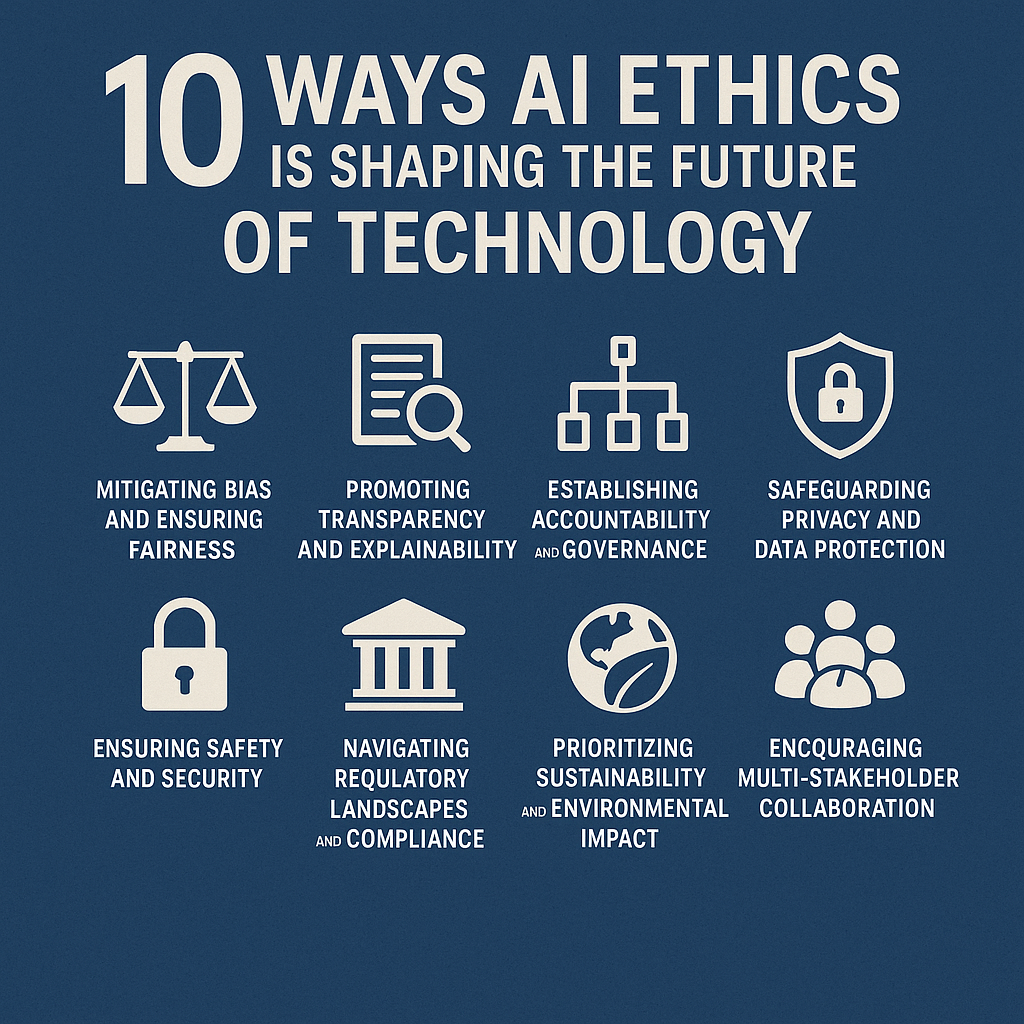

Risks, Challenges, and Ethical Considerations

The deployment of generative AI in healthcare is not without profound risks. Because these systems are “probabilistic” (they guess the next likely piece of information) rather than “deterministic,” they can fail in ways that are difficult to detect.

The Problem of “Hallucinations”

In an LLM, a hallucination is a confident but factually incorrect statement. In creative writing, this is a quirk; in medicine, it is dangerous. An AI might invent a medical reference, suggest a non-existent drug dosage, or hallucinate a symptom in a patient summary that was never discussed.

- Mitigation: Strict “Human-in-the-Loop” workflows where a clinician must sign off on every AI-generated output. Retrieval-Augmented Generation (RAG) is also used to ground AI responses in trusted medical databases rather than relying solely on training data.
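The retrieval step of RAG can be sketched without any model at all. The example below is a minimal illustration under stated assumptions: the three passages stand in for a trusted medical database, word overlap stands in for the embedding-based search that production systems typically use, and all function names are hypothetical. It shows the core idea, which is that the prompt handed to the model carries the retrieved sources and an instruction to stay within them.

```python
import re

# Hypothetical stand-in for a trusted, curated reference database.
TRUSTED_PASSAGES = [
    "Sepsis is a life-threatening response to infection.",
    "A SOAP note records subjective, objective, assessment and plan sections.",
    "Low-dose CT reduces radiation exposure but can produce noisier images.",
]

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, passages, top_k=1):
    """Rank passages by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(passages, key=lambda p: len(q & tokenize(p)), reverse=True)
    return ranked[:top_k]

def build_prompt(question, passages):
    """Assemble a prompt that restricts the model to the retrieved sources."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n"
        f"Sources:\n{context}\n"
        f"Question: {question}"
    )

question = "Why are low-dose CT images noisier?"
sources = retrieve(question, TRUSTED_PASSAGES)
print(build_prompt(question, sources))
```

Grounding of this kind narrows what the model can draw on, but it does not remove the need for clinician sign-off: the model can still misread or misquote a correct source.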

Algorithmic Bias and Health Equity

AI models are only as good as the data they are trained on. Historically, medical data has been heavily skewed toward white, male, Western populations.

- Skin Tone Bias: Dermatological AI trained on light skin may fail to diagnose skin cancer on dark skin.

- Socioeconomic Bias: Predictive models using billing data as a proxy for “sickness” may underestimate the health needs of poor populations who access care less frequently.

- Solution: Researchers are actively using generative AI to create more diverse synthetic datasets for retraining models, with the goal of consistent performance across demographic groups. Retrained models still need to be validated on real patients from each group.
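Bias of the kind described above only becomes visible when performance is broken down by group. The sketch below is a minimal illustration: the evaluation records are made up for this example and the function name is hypothetical. It computes sensitivity (the share of true cases the model flagged) separately for each group, which is one simple check a validation team might run.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """records: iterable of (group, has_condition, model_flagged) tuples.

    Returns the share of true cases the model flagged, per group.
    """
    true_cases = defaultdict(int)
    caught = defaultdict(int)
    for group, has_condition, model_flagged in records:
        if has_condition:
            true_cases[group] += 1
            if model_flagged:
                caught[group] += 1
    return {g: caught[g] / true_cases[g] for g in true_cases}

# Made-up evaluation records, for illustration only.
records = [
    ("lighter skin", True, True),
    ("lighter skin", True, True),
    ("lighter skin", True, True),
    ("lighter skin", True, False),
    ("darker skin", True, True),
    ("darker skin", True, False),
    ("darker skin", True, False),
    ("darker skin", True, False),
    ("darker skin", False, False),
]

print(sensitivity_by_group(records))
# {'lighter skin': 0.75, 'darker skin': 0.25}
```

A single overall accuracy figure would hide the gap shown here, which is why per-group reporting is worth requesting from any vendor or research team.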

Data Privacy and Security

Healthcare data is protected by strict regulations like HIPAA (USA) and GDPR (Europe). Using patient data to train generative models raises complex legal questions.

- Inversion Attacks: Can a hacker query an AI model to reveal the private data of the patients it was trained on?

- Data Sovereignty: Hospitals are increasingly opting for “on-premise” or “private cloud” instances of LLMs to ensure patient data never leaves their secure environment to train a public model.

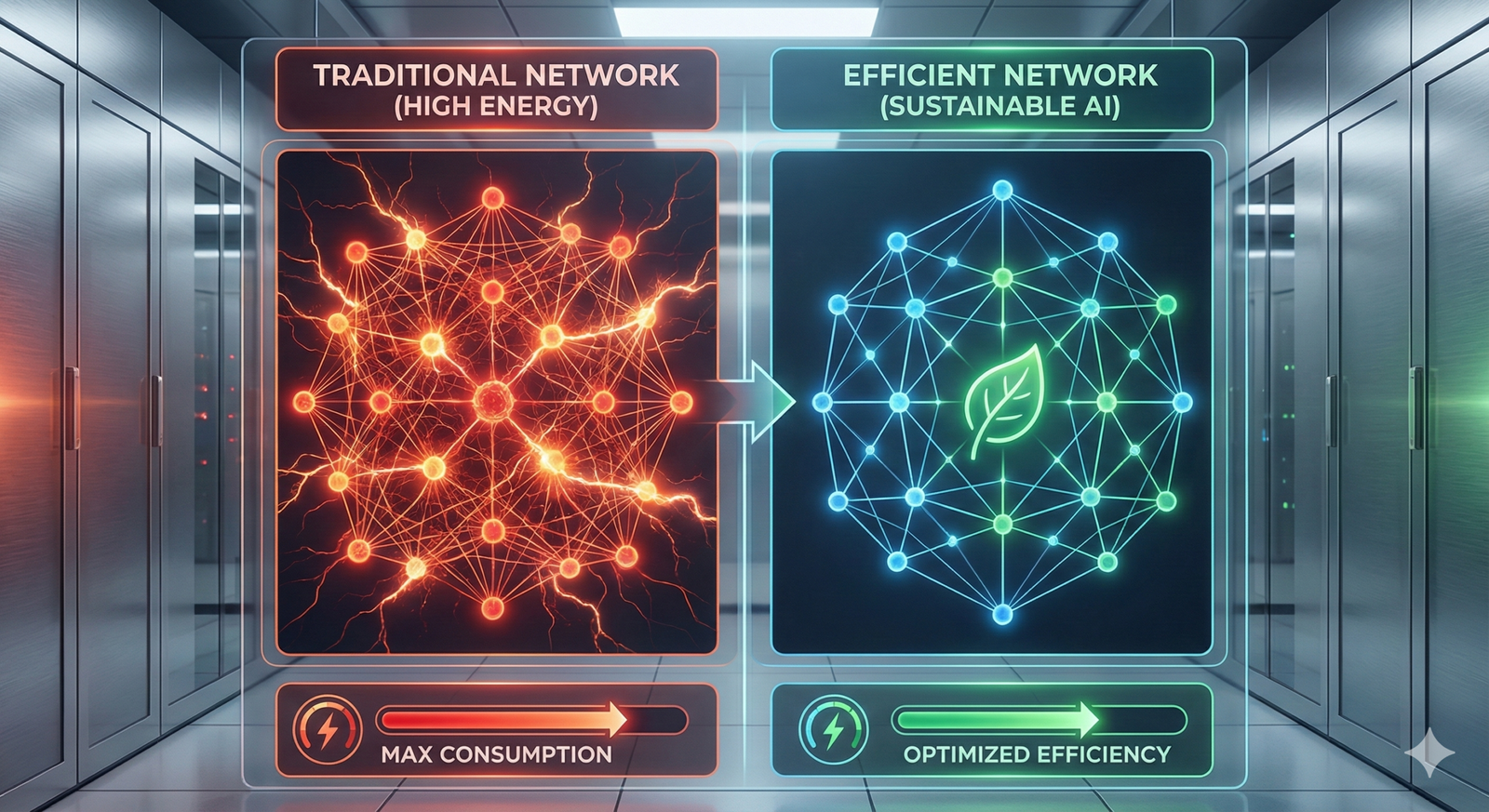

Implementing Generative AI: Practical Considerations

For healthcare organizations looking to adopt these tools, the path forward involves infrastructure, governance, and culture.

Infrastructure Readiness

Running advanced medical imaging AI or LLMs requires significant computational power (GPUs). Hospitals must decide between cloud-based solutions (easier to scale, harder to secure) and on-premise hardware (expensive, secure). Additionally, data silos must be broken down; AI cannot predict heart failure if the cardiology data cannot talk to the pharmacy data.

Governance Frameworks

Hospitals are establishing “AI Governance Councils” composed of doctors, ethicists, IT security, and legal experts. These councils evaluate:

- Clinical Validation: Has this specific model been tested on our patient population?

- Transparency: Can the AI explain why it made a recommendation? (Explainable AI or XAI).

- Liability: If the AI misses a diagnosis, who is responsible? (In most settings today, responsibility still rests primarily with the attending physician.)

Change Management

Physicians are often skeptical of “black box” algorithms. Successful implementation requires framing AI as a “Co-pilot” or “Second Opinion,” not a replacement. Training programs must be developed to teach medical staff how to interact with these tools effectively and how to spot when the AI might be wrong.

The Future: Digital Twins and Continuous Monitoring

Looking ahead, the convergence of wearable technology and generative AI in healthcare points toward the concept of the “Personal Health Digital Twin.”

Imagine a virtual model of your physiology that lives in the cloud, updated in real time by your smartwatch and electronic health record.

- Scenario: Your doctor wants to prescribe a new blood pressure medication. Instead of trying it on you, they test it on your Digital Twin. The simulation predicts a drop in kidney function. The doctor chooses a different drug.

- Scenario: Your wearable detects a slight change in gait and sleep patterns. The AI analyzes this against your history and predicts a high likelihood of a migraine in 24 hours, suggesting preemptive medication.

This era of “Continuous Predictive Care” moves medicine out of the hospital and into the background of daily life, intervening only when necessary.

Common Mistakes and Pitfalls

When discussing or implementing generative AI in this sector, several misconceptions persist.

Mistake 1: Assuming “Human-Level” Means “Perfect”

An AI might pass the US Medical Licensing Exam (USMLE), but that does not mean it has clinical judgment. AI struggles with context, intuition, and ethical nuance. Over-reliance on AI scores can lead to “automation bias,” where clinicians stop thinking critically and blindly follow the machine.

Mistake 2: Ignoring the “Black Box” Problem

Deep learning models are notoriously opaque. In high-stakes fields like oncology, knowing that the AI predicts cancer is not enough; doctors need to know where and why. Implementing models without explainability features (like heatmaps on X-rays) leads to low adoption rates.
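One common way to produce such a heatmap is occlusion sensitivity: blank out one region of the image at a time and record how much the model's score drops. The sketch below is a minimal illustration of that idea, not a description of any particular product. The 4x4 "image" and the toy model, which responds only to the top-left corner, are hypothetical stand-ins for a real scan and a real classifier.

```python
def occlusion_heatmap(image, model, patch=2):
    """Score drop when each patch is blanked out; a bigger drop means a more influential region."""
    base = model(image)
    rows, cols = len(image), len(image[0])
    heat = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, patch):
        for c0 in range(0, cols, patch):
            occluded = [row[:] for row in image]  # copy so the original is untouched
            for r in range(r0, min(r0 + patch, rows)):
                for c in range(c0, min(c0 + patch, cols)):
                    occluded[r][c] = 0.0
            drop = base - model(occluded)
            for r in range(r0, min(r0 + patch, rows)):
                for c in range(c0, min(c0 + patch, cols)):
                    heat[r][c] = drop
    return heat

def toy_model(image):
    """Hypothetical stand-in for a classifier: mean intensity of the top-left 2x2 corner."""
    return sum(image[r][c] for r in range(2) for c in range(2)) / 4

image = [
    [1.0, 1.0, 0.2, 0.2],
    [1.0, 1.0, 0.2, 0.2],
    [0.2, 0.2, 0.2, 0.2],
    [0.2, 0.2, 0.2, 0.2],
]
for row in occlusion_heatmap(image, toy_model):
    print(row)
```

In this toy case the heatmap is non-zero only over the top-left corner, which matches where the model actually looks. With a real model, a heatmap that lights up over an irrelevant region, such as a scanner label, is a useful warning sign.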

Mistake 3: Underestimating Integration Costs

Buying the software is often the cheap part. Integrating an AI tool into an existing Electronic Health Record (EHR) system (like Epic or Cerner) is technically difficult and expensive, and workflow disruption is a common reason AI pilots fail.

Conclusion

Generative AI in healthcare is rapidly maturing from a futuristic concept into a practical toolkit for personalized diagnostics and predictive medicine. By handling the heavy lifting of data synthesis, image reconstruction, and pattern recognition, these tools offer the promise of returning the “care” to healthcare—giving providers more time to focus on the human aspects of medicine.

However, the technology is not a magic wand. It requires rigorous validation, robust ethical guardrails, and a commitment to keeping the human physician in the driver’s seat. As we move through 2026 and beyond, the most successful health systems will be those that view AI not as a replacement for human expertise, but as the ultimate instrument for amplifying it.

Next Steps: For healthcare leaders, the immediate priority is to conduct a data readiness audit to determine if your organization’s data infrastructure is robust enough to support these advanced AI integrations.

FAQs

How accurate is generative AI in medical diagnosis?

Accuracy varies significantly by application. In narrow tasks like identifying potential tumors in radiology scans, top-tier AI models have matched or slightly exceeded human performance in some studies. However, in complex, multi-system diagnoses, they still lag behind experienced clinicians. AI is best viewed as a “second opinion” or screening tool rather than a definitive diagnostician.

Will generative AI replace doctors?

No. Generative AI is designed to augment doctors, not replace them. It handles data analysis, documentation, and preliminary screening, allowing doctors to focus on complex decision-making, patient empathy, and physical procedures. The legal and ethical responsibility for patient care remains strictly with the human provider.

What is the difference between predictive AI and generative AI?

Predictive AI analyzes existing data to forecast future outcomes (e.g., “70% chance of readmission”). Generative AI creates new data or content (e.g., writing a discharge summary, generating a synthetic CT scan, or designing a new drug molecule). In healthcare, they often work together.

Is patient data safe when used with AI?

It depends on the implementation. Public AI models (like standard ChatGPT) should generally not be used with Protected Health Information (PHI). Healthcare-grade AI solutions use private, secure environments (HIPAA-compliant) where data is not shared publicly or used to train the base model for other users.

Can generative AI help with mental health?

Yes. Generative AI is being used to power therapeutic chatbots that offer Cognitive Behavioral Therapy (CBT) techniques for mild anxiety and depression, bridging the gap for patients waiting for human therapists. It also analyzes speech patterns to predict depressive episodes, though these tools are mostly supportive and not diagnostic.

What are “Digital Twins” in healthcare?

A Digital Twin is a virtual replica of a patient’s physiology created using generative AI and the patient’s medical data. Doctors can use this digital model to simulate how the patient would react to different surgeries or drugs, minimizing risk before performing the actual procedure on the patient.

How does AI help in drug discovery?

Generative AI accelerates drug discovery by “imagining” new molecular structures that could treat diseases. It screens millions of potential chemical combinations virtually to predict which ones will be effective and safe, significantly cutting down the time and cost required for pre-clinical testing.

What is the biggest risk of using AI in medicine?

The biggest risks are “hallucinations” (AI making up false medical facts) and bias. If an AI is trained primarily on data from one demographic group, it may provide inaccurate diagnoses for people of other races or genders. Rigorous testing and diversity in training data are essential to mitigate this.
