Robotics has sprinted from niche automation to everyday infrastructure in just a decade. From 2015 to 2025, the field fused machine learning with mechatronics, pushed robots out of cages and into close collaboration with people, and turned once-impossible demos (dynamic bipeds, warehouse pickers, autonomous surgical maneuvers) into pilots and, in some cases, products. This article takes a practical, structured look at the last 10 years: the breakthroughs that mattered, how they changed the economics and safety of deployment, and how organizations can start now with a plan, metrics, and beginner-friendly steps.

Key takeaways

- AI moved into the robot’s control loop. Vision-language-action models and foundation-model policies improved generalization, letting robots begin to handle instructions, objects, and tasks they weren’t explicitly programmed for.

- Robots left the cage. Collaborative robots and new safety standards normalized human-robot workcells and lowered the barrier to entry for small and midsize manufacturers.

- Logistics became the proving ground. Large deployments of mobile platforms and robot arms proved ROI with tangible, measurable KPIs, and early humanoids entered supervised pilots.

- Simulation and open tooling exploded. ROS 2, powerful edge compute, and high-fidelity simulators shrank iteration cycles and training costs.

- Regulation matured. Drone remote identification, beyond-visual-line-of-sight pilots, and comprehensive AI regulations began to shape deployment norms.

- Healthcare crossed key milestones. Surgical robotics scaled globally, while autonomous surgical capabilities made credible progress in the lab and in animal studies.

Note: This article provides general information, not medical, legal, or financial advice. For decisions in those domains, consult a qualified professional.

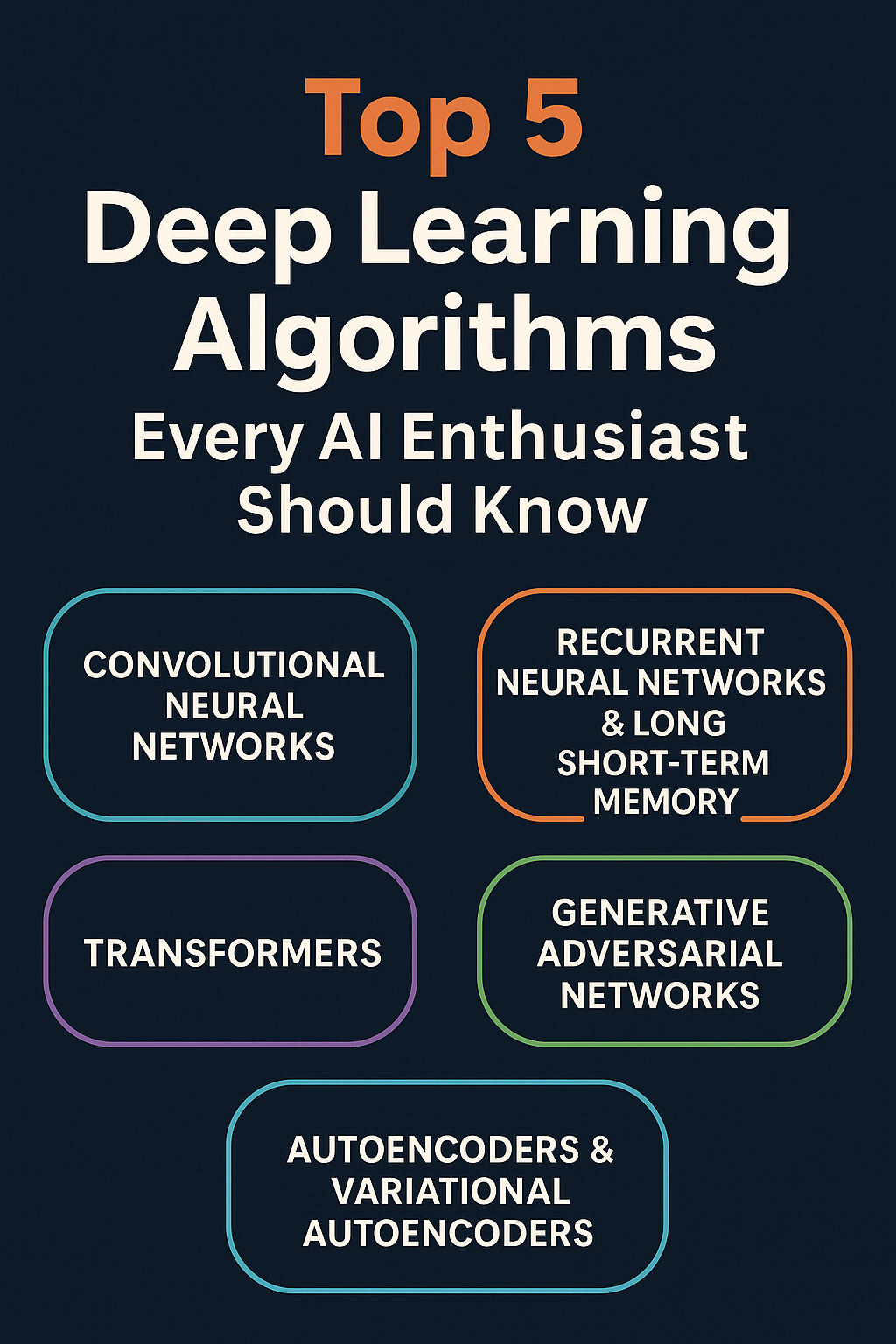

AI-Native Robotics: From Hand-Programming to Generalization

What it is and why it mattered.

The last decade replaced brittle, hand-engineered perception and control stacks with policies trained on large, heterogeneous datasets. Vision-language-action (VLA) models and transformer-based policies let robots map sensor observations to actions using the same representational machinery that powers modern AI assistants. In research demonstrations and early deployments, that meant a robot could follow natural-language instructions, adapt to some novel objects, and chain steps with light-touch prompting rather than months of bespoke coding.
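To make "actions as tokens" concrete: RT-2 (see References) discretizes each continuous action dimension into 256 uniform bins so that a language model can emit actions as ordinary tokens. The sketch below shows only that binning step; the three-dimensional action and its ±5 cm bounds are illustrative assumptions, not RT-2's actual configuration.

```python
# Minimal sketch of uniform action binning in the spirit of RT-2's 256-bin
# action tokens. The bounds below are illustrative assumptions.
NUM_BINS = 256

def action_to_tokens(action, low, high, num_bins=NUM_BINS):
    """Map each continuous action dimension to an integer bin index."""
    tokens = []
    for a, lo, hi in zip(action, low, high):
        a = min(max(a, lo), hi)            # clip to the valid range
        frac = (a - lo) / (hi - lo)        # normalize to [0, 1]
        tokens.append(min(int(frac * num_bins), num_bins - 1))
    return tokens

def tokens_to_action(tokens, low, high, num_bins=NUM_BINS):
    """Decode bin indices back to the center of each bin."""
    return [lo + (t + 0.5) * (hi - lo) / num_bins
            for t, lo, hi in zip(tokens, low, high)]

# Hypothetical end-effector displacement bounds (x, y, z) in meters.
LOW = [-0.05, -0.05, -0.05]
HIGH = [0.05, 0.05, 0.05]

tokens = action_to_tokens([0.0, 0.05, -0.1], LOW, HIGH)   # -0.1 gets clipped
decoded = tokens_to_action(tokens, LOW, HIGH)
```

Because decoding returns bin centers, the reconstruction error per dimension is at most half a bin width, here 0.1 m / 256 / 2, or roughly 0.2 mm.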

Requirements and low-cost alternatives.

- Core: A robotic platform (mobile base or arm), RGB-D camera(s), a GPU-capable edge computer, and a VLA or imitation-learning policy.

- Software: ROS 2, a simulator (for example, Omniverse-based tools), and a perception stack.

- Budget-savvy path: Start in simulation; rent compute; use free ROS 2 LTS releases and off-the-shelf cameras.

Step-by-step for beginners.

- Prototype in sim. Load a mobile base or 6-DoF arm into a high-fidelity simulator and build a minimal “pick and place” scene.

- Add a language interface. Pipe natural-language prompts to a lightweight policy that outputs goal poses or skill triggers.

- Bridge to real. Calibrate cameras, map coordinate frames, and test short-horizon skills (reach, grasp, stow) before chaining them into longer tasks.
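Mapping coordinate frames usually means applying a homogeneous transform obtained from calibration. A minimal sketch, assuming NumPy and a hypothetical, already-calibrated pose (`T_base_cam`) for a camera looking straight down at the workspace; in a ROS 2 stack, the tf2 library normally manages these transforms for you.

```python
import numpy as np

def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T

def transform_point(T, point):
    """Apply a 4x4 homogeneous transform to a 3-D point."""
    p = np.append(np.asarray(point, dtype=float), 1.0)
    return (T @ p)[:3]

# Hypothetical extrinsics: camera 0.5 m above the base origin, rotated 180 degrees
# about x so that its optical (z) axis points down along the base's -z axis.
R_base_cam = np.array([[1.0, 0.0, 0.0],
                       [0.0, -1.0, 0.0],
                       [0.0, 0.0, -1.0]])
T_base_cam = make_transform(R_base_cam, [0.0, 0.0, 0.5])

# An object detected 0.4 m in front of the camera, slightly off-center.
p_cam = [0.1, 0.05, 0.4]
p_base = transform_point(T_base_cam, p_cam)   # object position in the base frame
```

A 1 degree error in `R_base_cam` would shift this point by roughly 0.4 m × tan(1°), or about 7 mm, which is why calibration comes before chaining skills.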

Beginner modifications and progressions.

- Simplify: Fixed cameras and fiducials for reliable perception.

- Scale up: Add multi-camera sensor fusion, tactile sensing, and a memory module for multi-step tasks.

Recommended cadence and KPIs.

- Frequency: Weekly skill releases; daily simulation runs; monthly real-world validation.

- Metrics: Task-success rate under distribution shift, time-to-completion, human interventions per hour, and sim-to-real gap.
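Success rates from a few dozen episodes are noisy, so it helps to report them with an interval. The sketch below computes the metrics above and adds a Wilson score interval, a standard binomial confidence interval that is our own choice here rather than something the article prescribes; all counts are illustrative.

```python
import math

def wilson_interval(successes, trials, z=1.96):
    """Wilson score interval for a success rate (z=1.96 gives ~95%)."""
    if trials == 0:
        raise ValueError("need at least one trial")
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return center - half, center + half

def sim_to_real_gap(sim_successes, sim_trials, real_successes, real_trials):
    """Simulated success rate minus real-world success rate."""
    return sim_successes / sim_trials - real_successes / real_trials

def interventions_per_hour(interventions, runtime_s):
    return interventions / (runtime_s / 3600.0)

# Illustrative numbers, not measured results.
low, high = wilson_interval(42, 50)        # 42 of 50 real episodes succeeded
gap = sim_to_real_gap(48, 50, 42, 50)      # 96% in sim vs. 84% on hardware
iph = interventions_per_hour(6, 5400)      # 6 interventions in 90 minutes
```

With 50 episodes, an observed 84% success rate is consistent with roughly 71% to 92%, so a sim-to-real gap of a few points needs more trials before it means much.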

Safety, caveats, and common mistakes.

- Over-trusting language models without guardrails can produce unsafe motions.

- Neglecting calibration between sensors and robot frames multiplies small errors.

- Skipping “stop-action” affordances for human supervisors slows iteration and increases risk.
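One simple guardrail is to validate every model-proposed command against an allowlist and hard limits before it reaches the motion controller. The `GoalCommand` type, skill names, workspace bounds, and speed cap below are hypothetical placeholders for values that should come from your risk assessment.

```python
from dataclasses import dataclass

@dataclass
class GoalCommand:
    skill: str     # e.g. "reach", "grasp", "stow"
    x: float       # target position in the robot base frame, meters
    y: float
    z: float
    speed: float   # requested Cartesian speed, m/s

# Hypothetical limits; real values come from the cell's risk assessment.
ALLOWED_SKILLS = {"reach", "grasp", "stow"}
WORKSPACE_MIN = (0.2, -0.4, 0.02)
WORKSPACE_MAX = (0.7, 0.4, 0.5)
MAX_SPEED = 0.25

def check_command(cmd):
    """Return a list of violations; an empty list means the command may run."""
    violations = []
    if cmd.skill not in ALLOWED_SKILLS:
        violations.append(f"skill '{cmd.skill}' is not allowlisted")
    for axis, value, lo, hi in zip("xyz", (cmd.x, cmd.y, cmd.z),
                                   WORKSPACE_MIN, WORKSPACE_MAX):
        if not lo <= value <= hi:
            violations.append(f"{axis}={value} outside [{lo}, {hi}]")
    if cmd.speed > MAX_SPEED:
        violations.append(f"speed {cmd.speed} exceeds {MAX_SPEED}")
    return violations

ok = check_command(GoalCommand("grasp", 0.45, 0.1, 0.05, 0.1))
bad = check_command(GoalCommand("throw", 0.9, 0.0, 0.05, 0.6))
```

Rejecting rather than silently clamping keeps the supervisor in the loop: a policy that keeps proposing out-of-bounds goals is a signal to investigate. This software check sits in front of, not in place of, the robot's safety-rated limits.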

Mini-plan example (2–3 steps).

- Choose one task (e.g., “pick the red mug and place it on the tray”).

- Implement a goal-conditioned controller that accepts a textual slot (“red mug”) and returns a grasp pose; test 50 episodes in sim; then transfer to real.
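The mini-plan's goal-conditioned controller can start very small. In this sketch, a regular expression stands in for the language model when extracting the slot, and the detection list, its frame, and the 10 cm approach height are hypothetical.

```python
import re

# Deliberately simple stand-in for a language model: take the phrase after
# "pick the" / "pick up the", up to " and " or the end of the instruction.
PICK_PATTERN = re.compile(r"pick (?:up )?the (.+?)(?: and |$)")

def extract_slot(instruction):
    """Return the object phrase to pick, e.g. "red mug", or None."""
    match = PICK_PATTERN.search(instruction.lower())
    return match.group(1).strip() if match else None

def grasp_pose_for(instruction, detections, approach_height=0.10):
    """Return (pre_grasp, grasp) positions for a top-down grasp, or None."""
    slot = extract_slot(instruction)
    target = next((d for d in detections if d["label"] == slot), None)
    if target is None:
        return None  # unresolved slot: hand back to the supervisor, don't guess
    x, y, z = target["centroid"]
    return (x, y, z + approach_height), (x, y, z)

# Hypothetical detector output: labels and centroids in the robot base frame (m).
detections = [
    {"label": "mug", "centroid": (0.50, -0.10, 0.05)},
    {"label": "red mug", "centroid": (0.42, 0.12, 0.05)},
    {"label": "tray", "centroid": (0.60, 0.25, 0.01)},
]
pose = grasp_pose_for("Pick the red mug and place it on the tray", detections)
```

Returning `None` for an unresolved slot gives the supervisor a natural stop point; the same function can then be exercised across the 50 simulated episodes before moving to hardware.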

Collaborative Robots: Humans and Machines in the Same Workspace

What it is and why it mattered.

Collaborative robots (cobots) introduced power- and force-limiting, speed-and-separation monitoring, and soft tooling, allowing people and robots to share space without hard guarding. Over the decade, cobots grew from novelty into a standard entry point for flexible automation.

Requirements and cost-savvy alternatives.

- Core: A 6-axis cobot arm with integrated force sensing, a simple gripper, and a tablet-style programming interface.

- Add-ons: Vision for part location, safety scanners for dynamic speed limiting.

- Budget path: Start with hand-guiding teach modes and gravity compensation; add vision later.

Step-by-step for beginners.

- Risk assess. Identify pinch points, payloads, and speeds; define safe work envelopes and collaborative modes.

- Teach by hand. Record waypoints for a pick-place that feeds a manual station.

- Layer vision. Add a top-down camera and basic object detection to remove hard fixtures.

Beginner modifications and progressions.

- Simplify: Fixed trays and kitting boards reduce perception complexity.

- Scale: Add conveyor tracking, robot-to-robot handoffs, and tool changers.

Cadence and KPIs.

- Frequency: Two-week sprints to add one skill or product variant.

- Metrics: Uptime, overall equipment effectiveness (OEE), cycle time, near-miss incidents, and changeover time.
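OEE is conventionally computed as availability × performance × quality. The sketch below uses that standard decomposition with made-up shift numbers for a cobot cell.

```python
def oee(planned_time_s, downtime_s, ideal_cycle_s, total_count, good_count):
    """Overall equipment effectiveness = availability x performance x quality."""
    run_time = planned_time_s - downtime_s
    availability = run_time / planned_time_s                # share of time running
    performance = ideal_cycle_s * total_count / run_time    # speed vs. ideal cycle
    quality = good_count / total_count                      # share of good parts
    return availability * performance * quality, (availability, performance, quality)

# Illustrative 8-hour shift for a cobot pick-and-place cell (made-up numbers).
score, parts = oee(planned_time_s=8 * 3600, downtime_s=2880,
                   ideal_cycle_s=12.0, total_count=1944, good_count=1900)
```

The factors multiply, so OEE reduces to ideal cycle time × good parts ÷ planned time (about 79% here); the breakdown shows which factor to attack first.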

Safety and common mistakes.

- Misapplying “collaborative” as a blanket excuse to skip guarding. Collaborative mode is conditional, not universal.

- Ignoring operator fatigue. Sustained attention while working beside or supervising a cobot still demands ergonomic station design.

Mini-plan (2–3 steps).

- Pilot a cobot to depalletize small cartons at <5 kg, then hand them to a human inspector.

- Add speed-and-separation monitoring to increase throughput while preserving safe stops.
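ISO/TS 15066 (see References) defines speed-and-separation monitoring around a protective separation distance, S_p = S_h + S_r + S_s + C + Z_d + Z_r: the human's approach, the robot's travel during its reaction time, the robot's stopping distance, an intrusion distance, and position uncertainties for the human and the robot. The sketch below uses a constant-speed simplification and assumes linear deceleration for the stopping term; the 1.6 m/s approach speed is a commonly used value from ISO 13855, and every other number is a placeholder. Real limits require the standard itself and your robot's measured stopping data.

```python
def protective_separation(v_h, v_r, t_r, t_s, c, z_d, z_r):
    """Constant-speed form of the protective separation distance (meters).

    S_h = v_h * (t_r + t_s)   human approach during reaction + stopping time
    S_r = v_r * t_r           robot travel during its reaction time
    S_s = v_r * t_s / 2       stopping distance, assuming constant deceleration
    """
    s_h = v_h * (t_r + t_s)
    s_r = v_r * t_r
    s_s = v_r * t_s / 2
    return s_h + s_r + s_s + c + z_d + z_r

def max_safe_speed(distance, v_h, t_r, t_s, c, z_d, z_r, v_max):
    """Largest robot speed whose separation requirement fits within distance."""
    margin = distance - v_h * (t_r + t_s) - c - z_d - z_r
    return max(0.0, min(v_max, margin / (t_r + t_s / 2)))

# Illustrative parameters (assumptions, not values from a real risk assessment).
params = dict(v_h=1.6, t_r=0.1, t_s=0.3, c=0.1, z_d=0.05, z_r=0.02)

# Allowed robot speed (m/s) at three measured human-robot distances (m).
speeds = {d: max_safe_speed(d, v_max=1.0, **params) for d in (1.5, 0.9, 0.7)}
```

With these placeholders the robot may run at full speed at 1.5 m, about 0.36 m/s at 0.9 m, and must stop at 0.7 m, which is how the monitoring raises throughput while preserving safe stops.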

Warehouses, Fulfillment, and Early Humanoids

What it is and why it mattered.

Logistics became the first environment where mobile platforms and robot arms paid off at scale, and where bipedal robots began their first commercial pilots. Amazon alone reported more than 750,000 robots in its fulfillment centers by early 2025, up from tens of thousands in the mid-2010s. New storage systems and AI-driven pickers took on dull, repetitive work, while bipedal platforms began trials on tote handling.

Requirements and entry-level alternatives.

- Core: Autonomous mobile robots (AMRs) or automated storage and retrieval systems (AS/RS), robotic arms for induction, and a warehouse execution system (WES) integration.

- Add-ons: Item-level perception, dynamic slotting, and bipedal pilots for ergonomic tote flows.

- Budget path: Start with a single AMR mission (cart-to-dock) or a semi-autonomous induction arm for a handful of SKUs.

Step-by-step for beginners.

- Map and label. Digitize aisles, docks, and buffer zones; define traffic rules.

- Pilot one flow. Move empty totes or shipping labels between two stations with a single AMR.

- Instrument and iterate. Add an arm for induction at one workstation; measure picks per hour, error rates, and travel time.

Beginner modifications and progressions.

- Simplify: Start with empty-tote moves to avoid grasping complexity.

- Scale: Add pick-to-light integration, multi-AMR coordination, and later evaluate a bipedal pilot for ergonomics.

Cadence and KPIs.

- Frequency: Monthly capability expansions; weekly path-planning updates as layouts change.

- Metrics: Throughput (lines/hour), mis-pick rate, AMR utilization, human-robot near-misses, and return-to-service time.
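The headline metrics fall out of a simple per-shift log. In this sketch, the `ShiftLog` fields are hypothetical; in practice the numbers would come from your WES or fleet manager.

```python
from dataclasses import dataclass

@dataclass
class ShiftLog:
    lines_picked: int
    mis_picks: int
    shift_hours: float
    amr_busy_hours: list   # hours each AMR spent on missions during the shift

def warehouse_kpis(log):
    """Throughput, mis-pick rate, and fleet utilization for one shift."""
    fleet_capacity_h = len(log.amr_busy_hours) * log.shift_hours
    return {
        "lines_per_hour": log.lines_picked / log.shift_hours,
        "mis_pick_rate": log.mis_picks / log.lines_picked,
        "amr_utilization": sum(log.amr_busy_hours) / fleet_capacity_h,
    }

# Illustrative 8-hour shift with a four-robot fleet (made-up numbers).
kpis = warehouse_kpis(ShiftLog(lines_picked=4800, mis_picks=12, shift_hours=8.0,
                               amr_busy_hours=[6.0, 5.5, 7.0, 4.5]))
```

Utilization here is mission time over available time; whether charging counts as busy or idle changes the number, so fix the definition before comparing sites or vendors.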

Safety and common mistakes.

- Overlapping pedestrian and robot lanes without visual cues.

- Under-investing in change management and cross-training (operators, maintenance, safety leads).

Mini-plan (2–3 steps).

- Automate empty-tote circulation with AMRs; add a vision-enabled arm to induct polybags; then trial a bipedal unit on a single, supervised shift for ergonomic lifts.

Field Robotics and Drones: From Line-of-Sight to Integrated Airspace

What it is and why it mattered.

Drones and ground robots matured from hobbyist novelties to regulated, enterprise-grade tools. Remote identification requirements clarified accountability in national airspace. Pilot programs and waivers for beyond-visual-line-of-sight (BVLOS) operations expanded the economic envelope for inspection and delivery.

Requirements and low-cost alternatives.

- Core: Aircraft with Remote ID hardware, a licensed operator where required, and a mission planner with geofencing and logging.

- Add-ons: Thermal sensors for inspection; parachute systems for operations over people.

- Budget path: Start with sub-250 g drones for training and basic mapping tasks where permissible.

Step-by-step for beginners.

- Compliance first. Register aircraft, enable Remote ID, and document operational areas.

- Run standard missions. Fly repeatable grid or orbit missions for inspection; generate consistent orthomosaics.

- Evaluate BVLOS potential. Build safety cases with detect-and-avoid, documented procedures, and operator training.

Beginner modifications and progressions.

- Simplify: Start with daylight, low-wind photogrammetry.

- Scale: Add thermal inspections, corridor mapping, and—where permitted—BVLOS operations with a formal safety case.

Cadence and KPIs.

- Frequency: Weekly flight windows; quarterly safety reviews.

- Metrics: Mission success rate, airspace incursions (target: zero), mean time to data delivery, and exception reports.

Safety and common mistakes.

- Relying on manufacturer geofencing as a proxy for legal compliance.

- Failing to rehearse abort procedures and emergency landings.
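Rehearsed aborts are easier when the return leg has been checked against the wind in advance. This sketch applies the wind triangle to a straight-line return home and compares the energy needed against a battery reserve. It assumes constant airspeed and power draw and uses the meteorological "wind from" convention; all numbers are illustrative.

```python
import math

def rth_check(distance_m, bearing_home_deg, wind_speed, wind_from_deg,
              airspeed, power_w, battery_remaining_wh, reserve_frac=0.25):
    """Return (feasible, flight_time_s) for a straight-line return home.

    The aircraft crabs into any crosswind to hold its track, which also
    costs groundspeed; a headwind subtracts directly.
    """
    angle = math.radians(wind_from_deg - bearing_home_deg)
    headwind = wind_speed * math.cos(angle)     # negative means tailwind
    crosswind = wind_speed * math.sin(angle)
    if abs(crosswind) >= airspeed:
        return False, math.inf
    groundspeed = math.sqrt(airspeed**2 - crosswind**2) - headwind
    if groundspeed <= 0:
        return False, math.inf
    time_s = distance_m / groundspeed
    energy_wh = power_w * time_s / 3600.0
    usable_wh = battery_remaining_wh * (1.0 - reserve_frac)
    return energy_wh <= usable_wh, time_s

# Illustrative: 2 km out, home due east, 15 m/s cruise, 300 W draw, 30 Wh left.
calm = rth_check(2000, 90, 0.0, 90, 15.0, 300.0, 30.0)
into_wind = rth_check(2000, 90, 8.0, 90, 15.0, 300.0, 30.0)
```

In calm air the return takes about 133 s and fits the reserve; an 8 m/s wind from the east cuts groundspeed to 7 m/s and the same return no longer does, which is exactly the case to rehearse.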

Mini-plan (2–3 steps).

- Establish a Remote ID-compliant inspection route; collect baseline imagery; then expand to thermal inspections of the same assets to quantify defect detection uplift.

Surgical and Medical Robotics: Scale First, Autonomy Second

What it is and why it mattered.

Over the last decade, surgical robotics scaled dramatically across hospitals, specialties, and geographies, while credible steps toward autonomy emerged in the lab. Installed bases grew, new systems entered regulated markets, and research robots demonstrated precise laparoscopic maneuvers with reduced tissue trauma in animal models.

Requirements and entry-level alternatives.

- Core (for hospitals): A robotic surgery platform, trained clinical teams, and OR turnover workflows that protect utilization.

- Add-ons: Simulation curricula, advanced imaging, and integrated digital ecosystems for training and analytics.

- Budget path: Partner with regional centers for proctoring; begin with high-volume procedures and robust evidence bases.

Step-by-step for beginners (hospital setting).

- Service line selection. Choose indications with strong outcomes data and predictable case volumes.

- Team training. Proctor surgeons and train scrub and anesthesia staff; simulate entire room workflows.

- Ramp plan. Start with one day per week, stable case lists, and explicit KPIs.

Beginner modifications and progressions.

- Simplify: Start with single-quadrant cases and standard resection patterns.

- Scale: Add multi-quadrant procedures, dual-console training, and data-driven instrument utilization planning.

Cadence and KPIs.

- Frequency: Monthly morbidity and mortality reviews; quarterly capital review and case mix expansion.

- Metrics: Case time (skin-to-skin), conversion rate, complication rate, instrument spend per case, and OR utilization.

Safety and common mistakes.

- Treating the robot as a marketing device rather than a clinical tool with specific indications and learning curves.

- Inadequate credentialing and proctoring leading to avoidable complications during the ramp.

Mini-plan (2–3 steps).

- Launch with one high-volume indication under proctoring; codify debriefs after each case; expand to adjacent procedures once time and outcomes stabilize.
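Deciding when case times have "stabilized" benefits from a defined method. Cumulative-sum (CUSUM) analysis of operative time is one approach used in surgical learning-curve studies; the sketch below applies it to made-up case times, and outcome measures such as complications would need their own analysis with clinical and statistical input.

```python
def cusum(values, target=None):
    """Cumulative sum of deviations from a target (default: the series mean)."""
    if target is None:
        target = sum(values) / len(values)
    total, series = 0.0, []
    for v in values:
        total += v - target
        series.append(total)
    return series

def plateau_index(series):
    """Index where the CUSUM peaks; cases after it run faster than the target."""
    return max(range(len(series)), key=series.__getitem__)

# Illustrative skin-to-skin times (minutes) for a surgeon's first 12 cases.
case_minutes = [210, 200, 195, 185, 180, 170, 150, 148, 150, 145, 147, 146]
curve = cusum(case_minutes)
peak = plateau_index(curve)
```

Here the curve peaks at index 5 (the sixth case) and falls afterward, suggesting that case time settled after roughly six cases in this made-up series.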

Humanoids: From Viral Demos to Production Pilots

What it is and why it mattered.

Dynamic humanoids leapt from research videos to controlled pilots on production floors. Industrial interest sharpened around tasks where human-oriented infrastructure (stairs, shelves, hand tools) makes wheels and fixed bases awkward. Over the decade, hardware matured, electric actuation replaced hydraulics on many platforms, and early commercial agreements moved from memoranda of understanding (MOUs) to milestone-based pilots.

Requirements and low-cost alternatives.

- Core: A bipedal or torso-on-legs platform, environment instrumentation (charging, localization), and tightly scoped tasks.

- Alternative path: Use AMRs or cobots for most of the flow; evaluate humanoids only where their morphology is necessary.

Step-by-step for beginners.

- Task mining. Isolate 2–3 repetitive, ergonomic pain points (e.g., tote moves).

- Pilot under supervision. Run single-shift trials with safety stewards, clear abort modes, and video review.

- Gate to scale. Define milestone criteria (mean time between interventions, task completion times, near-misses).
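Milestone criteria are easiest to enforce when they are written down as explicit thresholds. In this sketch, the gate names and threshold values are hypothetical; set real ones with operations and safety leads before the pilot starts.

```python
# Hypothetical gate thresholds: ("min", x) must be >= x, ("max", x) must be <= x.
GATES = {
    "mtbi_minutes": ("min", 60.0),        # mean time between interventions
    "median_task_time_s": ("max", 45.0),
    "near_misses": ("max", 0),
}

def mtbi_minutes(shift_minutes, interventions):
    """Mean time between interventions; a shift with none counts as one full shift."""
    return shift_minutes / max(interventions, 1)

def evaluate_gate(metrics, gates=GATES):
    """Return (passed, failures) for a pilot's measured metrics."""
    failures = []
    for name, (kind, threshold) in gates.items():
        value = metrics[name]
        ok = value >= threshold if kind == "min" else value <= threshold
        if not ok:
            failures.append(f"{name}={value} (needs {kind} {threshold})")
    return not failures, failures

good_shift = {"mtbi_minutes": mtbi_minutes(480, 6), "median_task_time_s": 41.0, "near_misses": 0}
rough_shift = {"mtbi_minutes": mtbi_minutes(480, 12), "median_task_time_s": 41.0, "near_misses": 0}
passed, _ = evaluate_gate(good_shift)
failed, reasons = evaluate_gate(rough_shift)
```

Listing every failed criterion, not just a pass/fail flag, gives the quarterly milestone review something concrete to act on.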

Beginner modifications and progressions.

- Simplify: Fixed routes and consistent grasp points.

- Scale: Add multi-station coverage, dynamic re-tasking via voice or WES messages, and off-board reasoning.

Cadence and KPIs.

- Frequency: Weekly firmware updates; daily pre-shift checks; quarterly milestone reviews.

- Metrics: Interventions per hour, successful tote transfers per shift, recovery time from falls or faults, and net ergonomic benefit.

Safety and common mistakes.

- Over-scoping pilots beyond today’s manipulation and balance limits.

- Underestimating the value of facility modifications (ramps, staging shelves) to reduce risk and increase throughput.

Mini-plan (2–3 steps).

- Assign a humanoid to move empty totes between two stations on a flat route; add end-effector sensing; then trial a light payload move with a supervisor present.

Open-Source, Simulation, and Edge Compute: The Quiet Revolution

What it is and why it mattered.

What changed robotics as much as AI was the ecosystem: stable long-term-support (LTS) middleware, thriving package repositories, simulators that model physics and sensors realistically, and edge modules able to run sophisticated policies on board. ROS 2's yearly distributions, with an LTS release every two years, gave teams stable baselines, while GPU-accelerated simulation made data generation and validation possible at scale.

Requirements and low-cost alternatives.

- Core: ROS 2 LTS distribution, a hardware abstraction layer, and a simulator with photorealistic rendering and sensor models.

- Edge: A modern embedded GPU module with sufficient TOPS and memory for perception and policy inference.

- Budget path: Start with a low-cost dev board and a laptop GPU; use community assets and scenes.

Step-by-step for beginners.

- Pick an LTS baseline. Standardize on a supported middleware and OS combo across robots.

- Build digital twins. Prototype environments and tasks in simulation first; generate synthetic datasets.

- Close the loop. Validate perception and control in sim; then deploy the same packages to real hardware.

Beginner modifications and progressions.

- Simplify: Single camera plus depth; pre-baked scenes.

- Scale: Multi-sensor fusion, domain randomization for sim-to-real, and scenario-based safety testing.
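Domain randomization boils down to sampling a fresh set of scene parameters for every simulated episode. This sketch is simulator-agnostic: the parameter names and ranges are hypothetical, and applying them to a scene depends on your simulator's own API, which is not shown.

```python
import random
from dataclasses import dataclass

@dataclass
class EpisodeParams:
    light_intensity: float   # relative to nominal lighting
    friction: float          # object-gripper friction coefficient
    object_mass_kg: float
    camera_offset_m: tuple   # extrinsic perturbation (x, y, z)

# Hypothetical ranges; widen them until real-world conditions sit inside the spread.
RANGES = {
    "light_intensity": (0.5, 1.5),
    "friction": (0.4, 1.0),
    "object_mass_kg": (0.1, 0.6),
    "camera_jitter_m": 0.01,
}

def sample_episode(rng):
    """Draw one randomized episode configuration."""
    jitter = RANGES["camera_jitter_m"]
    return EpisodeParams(
        light_intensity=rng.uniform(*RANGES["light_intensity"]),
        friction=rng.uniform(*RANGES["friction"]),
        object_mass_kg=rng.uniform(*RANGES["object_mass_kg"]),
        camera_offset_m=tuple(rng.uniform(-jitter, jitter) for _ in range(3)),
    )

rng = random.Random(0)   # seeded so nightly regressions are reproducible
episodes = [sample_episode(rng) for _ in range(100)]
```

Seeding the generator means a failure found in last night's regression run can be replayed with exactly the same scene parameters.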

Cadence and KPIs.

- Frequency: Monthly software releases; nightly simulation regression tests.

- Metrics: Sim-to-real performance gap, bugs caught in sim vs. field, and compute utilization on edge devices.

Safety and common mistakes.

- Skipping sensor-to-world calibration; sim success will not transfer without it.

- Neglecting time synchronization across sensors—essential for fusion.
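Even with synchronized clocks, sensors publish at slightly different instants, so fusion needs a pairing rule. This sketch pairs each camera frame with the nearest depth frame and drops pairs farther apart than a tolerance; the timestamps are illustrative. In ROS 2, the `message_filters` package's `ApproximateTimeSynchronizer` provides a production version of this idea. Neither approach corrects a clock offset; that still requires hardware or network time synchronization.

```python
import bisect

def match_nearest(ref_stamps, other_stamps, max_skew):
    """Pair each reference timestamp with the nearest other timestamp.

    Both lists must be sorted ascending. Pairs farther apart than max_skew
    seconds are dropped rather than fused.
    """
    pairs = []
    for t in ref_stamps:
        i = bisect.bisect_left(other_stamps, t)
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_stamps)]
        if not candidates:
            continue
        j = min(candidates, key=lambda k: abs(other_stamps[k] - t))
        if abs(other_stamps[j] - t) <= max_skew:
            pairs.append((t, other_stamps[j]))
    return pairs

camera = [0.000, 0.033, 0.066, 0.100]   # ~30 Hz color frames (seconds)
depth = [0.002, 0.036, 0.090, 0.101]    # depth frames with jitter and one gap
pairs = match_nearest(camera, depth, max_skew=0.005)
```

The camera frame at 0.066 s has no depth frame within 5 ms, so it is skipped instead of being fused with stale data.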

Mini-plan (2–3 steps).

- Standardize on one ROS 2 LTS; build a digital twin of a single cell; deploy the same code to a lab robot after passing simulation regression.

Safety, Standards, and Governance: The Maturation of the Stack

What it is and why it mattered.

Safety standards for collaborative operation, more predictable airspace rules for drones, and comprehensive AI governance frameworks moved robotics from “possible” to “permissible.” Over the decade, rules crystallized around how machines share space with people, how aircraft identify themselves, and how high-risk AI systems are managed across product lifecycles.

Requirements and low-cost alternatives.

- Core: A formal risk assessment per cell or mission, documented safety functions, and training.

- Add-ons: Third-party functional safety components and regular internal audits.

- Budget path: Start with a lightweight risk register and a simple change-control process.

Step-by-step for beginners.

- Map hazards. List foreseeable misuse, pinch points, and maintenance risks.

- Assign mitigations. Speed limits, e-stops, safe-torque-off, and separation monitoring.

- Plan governance. Keep a living hazard log, test schedules, and incident reviews.
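A lightweight risk register can be as simple as severity × likelihood scores tracked before and after mitigation. The 1 to 4 scales, the threshold of 6, and the example hazards below are hypothetical; formal methods such as those in ISO 12100 remain the reference for a real risk assessment.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Hazard:
    description: str
    severity: int          # 1 (minor) to 4 (fatal); hypothetical scale
    likelihood: int        # 1 (rare) to 4 (frequent), before mitigation
    mitigations: List[str] = field(default_factory=list)
    residual_likelihood: Optional[int] = None   # after mitigation; None = not assessed

    def initial_score(self):
        return self.severity * self.likelihood

def open_items(register, threshold=6):
    """Hazards still needing work: residual risk unassessed or at/above threshold."""
    return [h.description for h in register
            if h.residual_likelihood is None
            or h.severity * h.residual_likelihood >= threshold]

register = [
    Hazard("Pinch point between gripper and fixture", 3, 3,
           ["collaborative-zone speed limit", "padded gripper fingers"], residual_likelihood=1),
    Hazard("Technician reaches in during maintenance", 4, 2,
           ["lockout procedure"], residual_likelihood=2),
    Hazard("Carton dropped onto operator's feet", 2, 3),
]
by_priority = sorted(register, key=lambda h: h.initial_score(), reverse=True)
still_open = open_items(register)
```

Treating unassessed residual risk as open keeps new hazards from quietly passing review before anyone has evaluated their mitigations.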

Beginner modifications and progressions.

- Simplify: Begin with low-payload, low-speed applications.

- Scale: Expand to multi-robot cells and formalize governance to match regulatory timelines.

Cadence and KPIs.

- Frequency: Quarterly audits; annual full safety case reviews.

- Metrics: Corrective actions closed on time, near-miss frequency, and training completion rates.

Safety and common mistakes.

- Treating standards as paperwork rather than design inputs.

- Failing to separate developmental test areas from production environments.

Mini-plan (2–3 steps).

- Write a one-page hazard analysis for your first cell; implement two independent stops and documented maintenance lockout; schedule a 60-day post-launch audit.

Quick-Start Checklist

- Define one high-value, low-variance task and its success criteria.

- Choose a stable middleware and simulator; freeze tool versions for 90 days.

- Instrument everything (task success, intervention, downtime, incident reports).

- Establish a safety case: hazards, mitigations, tests, sign-offs.

- Start tiny pilots (one robot, one shift), then scale methodically.

- Train operators and maintenance together—shared vocabulary, shared metrics.

Troubleshooting and Common Pitfalls

- “It worked in sim but fails in the lab.” Re-check camera intrinsics/extrinsics and clock sync; increase domain randomization; add tactile feedback.

- “Cobots pause constantly.” Tune speed-and-separation zones; reduce false positives by improving occlusion handling and reflective-surface mitigation.

- “AMRs block aisles.” Re-plan traffic with one-way lanes and merge zones; add congestion penalties to the planner.

- “Drone missions get scrubbed.” Pre-compute wind-aware return-to-home envelopes; maintain a spare aircraft and batteries; rehearse aborts.

- “Surgical ramp stalls.” Narrow indications; add dual-console proctoring; schedule consistent weekly blocks (for example, the same morning each week) to stabilize teams.
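For the AMR congestion fix, one-way lanes and congestion penalties map naturally onto a shortest-path search over a directed graph. A minimal sketch, assuming hand-set travel costs, a hypothetical four-node layout, and a hypothetical per-robot penalty for entering a congested node; commercial fleet managers implement their own traffic control.

```python
import heapq

def plan(graph, start, goal, congestion, penalty=5.0):
    """Dijkstra over directed lane edges; entering a congested node costs extra.

    graph: {node: [(neighbor, travel_cost), ...]}; a one-way lane simply has
    no reverse edge. congestion: {node: number of robots queued there}.
    """
    dist, prev = {start: 0.0}, {}
    heap = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == goal:
            break
        if d > dist.get(node, float("inf")):
            continue   # stale heap entry
        for nbr, cost in graph.get(node, []):
            nd = d + cost + penalty * congestion.get(nbr, 0)
            if nd < dist.get(nbr, float("inf")):
                dist[nbr], prev[nbr] = nd, node
                heapq.heappush(heap, (nd, nbr))
    if goal not in dist:
        return None, float("inf")
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1], dist[goal]

# Hypothetical layout: two one-way aisles (A, B) from dock to pack, one return lane.
GRAPH = {
    "dock": [("A", 10.0), ("B", 12.0)],
    "A": [("pack", 10.0)],
    "B": [("pack", 12.0)],
    "pack": [("dock", 15.0)],
}
route_clear = plan(GRAPH, "dock", "pack", congestion={})
route_busy = plan(GRAPH, "dock", "pack", congestion={"A": 2})
```

With aisle A clear, robots take it (cost 20); with two robots queued there, the penalty diverts traffic through B (cost 24) instead of adding to the jam.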

How to Measure Progress (That Actually Predicts ROI)

- Task success under change: New items, new lighting, or new operators—measure without re-teaching.

- Human interventions per hour: The most honest autonomy metric.

- Mean time to recovery: From fault to productive motion.

- OEE uplift or lines/hour: Tie technical improvements to throughput.

- Safety leading indicators: Near-misses, guard disablements, and “stop” events per 1,000 cycles.

- Learning velocity: Simulation regressions caught per week, patches per month, and time from idea to A/B test.
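Several of these metrics can be computed from one chronological event log. In this sketch, the event kinds ("cycle", "stop", "intervention", "fault", "resume") are a hypothetical schema, with "resume" marking the return to productive motion; the numbers are illustrative.

```python
def progress_metrics(events, runtime_s):
    """Interventions/hour, mean time to recovery (s), and stops per 1,000 cycles.

    events: chronologically sorted (timestamp_s, kind) tuples.
    """
    cycles = sum(1 for _, k in events if k == "cycle")
    stops = sum(1 for _, k in events if k == "stop")
    interventions = sum(1 for _, k in events if k == "intervention")
    repair_times, fault_start = [], None
    for t, kind in events:
        if kind == "fault" and fault_start is None:
            fault_start = t
        elif kind == "resume" and fault_start is not None:
            repair_times.append(t - fault_start)   # fault to productive motion
            fault_start = None
    return {
        "interventions_per_hour": interventions / (runtime_s / 3600.0),
        "mttr_s": sum(repair_times) / len(repair_times) if repair_times else 0.0,
        "stops_per_1000_cycles": 1000 * stops / cycles if cycles else 0.0,
    }

# Illustrative two-hour run: 400 cycles, 2 faults, 2 protective stops, 3 interventions.
events = [(i * 15.0, "cycle") for i in range(400)] + [
    (100.0, "fault"), (160.0, "resume"), (500.0, "fault"), (620.0, "resume"),
    (300.0, "stop"), (900.0, "stop"),
    (1000.0, "intervention"), (2000.0, "intervention"), (3000.0, "intervention"),
]
events.sort()
metrics = progress_metrics(events, runtime_s=7200)
```

Deriving every metric from the same log keeps the numbers consistent with each other, which matters when they are used to argue ROI.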

A Simple 4-Week Starter Plan

Week 1 — Scope and Safety

- Pick one task (e.g., cobot pick-and-place feeding a manual inspection).

- Draft a one-page safety plan and risk register; define success metrics.

Week 2 — Simulate and Script

- Build the cell in a simulator; validate reach, occlusion, and cycle time.

- Record/teach waypoints; add a basic perception node with fiducials.

Week 3 — First Motion and Metrics

- Deploy to real hardware; run 100 cycles at low speed with a safety steward.

- Log success rate, near-misses, and interventions; fix the top three issues.
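Picking "the top three issues" is a Pareto count over logged failure causes. In this sketch, the failure labels are hypothetical entries a safety steward might record, assuming at most one failure per cycle.

```python
from collections import Counter

# Hypothetical failure labels logged during 100 low-speed cycles.
failure_log = ["missed grasp", "part not presented", "missed grasp", "protective stop",
               "missed grasp", "part not presented", "vision timeout", "protective stop",
               "missed grasp", "protective stop"]

def top_issues(log, n=3):
    """Most frequent failure causes with their share of all failures."""
    counts = Counter(log)
    total = sum(counts.values())
    return [(cause, count, count / total) for cause, count in counts.most_common(n)]

cycles = 100
success_rate = (cycles - len(failure_log)) / cycles
top3 = top_issues(failure_log)
```

Here the top three causes account for 90% of failures, so fixing them first gives the clearest signal for the Week 4 go/no-go decision.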

Week 4 — Close the Loop and Plan Scale

- Add speed-and-separation monitoring; run a full shift on a non-critical product.

- Present results; decide go/no-go to expand to a second SKU or a second shift.

FAQs

1) Are humanoids ready to replace most warehouse work?

Not yet. Early pilots show promise on ergonomic tote moves and supervised transfers, but reliability and manipulation breadth still limit scope. Start with narrow, low-risk tasks.

2) How do I know whether to choose a cobot or an industrial arm?

If you need high payloads, long reach, or fast cycles with fixed guarding, choose an industrial arm. For mixed-model, human-shared stations with lower payloads and more changeovers, cobots shine.

3) What’s the easiest first step in AI-enabled robotics?

Start with vision that helps a traditional program—barcode reading, pose estimation—then graduate to goal-conditioned skills and language-based tasking as confidence grows.

4) Do I need a high-end GPU at the edge?

For simple pick-and-place with fiducials, no. For multi-camera perception and learned policies, yes. Prototype in the cloud, but plan to run latency-sensitive inference on-board.

5) How do we keep humans safe when robots share the floor?

Combine procedural controls (training, signage) with technical controls (e-stops, speed-and-separation). Validate with routine drills and near-miss tracking.

6) Are drones finally allowed to fly long distances without spotters?

Waivers and specific approvals are expanding, and proposed rules are in motion. Practical BVLOS remains tightly regulated; expect location-dependent procedures.

7) What’s the real ROI timeline for a first warehouse robot?

Small pilots can hit payback in months if scoped to clear bottlenecks. The biggest delays come from integration and change management, not the robot itself.

8) Is autonomous surgery actually real?

Autonomous subtasks in animal models are real and improving; full human surgeries without clinician oversight are not approved. Expect gradual autonomy under surgeon supervision.

9) Should startups build hardware or focus on software?

Unless you have a killer actuator or sensor innovation, leverage mature hardware and spend your cycles on high-leverage software—perception, planning, data tooling, and ops.

10) How do I avoid vendor lock-in?

Standardize on open middleware, insist on data portability, and maintain digital twins you control. Negotiate exit clauses and API commitments up front.

Conclusion

Ten years ago, robots were impressive demos with narrow utility. Today, they’re dependable teammates in factories and warehouses, credible tools in operating rooms and on job sites, and they’re beginning to reason about open-ended tasks. The evolution wasn’t one breakthrough—it was the compounding of AI-native control, safer human-robot collaboration, realistic simulation, and clearer rules of the road. Start small, measure honestly, and build the organizational muscles—safety, data, maintenance—that turn pilots into profit.

Call to action: Pick one task, one robot, and one metric—then ship your first four-week pilot.

References

- An Electric New Era for Atlas, Boston Dynamics, April 13, 2024. https://bostondynamics.com/blog/electric-new-era-for-atlas/

- RT-2: New model translates vision and language into action, Google DeepMind, July 28, 2023. https://deepmind.google/discover/blog/rt-2-new-model-translates-vision-and-language-into-action/

- RT-2: Vision-Language-Action Models Transfer Web Knowledge to Robotic Control, arXiv, July 28, 2023. https://arxiv.org/abs/2307.15818

- Vision-Language-Action Models: RT-2 (project page and paper PDF), Robotics Transformer 2, 2023. https://robotics-transformer2.github.io/

- Amazon introduces new robotics solutions, About Amazon, October 18, 2023. https://www.aboutamazon.com/news/operations/amazon-introduces-new-robotics-solutions

- Amazon has more than 750,000 robots working in its fulfillment centers. Here are some of the things they can do., Business Insider, February 2025. https://www.businessinsider.com/how-amazon-uses-robots-sort-transport-packages-warehouses-2025-2

- As Amazon expands use of warehouse robots, what will it mean for workers?, AP News, 2024. https://apnews.com/article/6da0e5ed0273ed15ec43b38b007918df

- Record of 4 Million Robots Working in Factories Worldwide, International Federation of Robotics, September 24, 2024. https://ifr.org/ifr-press-releases/news/record-of-4-million-robots-working-in-factories-worldwide

- World Robotics 2024 – Press Conference Slides (PDF), International Federation of Robotics, September 2024. https://ifr.org/img/worldrobotics/Press_Conference_2024.pdf

- World Robotics 2023 Report: Asia ahead of Europe and the Americas, International Federation of Robotics, September 26, 2023. https://ifr.org/ifr-press-releases/news/world-robotics-2023-report-asia-ahead-of-europe-and-the-americas

- Collaborative Robots – How Robots Work alongside Humans, International Federation of Robotics, December 4, 2024. https://ifr.org/ifr-press-releases/news/how-robots-work-alongside-humans

- ISO/TS 15066:2016 — Robots and robotic devices — Collaborative robots, International Organization for Standardization, 2016. https://www.iso.org/standard/62996.html

- PD ISO/TS 15066:2016 (publication details), BSI Knowledge, March 31, 2016. https://knowledge.bsigroup.com/products/robots-and-robotic-devices-collaborative-robots

- ROS 2 Jazzy Jalisco Released, Open Robotics, May 30, 2024. https://www.openrobotics.org/blog/2024/5/ros-jazzy-jalisco-released