Disclaimer: This article discusses technology used in health and safety contexts, including stress detection and driver monitoring. It is for informational purposes only and does not constitute medical advice or legal compliance guidance. For medical concerns, always consult a qualified healthcare professional.

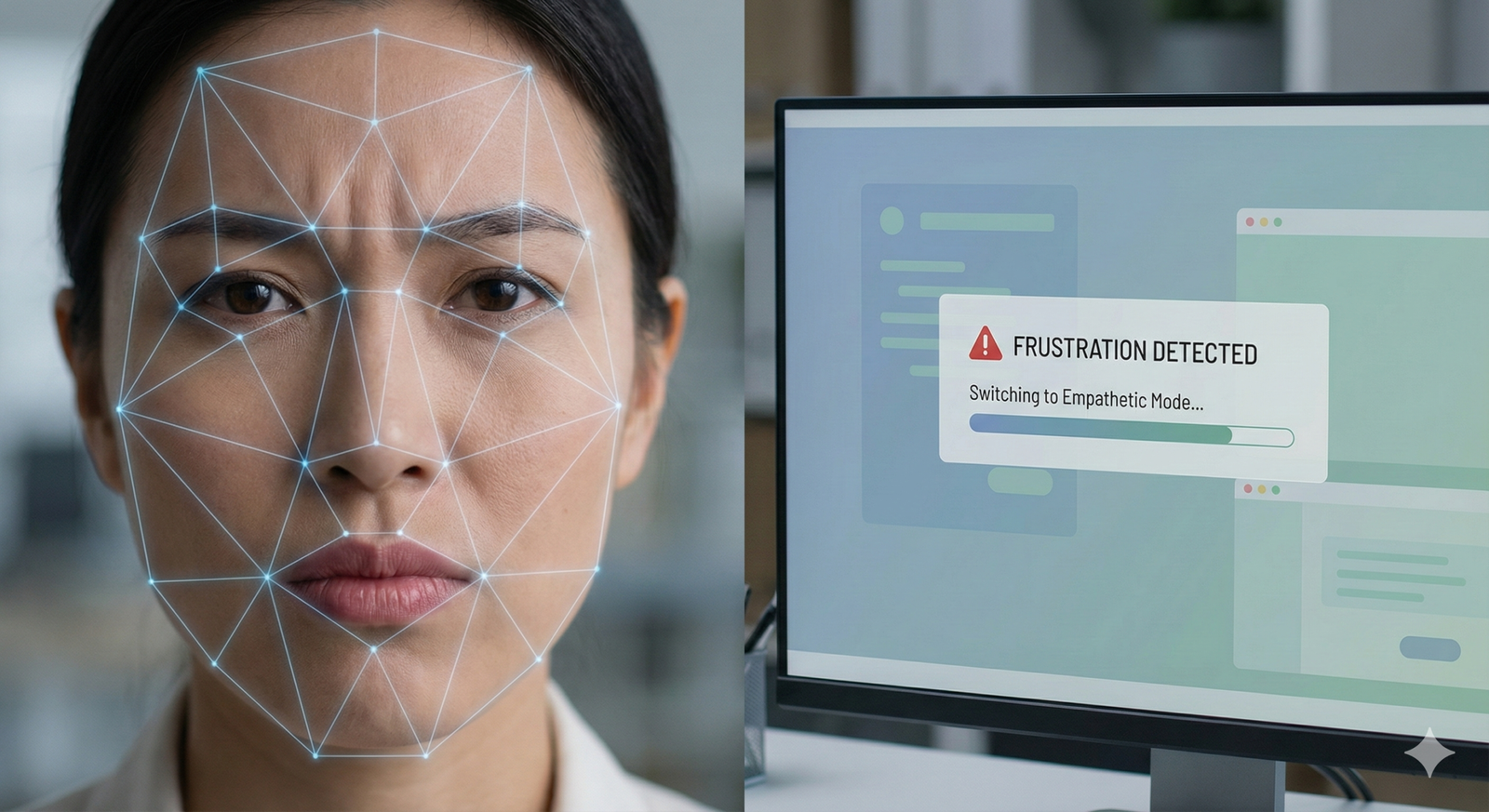

Imagine a car that knows when you are experiencing road rage and plays calming music, or a customer service chatbot that detects the subtle tremor of stress in your voice and immediately routes you to a senior human agent. This is not science fiction; it is the rapidly evolving reality of emotion AI.

Also known as affective computing, emotion AI represents a massive leap forward from the rigid, logic-based computing of the past. While traditional AI excels at IQ—processing data, calculating odds, and generating text—emotion AI aims to provide machines with EQ (Emotional Intelligence). By analyzing facial expressions, vocal intonations, body language, and even physiological signals, these systems attempt to “read” the room and adapt accordingly.

However, giving machines the power to gauge our internal states brings complex challenges. From the technical hurdles of interpreting cultural nuances to the profound ethical questions surrounding privacy and “emotional surveillance,” the landscape is vast and thorny.

In this guide, emotion AI refers to the branch of artificial intelligence that detects, interprets, and simulates human emotional states using various data inputs.

Key Takeaways

- Beyond Text: Emotion AI goes deeper than simple text sentiment analysis by using multimodal data—video (facial expressions), audio (tone/pitch), and biometrics (heart rate/skin conductance).

- Real-World Impact: It is currently active in automotive safety (detecting drowsiness), call centers (coaching agents), and healthcare (mental health monitoring).

- Technical Complexity: It relies on “multimodal fusion,” combining different signal types to guess an internal state, often using frameworks like Ekman’s six basic emotions.

- Ethical Risks are High: Privacy concerns, potential bias in training data, and the risk of manipulation are significant hurdles facing the industry.

- Regulation is Incoming: As of January 2026, frameworks like the EU AI Act are placing strict guardrails on where and how emotion recognition can be used, particularly in workplaces and schools.

Who This Is For (and Who It Isn’t)

This guide is for:

- Business Leaders & Product Managers: Looking to understand how to integrate empathy into user experiences or customer support workflows.

- Developers & Data Scientists: Seeking a conceptual overview of the modalities and frameworks used in affective computing.

- Curious Consumers & Privacy Advocates: Wanting to know how much devices understand about their feelings and the privacy implications involved.

This guide is not for:

- Clinical Psychologists: Looking for medical diagnostic criteria or treatment protocols.

- Hardware Engineers: Seeking deep-level schematics for biometric sensor construction.

What Is Emotion AI? (Defining Affective Computing)

At its core, emotion AI is a subset of artificial intelligence designed to bridge the gap between human emotion and digital response. The term “affective computing” was coined by Rosalind Picard of MIT’s Media Lab in the mid-1990s and popularized by her 1997 book, Affective Computing. The premise was simple yet revolutionary: if computers are to interact naturally with humans, they must recognize and respond to human emotion.

Distinction From Sentiment Analysis

It is common to confuse emotion AI with sentiment analysis, but they differ in scope and depth:

- Sentiment Analysis: Primarily text-based (NLP). It analyzes words to determine if a statement is positive, negative, or neutral. It reads what you said.

- Emotion AI: Multimodal. It analyzes how you said it. It looks at the pitch of your voice, the furrow of your brow, the pause in your speech, and potentially your heart rate variability. It aims to identify specific states like anger, joy, surprise, or fatigue.

The “EQ” of Machines

The goal of this technology is not necessarily for the machine to “feel” emotions (which remains firmly in the realm of philosophy and sci-fi), but to simulate empathy. If a machine can recognize that a user is confused or stressed, it can alter its behavior—slowing down a tutorial, changing its tone of voice, or alerting a human supervisor. This capability transforms the user interface from a static tool into an adaptive partner.

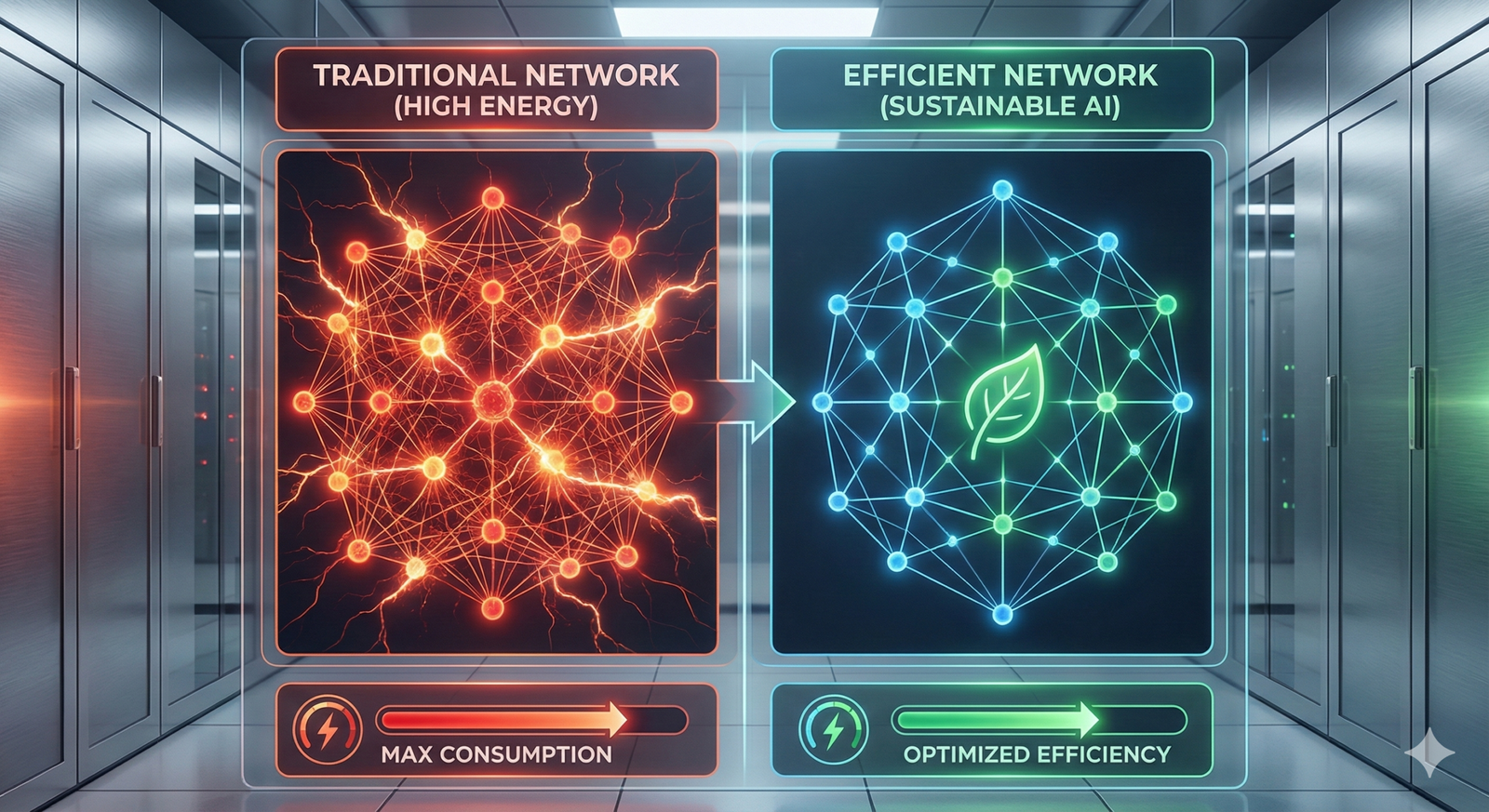

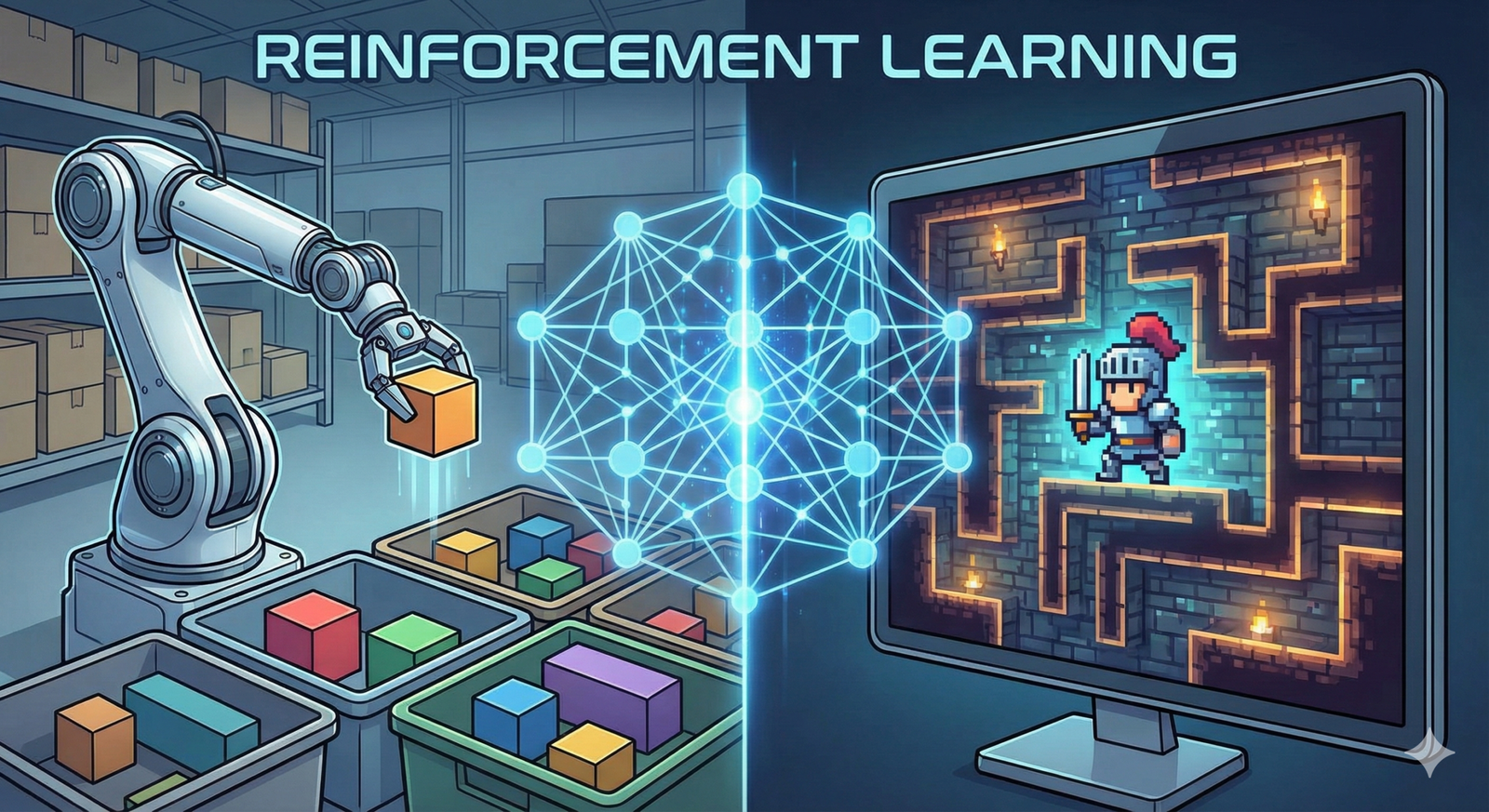

How Emotion AI Works: The Technology Behind the Sense

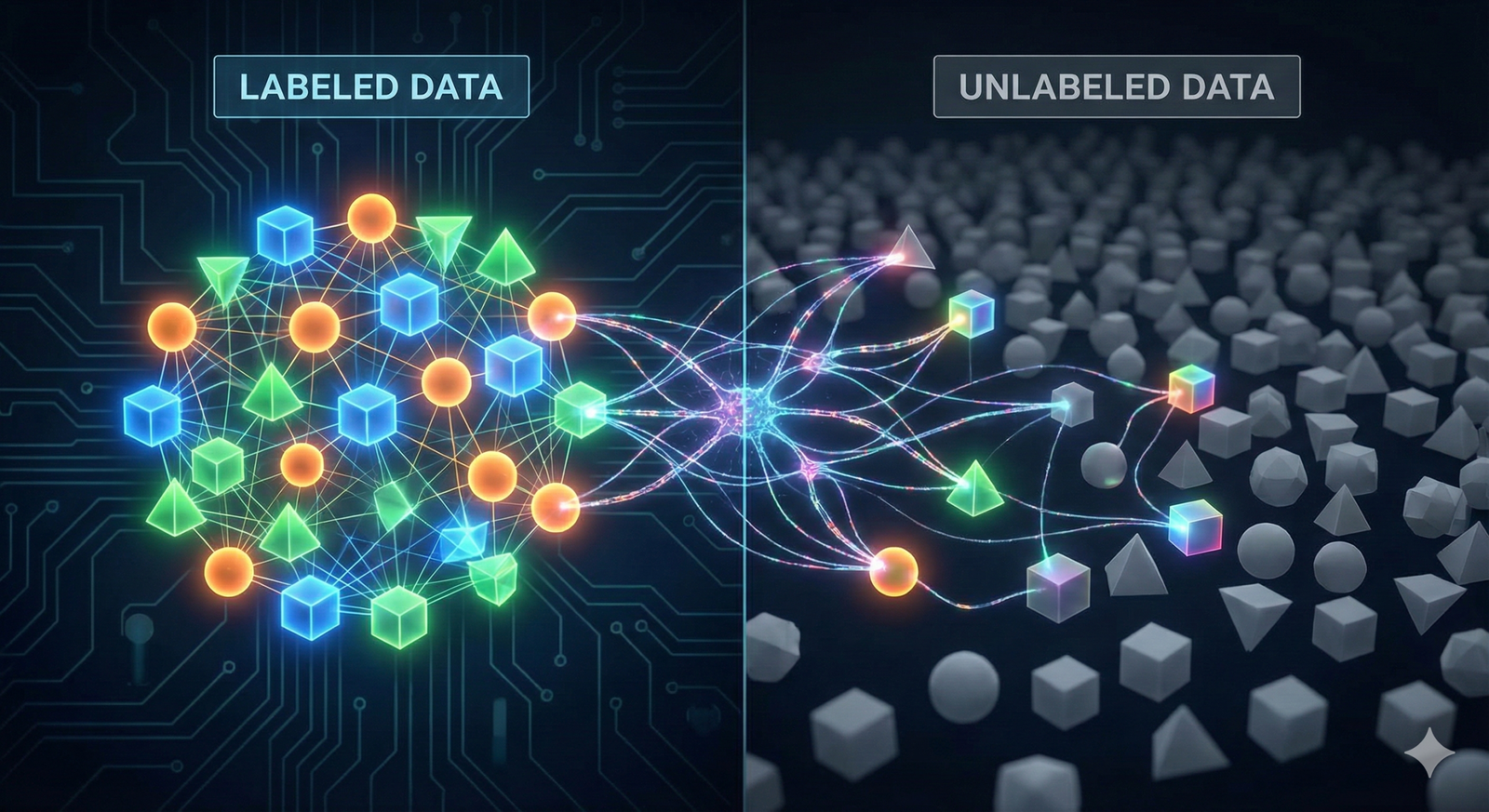

Emotion AI does not possess intuition. It relies on pattern recognition, comparing incoming data against massive datasets of labeled human behaviors. The process generally follows a pipeline: Data Capture → Processing & Fusion → Classification.

1. Data Capture (The Modalities)

To gauge stress or happiness, the system needs inputs. These are the “senses” of the AI:

- Computer Vision (Facial Coding): Cameras track key landmarks on a face (corners of the mouth, eyebrows, nose tip). The system measures the movement of these points to identify micro-expressions.

- Audio Processing (Voice Analysis): Microphones capture speech. The AI analyzes prosody—the rhythm, stress, and intonation of speech—rather than just the words. It looks for tremors, volume spikes, or rapid pacing indicative of stress.

- Biometrics (Physiological Sensors): Wearables (like smartwatches) or specialized sensors measure heart rate variability (HRV), galvanic skin response (sweat), and respiration. These are often the most reliable indicators of physiological stress.

- Text (NLP): While basic, the choice of words and punctuation still provides context to the other signals.

2. Processing and Multimodal Fusion

A person might smile while speaking angrily (sarcasm), or cry tears of joy, so a single data source is often misleading. More advanced emotion AI systems therefore combine modalities, a technique often called multimodal or “sensor” fusion, so that each signal can be checked against the others.

- Example: If facial recognition sees a smile, but voice analysis detects high-stress tremors and text analysis finds negative words, the system might classify the emotion as “nervousness” or “sarcasm” rather than “happiness.”
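To make the fusion step concrete, here is a minimal sketch of late (decision-level) fusion, one common way to combine modalities: each modality’s classifier is assumed to output a probability distribution over the same labels, and those distributions are averaged with per-modality weights. The labels, scores, and equal weights are illustrative assumptions, not values from any real product; many systems instead fuse earlier, at the feature level.

```python
# Late (decision-level) fusion: average per-modality probability vectors.
# Labels, scores, and weights are illustrative assumptions.
LABELS = ("happiness", "nervousness", "anger")


def late_fusion(scores_by_modality, weights):
    """Weighted average of per-modality probability distributions."""
    total_weight = sum(weights[m] for m in scores_by_modality)
    fused = {label: 0.0 for label in LABELS}
    for modality, scores in scores_by_modality.items():
        share = weights[modality] / total_weight
        for label in LABELS:
            fused[label] += share * scores.get(label, 0.0)
    return fused


observations = {
    "face": {"happiness": 0.7, "nervousness": 0.2, "anger": 0.1},   # smile detected
    "voice": {"happiness": 0.1, "nervousness": 0.7, "anger": 0.2},  # stress tremor
    "text": {"happiness": 0.1, "nervousness": 0.5, "anger": 0.4},   # negative words
}
weights = {"face": 1.0, "voice": 1.0, "text": 1.0}

fused = late_fusion(observations, weights)
label = max(fused, key=fused.get)
print(label, round(fused[label], 2))  # nervousness 0.47
```

With these hypothetical scores the smile raises “happiness,” but the voice and text evidence outweigh it, so the fused label is “nervousness,” mirroring the example above. In practice the weights would be learned or tuned for each deployment.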

3. Classification Frameworks

Once the data is processed, the AI must label it. Two main psychological frameworks are used:

- Categorical (Ekman’s Model): Classifies emotions into discrete buckets: Anger, Disgust, Fear, Happiness, Sadness, and Surprise. This is the most common approach for commercial software.

- Dimensional (The Circumplex Model): Plots emotion on a graph. The X-axis might be Valence (positive vs. negative) and the Y-axis might be Arousal (calm vs. excited). This allows for more nuance, distinguishing between “depressed” (negative valence, low arousal) and “stressed” (negative valence, high arousal).
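The dimensional approach can be sketched as a mapping from a (valence, arousal) point to a coarse quadrant label. This assumes an upstream model has already produced both values on a -1 to 1 scale; the quadrant names are illustrative rather than a standard taxonomy, and the distance from the neutral origin is used here as a rough intensity measure.

```python
import math


def circumplex_reading(valence, arousal):
    """Map a (valence, arousal) point in [-1, 1] x [-1, 1] to a coarse
    quadrant label plus an intensity (distance from the neutral origin)."""
    if not (-1.0 <= valence <= 1.0 and -1.0 <= arousal <= 1.0):
        raise ValueError("valence and arousal must lie in [-1, 1]")
    if valence >= 0:
        label = "excited/happy" if arousal >= 0 else "calm/content"
    else:
        label = "stressed/angry" if arousal >= 0 else "depressed/sad"
    return label, math.hypot(valence, arousal)


print(circumplex_reading(-0.6, 0.8))   # negative valence, high arousal
print(circumplex_reading(-0.6, -0.5))  # negative valence, low arousal
```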

Key Modalities of Emotion Detection

To understand how machines that gauge stress operate in the real world, we must look closer at the specific technologies driving the industry.

Facial Action Coding System (FACS)

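This is the gold standard for visual emotion AI. Developed by psychologists Paul Ekman and Wallace Friesen, FACS catalogs the specific facial muscle movements (Action Units) that make up expressions. AI models locate landmarks on the face, estimate which Action Units are active, and map combinations of them to emotions.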

- How it works: If the inner eyebrows raise (Action Unit 1) and the jaw drops (Action Unit 26), the system calculates the probability of “Surprise.”

- Limitations: It struggles with cultural differences (in some cultures, smiling is a sign of politeness even during disagreement) and people who have “poker faces” or facial paralysis.
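A toy version of the FACS step might score each emotion by the fraction of its prototype Action Units that are active. The prototypes below are simplified versions of commonly cited AU combinations (for example, AU6 + AU12 for a smile); real systems use learned, intensity-weighted models, and this overlap score is not a calibrated probability.

```python
# Simplified, commonly cited Action Unit (AU) prototypes. Real systems use
# learned, intensity-weighted models rather than fixed sets like these.
PROTOTYPES = {
    "happiness": {6, 12},        # cheek raiser + lip corner puller
    "sadness": {1, 4, 15},       # inner brow raiser + brow lowerer + lip corner depressor
    "surprise": {1, 2, 5, 26},   # inner/outer brow raisers + upper lid raiser + jaw drop
}


def score_emotions(active_aus):
    """Fraction of each prototype's AUs that are currently active."""
    return {
        emotion: len(aus & active_aus) / len(aus)
        for emotion, aus in PROTOTYPES.items()
    }


scores = score_emotions({1, 26})  # inner brows raised, jaw dropped
print(max(scores, key=scores.get), scores)
```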

Voice and Speech Analytics

Voice is often harder to mask than facial expressions. Emotion recognition systems for audio examine:

- Pitch: High pitch can indicate excitement or fear.

- Tone: Flat tones may indicate boredom or depression.

- Jitter and Shimmer: Cycle-to-cycle fluctuations in the pitch period (jitter) and loudness (shimmer) of the voice, which often correlate with high cognitive load or stress.
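Jitter and shimmer are usually computed over a sequence of individual vocal-fold cycles. The sketch below uses the simple “local” definition (mean absolute difference between consecutive cycles, divided by the mean), which is one of several variants in use. It assumes a pitch tracker has already extracted the cycle periods and peak amplitudes; the numbers are hypothetical.

```python
def local_perturbation(values):
    """Mean absolute difference between consecutive cycles, divided by the
    mean value. On cycle periods this is local jitter; on per-cycle peak
    amplitudes it is local shimmer."""
    if len(values) < 2:
        raise ValueError("need at least two cycles")
    diffs = [abs(a - b) for a, b in zip(values, values[1:])]
    return (sum(diffs) / len(diffs)) / (sum(values) / len(values))


periods_ms = [5.00, 5.02, 4.99, 5.01]  # hypothetical cycle lengths (about 200 Hz)
amplitudes = [0.80, 0.76, 0.82, 0.78]  # hypothetical per-cycle peak amplitudes

jitter = local_perturbation(periods_ms)
shimmer = local_perturbation(amplitudes)
print(f"jitter={jitter:.2%}  shimmer={shimmer:.2%}")
```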

Physiological and Wearable Sensors

This is the frontier of “invisible” emotion tracking.

- EDA (Electrodermal Activity): Measuring skin conductance (sweat gland activity). High peaks usually correlate with high arousal (stress or excitement).

- HRV (Heart Rate Variability): Reduced variability between heartbeats is a widely used physiological marker of stress and fight-or-flight responses.

- Eye Tracking: Pupil dilation can indicate intense focus or cognitive overload.
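HRV has many summary statistics; one widely used time-domain measure is RMSSD, the root mean square of successive differences between heartbeats. The sketch assumes RR intervals (milliseconds between beats) are already available from a chest strap or optical sensor, and both sample series are hypothetical. A meaningful stress threshold would depend on a person’s own baseline, so none is hard-coded here.

```python
import math


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


relaxed = [812, 845, 790, 860, 805, 850]   # hypothetical: beat timing varies a lot
stressed = [642, 648, 640, 646, 641, 645]  # hypothetical: faster, more regular beats

print(f"relaxed RMSSD:  {rmssd(relaxed):.1f} ms")
print(f"stressed RMSSD: {rmssd(stressed):.1f} ms")
```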

Real-World Applications: Where Emotion AI Is Used Today

While some applications are still experimental, emotion AI is already embedded in several industries, driving efficiency and safety.

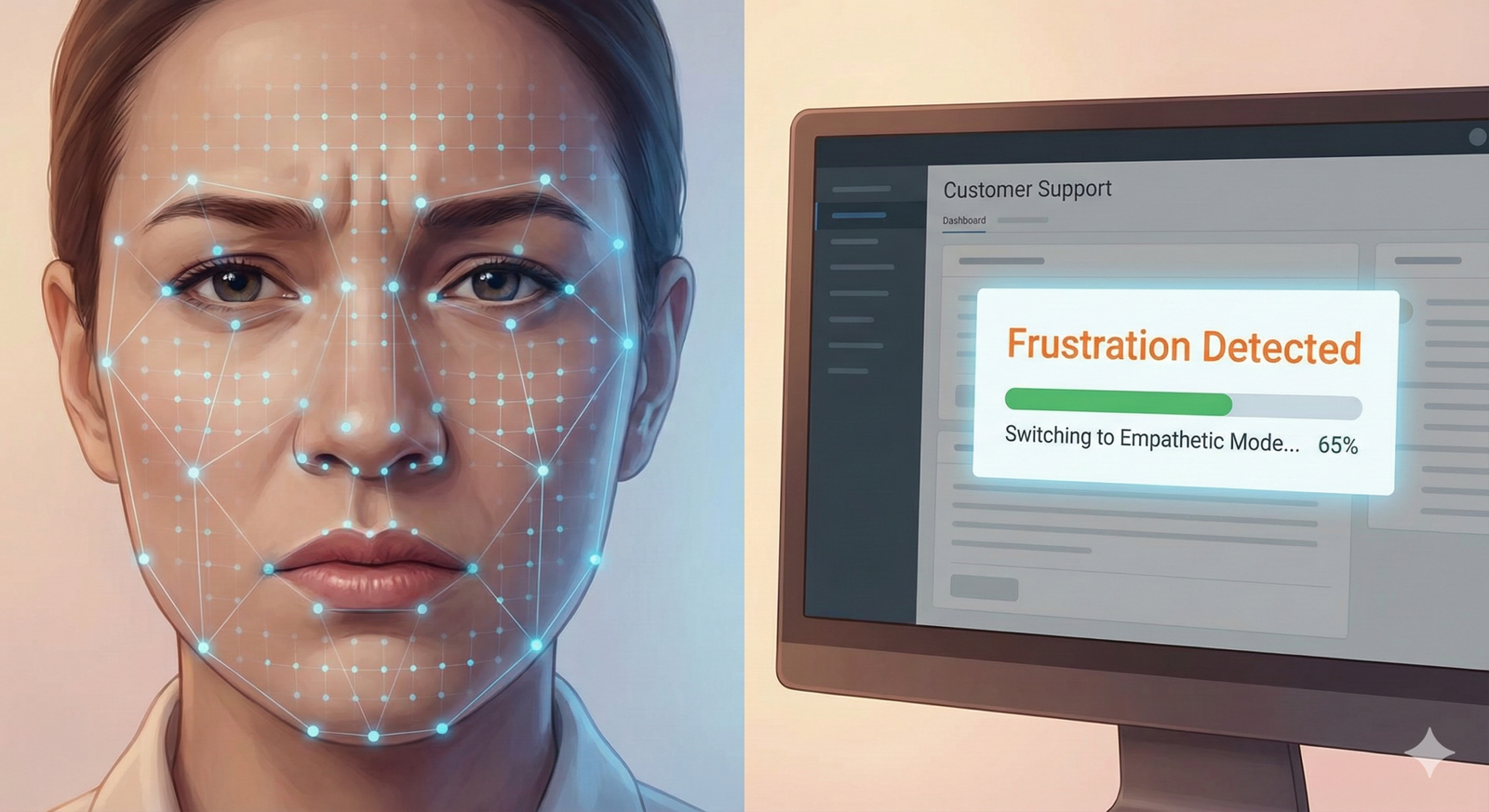

1. Customer Service and Call Centers

This is one of the largest commercial use cases today.

- Agent Coaching: AI analyzes the customer’s voice in real-time. If the customer sounds irate, the AI prompts the agent on their screen: “Customer sounds frustrated. Try speaking more slowly and using empathy statements.”

- Routing: “Sentiment-based routing” directs angry customers to agents with high EQ scores or specialized retention training, rather than a general queue.

- Post-Call Analytics: Instead of manually reviewing calls, managers get a dashboard showing “Stress Peaks” across thousands of calls, identifying product issues that trigger negative emotions.
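The agent-coaching loop could be sketched as a small monitor that smooths a stream of per-second stress scores and prompts the agent only when the smoothed value stays high. The upstream voice model producing 0-1 scores, the smoothing factor, and the 0.7 threshold are all hypothetical; an exponential moving average is just one simple way to keep a single loud moment from triggering a prompt.

```python
from dataclasses import dataclass
from typing import Optional

PROMPT = ("Customer sounds frustrated. Try speaking more slowly "
          "and using empathy statements.")


@dataclass
class CallMonitor:
    """Hypothetical real-time coach: smooths per-second stress scores (0-1)
    from an upstream voice model and decides when to prompt the agent."""
    alpha: float = 0.3             # smoothing factor (assumed)
    prompt_threshold: float = 0.7  # assumed; would need tuning per deployment
    smoothed: float = 0.0

    def update(self, stress_score: float) -> Optional[str]:
        # Exponential moving average: a single spike from a calm baseline
        # cannot cross the threshold, but sustained stress can.
        self.smoothed = (self.alpha * stress_score
                         + (1 - self.alpha) * self.smoothed)
        return PROMPT if self.smoothed >= self.prompt_threshold else None


monitor = CallMonitor()
for second, score in enumerate([0.2, 0.9, 0.9, 0.9, 0.9, 0.9, 0.9]):
    message = monitor.update(score)
    if message:
        print(f"t={second}s: {message}")
        break
```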

2. Automotive: The Empathetic Car

With the rise of semi-autonomous driving, the car needs to know if the human is ready to take the wheel.

- Driver Monitoring Systems (DMS): Cameras inside the cabin track eyelid droop (fatigue) and gaze (distraction).

- Stress & Rage Detection: If the car detects road rage (aggressive voice, tight grip on the wheel via pressure sensors), it can adjust cabin lighting, lower the temperature, or suggest a break.

- Regulation: The EU General Safety Regulation (GSR) has phased in mandatory driver drowsiness and distraction warning systems for new vehicles between 2022 and 2026, accelerating the adoption of these technologies.
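Eyelid-droop detection is often summarized with PERCLOS, the proportion of time the eyes are mostly closed. The sketch below assumes a vision model already outputs a per-frame eye-openness value between 0 (closed) and 1 (fully open), counts frames that are at least 80% closed, and applies a hypothetical alert threshold; a production system would tune these values for its cameras and drivers.

```python
def perclos(openness, closed_at=0.2):
    """Fraction of frames in which the eye is at least 80% closed, i.e.
    per-frame openness <= 0.2 (the 'P80' variant of PERCLOS)."""
    if not openness:
        raise ValueError("empty window")
    closed_frames = sum(1 for value in openness if value <= closed_at)
    return closed_frames / len(openness)


def drowsiness_alert(openness, threshold=0.15):
    """Hypothetical alert rule: warn when PERCLOS over the window reaches
    an assumed threshold."""
    return perclos(openness) >= threshold


alert_window = [1.0] * 95 + [0.1] * 5     # normal blinking
drowsy_window = [0.9] * 70 + [0.1] * 30   # long, slow eyelid closures
print(perclos(alert_window), drowsiness_alert(alert_window))
print(perclos(drowsy_window), drowsiness_alert(drowsy_window))
```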

3. Mental Health and Healthcare

Mental health AI applications use objective, continuously collected data to support clinicians’ largely subjective assessments.

- Companion Bots: Robots like Moxie (for children) or ElliQ (for seniors) have used emotion AI to detect loneliness or sadness and initiate conversations to improve mood.

- Telehealth Support: Apps analyze video diaries to track patient progress. If a patient with depression shows a flattening of vocal tone and reduced facial movement over weeks, the app can flag this potential relapse to their therapist.

- Autism Support: Wearables (like Google Glass implementations) have been used to help children with autism recognize the emotions of others by displaying the emotion name (e.g., “Happy,” “Angry”) in their field of view.

4. Education and Corporate Training

- Student Engagement: Some experimental platforms analyze students’ faces during remote learning to detect confusion or boredom. If the class average for “confusion” spikes, the teacher knows to re-explain the concept. Note: This is highly controversial, and in the EU, emotion recognition in educational institutions is now prohibited except for medical or safety reasons.

- Public Speaking Training: Tools analyze a user’s presentation style, giving feedback on eye contact, filler words, and whether they sounded confident or anxious.

5. Media and Neuromarketing

Brands want to know if their ads work.

- Ad Testing: Instead of asking a focus group “Did you like this?”, researchers film the group’s faces while they watch. The AI detects split-second micro-expressions of joy, disgust, or skepticism that the viewers might not even admit to themselves.

- Game Testing: Video game developers measure player frustration vs. engagement to balance difficulty levels.

The Benefits: Why Add EQ to Machines?

Why invest in machines that gauge stress? The benefits usually fall into three categories:

Hyper-Personalization

Current personalization is based on past clicks (behavior). Emotion AI allows personalization based on current state. If a learning app sees you are frustrated, it switches to an easier exercise. If a music app hears sadness in your voice request, it avoids upbeat party tracks.

Enhanced Safety

In contexts like driving, heavy machinery operation, or air traffic control, human error is often caused by fatigue or high stress. Emotion AI acts as a failsafe, detecting the physiological precursors to error before the accident happens.

Improved Human Connection (Paradoxically)

In telehealth or customer support, AI alerts can help humans be more empathetic. A tired doctor or a burnt-out call center agent might miss subtle cues that the AI picks up, reminding the professional to check in on the person’s feelings.

Ethical Concerns and Privacy Risks

This section is critical. Emotion AI ethics is one of the most hotly debated topics in technology. The capability to read minds—or at least, read the physical signals of the mind—creates significant risks.

1. The “Pseudo-Science” Critique

Many psychologists argue that facial coding is scientifically flawed. They contend that facial expressions of emotion are not universal; a scowl can mean anger, concentration, or bad eyesight. A widely cited review (Barrett et al., 2019) concluded that emotional states cannot be reliably inferred from facial movements alone. If an AI relies on a simplified model such as Ekman’s six emotions, it may fundamentally misinterpret complex human states. Relying on this for hiring decisions or security assessments is dangerous.

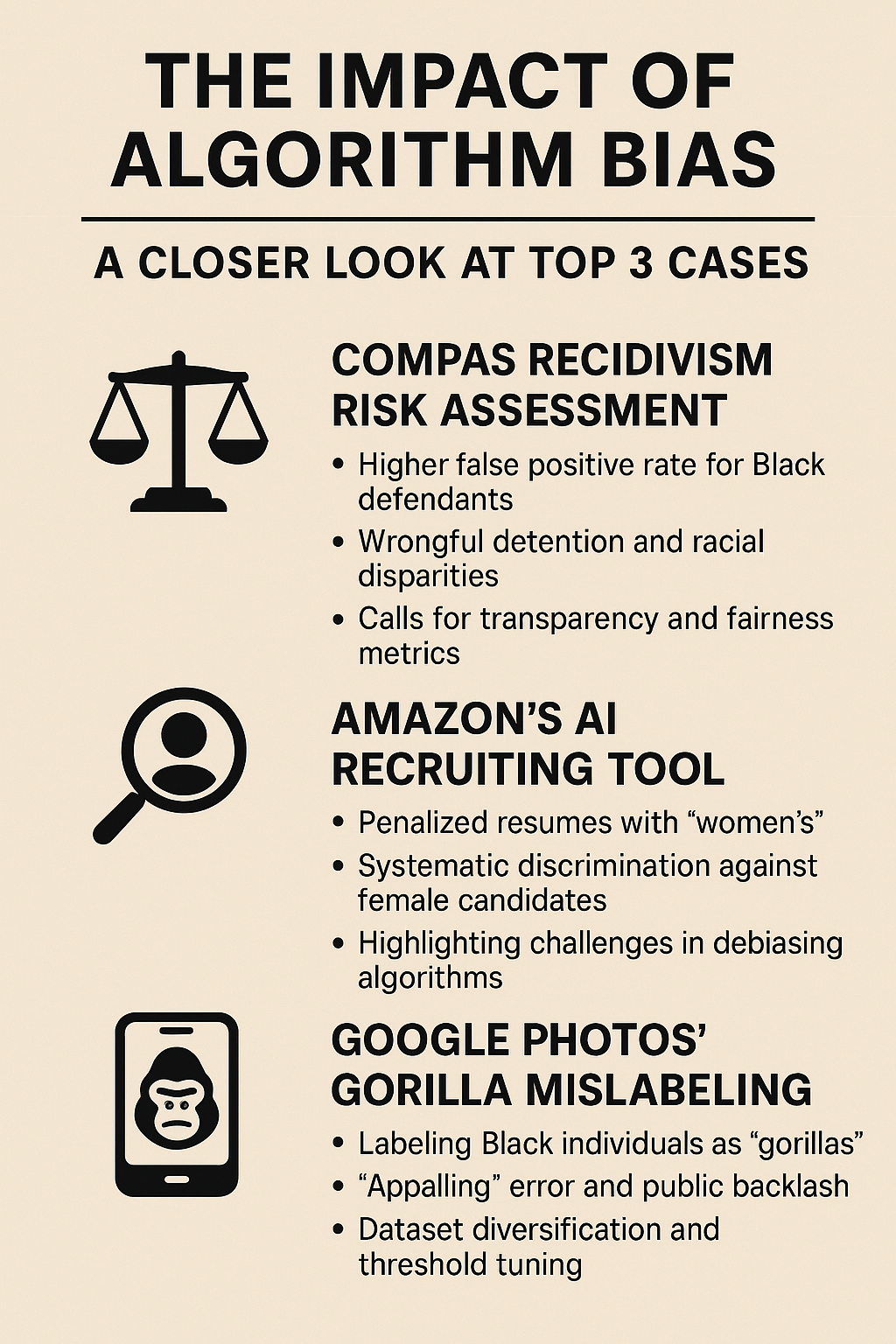

2. Bias and Discrimination

Training datasets have historically been skewed toward white, Western faces.

- Racial Bias: AI models have been shown to misinterpret the facial expressions of Black men as more “aggressive” than they are.

- Cultural Bias: In some cultures, maintaining eye contact is respectful; in others, it is aggressive. If an AI system scoring job interviews expects Western norms, it will unfairly penalize candidates from other backgrounds.

3. Emotional Surveillance and Privacy

Privacy concerns are paramount. Your face, voice, and heart rate are biological data.

- Involuntary Disclosure: You can choose what you type, but you cannot easily control your micro-expressions or pupil dilation. Emotion AI strips away the “mask” people wear in public.

- Workplace Monitoring: The idea of a boss tracking “employee happiness” via webcams is dystopian to many. It creates pressure to “perform” happiness, leading to emotional exhaustion.

4. Manipulation (Nudging)

If an advertiser knows exactly when you are most vulnerable, insecure, or happy, they can time their pitch to be irresistibly effective. This moves marketing from persuasion to manipulation, potentially exploiting users’ emotional states for profit.

Regulatory Landscape: The EU AI Act and Beyond

Governments are stepping in. As of early 2026, the regulatory landscape is shifting from “wild west” to “strict compliance.”

- The EU AI Act: This landmark legislation classifies certain uses of emotion recognition as “unacceptable risk” or “high risk.”

- Prohibited: Since February 2025, the use of emotion recognition systems in workplaces and educational institutions has been banned, except where the system is intended for medical or safety reasons.

- High Risk: Systems used in law enforcement, border control, or recruitment are subject to rigorous conformity assessments.

- US State Laws: States like Illinois (BIPA) have strict laws regarding biometric data collection, which covers the facial geometry data often used in emotion AI.

Common Pitfalls in Implementing Emotion AI

For organizations looking to deploy emotion AI, failure often comes from these mistakes:

1. Ignoring Context

A person screaming at a football game is happy. A person screaming in a bank is likely angry or terrified. If the AI analyzes the face/voice without knowing the context (football game vs. bank), the classification will be wrong. Context-aware AI is the next hurdle for the industry.
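One way to make a classifier context-aware is to treat context as a prior and apply Bayes’ rule, so the same scream is weighted by how likely each emotion is at a football game versus in a bank. The likelihoods and priors below are invented for illustration, and the sketch assumes the acoustic evidence is independent of location once the emotion is known.

```python
def posterior(likelihood, prior):
    """Bayes' rule over discrete labels:
    P(emotion | signal, context) is proportional to
    P(signal | emotion) * P(emotion | context)."""
    unnormalized = {e: likelihood[e] * prior[e] for e in likelihood}
    total = sum(unnormalized.values())
    return {e: p / total for e, p in unnormalized.items()}


# Invented numbers: how likely a scream is under each emotional state.
scream_likelihood = {"joy": 0.6, "fear_or_anger": 0.7, "calm": 0.01}

# Invented context priors: how common each state is in each setting.
football_prior = {"joy": 0.6, "fear_or_anger": 0.1, "calm": 0.3}
bank_prior = {"joy": 0.03, "fear_or_anger": 0.07, "calm": 0.9}

for place, prior in [("football game", football_prior), ("bank", bank_prior)]:
    post = posterior(scream_likelihood, prior)
    print(place, max(post, key=post.get))
```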

2. Over-Trusting the Machine

Users often fall into “automation bias,” believing the computer is objective. If an AI says a candidate “looked dishonest” during an interview, hiring managers might believe it over their own judgment, even though the AI might simply be reacting to the candidate’s nervousness or poor lighting.

3. Neglecting User Consent

Using emotion analysis on customers or employees without explicit, informed consent is a fast track to PR disasters and lawsuits. “Transparency” is the watchword—users must know if their emotions are being analyzed.

Future Trends: The Road to Empathic Computing

Where is stress detection technology going next?

Generative Empathy

With the rise of Large Language Models (LLMs), emotion AI is merging with generative AI. Instead of just detecting “sadness,” the AI can generate a response whose tone fits the moment, using an empathetic voice and phrasing to comfort the user. We are moving from detection to interaction.

Ambient Intelligence

Sensors are moving from cameras to invisible radar and Wi-Fi sensing. Researchers are developing Wi-Fi routers that can detect the breathing rates and heartbeats of people in a room without them wearing any devices. This could enable “smart homes” that adjust lighting and music automatically when they detect high stress levels in the household.
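Once a sensing system has turned its raw measurements into a chest-motion signal, breathing rate can be estimated as the dominant frequency within a plausible breathing band. The sketch skips the hard part (Wi-Fi sensing typically works from channel state information) and assumes such a signal already exists. It evaluates a plain discrete Fourier transform only over 0.1-0.5 Hz (6-30 breaths per minute); the synthetic signal and 20 Hz sampling rate are assumptions.

```python
import math
import random


def breathing_rate_bpm(signal, fs, band_hz=(0.1, 0.5)):
    """Estimate breaths per minute as the strongest DFT component of a
    chest-motion signal inside a plausible breathing band."""
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]            # remove the DC offset
    k_min = math.ceil(band_hz[0] * n / fs)    # lowest DFT bin in the band
    k_max = math.floor(band_hz[1] * n / fs)   # highest DFT bin in the band
    best_k, best_mag = None, -1.0
    for k in range(max(k_min, 1), k_max + 1):
        re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
        im = sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
        magnitude = math.hypot(re, im)
        if magnitude > best_mag:
            best_k, best_mag = k, magnitude
    if best_k is None:
        raise ValueError("window too short to resolve the breathing band")
    return best_k * fs / n * 60.0             # bin index -> Hz -> breaths/min


fs = 20.0                     # assumed sampling rate of the extracted signal, Hz
n = int(60 * fs)              # one 60-second window
rng = random.Random(0)
chest = [math.sin(2 * math.pi * 0.25 * i / fs) + rng.gauss(0, 0.3)
         for i in range(n)]   # synthetic 0.25 Hz breathing plus noise
print(f"{breathing_rate_bpm(chest, fs):.1f} breaths/min")  # 15.0 breaths/min
```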

Longitudinal Emotion Tracking

The field is also moving from “state” (how you feel now) to “trait” (how you are changing over time). This is crucial for healthcare, where long-term trends in mood are more important than a single moment of frustration.
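Longitudinal tracking usually means comparing recent behavior with a personal baseline rather than a population norm. A minimal sketch, assuming one summary value per day (for example, a hypothetical vocal pitch-variability score), flags a sustained drop when the recent 7-day average sits more than two baseline standard deviations below the previous 14 days. The window lengths and threshold are illustrative, not clinical criteria.

```python
import statistics


def sustained_drop(daily_values, baseline_days=14, recent_days=7, z_threshold=-2.0):
    """Compare the mean of the most recent days with a personal baseline built
    from the days just before them. Returns (z, flagged), where z is how many
    baseline standard deviations the recent mean sits from the baseline mean."""
    if len(daily_values) < baseline_days + recent_days:
        raise ValueError("not enough history")
    baseline = daily_values[-(baseline_days + recent_days):-recent_days]
    recent = daily_values[-recent_days:]
    spread = statistics.stdev(baseline)
    if spread == 0:
        raise ValueError("baseline has no variation")
    z = (statistics.mean(recent) - statistics.mean(baseline)) / spread
    return z, z <= z_threshold


# Hypothetical daily pitch-variability scores: two stable weeks, then a flat week.
history = [30, 34] * 7 + [25] * 7
print(sustained_drop(history))
```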

Related Topics to Explore

- Multimodal Machine Learning: The engineering behind combining audio, video, and text data.

- Neuromarketing: How brain science and biometrics are reshaping advertising.

- Biometric Privacy Laws: Deep dives into GDPR, BIPA, and the EU AI Act.

- Human-Computer Interaction (HCI): The broader field of designing interfaces that work with human capabilities.

- Explainable AI (XAI): The challenge of understanding why an AI classified a face as “angry.”

Conclusion

Emotion AI represents a profound shift in our relationship with technology. We are moving from a world where we have to learn the language of machines (code, commands, clicks) to a world where machines are learning the language of humans (smiles, sighs, hesitation).

The potential for machines that gauge stress to save lives on the road, improve mental health care, and humanize customer service is immense. However, the technology sits on a razor’s edge. Without rigorous ethical standards, bias mitigation, and privacy protection, it risks becoming a tool for intrusive surveillance rather than empathetic support.

For businesses and developers, the path forward involves responsible innovation: prioritizing transparency, validating data across multiple modalities, and remembering that while AI can detect a smile, it takes a human to understand the meaning behind it.

FAQs

1. Can emotion AI really detect feelings?

Technically, no. It detects expressions and physiological signals that correlate with feelings. It maps physical data (a smile, a high heart rate) to statistical probabilities of an emotion. It cannot know your internal subjective experience, only your external display.

2. Is emotion AI legal in the workplace?

It varies by region. In the EU, the AI Act has banned emotion recognition in the workplace since February 2025, except for medical or safety reasons. In the US, it is generally legal but subject to privacy laws covering biometric data, such as Illinois’s BIPA and California’s CCPA/CPRA.

3. How accurate is stress detection technology?

Accuracy varies by modality. Physiological sensors (HRV, skin conductance) are generally more accurate for detecting physiological arousal (stress) than facial analysis, which can be easily faked or misinterpreted. Combining multiple data sources (multimodal fusion) typically improves accuracy over any single source.

4. Can I opt out of emotion tracking?

In many consumer applications (like cars or smart speakers), you can often toggle these features off in settings. However, in public surveillance or security contexts, opting out may not be possible, which is a major point of contention for privacy advocates.

5. Does emotion AI work on everyone?

Not equally. Systems are often less accurate for people with facial differences or facial paralysis, for neurodivergent individuals (who may express emotions differently), and for ethnic groups that were underrepresented in the training data.

6. What is the difference between mood and emotion in AI?

In AI terms, “emotion” usually refers to a short-term, transient reaction (e.g., surprise at a loud noise). “Mood” refers to a longer-lasting emotional state (e.g., feeling down for several days). Detecting mood requires longitudinal data tracking over time.

7. What sensors are used for stress detection?

Common sensors include optical heart rate sensors (PPG) in watches, galvanic skin response (GSR) sensors for sweat, cameras for pupil dilation and blink rate, and microphones for voice pitch analysis.

8. Is emotion AI used in hiring?

It has been used to analyze video interviews to gauge “culture fit” or confidence. However, this practice is highly controversial, scientifically questioned, and faces strict regulatory scrutiny (classified as “High Risk” in the EU) due to the potential for bias against candidates.

9. Can emotion AI detect lies?

“Lie detection” is a dubious claim. While AI can detect physiological stress markers often associated with deception (the same kind of signals a polygraph measures), these markers can also be caused by anxiety, fear, or excitement. Reliable lie detection remains scientifically unproven.

10. How does the automotive industry use emotion AI?

Automakers use it primarily for safety. Cameras and sensors detect “driver states” like drowsiness, distraction, or high stress/rage. The car then reacts by alerting the driver, tightening seatbelts, or enabling autonomous braking protocols to prevent accidents.

References

- MIT Media Lab. (n.d.). Affective Computing Group Projects. Massachusetts Institute of Technology. https://www.media.mit.edu/groups/affective-computing/overview/

- European Parliament. (2024). EU AI Act: First regulation on artificial intelligence. European Union. https://artificialintelligenceact.eu/

- Barrett, L. F., et al. (2019). Emotional Expressions Reconsidered: Challenges to Inferring Emotion From Human Facial Movements. Psychological Science in the Public Interest. https://journals.sagepub.com/doi/10.1177/1529100619832930

- Gartner. (2025). Hype Cycle for Artificial Intelligence, 2025. Gartner Research. https://www.gartner.com/en/research/methodologies/gartner-hype-cycle

- Information Commissioner’s Office (ICO). (2022). Biometric data and biometric recognition technologies. ICO UK. https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/

- Picard, R. W. (1997). Affective Computing. MIT Press.

- IEEE Standards Association. (2025). Ethical Considerations in Emulated Empathy and Affective Computing. IEEE. https://standards.ieee.org/

- Hume AI. (2024). The Science of Expression: Measuring Voice and Face. Hume AI Research. https://hume.ai

- Smart Eye. (2025). Driver Monitoring Systems and Interior Sensing. Smart Eye Automotive Solutions. https://smarteye.se

- American Psychological Association (APA). (2023). The role of technology in mental health services. APA. https://www.apa.org/topics/mental-health/technology