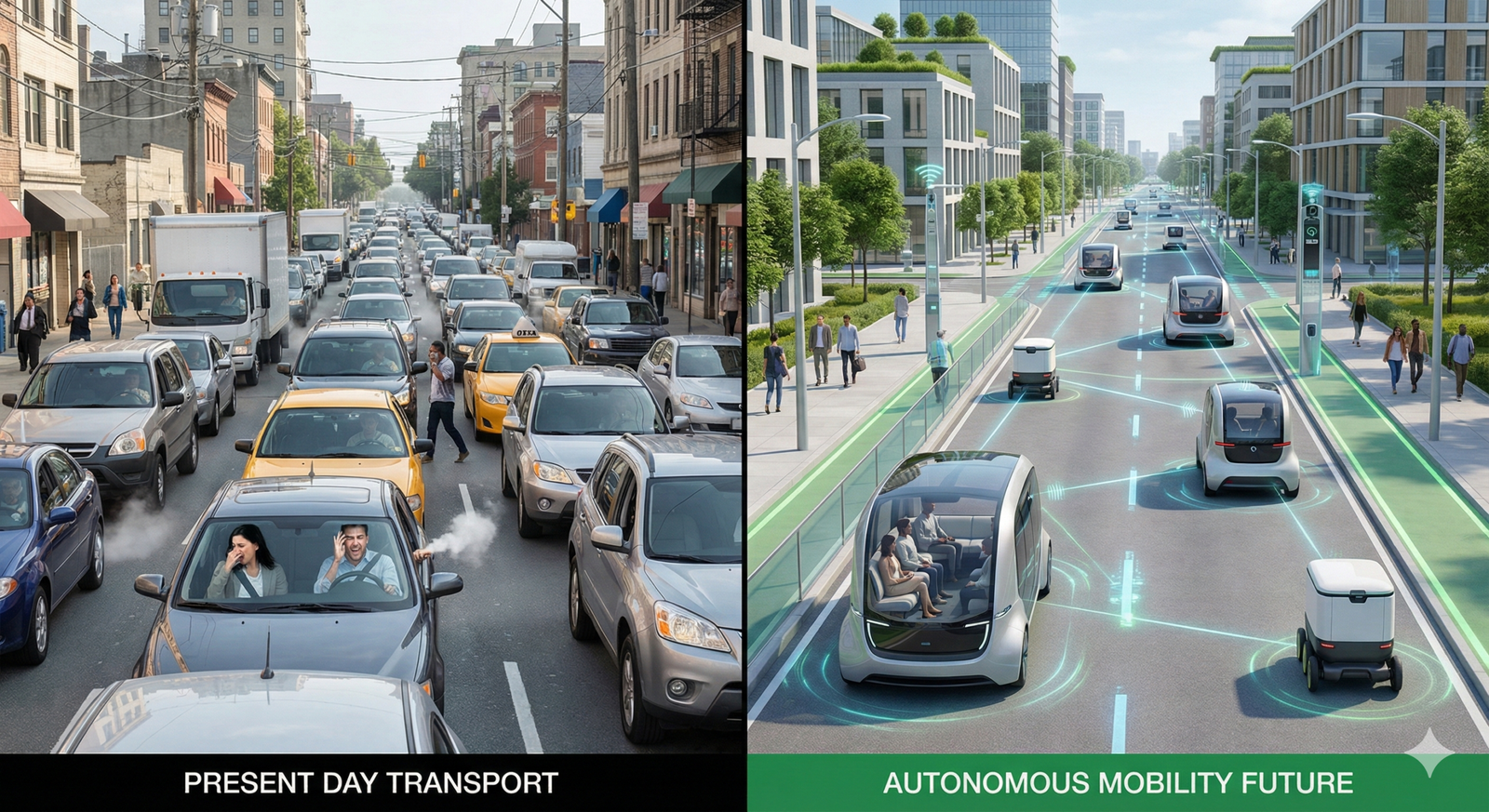

Imagine a morning commute where you don’t drive. Instead, you step into a pod that arrived precisely when you needed it, effectively turning your travel time into extra sleep, work, or leisure. This isn’t science fiction—it is the rapidly evolving reality of autonomous vehicles and AI mobility.

As we move through 2026, the conversation around transportation has shifted from “if” to “how” and “when.” The convergence of robotics, artificial intelligence (AI), and high-speed connectivity is creating a new ecosystem known as Mobility as a Service (MaaS), fundamentally challenging the century-old model of private car ownership.

Disclaimer: This article discusses emerging technologies, regulations, and safety standards which are subject to rapid change. While we strive for accuracy, laws regarding autonomous driving vary significantly by region. Always consult local transport authorities or legal professionals for specific compliance guidance.

Who This Guide Is For (and Who It Isn’t)

This guide is for:

- Tech Enthusiasts & Early Adopters: Readers who want to understand the “brain” behind the machine—how LiDAR, radar, and cameras work together.

- Policy Makers & Urban Planners: Professionals looking at the infrastructure impacts of self-driving fleets.

- Business Leaders & Investors: Individuals interested in the economic shifts, from logistics to insurance.

- General Commuters: Anyone curious about how their daily travel might change in the next decade.

This guide isn’t for:

- DIY Mechanics: We won’t be covering how to repair an internal combustion engine or modify a car’s suspension.

- Hardcore AI Developers: While we discuss algorithms, we stay at the level of concepts and simple illustrations; we won’t be building production code or debugging neural networks here.

Key Takeaways

- The Shift to Usership: The future is likely less about owning a car and more about subscribing to mobility services.

- Safety First: AI aims to eliminate human error, which accounts for the vast majority of traffic accidents, though it introduces new ethical challenges.

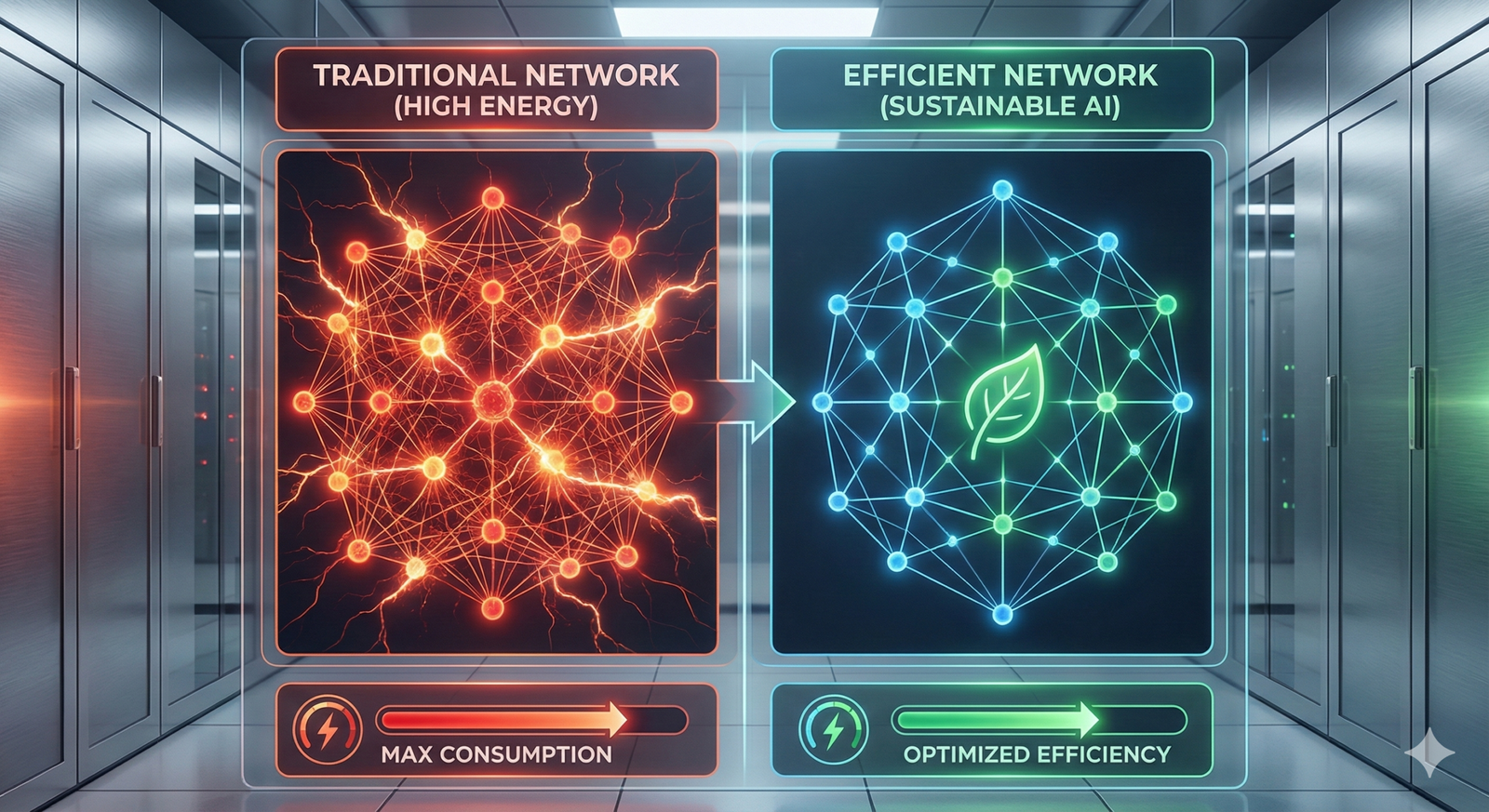

- Data is the New Oil: Autonomous vehicles (AVs) generate terabytes of data daily, requiring massive infrastructure for processing and security.

- Levels of Autonomy: Not all “self-driving” cars are equal; understanding the difference between Level 2 (driver assist) and Level 5 (full autonomy) is critical.

- Infrastructure Dependency: AVs cannot exist in a vacuum; they need smart cities and V2X (Vehicle-to-Everything) communication to function optimally.

Defining the Landscape: Autonomous Vehicles and AI Mobility

To understand where we are going, we must first define the core components of this revolution. It is not just about a car that steers itself; it is about an entire intelligent network.

What are Autonomous Vehicles (AVs)?

Autonomous vehicles are robotic systems capable of sensing their environment and operating without human involvement. They utilize a sophisticated suite of sensors—eyes and ears, essentially—fed into a central AI computer that acts as the brain. This “brain” makes split-second decisions based on perception, prediction, and planning algorithms.

What is AI-Driven Mobility?

While the AV is the hardware, AI mobility is the service layer. It refers to the intelligent orchestration of transportation fleets. Think of it as a “super-dispatcher” that uses predictive analytics to position vehicles where demand will be highest, optimizes routes to reduce energy consumption, and manages the entire lifecycle of a trip from booking to payment. This forms the backbone of Mobility as a Service (MaaS).
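As a rough illustration of the “super-dispatcher” idea, the sketch below splits idle vehicles across zones in proportion to forecast demand. The zone names, the forecast numbers, and the `allocate_idle_vehicles` function are all hypothetical; a real platform would also weigh travel time, battery state, and forecast uncertainty.

```python
def allocate_idle_vehicles(forecast, idle_vehicles):
    """Split idle vehicles across zones in proportion to forecast demand.

    forecast: dict mapping zone name -> expected ride requests (hypothetical).
    Uses the largest-remainder method so the allocation sums to idle_vehicles.
    """
    total = sum(forecast.values())
    if total == 0 or idle_vehicles == 0:
        return {zone: 0 for zone in forecast}

    # Ideal (fractional) share for each zone.
    shares = {z: idle_vehicles * d / total for z, d in forecast.items()}
    # Start with the whole-number part of each share.
    allocation = {z: int(s) for z, s in shares.items()}
    # Hand leftover vehicles to the zones with the largest fractional part.
    leftover = idle_vehicles - sum(allocation.values())
    by_remainder = sorted(shares, key=lambda z: shares[z] - allocation[z], reverse=True)
    for zone in by_remainder[:leftover]:
        allocation[zone] += 1
    return allocation


# Hypothetical demand forecast for the next 30 minutes.
forecast = {"downtown": 50, "airport": 30, "suburb": 20}
plan = allocate_idle_vehicles(forecast, idle_vehicles=10)
print(plan)
```

With this made-up forecast, half of the idle fleet heads downtown before the requests actually arrive, which is the essence of predictive positioning.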

In this ecosystem, the vehicle is merely a node in a massive, interconnected digital network. The goal is to make movement seamless, efficient, and sustainable.

The 6 Levels of Vehicle Autonomy

Understanding the capabilities of autonomous vehicles and AI mobility starts with the Society of Automotive Engineers (SAE) levels of automation. This standard helps cut through marketing hype to reveal what a vehicle can actually do.

Level 0: No Automation

- Definition: The human driver does everything.

- In Practice: Your classic 1990s sedan. You steer, brake, accelerate, and monitor the road. Systems like basic cruise control are technically Level 0 because they don’t steer or adapt to traffic dynamically.

Level 1: Driver Assistance

- Definition: The vehicle can assist with either steering or braking/acceleration, but not both simultaneously.

- In Practice: Adaptive Cruise Control (ACC) keeps a set distance from the car ahead, or Lane Keeping Assist (LKA) nudges you back into the lane. The driver is still fully responsible.

Level 2: Partial Automation

- Definition: The vehicle can control both steering and acceleration/deceleration simultaneously.

- In Practice: This is the standard for most premium vehicles as of 2026. Systems like Tesla’s Autopilot and GM’s Super Cruise fall here. Depending on the system, the driver must keep their hands on the wheel or at least their eyes on the road, and must be ready to take over instantly.

Level 3: Conditional Automation

- Definition: The vehicle can handle all aspects of driving in specific conditions (e.g., traffic jams on highways). The driver can take their eyes off the road but must be ready to intervene when the system requests it.

- In Practice: “Traffic Jam Pilots.” You might read a book while in slow traffic, but if the car approaches a complex construction zone, it will alert you to take control.

Level 4: High Automation

- Definition: The vehicle can handle all driving tasks in specific conditions or geofenced areas. No driver attention is required in these zones.

- In Practice: Robotaxis operating in a specific city district or autonomous shuttles on a university campus. These vehicles may not even have steering wheels. If they encounter a problem they can’t solve, they pull over safely rather than demanding a human take over.

Level 5: Full Automation

- Definition: The vehicle can drive anywhere a human can drive, in any weather, on any road surface, without any human interaction.

- In Practice: A truly universal self-driving car. This is the “holy grail” of the industry and remains the most difficult engineering challenge due to the unpredictability of extreme weather and unmapped rural roads.
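For readers who think in code, the six levels above can be summarized as a small lookup. This is only a sketch of the descriptions in this section; the `SAELevel` enum and `human_role` helper are illustrative names, not part of any official SAE tooling.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_role(level: SAELevel) -> str:
    """Summarize what the human must do at each level, per the text above."""
    if level <= SAELevel.PARTIAL_AUTOMATION:
        return "drive or supervise continuously"
    if level == SAELevel.CONDITIONAL_AUTOMATION:
        return "be ready to take over when the system requests it"
    if level == SAELevel.HIGH_AUTOMATION:
        return "no attention required inside the supported area or conditions"
    return "no human involvement required"


for level in SAELevel:
    print(level.value, level.name, "->", human_role(level))
```

The key boundary sits between Level 2 and Level 3: below it the human is always supervising, above it the system is responsible while it is engaged.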

Under the Hood: Key Technologies Powering AVs

How does a machine replicate the complex cognitive task of driving? It relies on “Sensor Fusion”—the combining of data from multiple sources to create a coherent 3D model of the world.
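One simple way to picture sensor fusion is inverse-variance weighting: each sensor’s estimate counts in proportion to how much it is trusted. The sketch below fuses two hypothetical range readings for the same object. The noise figures are illustrative assumptions, not specifications of any real sensor, and production systems typically rely on richer methods such as Kalman filters.

```python
def fuse_estimates(measurements):
    """Fuse independent estimates of the same quantity.

    measurements: list of (value, variance) pairs.
    Returns (fused_value, fused_variance) using inverse-variance weighting.
    """
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical readings of the distance to the car ahead, in meters.
lidar = (50.0, 0.01)  # assumed variance of 0.01 m^2 (more precise)
radar = (51.0, 0.04)  # assumed variance of 0.04 m^2 (noisier)

distance, variance = fuse_estimates([lidar, radar])
print(f"fused distance: {distance:.2f} m, variance: {variance:.4f} m^2")
```

The fused estimate lands closer to the more precise reading, and its variance is lower than either input, which is why combining sensors beats relying on any single one.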

1. LiDAR (Light Detection and Ranging)

LiDAR is often considered the most critical sensor for Level 4 and 5 vehicles. It fires millions of laser pulses per second to measure distances, creating a precise, high-resolution 3D map of the surroundings.

- Why it matters: Unlike cameras, LiDAR provides accurate depth perception and works in various lighting conditions. It can “see” the exact shape of a pedestrian or a cyclist from hundreds of meters away.
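The distance measurement itself is simple arithmetic. Pulsed LiDAR units commonly work by time of flight: the pulse travels to the target and back at the speed of light, so the distance is half the round-trip path. The function name below is illustrative.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the meter


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Distance to a target given the round-trip time of a laser pulse."""
    # The pulse travels out and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


# A pulse that returns after one microsecond hit something roughly 150 m away.
print(round(distance_from_round_trip(1e-6), 1))
```

The timescales are tiny: resolving objects a few centimeters apart means timing pulses to within fractions of a nanosecond.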

2. Radar (Radio Detection and Ranging)

Radar uses radio waves to detect objects. While it lacks the resolution of LiDAR, it is incredibly robust.

- Why it matters: Radar excels in poor weather. Where cameras might be blinded by sun glare or heavy rain, and LiDAR might struggle with heavy fog, radar can “see through” these obstructions to measure the speed and distance of other vehicles.

3. Computer Vision (Cameras)

High-definition cameras read the visual world: traffic lights, stop signs, lane markings, and brake lights.

- Why it matters: Cameras are the only sensor that can read color and text. AI models (Convolutional Neural Networks) process these video feeds to classify objects (e.g., “That is a Stop sign,” “That is a school bus”).

4. Artificial Intelligence and Machine Learning

The “brain” of the vehicle. It processes the raw data from all sensors (perception), predicts the behavior of others (prediction), and decides on a path (planning).

- Deep Learning: The system learns from billions of miles of driving data (both real and simulated) to recognize edge cases—like a plastic bag floating in the wind versus a rock on the road.
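The perception, prediction, and planning stages can be sketched as three tiny functions. This is a deliberately simplified toy, assuming a single object directly ahead and a constant closing speed; the class, function names, and thresholds are hypothetical and bear no relation to any production driving stack.

```python
from dataclasses import dataclass


@dataclass
class TrackedObject:
    """Output of a (hypothetical) perception stage for one object ahead."""
    gap_m: float              # current distance ahead of our vehicle, meters
    closing_speed_mps: float  # positive when the gap is shrinking


def predict_gap(obj: TrackedObject, horizon_s: float) -> float:
    """Prediction: assume the closing speed stays constant over the horizon."""
    return obj.gap_m - obj.closing_speed_mps * horizon_s


def plan(obj: TrackedObject, horizon_s: float = 3.0, min_gap_m: float = 10.0) -> str:
    """Planning: brake if the predicted gap falls below a safety margin."""
    if predict_gap(obj, horizon_s) < min_gap_m:
        return "brake"
    return "maintain speed"


print(plan(TrackedObject(gap_m=40.0, closing_speed_mps=2.0)))  # predicted gap: 34 m
print(plan(TrackedObject(gap_m=20.0, closing_speed_mps=5.0)))  # predicted gap: 5 m
```

Real systems track many objects at once and predict several possible futures for each, but the flow of data from sensing to forecasting to deciding is the same.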

5. V2X Communication (Vehicle-to-Everything)

This technology allows the vehicle to “talk” to the world around it.

- V2V (Vehicle-to-Vehicle): “I am braking hard!” (Sent to the car behind).

- V2I (Vehicle-to-Infrastructure): “The light will turn red in 3 seconds.” (Sent by the traffic signal).

- V2P (Vehicle-to-Pedestrian): Alerts sent to smartphones or wearables of pedestrians crossing the street.
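To make the V2I example concrete, here is a minimal sketch of a vehicle acting on a signal-timing broadcast. The `SignalTimingMessage` fields and the JSON encoding are assumptions made for illustration; real deployments use standardized message sets and must also account for yellow phases, braking distance, and message authentication.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class SignalTimingMessage:
    """Hypothetical V2I payload; field names are illustrative only."""
    intersection_id: str
    seconds_until_red: float


def can_clear_intersection(msg, distance_to_stop_line_m, speed_mps):
    """Return True if the vehicle reaches the stop line before the light turns red."""
    if speed_mps <= 0:
        return False
    return distance_to_stop_line_m / speed_mps <= msg.seconds_until_red


# The traffic signal broadcasts: "The light will turn red in 3 seconds."
wire_format = json.dumps(asdict(SignalTimingMessage("main-and-5th", 3.0)))
received = SignalTimingMessage(**json.loads(wire_format))

print(can_clear_intersection(received, distance_to_stop_line_m=60.0, speed_mps=15.0))  # needs 4 s
print(can_clear_intersection(received, distance_to_stop_line_m=30.0, speed_mps=15.0))  # needs 2 s
```

A vehicle that knows it cannot make the light can begin slowing early and smoothly instead of braking hard at the last moment.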

The Rise of Mobility as a Service (MaaS)

The convergence of autonomous vehicles and AI mobility is driving a shift from product to service. In the 20th century, freedom meant owning a car. In the 21st century, freedom means having access to mobility anytime, anywhere.

The Robotaxi Model

Companies are moving toward fleet-operated models where users pay per mile rather than paying off a car loan.

- Cost Efficiency: Eliminating the human driver creates the biggest cost reduction in ride-hailing services. Without a driver to pay, the cost per mile drops significantly, potentially becoming cheaper than personal car ownership.

- Utilization: The average private car is parked 95% of the time. A robotaxi can operate nearly 24/7, stopping only for charging and cleaning.
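The utilization argument is easy to check with arithmetic. In the sketch below, the 95% figure comes from the text above, while the dollar amounts and daily mileages are hypothetical placeholders chosen only to show how spreading fixed costs over more miles lowers the cost per mile. A real robotaxi would also carry extra sensor, computing, and operations costs that this toy ignores.

```python
HOURS_PER_DAY = 24


def hours_in_use_per_day(parked_fraction: float) -> float:
    """Daily hours a vehicle is in use, given the share of time it sits parked."""
    return HOURS_PER_DAY * (1 - parked_fraction)


def cost_per_mile(fixed_cost_per_day: float, variable_cost_per_mile: float,
                  miles_per_day: float) -> float:
    """Spread daily fixed costs over the miles driven, then add per-mile costs."""
    return fixed_cost_per_day / miles_per_day + variable_cost_per_mile


# A car parked 95% of the time is in use for only about 1.2 hours a day.
print(round(hours_in_use_per_day(0.95), 2))

# Hypothetical: the same $30/day of fixed cost spread over 30 miles
# (private car) versus 300 miles (busy fleet vehicle), plus $0.20/mile.
print(round(cost_per_mile(30.0, 0.20, 30.0), 2))
print(round(cost_per_mile(30.0, 0.20, 300.0), 2))
```

Under these assumed numbers the per-mile cost falls to a quarter of the private-car figure, purely because the fixed costs are shared across ten times as many miles.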

Automated Public Transit

It’s not just about 4-passenger sedans. AI mobility includes autonomous pods and shuttles that bridge the “first-mile/last-mile” gap.

- Dynamic Routing: Instead of fixed bus lines that run empty at night, AI-driven shuttles can dynamically alter their routes based on real-time request data, picking up passengers efficiently.
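A very rough sketch of dynamic routing is a greedy rule: always drive to the nearest waiting passenger next. The coordinates and passenger names below are made up, and the greedy rule is an assumption chosen for simplicity; real dispatch systems use more sophisticated optimization that also considers drop-offs, waiting time, and road networks.

```python
import math


def plan_pickups(start, requests):
    """Order pickups greedily by always visiting the nearest passenger next.

    start: (x, y) position of the shuttle, in km on a flat grid (simplification).
    requests: dict mapping passenger name -> (x, y) pickup point.
    Returns the visiting order and the total distance driven.
    """
    position = start
    remaining = dict(requests)  # copy so the caller's dict is not modified
    order, total_km = [], 0.0
    while remaining:
        nearest = min(remaining, key=lambda name: math.dist(position, remaining[name]))
        total_km += math.dist(position, remaining[nearest])
        position = remaining.pop(nearest)
        order.append(nearest)
    return order, total_km


# Hypothetical ride requests.
requests = {"Ana": (0.0, 3.0), "Ben": (4.0, 0.0), "Chi": (0.0, 1.0)}
order, total_km = plan_pickups((0.0, 0.0), requests)
print(order, round(total_km, 2))
```

Because the route is recomputed from whatever requests are currently open, a new request simply changes the next call’s answer, which is what distinguishes this from a fixed bus line.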

Freight and Logistics

Before robotaxis become ubiquitous for passengers, autonomous vehicles are already reshaping the supply chain.

- Autonomous Trucking: Long-haul trucking on highways is a prime candidate for automation. Trucks can operate in convoys (platooning) to reduce wind resistance and fuel consumption, driving continuously without mandatory rest breaks (except for refueling/charging).

- Last-Mile Delivery: Sidewalk robots and autonomous delivery vans are beginning to handle the final leg of package delivery, reducing congestion in residential neighborhoods.

Benefits of an AI-Driven Mobility Ecosystem

Why are companies and governments investing billions into autonomous vehicles and AI mobility? The potential societal benefits are transformative.

1. Safety Improvements

According to the World Health Organization, well over a million people die in road crashes every year (historic estimates put the figure near 1.3 million). A widely cited U.S. NHTSA crash-causation study assigned the critical reason for about 94% of crashes to the driver.

- Consistency: AI does not get tired, drunk, distracted, or angry. It monitors 360 degrees simultaneously and reacts faster than human reflexes allow.

2. Traffic Efficiency and Environmental Impact

- Smoother Flow: AVs can drive closer together safely and accelerate/decelerate smoothly. This reduces “phantom traffic jams” caused by human braking behavior.

- Green Routing: AI mobility platforms optimize routes to reduce energy consumption. Furthermore, most AV fleets are electric, accelerating the transition away from fossil fuels.

3. Accessibility and Social Equity

- Mobility for All: The elderly, the blind, and people with disabilities who cannot drive are often isolated. AVs restore their independence, allowing them to travel without relying on family or scheduled paratransit services.

- Transit Deserts: Low-cost autonomous shuttles can provide service to underserved areas where traditional bus lines are not profitable.

4. Productivity Gains

The average commuter spends hundreds of hours a year driving. Reclaiming this time for work, rest, or entertainment represents a massive boost to quality of life and economic productivity.

Safety and Ethical Considerations

Despite the benefits, the transition to autonomous vehicles and AI mobility raises profound ethical questions and safety concerns.

The “Trolley Problem” in Real Life

One of the most debated philosophical aspects of AVs is decision-making in unavoidable accident scenarios. If an AV must choose between hitting a pedestrian or swerving into a wall (harming the passenger), what should it do?

- Ethical Programming: In practice, engineers focus on avoiding these scenarios entirely through defensive driving. However, policy frameworks must be established to define “acceptable risk” and liability.

Cybersecurity Risks

A connected car is a hackable car. If a fleet of thousands of vehicles is connected to a central server, it becomes a target.

- Ransomware: Imagine a scenario where a hacker freezes a fleet of robotaxis until a ransom is paid.

- Weaponization: Terrorists could theoretically take control of heavy autonomous trucks.

- Mitigation: The industry is adopting “Security by Design,” using strong encryption and isolating critical driving systems (steering/brakes) from entertainment systems.

The “Black Box” Problem

Deep learning models are often opaque; it is difficult to know exactly why the AI made a specific decision.

- Explainable AI (XAI): Regulators are pushing for XAI in autonomous driving so that after an incident, investigators can understand the logic path the vehicle took.

Regulatory and Infrastructure Challenges

Technology often moves faster than the law. For autonomous vehicles and AI mobility to scale, the physical and legal world must adapt.

Legal Liability

In a conventional crash, we determine which driver was at fault. In an AV crash, who is responsible?

- The Manufacturer? (If the hardware failed).

- The Software Developer? (If the algorithm failed).

- The Fleet Operator? (If maintenance was neglected).

- The Passenger? (Unlikely, but possible in Level 3 scenarios).

Insurance models are shifting from “driver liability” to “product liability.”

Infrastructure Readiness

- Road Markings: AVs rely on clear lane lines. Faded paint, potholes, and confusing signage are major hurdles.

- 5G Connectivity: V2X communication depends on low-latency, high-bandwidth links, such as 5G cellular networks or dedicated short-range radio, to move data between vehicles and traffic lights with minimal delay.

- Charging Grids: A massive fleet of electric robotaxis requires robust fast-charging hubs distributed throughout cities.

Weather Limitations

As of early 2026, heavy snow and torrential rain remain challenging for AV sensors. Snow covers lane markings and clutters LiDAR returns; heavy rain degrades camera images and LiDAR data, leaving radar to carry more of the load. While algorithms are improving, AVs in sunny climates (like Phoenix or Dubai) are far ahead of those in snowy regions (like Minneapolis or Oslo).

Real-World Implementation: What This Looks Like in Practice

To visualize the impact of autonomous vehicles and AI mobility, let’s look at specific scenarios.

Scenario A: The Urban Commuter

- Morning: You open an app and request a ride. A shared autonomous pod arrives. It has no driver’s seat, just comfortable lounge seating.

- The Ride: The pod communicates with traffic lights, hitting green waves to minimize stops. You answer emails on the built-in screen.

- Arrival: The pod drops you at the office door and immediately departs to pick up the next passenger or head to a charging depot. You never worry about parking.

Scenario B: The Smart Logistics Hub

- Long Haul: An autonomous semi-truck travels continuously on the highway from a port to a distribution center 500 miles away.

- Handover: At the highway exit, the truck enters a “transfer hub.” A human driver (or a specialized urban robot) takes over the trailer for the complex navigation through city streets to the final warehouse.

- Benefit: This hybrid model maximizes the efficiency of the highway miles while acknowledging the complexity of urban driving.

Economic Impact and the Future Workforce

The disruption caused by autonomous vehicles and AI mobility will be felt across the global economy.

Industries at Risk

- Trucking and Taxi Driving: Millions of jobs rely on driving. While the shift won’t happen overnight, the long-term trend points toward displacement.

- Auto Insurance: With fewer accidents, premiums will drop, and the business model will shift to insuring fleets rather than individuals.

- Auto Repair: Electric AVs have fewer moving parts than gas cars, and fleet operators will likely handle maintenance in-house, squeezing independent mechanics.

New Opportunities

- Remote Fleet Operators: Humans will still be needed to monitor fleets and remotely assist vehicles that get stuck or confused (Teleoperation).

- Maintenance & Sanitization: Robotaxis need to be cleaned and serviced frequently.

- Data Analysis & Mapping: The demand for high-definition mapping and data annotation services will skyrocket.

- In-Car Experience: As drivers become passengers, a new economy of “passenger entertainment” and productivity tools will emerge.

The Impact on Real Estate

If we no longer need parking garages in city centers (because AVs drop passengers off and leave), huge amounts of prime urban real estate will open up.

- Repurposing: Parking lots could become parks, housing, or commercial spaces.

- Home Garages: In the suburbs, home garages might be converted into living spaces if families switch to MaaS subscriptions.

Common Mistakes and Misconceptions

When discussing autonomous vehicles and AI mobility, several myths persist.

Mistake 1: “Self-Driving Cars Will Be Perfect Immediately”

Reality: Early AVs will make mistakes. They will be cautious, sometimes overly so, causing traffic delays by hesitating at intersections. The goal is to be statistically safer than humans, not instantly flawless.

Mistake 2: “I Can Sleep in My Tesla Today”

Reality: This is a dangerous misconception. Current consumer vehicles are Level 2, with a handful of Level 3 systems approved only for narrow conditions. All of them require a driver who can take over. Sleeping behind the wheel of a commercially available car in 2026 is still illegal and unsafe.

Mistake 3: “The Moral Dilemma is the Biggest Hurdle”

Reality: While the “Trolley Problem” is fascinating philosophically, the real hurdles are much more mundane: predicting the behavior of human drivers, handling bad weather, and navigating construction zones.

The Road Ahead: A Timeline for Mass Adoption

Predicting the future is difficult, but industry roadmaps suggest a phased rollout for autonomous vehicles and AI mobility.

Phase 1: Geofenced Commercial Deployment (Current – 2028)

Robotaxi services operate in specific, well-mapped areas of major cities with favorable weather. Autonomous trucking begins on select highway corridors. Safety drivers are gradually removed.

Phase 2: Expansion and Integration (2028 – 2035)

Services expand to suburbs and less ideal weather conditions. Personal AV ownership becomes an option for luxury buyers. V2X infrastructure becomes standard in new road construction.

Phase 3: Ubiquity (2035+)

MaaS becomes the dominant mode of transport in urban centers. Traditional human-driven cars may be restricted in certain city zones. The design of cities begins to change to favor pedestrians and AVs over parked cars.

Related Topics to Explore

As you deepen your understanding of this topic, consider exploring these related fields:

- Smart Cities and IoT: How urban infrastructure connects with AVs.

- Electric Vehicle (EV) Battery Tech: The power source for autonomous fleets.

- Machine Learning Algorithms: The specific neural networks (CNNs, RNNs) used in computer vision.

- Urban Planning for the Future: Designing cities without parking lots.

- Cybersecurity in IoT: Protecting connected devices from bad actors.

Conclusion

The era of autonomous vehicles and AI mobility is not just about upgrading the car; it is about upgrading the entire concept of movement. We are transitioning from a system defined by individual ownership, fossil fuels, and human error to one defined by shared access, electrification, and algorithmic precision.

While the technological, legal, and ethical challenges are significant, the destination is worth the journey. A world with fewer accidents, less congestion, and accessible mobility for all is within reach. For us as individuals, the role of the future is likely not driver but passenger: free to work, dream, and connect while the machine handles the road.

Next Step: If you are interested in experiencing this technology firsthand, check if a robotaxi pilot program is currently operating in your nearest major metropolitan area and book a test ride to see the future in action.

FAQs

1. Are autonomous vehicles safe for pedestrians?

Yes, generally speaking. Autonomous vehicles are designed to be extremely cautious around pedestrians. They use LiDAR and cameras to detect human shapes and predict movement. However, like any technology, they are not immune to failure, and interactions can be tricky when pedestrians ignore traffic rules.

2. Can autonomous vehicles drive in the snow?

As of 2026, heavy snow remains a challenge. Snow can obscure lane markings and accumulate on sensors, blinding the vehicle. Manufacturers are developing heated sensors and better mapping software to overcome this, but AVs operate best in clear weather today.

3. How much will an autonomous ride cost compared to Uber or Lyft?

Analysts predict that mature autonomous ride-hailing could be significantly cheaper than current services—potentially by 50% or more—because the cost of the human driver is removed. Eventually, it may rival the cost of owning a personal car.

4. Will I need a driver’s license in the future?

For the foreseeable future, yes. If you own a car with Level 2 or 3 capabilities, you must be licensed and able to take over. In a distant future of fully Level 5 vehicles, a license might become optional for passengers, but we are likely decades away from that being the universal standard.

5. Can autonomous vehicles be hacked?

Theoretically, yes. Any connected device has vulnerabilities. However, automakers treat cybersecurity as a top priority, using encryption and isolating critical driving controls from external networks to minimize this risk.

6. What happens if an autonomous car gets confused?

If an AV encounters a situation it cannot handle (like a police officer using hand signals), most are programmed to pull over safely and stop. In managed fleets, the car can contact a remote human operator for guidance.

7. Do autonomous vehicles use gas or electricity?

The vast majority of autonomous vehicles are electric (EVs). The onboard sensors and computers draw significant electrical power, and the industry is aligning with global sustainability goals. The digital architecture of EVs is also easier for computers to control than internal combustion engines.

8. What is the difference between ADAS and AV?

ADAS (Advanced Driver Assistance Systems) refers to Level 1 and 2 features like lane-keeping and emergency braking, which help a human driver who remains responsible. AV (Autonomous Vehicle) refers to Level 3+ systems that perform the whole driving task within their operating conditions; at Level 3 a human must still be ready to take over on request.

9. How do autonomous cars navigate without GPS?

While they use GPS, they don’t rely on it exclusively because GPS can be blocked by tall buildings. They rely primarily on “localization”—comparing what their sensors see (landmarks, curbs) against a highly detailed 3D digital map stored on board.
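As a toy illustration of map-based localization, the sketch below works in one dimension along a road. Each landmark the vehicle recognizes implies a vehicle position (the landmark’s mapped position minus the measured distance to it), and the estimates are averaged. The landmark IDs, positions, and the `localize` function are hypothetical; real systems match dense 3D sensor data against the map and fuse the result with GPS and motion sensors.

```python
def localize(map_landmarks, observations):
    """Estimate the vehicle's position along a road (1-D simplification).

    map_landmarks: dict of landmark id -> known position on the stored map, meters.
    observations: dict of landmark id -> measured distance ahead of the vehicle, meters.
    Each matched landmark implies a vehicle position; the results are averaged.
    """
    implied = [map_landmarks[lid] - ahead
               for lid, ahead in observations.items() if lid in map_landmarks]
    if not implied:
        raise ValueError("no observed landmark matches the map")
    return sum(implied) / len(implied)


# Hypothetical stored map and sensor readings.
hd_map = {"stop_sign_17": 120.0, "lamp_post_4": 150.0}
seen = {"stop_sign_17": 19.8, "lamp_post_4": 50.2}
print(round(localize(hd_map, seen), 1))
```

Averaging over several landmarks smooths out the error in any single measurement, so the estimate does not depend on a satellite signal at all.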

10. Who is leading the race in autonomous vehicles?

The landscape is competitive. Major players include technology companies like Waymo (Alphabet) and Zoox (Amazon), automakers like Tesla and Mercedes-Benz, and Chinese tech firms like Baidu (Apollo) and Pony.ai. GM was long a contender through Cruise, but it announced in late 2024 that it would stop funding Cruise’s robotaxi program.

11. Will autonomous trucks replace truck drivers?

In the long term, long-haul trucking jobs are at risk. However, the transition will likely involve a hybrid model first, where humans handle complex urban driving and robots handle highway stretches. New roles for monitoring and logistics management will also emerge.

12. What is V2X?

V2X stands for “Vehicle-to-Everything.” It is a communication technology that allows a car to exchange data with other cars (V2V), traffic lights and roads (V2I), and even pedestrian devices (V2P) to improve safety and traffic flow.

References

- SAE International. (2021). Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles (J3016). SAE.org.

- National Highway Traffic Safety Administration (NHTSA). (n.d.). Automated Vehicles for Safety. USDOT. https://www.nhtsa.gov/technology-innovation/automated-vehicles-safety

- Waymo. (2025). Waymo Safety Report: Performance Data and Collision Statistics. Waymo Official Safety Hub. https://waymo.com/safety/

- World Health Organization (WHO). (2023). Road Traffic Injuries Key Facts. WHO.int. https://www.who.int/news-room/fact-sheets/detail/road-traffic-injuries

- McKinsey & Company. (2024). The Future of Mobility: Trends and Scenarios. McKinsey Center for Future Mobility. https://www.mckinsey.com/industries/automotive-and-assembly/our-insights

- U.S. Department of Transportation. (2024). Ensuring American Leadership in Automated Vehicle Technologies: Automated Vehicles 4.0 (AV 4.0). transportation.gov. https://www.transportation.gov/av

- Insurance Institute for Highway Safety (IIHS). (2024). Real-world benefits of crash avoidance technologies. IIHS.org. https://www.iihs.org/topics/advanced-driver-assistance

- European Commission. (2024). Connected and Automated Mobility (CAM). European Commission Mobility Strategy.

- IEEE Spectrum. (2025). The State of Self-Driving Car Technology. IEEE.org. https://spectrum.ieee.org/transportation/self-driving

- Center for Strategic and International Studies (CSIS). (2023). Assessing the Cybersecurity Risks of Autonomous Vehicles. CSIS.org. https://www.csis.org/analysis/cybersecurity-risks-autonomous-vehicles