The volume of user-generated content uploaded to the internet every minute is staggering. From social media posts and product reviews to live video streams and forum discussions, the digital world generates data at a scale that human teams physically cannot review alone. This is where AI content moderation becomes not just a luxury, but an operational necessity.

Artificial Intelligence has transformed the landscape of Trust and Safety, moving platforms from reactive cleanup crews to proactive defense systems. However, as these tools become more powerful, the challenges of accuracy, bias, and the complex nature of misinformation have grown in tandem.

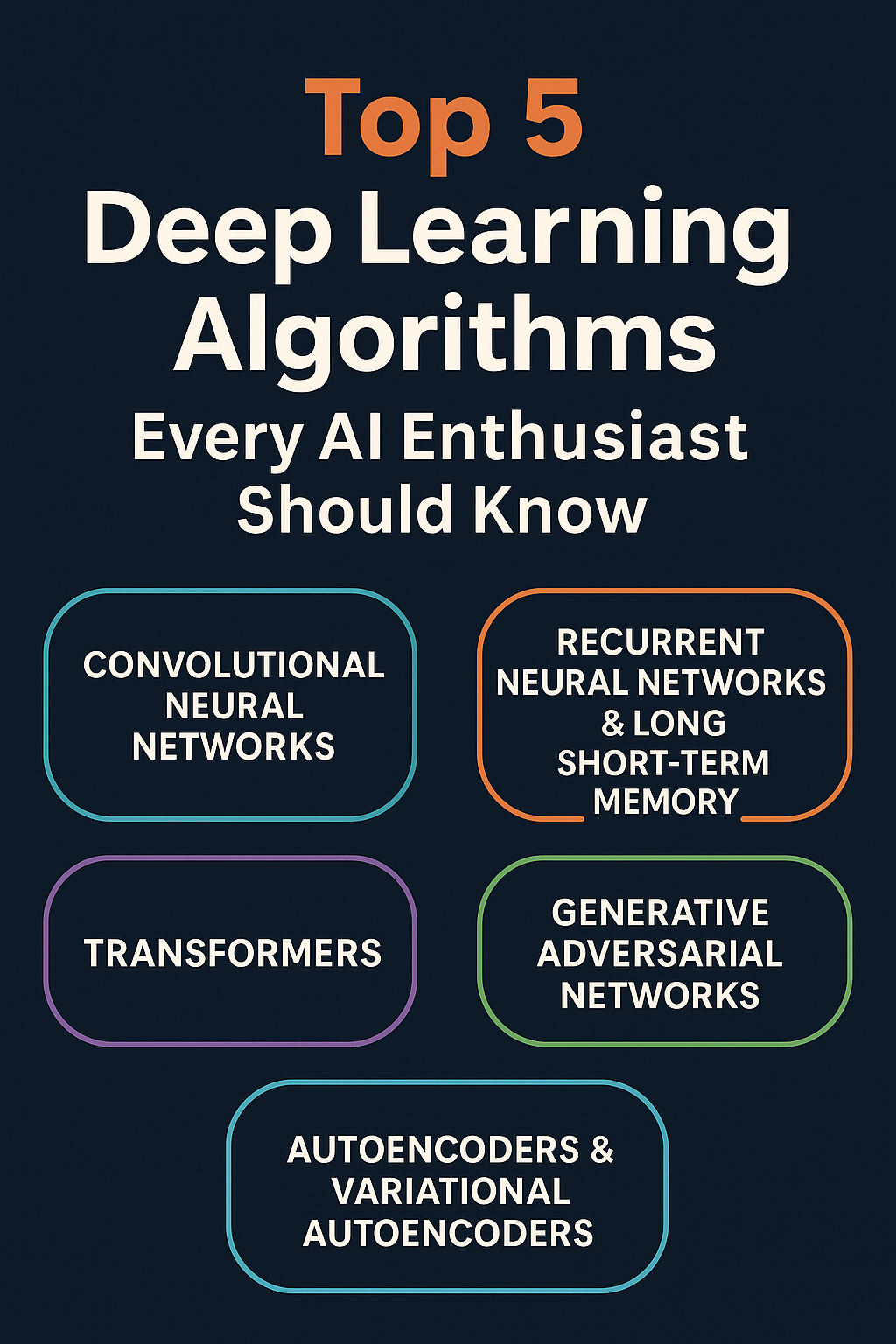

In this guide, AI content moderation refers to the use of machine learning algorithms, natural language processing (NLP), and computer vision to automatically detect, flag, or remove content that violates platform policies or spreads falsehoods.

Key Takeaways

- Scale is the primary driver: AI is the only mechanism capable of filtering the billions of interactions happening daily; humans deal with the nuance, not the volume.

- Context is king: While AI excels at pattern recognition, it often struggles with satire, cultural nuance, and “algospeak” without advanced training.

- Hybrid models are essential: The most effective safety strategies use AI for triage and humans for adjudication (Human-in-the-Loop).

- Misinformation is a moving target: Unlike static policy violations (like nudity), misinformation changes rapidly, requiring dynamic AI that can cross-reference evolving knowledge bases.

- Bias mitigation is mandatory: Without careful tuning, moderation algorithms can disproportionately silence marginalized voices or misunderstand dialects.

Who This Is For (And Who It Isn’t)

This guide is designed for product managers, trust and safety professionals, community leaders, and platform developers who are looking to implement or improve automated safety systems. It is also relevant for policy makers seeking to understand the technical capabilities and limitations of current moderation tools.

This article is not a coding tutorial for building a neural network from scratch, nor is it a philosophical debate on free speech, though it touches on the operational realities of both.

The Mechanics of Automated Detection

To understand how AI combats toxicity and misinformation, we must look under the hood at the different modalities of data it processes. Modern AI content moderation does not rely on a single algorithm but rather a stack of specialized models working in concert.

Natural Language Processing (NLP) for Text

Text remains the dominant form of communication online, and NLP is the frontline defense. Early moderation relied on “blocklists” or “keyword filtering”—simple lists of banned words. If a user typed a slur, the system blocked it.

In practice, this method is brittle. Users easily bypass filters using misspellings (e.g., swapping “i” for “1”), and harmless sentences containing blocked substrings are often flagged (the “Scunthorpe problem”).
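Both failure modes are easy to reproduce. The following is a minimal sketch, assuming a hypothetical one-word blocklist; the function names are illustrative and do not come from any particular moderation product.

```python
import re

# Hypothetical one-word blocklist, chosen only to demonstrate the failure modes.
BLOCKLIST = {"hell"}


def substring_filter(text: str) -> bool:
    """Flag text if a blocked term appears anywhere, even inside a longer word."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKLIST)


def whole_word_filter(text: str) -> bool:
    """Flag text only when a blocked term appears as a complete word."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return any(word in BLOCKLIST for word in words)


if __name__ == "__main__":
    harmless = "Say hello to the shellfish vendor"
    evasive = "go to h3ll"
    print(substring_filter(harmless))   # substring match inside "hello" and "shellfish"
    print(whole_word_filter(harmless))  # whole-word matching avoids that false positive
    print(whole_word_filter(evasive))   # the misspelling still slips past both filters
```

Whole-word matching removes the Scunthorpe-style false positive, but neither filter catches the character swap, which is why keyword lists alone tend to fall short.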

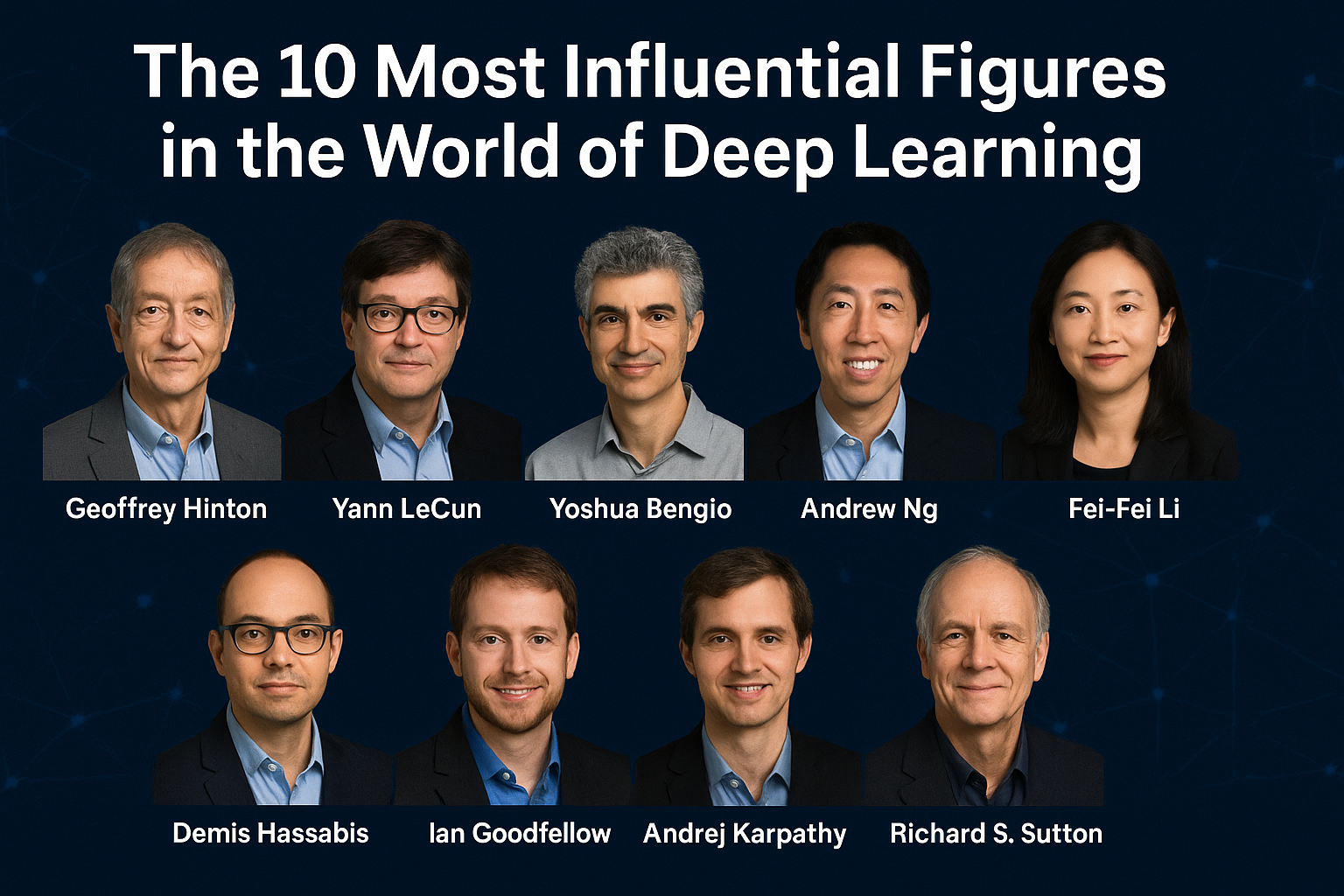

Modern NLP uses Large Language Models (LLMs) and Transformer architectures (like BERT or GPT-based models) to understand semantic meaning rather than just keyword presence.

- Sentiment Analysis: Determines the emotional tone of a message (angry, sarcastic, positive).

- Intent Recognition: Distinguishes between a user describing a violent movie scene versus making a violent threat.

- Entity Extraction: Identifies specific targets of harassment, such as public figures or minority groups.

Computer Vision for Images and Video

Visual content moderation is computationally heavier but essential for preventing the spread of graphic violence, adult content, and visual misinformation.

- Object Detection: Identifies prohibited items (weapons, drugs) or exposed anatomy (nudity) within an image.

- Optical Character Recognition (OCR): Extracts text overlaid on images (memes, screenshots) to check for hate speech or disinformation that evades text-only filters.

- Scene Understanding: Analyzes the context of an image. For example, distinguishing between a photo of a weapon in a video game (likely safe) versus a weapon in a real-world threatening context.

- Hashing (Perceptual Hashing): This is a critical efficiency tool. Instead of analyzing every image with AI, platforms create a digital fingerprint (hash) of known bad content. If a user re-uploads a banned image, the system detects the hash match instantly, even if the image has been resized or slightly altered.
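To make the hashing idea concrete, here is a minimal sketch of one simple perceptual hash, the "average hash." It assumes the image has already been downscaled to a small grayscale grid, and the `max_distance` tolerance is an illustrative value. Production systems generally use more robust algorithms; this only shows why small alterations leave the fingerprint nearly unchanged.

```python
from typing import List, Set

Image = List[List[int]]  # small grayscale grid, pixel values 0-255


def average_hash(pixels: Image) -> int:
    """Build a bit string: 1 where a pixel is brighter than the image mean, else 0."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


def matches_known_bad(pixels: Image, known_hashes: Set[int], max_distance: int = 2) -> bool:
    """Return True if the image hash is within max_distance bits of any known hash."""
    h = average_hash(pixels)
    return any(hamming_distance(h, k) <= max_distance for k in known_hashes)


if __name__ == "__main__":
    original = [
        [10, 200, 30, 220],
        [15, 210, 25, 230],
        [12, 205, 28, 225],
        [18, 215, 22, 235],
    ]
    # Simulate a slightly altered re-upload by brightening every pixel.
    brightened = [[min(255, p + 10) for p in row] for row in original]
    banned = {average_hash(original)}
    print(matches_known_bad(brightened, banned))
```

Because each bit depends only on whether a pixel is above or below the mean, uniform brightening leaves the hash unchanged, while a genuinely different image differs in many bits.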

Audio Intelligence

With the rise of social audio and voice chat in gaming, audio moderation has become a critical frontier.

- Transcription: Converting speech to text in real-time to run standard NLP analysis.

- Acoustic Analysis: Detecting tone, volume, and non-verbal cues (like screaming or gunshots) that text transcription might miss.

Combating Misinformation with AI

While detecting a “bad word” or a “nude image” is a classification problem, combating misinformation is a verification problem. This makes it significantly harder for AI to handle autonomously. Misinformation is often technically “safe” language used to convey a falsehood.

Fact-Checking Integration

AI models designed for misinformation do not inherently “know” the truth. Instead, they function as high-speed librarians.

- Claim Extraction: The AI scans content to identify verifiable claims (e.g., “Voting machines in State X were hacked”).

- Knowledge Base Retrieval: The system queries trusted databases, fact-checking organization APIs (like Snopes or Reuters), or internal “truth sets.”

- Stance Detection: The AI determines if the content supports, refutes, or is neutral toward the known facts.
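The retrieval step in this pipeline can be sketched with nothing more than token overlap. This is a toy illustration under stated assumptions: the `FactCheck` record, the `min_overlap` cutoff, and the in-memory knowledge base are all hypothetical, and real systems would typically use semantic embeddings for retrieval and a trained model for stance detection.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class FactCheck:
    claim: str    # the claim as recorded by a fact-checker
    verdict: str  # hypothetical label, e.g. "false" or "unproven"


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap between two token sets: shared tokens divided by all tokens."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def retrieve(claim: str, knowledge_base: List[FactCheck],
             min_overlap: float = 0.5) -> Optional[FactCheck]:
    """Return the best-matching fact-check entry, or None if nothing is close enough."""
    claim_tokens = tokenize(claim)
    best, best_score = None, 0.0
    for entry in knowledge_base:
        score = jaccard(claim_tokens, tokenize(entry.claim))
        if score > best_score:
            best, best_score = entry, score
    return best if best_score >= min_overlap else None


if __name__ == "__main__":
    # Made-up entry mirroring the placeholder claim used in the text.
    kb = [FactCheck("voting machines in state x were hacked", "false")]
    print(retrieve("Voting machines in State X were hacked!", kb))
```

Returning `None` when nothing matches matters here: a claim with no fact-check on record should not be labeled at all.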

In practice, this is often used to apply labels rather than remove content. You have likely seen “Get the facts about…” banners on social media posts; these are triggered by AI matching the topic of the post to a known disinformation narrative.

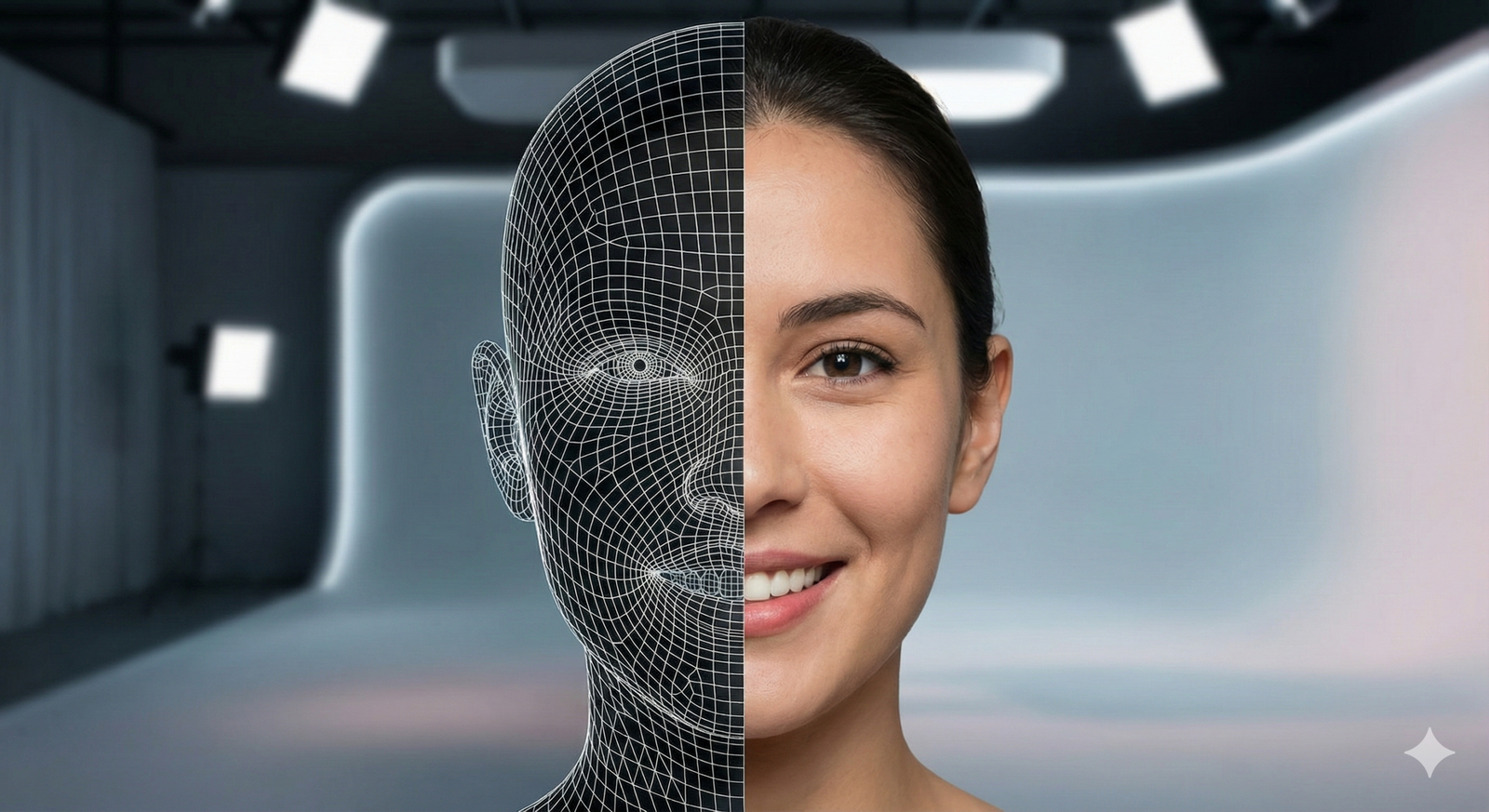

Deepfake and Synthetic Media Detection

As of 2026, the proliferation of generative AI has made deepfake detection a top priority. Bad actors use AI to generate fake audio of politicians or fake imagery of war zones.

- Artifact Analysis: AI looks for pixel-level inconsistencies, irregular blinking patterns in video, or unnatural audio waveforms that human eyes and ears miss.

- Provenance Metadata: Platforms are increasingly adopting standards like C2PA, which attach cryptographically signed metadata to files to record their origin and edit history. Verification tools check these signatures to confirm whether an image was captured by a camera or generated by a model.

- Reverse Search and Propagation Analysis: AI tracks how an image is spreading. If a “new” photo of a breaking news event suddenly appears simultaneously on thousands of bot accounts without a clear primary source, the propagation pattern itself can trigger a misinformation flag.
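The propagation signal in the last bullet can be approximated with a sliding time window. The sketch below assumes each post has already been reduced to a content hash, an account ID, and a timestamp. The window length and account threshold are illustrative values, not recommendations.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

Post = Tuple[str, str, float]  # (content_hash, account_id, unix_timestamp)


def find_bursts(posts: Iterable[Post], window_seconds: float = 60.0,
                min_accounts: int = 3) -> Set[str]:
    """Return content hashes posted by at least min_accounts distinct accounts
    within any window of window_seconds."""
    by_hash: Dict[str, List[Tuple[float, str]]] = defaultdict(list)
    for content_hash, account_id, ts in posts:
        by_hash[content_hash].append((ts, account_id))

    flagged: Set[str] = set()
    for content_hash, events in by_hash.items():
        events.sort()  # order by timestamp
        start = 0
        for end in range(len(events)):
            # Shrink the window from the left until it spans at most window_seconds.
            while events[end][0] - events[start][0] > window_seconds:
                start += 1
            accounts = {account for _, account in events[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged.add(content_hash)
                break
    return flagged
```

A flag from this kind of check is a signal for closer review, not proof of coordination; genuine breaking news can also spread quickly.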

Key Components of a Modern Safety Stack

Implementing AI content moderation is not just about the model; it is about the workflow. A robust safety stack typically includes three layers: Pre-Moderation, Post-Moderation, and Reactive Moderation.

1. Pre-Moderation (The Firewall)

This occurs before content is visible to the public. It is the most aggressive form of safety but risks slowing down user interaction.

- Use case: Preventing child sexual abuse material (CSAM) or extreme gore.

- Mechanism: Content is scanned upon upload. If it matches a high-confidence hash of illegal material, it is blocked immediately.

- Latency: Text checks should complete within roughly 100 to 200 ms for posting to feel “real-time.”

2. Post-Moderation (The Cleanup)

Content is published immediately to reduce friction, but AI scans it in the background within seconds or minutes.

- Use case: Bullying, harassment, or spam.

- Mechanism: The AI flags content. Depending on the confidence score, the content is either hidden automatically or sent to a human queue.

- Visibility: The user sees their post, but it may be “shadowbanned” (invisible to others) until cleared.

3. Reactive Moderation (User Reporting)

Users act as a distributed sensor network. When a user reports content, AI prioritizes the report.

- Triage: AI analyzes the reported content. If the report is “This is spam” and the AI scores the probability of spam at 99%, it acts immediately. If the AI scores it at 20%, it routes it to a human to avoid a false positive.
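One way to picture report triage is a priority queue in which the model score decides both whether to act immediately and how urgently a human should look. This is a sketch only: the 0.95 auto-action threshold and the class and function names are assumptions for illustration.

```python
import heapq
from typing import List, Tuple

AUTO_ACTION_THRESHOLD = 0.95  # assumed value; tune per policy and category


def triage_report(score: float) -> str:
    """Act automatically on near-certain violations; send everything else to a human."""
    return "auto_remove" if score >= AUTO_ACTION_THRESHOLD else "human_review"


class ReviewQueue:
    """Human review queue that surfaces the highest-scoring reports first."""

    def __init__(self) -> None:
        self._heap: List[Tuple[float, int, str]] = []
        self._counter = 0  # tie-breaker so equal scores keep arrival order

    def push(self, content_id: str, score: float) -> None:
        # heapq is a min-heap, so negate the score to pop the largest first.
        heapq.heappush(self._heap, (-score, self._counter, content_id))
        self._counter += 1

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```

Ordering by score is only one possible policy. Some teams may prefer to weight by severity of the reported category or by how many users reported the item.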

The Human-in-the-Loop (HITL) Necessity

A common misconception is that AI replaces human moderators. In reality, AI changes the job of human moderators. The goal of AI is not to make the final decision on complex cases, but to clear the “noise” so humans can focus on the “signal.”

The “Confidence Threshold” Strategy

The interaction between AI and humans is governed by thresholds.

- High Confidence (e.g., >95%): The model is highly confident that the content violates policy (e.g., obvious spam links). Action: Automated Removal. No human review is needed before the action is taken.

- Medium Confidence (e.g., 60-95%): The AI suspects a violation but is unsure (e.g., a reclaimed slur used by a protected group). Action: Sent to Human Review Queue.

- Low Confidence (e.g., <60%): The content is likely safe. Action: Do Nothing (or monitor for virality).
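The three bands above map naturally onto a small routing function. The sketch below uses the example cutoffs from the list (95% and 60%) as defaults; in practice these would be tuned per policy category, and the action names are placeholders.

```python
def route(confidence: float, remove_at: float = 0.95, review_at: float = 0.60) -> str:
    """Map a model confidence score in [0, 1] to a moderation action.

    Scores strictly above remove_at are removed automatically, scores from
    review_at up to and including remove_at go to human review, and
    everything below review_at is left alone.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence > remove_at:
        return "auto_remove"
    if confidence >= review_at:
        return "human_review"
    return "no_action"


if __name__ == "__main__":
    for score in (0.99, 0.75, 0.10):
        print(score, route(score))
```

Lowering `remove_at` increases automation at the cost of more wrongful removals, while lowering `review_at` catches more borderline content at the cost of a longer human queue.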

Protecting Moderator Mental Health

Human moderators are frequently exposed to the worst aspects of the internet—violence, abuse, and exploitation. AI acts as a shield:

- Blurring: AI detects graphic imagery and blurs it before a human opens the ticket. The moderator can choose to reveal it only if necessary.

- Grayscale: Converting traumatic images to black and white helps reduce the visceral psychological impact.

- Batching: AI groups similar types of content so moderators can switch mental contexts less frequently.
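Two of these protections are simple enough to sketch directly. The grayscale conversion below uses the common ITU-R BT.601 luma weights, which is one standard choice among several. The ticket format for batching is a hypothetical `(ticket_id, category)` pair.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def to_grayscale(rgb: Tuple[int, int, int]) -> int:
    """Convert one RGB pixel to a single gray level using BT.601 luma weights."""
    r, g, b = rgb
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def batch_by_category(tickets: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Group ticket IDs by category so a moderator reviews similar content together."""
    batches: Dict[str, List[str]] = defaultdict(list)
    for ticket_id, category in tickets:
        batches[category].append(ticket_id)
    return dict(batches)
```

Applying `to_grayscale` to every pixel of a flagged image before display gives the black-and-white preview described above; the original file stays untouched for cases where color is needed for a decision.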

Challenges and Ethical Pitfalls

While AI content moderation solves the scale problem, it introduces new ethical and technical dilemmas. Platforms must be transparent about these limitations to maintain user trust.

Algorithmic Bias and Fairness

AI models are trained on datasets, and datasets contain human biases.

- Dialect Bias: Studies have shown that standard NLP models are more likely to flag African American Vernacular English (AAVE) as “toxic” compared to Standard American English, even when the content is harmless.

- Cultural Context: An image of a swastika might be hate speech in Europe but a religious symbol in parts of Asia. A global AI model without regional fine-tuning will fail to distinguish the difference, leading to unjust censorship.
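A basic way to check for this kind of disparity is to compare false positive rates across groups on a labeled audit set. The sketch below assumes each audit sample carries a group tag (for example, a dialect label), the model's decision, and a human ground-truth label; the sample format is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Sample = Tuple[str, bool, bool]  # (group, flagged_by_model, actually_violating)


def false_positive_rate_by_group(samples: Iterable[Sample]) -> Dict[str, float]:
    """For each group, compute the share of harmless content that the model flagged."""
    benign: Dict[str, int] = defaultdict(int)
    flagged_benign: Dict[str, int] = defaultdict(int)
    for group, flagged, violating in samples:
        if violating:
            continue  # only harmless content can produce a false positive
        benign[group] += 1
        if flagged:
            flagged_benign[group] += 1
    return {group: flagged_benign[group] / benign[group] for group in benign}
```

A large gap between groups on harmless content suggests the model is keying on dialect or style rather than on policy-violating meaning.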

The “Black Box” Problem

Deep learning models, especially large neural networks, are often opaque. When an AI bans a user for “harassment,” it might not be able to explain why. Was it the adjective? The context?

- Impact: This makes the appeals process difficult. If the platform cannot explain the violation, the user cannot learn from the mistake.
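One simple, model-agnostic way to recover a partial explanation is leave-one-out attribution: remove each word in turn and measure how much the score drops. The sketch below uses a toy scoring function as a stand-in for a real classifier; both `toy_score` and its trigger word are illustrative assumptions.

```python
from typing import Callable, List, Tuple


def leave_one_out(text: str, score_fn: Callable[[str], float]) -> List[Tuple[str, float]]:
    """Rank words by how much the score falls when each one is removed."""
    tokens = text.split()
    base = score_fn(text)
    contributions = []
    for i, token in enumerate(tokens):
        reduced = " ".join(tokens[:i] + tokens[i + 1:])
        contributions.append((token, base - score_fn(reduced)))
    return sorted(contributions, key=lambda item: item[1], reverse=True)


def toy_score(text: str) -> float:
    """Stand-in for a real model: returns a high score if a trigger word is present."""
    return 0.9 if "idiot" in text.lower().split() else 0.1


if __name__ == "__main__":
    for word, drop in leave_one_out("you are an idiot", toy_score):
        print(word, round(drop, 2))
```

This approach needs one extra model call per word and ignores interactions between words, so it is a rough diagnostic rather than a full explanation.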

Adversarial Attacks and “Algospeak”

Users are highly adaptive. When they realize AI blocks certain words (e.g., “suicide”), they invent code words (e.g., “unalive”). This creates an endless game of cat-and-mouse.

- Visual Attacks: Bad actors add imperceptible “noise” layers to images that confuse computer vision models (making a violent image look like a toaster to the AI) while the image looks unchanged to a human viewer.
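A common first line of defense against text-based evasion is to normalize input before it reaches the classifier. The sketch below assumes a small hand-maintained character map and code-word dictionary; both are hypothetical and would need continuous updating as users adapt.

```python
import re

# Assumed character substitutions. Note that this also rewrites digits in
# legitimate numbers, so real systems apply it more selectively.
LEET_MAP = str.maketrans({"1": "i", "3": "e", "4": "a", "0": "o", "5": "s", "@": "a", "$": "s"})

# Hypothetical code-word dictionary, seeded with the example from the text.
ALGOSPEAK = {"unalive": "suicide"}


def normalize(text: str) -> str:
    """Lowercase, undo common character swaps, then expand known code words."""
    lowered = text.lower().translate(LEET_MAP)
    return re.sub(r"[a-z]+", lambda m: ALGOSPEAK.get(m.group(0), m.group(0)), lowered)


if __name__ == "__main__":
    print(normalize("H4t3 sp33ch"))
    print(normalize("Unalive"))
```

Normalization only covers variants someone has already catalogued, which is why it tends to be paired with semantic models rather than used alone.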

Regulatory Landscape and Compliance

As of 2026, content moderation is no longer just a platform choice; it is a legal requirement in many jurisdictions.

The EU Digital Services Act (DSA)

The DSA has become a widely cited benchmark for algorithmic accountability. It requires very large online platforms (VLOPs) to:

- Assess systemic risks, including the spread of disinformation.

- Provide transparency into how their moderation algorithms work.

- Offer robust appeal mechanisms for users who have been moderated by AI.

Section 230 (US Context)

While Section 230 of the Communications Decency Act generally shields platforms from liability for user content, its “Good Samaritan” provision also protects good-faith removal of objectionable material. Using AI to remove clearly harmful content generally falls under these protections, but using AI to editorialize or promote specific viewpoints can complicate legal standing.

Global Fragmentation

Platforms operating globally must deal with conflicting laws. AI models often need “geofencing” capabilities—blocking content in Germany (where distinct hate speech laws apply) while allowing it in the US (under the First Amendment).
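At its simplest, geofencing is a lookup from viewer region to restricted content categories. The sketch below is illustrative only: the region codes, category names, and rules are hypothetical placeholders, not a statement of what any jurisdiction requires.

```python
from typing import Dict, Set

# Hypothetical categories blocked everywhere, regardless of region.
GLOBAL_BLOCKED: Set[str] = {"csam"}

# Hypothetical per-region restrictions layered on top of the global rules.
REGION_BLOCKED: Dict[str, Set[str]] = {
    "DE": {"hate_symbol"},
    "US": set(),
}


def is_visible(category: str, viewer_region: str) -> bool:
    """Decide whether content in a given category may be shown in a region."""
    if category in GLOBAL_BLOCKED:
        return False
    # Regions without an entry fall back to the global rules only (a design choice).
    return category not in REGION_BLOCKED.get(viewer_region, set())
```

Keeping the rules in data rather than code makes it easier for policy teams to update them as laws change.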

Implementation Strategies for Platforms

For organizations looking to deploy AI content moderation, the path forward involves strategic decisions on technology and policy.

Build vs. Buy

- Buy (API Solutions): Services like OpenAI’s moderation endpoint, Google Jigsaw’s Perspective API, or Microsoft’s Azure AI Content Safety are powerful, easy to integrate, and regularly updated.

  - Pros: Fast implementation, low maintenance, access to state-of-the-art models.

  - Cons: Recurring costs, less control over specific definitions of “toxicity,” data privacy concerns.

- Build (Open Source/Custom): Training BERT or RoBERTa models on internal community data.

  - Pros: Tailored to your specific community guidelines and slang; data stays on-premise.

  - Cons: Requires a dedicated data science team, high cost of annotation (labeling data), and infrastructure maintenance.

Defining “Truth” in Misinformation Systems

Implementing misinformation filters requires a rigorous policy framework.

- Trusted Flaggers: Platforms should prioritize reports from certified NGOs or fact-checking partners over random user reports.

- Virality Circuit Breakers: A common strategy is to not delete misinformation immediately, but to limit its algorithmic spread (downranking) while it is being fact-checked. If AI detects a viral piece of potential disinformation, it can temporarily “throttle” the reach until a human verification occurs.
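A circuit breaker of this kind can be expressed as a multiplier applied to a post's ranking score. The sketch below assumes a hypothetical shares-per-hour signal and a boolean review flag; the threshold and throttle values are placeholders.

```python
def reach_multiplier(shares_last_hour: int, under_review: bool,
                     velocity_threshold: int = 1000, throttle: float = 0.1) -> float:
    """Return a factor to multiply into the ranking score.

    Content that is spreading fast and still awaiting fact-check review is
    throttled; everything else keeps its normal reach.
    """
    if under_review and shares_last_hour >= velocity_threshold:
        return throttle
    return 1.0


if __name__ == "__main__":
    print(reach_multiplier(5000, under_review=True))
    print(reach_multiplier(5000, under_review=False))
```

Once a human reviewer clears the content, setting `under_review` to false restores full reach without any other state to unwind.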

Metrics for Success

How do you know if your system works?

- Precision: Of the content the AI flagged, how much was actually bad? (High precision = fewer false positives/wrongful bans).

- Recall: Of the bad content on the platform, how much did the AI find? (High recall = safer platform).

- Turnaround Time (TAT): How long does content stay up before detection?
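These three metrics are straightforward to compute once you have a human-labeled sample. The sketch below assumes you already have counts of true positives, false positives, and false negatives, plus a list of detection delays in seconds. Using the median for turnaround is one reasonable choice, since it is less sensitive to a few very slow cases.

```python
from statistics import median
from typing import Sequence


def precision(true_positives: int, false_positives: int) -> float:
    """Share of flagged content that was actually violating."""
    flagged = true_positives + false_positives
    return true_positives / flagged if flagged else 0.0


def recall(true_positives: int, false_negatives: int) -> float:
    """Share of violating content that the system managed to flag."""
    violating = true_positives + false_negatives
    return true_positives / violating if violating else 0.0


def median_turnaround(seconds_until_detection: Sequence[float]) -> float:
    """Typical time a violating item stayed up before it was detected."""
    return median(seconds_until_detection)
```

For example, a system that flags 100 items of which 80 are real violations, while missing another 120, has a precision of 0.8 but a recall of only 0.4.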

Common Mistakes and Pitfalls

Even with the best intentions, improved safety systems can fail if implemented poorly.

1. Over-Reliance on Automation

Turning on a strict AI filter without human oversight often results in a “sterile” platform where users are afraid to speak.

- Example: A health support group discussing “breast cancer” gets flagged for “sexual content” because the vision model detects nudity, and the text model detects anatomical terms.

2. Ignoring the “Appeal” Loop

AI will make mistakes. If users have no way to appeal an automated ban, they will leave the platform. The data from appeals is also the most valuable training data you have—it explicitly tells the model where it was wrong.
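Turning appeals into training data can be as simple as collecting every decision a human reviewer overturned. The sketch below assumes a hypothetical `Appeal` record and that appeals are filed against removals, so an overturned decision means the content should have been labeled safe.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Appeal:
    text: str
    model_label: str  # label the model assigned, e.g. "hate_speech"
    overturned: bool  # True if a human reviewer reversed the decision


def training_corrections(appeals: List[Appeal],
                         safe_label: str = "safe") -> List[Tuple[str, str]]:
    """Return (text, corrected_label) pairs for every overturned decision."""
    return [(appeal.text, safe_label) for appeal in appeals if appeal.overturned]
```

Upheld appeals are also informative, since they confirm the original label, but the overturned ones point directly at the model's blind spots.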

3. Static Keyword Lists in an AI World

Mixing legacy keyword blocklists with modern AI often causes conflicts.

- Pitfall: A hard-coded blocklist bans a word that the AI would have understood as safe in context. Always prioritize semantic understanding over rigid lists unless the term is universally illegal.

4. Lack of Transparency

Telling a user “You violated community standards” is frustrating.

- Best Practice: Tell the user “Our automated system detected potential hate speech in this comment.” Specific feedback helps users understand which rule they broke and can reduce repeat violations.

Future Trends in AI Safety

The field of AI content moderation is evolving rapidly. Here is what is on the horizon.

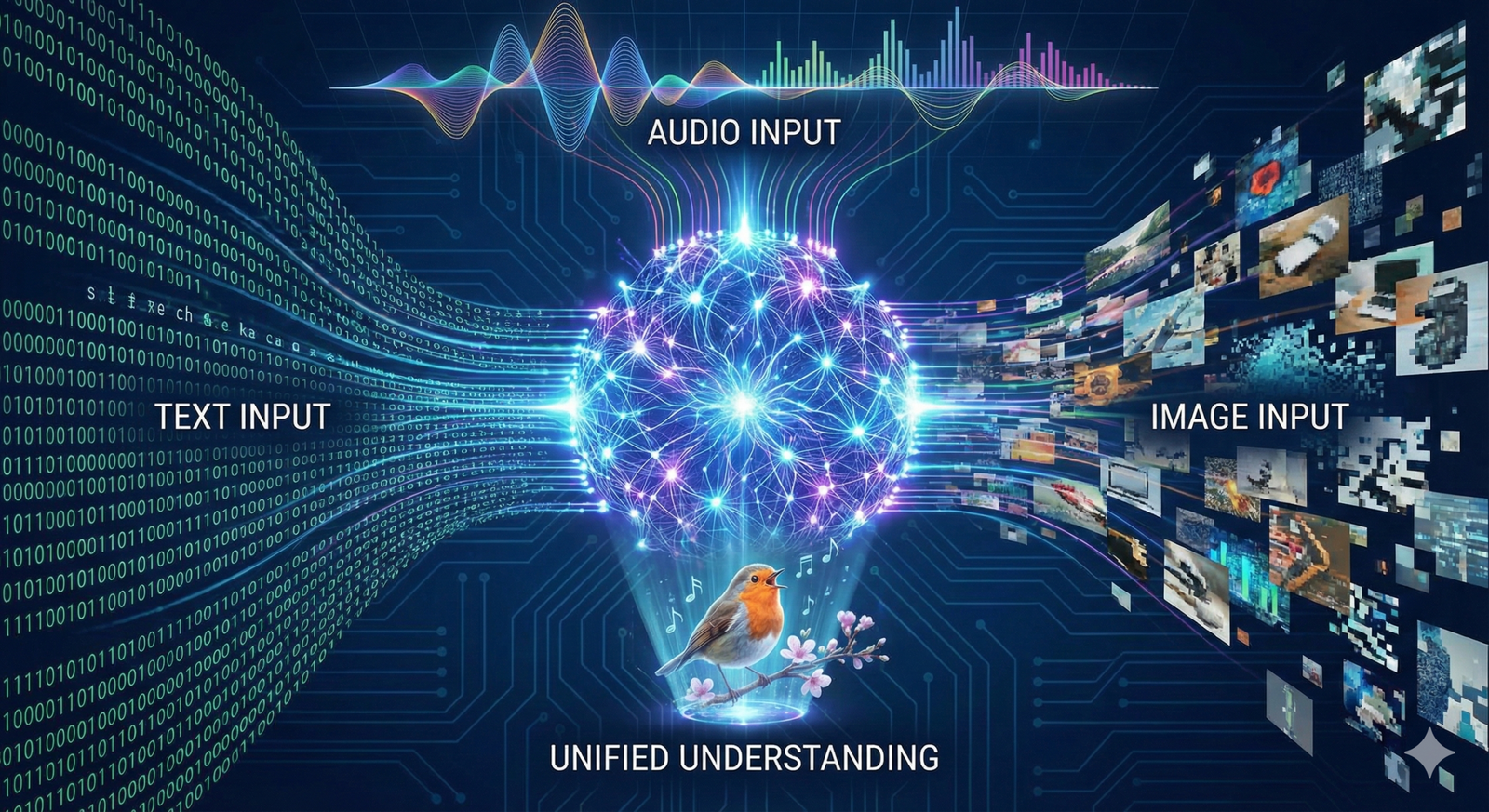

Multimodal Large Language Models (MLLMs)

Future models will not analyze text and images separately. They will process the video, audio, and text simultaneously to understand the full context. For example, a video of a person shouting might be flagged as violence by audio AI, but a multimodal model would see they are cheering at a sports game and mark it as safe.

Decentralized Moderation (Fediverse)

In decentralized networks (like Mastodon on the Fediverse or Bluesky on the AT Protocol), users are gaining the ability to choose their own algorithms. “Middleware” services will allow users to subscribe to specific moderation providers (e.g., “I want the strict family-friendly filter” vs. “I want the loose free-speech filter”).

Pre-emptive Nudging

Instead of punishing users after they post, AI is increasingly used to “nudge” users before they hit send.

- Mechanism: The AI detects a toxic comment in the draft phase and prompts: “This looks like it might violate our policies. Are you sure you want to post this?”

- Result: Studies show a significant percentage of users edit or delete their own toxicity when prompted, reducing the load on moderation teams.

Related Topics to Explore

- Trust and Safety Tooling: An overview of software suites used by human moderators.

- Algorithmic Transparency: How to explain AI decisions to users and regulators.

- C2PA and Digital Provenance: The technical standards for verifying image origins.

- Data Labeling for Safety: How to create high-quality datasets to train moderation bots.

- The Psychology of Content Moderation: Managing PTSD and burnout in safety teams.

Conclusion

AI content moderation is the infrastructure that makes the modern internet habitable. Without it, platforms would be overrun by spam, scams, and toxicity within hours. However, it is not a magic wand. It is a tool that requires constant tuning, human oversight, and a commitment to ethical implementation.

For platforms battling misinformation, the stakes are even higher. The goal is not to be the “arbiter of truth,” but to create an environment where authentic information can thrive and manipulated media is identified.

Next Steps: If you are implementing a moderation strategy, start by auditing your current data volume. If you process fewer than 1,000 items a day, a human-first approach with simple keyword filtering may suffice. If you are scaling beyond that, evaluate API-based solutions like the Perspective API or OpenAI’s moderation endpoint to establish a baseline safety layer before investing in custom model training.

FAQs

What is the difference between content moderation and censorship?

Content moderation is the enforcement of a platform’s specific community guidelines (which users agree to) to ensure safety and usability. Censorship typically refers to a government or external authority suppressing speech to control a narrative. Moderation protects the community; censorship restricts rights.

Can AI detect sarcasm in hate speech?

This remains one of the hardest challenges. While modern Large Language Models (LLMs) have improved significantly at detecting sarcasm by analyzing context, they still struggle compared to humans. They often miss sarcasm that relies on cultural references or inside jokes, leading to false positives.

How does AI handle non-English content?

Major AI providers train models on multilingual datasets, covering top global languages (Spanish, French, Chinese, etc.) effectively. However, AI performance drops significantly for “low-resource” languages or regional dialects, often requiring native human moderators to bridge the gap.

What is a “false positive” in moderation?

A false positive occurs when the AI incorrectly flags innocent content as a violation. For example, an AI trained to block “nudity” might flag a photo of a desert sand dune because the curves and colors resemble skin. Minimizing false positives is crucial for user retention.

Is AI content moderation expensive?

It depends on the approach. Pre-built APIs (like OpenAI or Azure) are typically free or priced at a fraction of a cent per request, which is affordable for most startups. Building and hosting custom enterprise-grade computer vision models, however, can require significant GPU compute costs and engineering resources.

How do platforms handle live streaming moderation?

Live streaming is difficult because it happens in real-time. Platforms use “frame sampling” (checking one image every few seconds) and real-time audio transcription to catch violations. There is usually a delay of a few seconds in the broadcast to allow AI to “kill” the stream if a severe violation (like violence) is detected.
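Frame sampling comes down to choosing which frame indices to send to the vision model. The sketch below assumes a fixed frame rate and a fixed sampling interval; the function name and parameters are illustrative.

```python
from typing import List


def sample_frame_indices(duration_seconds: float, fps: float,
                         interval_seconds: float) -> List[int]:
    """Return the indices of frames to analyze, one per sampling interval."""
    step = max(1, round(fps * interval_seconds))  # frames between samples
    total_frames = int(duration_seconds * fps)
    return list(range(0, total_frames, step))


if __name__ == "__main__":
    # A 10-second clip at 30 fps, checked every 2 seconds.
    print(sample_frame_indices(10, 30, 2))
```

A shorter interval catches brief violations more reliably but multiplies inference cost, so the interval is usually set per risk level rather than globally.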

Does AI remove misinformation automatically?

Rarely. Because misinformation is context-dependent, AI usually “downranks” it (makes it less visible) or sends it to a human fact-checker. Automatic removal is typically reserved for content that causes immediate real-world harm (e.g., fake voting instructions or dangerous medical advice).

What is “shadowbanning”?

Shadowbanning is a moderation technique where a user’s content is rendered invisible to everyone but the user themselves. Platforms often apply it automatically to suspected spammers. The goal is to stop the spammer from realizing they have been caught, so they don’t immediately create a new account.

References

- European Commission. (2024). The Digital Services Act: Ensuring a safe and accountable online environment. European Union. https://commission.europa.eu/strategy-and-policy/priorities-2019-2024/europe-fit-digital-age/digital-services-act_en

- OpenAI. (2024). Moderation API Documentation. OpenAI Platform. https://platform.openai.com/docs/guides/moderation

- Google Jigsaw. (2023). Perspective API: Using machine learning to reduce toxicity online. Jigsaw. https://perspectiveapi.com/

- Meta Transparency Center. (2024). Community Standards Enforcement Report. Meta. https://transparency.fb.com/data/community-standards-enforcement/

- Coalition for Content Provenance and Authenticity (C2PA). (2023). C2PA Technical Specifications for Digital Provenance. https://c2pa.org/specifications/

- Gorwa, R., Binns, R., & Katzenbach, C. (2020). Algorithmic content moderation: Technical and political challenges. Big Data & Society. https://journals.sagepub.com/doi/full/10.1177/2053951719897945

- Gillespie, T. (2018). Custodians of the Internet: Platforms, Content Moderation, and the Hidden Decisions That Shape Social Media. Yale University Press.

- Discord Safety Center. (2023). AutoMod and Safety Setup Guide. Discord.

- Partner, D. (2023). The Hidden Cost of AI: The Psychological Toll on Content Moderators. The Verge. https://www.theverge.com/2023/moderation-mental-health-impact

- Microsoft Azure. (2024). Azure AI Content Safety: Detect harmful user-generated content. Microsoft. https://azure.microsoft.com/en-us/products/ai-services/ai-content-safety