Algorithms are incredibly crucial for making choices in many sectors these days, like hiring people, categorizing photographs, and the criminal justice system. These algorithms could say they are fair and operate well, but they can also make biases that are already in their training data worse and stronger. When these automated systems always offer biased outcomes to particular groups, this is called algorithm bias. A lot of the time, these outcomes don’t reveal facts and statistics; they show differences in society. Businesses and developers that use these technologies need to know that algorithm bias can have a huge impact on people and communities.

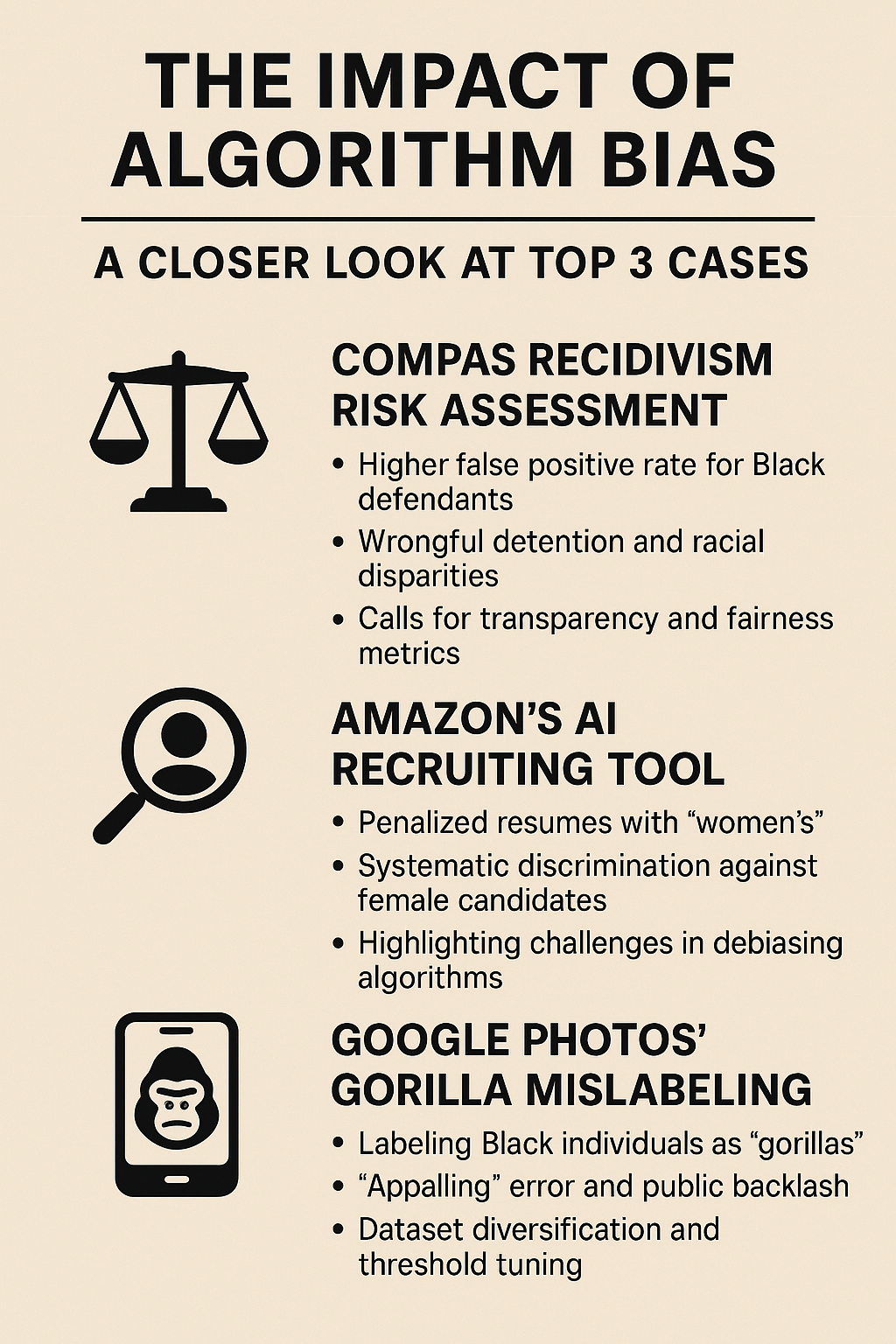

This article goes into greater detail about how algorithm bias influences things by presenting three crucial examples:

- The criminal justice system uses the COMPAS Recidivism Risk Assessment.

- Amazon’s AI tool for identifying people to work for.

- It’s not true that Google Photos can find photographs of gorillas.

We can observe how bias got in, what really happened, and what we can learn from each case to make sure that computers don’t make the wrong decisions.

What Does It Mean for an Algorithm to Not Be Fair?

Algorithm bias is when the results of a system always have an effect on specific groups of individuals. This could happen for a few reasons:

- Biased Training Data: Data from the past that reveals how unjust people are in society, such when there are too many officers in specific districts.

- Model Design Choices: Picking traits or giving them weights that make stereotypes stronger by accident.

- Not telling the truth: It’s challenging to detect and repair flaws when algorithms are “black-boxed.”

Some of the impacts are getting hired unfairly, going to jail for no reason, and having others talk badly about you on social media. Ethicists, lawmakers, data scientists, and the people who are affected all need to work together to fix these issues.

Case 1: A Brief Overview of the COMPAS Recidivism Risk Assessment

Northpointe (now Equivant) produced COMPAS, which stands for Correctional Offender Management Profiling for Alternative Sanctions. All of the states in the U.S. utilize it to determine out how likely it is that a defendant will commit another crime. Courts and parole boards have used COMPAS results to decide on bail, punishments, and the terms of release.

Evidence of Bias

A research by ProPublica that changed the game found that COMPAS was almost twice as likely to mistakenly categorize Black defendants as high risk compared to white defendants, even though both groups had the same odds of committing another crime. Black defendants who didn’t commit another crime were incorrectly branded “high risk” 44.9% of the time. 23.5% of the time, white defendants were incorrectly deemed “high risk.”

Later research in Science Advances showed that COMPAS’s overall accuracy (around 65%) was practically the same as that of simple logistic regression with less features. But it still produced differing percentages of false positives for different racial groups: 44.8% for Black defendants and 23.5% for white defendants. The most recent study from Williams College in 2024 revealed that COMPAS made racial inequities in Broward County worse, even though it lowered the total number of inmates in jail.

What Happens in Real Life:

- Black defendants got a lot of false positives, which meant they had to stay in jail longer until their trial. This made their money and social problems worse.

- People in communities that acquired unfair risk rankings didn’t believe in AI systems or the courts anymore because they lost faith in them.

- People were anxious about due process, openness, and if it was acceptable to make life-changing judgments using procedures that weren’t transparent.

Trying to Make Things Better

- They want risk assessment models that everyone can view so that outside auditors may check them out.

- Fairness Metrics: Using more than one definition of fairness, such equalized chances, to make sure that all groups make the same number of mistakes.

- Human-in-the-Loop: Making sure that computer scores help judges but don’t tell them what to do.

Case 2: Amazon’s AI Tool for Hiring

Background From 2014 to 2017, Amazon built a hiring engine that uses AI to automatically sort resumes and rank candidates. It was supposed to offer candidates a score of 1 to 5 stars based on hiring data from the past 10 years, most of which came from men.

Evidence of Bias According to a Reuters article, the engine awarded lower ratings to resumes that contained the word “women’s” in them (like “women’s chess club”) and to graduates of all-women’s universities. This made it tougher for women to get jobs. Reuters. The ACLU argued the tool “systematically discriminated against women applying for technical jobs such as software engineer positions.”

What Really Happened:

- Amazon gave up on the tool in 2017 because it understood it would be hard to make it fair for everyone.

- Risk to Reputation: The example revealed that even huge tech businesses with good AI teams can nonetheless replicate how people think.

- Awareness in the industry: More and more individuals talked about fairness in AI hiring, and it became more and more popular to utilize frameworks to discover prejudice.

Trying to Make Things Better

- Counterfactual data augmentation and feature re-weighting can help you fix data that isn’t right. These are two strategies to stop bias.

- Third-Party Audits: Getting other auditors and stakeholders to look into recruiting algorithms to see if they operate differently for different groups.

- One technique to make sure that data is fair is to make sure that training datasets have people from different ethnicities, genders, and other groups.

Case 3: The Story of the “Gorilla” Glitch in Google Photos

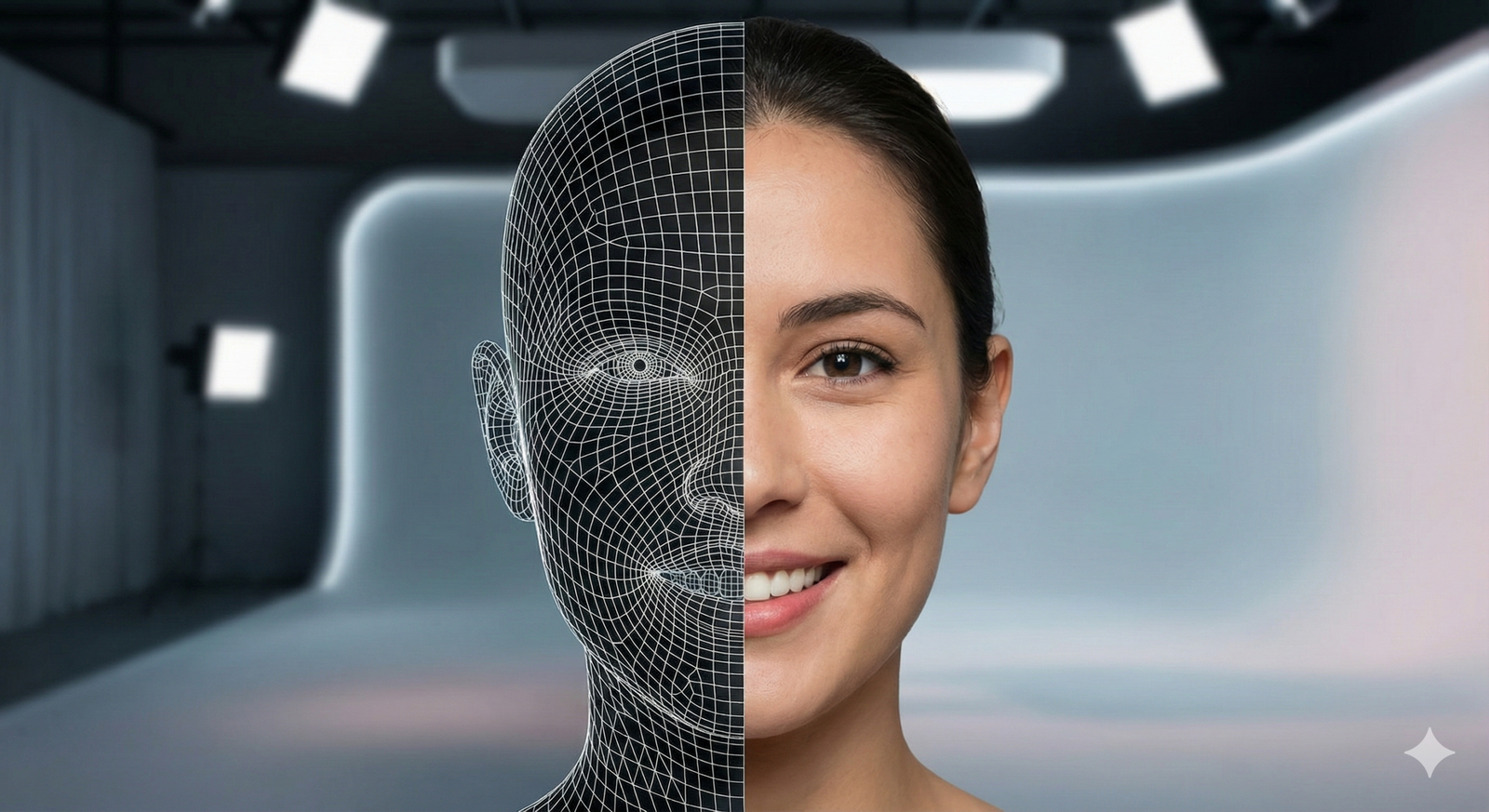

In 2015, individuals found out that Google Photos had incorrectly labeled photographs of Black people as “gorillas.” The experience indicated that commercial image-recognition algorithms had problems recognizing people of different races differently.

Proof of Bias Jacky Alcine, who lives in Brooklyn, found an album named “Gorillas” that featured images of him and a friend in it. Google said they were sorry at first and termed the blunder “appalling,” but instead of rectifying it, they quickly pulled “gorilla” off the list of labels in their API. Later tests showed that Google Photos still couldn’t discern the difference between gorillas, but Google Assistant and the Cloud Vision API could. The Verge

Things That Happen in Real Life:

- Emotional Harm: This form of mislabeling affects people and communities by making wrong ideas stronger.

- Technical Workarounds: Google’s quick remedy of taking away labels didn’t solve bias; it made things harder to use.

- People started to say that there should be rules for facial recognition software that everyone must obey and that people who don’t work for the company should check them.

Trying to Improve Things

- Dataset Diversification: Making sure that the training photographs show a variety of skin tones, scenarios, and places.

- Threshold tuning is the process of setting up confidence thresholds that tell users which classifications they are not convinced about.

- Regular audits: To make sure that people are doing their jobs correctly, bias checks should be done often and the results should be made public.

What We’ve Learned From Diverse Scenarios and What Works Best

- Putting data in order and checking it: It’s highly crucial to find and fix problems with training datasets before they happen.

- People outside the organization can trust it more and check over it more easily when it includes open-source models or explicit instructions on how decisions are made.

- Ethicists, domain specialists, and communities that will be affected should be involved in every step of multi-stakeholder governance, from design to deployment.

- Continuous Monitoring: Bias varies over time, thus we need to look at models anew when society and data distributions change.

- Following new standards like the EU AI Act and IEEE’s norms of conduct can help companies keep out of trouble.

Algorithm bias isn’t simply a computer problem; it could potentially make things worse in the real world. The COMPAS, Amazon hiring, and Google Photos instances all demonstrate a different side of this problem: risk scores that aren’t clear and affect court judgments, hiring algorithms that are biased and hinder diversity in the workplace, and improper labeling in consumer apps. To fix algorithm bias, we need to be stringent with our data, make it obvious who is in control of the models, and genuinely listen to the individuals who are most affected. The only way to be confident that AI is fair and reliable is to employ an approach that is based on EEAT and covers everything.

Frequently Asked Questions (FAQs)

Q1: What does it mean for an algorithm to not be fair?A1: Bias can happen if the training data doesn’t contain everyone, the wrong features are picked, the labels aren’t balanced, or not enough bias is found during development.

Q2: How can businesses find out if their algorithms are unfair?A2: By employing fairness metrics (such equalized odds and demographic parity), doing tests that aren’t done, and having other people look at the results.

Q3: Are there laws that stop algorithms from giving one group an advantage over another?A3: Yes, governments like the EU are adopting legislation (like the EU AI Act) that stipulate AI systems that are high-risk must be open about what they do and pass conformity assessments.

Q4: Is it ever feasible to entirely “fix” algorithms that aren’t fair?A4: It’s hard to get rid of prejudice altogether, but working with stakeholders, keeping an eye on things, and continually attempting to make things better will make it much less likely that you will receive unjust results.

Q5: What can people do to make sure that firms are held accountable for AI that isn’t fair?A5: You may make people responsible by placing pressure on them, telling them to be honest, and invoking your rights under data protection and anti-discrimination legislation.

References

- Angwin, J.; Larson, J. Machine Bias. ProPublica. 23 May 2016. ProPublica

- Dressel, J.; Farid, H. “The accuracy, fairness, and limits of predicting recidivism.” Science Advances, January 2018. https://www.science.org/doi/10.1126/sciadv.aao5580 Science

- Bahl, U. Algorithms in Judges’ Hands: Incarceration and Inequity in Broward County, Florida. Williams College. 22 May 2024. https://www.williams.edu/compass/compas-study.pdf Wikipedia

- Dastin, J. “Amazon scraps secret AI recruiting tool that showed bias against women.” Reuters, 10 October 2018. https://www.reuters.com/article/us-amazon-com-jobs-automation-insight-idUSKCN1MK0AG/ Reuters

- “Why Amazon’s Automated Hiring Tool Discriminated Against Women.” ACLU, 10 October 2018. American Civil Liberties Union

- Zhang, M. “Google Photos Tags Two African-Americans As Gorillas.” Forbes, 1 July 2015. https://www.forbes.com/sites/mzhang/2015/07/01/google-photos-tags-two-african-americans-as-gorillas/ Forbes

- Vincent, J. “Google ‘fixed’ its racist algorithm by removing gorillas from its image service.” The Verge, 12 January 2018. The Verge