AI is rapidly transforming businesses: it streamlines operations, personalizes experiences for each customer, and opens new revenue streams. But with great power comes great responsibility. Unethical use of AI can lead to biased outcomes, regulatory fines, damage to your brand, and lost stakeholder trust. According to Gartner, by 2025, 75% of companies will have experienced AI ethics and risk issues.

Long-term success requires businesses to apply ethical AI practices across the entire AI lifecycle, from data collection to model deployment and monitoring. This article presents five practical strategies for implementing ethical AI, along with real-world examples, best practices, and action steps.

Strategy 1: Establish Cross-Functional AI Ethics Governance

Why governance is vital

Holistic oversight: Ethical AI challenges such as bias, privacy concerns, and lack of transparency extend beyond any single department. A governance committee with members from legal, technical, compliance, and business teams works together to manage these risks end to end.

Regulatory readiness: AI regulations and frameworks are emerging worldwide, such as the EU AI Act² and Singapore's Model AI Governance Framework³. A governance body helps ensure that your AI initiatives comply with applicable requirements.

Key Components

- AI Ethics Committee: Members drawn from data science, legal, compliance, HR, and customer-facing teams.

- Policies and Charters: Written standards aligned with frameworks such as the OECD AI Principles, covering fairness, accountability, transparency, and privacy.

- Decision-Rights Matrix: Clearly define who approves models, audits, and post-deployment risk assessments.

- Escalation Procedures: Defined steps for raising ethical issues or challenges with stakeholders.
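A decision-rights matrix can be kept as structured data so that tooling can check sign-offs automatically. The sketch below is a minimal illustration only: the roles mirror the committee described above, but the action names (`approve_audit`, `approve_risk_assessment`, `deploy_model`) and the role assignments are hypothetical and should be replaced with whatever your own charter specifies.

```python
from enum import Enum


class Role(Enum):
    """Hypothetical committee roles; adapt these to your own charter."""
    DATA_SCIENCE = "data_science"
    LEGAL = "legal"
    COMPLIANCE = "compliance"
    HR = "hr"
    BUSINESS = "business"


# Hypothetical decision-rights matrix: each action maps to the set of roles
# that must ALL sign off before the action may proceed.
DECISION_RIGHTS: dict[str, set[Role]] = {
    "approve_audit": {Role.COMPLIANCE},
    "approve_risk_assessment": {Role.LEGAL, Role.COMPLIANCE},
    "deploy_model": {Role.DATA_SCIENCE, Role.LEGAL, Role.BUSINESS},
}


def missing_signoffs(action: str, signed_by: set[Role]) -> set[Role]:
    """Return the roles that still need to approve `action`."""
    if action not in DECISION_RIGHTS:
        raise KeyError(f"No decision rights defined for action: {action}")
    return DECISION_RIGHTS[action] - signed_by


def is_approved(action: str, signed_by: set[Role]) -> bool:
    """An action is approved only when every required role has signed off."""
    return not missing_signoffs(action, signed_by)


if __name__ == "__main__":
    signed = {Role.DATA_SCIENCE, Role.LEGAL}
    print(is_approved("deploy_model", signed))  # False
    print(missing_signoffs("deploy_model", signed))
```

Keeping the matrix as data rather than prose makes it easy to enforce in a deployment pipeline and to review in the same way as any other policy change.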

How to Make It Happen

- Secure executive sponsorship: Brief the C-suite on case studies such as Amazon's abandoned AI recruiting tool, which was found to be biased against women, and show that building trust and reducing risk pays off.

- Map stakeholders: Identify every team that AI systems support or affect.

- Draft the governance charter: Use templates based on the IEEE's Ethically Aligned Design guidance.

- Pilot with one AI use case: Validate the committee's workflows on a single use case, such as marketing automation, before rolling them out company-wide.

Strategy 2: Embed Fairness and Bias Mitigation into the AI Lifecycle

Identifying Sources of Bias

- Historical bias: Training data reflects past inequities, such as loan-approval datasets that favor one group over another.

- Representation bias: Some groups are underrepresented in the data.

- Measurement bias: Proxy variables fail to capture what you actually intend to measure.
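Of the three, representation bias is the easiest to check mechanically: compare each group's share of the training data with its share of a reference population. This is a minimal sketch that assumes you already have trustworthy reference shares; the 0.8 cut-off and the sample figures in the usage example are illustrative assumptions, not a standard.

```python
from collections import Counter


def representation_ratios(groups: list[str], reference_shares: dict[str, float]) -> dict[str, float]:
    """Ratio of each group's share of the dataset to its share of a reference population.

    A ratio of 1.0 means proportional representation; values well below 1.0
    suggest the group is underrepresented. Reference shares must be positive.
    """
    if not groups:
        raise ValueError("groups must be non-empty")
    counts = Counter(groups)  # missing groups count as 0
    total = len(groups)
    return {
        group: (counts[group] / total) / share
        for group, share in reference_shares.items()
    }


def underrepresented(groups: list[str], reference_shares: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Groups whose representation ratio falls below `threshold` (an assumed cut-off)."""
    ratios = representation_ratios(groups, reference_shares)
    return sorted(g for g, r in ratios.items() if r < threshold)


if __name__ == "__main__":
    # Illustrative data only: 90 records from group "A", 10 from group "B",
    # against a reference population that is 60% A and 40% B.
    data = ["A"] * 90 + ["B"] * 10
    reference = {"A": 0.6, "B": 0.4}
    print(underrepresented(data, reference))  # ['B']
```

Historical and measurement bias cannot be caught by counting alone; they need domain review of how labels and proxies were produced.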

Frameworks and Toolkits

- IBM AI Fairness 360 (AIF360): An open-source library of metrics and algorithms for detecting and mitigating bias.

- Microsoft Fairlearn: Provides fairness metrics and mitigation algorithms, including reduction-based (in-processing) and post-processing methods.

- Google PAIR Guidebook: Guidance from Google's People + AI Research team on human-centered design and on uncovering bias.
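To make the mitigation side concrete, here is a pure-Python sketch of reweighing (Kamiran and Calders), the pre-processing technique behind AIF360's `Reweighing` algorithm. It is an illustration of the idea, not AIF360's code: each (group, label) pair receives the weight P(group) × P(label) / P(group, label), so that group membership and outcome become statistically independent in the weighted data. The sample groups and labels in the usage example are made up.

```python
from collections import Counter


def reweighing_weights(groups: list[str], labels: list[int]) -> dict[tuple[str, int], float]:
    """Weight for each (group, label) pair: P(group) * P(label) / P(group, label).

    Pairs that are rarer than independence would predict get weights above 1,
    and over-represented pairs get weights below 1.
    """
    if not groups or len(groups) != len(labels):
        raise ValueError("groups and labels must be non-empty and of equal length")
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return {
        (g, y): (group_counts[g] / n) * (label_counts[y] / n) / (c / n)
        for (g, y), c in joint_counts.items()
    }


def sample_weights(groups: list[str], labels: list[int]) -> list[float]:
    """Per-record weights, in the same order as the input records."""
    w = reweighing_weights(groups, labels)
    return [w[(g, y)] for g, y in zip(groups, labels)]


if __name__ == "__main__":
    # Illustrative data: group A has 3/4 positive labels, group B only 1/4.
    groups = ["A"] * 4 + ["B"] * 4
    labels = [1, 1, 1, 0, 1, 0, 0, 0]
    print(reweighing_weights(groups, labels))
```

After reweighing, the weighted positive rate in this toy example is 0.5 for both groups. Many scikit-learn estimators accept such weights through the `sample_weight` argument of `fit`.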

Bias Audits: Start by auditing your data and models against fairness criteria such as demographic parity and equalized odds.
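Both criteria can be computed directly from binary predictions. Demographic parity compares the share of positive predictions (the selection rate) across groups; equalized odds compares true-positive and false-positive rates across groups. This from-scratch sketch reports the largest between-group gap for each, which is similar in spirit to how fairness libraries summarize these metrics as a "difference"; it is an illustration, not any library's API.

```python
from collections import defaultdict


def selection_rates(y_pred: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive (1) predictions within each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for p, g in zip(y_pred, groups):
        totals[g] += 1
        positives[g] += p
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred: list[int], groups: list[str]) -> float:
    """Largest gap in selection rate between any two groups (0 = parity)."""
    rates = list(selection_rates(y_pred, groups).values())
    return max(rates) - min(rates)


def error_rates(y_true: list[int], y_pred: list[int], groups: list[str]) -> dict[str, tuple[float, float]]:
    """(true-positive rate, false-positive rate) per group.

    Each group needs at least one actual positive and one actual negative.
    """
    c = defaultdict(lambda: {"pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            c[g]["pos"] += 1
            c[g]["tp"] += p
        else:
            c[g]["neg"] += 1
            c[g]["fp"] += p
    out = {}
    for g, s in c.items():
        if s["pos"] == 0 or s["neg"] == 0:
            raise ValueError(f"group {g!r} needs both positive and negative labels")
        out[g] = (s["tp"] / s["pos"], s["fp"] / s["neg"])
    return out


def equalized_odds_difference(y_true: list[int], y_pred: list[int], groups: list[str]) -> float:
    """Larger of the between-group TPR gap and FPR gap (0 = equalized odds)."""
    rates = error_rates(y_true, y_pred, groups).values()
    tprs = [tpr for tpr, _ in rates]
    fprs = [fpr for _, fpr in rates]
    return max(max(tprs) - min(tprs), max(fprs) - min(fprs))


if __name__ == "__main__":
    # Illustrative labels and predictions for two groups of four people each.
    y_true = [1, 0, 1, 0, 1, 0, 1, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 1, 0]
    groups = ["A"] * 4 + ["B"] * 4
    print(demographic_parity_difference(y_pred, groups))  # 0.25
    print(equalized_odds_difference(y_true, y_pred, groups))  # 0.5
```

In this toy example both groups have the same true-positive rate, but group A also receives positive predictions for half of its actual negatives, so equalized odds is violated more strongly than demographic parity.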

Diverse Teams: Involve sociologists, domain experts, and members of affected communities in designing the model.

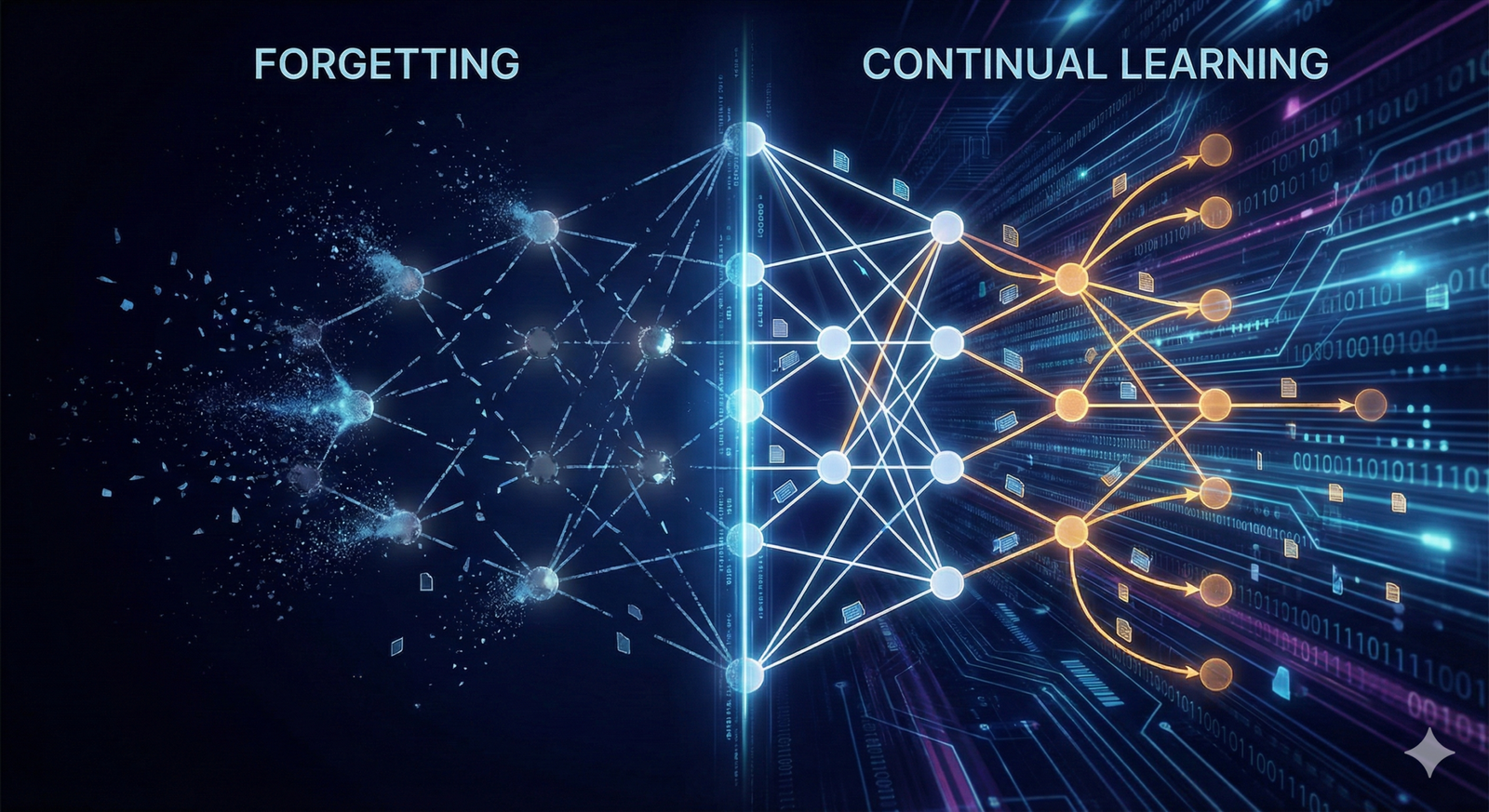

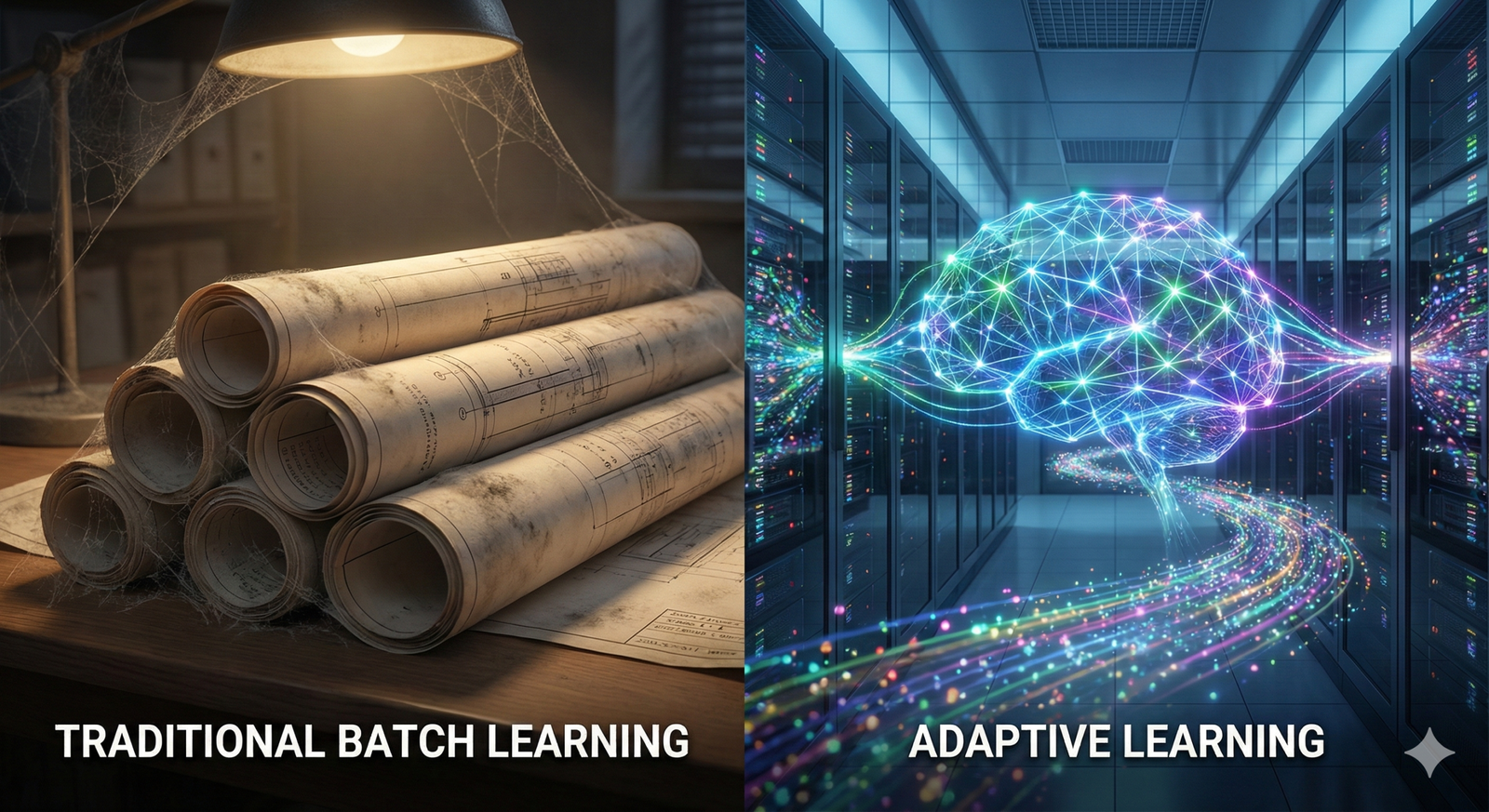

Continuous Monitoring: Use bias dashboards to track fairness metrics over time, and retrain models when drift occurs.
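A monitoring job can be as simple as recomputing a fairness metric on each new batch of predictions and flagging batches that cross a tolerance. This sketch assumes the demographic-parity gap as the metric and a tolerance of 0.1; both are illustrative choices, and the alerting or retraining hook is left to your own tooling.

```python
from dataclasses import dataclass, field


def selection_rate_gap(y_pred: list[int], groups: list[str]) -> float:
    """Demographic-parity gap: max minus min share of positive predictions per group."""
    totals: dict[str, int] = {}
    positives: dict[str, int] = {}
    for p, g in zip(y_pred, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + p
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


@dataclass
class FairnessMonitor:
    """Records a fairness metric per batch and flags batches that exceed a tolerance."""
    tolerance: float = 0.1  # assumed threshold; set it according to your policy
    history: list[float] = field(default_factory=list)

    def check_batch(self, y_pred: list[int], groups: list[str]) -> bool:
        """Store the batch's gap and return True if it breaches the tolerance."""
        gap = selection_rate_gap(y_pred, groups)
        self.history.append(gap)
        return gap > self.tolerance


if __name__ == "__main__":
    monitor = FairnessMonitor(tolerance=0.1)
    # Hypothetical daily batches of predictions for groups A and B.
    batches = [
        ([1, 0, 1, 0], ["A", "A", "B", "B"]),
        ([1, 1, 0, 0], ["A", "A", "B", "B"]),
    ]
    for preds, grps in batches:
        if monitor.check_batch(preds, grps):
            print("Fairness drift detected: alert the model owner and schedule retraining")
```

Keeping the full `history` rather than only the latest value is what lets a dashboard show the trend over time.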

For example, a leading financial services company applied IBM AIF360 fairness constraints to its credit-scoring model and saw a 40% drop in loan rejections.

Strategy 3: Ensure Transparency and Explainability

Why Transparency Matters

- Regulatory compliance: The EU AI Act requires high-risk AI systems to be transparent enough that their outputs can be interpreted and used appropriately.

- Stakeholder trust: Customers are more willing to adopt AI when they understand how it works.

Tools and Techniques

- Model-agnostic explainers: SHAP and LIME can explain predictions from any type of model. For instance, SHAP values quantify how much each feature contributes to an individual prediction.

- Interpretable models: When explainability matters more than raw performance, use inherently interpretable algorithms such as decision trees and generalized additive models (GAMs).

- Explainability dashboards: Interactive dashboards, such as the explainability module in H2O Driverless AI, let business users see how models reach their decisions.
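For intuition about what SHAP values represent, consider the special case of a linear model with independent features. There, each feature's SHAP value is its weight times the feature's deviation from its background mean, phi_i = w_i * (x_i - mean_i), and the values add up to the prediction minus the average prediction. The sketch below computes exactly that special case; for nonlinear models you would use a library such as `shap`, and the weights and background data here are made up.

```python
def predict(bias: float, weights: list[float], x: list[float]) -> float:
    """Linear model: f(x) = bias + sum(w_i * x_i)."""
    return bias + sum(w * xi for w, xi in zip(weights, x))


def linear_shap_values(weights: list[float], x: list[float], background: list[list[float]]) -> list[float]:
    """SHAP values for a linear model, assuming independent features.

    phi_i = w_i * (x_i - mean_i), where mean_i is feature i's mean over the
    background data. The values sum to f(x) - average f over the background.
    """
    n_features = len(weights)
    means = [sum(row[i] for row in background) / len(background) for i in range(n_features)]
    return [w * (xi - m) for w, xi, m in zip(weights, x, means)]


if __name__ == "__main__":
    # Hypothetical credit model with two features: income and debt.
    bias, weights = 0.1, [0.5, -1.0]
    background = [[2.0, 1.0], [4.0, 3.0]]  # means: income 3.0, debt 2.0
    applicant = [5.0, 1.0]
    phi = linear_shap_values(weights, applicant, background)
    print(phi)  # [1.0, 1.0]: above-average income and below-average debt both raise the score
```

The "sums to the prediction minus the average prediction" property is what makes SHAP values readable as a breakdown of one specific decision.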

How to Make It Happen

- Define explanation levels: Determine how much detail technical and business users each need.

- Embed explanations in pipelines: Automatically generate and store an explanation for every prediction.

- Train stakeholders: Run workshops that teach people how to read and interpret explanation reports.

- Publish transparency reports: Following the example of Google's AI Principles paper, disclose the goals, data sources, and performance of your AI systems.
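Embedding explanations in the pipeline can mean writing an audit record alongside every prediction. The sketch below assumes a hypothetical record format: the JSON-lines layout, the `model_version` field, and the `explain` callable are illustrative assumptions. In practice `explain` might wrap a SHAP or LIME explainer; the usage example uses a toy weight-times-value attribution, which is not a true SHAP value.

```python
import io
import json
from datetime import datetime, timezone
from typing import Callable, TextIO


def predict_and_log(
    features: dict[str, float],
    predict: Callable[[dict[str, float]], float],
    explain: Callable[[dict[str, float]], dict[str, float]],
    model_version: str,
    log: TextIO,
) -> float:
    """Score one record and append a JSON line holding its explanation for later audit."""
    prediction = predict(features)
    attributions = explain(features)
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "features": features,
        "prediction": prediction,
        # Largest absolute contribution first, so reviewers see the main drivers.
        "explanation": dict(sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)),
    }
    log.write(json.dumps(record) + "\n")
    return prediction


if __name__ == "__main__":
    # Toy linear model; attributions are weight * value (not true SHAP values).
    weights = {"income": 0.5, "debt": -1.0}
    buffer = io.StringIO()  # stands in for an append-only audit log file
    score = predict_and_log(
        {"income": 5.0, "debt": 1.0},
        predict=lambda f: sum(weights[k] * v for k, v in f.items()),
        explain=lambda f: {k: weights[k] * v for k, v in f.items()},
        model_version="credit-v1",
        log=buffer,
    )
    print(score)  # 1.5
    print(buffer.getvalue())
```

Writing the explanation at prediction time, rather than regenerating it later, means the record reflects the exact model version that made the decision.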

Strategy 4: Prioritize Privacy and Data Protection

Global Privacy Regulations

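- GDPR (European Union): Personal data may be processed only on a lawful basis and only to the extent necessary for a specified purpose.

- CCPA (California, USA): Gives consumers control over their personal data, including the rights to know what is collected, to request its deletion, and to opt out of its sale.

- PDPA (Singapore): Governs how organizations collect, use, and protect personal data³.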

Privacy-Enhancing Technologies (PETs)

- Differential privacy: Adds calibrated random noise to query results, datasets, or model training so that no individual's data can be singled out. Apple and the US Census Bureau both use it.

- Federated learning: Trains models on decentralized data without collecting it centrally, as in Google's keyboard suggestions¹⁶.

- Homomorphic encryption: Allows computation on encrypted data (still maturing, but improving¹⁷).
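The core idea of differential privacy can be shown with the Laplace mechanism: to release a count, add noise drawn from a Laplace distribution with scale sensitivity / epsilon. A counting query has sensitivity 1, because adding or removing one person changes the count by at most 1. This sketch is for illustration only; production systems should rely on a vetted differential-privacy library, since naive floating-point noise generation has known vulnerabilities. The records and epsilon value in the usage example are made up.

```python
import random
from typing import Callable, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(
    records: list[dict],
    predicate: Callable[[dict], bool],
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> float:
    """Differentially private count of records matching `predicate` (Laplace mechanism).

    A count has sensitivity 1, so the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical customer records; the true answer to the query is 50.
    customers = [{"age": a} for a in range(100)]
    noisy = private_count(customers, lambda r: r["age"] >= 50, epsilon=1.0)
    print(f"Customers aged 50+: about {noisy:.1f}")
```

Each released answer consumes privacy budget, so repeated queries on the same data need their epsilons accounted for together.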

What to Do

- Data minimization audits: Collect only the features the model needs.

- Consent management: Provide granular consent dashboards so users can decide how their data is used.

- PET evaluation: Pilot federated learning in low-risk use cases, such as recommendation engines.

- Privacy impact assessments: Before deploying AI, complete a privacy impact assessment (PIA) and document how you will mitigate the identified risks.
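Data minimization and consent can be enforced in code before any record reaches a model. The sketch below assumes a hypothetical per-purpose allow-list of features and a per-user map of consented purposes (as a consent dashboard might produce); the purpose names and field names are invented for illustration.

```python
from typing import Optional

# Hypothetical allow-list: the only features each processing purpose may use.
ALLOWED_FEATURES: dict[str, set[str]] = {
    "recommendations": {"purchase_history", "product_views"},
    "credit_scoring": {"income", "existing_debt", "payment_history"},
}


def minimize(record: dict, purpose: str, consented_purposes: dict[str, set[str]]) -> Optional[dict]:
    """Return only the allowed features for `purpose`, or None without consent.

    `record` must contain a "user_id" key; `consented_purposes` maps each user ID
    to the purposes that user has opted into.
    """
    if purpose not in consented_purposes.get(record["user_id"], set()):
        return None  # no consent for this purpose: exclude the record entirely
    allowed = ALLOWED_FEATURES.get(purpose, set())
    return {k: v for k, v in record.items() if k in allowed}


def minimize_batch(records: list[dict], purpose: str, consented_purposes: dict[str, set[str]]) -> list[dict]:
    """Apply `minimize` to a batch, dropping records without consent."""
    out = []
    for record in records:
        reduced = minimize(record, purpose, consented_purposes)
        if reduced is not None:
            out.append(reduced)
    return out


if __name__ == "__main__":
    consents = {"u1": {"recommendations"}, "u2": set()}
    raw = [
        {"user_id": "u1", "purchase_history": [101, 202], "home_address": "...", "product_views": 3},
        {"user_id": "u2", "purchase_history": [], "product_views": 0},
    ]
    # Only u1's allowed features survive; the address is never passed on.
    print(minimize_batch(raw, "recommendations", consents))
```

An explicit allow-list also gives the privacy impact assessment a concrete artifact to review.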

Strategy 5: Foster a Culture of Accountability and Continuous Learning

Ethics training: Make online training on AI ethics, data protection, and bias mitigation mandatory.

Ethics champions: Appoint people in each department who show colleagues how to use AI responsibly.

Incident post-mortems: When an AI system fails, for example by misclassifying inputs or compromising privacy, hold a post-mortem to find the root cause and update policies accordingly.

Metrics and KPIs

- Ethics scorecards: Track compliance with governance policies, for example the percentage of models that have been tested for bias.

- Trust surveys: Regularly survey employees and customers about their trust in AI, and make improvements based on the findings.
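An ethics scorecard can be computed directly from a model inventory. This sketch assumes a hypothetical inventory in which each model records whether it has had a bias test, an explainability review, and a privacy impact assessment; the field names and sample models are illustrative.

```python
from dataclasses import dataclass


@dataclass
class ModelRecord:
    """One entry in a hypothetical model inventory."""
    name: str
    bias_tested: bool
    explainability_reviewed: bool
    pia_completed: bool


CHECKS = ("bias_tested", "explainability_reviewed", "pia_completed")


def scorecard(inventory: list[ModelRecord]) -> dict[str, float]:
    """Percentage of models that have completed each governance check."""
    if not inventory:
        return {}
    n = len(inventory)
    return {
        check: 100.0 * sum(getattr(model, check) for model in inventory) / n
        for check in CHECKS
    }


if __name__ == "__main__":
    inventory = [
        ModelRecord("churn", True, True, False),
        ModelRecord("credit", True, False, True),
        ModelRecord("pricing", False, False, True),
        ModelRecord("recommendations", True, True, True),
    ]
    for check, pct in scorecard(inventory).items():
        print(f"{check}: {pct:.0f}%")
```

Reporting these percentages each quarter turns the governance policy into a trackable KPI.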

For example, a global retailer began holding quarterly “AI Ethics Days”; afterward, employees reported a 30% increase in ethical concerns raised and in proactive risk mitigation.

Additional Considerations

- Third-Party Vendors: Work only with AI vendors that are transparent and comply with your governance standards.

- Legal and Insurance: Have counsel review your AI contracts, and consider liability insurance that covers AI.

- Industry Collaboration: Join groups such as the Partnership on AI¹⁹ to share best practices and promote consistent standards across businesses.

Conclusion

Implementing ethical AI is not a one-time project; it is an ongoing process that spans culture, technology, governance, and compliance. By establishing solid governance, embedding fairness and privacy safeguards, ensuring transparency, and fostering an ethics-first culture, companies can harness AI's benefits while minimizing its risks. Grounded in established frameworks and real-world results, these strategies help businesses stay ahead of regulatory change, build stakeholder trust, and innovate responsibly.

Frequently Asked Questions

Q1: What does the EU AI Act consider a “high-risk” AI system? The EU AI Act classifies systems that can significantly affect fundamental rights, such as biometric identification and credit scoring, as high-risk. Providers of these systems must meet strict requirements for transparency, data quality, and human oversight.

Q2: How often should you audit for bias? At least quarterly, or whenever the underlying data changes significantly. Continuous-monitoring dashboards can send automatic alerts when fairness metrics drift out of range.

Q3: Can small businesses implement ethical AI without a large budget? Yes. Using open-source tools such as IBM AIF360 and Microsoft Fairlearn, and building ethics checks into existing agile processes, are two lean ways to make ethical AI accessible to organizations of any size.

Q4: How does federated learning protect data? Federated learning trains models across decentralized devices, so raw data stays where it was generated. Only model updates are sent to a central server for aggregation, which reduces the risk of exposing personal data.
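The aggregation step can be sketched with federated averaging (FedAvg), the algorithm introduced in Google's federated learning research: each client trains locally on its own data and sends back only updated parameters, which the server averages, weighting each client by its number of examples. This toy version fits a one-parameter model y ≈ w·x; the client data, learning rate, and round counts are made up, and real deployments add protections such as secure aggregation.

```python
def local_update(w: float, data: list[tuple[float, float]], lr: float = 0.01, epochs: int = 5) -> float:
    """A few epochs of gradient descent on mean squared error, using only this client's data."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_averaging(clients: list[list[tuple[float, float]]], rounds: int = 50, w: float = 0.0) -> float:
    """Server loop: broadcast w, collect locally trained weights, average by client size.

    Raw (x, y) pairs are only ever read inside `local_update`; the server sees weights.
    """
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        local_weights = [local_update(w, data) for data in clients]
        w = sum(lw * len(data) for lw, data in zip(local_weights, clients)) / total
    return w


if __name__ == "__main__":
    # Three hypothetical clients whose private data all follow y = 3x.
    clients = [
        [(1.0, 3.0), (2.0, 6.0)],
        [(3.0, 9.0)],
        [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
    ]
    print(round(federated_averaging(clients), 4))  # 3.0
```

Weighting by client size makes the global update reflect how much data each client contributed, without the server ever seeing that data.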

Q5: What role do employees play in ethical AI? Employees are the first line of defense. With ethics training, “ethics champions”, and easy ways to report concerns, they can act responsibly and spot risks early.