The concept of “identity” is undergoing a radical transformation. For decades, our digital presence was defined by static usernames, flat profile pictures, and text-based bios. Today, we stand on the precipice of a new era where our digital selves—and the entities we interact with—are becoming three-dimensional, autonomous, and incredibly lifelike.

This is the domain of AI avatars and digital humans in the metaverse. It is no longer just about choosing a hairstyle for a video game character; it is about creating persistent, intelligent representations of ourselves, or interacting with entirely synthetic beings that can hold conversations, show empathy, and perform complex tasks.

As the metaverse—a convergence of physical and digital realities—evolves, the “beings” that inhabit it are evolving too. We are moving from silent puppets controlled by keyboards to intelligent agents driven by generative AI. This guide explores the technological backbone, the transformative use cases, and the profound ethical questions surrounding this new frontier of digital existence.

Key Takeaways

- Evolution of Presence: Avatars are shifting from static, user-controlled puppets to dynamic, AI-driven entities capable of semi-autonomous behavior.

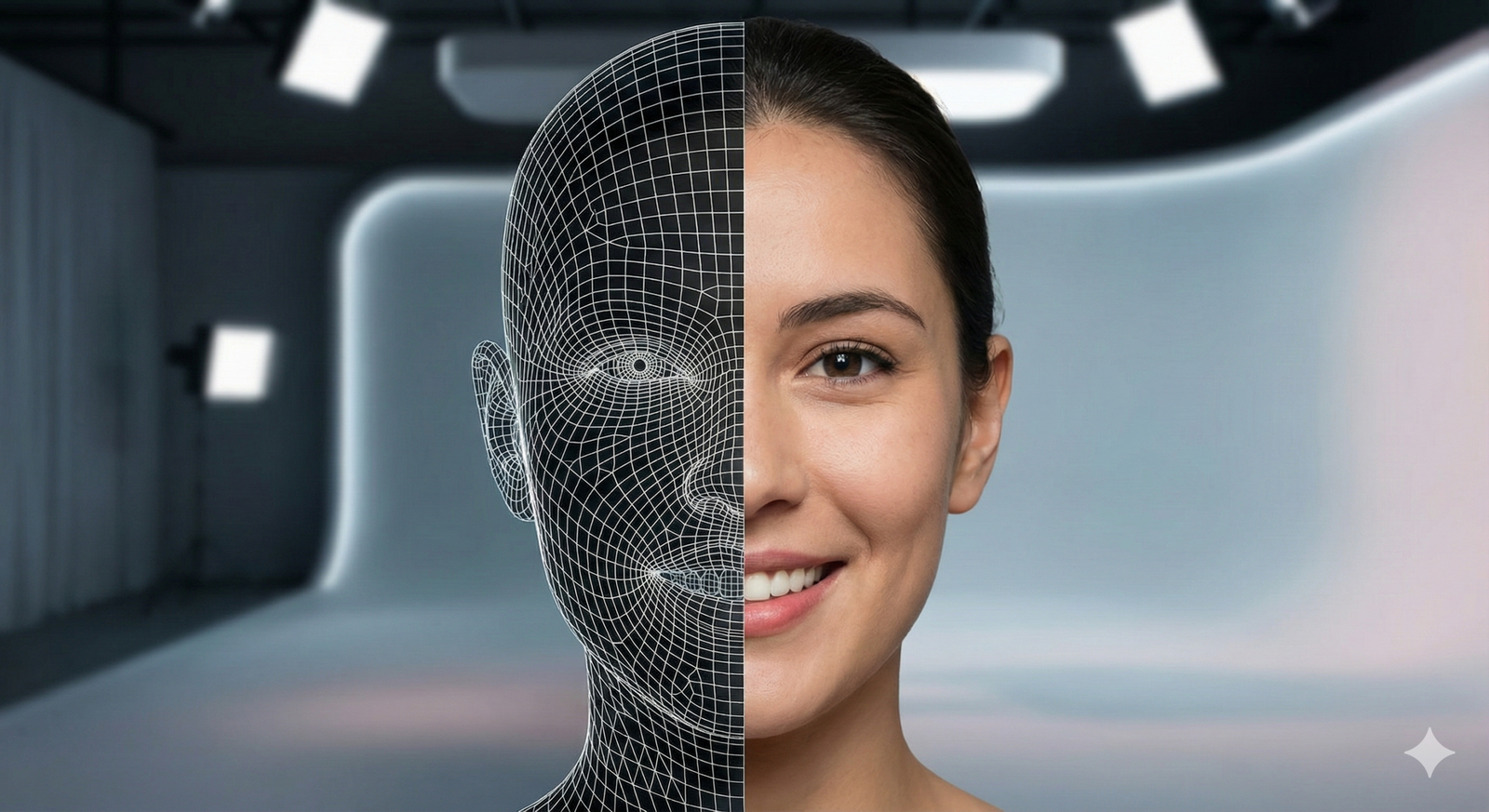

- The “Digital Human” Tier: A distinction exists between stylized avatars (like cartoons) and “digital humans,” which aim for photorealistic fidelity and psychological depth.

- Generative AI as the Brain: Large Language Models (LLMs) and multimodal AI are providing the “brains” for these avatars, allowing for natural, unscripted conversation.

- Enterprise Utility: Beyond gaming, digital humans serve as 24/7 customer service agents, corporate trainers, and brand ambassadors.

- The Interoperability Challenge: Carrying your digital identity seamlessly from one virtual platform to another remains a major hurdle.

- Ethical Frontiers: The rise of autonomous avatars introduces risks regarding identity theft, deepfakes, and the manipulation of human emotions.

Defining the Digital Population

Before diving into the technology, we must clarify the terminology. In this guide, we distinguish between three core concepts that are often used interchangeably but represent different functionalities.

1. The User Avatar (The Digital Puppet)

Historically, an avatar has been a digital representation driven entirely by a human user. When you move your controller or VR headset, the avatar moves. It has no brain of its own; it is a suit you wear in the digital world.

- Current State: Evolving from blocky characters to high-fidelity scans.

- AI Role: AI is increasingly used to interpret users’ facial expressions (captured via cameras) and map them onto the avatar in real time, a process known as “retargeting.”
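A minimal sketch of retargeting, assuming the face tracker emits per-frame coefficients between 0 and 1 named in the style of Apple ARKit’s blendshapes (`jawOpen`, `eyeBlinkLeft`). The avatar-side names (`Mouth_Open`, `Blink_L`), the per-channel gains, and the `FaceRetargeter` class are hypothetical; a real rig defines its own shapes. It shows the steps this kind of mapping usually involves: rename each channel, rescale and clamp it, and smooth it over time.

```python
from dataclasses import dataclass, field

# Hypothetical mapping: tracker coefficient -> (avatar blendshape, gain).
# Tracker names follow the ARKit style; the rig names are invented.
RETARGET_MAP = {
    "jawOpen": ("Mouth_Open", 1.0),
    "eyeBlinkLeft": ("Blink_L", 1.0),
    "eyeBlinkRight": ("Blink_R", 1.0),
    "mouthSmileLeft": ("Smile_L", 1.2),  # exaggerate smiles slightly for a stylized rig
}


@dataclass
class FaceRetargeter:
    smoothing: float = 0.5  # 0 = no smoothing; values near 1 = heavy smoothing
    _state: dict = field(default_factory=dict, init=False, repr=False)

    def retarget(self, tracker_frame: dict) -> dict:
        """Map one frame of tracked coefficients (0..1) onto avatar blendshape weights."""
        out = {}
        for src_name, value in tracker_frame.items():
            if src_name not in RETARGET_MAP:
                continue  # the avatar rig has no matching shape; ignore this channel
            dst_name, gain = RETARGET_MAP[src_name]
            target = min(max(value * gain, 0.0), 1.0)  # clamp to the rig's valid range
            prev = self._state.get(dst_name, target)
            # Exponential smoothing damps frame-to-frame jitter from camera tracking.
            smoothed = self.smoothing * prev + (1.0 - self.smoothing) * target
            self._state[dst_name] = smoothed
            out[dst_name] = smoothed
        return out
```

Each call to `retarget` takes one tracker frame and returns the weights to apply to the avatar for that frame; channels the rig does not support are simply dropped.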

2. The AI Avatar (The Intelligent Agent)

An AI avatar is a digital character controlled by software rather than a human. In gaming, these are Non-Player Characters (NPCs). In business, they are virtual assistants.

- Current State: Moving from pre-scripted dialogue trees (“Press A to say Hello”) to generative conversations where the avatar generates unique responses based on context.

- AI Role: Handles perception (seeing/hearing the user), cognition (deciding what to say/do), and expression (animating the face and voice).

3. Digital Humans (The Photorealistic Standard)

“Digital Human” refers specifically to the visual fidelity and psychological complexity of the entity. A digital human is designed to look and behave indistinguishably from a real person.

- Use Case: High-end service roles, virtual influencers, and cinema.

- Constraint: They require immense processing power and are often rendered in the cloud rather than on local devices.

The Technology Stack: Anatomy of a Digital Human

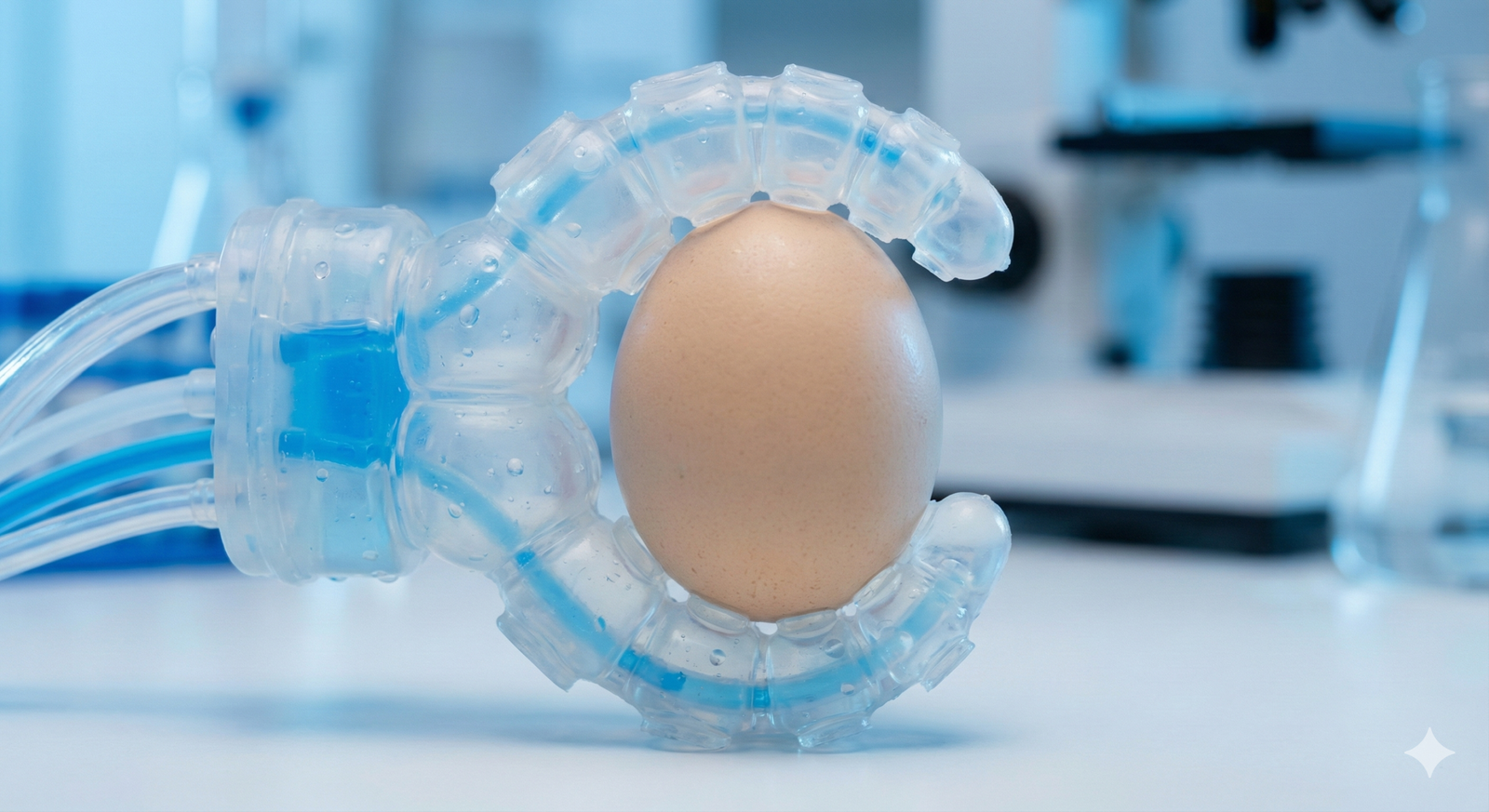

Creating a believable digital human in the metaverse requires a convergence of several cutting-edge technologies. It is not enough to look real; the entity must act real.

The Visual Layer: Rendering and Animation

The “skin” of the avatar relies on advanced 3D engines, primarily Unreal Engine (with its MetaHuman Creator) and Unity.

- Geometry and Textures: Modern pipelines use photogrammetry (scanning real people) or generative 3D modeling to create skin textures with pores and wrinkles, paired with materials that simulate subsurface scattering (how light penetrates and diffuses beneath the skin).

- Rigging: This is the digital skeleton. AI is now automating the rigging process, predicting how skin should stretch and fold when a “bone” moves, saving artists hundreds of hours.

- Neural Rendering: Techniques like NeRFs (Neural Radiance Fields) and Gaussian Splatting are beginning to supplement, and in some cases replace, traditional polygon meshes. Both reconstruct 3D scenes from collections of 2D images; Gaussian Splatting in particular can render photorealistic results at real-time frame rates, which makes it attractive for lifelike avatars.
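To make the rigging bullet concrete: in a typical skinned mesh, each vertex follows a weighted blend of a few bones, a technique called linear blend skinning. The per-vertex weights are the part automated rigging tools try to predict. This sketch assumes each bone’s skinning matrix (current pose times inverse bind pose) has already been computed; the helper names are illustrative.

```python
def mat_vec(m, v):
    """Multiply a 4x4 matrix (list of rows) by a homogeneous 4-vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def translation(tx, ty, tz):
    """Build a 4x4 translation matrix (stands in for a bone's skinning matrix)."""
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


def skin_vertex(rest_pos, influences, skinning_matrices):
    """Linear blend skinning: v' = sum_i w_i * (M_i @ v).

    rest_pos: (x, y, z) of the vertex in the bind pose.
    influences: list of (bone_index, weight) pairs for this vertex.
    skinning_matrices: per-bone 4x4 matrices (current pose x inverse bind pose).
    """
    v = [*rest_pos, 1.0]
    total = sum(w for _, w in influences)  # normalize in case weights don't sum to 1
    out = [0.0, 0.0, 0.0]
    for bone, weight in influences:
        moved = mat_vec(skinning_matrices[bone], v)
        for k in range(3):
            out[k] += (weight / total) * moved[k]
    return tuple(out)


# A vertex weighted equally between an unmoved bone and a bone raised 2 units
# ends up raised 1 unit: the weights decide how the skin stretches.
bones = [translation(0, 0, 0), translation(0, 2, 0)]
print(skin_vertex((1.0, 0.0, 0.0), [(0, 0.5), (1, 0.5)], bones))  # (1.0, 1.0, 0.0)
```

Plain linear blending is known to produce artifacts such as volume loss at sharply bent joints, which is one reason production rigs add corrective shapes on top.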

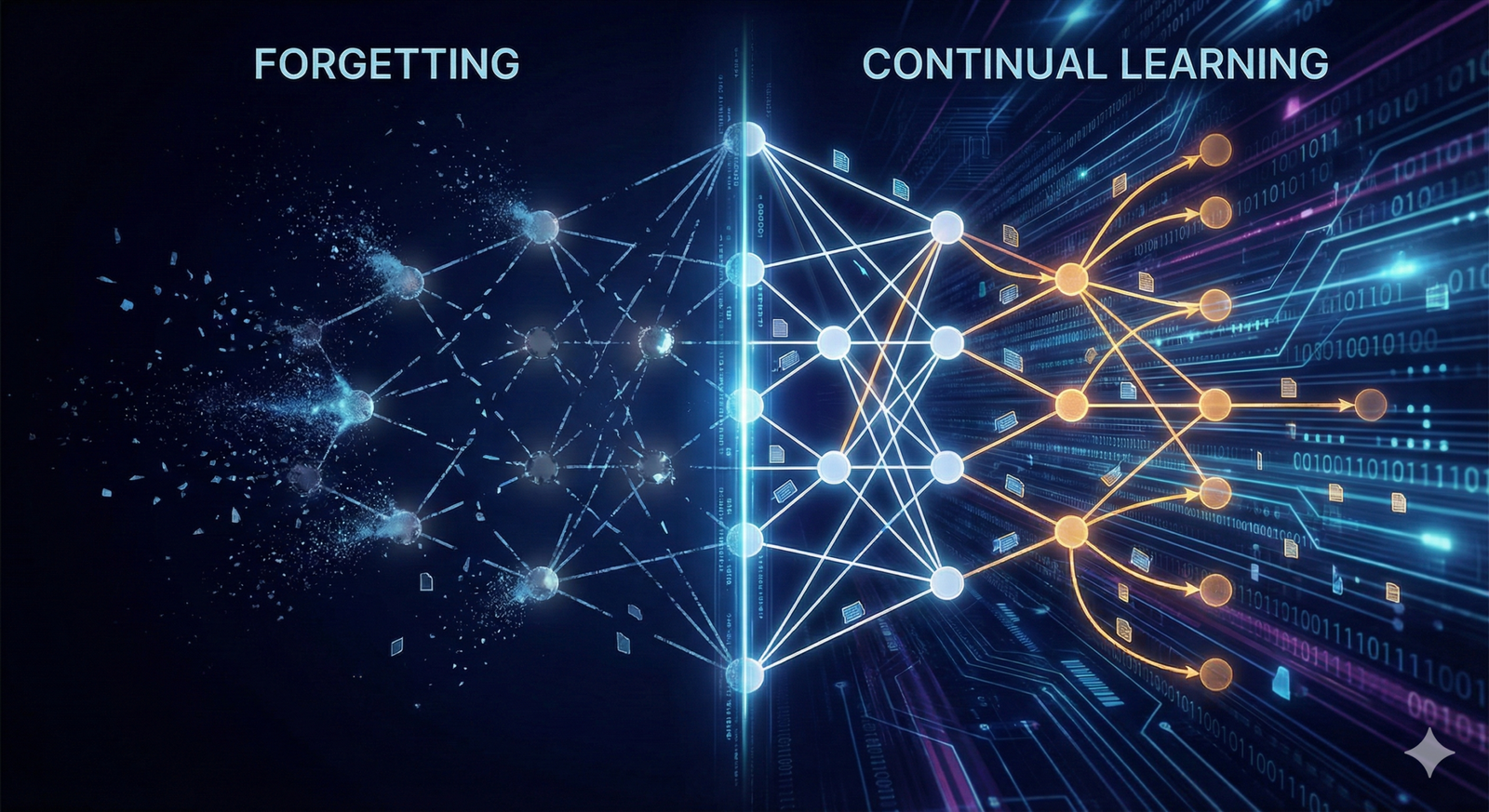

The Cognitive Layer: Large Language Models (LLMs)

The “brain” is what separates modern AI avatars from the chatbots of the past. By integrating LLMs (like GPT-4, Claude, or Llama models) into the avatar’s backend, developers can give the avatar a personality file.

- Context Window: Within a conversation, the avatar can refer back to earlier exchanges held in the model’s context window; storing summaries of past sessions and feeding them back in can extend this into a sense of relationship over time.

- Personality Tuning: Developers define a “system prompt” (e.g., “You are a grumpy medieval shopkeeper”). The LLM is instructed to keep all generated dialogue in this persona, though determined users can sometimes coax it out of character.

- Guardrails: To reduce the risk of the avatar saying offensive things or hallucinating facts, output filters are applied before the text is sent to the speech engine.
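The three bullets above can be sketched as one request pipeline, under several assumptions: the role/content message dictionaries mirror the shape many chat-completion APIs accept, but no model is actually called; `approx_tokens` is a crude word count standing in for a real tokenizer; and the keyword blocklist is a toy stand-in for the moderation classifiers production systems typically use. All names are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Turn:
    role: str  # "user" or "assistant"
    text: str


def approx_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def build_messages(persona: str, history: list, user_input: str, budget: int) -> list:
    """Always keep the persona (system prompt) and the newest user message, then
    fill the remaining token budget with recent history, dropping the oldest first."""
    remaining = budget - approx_tokens(persona) - approx_tokens(user_input)
    kept = []
    for turn in reversed(history):  # walk from newest to oldest
        cost = approx_tokens(turn.text)
        if cost > remaining:
            break
        kept.append({"role": turn.role, "content": turn.text})
        remaining -= cost
    kept.reverse()  # restore chronological order
    return [{"role": "system", "content": persona}, *kept, {"role": "user", "content": user_input}]


# Illustrative blocklist only; real deployments usually rely on a trained moderation model.
BLOCKED = re.compile(r"\b(idiot|stupid)\b", re.IGNORECASE)
FALLBACK = "Hmph. I'll not repeat that. Are you buying or not?"


def guard_output(reply: str) -> str:
    """Screen the model's reply before it is handed to the speech engine."""
    if BLOCKED.search(reply):
        return FALLBACK  # block the reply and substitute an in-character line
    return reply
```

Keeping the system prompt outside the trimming loop is the key design choice: however long the conversation grows, the persona is never the part that falls out of the context window.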

The Expression Layer: Audio-to-Face

Once the brain generates a text response, the avatar must speak it. This involves two steps happening in milliseconds:

- Text-to-Speech (TTS): Neural TTS engines generate a human-sounding voice with appropriate intonation (prosody).

- Lip Sync and Micro-expressions: This is critical. Old games used “flapping jaw” animations. Modern AI analyzes the audio waveform and procedurally animates the mouth, eyes, and eyebrows to match the emotional tone of the voice. If the AI detects a “sad” tone, it might slightly droop the eyelids and corners of the mouth.
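The text describes models that analyze the audio itself. A simpler, widely used alternative, swapped in here for illustration, drives the mouth from phoneme timings, which some TTS engines can output alongside the audio. The sketch below maps ARPAbet-style phoneme labels to a small, hypothetical set of visemes (mouth shapes) and layers an emotion-dependent expression on top. The tables and names are assumptions, not any engine’s actual format.

```python
# Hypothetical phoneme-to-viseme table (ARPAbet-style labels, small subset).
PHONEME_TO_VISEME = {
    "p": "PP", "b": "PP", "m": "PP",  # lips pressed together
    "f": "FF", "v": "FF",             # lower lip against upper teeth
    "aa": "AA", "ae": "AA",           # open jaw
    "iy": "EE", "ih": "EE",           # spread lips
    "uw": "OO", "ow": "OO",           # rounded lips
    "sil": "REST",                    # silence
}

# Hypothetical expression weights layered on top of the mouth animation.
EMOTION_OFFSETS = {
    "sad": {"EyelidDroop": 0.3, "MouthCornerDown": 0.4},
    "happy": {"CheekRaise": 0.3, "MouthCornerUp": 0.4},
}


def build_face_track(phoneme_timings, emotion="neutral"):
    """Convert (phoneme, start_sec, end_sec) tuples into viseme keyframes
    plus a set of expression weights for the detected emotion."""
    keyframes = []
    for phoneme, start, end in phoneme_timings:
        viseme = PHONEME_TO_VISEME.get(phoneme, "REST")  # unknown phonemes fall back to a neutral mouth
        if keyframes and keyframes[-1]["viseme"] == viseme:
            keyframes[-1]["end"] = end  # merge repeats instead of re-triggering the same shape
        else:
            keyframes.append({"viseme": viseme, "start": start, "end": end})
    return {"visemes": keyframes, "expression": dict(EMOTION_OFFSETS.get(emotion, {}))}
```

An animation system would then blend between consecutive viseme keyframes rather than snapping, which is what keeps the mouth from looking like the old “flapping jaw.”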

The Evolution of NPCs: From Scripted Loops to “Smart” Beings

One of the most immediate impacts of AI avatars is in the gaming and entertainment sectors of the metaverse. For decades, NPCs (Non-Player Characters) have been the furniture of video games—static, predictable, and possessing limited loops of dialogue.

The Problem with Traditional NPCs

In a traditional RPG (Role-Playing Game), if you ask a villager about a topic the developers didn’t write a script for, the NPC will simply repeat a default line: “I don’t know about that.” This breaks immersion. It creates a “glass wall” around the player’s freedom.

Generative Agents

Generative AI allows for the creation of “Smart NPCs.” These agents have:

- Goals and Motivations: Instead of a script, the NPC is given a motivation (e.g., “protect the village gate”).

- Observation: The NPC “sees” player actions. If you drop a weapon, the NPC might comment on it or pick it up, not because it was scripted to, but because its logic dictates that weapons are dangerous/valuable.

- Dynamic Dialogue: You can speak to these avatars via microphone, and they respond in character.
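For the Observation bullet, the NPC needs some rule for what it can “see.” One engine-agnostic approach, assumed here (real engines ship their own perception systems), is a view-cone test on positions the game already tracks, with the result turned into a line of text the LLM can read. The field of view, range, and function names are hypothetical.

```python
import math


def can_see(npc_pos, facing_deg, target_pos, fov_deg=120.0, max_range=15.0):
    """Return True if target_pos is inside the NPC's 2D view cone."""
    dx = target_pos[0] - npc_pos[0]
    dy = target_pos[1] - npc_pos[1]
    dist = math.hypot(dx, dy)
    if dist == 0:
        return True  # standing on the same spot
    if dist > max_range:
        return False
    facing = math.radians(facing_deg)
    # Cosine of the angle between the facing direction and the direction to the target.
    cos_angle = (dx * math.cos(facing) + dy * math.sin(facing)) / dist
    return cos_angle >= math.cos(math.radians(fov_deg / 2))


def describe_visible(npc_pos, facing_deg, players):
    """Turn engine positions into a text observation for the NPC's prompt."""
    seen = sorted(name for name, pos in players.items() if can_see(npc_pos, facing_deg, pos))
    return "You can see: " + (", ".join(seen) if seen else "no one") + "."
```

For example, an NPC at the origin facing along the x-axis would receive “You can see: Ava.” when Ava stands 5 units ahead and another player stands 5 units behind it.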

Example Scenario: In a detective metaverse mystery, you interrogate a witness.

- Old Way: Pick from three dialogue options.

- New Way: You ask, “Why were you nervous when the lights went out?” The AI analyzes your question, checks its “memory” of the event, checks its personality (nervous/secretive), and generates a stuttering, evasive answer that was never pre-written by a human writer.
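The “new way” in this scenario amounts to assembling a prompt from the character’s personality, the memories relevant to the question, and the question itself. A minimal sketch, assuming memories are stored as short text entries with hand-assigned keyword tags and an importance score; the scoring rule and every name here are illustrative, and production systems often use embedding similarity instead of keyword overlap.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    tags: set  # keywords this memory is about
    importance: float  # 0..1, how much the event matters to this character


def recall(memories, question, k=2):
    """Rank memories by keyword overlap with the question, boosted by importance."""
    words = {w.strip("?,.!").lower() for w in question.split()}
    scored = [(len(words & m.tags) * (1.0 + m.importance), m) for m in memories]
    scored = [pair for pair in scored if pair[0] > 0]  # drop memories with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [m for _, m in scored[:k]]


def witness_prompt(persona, memories, question):
    """Combine personality, recalled memories, and the question into one prompt."""
    lines = "\n".join(f"- {m.text}" for m in recall(memories, question)) or "- (nothing relevant)"
    return f'{persona}\nWhat you remember:\n{lines}\nStay in character. The detective asks: "{question}"'


# Hypothetical witness data for the detective scenario.
memories = [
    Memory("I was in the pantry when the lights went out.", {"lights", "pantry"}, 0.9),
    Memory("I hid the cellar key under the rug.", {"key", "rug", "cellar"}, 1.0),
    Memory("The soup was cold at dinner.", {"soup", "dinner"}, 0.1),
]
persona = "You are Edna, a nervous, secretive housekeeper. Never admit to hiding anything."
prompt = witness_prompt(persona, memories, "Why were you nervous when the lights went out?")
print(prompt)
```

The LLM never sees the hidden-key memory for this question, so its evasive answer comes from the persona instruction rather than from leaked facts.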

Business Use Cases: The Rise of the Digital Workforce

While gaming is flashy, the economic engine of digital humans lies in enterprise applications. Companies are deploying AI avatars to handle tasks that require a “human touch” but are too expensive to staff with humans 24/7.

1. Customer Experience and Support

Text chatbots are efficient but impersonal. Voice bots (IVR) are often frustrating. A digital human face adds non-verbal communication—a nod of understanding, a smile of greeting—that increases trust and patience.

- Kiosks and Concierges: In virtual metaverse banking branches or digital hotel lobbies, AI avatars can guide users, answer complex account questions, and authorize transactions.

- Emotional De-escalation: Some systems use computer vision to detect if a customer is looking angry (via their webcam or avatar). The AI agent can then adjust its tone to be more soothing and apologetic, a nuance text bots miss.
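A sketch of the de-escalation logic, assuming some vision model has already produced emotion probabilities for the customer. The threshold, the style table, and the `rate`/`pitch_shift` parameters are hypothetical; real TTS engines expose their own controls (for example, through SSML prosody settings).

```python
def choose_voice_style(emotion_scores, threshold=0.6):
    """Pick speaking parameters from an emotion classifier's probabilities.

    emotion_scores: e.g. {"angry": 0.7, "neutral": 0.2, "happy": 0.1}.
    The returned settings are illustrative, not any TTS engine's real parameters.
    """
    detected = max(emotion_scores, key=emotion_scores.get)
    if emotion_scores[detected] < threshold:
        detected = "neutral"  # don't react to low-confidence guesses
    styles = {
        "angry": {"rate": 0.9, "pitch_shift": -1.0, "opener": "I'm sorry about this. Let's fix it together."},
        "sad": {"rate": 0.9, "pitch_shift": -0.5, "opener": "I understand. That sounds frustrating."},
        "neutral": {"rate": 1.0, "pitch_shift": 0.0, "opener": ""},
    }
    # Emotions without a dedicated style (e.g. "happy") use the neutral delivery.
    return {"detected": detected, **styles.get(detected, styles["neutral"])}
```

The confidence threshold matters in practice: apologizing to a customer who was never upset can feel as unnatural as ignoring one who is.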

2. Corporate Training and Soft Skills

Training employees to handle difficult conversations (firing someone, negotiating a deal, handling harassment) is hard to simulate.

- Roleplay Simulations: Employees put on a VR headset and interact with a digital human programmed to be difficult. The AI reacts to the employee’s specific choice of words and body language. This provides a safe sandbox to fail and learn without real-world consequences.

3. Digital Twins for Professionals

Executives and influencers are creating “Digital Twins”—AI copies of themselves.

- Scaling Presence: A CEO cannot attend every meeting in the metaverse. A digital twin, trained on the CEO’s past speeches and decision-making data, could attend low-stakes introductory meetings, present information, and answer basic questions in the CEO’s voice and likeness.

- Fashion and Influencing: Virtual influencers (like Lil Miquela, though initially human-scripted) are moving toward autonomy. Brands can hire a digital human to model clothes in the metaverse 24/7, interacting with thousands of fans simultaneously in a way a human model never could.

4. Healthcare and Therapy

There is a severe shortage of mental health professionals globally. While AI cannot replace a doctor, it can bridge the gap.

- Therapeutic Avatars: Studies have shown that some patients feel more comfortable disclosing embarrassing or traumatic information to a virtual avatar than a real human, as they do not fear judgment. AI avatars can conduct preliminary screenings or cognitive behavioral therapy (CBT) exercises.

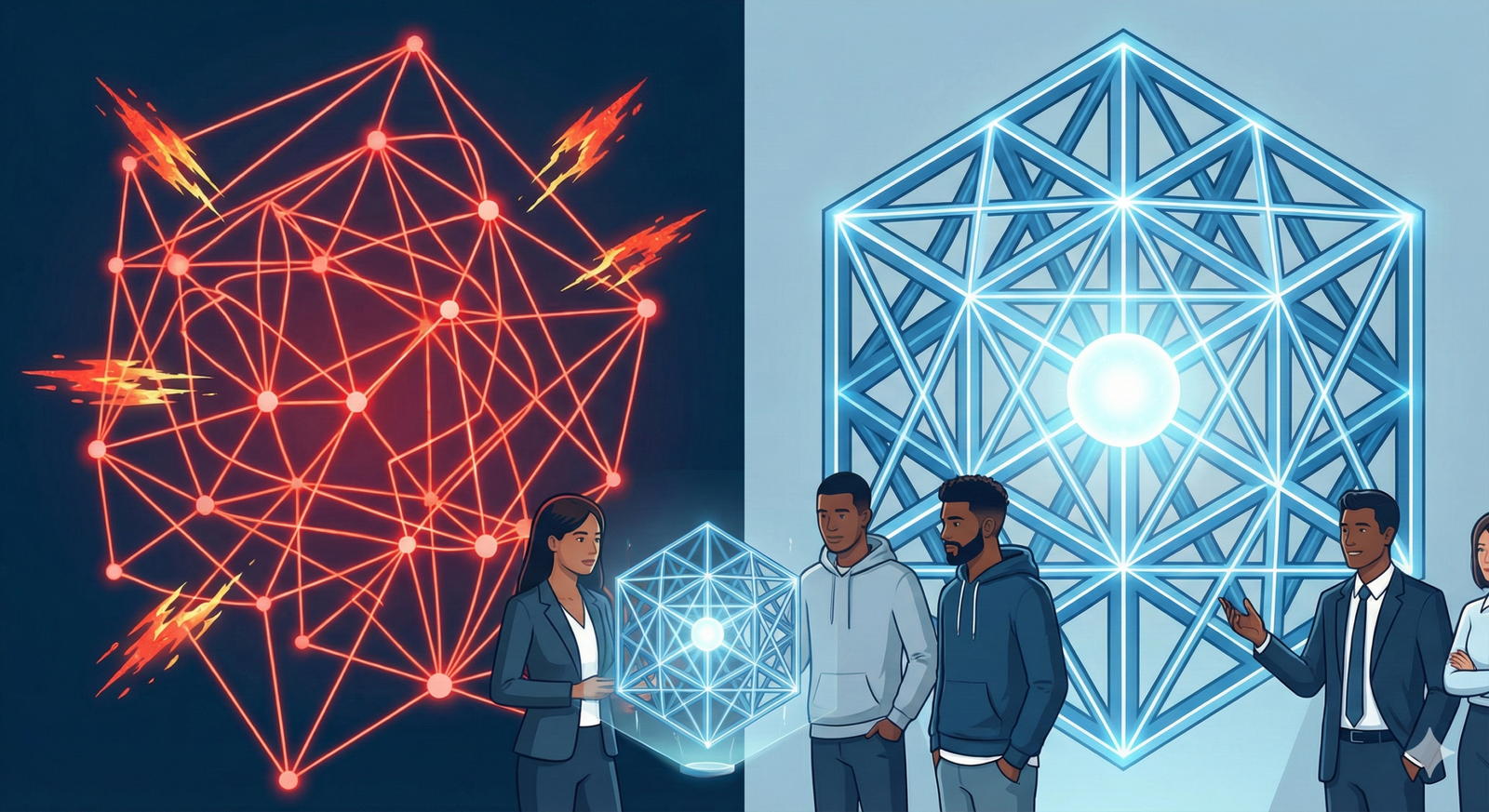

Interoperability: The “Passport” of the Metaverse

For digital humans and avatars to be truly useful, they cannot be trapped in one application (a “walled garden”). Users want to take their identity—their avatar skin, their digital clothing, and their reputation—from a meeting in Microsoft Mesh to a concert in Fortnite.

The Challenge of Standards

Currently, a 3D model built for one engine often breaks in another. The “bones” don’t match, the textures look wrong, or the file format is incompatible.

Emerging Solutions

- VRM and glTF: glTF is the Khronos Group’s general-purpose, royalty-free format for transmitting 3D assets. VRM, which builds on glTF and is popular in the VTuber community, adds standards for humanoid avatars (such as bone mapping and facial expressions) so they can be loaded into any supporting application.

- USD (Universal Scene Description): Developed by Pixar and championed by NVIDIA, this is becoming the HTML of the 3D web, allowing complex 3D data to be moved between tools.

- Blockchain Identity: Web3 technologies propose using NFTs not just as receipts for JPEGs, but as containers for avatar assets. Your wallet becomes your backpack, verifying you own the “skin” you are wearing across different metaverse platforms.
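To make the “bones don’t match” problem concrete: glTF 2.0 stores a skin’s joints as indices into the file’s top-level `nodes` array, so a tool can list the joint names and check them against the bone names a target platform expects. In this sketch the source rig uses a `mixamorig:` naming prefix, the target names follow VRM’s humanoid style (`hips`, `spine`, `head`), and both the mapping table and the required-bone set are a small hypothetical subset; VRM itself defines many more bones and stores its mapping in a glTF extension.

```python
import json

# A minimal glTF 2.0 document: skins[].joints holds indices into "nodes".
GLTF_JSON = """
{
  "asset": {"version": "2.0"},
  "nodes": [
    {"name": "mixamorig:Hips"},
    {"name": "mixamorig:Spine"},
    {"name": "mixamorig:Head"},
    {"name": "Body"}
  ],
  "skins": [{"joints": [0, 1, 2]}]
}
"""

# Hypothetical mapping from the source rig's names to VRM-style humanoid names.
BONE_NAME_MAP = {
    "mixamorig:Hips": "hips",
    "mixamorig:Spine": "spine",
    "mixamorig:Head": "head",
    "mixamorig:LeftHand": "leftHand",
}

# Hypothetical subset of bones the target platform insists on.
REQUIRED_BONES = {"hips", "spine", "head", "leftHand"}


def check_skeleton(gltf_text):
    """List skin joints, map them to target names, and report gaps."""
    doc = json.loads(gltf_text)
    nodes = doc.get("nodes", [])
    joint_names = [
        nodes[i].get("name", f"node{i}")
        for skin in doc.get("skins", [])
        for i in skin["joints"]
    ]
    mapped = {BONE_NAME_MAP[n] for n in joint_names if n in BONE_NAME_MAP}
    return {
        "mapped": mapped,
        "missing": REQUIRED_BONES - mapped,
        "unmapped": [n for n in joint_names if n not in BONE_NAME_MAP],
    }


print(check_skeleton(GLTF_JSON)["missing"])  # {'leftHand'}
```

A report like this is only the first step; even when every name maps, differing rest poses and bone orientations between rigs still have to be reconciled before animations transfer cleanly.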

How to Create Digital Humans: A General Workflow

For creators and businesses looking to enter this space, the barrier to entry has lowered significantly. Here is what the workflow looks like in practice as of the mid-2020s.

Step 1: Generation vs. Scanning

- Scanning: For a “Digital Twin,” you use a rig of DSLR cameras (or even a high-end smartphone with LiDAR) to capture a real person’s geometry.

- Generation: Tools like MetaHuman Creator allow you to sculpt a human from scratch using sliders (DNA blending). Newer generative AI tools allow “Text-to-3D,” where you type “Cyberpunk warrior with a scar” and the AI generates the mesh.

Step 2: Intelligence Integration

Once the mesh exists, it is connected to an API (Application Programming Interface).

- Developers connect the visual front-end to an LLM back-end (like OpenAI’s API or a private Llama instance).

- They define the “knowledge base”—uploading PDFs or manuals that the avatar is allowed to reference.
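The “knowledge base” step is commonly implemented as retrieval: split the documents into chunks, find the chunks most relevant to the user’s question, and place them in the LLM prompt. This sketch uses plain keyword overlap so it runs with the standard library alone; that is a simplification, since production systems typically rank chunks by embedding similarity. Chunk sizes, the stopword list, and the function names are assumptions.

```python
import re
from collections import Counter

# Small illustrative stopword list; real systems use larger lists or none at all.
STOPWORDS = {"a", "an", "the", "is", "are", "to", "of", "and", "how", "do", "i", "my", "what", "on", "your"}


def chunk(text, max_words=40, overlap=10):
    """Split text into overlapping word windows so a fact that straddles a
    boundary still appears whole in at least one chunk."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be non-negative and smaller than max_words")
    words = text.split()
    chunks, start = [], 0
    while True:
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            return chunks
        start += max_words - overlap


def tokenize(text):
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]


def retrieve(chunks, question, k=2):
    """Score each chunk by how often it contains the question's keywords."""
    q_terms = set(tokenize(question))
    scored = []
    for c in chunks:
        counts = Counter(tokenize(c))
        score = sum(counts[t] for t in q_terms)
        if score:
            scored.append((score, c))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:k]]
```

The retrieved chunks are then inserted into the prompt with an instruction to answer only from them, which is how the avatar is kept to the material it “is allowed to reference.”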

Step 3: Deployment

The avatar is deployed via a cloud streaming service (Pixel Streaming) if high fidelity is required, or optimized (decimated) to run locally on mobile devices or VR headsets.
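The choice in Step 3 can be expressed as a small decision rule. This sketch assumes each target device has a per-avatar triangle budget and that decimating below a fixed fraction of the original mesh (10% here) would damage the likeness too much; both numbers and all names are hypothetical, and real projects would also weigh latency, bandwidth, and streaming cost.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    triangle_budget: int  # hypothetical per-avatar triangle budget
    has_fast_network: bool


def plan_deployment(avatar_triangles, device, min_keep_ratio=0.1):
    """Decide between cloud streaming and local rendering with decimation."""
    if avatar_triangles <= device.triangle_budget:
        return {"mode": "local", "decimate_ratio": 1.0}  # fits as-is
    ratio = device.triangle_budget / avatar_triangles
    if ratio < min_keep_ratio and device.has_fast_network:
        # Decimating this aggressively would wreck the likeness; stream instead.
        return {"mode": "cloud_stream", "decimate_ratio": 1.0}
    # Otherwise (or with no fast network as a fallback) simplify the mesh to fit the budget.
    return {"mode": "local", "decimate_ratio": round(ratio, 3)}
```

For instance, a one-million-triangle digital human aimed at a headset with a 50,000-triangle budget would be streamed, while a 200,000-triangle avatar on the same kind of budget would be decimated to a quarter of its size.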

Ethical Considerations and Safety Guardrails

The ability to create realistic, persuasive digital humans brings distinct ethical perils. As we humanize technology, we must ensure it remains humane.

1. The Deepfake Dilemma

If creating a digital human becomes easy, creating a fake version of a politician, CEO, or ex-partner also becomes easy.

- Identity Theft: In the metaverse, “identity theft” could mean someone wearing your face and voice, committing actions that ruin your reputation.

- Verification: We likely need cryptographic provenance standards (like C2PA, which attaches digitally signed metadata to media) to verify that an avatar is authorized by the human it depicts.
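The verification idea can be illustrated with a signed manifest: a record stating whose likeness an avatar depicts plus a hash of the avatar files, with a signature that fails to verify if either is altered. C2PA itself uses certificate-based public-key signatures; this sketch substitutes a shared-secret HMAC from Python’s standard library purely to show the tamper-detection logic. The manifest fields and key are hypothetical.

```python
import hashlib
import hmac
import json


def make_manifest(avatar_bytes: bytes, subject: str) -> dict:
    """Record whose likeness this is and a fingerprint of the avatar files."""
    return {"subject": subject, "asset_sha256": hashlib.sha256(avatar_bytes).hexdigest()}


def sign_manifest(manifest: dict, key: bytes) -> str:
    # Canonical JSON (sorted keys) so the same manifest always signs identically.
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_avatar(avatar_bytes: bytes, manifest: dict, signature: str, key: bytes) -> bool:
    if hashlib.sha256(avatar_bytes).hexdigest() != manifest["asset_sha256"]:
        return False  # the avatar files were altered after signing
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(sign_manifest(manifest, key), signature)
```

With a shared secret, anyone able to verify could also forge signatures; public-key schemes like the one C2PA uses avoid this by letting platforms verify with a public certificate while only the rights holder can sign.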

2. The Uncanny Valley and Manipulation

Highly realistic avatars can be persuasive.

- Emotional Manipulation: An AI avatar that knows exactly how to smile and use your name might manipulate you into buying products or agreeing to terms you normally wouldn’t. This is “super-persuasion.”

- Vulnerable Populations: Children or the elderly may have trouble distinguishing between a real human and an empathetic AI, leading to one-sided emotional attachments (parasocial relationships) that platform owners could exploit.

3. Representation and Bias

If the generative tools used to create avatars are trained on biased data, the resulting “default” digital humans will reflect those biases.

- Diversity: Developers must ensure avatar creation tools allow for a full spectrum of ethnic features, body types, and disabilities, preventing the metaverse from becoming a homogenized space.

4. The Proteus Effect

This psychological phenomenon suggests that the appearance of our avatar changes how we behave.

- Users given taller avatars tend to negotiate more aggressively, and users given more attractive avatars tend to stand closer to others and disclose more about themselves.

- Users wearing “inventor” avatars tend to brainstorm more creatively.

- Risk: If we spend too much time as idealized digital selves, it may lead to body dysmorphia or dissatisfaction with our physical reality.

Future Outlook: The Era of Agentic AI

We are currently in the transition phase. We have the graphics (Unreal Engine 5) and we have the chat capabilities (LLMs). The next phase is Agency.

Future digital humans will not just stand in a virtual lobby waiting for you to talk to them. They will have:

- Long-term Memory: Remembering you from a conversation three months ago.

- Autonomy: Performing tasks while you are offline. You might send your digital agent to scout virtual real estate locations, negotiate a price, and report back to you.

- Multi-modal Sensing: They will “see” via your camera and “hear” via your mic, understanding the physical context of your room (e.g., “I see you’re drinking coffee, do you want a morning briefing?”).

The Convergence

Eventually, the line between an operating system and a digital human will blur. We won’t click icons; we will ask our AI companion to “organize my files.” The metaverse interface will not be a menu; it will be a face.

Conclusion

AI avatars and digital humans represent the interface of the future. They humanize the abstract complexity of the internet, providing us with guides, companions, and new forms of self-expression. However, this power demands responsibility. As we populate the metaverse with synthetic beings, we must prioritize consent, authenticity, and user safety.

For businesses, the time to experiment is now—starting with controlled use cases like customer service or internal training. For individuals, the future holds a fluidity of identity we have never experienced before. We are building a world where who we are is limited only by our imagination, and the software we choose to run.

Next Steps for Engagement

If you are interested in exploring this space:

- For Creators: Experiment with Unreal Engine’s MetaHuman (free to use) to understand the rigging and fidelity possibilities.

- For Developers: Look into Inworld AI or Convai, platforms specifically designed to give brains to NPCs.

- For Business Leaders: Audit your customer service workflows to identify high-volume, repetitive interactions that could be humanized by a digital agent.

Frequently Asked Questions (FAQs)

1. What is the difference between an avatar and a digital human? An avatar usually refers to a user-controlled representation, often stylized or gamified. A digital human is an AI-driven, photorealistic entity that mimics human behavior, appearance, and psychology, often functioning autonomously as an assistant or NPC.

2. Can AI avatars work in VR headsets? Yes, but with limitations. Highly photorealistic digital humans require immense processing power, often too much for standalone VR headsets. They are usually rendered in the cloud and streamed to the headset (Pixel Streaming), or simplified (decimated) to run locally.

3. Are digital humans expensive to create? The cost has dropped dramatically. While Hollywood-grade digital doubles used to cost hundreds of thousands of dollars, tools like MetaHuman and generative AI allow creators to build high-fidelity characters in minutes for free or low subscription costs. The cost is now mostly in the AI running costs (tokens) and cloud rendering.

4. How do AI avatars “hear” and “see” inside the metaverse? They use APIs. For hearing, they use Speech-to-Text (Whisper, Google STT) to convert your voice to text for the LLM. For seeing, they access game engine data (knowing where players are standing) or use computer vision to interpret visual inputs.

5. Is it safe to share personal data with an AI avatar? You should treat an AI avatar like a web form. Behind the face is a database. If the avatar is provided by a reputable company (like a bank), it is generally as safe as their banking app. However, be cautious with unknown avatars in open metaverse spaces, as they could be recording data.

6. Can I make money with a digital human? Yes. The “Virtual Influencer” economy is growing. Creators build digital personas, grow social media followings, and secure brand deals. Additionally, businesses license digital humans for customer support or training videos.

7. What prevents an AI avatar from saying offensive things? Developers use “guardrails.” This is a layer of software that intercepts the AI’s response before it is spoken. It checks for toxicity, hate speech, or off-topic content. If flagged, the response is blocked or rewritten.

8. Will AI avatars replace human actors? This is a major point of contention (e.g., the SAG-AFTRA strikes). While AI avatars handle background roles or digital crowds efficiently, the consensus is that they currently lack the subtle emotional depth and artistic choice of human actors for lead roles, though this gap is narrowing.

9. Can I take my avatar from Fortnite to Roblox? Not directly yet. Each platform uses different file formats, art styles, and rigging systems. “Interoperability” is a major technical goal, but currently, you usually need a separate version of your avatar for each platform.

10. What is the “Uncanny Valley”? The Uncanny Valley is a feeling of unease or revulsion people feel when a robot or avatar looks almost human but not quite perfect (e.g., dead eyes or stiff movement). Developers aim to either stay stylized (cartoony) or jump over the valley to perfect realism to avoid this effect.

References

- Unreal Engine. (2024). MetaHuman Creator Documentation and Features. Epic Games. https://www.unrealengine.com/en-US/metahuman

- NVIDIA. (2023). NVIDIA ACE (Avatar Cloud Engine) for Games. NVIDIA Developer. https://developer.nvidia.com/ace

- Bailenson, J. (2018). Experience on Demand: What Virtual Reality Is, How It Works, and What It Can Do. W. W. Norton & Company. (Source for Proteus Effect).

- Inworld AI. (2024). The Future of NPCs: Generative AI in Gaming. Inworld. https://inworld.ai

- Khronos Group. (2022). glTF and VRM Standards for 3D Interoperability. https://www.khronos.org/gltf/

- Microsoft. (2023). Responsible AI Standard v2: General Requirements. Microsoft AI Principles. https://www.microsoft.com/en-us/ai/responsible-ai

- Unity Technologies. (2024). AI and Machine Learning in Unity: Sentis and Muse. https://unity.com/ai

- Adobe. (2024). Content Authenticity Initiative (C2PA) Technical Specifications. https://contentauthenticity.org/

- Seymour, M., et al. (2021). The Face of Artificial Intelligence: The Role of Digital Humans in Business. Journal of Business Research.

- Ready Player Me. (2024). Cross-game Avatar Platform Documentation. https://readyplayer.me/