The concept of the “metaverse”—a persistent, shared, 3D digital universe—has evolved rapidly from science fiction to a tangible technological frontier. However, a significant bottleneck has always plagued its development: the sheer difficulty of building it. Traditionally, creating high-fidelity virtual environments required armies of 3D artists, animators, and coders working for years to manually place every polygon and texture.

Enter AI-generated virtual worlds.

Generative AI has fundamentally shifted the equation. Instead of manually sculpting every tree, building, and non-player character (NPC), creators can now use intelligent algorithms to generate massive, intricate environments in a fraction of the time. From text-to-3D asset creation to Large Language Models (LLMs) that power the minds of virtual inhabitants, AI is not just a tool for the metaverse; it is the engine that will likely build it.

In this guide, “AI-generated virtual worlds” refers to 3D environments where significant portions of the geometry, textures, logic, or narrative are created via generative artificial intelligence, rather than manual human labor.

Key Takeaways

- Scale and Speed: AI makes it practical to build vast, persistent worlds that would take far too long to assemble by hand.

- Democratization: Tools like text-to-3D prompts enable non-coders to become world-builders.

- Dynamic Interactivity: AI-driven NPCs offer unscripted, organic conversations, replacing static dialogue trees.

- Cost Efficiency: Automating asset creation drastically reduces the budget required for game development and industrial simulations.

- Digital Twins: Beyond gaming, AI virtual worlds are revolutionizing industries through precise simulations of factories and cities.

Who this is for (and who it isn’t)

- This guide is for: Game developers, spatial computing enthusiasts, digital creators, business strategists looking at “digital twins,” and educators interested in immersive learning.

- This guide isn’t for: Readers looking for cryptocurrency investment advice or specific tutorials on trading NFTs within virtual land platforms.

What Are AI-Generated Virtual Worlds?

To understand AI-generated virtual worlds, we must distinguish them from traditional methods of digital creation.

The Shift from Procedural to Generative

For decades, games have relied on procedural generation, with Minecraft and No Man’s Sky among the best-known examples. This approach uses strict, rule-based algorithms (mathematical formulas driven by a random seed) to generate terrain and arrange pre-made assets. While effective for scale, the results are often repetitive or “samey.”
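
To make the rule-based approach concrete, here is a minimal sketch of seeded procedural placement. The tile names, thresholds, and the `generate_map` function are illustrative assumptions, not taken from any particular game.

```python
import random

# Hypothetical rules: (upper threshold, pre-made tile to place).
RULES = [(0.6, "grass"), (0.8, "tree"), (0.9, "rock"), (1.0, "water")]
TILES = [tile for _, tile in RULES]


def generate_map(seed: int, width: int = 8, height: int = 4) -> list[list[str]]:
    """Fill a grid by applying fixed rules to seeded random numbers."""
    rng = random.Random(seed)  # same seed -> same world, every time
    grid = []
    for _ in range(height):
        row = []
        for _ in range(width):
            roll = rng.random()  # value in [0.0, 1.0)
            # The first rule whose threshold exceeds the roll wins.
            for threshold, tile in RULES:
                if roll < threshold:
                    row.append(tile)
                    break
        grid.append(row)
    return grid


if __name__ == "__main__":
    for row in generate_map(seed=42):
        print(" ".join(tile[0] for tile in row))
```

The generator can only ever place the four tiles it was given, which is why purely rule-based worlds tend to feel repetitive at scale.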

Generative AI differs because it relies on machine learning models trained on vast datasets of images, 3D models, and code. It doesn’t just arrange existing blocks; it synthesizes new content. It understands context, style, and semantics. If you ask a generative AI for a “weather-beaten cyberpunk noodle shop,” it creates the geometry and textures based on its “understanding” of those concepts, resulting in a unique asset rather than a rearrangement of generic “building” blocks.

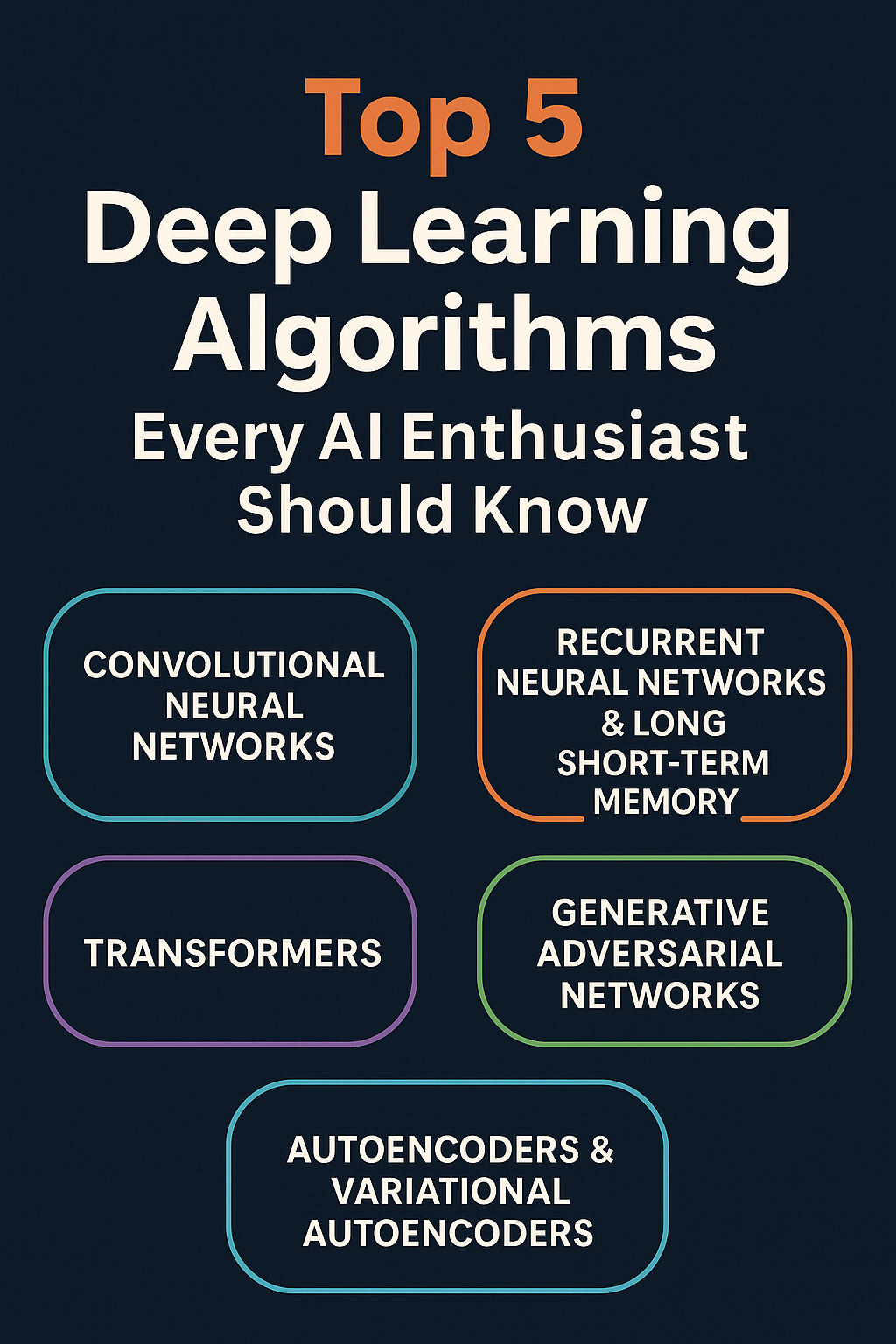

The Three Pillars of Generation

AI contributes to virtual worlds in three specific dimensions:

- Asset Generation: Creating the static objects (cars, trees, buildings).

- Environment Generation: Assembling those assets into a coherent layout (terrain, weather, lighting).

- Behavioral Generation: Driving the logic, physics, and NPC interactions that make the world feel alive.

The Engine Room: How Generative AI Builds 3D Spaces

Understanding the mechanism behind these worlds helps demystify the “magic.” Currently, several technologies converge to make this possible.

Text-to-3D and Image-to-3D

Just as tools like Midjourney transformed 2D art, new models are transforming 3D modeling.

- NeRFs (Neural Radiance Fields): This technique trains a neural network to synthesize novel views of a complex 3D scene from a set of 2D images. Given enough photos of an object, it reconstructs a volumetric representation that can be rendered from any angle.

- Gaussian Splatting: A newer rendering technique that represents scenes as millions of 3D “splats” (ellipsoids). It is faster to render than NeRFs and is becoming a standard for capturing real-world environments into virtual ones.

- Vector and Mesh Generation: AI models are now capable of outputting clean “topology”—the wireframe mesh that 3D engines need. Early AI models produced “messy” geometry that broke game engines; modern tools are learning to output optimized meshes ready for animation.
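
As a rough illustration of how a radiance field turns into a pixel, the sketch below composites samples along a single camera ray using the discrete volume rendering rule described in the NeRF paper (opacity `1 - exp(-sigma * delta)` per segment, blended front to back). The densities and colors are hard-coded here; in a real system they would come from a trained network, and the function name `composite_ray` is an assumption for this example.

```python
import math


def composite_ray(densities, colors, step):
    """Blend samples along one ray, front to back.

    densities: volume density (sigma) at each sample point
    colors:    (r, g, b) at each sample point
    step:      distance between neighbouring samples (delta)
    """
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for sigma, color in zip(densities, colors):
        alpha = 1.0 - math.exp(-sigma * step)  # opacity of this segment
        weight = transmittance * alpha
        for channel in range(3):
            pixel[channel] += weight * color[channel]
        transmittance *= 1.0 - alpha
    return tuple(pixel)
```

Empty space (zero density) contributes nothing, and a fully opaque sample hides everything behind it, which is what lets the model represent solid surfaces without an explicit mesh.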

Neural Physics and Rendering

Traditionally, light and physics in video games are approximations, “faked” to look real. AI is introducing neural rendering, where deep learning models predict how light should bounce off a surface in real time, with the aim of achieving photorealism at lower computational cost than traditional ray tracing.

Similarly, AI handles physics simulation. Instead of manually coding the breaking point of a wooden bridge, AI physics engines can predict how materials fracture based on training data from real-world physics, making destruction and interaction in the metaverse feel visceral and unpredictable.

Breathing Life into the Void: AI for Dynamic Content

A beautiful world is useless if it is empty. This is where AI moves from being a “carpenter” to a “director.”

The Rise of Intelligent NPCs

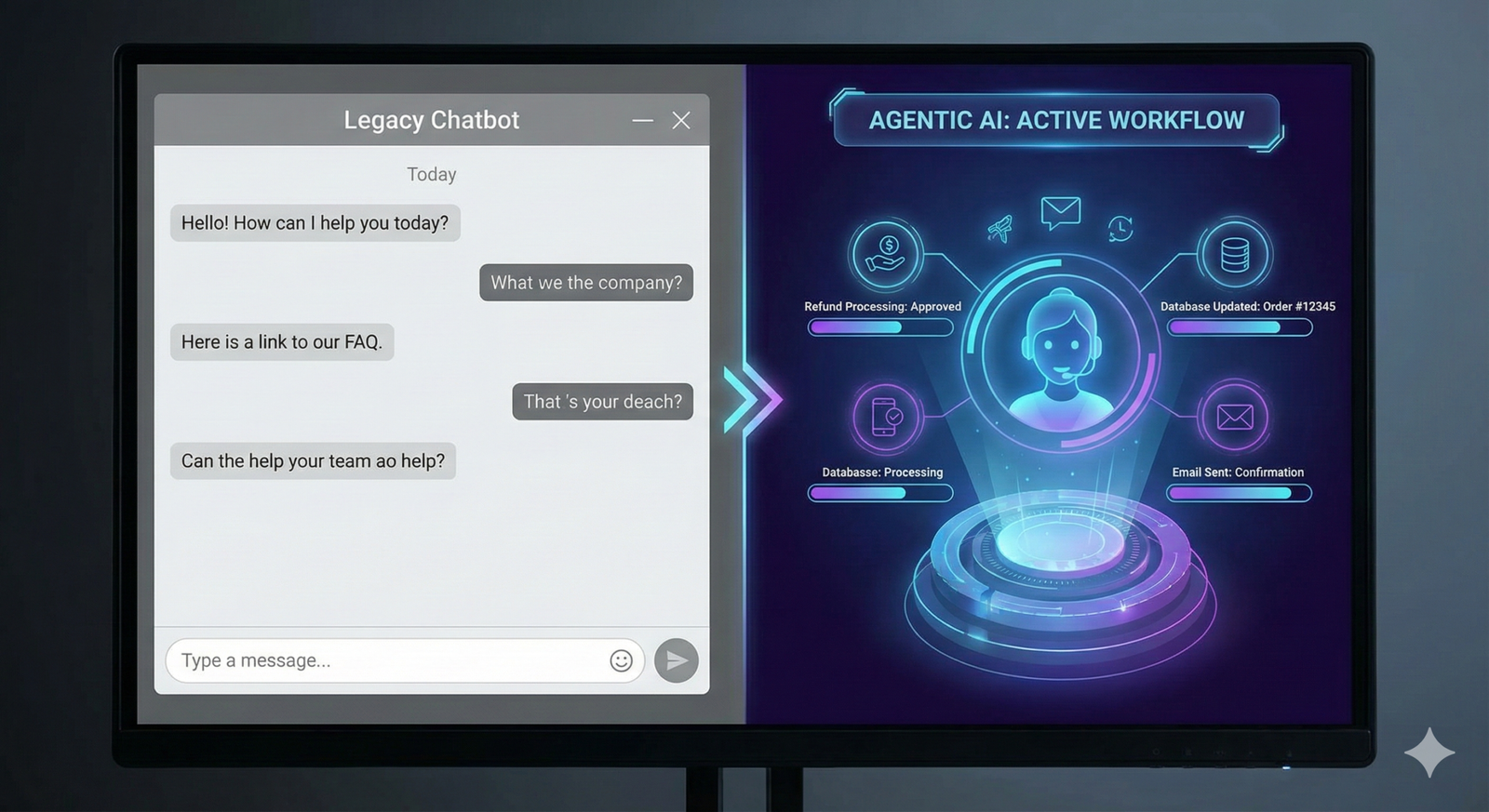

For forty years, Non-Player Characters (NPCs) have been restricted to “decision trees.” If you asked an NPC a question they weren’t programmed to answer, they would repeat a generic line.

With the integration of Large Language Models (LLMs) into game engines:

- Contextual Awareness: NPCs remember past interactions. If you insulted a shopkeeper yesterday, they might refuse to sell to you today.

- Goal-Oriented Behavior: AI agents can be given a motivation (e.g., “protect the village”) and will autonomously decide how to achieve it based on player actions.

- Infinite Dialogue: Scriptwriters no longer need to write every line. They write the character bio and history, and the AI improvises the dialogue in character.
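
The three ideas above (memory, goals, and improvised dialogue) can be sketched as a small prompt-assembly loop. Everything here is hypothetical: the `Npc` class, its fields, and `fake_llm`, which stands in for a real model call so the example runs without any external service.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Npc:
    name: str
    bio: str    # written by the scriptwriter
    goal: str   # motivation the agent should pursue
    memory: list[str] = field(default_factory=list)

    def build_prompt(self, player_line: str, max_memories: int = 5) -> str:
        recent = self.memory[-max_memories:]
        history = "\n".join(f"- {m}" for m in recent) or "- (nothing yet)"
        return (
            f"You are {self.name}. {self.bio}\n"
            f"Your goal: {self.goal}\n"
            f"What you remember about this player:\n{history}\n"
            f"The player says: {player_line}\n"
            f"Reply in character."
        )

    def talk(self, player_line: str, llm: Callable[[str], str]) -> str:
        reply = llm(self.build_prompt(player_line))
        # Store the exchange so later conversations can refer back to it.
        self.memory.append(f"Player said: {player_line!r}; I replied: {reply!r}")
        return reply


def fake_llm(prompt: str) -> str:
    """Placeholder for a real model call (assumption: text in, text out)."""
    if "insulted" in prompt:
        return "Not after what you said yesterday."
    return "Welcome!"
```

The scriptwriter supplies only `bio` and `goal`; the remembered history is what lets yesterday's insult change today's answer.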

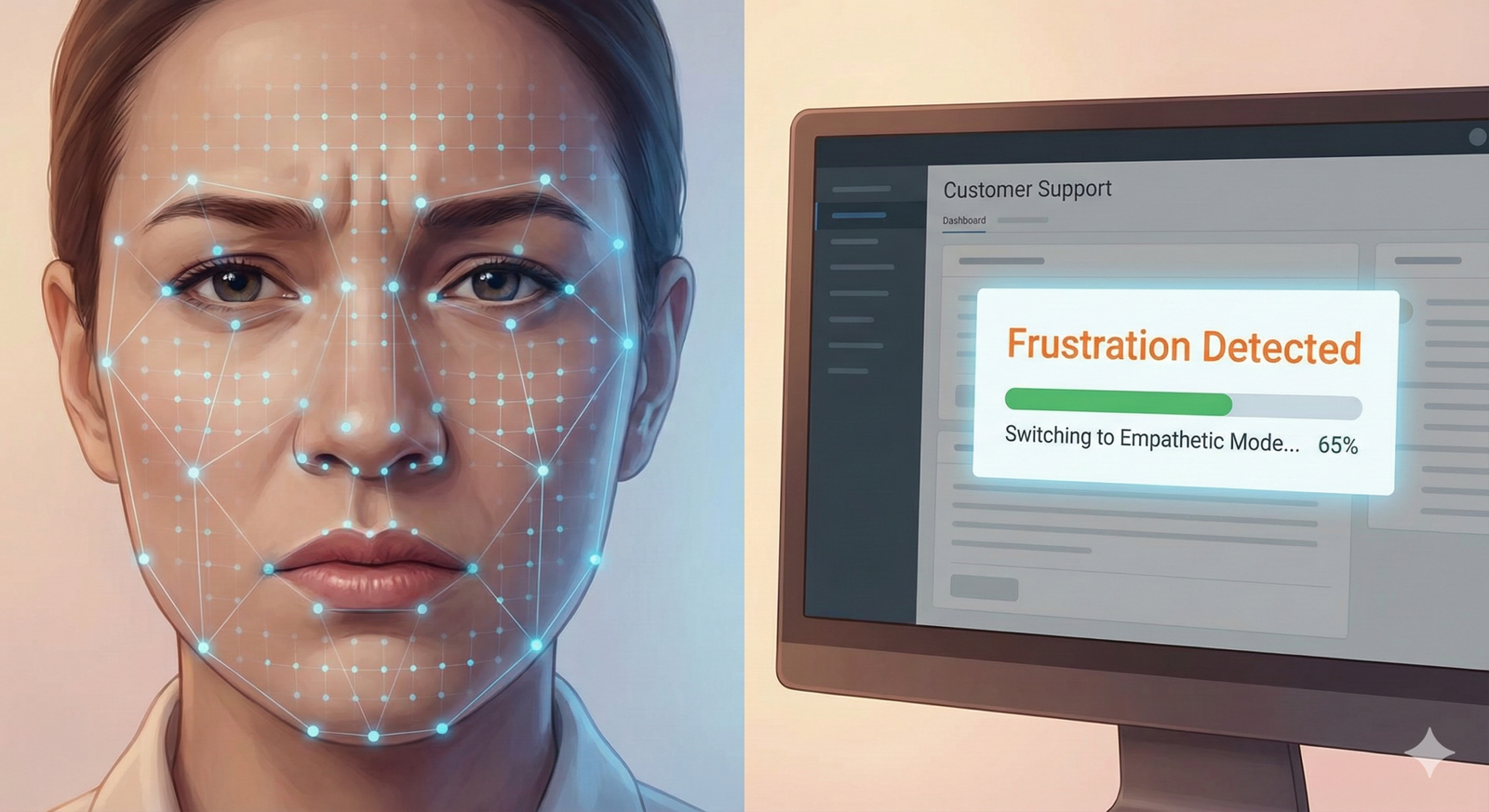

Adaptive Narratives

AI serves as a “Game Master.” It monitors player engagement. If a player looks bored, the AI director can dynamically spawn an event, change the weather, or adjust the difficulty. This creates a virtual world that personalizes itself to the user’s emotional state and skill level in real-time.
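
A very small sketch of such a director is shown here. It uses two crude signals and hand-picked thresholds, all of which are assumptions for illustration; a production system would likely draw on richer telemetry or a learned model.

```python
from dataclasses import dataclass


@dataclass
class PlayerState:
    seconds_since_last_action: float
    recent_deaths: int


def pick_intervention(state: PlayerState) -> str:
    """Map crude engagement signals to a director action."""
    if state.recent_deaths >= 3:
        return "lower_difficulty"   # player is likely frustrated
    if state.seconds_since_last_action > 60:
        return "spawn_event"        # player is likely bored or idle
    if state.recent_deaths == 0 and state.seconds_since_last_action < 5:
        return "raise_difficulty"   # player is cruising
    return "no_change"
```

Checking frustration before boredom is a deliberate ordering: a player who keeps dying and then goes idle probably needs an easier encounter, not a new one.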

Key Components of the AI-Metaverse Stack

If you are looking to build or invest in this space, you need to recognize the infrastructure layers.

1. The Foundation Models

These are the large AI models trained on 3D data. Companies like NVIDIA, OpenAI, and specialized startups train these models on very large collections of 3D objects to “teach” the AI what a table, a dragon, or a spaceship looks like from every angle.

2. The Orchestration Layer

This layer connects the AI to the virtual world engine. It translates the AI’s creative output into a format the engine understands (e.g., USD – Universal Scene Description).

- Example: NVIDIA Omniverse acts as a hub, allowing assets generated by different AI tools to coexist in a single, physically accurate simulation.
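
To give a feel for the translation step, the sketch below serializes a list of generated assets into a minimal text layer modelled on USD's human-readable `.usda` form. The `to_usda` helper and the asset dictionary layout are assumptions for this example, and the output has not been validated against a USD runtime; a real pipeline would normally use the official USD libraries instead of string building.

```python
def to_usda(root: str, assets: list[dict]) -> str:
    """Serialise generated assets as a minimal USD-style text layer."""
    lines = [
        "#usda 1.0",
        "(",
        f'    defaultPrim = "{root}"',
        ")",
        "",
        f'def Xform "{root}"',
        "{",
    ]
    for asset in assets:
        x, y, z = asset["position"]
        lines += [
            f'    def Xform "{asset["name"]}"',
            "    {",
            f"        double3 xformOp:translate = ({x}, {y}, {z})",
            '        uniform token[] xformOpOrder = ["xformOp:translate"]',
            "",
            f'        def {asset["shape"]} "geo"',
            "        {",
            "        }",
            "    }",
        ]
    lines.append("}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(to_usda("World", [{"name": "Crate", "shape": "Cube", "position": (1, 0, 2)}]))
```

The point is the shape of the job: whatever tool produced the asset, the orchestration layer reduces it to a named, positioned entry in one shared scene description.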

3. The User Interface (Prompt Interfaces)

This is how creators talk to the machine. We are moving away from complex coding interfaces to natural language interfaces.

- Example: “Create a forest biome with bioluminescent flora and a heavy fog.” The interface parses this, calls the asset generators, places the terrain, and adjusts the lighting settings automatically.
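
The parsing step in that example can be sketched with plain keyword matching. This is a deliberately simplified stand-in: real prompt interfaces would more likely use a language model to extract the scene specification, and the vocabulary, field names, and `parse_prompt` function here are all hypothetical.

```python
import re

# Hypothetical vocabulary the interface knows how to act on.
BIOMES = {"forest", "desert", "tundra"}
FOG_LEVELS = {"light": 0.2, "heavy": 0.8}


def parse_prompt(prompt: str) -> dict:
    """Turn a natural-language request into a structured scene spec."""
    words = re.findall(r"[a-z]+", prompt.lower())
    spec = {"biome": None, "flora_tags": [], "fog_density": 0.0}
    for i, word in enumerate(words):
        previous = words[i - 1] if i > 0 else ""
        if word in BIOMES:
            spec["biome"] = word
        elif word == "bioluminescent":
            spec["flora_tags"].append("emissive")
        elif word == "fog":
            # An unrecognised or missing modifier falls back to medium fog.
            spec["fog_density"] = FOG_LEVELS.get(previous, 0.5)
    return spec
```

Downstream systems (asset generators, terrain placement, lighting) would then each read the fields of the spec that concern them.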

Use Cases Beyond Gaming

While gaming is the most visible frontier, the economic impact of AI-generated worlds is likely higher in industrial and social sectors.

Industrial Digital Twins

A digital twin is a virtual replica of a physical object or system.

- Manufacturing: Automakers like BMW use AI-generated worlds to simulate factory floors. They can reconfigure the assembly line in the virtual world to test efficiency before moving a single physical machine.

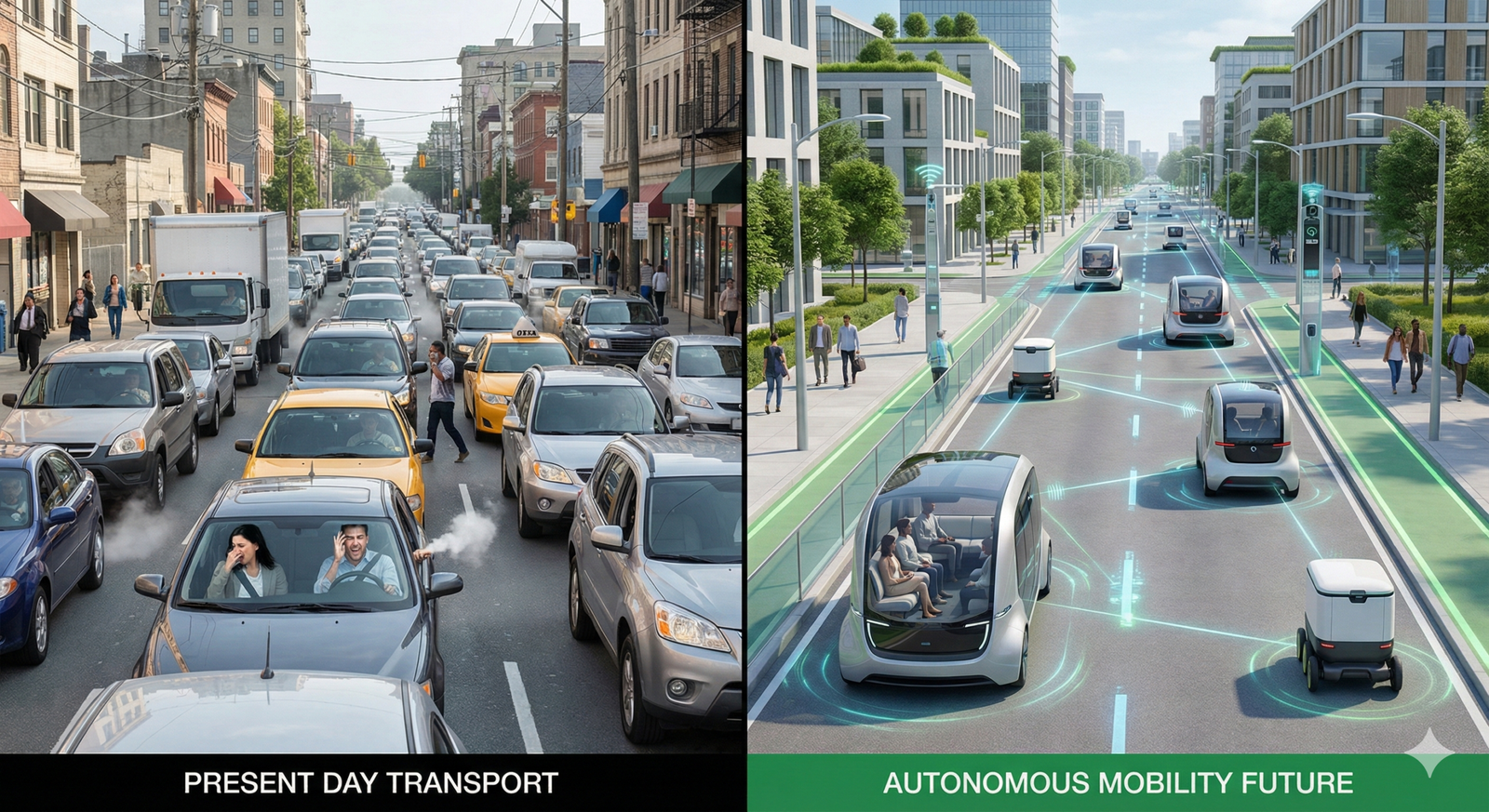

- Urban Planning: Cities generate virtual replicas to model traffic flow or disaster response. AI populates these cities with millions of “virtual agents” simulating real human behavior to test how a new subway line might affect commute times.
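
The "test before you move a machine" idea can be shown with a toy model. The sketch assumes a serial assembly line in steady state, where the slowest station sets the pace; the station times and layout names are made up for illustration, and a real digital twin would simulate far more (buffers, breakdowns, worker movement).

```python
def line_throughput(station_seconds: list[float]) -> float:
    """Units per hour for a serial line; the slowest station sets the pace."""
    return 3600.0 / max(station_seconds)


def best_layout(layouts: dict[str, list[float]]) -> str:
    """Name of the candidate layout with the highest throughput."""
    return max(layouts, key=lambda name: line_throughput(layouts[name]))


if __name__ == "__main__":
    candidates = {
        "current": [30.0, 60.0, 45.0],
        "split_welding": [30.0, 40.0, 45.0, 40.0],  # hypothetical rearrangement
    }
    for name, times in candidates.items():
        print(name, line_throughput(times))
    print("best:", best_layout(candidates))
```

Even this crude comparison shows why virtual reconfiguration is attractive: splitting the 60-second bottleneck raises output from 60 to 80 units per hour without touching the physical floor.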

Immersive Education and Training

AI can generate historical recreations on the fly. A history class could virtually visit Ancient Rome, where the environment is historically accurate (based on AI synthesis of archaeological data) and the citizens speak and act according to historical records.

- Medical Training: AI generates infinite variations of virtual surgeries, introducing complications (like unexpected bleeding) to test the surgeon’s adaptability, something static simulations cannot do.

Virtual Real Estate and Architecture

Architects use generative design to explore thousands of building configurations. AI can “grow” a building design that maximizes natural light and structural integrity while minimizing material use, allowing clients to walk through the generated structure in VR before approval.
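
In its simplest form, this kind of exploration is a search over candidate designs scored by an objective. The sketch below uses seeded random search and a toy objective (facade area as a proxy for daylight, slab volume as a proxy for material). The objective, the size limits, and the function names are assumptions chosen for illustration, not an architectural method.

```python
import random


def score(width: float, depth: float, floors: int) -> float:
    """Toy objective: reward facade area (daylight), penalise material."""
    height = floors * 3.0
    facade = 2 * (width + depth) * height   # proxy for natural light
    material = width * depth * floors       # proxy for slab material
    return facade / material


def search(required_floor_area: float, trials: int = 2000, seed: int = 0):
    """Return the best (score, width, depth, floors) found, or None."""
    rng = random.Random(seed)
    best = None
    for _ in range(trials):
        floors = rng.randint(1, 20)
        width = rng.uniform(5.0, 50.0)
        depth = required_floor_area / (floors * width)  # area constraint
        if not 5.0 <= depth <= 50.0:
            continue  # reject layouts outside buildable limits
        candidate = (score(width, depth, floors), width, depth, floors)
        if best is None or candidate > best:
            best = candidate
    return best
```

Real generative design tools replace the random sampling with smarter optimizers and the toy score with structural and daylight simulations, but the loop of propose, evaluate, and keep the best is the same.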

Practical Tools and Platforms Leading the Charge

As of early 2026, the landscape is populated by both tech giants and agile startups.

| Category | Tool/Platform | Primary Function |
| --- | --- | --- |
| Infrastructure | NVIDIA Omniverse | A platform for connecting 3D pipelines and developing industrial metaverse applications using USD. |
| User Creation | Roblox | Uses generative AI to allow users to code via natural language and generate textures for their games. |
| Asset Creation | CSM.ai / Luma AI | Specialized in turning video/images into high-quality 3D assets ready for game engines. |
| NPC Logic | Inworld AI | Provides the “brains” for NPCs, handling dialogue, voice synthesis, and emotional intelligence. |
| Environment | Promethean AI | An assistant that helps artists fill virtual spaces (e.g., “add a messy desk here”) by selecting assets from a library. |

Common Mistakes and Challenges

Despite the hype, building AI-generated worlds is fraught with technical and design pitfalls.

1. The “Empty World” Syndrome

Just because AI can generate an infinite world doesn’t mean it should.

- The Mistake: Creating vast, sprawling landscapes that look pretty but offer nothing to do.

- The Reality: Density of interaction matters more than square mileage. An AI-generated city with 10,000 buildings is boring if you can’t enter any of them or if they all look vaguely identical.

2. Hallucinations in 3D

We know text AIs hallucinate (invent facts). 3D AIs hallucinate geometry.

- The Issue: You might ask for a “car,” and the AI generates a vehicle that looks perfect from the front but has three wheels and no doors from the back.

- The Fix: Human-in-the-loop validation is still essential. AI is the drafter; the human is the editor.
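
Some geometric hallucinations can be caught automatically before a human ever reviews the asset. One common sanity check is sketched here: in a closed (watertight) triangle mesh, every edge should be shared by exactly two triangles. The function name is an assumption, and the check only flags holes and overlapping faces; it will not notice a car with three wheels.

```python
from collections import Counter


def open_or_bad_edges(triangles: list[tuple[int, int, int]]) -> list[tuple[int, int]]:
    """Return edges not shared by exactly two triangles.

    Triangles are given as triples of vertex indices. Any edge listed in
    the result marks a hole or overlapping geometry.
    """
    counts = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            counts[(min(u, v), max(u, v))] += 1  # ignore edge direction
    return sorted(edge for edge, n in counts.items() if n != 2)


if __name__ == "__main__":
    tetrahedron = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
    print(open_or_bad_edges(tetrahedron))       # closed shape: no bad edges
    print(open_or_bad_edges(tetrahedron[:-1]))  # one face missing: a hole
```

Checks like this narrow down what the human editor needs to look at; they do not replace the review.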

3. Compute Costs and Latency

Running a photorealistic world where every texture is generated on the fly and every NPC uses a cloud-based LLM is incredibly expensive.

- The Constraint: Latency. If an NPC takes 3 seconds to “think” of a response to your greeting, the immersion breaks. Edge computing and optimized “small language models” (SLMs) running on-device are currently being developed to solve this.
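
One pragmatic way to protect immersion is to give each NPC reply a latency budget and fall back to a canned line when the model is too slow. The sketch below uses Python's standard `concurrent.futures` module; `slow_model`, the budget values, and the fallback text are stand-ins invented for this example.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError

_pool = ThreadPoolExecutor(max_workers=4)


def slow_model(line: str) -> str:
    """Stand-in for a cloud LLM call that takes too long (assumption)."""
    time.sleep(0.3)
    return "A long, thoughtful answer."


def reply_within_budget(generate, player_line, budget_s=0.5, fallback="Hm?"):
    """Return the generated reply, or a canned line if the budget is blown."""
    future = _pool.submit(generate, player_line)
    try:
        return future.result(timeout=budget_s)
    except TimeoutError:
        # The slow call keeps running in the background; its result is dropped.
        return fallback
```

A short filler line ("Hm?", "Let me think...") buys time without breaking the scene, and the same wrapper works whether the model runs in the cloud or on-device.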

4. Copyright and Ownership

If an AI generates a virtual building based on training data from real-world architects, who owns the design?

- Legal Uncertainty: As of 2026, copyright laws regarding AI-generated works remain in flux globally. Using AI assets in commercial metaverse projects carries a risk of future litigation or uncopyrightability (meaning you can’t stop others from copying your AI-generated world).

The Ethics of Infinite Realities

As we populate the metaverse with AI, we face significant ethical questions that go beyond technology.

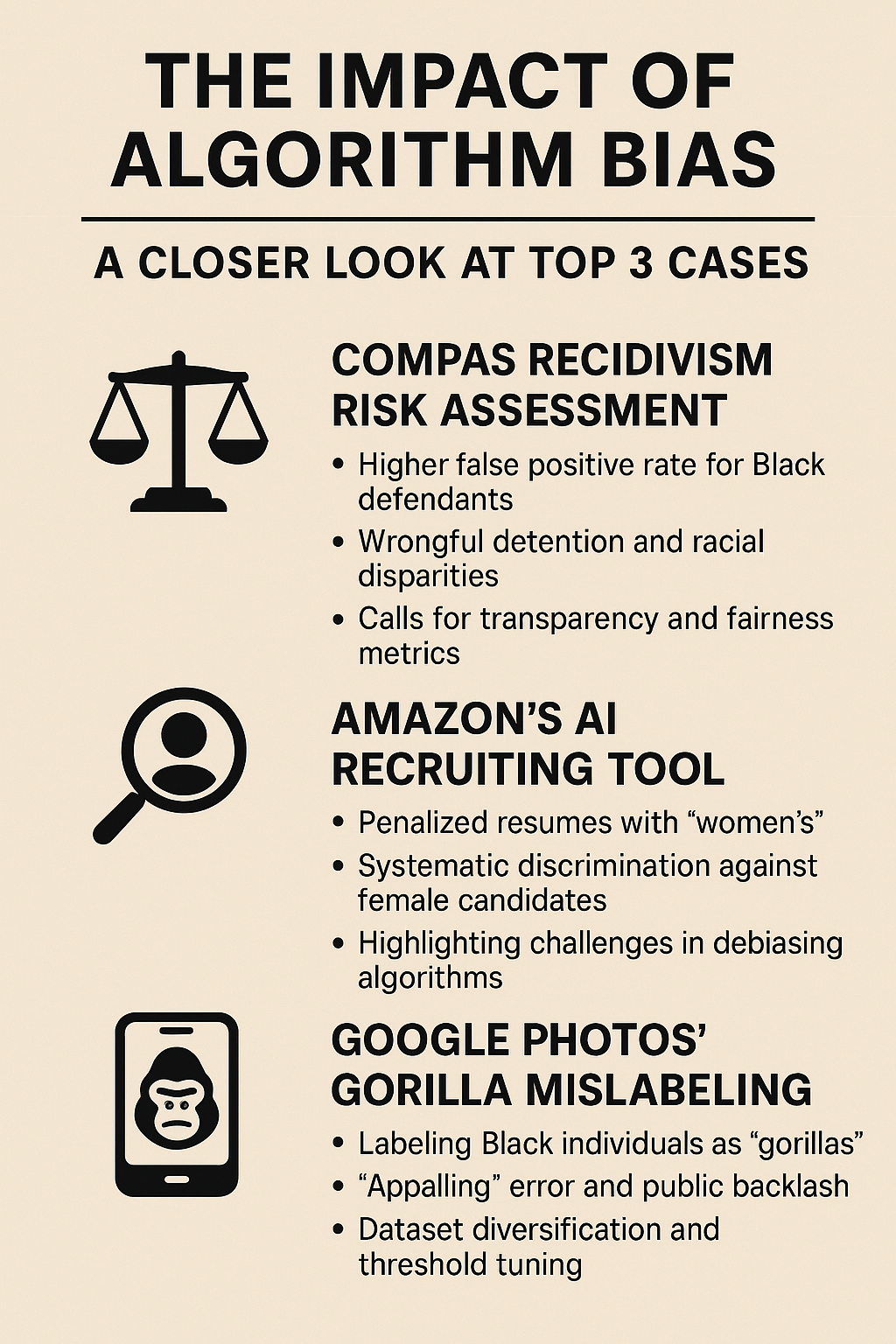

Bias in World Building

If an AI model is trained primarily on Western architecture and demographics, the “default” virtual world it generates will reflect that bias. This risks creating a metaverse that excludes cultural nuance or diversity, enforcing a homogenized digital culture.

Toxicity and Moderation

AI NPCs can be manipulated (“jailbroken”) to say offensive things. Furthermore, in an unmoderated AI-generated world, users might generate content that violates safety standards (e.g., generating hate symbols). Automated moderation systems must be as sophisticated as the generative tools.

The “Deepfake” Reality

In a high-fidelity metaverse, AI can generate avatars that look and sound exactly like real people without their consent. The potential for identity theft and fraud in virtual spaces is a major security concern for platform holders.

Future Outlook: From Static to Sentient

The trajectory of AI-generated virtual worlds points toward autonomous evolution.

Currently, we are in the “Prompt Era,” where a human prompts the AI to build. The next phase is the “Gardener Era.” In this future state, developers will set the initial parameters (the “seed”) of a world—its physics, its lore, its aesthetic style—and the AI will grow the world over time.

Imagine a virtual forest that grows in real time. If players chop down too many trees, the AI “ecosystem manager” creates erosion effects, changes the river paths, and spawns more aggressive wildlife to defend the scarce resources. The world becomes a living organism, reacting to the collective actions of its inhabitants without human developers needing to patch in changes manually.
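
A toy version of that feedback loop is sketched below as a single simulation step. The `Forest` fields, the constants, and the formulas are invented for illustration; the point is only that world state reacts to aggregate player behavior and not to a scripted patch.

```python
from dataclasses import dataclass


@dataclass
class Forest:
    trees: int
    erosion: float = 0.0               # 0.0 (stable soil) to 1.0 (washed out)
    wildlife_aggression: float = 0.1   # 0.1 (calm) to 1.0 (hostile)


def tick(forest: Forest, trees_chopped: int, regrowth: int = 2, capacity: int = 100) -> Forest:
    """Advance the ecosystem by one step after players chop some trees."""
    trees = max(0, forest.trees - trees_chopped)
    trees = min(capacity, trees + regrowth)
    cover = trees / capacity
    # Below 50% cover the soil erodes; above it, erosion slowly recovers.
    erosion = min(1.0, max(0.0, forest.erosion + 0.1 * (0.5 - cover)))
    # Less tree cover makes wildlife more defensive.
    aggression = round(0.1 + 0.9 * (1.0 - cover), 3)
    return Forest(trees, erosion, aggression)
```

Calling `tick` repeatedly with the total trees chopped by all players gives the "seed and grow" behavior: developers set the constants once, and the forest's condition follows from what its inhabitants do.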

Furthermore, interoperability remains the holy grail. AI may eventually serve as the “universal translator” between different virtual worlds, automatically converting your avatar and assets from a Fortnite-style aesthetic to a Roblox-style aesthetic as you hop between platforms.

Related Topics to Explore

- Spatial Computing: The broader hardware ecosystem (Apple Vision Pro, Meta Quest) that displays these AI worlds.

- Web3 and Blockchain: How ownership of AI-generated assets is tracked using tokens and NFTs.

- Edge AI: Processing data on-device to reduce the latency of AI interactions in virtual reality.

- Extended Reality (XR): The spectrum covering VR, AR, and Mixed Reality.

- Prompt Engineering for 3D: The emerging skill set of describing 3D structures for AI models.

Conclusion

AI-generated virtual worlds represent a paradigm shift in how we interact with digital space. By moving from manual construction to AI-assisted cultivation, we are unlocking a scale of creativity that was previously impossible. Whether for gaming, industrial simulation, or social connection, generative AI is the bricklayer, the architect, and the storyteller of the metaverse.

For creators and businesses, the next step is experimentation. The tools are no longer theoretical; they are here. Start small—experiment with text-to-3D asset generation or implement a simple LLM-based NPC in a test environment. The metaverse of the future will not be built by hand; it will be grown, prompt by prompt.

FAQs

1. How does AI generate 3D models from text? AI models use deep learning techniques trained on vast datasets of paired images and text. When you input a text prompt, the AI predicts the 3D geometry (point clouds or meshes) and textures that correspond to that description, often refining a noisy shape into a clear object over several iterations.

2. Can AI create an entire game without human help? As of early 2026, not reliably. While AI can generate assets, code snippets, and dialogue, stitching them into a cohesive, bug-free, and fun gameplay loop still requires human oversight, game design expertise, and “glue” code.

3. Is the metaverse the same as Virtual Reality (VR)? No. VR is a way to access digital spaces (via headsets). The metaverse is the concept of the persistent, shared digital spaces themselves. You can access the metaverse via VR, AR, or even a standard desktop screen.

4. What are the costs associated with AI-generated worlds? While AI reduces labor costs (fewer hours needed for modeling), it increases compute costs. Generating high-fidelity 3D assets and running intelligent NPCs requires significant GPU power and cloud API usage fees.

5. Are AI-generated virtual worlds safe for children? Safety depends on the platform’s moderation. Because AI generation can be unpredictable, there is a risk of generating inappropriate content. Most reputable platforms use secondary AI systems to filter and moderate content before it is shown to users.

6. Will AI replace 3D artists and game designers? It will likely replace tasks, not roles. The role of a 3D artist is shifting from “modeling every vertex” to “curating and refining AI outputs.” Creative direction and high-level design skills will become more valuable than repetitive manual execution.

7. Can I own the land in an AI-generated world? This depends on the platform. In centralized platforms (like Roblox), the company owns the servers. In decentralized (Web3) platforms, ownership might be tokenized via NFTs. However, the AI generation aspect itself does not guarantee ownership.

8. What is a “Neural Radiance Field” (NeRF)? NeRF is a technology that uses AI to reconstruct a 3D scene from 2D images. It figures out how light travels through the scene to render it from new angles, making it easier to turn real-world videos into virtual world environments.

9. How do “Digital Twins” help the environment? By simulating systems (like traffic or energy grids) in a virtual world, engineers can optimize them for efficiency without wasting real resources. For example, testing a new traffic light sequence in a digital twin can reduce real-world idling and emissions.

10. What is the difference between an NPC and an AI Agent? A traditional NPC follows a pre-written script. An AI Agent has autonomy; it perceives its environment, makes decisions based on goals, and generates its own actions or dialogue dynamically.

References

- NVIDIA. (n.d.). NVIDIA Omniverse: The Platform for Building and Operating Metaverse Applications. NVIDIA Corporation. https://www.nvidia.com/en-us/omniverse/

- Roblox Corporation. (2024). Generative AI on Roblox: Empowering Creation. Roblox Blog. https://blog.roblox.com/

- McKinsey & Company. (2023). Value creation in the metaverse. McKinsey & Company. https://www.mckinsey.com/capabilities/growth-marketing-and-sales/our-insights/value-creation-in-the-metaverse

- Unity Technologies. (2024). Unity Muse: AI for Content Creation. Unity. https://unity.com/products/muse

- Inworld AI. (2024). The Future of NPCs: AI Engines for Gaming. Inworld. https://inworld.ai/

- Mildenhall, B., et al. (2020). NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. European Conference on Computer Vision (ECCV). https://www.matthewtancik.com/nerf

- Ball, M. (2022). The Metaverse: And How It Will Revolutionize Everything. Liveright.

- BMW Group. (2023). BMW iFACTORY: The virtual production of the future. BMW Group PressClub. https://www.press.bmwgroup.com/

- Epic Games. (2023). Procedural Content Generation Framework in Unreal Engine. Unreal Engine Documentation. https://docs.unrealengine.com/

- Cao, Y., et al. (2023). Large Language Models in the Metaverse: A Survey. arXiv preprint. https://arxiv.org/