In the traditional landscape of customer service, empathy was a strictly human trait—hard to scale, difficult to measure, and impossible to automate. A support agent might intuitively sense a customer’s hesitation, but the software they used saw only binary data: a ticket opened, a ticket closed. Today, that paradigm is shifting rapidly. AI emotional analytics in customer experience represents a technological leap from merely managing transactions to understanding the human feelings behind them.

As businesses strive to compete on experience rather than just price, the ability to gauge frustration, joy, confusion, and anger in real-time is becoming a critical differentiator. This guide explores the depths of “Affective Computing”—the study and development of systems that can recognize, interpret, process, and simulate human affects—and how it is reshaping the way brands interact with their audiences.

Scope of this Guide: In this guide, “emotional analytics” refers to the use of artificial intelligence, specifically Natural Language Processing (NLP) and computer vision, to interpret human emotional states from voice, text, and visual data. We will cover the mechanics of the technology, practical applications in contact centers and product feedback, ethical privacy concerns, and implementation frameworks. We will not cover general biological sensors (like heart rate monitors) unless they are directly integrated into consumer-facing CX platforms.

Key Takeaways:

- Beyond Sentiment: Emotional analytics goes deeper than positive/negative sentiment, identifying specific states like “anxious,” “sarcastic,” or “urgent.”

- Multimodal Input: It combines text, voice tone (prosody), and facial expressions for a holistic view of the customer.

- Real-Time Coaching: The technology provides live feedback to agents, helping them de-escalate conflicts before they result in churn.

- Ethical Guardrails: Privacy, consent, and bias mitigation are not optional; they are foundational to successful deployment.

- Operational Efficiency: By predicting emotional trajectories, businesses can route complex calls to senior staff automatically.

What Is AI Emotional Analytics?

At its core, AI emotional analytics is the process of using software to identify and assess human emotions. While traditional sentiment analysis might tell you that a customer review is “negative,” emotional analytics aims to tell you why it is negative and how intense the feeling is. Is the customer angry about a billing error, or disappointed in a feature gap? The distinction matters immensely for the resolution strategy.

The Evolution from Sentiment to Emotion

Sentiment analysis has been a staple of social listening for over a decade. It operates on a polarity scale: positive, neutral, or negative. However, human communication is rarely that simple. A phrase like “Great, another delay” is lexically positive (“Great”) but emotionally negative (sarcastic/frustrated).

Emotional analytics uses advanced Natural Language Processing (NLP) and machine learning models trained on vast datasets of human interaction to detect these nuances. It categorizes inputs into a broader spectrum of emotions, often based on psychological frameworks like Ekman’s six basic emotions: anger, disgust, fear, happiness, sadness, and surprise.

The Three Pillars of Data Collection

To build an accurate picture of customer sentiment, AI systems analyze three primary types of data:

- Textual Data (NLP): Analyzing written words in emails, chat logs, and social media. The AI looks for keywords, sentence structure, and punctuation patterns (e.g., all caps or repeated question marks).

- Audio Data (Voice Analytics): Analyzing the way words are said. This involves measuring pitch, tone, cadence, volume, and silence. This field, known as prosody analysis, can detect stress or hesitation even if the spoken words are polite.

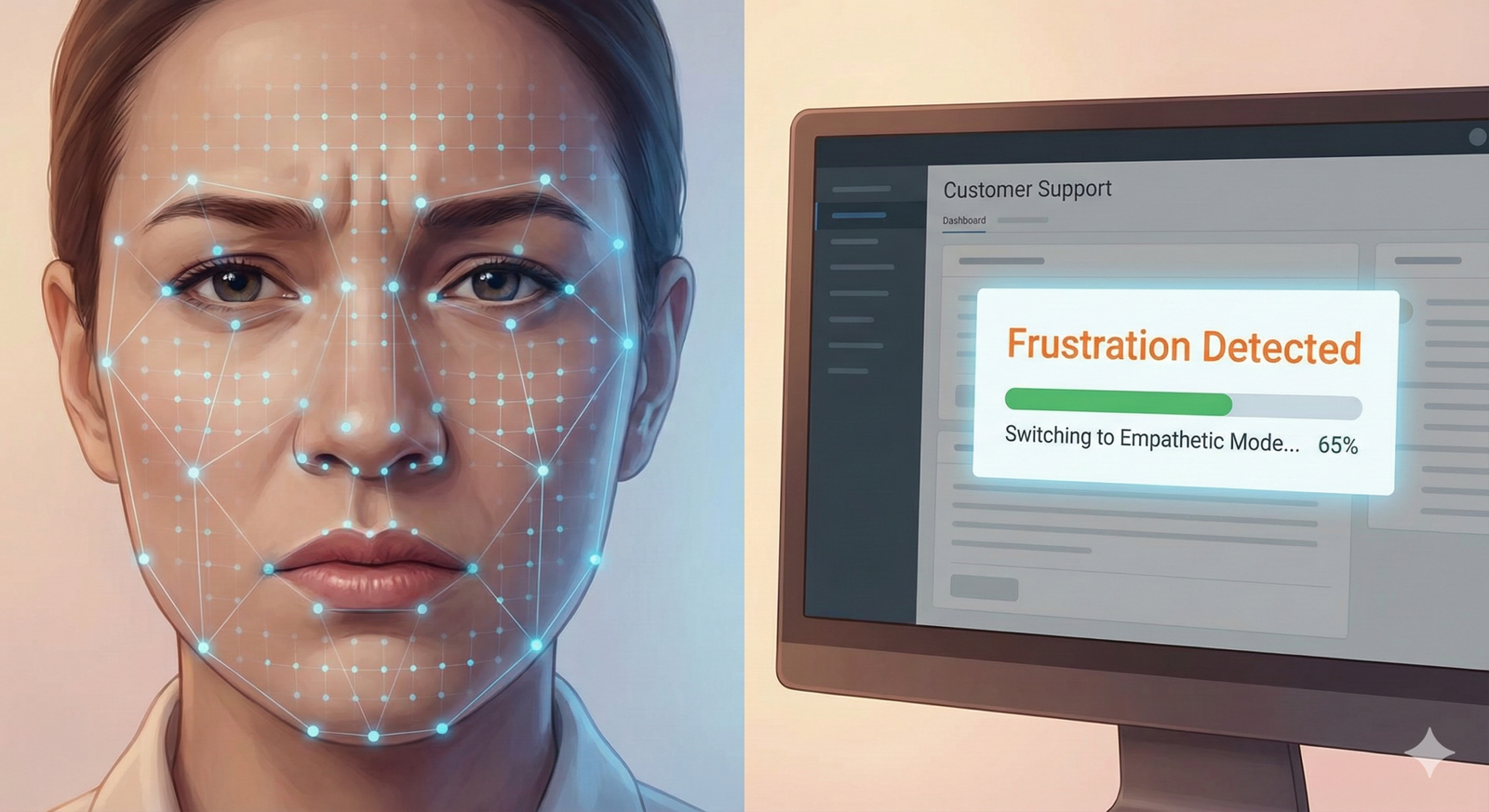

- Visual Data (Computer Vision): Used primarily in video calls or physical retail environments (where permitted). Algorithms map facial landmarks (eyebrows, mouth corners) to detect micro-expressions associated with confusion or delight.
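The punctuation and capitalization cues mentioned for textual data can be illustrated with a few lines of Python. This is a minimal sketch, not how any particular vendor does it: the function name `text_surface_features` and the feature names are assumptions made for illustration, and a production system would feed cues like these into a trained model rather than read them directly.

```python
import re

def text_surface_features(message: str) -> dict:
    """Extract simple surface cues from a written message (illustrative only)."""
    words = re.findall(r"[A-Za-z']+", message)
    # Words of two or more letters written entirely in capitals, e.g. "THREE".
    shouted = [w for w in words if len(w) >= 2 and w.isupper()]
    return {
        "caps_ratio": len(shouted) / len(words) if words else 0.0,
        # Runs of two or more "!" or "?" characters, e.g. "???" or "!!".
        "repeated_punct": len(re.findall(r"[!?]{2,}", message)),
        "word_count": len(words),
    }

features = text_surface_features("Where is my refund??? I have asked THREE times!!")
print(features)
```

On this sample message the sketch finds one shouted word out of nine and two runs of repeated punctuation. These are weak signals on their own, which is why they are usually combined with the other data types.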

How It Works: The Technology Behind the Feelings

Understanding the mechanics of AI emotional analytics helps demystify the “magic” and clarifies the limitations of the technology. It is not mind-reading; it is pattern recognition at a massive scale.

Natural Language Processing (NLP) and Semantics

Modern NLP models, such as those based on transformer architectures, understand context better than ever. They analyze the relationship between words. For example, in the sentence “The battery life is a joke,” the AI understands that “joke” in this context implies failure, not humor.

Deep learning models are trained on labelled datasets where humans have tagged sentences with specific emotions. Over time, the model learns that specific combinations of adjectives and adverbs correlate with high-intensity emotions.
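To make the idea of learning from labelled examples concrete, the sketch below trains a tiny naive Bayes classifier on six hand-tagged sentences. It deliberately swaps the deep learning model described above for a much simpler technique so that it fits in a few lines; the sentences, the labels, and the function names `train` and `predict` are all hypothetical.

```python
import math
from collections import Counter, defaultdict

# Hypothetical hand-labelled examples; a real training set would be far larger.
LABELLED = [
    ("this is absolutely unacceptable", "anger"),
    ("i am extremely annoyed by this delay", "anger"),
    ("i am really worried about my account", "fear"),
    ("i am so scared my data was stolen", "fear"),
    ("thank you this is absolutely wonderful", "joy"),
    ("i am really happy with the update", "joy"),
]

def train(examples):
    """Count how often each word appears under each emotion label."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, label_counts, vocab

def predict(text, model):
    """Return the label with the highest log-probability (add-one smoothing)."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    scores = {}
    for label in label_counts:
        n_words = sum(word_counts[label].values())
        score = math.log(label_counts[label] / total)
        for word in text.split():
            if word in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[label][word] + 1) / (n_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

model = train(LABELLED)
print(predict("i am extremely worried", model))
```

The principle is the same one the deep models rely on: the classifier has no rules about emotion, only word statistics derived from what human annotators tagged.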

Voice Intonation and Acoustic Features

Voice analysis is perhaps the most powerful tool in the contact center. The AI decomposes audio waves into quantifiable metrics:

- Jitter and Shimmer: Cycle-to-cycle micro-fluctuations in pitch (jitter) and loudness (shimmer) that often indicate stress or nervousness.

- Speech Rate: Rapid speech can indicate anxiety or anger, while very slow speech might indicate confusion or fatigue.

- Latency: The time gap between the agent finishing a sentence and the customer responding. Long latency can signal disengagement or thoughtful consideration, depending on the context.
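The three metrics above reduce to simple arithmetic once pitch periods, amplitudes, and turn timestamps have been extracted from the audio. The sketch below assumes that extraction has already happened (it is the hard part and is not shown). It uses the common "local" definition of jitter and shimmer, the mean absolute difference between consecutive cycles divided by the mean value; the sample numbers and function names are hypothetical.

```python
from statistics import mean

def relative_perturbation(values):
    """Mean absolute difference between consecutive values, divided by their mean.

    Applied to pitch periods this gives local jitter; applied to
    peak amplitudes it gives local shimmer.
    """
    diffs = [abs(b - a) for a, b in zip(values, values[1:])]
    return mean(diffs) / mean(values)

def speech_rate_wpm(word_count, duration_s):
    """Words per minute over a stretch of speech."""
    return word_count / duration_s * 60

def response_latency_s(agent_end_s, customer_start_s):
    """Gap between the agent finishing and the customer starting to speak."""
    return max(0.0, customer_start_s - agent_end_s)

# Hypothetical measurements for one short customer utterance.
periods_ms = [5.0, 5.2, 4.9, 5.1, 5.0]      # length of each vocal-fold cycle
amplitudes = [0.80, 0.78, 0.83, 0.79, 0.80]  # peak amplitude of each cycle

print(f"jitter:  {relative_perturbation(periods_ms):.3f}")
print(f"shimmer: {relative_perturbation(amplitudes):.3f}")
print(f"rate:    {speech_rate_wpm(45, 15):.0f} wpm")
print(f"latency: {response_latency_s(12.4, 14.9):.1f} s")
```

None of these numbers means anything in isolation. They become useful when compared against the same speaker's baseline earlier in the call.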

The Integration Layer

The most sophisticated platforms use “multimodal fusion.” They do not rely on just one signal. If a customer says “I’m fine” (text positive) but speaks in a flat, monotone voice (audio neutral/negative) and has a furrowed brow (visual negative), the AI weighs the conflicting signals. Usually, non-verbal cues (voice and face) are weighted higher than text when determining true emotional state.
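One simple way to weigh conflicting signals is a weighted average of per-modality scores, sometimes called late fusion. The sketch below is an assumption about how such a layer could work, not a description of any specific product: the weights, the valence scale from -1 (negative) to 1 (positive), and the function name `fuse_valence` are all illustrative.

```python
# Hypothetical weights: non-verbal channels count for more than text.
WEIGHTS = {"text": 0.2, "voice": 0.4, "face": 0.4}

def fuse_valence(scores: dict) -> float:
    """Weighted average of per-modality valence scores in [-1, 1].

    Modalities scored as None (e.g. no camera on a phone call) are skipped
    and the remaining weights are renormalized.
    """
    available = {m: s for m, s in scores.items() if s is not None}
    total_weight = sum(WEIGHTS[m] for m in available)
    if total_weight == 0:
        raise ValueError("no modality scores available")
    return sum(WEIGHTS[m] * s for m, s in available.items()) / total_weight

# "I'm fine" in text, flat voice, furrowed brow.
print(fuse_valence({"text": 0.6, "voice": -0.3, "face": -0.5}))
```

With these illustrative weights the positive words are outvoted and the fused score comes out negative. Real systems typically learn the weighting from data rather than fixing it by hand.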

Why CX Leaders Are Adopting Emotion AI

The adoption of AI emotional analytics in customer experience is driven by the need to humanize digital interactions at scale. As of January 2026, the primary drivers for adoption include:

1. Reducing Customer Churn

Churn rarely happens overnight. It is usually the result of a series of micro-frustrations. Emotional analytics helps visualize the “Emotional Customer Journey.” By identifying the exact moments where excitement turns to confusion or patience turns to anger, businesses can intervene proactively.

2. Enhancing Agent Performance and Well-being

Customer support is a high-burnout profession. Agents often face abusive or highly stressed customers for hours on end.

- Support: Emotion AI can detect when an agent is dealing with a hostile caller and automatically flag a supervisor to intervene.

- Training: Instead of reviewing random calls, managers can filter for calls tagged with “high anger” or “sudden delight” to find coaching moments or best-practice examples.

3. Hyper-Personalization

When a brand knows how you feel, they can tailor their response. If a customer is detected as “anxious” during a mortgage application process, the chatbot can switch from a concise, factual tone to a more reassuring, detailed, and slower-paced communication style.

4. Quantifying the “Unquantifiable”

For decades, CX metrics were limited to post-interaction surveys like Net Promoter Score (NPS) or Customer Satisfaction (CSAT). The problem with surveys is non-response bias: usually, only the very happy or very angry respond. Emotional analytics scores every interaction, so it covers 100% of conversations rather than, say, the 5% of customers who answer a survey.

Key Use Cases in Customer Experience

The application of this technology spans across various touchpoints. Here is how it looks in practice.

Call Center Optimization

This is the most mature use case.

- Real-Time Pop-ups: As an agent speaks, a dashboard shows a live “Empathy Score.” If the customer raises their voice, the system prompts the agent: “Customer sounds frustrated. Try validating their concern before offering a solution.”

- Routing Logic: Interactive Voice Response (IVR) systems can analyze the tone of a customer’s voice before they even reach an agent. A customer shouting “Agent!” repeatedly can be routed to a specialist retention team rather than a general support queue.
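The routing rule described above can be expressed as a small decision function. This is a sketch under stated assumptions: the anger score is taken to arrive from an upstream voice model as a number between 0 and 1, and the thresholds and queue names are hypothetical.

```python
def choose_queue(anger_score: float, agent_requests: int,
                 anger_threshold: float = 0.7, request_threshold: int = 2) -> str:
    """Pick a queue from the IVR's emotion signals (illustrative thresholds).

    anger_score    -- 0..1 score from the voice model (assumed upstream)
    agent_requests -- how many times the caller has asked for a human
    """
    if anger_score >= anger_threshold or agent_requests >= request_threshold:
        return "retention_specialists"
    return "general_support"

print(choose_queue(anger_score=0.85, agent_requests=3))
print(choose_queue(anger_score=0.20, agent_requests=0))
```

Keeping the thresholds as parameters makes it easy to tune them against historical calls before the rule goes live.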

Product Experience and Feedback

Product teams use emotional analytics to scour unstructured data (Reddit threads, support tickets, app reviews).

- Feature Validation: Instead of just knowing that users dislike an update, AI can reveal that users feel “betrayed” by a pricing change versus “confused” by a UI change. The former requires a PR response; the latter requires a tutorial.

Intelligent Chatbots and Virtual Assistants

Standard chatbots often fail because they are tone-deaf. They might respond with a cheerful “Happy to help!” emoji after a customer complains about a stolen credit card.

- Adaptive Tone: AI-enhanced bots detect the severity of the user’s input. If the input is “My account was hacked,” the bot adopts a serious, urgent, and formal tone, suppressing marketing messages and emojis.
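A minimal sketch of adaptive tone follows. It stands in a keyword check for the severity model a real bot would use, and the term list, the style fields, and the function name `response_style` are assumptions made for illustration.

```python
# Hypothetical trigger terms; a production bot would use a trained classifier.
HIGH_SEVERITY_TERMS = ("hacked", "stolen", "fraud", "unauthorized")

def response_style(user_message: str) -> dict:
    """Choose tone settings for the bot's next reply."""
    text = user_message.lower()
    urgent = any(term in text for term in HIGH_SEVERITY_TERMS)
    return {
        "tone": "serious" if urgent else "friendly",
        "allow_emojis": not urgent,
        "allow_marketing": not urgent,
    }

print(response_style("My account was hacked"))
print(response_style("How do I change my avatar?"))
```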

Telehealth and Financial Services

In high-stakes industries, emotion is a risk indicator.

- Finance: During loan interviews, voice analysis can detect hesitation or stress markers that might indicate fraud or coercion (though this must be balanced carefully against regulatory ethics).

- Telehealth: Mental health apps use text analysis to detect downward trends in a patient’s mood, triggering alerts to human therapists if “hopelessness” markers increase.

Ethical Considerations and Privacy Challenges

The power to read emotions comes with significant responsibility. AI emotional analytics in customer experience sits right at the intersection of helpfulness and surveillance. This is a critical “Your Money or Your Life” (YMYL) adjacent topic because mishandling this data can lead to discrimination or privacy violations.

The “Consent” Dilemma

Customers generally know their calls are “recorded for quality assurance.” They rarely understand that their voice patterns are being dissected to build a psychological profile.

- Transparency: Best practice dictates explicit disclosure, for example: “This call utilizes AI to analyze voice patterns for quality purposes.”

- Opt-Out: GDPR and other privacy frameworks increasingly require that users be able to opt out of automated profiling.

Bias in Training Data

AI models are only as good as their training data. If a model is trained primarily on the voices and faces of one demographic group, it will fail to accurately interpret others.

- Cultural Misinterpretation: In some cultures, speaking loudly and interrupting are signs of engagement and interest. In others, they are signs of aggression. An AI trained on Western norms might flag a passionate discussion as “hostile,” leading to unfair treatment of the customer.

- Accent Bias: Voice analytics engines often struggle with heavy accents, potentially rating non-native speakers as “confused” or “uncooperative” simply because the transcription confidence is low.

The “Pseudo-Science” Risk

There is ongoing scientific debate about how reliably facial expressions map to internal emotions. A person might smile out of politeness while feeling frustrated. Relying too heavily on AI scores can lead to emotional invalidation, where a company tells a customer, “Our system says you were happy with the call,” when the customer explicitly says they were not.

Regulation and Compliance

As of 2026, the EU AI Act prohibits emotion recognition in workplaces and educational institutions, with narrow exceptions for medical and safety purposes, and generally classifies emotion recognition in other settings, including consumer profiling, as high-risk with transparency duties toward the people being analyzed. Companies must also ensure their data retention policies are distinct for biometric data (voiceprints/face scans) versus standard text logs.

Implementing Emotional Analytics: A Strategic Framework

For organizations looking to deploy AI emotional analytics in customer experience, a phased approach reduces risk and maximizes value.

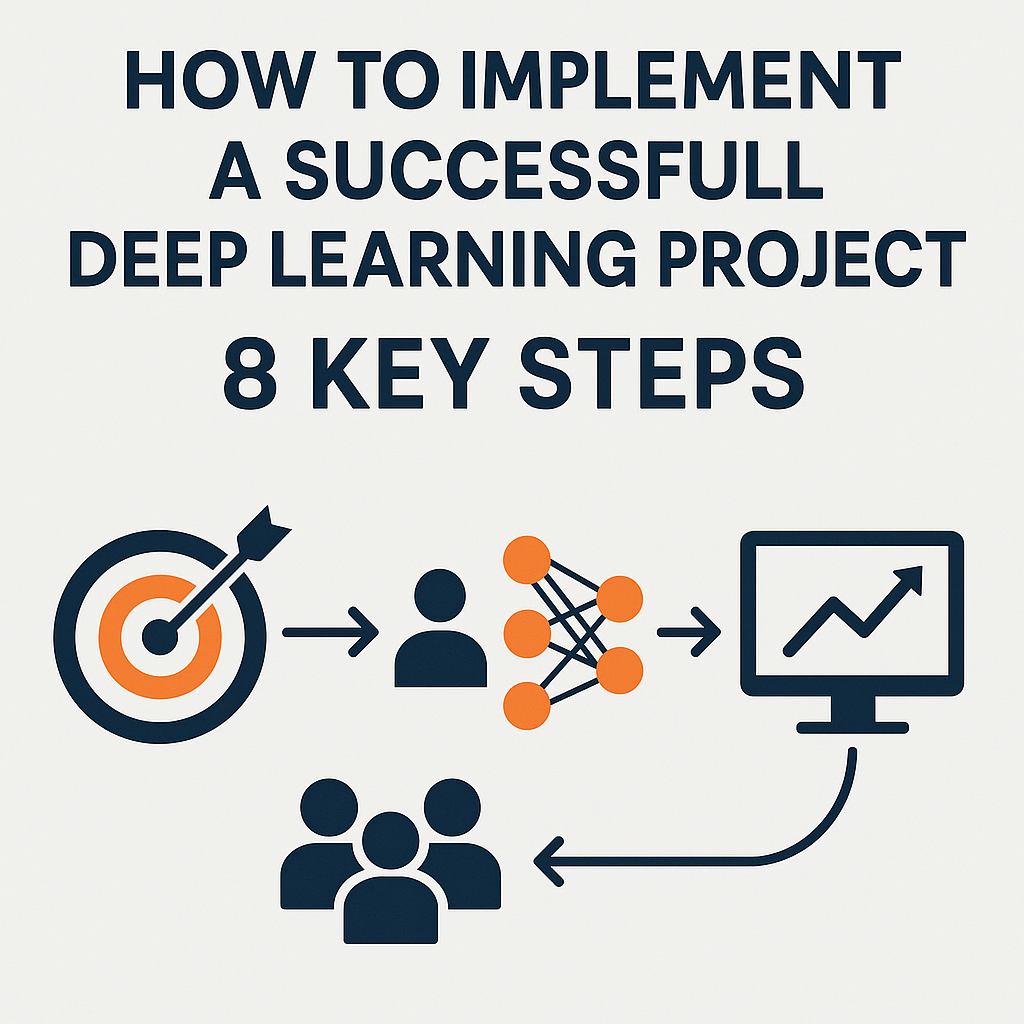

Phase 1: Assessment and Compliance

Before buying software, consult legal and compliance teams.

- Data Audit: Where will the data come from? (Calls, chats, emails).

- Legal Check: Does your privacy policy cover biometric analysis?

- Goal Setting: Are you trying to reduce handle time, improve NPS, or reduce agent churn?

Phase 2: Pilot with Historical Data

Do not go live immediately. Start by analyzing historical data.

- Benchmarking: Run the AI on last year’s calls. Does the AI’s “anger” identification correlate with calls that ended in a cancelled subscription?

- Calibration: Adjust the sensitivity. If the AI flags every raised voice as anger, it will create too much noise.
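Benchmarking and calibration can be done together by replaying historical calls at different sensitivity thresholds and checking how well the “anger” flag lines up with cancellations. The sketch below uses precision (how many flagged calls really ended in a cancellation) and recall (how many cancellations were flagged). The data and the function name `flag_quality` are hypothetical.

```python
def flag_quality(calls, threshold):
    """calls: list of (anger_score, cancelled) pairs from historical data.

    Returns (precision, recall) of the rule "flag when anger_score >= threshold"
    measured against whether the subscription was later cancelled.
    """
    tp = sum(1 for score, cancelled in calls if score >= threshold and cancelled)
    fp = sum(1 for score, cancelled in calls if score >= threshold and not cancelled)
    fn = sum(1 for score, cancelled in calls if score < threshold and cancelled)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical sample of last year's calls.
history = [(0.90, True), (0.80, True), (0.75, False), (0.60, False),
           (0.55, True), (0.30, False), (0.20, False), (0.10, False)]

for threshold in (0.5, 0.7):
    precision, recall = flag_quality(history, threshold)
    print(f"threshold={threshold}: precision={precision:.2f}, recall={recall:.2f}")
```

In this toy data, raising the threshold from 0.5 to 0.7 cuts false alarms but misses one cancellation. That trade-off between noise and coverage is what calibration has to settle.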

Phase 3: Agent-Assist (The “Copilot” Model)

Deploy the tool as an assistant, not a supervisor.

- Live Guidance: Give agents real-time tips.

- Feedback Loop: Allow agents to thumb-up or thumb-down the AI’s assessment. “The AI said the customer was angry, but they were actually making a joke.” This human-in-the-loop feedback is vital for model tuning.
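The thumbs-up/thumbs-down feedback becomes actionable once it is summarized per emotion label, because low agreement on one label shows where the model needs retuning. The sketch below assumes feedback is logged as (label, agreed) pairs; that format and the function name are illustrative.

```python
from collections import defaultdict

def agreement_by_label(feedback):
    """feedback: list of (ai_label, agent_agreed) pairs.

    Returns the share of thumbs-up votes for each label the AI assigned.
    """
    totals = defaultdict(lambda: [0, 0])  # label -> [agreed, total]
    for label, agreed in feedback:
        totals[label][0] += int(agreed)
        totals[label][1] += 1
    return {label: agreed / total for label, (agreed, total) in totals.items()}

# Hypothetical log from one week of agent feedback.
log = [("anger", True), ("anger", False), ("anger", False), ("confusion", True)]
print(agreement_by_label(log))
```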

Phase 4: Full Integration and Automation

Once the model is trusted (typically 3-6 months), integrate it into routing and automated workflows.

- Automated QA: Replace manual call listening with AI-scoring of 100% of calls, with humans reviewing only the outliers (bottom 5% and top 5%).
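Selecting the outliers for human review amounts to sorting the scored calls and taking both tails. A minimal sketch, assuming each call already carries a single numeric score; the call IDs, scores, and the function name `review_sample` are hypothetical.

```python
def review_sample(scored_calls, percent=5):
    """scored_calls: list of (call_id, score) pairs.

    Returns (lowest, highest): the bottom and top `percent` of calls by score,
    with at least one call in each tail.
    """
    ranked = sorted(scored_calls, key=lambda item: item[1])
    k = max(1, len(ranked) * percent // 100)
    return ranked[:k], ranked[-k:]

# Hypothetical batch of 40 AI-scored calls.
calls = [(f"call-{i}", i / 40) for i in range(40)]
lowest, highest = review_sample(calls)
print([call_id for call_id, _ in lowest])
print([call_id for call_id, _ in highest])
```

For very small batches the two tails can overlap, so a real workflow would deduplicate the review queue.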

Comparison of Leading Technologies

When evaluating vendors, it is helpful to understand the different approaches.

| Feature | Text Analysis (NLP) | Voice/Audio Analysis | Computer Vision (Video) |
| --- | --- | --- | --- |
| Primary Data Source | Emails, Chat logs, Social Media | Phone calls, Voicemails | Video calls, In-store cameras |
| Emotions Detected | Sentiment (Pos/Neg), Intent, Urgency | Stress, Hesitation, Energy Levels | Engagement, Confusion, Joy |
| Privacy Risk | Low (Content is usually recorded) | Medium (Biometric voice data) | High (Facial biometrics) |
| Implementation Cost | Low to Medium | Medium | High |
| Best For | High-volume ticket categorization | Call center coaching & routing | Usability testing & retail analysis |

Common Mistakes and Pitfalls

Implementing AI emotional analytics in customer experience is not “plug and play.” Here are the most common failures.

1. The “Big Brother” Effect

If agents feel the AI is being used solely to punish them for “bad tone,” they will revolt or game the system.

- Solution: Frame the technology as a shield for agents (e.g., “The AI proves you did everything right even though the customer yelled”) rather than a sword.

2. Ignoring Context

An AI might detect “high energy/loud volume” and classify it as anger. However, the customer might just be in a noisy environment or naturally loud.

- Solution: Ensure agents can override the AI score. Never use the AI score as the sole metric for performance reviews.

3. Over-Reliance on Aggregate Scores

Looking at a department-wide “Empathy Score” of 8/10 hides the fact that one specific product line is generating 100% of the anger.

- Solution: Always segment emotional data by product, region, and customer tenure.
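The way an aggregate score hides a problem segment is easy to see with a few numbers. The sketch below groups scores by a chosen field; the records, field names, and function name `mean_score_by_segment` are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def mean_score_by_segment(interactions, key):
    """Average empathy score per value of `key` (e.g. product or region)."""
    groups = defaultdict(list)
    for row in interactions:
        groups[row[key]].append(row["empathy_score"])
    return {segment: mean(scores) for segment, scores in groups.items()}

# Hypothetical interactions: the department average looks healthy.
interactions = [
    {"product": "router", "empathy_score": 9},
    {"product": "router", "empathy_score": 9},
    {"product": "router", "empathy_score": 9},
    {"product": "billing", "empathy_score": 5},
]

print(mean(row["empathy_score"] for row in interactions))  # department-wide
print(mean_score_by_segment(interactions, "product"))      # per product
```

The department-wide average is 8, while the per-product view shows that one product line sits at 5.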

4. Failure to Act

Collecting data without action is waste. Knowing your customers are frustrated about shipping delays provides no value if you do not fix the shipping delays.

- Solution: Create an “Insight-to-Action” workflow where systemic emotional trends are reported directly to product and operations teams, not just support.

The Future of Emotion AI in CX

As we look toward the latter half of the 2020s, the integration of Generative AI and Affective Computing is creating new possibilities.

- Generative Empathy: Large Language Models (LLMs) will not just detect emotion but will generate responses that perfectly match the emotional resonance required. If a customer is sad, the generated email draft will use softer, comforting language. If they are in a hurry, it will be clipped and efficient.

- Predictive Emotional Modeling: AI will predict emotional reactions before a product launch. By simulating millions of customer personas, companies might test marketing copy to see if it triggers “confusion” or “excitement” before a single human sees it.

- VR and The Metaverse: As customer service moves into virtual reality, emotional analytics will track avatar body language and digital proxemics (how close avatars stand to each other) to gauge comfort levels.

Who This Is For (and Who It Isn’t)

This technology is best suited for:

- High-Volume Contact Centers: Where manual QA is impossible to scale.

- High-Stakes Service Industries: Healthcare, Insurance, and Banking where misunderstanding a customer can have legal or financial consequences.

- Global Brands: That need to standardize empathy across diverse regions and languages.

This is likely overkill for:

- Small Businesses: A team of 3 agents talking to 10 customers a day does not need AI to tell them if a customer is angry; they already know.

- Transactional Low-Touch Services: If your interaction is a 10-second ticket validation, emotional depth is rarely required.

Conclusion

AI emotional analytics in customer experience is more than just a shiny new tool; it is a necessary evolution in a world where digital distance often creates emotional disconnection. By translating the messy, complex, and invisible signals of human emotion into structured data, businesses can finally listen—truly listen—to the voice of the customer.

However, the technology must be wielded with care. It is a supplement to human connection, not a replacement for it. The goal is not to create machines that feel, but to create machines that help humans understand each other better. As you consider adopting these tools, remember that the ultimate metric is not the accuracy of the algorithm, but the trust and loyalty it builds with your customer base.

Next Steps: If you are ready to explore emotional analytics, start by auditing your current call recordings. Listen to 10 “failed” calls and ask: “Could a machine have detected the tone change before the agent did?” If the answer is yes, you have a business case.

Frequently Asked Questions (FAQs)

1. Can AI emotional analytics really understand sarcasm? Sarcasm is one of the hardest challenges for AI because the text (“That’s just great”) contradicts the sentiment. However, modern multimodal systems that analyze voice tone (pitch drops, elongated vowels) alongside text have a much higher success rate in detecting sarcasm than text-only models.

2. Is using emotional analytics on customers legal? Yes, in most jurisdictions, provided there is consent. A generic “call recorded for quality purposes” notice may not be enough, however, because it does not tell customers that their voice is being analyzed for emotion; explicit disclosure is the safer practice. Strictly regulated regions like the EU (under GDPR and the AI Act) have specific requirements regarding biometric data and profiling. Always consult legal counsel.

3. Does this technology replace human customer service agents? No. It is designed to augment them. AI is great at categorization and flagging, but it cannot genuinely care. The “human in the loop” is essential to validate the AI’s insights and provide the genuine connection that frustrated customers seek.

4. How accurate is emotion AI? Accuracy varies by modality and vendor. Voice analysis vendors typically claim 70-80% accuracy in controlled environments. In noisy real-world call centers, accuracy can drop. The technology is reliable for spotting trends (e.g., “anger is up 20% this week”) but should not be trusted blindly for individual judgments without human verification.

5. What is the difference between sentiment analysis and emotional analytics? Sentiment analysis is one-dimensional: it places an interaction on a single polarity scale (Positive, Neutral, Negative). Emotional analytics is multi-dimensional: it identifies specific states like Joy, Anger, Fear, Sadness, Disgust, and Surprise, as well as intensity (arousal) and valence (positivity/negativity).

6. Can accents affect the accuracy of voice analytics? Yes. Algorithms trained primarily on American English speakers may misinterpret the pitch variations or cadence of non-native speakers or different regional dialects. Leading vendors are actively working to diversify their training datasets to mitigate this bias.

7. How much does it cost to implement AI emotional analytics? Costs vary widely. Some cloud-based Contact Center as a Service (CCaaS) platforms include basic sentiment features in their standard per-seat licensing ($50-$150/agent/month). Specialized, standalone emotional intelligence platforms can cost significantly more and often require enterprise-level integration contracts.

8. How does real-time agent coaching work? The software runs in the background of the agent’s desktop. When it detects specific emotional triggers (e.g., customer interrupting frequently, high voice pitch), it displays a small notification or “whisper” to the agent with advice like “Slow down,” “Apologize,” or “Manager alerted.”

9. Can emotional analytics work on email and chat? Yes, via Natural Language Processing (NLP). While it lacks voice signals, it looks for capitalization, punctuation, response time, and specific emotional keywords. It is generally less rich than voice analysis but still highly effective for triage and routing.

10. What metrics should we track to measure success? Do not just track the “Empathy Score.” Track the outcome of using the tool. Look for improvements in First Contact Resolution (FCR), reductions in Average Handle Time (AHT) for complex calls, and correlations between high AI empathy scores and high post-call CSAT ratings.
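The correlation mentioned in the last answer can be checked with a Pearson coefficient over paired scores. A minimal sketch with hypothetical data, assuming each call has both an AI empathy score and a post-call CSAT rating:

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

# Hypothetical paired scores for five calls.
empathy_scores = [6, 7, 8, 9, 10]
csat_ratings = [2, 3, 3, 4, 5]

print(f"r = {pearson(empathy_scores, csat_ratings):.2f}")
```

A coefficient close to 1 suggests the AI score tracks what customers report. A weak or negative value is a signal to revisit the model before relying on it, and in either case correlation alone does not show that coaching on the score causes better CSAT.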

References

- MIT Media Lab. (n.d.). Affective Computing Group Projects. Massachusetts Institute of Technology. https://www.media.mit.edu/groups/affective-computing/overview/

- European Parliament. (2024). EU AI Act: Rules for Artificial Intelligence. Official Journal of the European Union. https://artificialintelligenceact.eu/

- Hume AI. (2023). The Science of Expression: Measuring Voice and Face. Hume AI Research. https://hume.ai/science

- McKinsey & Company. (2023). The future of customer experience: Personalized, white-glove service for all. McKinsey.

- Harvard Business Review. (2022). The Risks of Using AI to Interpret Human Emotions. HBR.org.

- Information Commissioner’s Office (ICO). (2023). Biometric data and biometric recognition technology guidance. ICO UK. https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/

- Gartner. (2024). Magic Quadrant for the CRM Customer Engagement Center. Gartner Research. https://www.gartner.com/en/research/magic-quadrant

- Nielsen Norman Group. (2023). Sentiment Analysis in UX: What It Is and How It Works. NN/g. https://www.nngroup.com/articles/sentiment-analysis/

- Ekman, P. (1992). An argument for basic emotions. Cognition and Emotion, 6(3-4), 169-200. (Foundational academic source for emotional frameworks).

- Salesforce. (2024). State of the Connected Customer Report, 6th Edition. Salesforce Research. https://www.salesforce.com/resources/research-reports/state-of-the-connected-customer/