The promise of machine learning in healthcare is no longer theoretical. From detecting disease earlier to streamlining hospital operations and accelerating drug discovery, machine learning (ML) is reshaping how care is delivered, documented, and financed. This guide walks decision-makers, clinicians, informaticists, and health-tech builders through the seven most transformative applications, with concrete steps to get started, guardrails to stay safe, and metrics to prove value. You’ll see how to turn data into clinical and operational wins—without requiring a PhD in data science.

Disclaimer: This article is for general information and education. It is not a substitute for professional medical advice. Always consult qualified clinicians and your compliance team for decisions about patient care and regulatory obligations.

Key takeaways

- Machine learning improves accuracy and timing in diagnosis and risk prediction, especially in imaging-heavy specialties and acute care.

- Operational wins are immediate and measurable: fewer no-shows, shorter waits, better staffing, and smoother bed flow.

- Ambient AI and clinical NLP reduce documentation time and burnout while improving note quality—when implemented with strong privacy and validation controls.

- Remote monitoring + ML turns continuous signals from wearables and home devices into earlier interventions and fewer readmissions.

- Precision medicine and drug discovery benefit from ML’s ability to integrate multi-omics, imaging, and EHR data to personalize therapy and discover candidates faster.

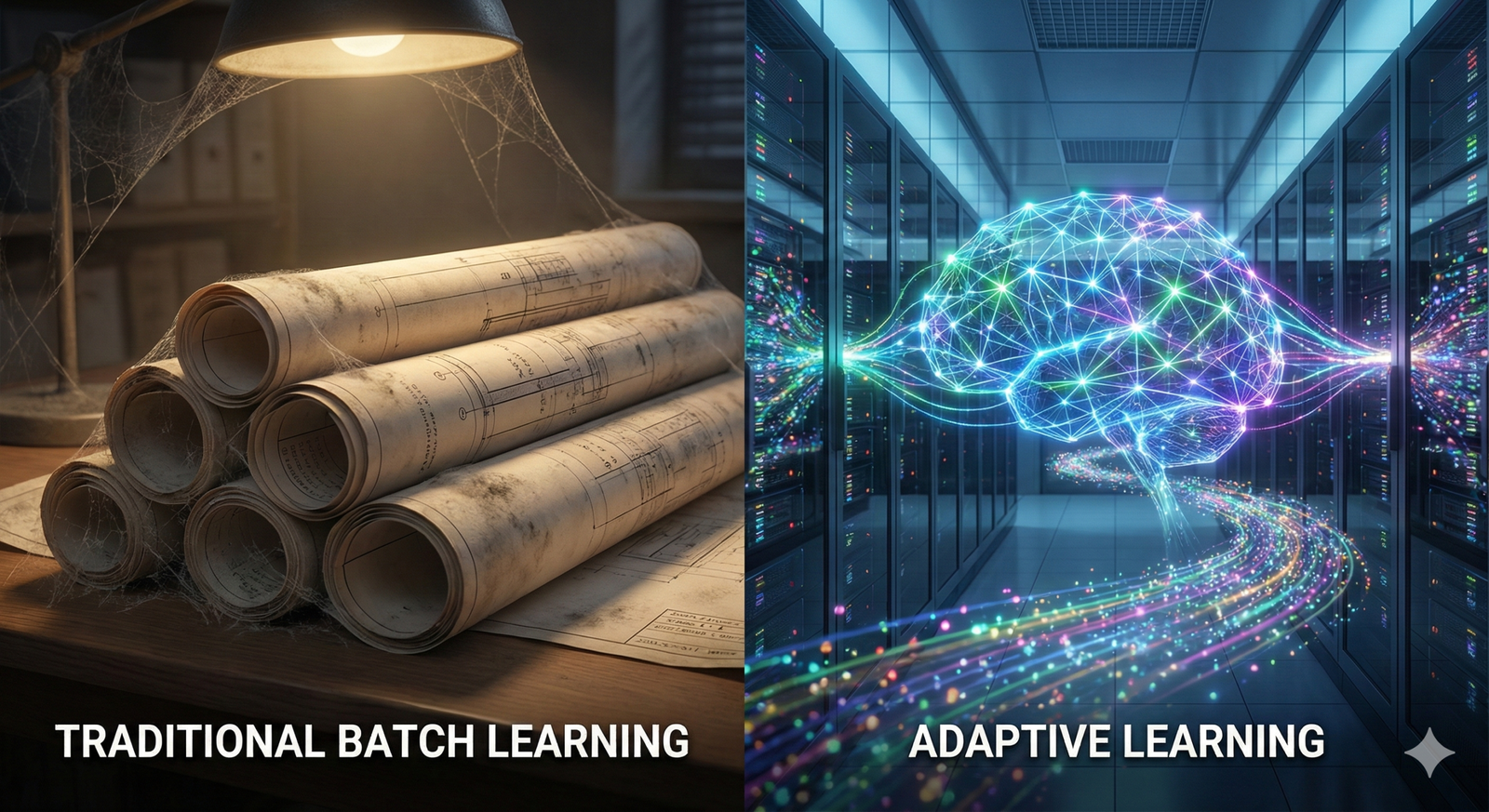

- Governance matters: bias mitigation, federated learning, and model monitoring are as critical as model accuracy.

- Start small, measure relentlessly, and scale with clear clinical ownership, defined KPIs, and a safety-first playbook.

1) Earlier, more accurate diagnosis in imaging and pathology

What it is & why it matters

ML models—especially deep learning—identify patterns in radiology and pathology images that humans may miss. In mammography, chest imaging, dermatology, and digital pathology, AI can prioritize studies, highlight suspicious regions, and support second-reader workflows. The core benefit: find more true positives with fewer false alarms and reduce reading workload.

Requirements & low-cost options

- Data & infrastructure: DICOM or whole-slide image data; integration with PACS/LIS; GPU support or vendor-hosted inference.

- People: Radiologist/pathologist champions, an imaging informatics lead, and an IT interface engineer.

- Software: FDA/CE-marked tools for clinical use; research tools for pilots.

- Budget alternatives: Start with vendor trials, limited-scope pilots (e.g., after-hours lists), or cloud-based offerings to avoid upfront GPU costs.

Beginner implementation steps

- Pick one high-impact use case (e.g., breast screening triage or pulmonary nodule detection) with clear outcomes (cancer detection rate, recall rate, reading time).

- Run a retrospective validation on local data to confirm the tool performs as well on your population, scanners, and protocols as it did in the vendor's reported results.

- Pilot in a shadow mode, where clinicians review AI outputs but clinical decisions remain unchanged.

- Move to assisted mode (AI highlights/triage) with human oversight; set thresholds and escalation rules.

- Monitor KPIs monthly and recalibrate sensitivity/specificity to meet clinical and operational goals.
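The retrospective validation and threshold-setting steps above can be sketched as a small confusion-matrix calculation. This is a minimal illustration, assuming each study has a model score between 0 and 1 and a confirmed ground-truth label; the function name, the `ReadingMetrics` fields, and the toy data are hypothetical and not taken from any vendor tool.

```python
from dataclasses import dataclass


@dataclass
class ReadingMetrics:
    sensitivity: float   # share of true cancers that were flagged
    specificity: float   # share of normal studies that were not flagged
    ppv: float           # share of flagged studies that were true cancers
    recall_rate: float   # screening sense: share of all studies flagged for follow-up


def metrics_at_threshold(scores, labels, threshold):
    """Compute screening metrics when studies with score >= threshold are flagged."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, labels):
        flagged = score >= threshold
        if flagged and label:
            tp += 1
        elif flagged and not label:
            fp += 1
        elif not flagged and label:
            fn += 1
        else:
            tn += 1
    total = tp + fp + tn + fn
    return ReadingMetrics(
        sensitivity=tp / (tp + fn) if tp + fn else 0.0,
        specificity=tn / (tn + fp) if tn + fp else 0.0,
        ppv=tp / (tp + fp) if tp + fp else 0.0,
        recall_rate=(tp + fp) / total if total else 0.0,
    )


# Toy data for illustration only, not real patient results.
scores = [0.92, 0.81, 0.40, 0.35, 0.77, 0.10, 0.05, 0.66]
labels = [1, 1, 0, 0, 0, 0, 0, 1]
metrics = metrics_at_threshold(scores, labels, threshold=0.6)
print(metrics)
```

Running the same function at several thresholds on local cases gives a simple table for the clinical lead to choose an operating point that balances detection against recall workload.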

Beginner modifications & progressions

- Simplify: Begin with worklist triage (flagged positives first) before lesion-level assistance.

- Scale up: Add additional modalities (e.g., digital pathology) after the first deployment stabilizes.

- Advance: Introduce quality-assurance analytics (miss trends, inter-reader variability) and continuous learning loops.

Recommended frequency & KPIs

- Cadence: Weekly QA reports; quarterly model performance reviews.

- KPIs: Cancer detection rate, recall rate, positive predictive value, reading time per study, additional findings requiring follow-up.

Safety, caveats & common mistakes

- Dataset shift: Performance can drop if your scanners, population, or protocols differ from training data—validate locally.

- Automation bias: Clinicians may over-trust model outputs—reinforce “assist, not replace.”

- Equity checks: Audit performance across sex, age, and skin tone (for derm) to detect disparities.

- Governance: Use only cleared devices for clinical decisions; log decisions and overrides.

Mini-plan (example)

- This month: Validate a mammography AI on last year’s cases and compare detection/recall.

- Next month: Shadow-mode pilot in one site with weekly QA huddles.

- Quarter 2: Assisted reading across the network with monthly drift checks.

2) Predictive analytics for risk stratification and early intervention

What it is & why it matters

ML predicts adverse events (e.g., sepsis, deterioration, readmission) hours to days in advance, giving teams a window to act. These models analyze vitals, labs, notes, and medication patterns to surface high-risk patients and trigger standardized bundles.

Requirements & low-cost options

- Data: Near-real-time EHR data feeds (vitals, labs, orders); clear sepsis/readmission labels for training.

- People: Clinical sponsor (ICU/ED/medicine), data engineer, ML engineer, quality lead.

- Software: Existing EHR predictive scores, open-source baselines, or commercial tools.

- Low-cost: Start with a rules-based baseline (e.g., qSOFA) to establish a comparator; add ML on top.

Beginner implementation steps

- Define the target and action: e.g., “predict sepsis 6 hours early → trigger sepsis bundle within 60 minutes.”

- Assemble a de-identified retrospective cohort and train a simple model that clinicians can interrogate (e.g., logistic regression, or gradient boosting paired with feature-attribution explanations).

- Run a prospective silent pilot to measure alert precision/recall and actionability.

- Activate clinical workflow: alert routing, acknowledgment, and a standing order set.

- Measure impact on protocol adherence, time-to-antibiotics, ICU transfers, and length of stay.
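One way to analyze the silent pilot described above is to pick the alert threshold from a workload budget and then read off precision and recall. The sketch below assumes you have logged one risk score and one confirmed outcome label per patient encounter; the function names and the budget of three alerts are illustrative assumptions.

```python
def threshold_for_alert_budget(scores, max_alerts):
    """Return the lowest threshold that fires at most max_alerts alerts on these scores.

    Alerts fire when score >= threshold. Returns infinity if no threshold fits the budget.
    """
    best = float("inf")
    for candidate in sorted(set(scores), reverse=True):
        if sum(s >= candidate for s in scores) <= max_alerts:
            best = candidate
        else:
            break
    return best


def precision_recall(scores, labels, threshold):
    """Precision and recall of alerts fired at score >= threshold."""
    alert_labels = [label for s, label in zip(scores, labels) if s >= threshold]
    true_positives = sum(alert_labels)
    positives = sum(labels)
    precision = true_positives / len(alert_labels) if alert_labels else 0.0
    recall = true_positives / positives if positives else 0.0
    return precision, recall


# Toy silent-pilot log for illustration only.
pilot_scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
pilot_labels = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]

alert_threshold = threshold_for_alert_budget(pilot_scores, max_alerts=3)
precision, recall = precision_recall(pilot_scores, pilot_labels, alert_threshold)
print(alert_threshold, precision, recall)
```

Starting from the alert budget keeps the conversation with nursing and physician leads concrete: the team agrees on how many alerts a shift can absorb, then sees what precision and recall that budget buys.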

Beginner modifications & progressions

- Simplify: Limit to one care unit to tighten feedback loops.

- Scale: Expand across ED/inpatient; add deterioration and readmission models.

- Advance: Use explanation dashboards (SHAP) and personalized care-path prompts.

Recommended frequency & KPIs

- Cadence: Daily alert dashboards; monthly model recalibration checks.

- KPIs: AUROC/PPV, time-to-intervention, bundle adherence, LOS, ICU days, in-hospital mortality, 30-day readmissions.

Safety, caveats & common mistakes

- Alert fatigue: Tune thresholds and cap alerts per nurse/physician shift.

- Silent failures: Monitor “missed event” reports; capture overrides and reasons.

- Fairness: Audit by age, sex, race/ethnicity, insurance status; correct if disparities appear.

- Regulatory: Treat clinical deployment like introducing a device—change control, monitoring, and safety committee review.
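The fairness audit above can start as a stratified sensitivity check. The following is a minimal sketch, assuming each record carries a subgroup label, a model score, and a confirmed outcome; the group names "A" and "B" and the 0.5 threshold are placeholders.

```python
from collections import defaultdict


def sensitivity_by_group(records, threshold):
    """records: iterable of (group, score, label). Returns {group: sensitivity}.

    Only patients with a confirmed event (label == 1) contribute to sensitivity.
    """
    caught = defaultdict(int)
    missed = defaultdict(int)
    for group, score, label in records:
        if not label:
            continue
        if score >= threshold:
            caught[group] += 1
        else:
            missed[group] += 1
    groups = set(caught) | set(missed)
    return {g: caught[g] / (caught[g] + missed[g]) for g in groups}


# Toy records for illustration only.
records = [
    ("A", 0.9, 1), ("A", 0.8, 1), ("A", 0.3, 1), ("A", 0.2, 0),
    ("B", 0.7, 1), ("B", 0.2, 1), ("B", 0.6, 0),
]
by_group = sensitivity_by_group(records, threshold=0.5)
gap = max(by_group.values()) - min(by_group.values())
print(by_group, gap)
```

A gap between subgroups is a prompt for review rather than proof of bias: small subgroups produce noisy estimates, so confidence intervals and clinical review are sensible next steps.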

Mini-plan (example)

- Week 1–2: Train and validate a sepsis predictor; compare against existing score.

- Week 3: Silent pilot in the ED with daily huddles.

- Week 4: Go-live with protocolized actions and tight alert governance.

3) Personalized treatment and precision medicine

What it is & why it matters

ML integrates genomics, proteomics, imaging, and clinical history to predict therapy response and toxicity. In oncology and pharmacogenomics, models help choose regimens, titrate doses, and anticipate immune-related adverse events, moving from one-size-fits-all to tailored care.

Requirements & low-cost options

- Data: Sequencing results (panel or WES), structured EHR data, radiology/pathology features.

- People: Molecular tumor board, informatics lead, biostatistician/ML scientist.

- Software: Secure pipelines for variant calling, feature extraction, and model deployment.

- Budget-friendly: Start with publicly available models and risk scores; partner with academic labs.

Beginner implementation steps

- Pick a focused decision point (e.g., adjuvant chemo benefit in early-stage cancer or dose adjustments based on PGx).

- Establish a data dictionary and de-identify datasets for development.

- Validate against known biomarkers to build clinical trust.

- Operationalize through tumor boards: present model explanations alongside standard guidelines.

- Track outcomes (response rates, progression-free survival proxies, toxicity rates).

Beginner modifications & progressions

- Simplify: Start with pharmacogenomics for a single drug class (e.g., anticoagulants).

- Scale up: Introduce multimodal models (images + genomics + notes).

- Advance: Adaptive dosing recommendations with clinician-set guardrails.

Recommended frequency & KPIs

- Cadence: Case-by-case review; quarterly cohort outcomes updates.

- KPIs: Objective response rate, dose-limiting toxicities, treatment changes informed by model, time-to-treatment.

Safety, caveats & common mistakes

- Overfitting on small omics datasets: Use external validation and conservative thresholds.

- Interpretability: Provide feature importance and rationale for recommendations.

- Consent & privacy: Genomic data is uniquely identifiable—strengthen governance and access controls.

Mini-plan (example)

- Quarter 1: Stand up a PGx-guided dosing program in cardiology clinics.

- Quarter 2: Add an imaging-genomics model for a single oncology line of therapy.

- Quarter 3: Expand through the molecular tumor board for broader adoption.

4) Operational efficiency: scheduling, no-shows, staffing, and patient flow

What it is & why it matters

Healthcare operations generate abundant data. ML uses it to predict demand, reduce no-shows, optimize schedules, and manage beds, cutting wait times and improving throughput without new buildings or staff.

Requirements & low-cost options

- Data: Scheduling metadata, historical arrivals, weather/seasonality, bed census, discharge predictions.

- People: Operations lead, access/throughput manager, data engineer/analyst.

- Software: EHR analytics + ML forecasting; workforce management tools.

- Budget-friendly: Begin with no-show prediction + targeted reminders; adopt cloud notebooks and open-source libraries.

Beginner implementation steps

- Target a bottleneck (e.g., MRI waits, clinic no-shows, ED surges).

- Build a simple forecasting model (e.g., gradient boosting) including calendar and external features.

- Design interventions: double-booking rules, SMS reminders for high-risk no-shows, shift optimization.

- Pilot, A/B test, and quantify impact on throughput and patient experience.

- Scale with a command-center dashboard and governance for changes.
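For the A/B test step above, a two-proportion z-test is one common way to check whether a change in no-show rate is larger than chance would explain. This sketch uses only the standard library; the counts are invented for illustration, and the choice of test is an assumption rather than a method prescribed by this guide.

```python
import math


def two_proportion_z_test(events_a, n_a, events_b, n_b):
    """Two-sided z-test for a difference between two proportions.

    Returns (z, p_value) using the pooled standard error.
    """
    p_a = events_a / n_a
    p_b = events_b / n_b
    pooled = (events_a + events_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented pilot counts: 180 no-shows in 1,000 control appointments,
# 140 no-shows in 1,000 appointments that received targeted reminders.
z, p_value = two_proportion_z_test(180, 1000, 140, 1000)
print(round(z, 2), round(p_value, 4))
```

Decide the sample size and the primary metric before the pilot starts so the result is not shaped by when you happen to stop looking.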

Beginner modifications & progressions

- Simplify: Focus on one clinic or modality; implement only reminders first.

- Scale: Add overbooking logic and dynamic slot release.

- Advance: Hospital-wide bed management with discharge prediction and transfer orchestration.
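The overbooking logic mentioned above can begin with a simple expected-attendance rule. This is a rough sketch under stated assumptions: each booked appointment has a predicted no-show probability, the session has a fixed capacity, and the `max_extra` cap is a hypothetical safety limit that operations leaders would set.

```python
def extra_bookings(no_show_probs, capacity, max_extra=2):
    """Suggest how many extra appointments to book for one session.

    Expected attendance is the sum of (1 - no-show probability) over booked slots.
    The suggestion never exceeds max_extra and never goes below zero.
    """
    expected_shows = sum(1.0 - p for p in no_show_probs)
    spare_capacity = capacity - expected_shows
    return max(0, min(max_extra, int(spare_capacity)))


# Ten booked slots, each with a 25% predicted no-show risk (toy values).
suggested = extra_bookings([0.25] * 10, capacity=10)
print(suggested)
```

Because overbooking can lengthen waits for the very patients predicted to miss visits, pair any rule like this with the equity monitoring described under safety and caveats.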

Recommended frequency & KPIs

- Cadence: Daily/shift dashboards; monthly optimization reviews.

- KPIs: No-show rate, average wait time, door-to-doc time (ED), boarding hours, bed occupancy, on-time starts, staff overtime.

Safety, caveats & common mistakes

- Equity risk: Overbooking can disproportionately burden certain patients—monitor for unintended disparities.

- Gaming the metric: Align incentives to quality, not just volume.

- Change fatigue: Communicate pilot scope, duration, and “what’s in it for me” to frontline teams.

Mini-plan (example)

- Month 1: Predict no-shows and send tailored reminders; measure reductions.

- Month 2: Add smart overbooking rules for slots where no-show predictions have proven accurate.

- Month 3: Forecast ED arrivals and adjust staffing templates.

5) Clinical documentation, ambient AI, and coding support

What it is & why it matters

Ambient AI listens (with consent) to clinician-patient conversations and drafts notes, orders, and patient instructions, while NLP assists with coding and quality capture. The result: less "pajama time" (after-hours charting), better notes, and happier clinicians, provided it is implemented safely.

Requirements & low-cost options

- Data & integrations: Microphone or mobile capture, secure transcription, EHR APIs for note import.

- People: CMIO/CNIO sponsor, privacy officer, frontline champions, and a health information management lead.

- Software: Ambient scribe platform + clinical NLP for problem list, quality measures, and CDI prompts.

- Budget-friendly: Start with a subset of specialties (primary care, orthopedics) using vendor trials.

Beginner implementation steps

- Select note types (e.g., SOAP, H&P) and define acceptance criteria (accuracy, time saved).

- Run a controlled pilot: 10–20 clinicians, with structured feedback each week.

- Establish a review workflow: clinician must verify and sign; capture correction reasons.

- Extend to coding/CDI: enable suggested diagnoses, laterality, and specificity prompts.

- Measure impact: time in notes, after-hours EHR time, note quality, and patient satisfaction.

Beginner modifications & progressions

- Simplify: Use AI as a dictation enhancement before ambient capture.

- Scale: Add templates per specialty and patient instruction generation.

- Advance: Real-time safety prompts (e.g., drug-dose checks) with careful guardrails.

Recommended frequency & KPIs

- Cadence: Weekly time-savings reports; monthly quality audits.

- KPIs: Minutes per note, after-hours EHR time, note completeness, coding capture, clinician burnout scores.

Safety, caveats & common mistakes

- Privacy/consent: Obtain explicit patient consent; display a clear indicator during capture.

- Hallucinations/omissions: Require clinician verification; audit for insertions and missing negatives.

- Equity & language access: Ensure performance across accents and non-English visits; provide interpreter pathways.

Mini-plan (example)

- Week 1–2: Pilot ambient notes in family medicine; baseline time-in-notes.

- Week 3–4: Expand to orthopedics; enable CDI suggestions; publish time-savings.

6) Remote patient monitoring (RPM) and wearable-driven insights

What it is & why it matters

Wearables and home devices stream vitals, activity, and symptoms. ML models detect anomalies, stratify risk, and trigger outreach, helping prevent avoidable admissions and supporting chronic care at scale.

Requirements & low-cost options

- Devices: FDA-cleared wearables, BP cuffs, scales, oximeters; Bluetooth hubs.

- Connectivity: Patient apps or cellular hubs; secure cloud ingestion.

- People: Care managers, telehealth nurses, data analyst.

- Budget-friendly: Start with one condition (heart failure) and a limited device kit; leverage reimbursement codes where available.

Beginner implementation steps

- Define the goal (e.g., reduce 30-day readmissions for heart failure by 10%).

- Enroll a manageable cohort and stream data to a rules+ML risk score.

- Design outreach tiers: self-management tips, RN call, tele-visit, same-day clinic slot.

- Close the loop: log outreach, interventions, and outcomes for learning.

- Iterate thresholds to balance sensitivity with care team workload.
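The rules half of a rules+ML score, mapped to the outreach tiers above, might look like the sketch below for heart-failure weight monitoring. The gain thresholds and tier names are placeholder assumptions for illustration; the clinical team must set the actual values.

```python
def weight_alert(daily_weights_kg, day_gain_kg=1.0, week_gain_kg=2.0):
    """Map a chronological list of daily weights to an outreach tier.

    day_gain_kg and week_gain_kg are illustrative defaults, not clinical guidance.
    """
    if len(daily_weights_kg) < 2:
        return "none"
    latest = daily_weights_kg[-1]
    one_day_gain = latest - daily_weights_kg[-2]
    # Look back over up to seven prior readings plus today's.
    window = daily_weights_kg[-8:]
    week_gain = latest - min(window[:-1])
    if week_gain >= week_gain_kg:
        return "rn_call"
    if one_day_gain >= day_gain_kg:
        return "self_management_tip"
    return "none"


# Toy readings for illustration only.
tier = weight_alert([80.0, 80.2, 80.1, 80.4, 80.9, 81.5, 82.3])
print(tier)
```

Keeping thresholds as parameters makes the last step above practical: the team can tighten or loosen them and replay logged data to see how alert volume changes before touching the live program.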

Beginner modifications & progressions

- Simplify: Begin with rule-based alerts; layer ML as data grows.

- Scale: Add arrhythmia detection, COPD, diabetes; integrate pharmacy refills.

- Advance: Personalized behavioral nudges and relapse-prediction models.

Recommended frequency & KPIs

- Cadence: Daily dashboards for active alerts; monthly outcomes review.

- KPIs: Readmissions, ED visits, alert precision, patient adherence, nurse workload, time-to-intervention.

Safety, caveats & common mistakes

- False positives & alarm fatigue: Calibrate to reduce nuisance alerts; escalate gradually.

- Digital divide: Offer device setup support and cellular options for patients without smartphones.

- Data security: Encrypt end-to-end; handle device recalls and firmware updates swiftly.

Mini-plan (example)

- Month 1: Pilot 50 heart-failure patients with weight and BP monitoring.

- Month 2: Add activity/sleep signals and ML-based decompensation risk.

- Month 3: Expand to COPD with pulse oximetry and symptom diaries.

7) Drug discovery, clinical trials, and real-world evidence

What it is & why it matters

ML accelerates target identification, molecule design, toxicity prediction, and patient recruitment, compressing timelines and improving the odds of success. Generative models propose candidate molecules, predictive models estimate binding and ADMET properties, and ML on EHRs flags eligible participants and endpoints faster.

Requirements & low-cost options

- Data: Wet-lab assay data, structural biology, omics, and curated trial data; de-identified EHRs for feasibility.

- People: Multi-disciplinary team (computational chemists, biologists, statisticians, clinicians).

- Software: Molecular modeling/ML platforms; secure compute; MLOps for reproducibility.

- Budget-friendly: Use academic collaborations and open servers for structure prediction; start with in-silico triage to reduce wet-lab burden.

Beginner implementation steps

- Define a narrow scope: e.g., a virtual screen for inhibitors in a validated pathway or a trial cohort finder for a single protocol.

- Stand up a data/label pipeline with version control and assay standards.

- Run prospective validations (e.g., synthesize and test top candidates; compare recruitment predictions against actual enrollment).

- Integrate with lab/clinical workflows: LIMS handoff, protocol inclusion/exclusion automation.

- Iterate and publish internal benchmarks to build confidence and funding.

Beginner modifications & progressions

- Simplify: Start with de-risked targets or repurposing libraries.

- Scale: Move from docking + QSAR to multimodal generative models.

- Advance: Adaptive trials with ML-guided dose titration under regulatory oversight.

Recommended frequency & KPIs

- Cadence: Sprint-based cycles with monthly portfolio reviews.

- KPIs: Hit rate uplift, time-to-lead, in-vitro success rates, screen-to-synthesis ratio, recruitment velocity and diversity.

Safety, caveats & common mistakes

- Overclaiming in silico results: Require orthogonal assays and animal data before clinical optimism.

- Data leakage: Strict separation of training and test sets; blinded validations.

- Regulatory readiness: Maintain traceability, SOPs, and model documentation for audits.

Mini-plan (example)

- Quarter 1: In-silico triage of 1M compounds to 500 candidates; bench test top 50.

- Quarter 2: Launch an ML-augmented cohort finder for one oncology trial.

- Quarter 3: Expand to toxicity prediction and dose-finding simulations.

Quick-start checklist (print this)

- Executive sponsor (clinical + operations) with a clear use case and business case

- Data access pathway approved by privacy/compliance

- Baseline metric defined (current state)

- Simple, interpretable model or cleared device to start

- Pilot plan with success criteria and sunset rules

- Human-in-the-loop review and override logging

- Equity and bias monitoring plan

- Post-go-live monitoring (performance + safety)

- Communication plan for clinicians and patients

- Budget/contract for scaling if pilot succeeds

Troubleshooting & common pitfalls

- “Great AUROC, poor clinical impact.” Re-define the action tied to the prediction. Optimize for PPV and actionability, not just discrimination.

- “Too many alerts.” Raise thresholds, cap per-shift alerts, and suppress duplicates. Use tiered escalation.

- “Model works in sandbox, fails in production.” Address data drift, missing values, and interface mismatches. Implement continuous monitoring and weekly error triage.

- “Clinicians don’t trust it.” Provide explanations, pilot with champions, and publish transparent results—good and bad.

- “Equity concerns.” Stratify performance by demographics and social determinants; retrain or adjust thresholds where disparities appear.

- “Privacy blocks progress.” Explore federated learning, secure enclaves, and de-identification pipelines; involve the privacy officer early.

- “Vendor lock-in.” Insist on exportable data, documented APIs, and model cards.
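The first pitfall in this list often comes down to prevalence: a model with strong discrimination can still produce mostly false alerts when the event is rare. The short calculation below applies Bayes' rule; the sensitivity, specificity, and prevalence values are illustrative assumptions.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Share of alerts that are true events, given test characteristics and prevalence."""
    true_alerts = sensitivity * prevalence
    false_alerts = (1 - specificity) * (1 - prevalence)
    return true_alerts / (true_alerts + false_alerts)


# Same model characteristics, two different event rates.
ppv_rare = positive_predictive_value(0.9, 0.9, prevalence=0.02)
ppv_common = positive_predictive_value(0.9, 0.9, prevalence=0.20)
print(round(ppv_rare, 3), round(ppv_common, 3))
```

With these numbers, roughly one alert in six is a true event at 2% prevalence, compared with about two in three at 20% prevalence, which is why the action attached to an alert should be chosen with the expected PPV in mind.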

How to measure progress (and prove ROI)

- Clinical outcomes: detection rates, time-to-therapy, mortality, readmissions, ED returns, toxicities avoided.

- Process measures: bundle adherence, time-in-notes, time-to-bed, appointment completion rates.

- Experience: clinician burnout scores, patient satisfaction (top-box), staff overtime.

- Economics: contribution margin per case, bed-days saved, avoided penalties (readmissions), throughput increases.

- Safety & fairness: override rates, incident reports, performance parity across subgroups, model drift indicators.

Tip: Pre-register your pilot’s primary outcome and analysis plan. Even internal “pre-registration” improves credibility.

A simple 4-week starter roadmap

Week 1: Pick your wedge + baseline

- Choose one use case (e.g., no-show prediction).

- Pull 6–12 months of data; compute baseline KPIs.

- Draft a one-page protocol (population, intervention, metrics, ethics).

Week 2: Build + validate

- Train a transparent model; validate with temporal split.

- Draft the workflow (reminders, overbooking rules).

- Prepare a safety checklist and equity analysis plan.
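The temporal split in Week 2 means training on earlier appointments and testing on later ones, so the evaluation resembles how the model will be used. A minimal sketch, assuming each row is a dictionary with a `date` field (a hypothetical schema):

```python
from datetime import date


def temporal_split(rows, cutoff):
    """Split rows into (train, test): train is before the cutoff date, test is on or after it."""
    train = [row for row in rows if row["date"] < cutoff]
    test = [row for row in rows if row["date"] >= cutoff]
    return train, test


# Toy appointment rows for illustration only.
appointments = [
    {"date": date(2024, 1, 15), "no_show": 0},
    {"date": date(2024, 3, 2), "no_show": 1},
    {"date": date(2024, 6, 20), "no_show": 0},
    {"date": date(2024, 9, 5), "no_show": 1},
]
train_rows, test_rows = temporal_split(appointments, cutoff=date(2024, 6, 1))
print(len(train_rows), len(test_rows))
```

A random split would let the model see future patterns during training and tends to overstate performance, which is the reason to prefer a date cutoff here.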

Week 3: Pilot

- Soft launch with 1–2 clinics; daily stand-ups.

- Track alert/action funnel: prediction → outreach → outcome.

- Tweak thresholds for precision and workload balance.

Week 4: Decide to scale or stop

- Compare to baseline; conduct a brief cost-benefit analysis.

- If scaling: create a roll-out calendar, training plan, and monitoring dashboard.

- Document lessons learned and publish internally.

FAQs

1) Do we need massive datasets to benefit from ML?

No. Many high-value use cases (no-show prediction, documentation assistance) work well with thousands—not millions—of examples. Start with simpler models and grow as your data matures.

2) Will ML replace clinicians?

No. The most successful deployments keep clinicians in the loop, using ML to surface risks, draft notes, and support—not replace—decisions.

3) How do we prevent bias against underrepresented groups?

Audit performance by demographic segments, include diverse training data, and adjust thresholds or retrain as needed. Establish an equity review in your AI governance committee.

4) What about regulatory approvals?

If the tool influences diagnosis or treatment, use market-authorized devices or follow a regulated path. For internally developed models that guide workflows, maintain robust validation, monitoring, and documentation.

5) How do we protect patient privacy?

Minimize data, de-identify where possible, restrict access, encrypt at rest and in transit, and consider federated learning to keep raw data local.
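To make the federated learning idea concrete, the core aggregation step can be sketched as a sample-weighted average of model parameters sent by each site, so raw records never leave the hospital. This is a simplified illustration of federated averaging only; the function name is hypothetical, and a real deployment would add multiple training rounds, secure aggregation, and privacy review.

```python
def federated_average(site_updates):
    """Combine per-site model weights into one global weight vector.

    site_updates: list of (weights, n_samples) tuples, one per site.
    Each site's weights count in proportion to its number of training samples.
    """
    total_samples = sum(n for _, n in site_updates)
    dimension = len(site_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in site_updates) / total_samples
        for i in range(dimension)
    ]


# Two hypothetical sites sharing only parameters and sample counts.
global_weights = federated_average([
    ([1.0, 2.0], 100),
    ([3.0, 4.0], 300),
])
print(global_weights)
```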

6) How do we win clinician trust?

Start with pain-point wins (documentation time, queue management), share transparent metrics, provide easy overrides, and respond quickly to feedback.

7) What KPIs convince executives?

Tie your initiative to throughput, avoidable admissions, clinician time saved, and quality metrics tied to reimbursement. Express gains in financial terms when possible.

8) How do we handle drift over time?

Monitor input distributions and calibration; schedule periodic recalibration or retraining. Keep a rollback plan ready.
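One common way to monitor an input distribution is the population stability index (PSI), which compares the share of values in each bin between a reference period and a recent period. The sketch below is a minimal standard-library version; the bin edges, the `eps` floor for empty bins, and the toy values are assumptions.

```python
import math


def population_stability_index(expected, actual, bin_edges, eps=1e-6):
    """PSI between a reference sample (expected) and a recent sample (actual).

    bin_edges must be sorted ascending; values are binned by how many edges they meet or exceed.
    Empty bins are floored at eps to avoid division by zero.
    """
    def proportions(values):
        counts = [0] * (len(bin_edges) + 1)
        for value in values:
            index = sum(value >= edge for edge in bin_edges)
            counts[index] += 1
        return [max(count / len(values), eps) for count in counts]

    expected_share = proportions(expected)
    actual_share = proportions(actual)
    return sum(
        (a - e) * math.log(a / e)
        for e, a in zip(expected_share, actual_share)
    )


# Toy feature values for illustration only.
reference = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
recent = [6, 7, 8, 9, 10, 7, 8, 9, 10, 10]
psi_stable = population_stability_index(reference, reference, bin_edges=[3.5, 6.5])
psi_shifted = population_stability_index(reference, recent, bin_edges=[3.5, 6.5])
print(psi_stable, psi_shifted)
```

A frequently quoted rule of thumb treats PSI above roughly 0.25 as a substantial shift worth investigating, but that cutoff is a convention rather than a guarantee, so calibration checks should run alongside it.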

9) Can small hospitals or clinics do this?

Yes. Begin with vendor tools or lightweight models in the cloud, target a single clinic or service line, and scale gradually.

10) What skills do we need in-house?

A clinical sponsor, a data engineer/analyst, and an integration engineer. You can contract model development initially; retain ownership of data, metrics, and governance.

11) How do we measure documentation improvements safely?

Track minutes per note and after-hours EHR time, but also audit for accuracy and omissions. Require clinician sign-off and periodic quality sampling.

12) What’s a realistic first-year goal?

Two to three use cases in steady state, each with clear outcome improvements and documented safety monitoring.

Conclusion

Machine learning is already elevating clinical quality, easing operational bottlenecks, and giving clinicians time back with patients. The organizations that succeed aren’t the ones with the flashiest models—they’re the ones that pick focused problems, measure rigorously, put safety first, and scale what works. Start with one wedge, prove it, and let the momentum compound.

Call to action: Choose one use case from this list, set a baseline this week, and launch a four-week pilot—your future patients (and clinicians) will thank you.

